[CDH] Redis: Remote Dictionary Server

Basic Concepts

1. Installation

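Redis: Remote Dictionary Server

Redis is a networked, log-structured key-value database written in ANSI C. It can run purely in memory or persist its data, and it provides APIs for many languages.

Client libraries for other languages: https://redis.io/clients

Source code: https://github.com/antirez/redis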

2. Starting the server

    [root@node01 bin]# ls
    dump.rdb  mkreleasehdr.sh  redis-benchmark  redis-check-aof  redis-check-rdb  redis-cli  redis-server

    [root@node01 bin]# ./redis-server ../etc/redis.conf
    8952:C 08 Dec 17:22:00.641 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
    8952:C 08 Dec 17:22:00.641 # Redis version=4.0.11, bits=64, commit=00000000, modified=0, pid=8952, just started
    8952:C 08 Dec 17:22:00.641 # Configuration loaded

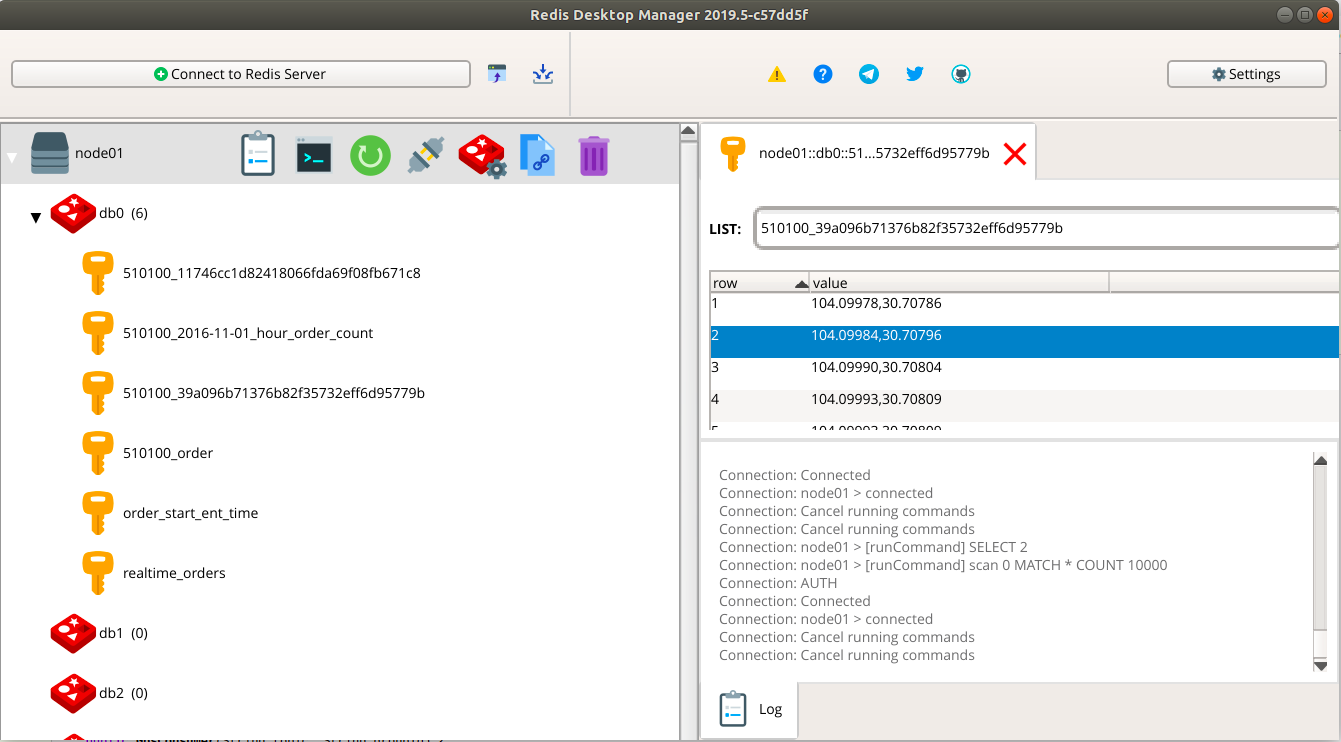

3. Graphical interface

Redis Tutorial

1. "client command-line" mode

[Runoob tutorial]: https://www.runoob.com/redis/redis-tutorial.html


    # Client command-line mode
    [root@node01 bin]# ./redis-cli
    127.0.0.1:6379>

    # Remote login (substitute your server's host, port and password)
    $ redis-cli -h 127.0.0.1 -p 6379 -a "mypass"
    redis 127.0.0.1:6379>
    redis 127.0.0.1:6379> PING
    PONG
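For reference, redis-cli sends each command over the wire as a RESP array of bulk strings, and the server returns the PING reply as the simple string `+PONG\r\n`. The sketch below covers request encoding only. `RespEncoder` is a hypothetical helper name and is not part of Jedis or any other client.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper: encodes one command in the RESP wire format
// (an array of bulk strings), the form in which a client sends it to the server.
final class RespEncoder {
    private RespEncoder() {}

    static String encode(String... parts) {
        StringBuilder sb = new StringBuilder();
        // Array header: '*' followed by the number of elements
        sb.append('*').append(parts.length).append("\r\n");
        for (String part : parts) {
            // Bulk string: '$' + payload length in bytes, CRLF, payload, CRLF
            int len = part.getBytes(StandardCharsets.UTF_8).length;
            sb.append('$').append(len).append("\r\n").append(part).append("\r\n");
        }
        return sb.toString();
    }
}
```

For example, `RespEncoder.encode("PING")` yields `*1\r\n$4\r\nPING\r\n`. Because the length prefix counts bytes, the resulting string must be sent as UTF-8.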

2. Runoob Redis tutorial

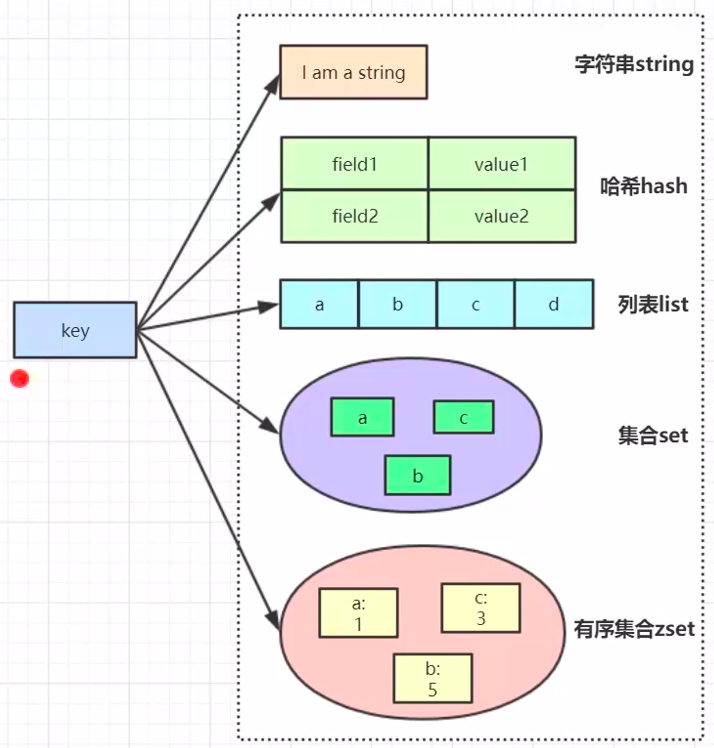

- Data types

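Redis supports five data types: string, hash, list, set, and zset (sorted set). Redis 2.8.9 added the HyperLogLog structure. All of the examples below reuse the key runoobkey. If you run them in one session, run DEL runoobkey between them; otherwise, a command on a key that already holds a different type fails with a WRONGTYPE error.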

(1) String

    redis 127.0.0.1:6379> SET runoobkey redis
    OK
    redis 127.0.0.1:6379> GET runoobkey
    "redis"

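(2) Hash

    127.0.0.1:6379> HMSET runoobkey name "redis tutorial" description "redis basic commands for caching" likes 20 visitors 23000
    OK
    127.0.0.1:6379> HGETALL runoobkey
    1) "name"
    2) "redis tutorial"
    3) "description"
    4) "redis basic commands for caching"
    5) "likes"
    6) "20"
    7) "visitors"
    8) "23000"

HGETALL returns fields and values interleaved. Since Redis 4.0, HSET also accepts multiple field/value pairs, and HMSET is regarded as deprecated.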

(3) List

    redis 127.0.0.1:6379> LPUSH runoobkey redis
    (integer) 1
    redis 127.0.0.1:6379> LPUSH runoobkey mongodb
    (integer) 2
    redis 127.0.0.1:6379> LPUSH runoobkey mysql
    (integer) 3
    redis 127.0.0.1:6379> LRANGE runoobkey 0 10
    1) "mysql"
    2) "mongodb"
    3) "redis"
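LPUSH inserts at the head of the list, so LRANGE returns the elements in reverse insertion order (mysql first). The toy model below mimics this with an `ArrayDeque` and reproduces LRANGE's inclusive, clamped index range. It is plain Java, not a Redis client, and `ToyRedisList` is a hypothetical name.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Toy in-memory model of a Redis list (not a Redis client):
// LPUSH inserts at the head, LRANGE reads an inclusive index range.
final class ToyRedisList {
    private final Deque<String> items = new ArrayDeque<>();

    // LPUSH: add at the head and return the new length, as Redis does
    int lpush(String value) {
        items.addFirst(value);
        return items.size();
    }

    // LRANGE start stop: both ends inclusive; negative indices count from the tail
    List<String> lrange(int start, int stop) {
        List<String> all = new ArrayList<>(items);
        int n = all.size();
        if (start < 0) start = Math.max(n + start, 0);
        if (stop < 0) stop = n + stop;
        if (stop >= n) stop = n - 1;            // out-of-range stop is clamped, not an error
        if (start > stop) return new ArrayList<>();
        return new ArrayList<>(all.subList(start, stop + 1));
    }
}
```

Pushing redis, mongodb and mysql and then calling `lrange(0, 10)` gives `[mysql, mongodb, redis]`, the same as the transcript above.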

(4) Set

    redis 127.0.0.1:6379> SADD runoobkey redis
    (integer) 1
    redis 127.0.0.1:6379> SADD runoobkey mongodb
    (integer) 1
    redis 127.0.0.1:6379> SADD runoobkey mysql
    (integer) 1
    redis 127.0.0.1:6379> SADD runoobkey mysql
    (integer) 0
    redis 127.0.0.1:6379> SMEMBERS runoobkey
    1) "mysql"
    2) "mongodb"
    3) "redis"

Adding mysql a second time returns 0 because set members are unique. SMEMBERS does not guarantee any particular order.
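SADD's reply counts only the members that were actually new, which is exactly what `java.util.HashSet.add` reports through its boolean result. Below is a minimal in-memory sketch. `ToyRedisSet` is hypothetical and no Redis is involved.

```java
import java.util.HashSet;
import java.util.Set;

// Toy model of SADD / SCARD / SISMEMBER semantics (not a Redis client)
final class ToyRedisSet {
    private final Set<String> members = new HashSet<>();

    // SADD returns how many of the given members were newly added
    int sadd(String... values) {
        int added = 0;
        for (String v : values) {
            if (members.add(v)) added++;   // add() returns false for an existing member
        }
        return added;
    }

    int scard() { return members.size(); }

    boolean sismember(String v) { return members.contains(v); }
}
```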

(5) Sorted set

    redis 127.0.0.1:6379> ZADD runoobkey 1 redis
    (integer) 1
    redis 127.0.0.1:6379> ZADD runoobkey 2 mongodb
    (integer) 1
    redis 127.0.0.1:6379> ZADD runoobkey 3 mysql
    (integer) 1
    redis 127.0.0.1:6379> ZADD runoobkey 3 mysql
    (integer) 0
    redis 127.0.0.1:6379> ZADD runoobkey 4 mysql   # mysql already exists: its score changes from 3 to 4, no member is added, so the reply is 0
    (integer) 0
    redis 127.0.0.1:6379> ZRANGE runoobkey 0 10 WITHSCORES
    1) "redis"
    2) "1"
    3) "mongodb"
    4) "2"
    5) "mysql"
    6) "4"
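A sorted set behaves like a map from member to score. ZADD on an existing member only updates its score and replies 0, and ZRANGE orders members by score, breaking ties lexicographically. The hypothetical `ToyRedisZset` below models only that behaviour, in plain Java.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of ZADD / ZRANGE / ZSCORE (not a Redis client)
final class ToyRedisZset {
    private final Map<String, Double> scores = new HashMap<>();

    // Returns 1 if the member is new, 0 if only its score was updated
    int zadd(double score, String member) {
        return scores.put(member, score) == null ? 1 : 0;
    }

    // All members by ascending score; equal scores ordered lexicographically
    List<String> zrangeAll() {
        List<String> members = new ArrayList<>(scores.keySet());
        members.sort(Comparator.comparing((String m) -> scores.get(m))
                .thenComparing(Comparator.<String>naturalOrder()));
        return members;
    }

    Double zscore(String member) { return scores.get(member); }
}
```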

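(6) HyperLogLog

    redis 127.0.0.1:6379> PFADD runoobkey "redis"
    (integer) 1
    redis 127.0.0.1:6379> PFADD runoobkey "mongodb"
    (integer) 1
    redis 127.0.0.1:6379> PFADD runoobkey "mysql"
    (integer) 1
    redis 127.0.0.1:6379> PFCOUNT runoobkey
    (integer) 3

PFCOUNT returns an approximate cardinality (standard error 0.81%). In exchange, each key uses at most about 12 KB, however many elements are added.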

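- Publish/subscribe

This article has examples and is worth reading: Redis的发布/订阅工作模式详解 (a detailed explanation of the Redis publish/subscribe model).

The following Java (Jedis) code is a good demonstration.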

    package org.xninja.ghoulich.JedisTest;

    import redis.clients.jedis.Jedis;

    public class App {

        @SuppressWarnings("resource")
        public static void main(String[] args) {
            // Two connections: a connection in subscribe mode cannot issue other commands
            final Jedis jedis = new Jedis("192.168.1.109", 6379);
            final Jedis pjedis = new Jedis("192.168.1.109", 6379);

            final MyListener listener = new MyListener();
            final MyListener plistener = new MyListener();

            // subscribe() blocks the calling thread, so each subscription gets its own thread,
            // created here with an anonymous Runnable
            Thread thread = new Thread(new Runnable() {
                public void run() {
                    jedis.subscribe(listener, "mychannel");
                }
            });

            // psubscribe() subscribes by pattern, e.g. every channel starting with "mychannel."
            Thread pthread = new Thread(new Runnable() {
                public void run() {
                    pjedis.psubscribe(plistener, "mychannel.*");
                }
            });

            thread.start();
            pthread.start();
        }
    }

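The listener below handles each event: subscribing to channels and patterns, receiving messages, and unsubscribing. For each event it prints a line. When the message quit arrives, it unsubscribes from that channel or pattern.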

    package org.xninja.ghoulich.JedisTest;

    import redis.clients.jedis.JedisPubSub;

    public class MyListener extends JedisPubSub {

        // Called when a message arrives on a subscribed channel
        @Override
        public void onMessage(String channel, String message) {
            System.out.println("onMessage: " + channel + "=" + message);
            if (message.equals("quit")) this.unsubscribe(channel);
        }

        // Called when a channel subscription is established
        @Override
        public void onSubscribe(String channel, int subscribedChannels) {
            System.out.println("onSubscribe: " + channel + "=" + subscribedChannels);
        }

        // Called when a channel subscription is cancelled
        @Override
        public void onUnsubscribe(String channel, int subscribedChannels) {
            System.out.println("onUnsubscribe: " + channel + "=" + subscribedChannels);
        }

        // Called when a pattern subscription is established
        @Override
        public void onPSubscribe(String pattern, int subscribedChannels) {
            System.out.println("onPSubscribe: " + pattern + "=" + subscribedChannels);
        }

        // Called when a pattern subscription is cancelled
        @Override
        public void onPUnsubscribe(String pattern, int subscribedChannels) {
            System.out.println("onPUnsubscribe: " + pattern + "=" + subscribedChannels);
        }

        // Called when a message arrives on a channel matching a subscribed pattern
        @Override
        public void onPMessage(String pattern, String channel, String message) {
            System.out.println("onPMessage: " + pattern + "=" + channel + "=" + message);
            if (message.equals("quit")) this.punsubscribe(pattern);
        }
    }
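PSUBSCRIBE matches channel names against glob-style patterns. A subscription to `mychannel.*` therefore receives messages published to channels such as `mychannel.news`, but not to `mychannel` itself. The simplified matcher below is for illustration only. `ChannelPattern` is a hypothetical name, and it handles just `*` and `?`; Redis also supports `[...]` character classes and escaping.

```java
// Simplified, hypothetical glob matcher showing which channels a PSUBSCRIBE
// pattern selects. Supports only '*' (any run of characters, including none)
// and '?' (exactly one character).
final class ChannelPattern {
    private ChannelPattern() {}

    static boolean matches(String pattern, String channel) {
        return match(pattern, 0, channel, 0);
    }

    private static boolean match(String p, int pi, String s, int si) {
        while (pi < p.length()) {
            char c = p.charAt(pi);
            if (c == '*') {
                // Collapse consecutive stars, then try every possible split point
                while (pi < p.length() && p.charAt(pi) == '*') pi++;
                if (pi == p.length()) return true;   // trailing star matches the rest
                for (int k = si; k <= s.length(); k++) {
                    if (match(p, pi, s, k)) return true;
                }
                return false;
            }
            if (si >= s.length()) return false;       // pattern left over, input exhausted
            if (c != '?' && c != s.charAt(si)) return false;
            pi++;
            si++;
        }
        return si == s.length();                       // input must be fully consumed
    }
}
```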

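- Transactions

A transaction starts with MULTI. The commands that follow are queued, and EXEC then executes all of them together.

Each individual Redis command is atomic, and the queued commands run one after another without commands from other clients being interleaved. However, Redis provides no rollback, so a transaction is not atomic in the all-or-nothing sense.

A transaction can be thought of as a packaged batch script. If one command fails during execution, the commands before it are not rolled back and the commands after it still run.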

    redis 127.0.0.1:7000> multi
    OK
    redis 127.0.0.1:7000> set a aaa
    QUEUED
    redis 127.0.0.1:7000> set b bbb   # if set b bbb failed during execution, set a would stay applied and set c would still run
    QUEUED
    redis 127.0.0.1:7000> set c ccc
    QUEUED
    redis 127.0.0.1:7000> exec
    1) OK
    2) OK
    3) OK
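The no-rollback behaviour is easiest to see with a command that fails during execution, such as INCR on a non-numeric value. The toy model below queues commands and runs them in order on exec(). The failing INCR reports an error, while the SETs before and after it still take effect. It is plain Java, not a Redis client, and `ToyTransaction` is hypothetical. It deliberately ignores errors detected while commands are being queued; in real Redis (2.6.5 and later) such errors make EXEC discard the whole transaction.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Toy model of MULTI/EXEC (not a Redis client): commands are queued and then
// executed in order. A runtime failure produces an error reply, but nothing
// is rolled back and later commands still run.
final class ToyTransaction {
    final Map<String, String> store = new HashMap<>();
    private final List<Function<Map<String, String>, String>> queue = new ArrayList<>();

    // Queue SET key value
    void set(String key, String value) {
        queue.add(db -> { db.put(key, value); return "OK"; });
    }

    // Queue INCR key; fails at execution time if the value is not an integer
    void incr(String key) {
        queue.add(db -> {
            long n = Long.parseLong(db.getOrDefault(key, "0")); // throws for "aaa"
            db.put(key, Long.toString(n + 1));
            return Long.toString(n + 1);
        });
    }

    // EXEC: run every queued command, collecting one reply per command
    List<String> exec() {
        List<String> replies = new ArrayList<>();
        for (Function<Map<String, String>, String> cmd : queue) {
            try {
                replies.add(cmd.apply(store));
            } catch (NumberFormatException e) {
                replies.add("ERR value is not an integer or out of range");
            }
        }
        queue.clear();
        return replies;
    }
}
```

Queuing `set("a", "aaa")`, `incr("a")` and `set("c", "ccc")` and calling exec() returns OK, an error, and OK, and both a and c end up stored.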

Redis in Practice

- Kafka --> Redis

Kafka feeds the data into Redis and also into HBase.

The HBase API is covered separately. Only the Redis part is discussed here.

    // Library imports (log4j 1.x, Kafka, HBase, Jedis). The project classes
    // TopicName, Constants, JedisUtil, HBaseUtil, Order and ObjUtil are not shown.
    import java.io.BufferedOutputStream;
    import java.io.FileOutputStream;
    import java.io.PrintWriter;
    import java.text.SimpleDateFormat;
    import java.util.*;
    import org.apache.hadoop.hbase.client.Put;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;
    import org.apache.log4j.Level;
    import org.apache.log4j.Logger;
    import redis.clients.jedis.Jedis;

    public class GpsConsumer implements Runnable {

        private static Logger log = Logger.getLogger(GpsConsumer.class);
        private final KafkaConsumer<String, String> consumer;
        private final String topic;

        // Number of messages consumed so far
        private static int count = 0;
        private FileOutputStream file = null;
        private BufferedOutputStream out = null;
        private PrintWriter printWriter = null;
        private String lineSeparator = null;
        private int batchNum = 0;
        JedisUtil instance = null;
        Jedis jedis = null;
        private String cityCode = "";
        private Map<String, String> gpsMap = new HashMap<String, String>();
        SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

        public GpsConsumer(String topic, String groupId) {
            if (topic.equalsIgnoreCase(TopicName.CHENG_DU_GPS_TOPIC.getTopicName())) {
                cityCode = Constants.CITY_CODE_CHENG_DU;
            } else if (topic.equalsIgnoreCase(TopicName.XI_AN_GPS_TOPIC.getTopicName())) {
                cityCode = Constants.CITY_CODE_XI_AN;
            } else if (topic.equalsIgnoreCase(TopicName.HAI_KOU_ORDER_TOPIC.getTopicName())) {
                cityCode = Constants.CITY_CODE_HAI_KOU;
            } else {
                throw new IllegalArgumentException(topic + ": invalid topic name!");
            }

            Properties props = new Properties(); // pro-cdh
            props.put("bootstrap.servers", Constants.KAFKA_BOOTSTRAP_SERVERS); // the Kafka cluster
            props.put("group.id", groupId);
            props.put("enable.auto.commit", "true");
            props.put("auto.offset.reset", "earliest");
            props.put("session.timeout.ms", "30000");
            props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            // Step 1: build the Kafka consumer
            consumer = new KafkaConsumer<String, String>(props);
            this.topic = topic;
            // Subscribe once here rather than on every poll
            consumer.subscribe(Collections.singletonList(this.topic));
        }

        @Override
        public void run() {
            while (true) {
                try {
                    doWork();
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }

        public void doWork() throws Exception {
            batchNum++;
            ConsumerRecords<String, String> records = consumer.poll(1000);
            System.out.println("Batch " + batchNum + ", " + records.count());

            String driverId = "";   // driver ID
            String orderId = "";    // order ID
            String lng = "";        // longitude
            String lat = "";        // latitude
            String timestamp = "";  // Unix timestamp in seconds

            Order order = null;
            Order startEndTimeOrder = null;

            // Jeff: only process the batch if it contains data
            if (records.count() > 0) {
                // (1) HBase ready
                Table table = HBaseUtil.getTable(Constants.HTAB_GPS);
                // (2) Redis ready
                JedisUtil instance = JedisUtil.getInstance();
                jedis = instance.getJedis();

                List<Put> puts = new ArrayList<>();
                String rowkey = "";
                if (gpsMap.size() > 0) {
                    gpsMap.clear();
                }
                // Create the table if it does not exist
                if (!HBaseUtil.tableExists(Constants.HTAB_GPS)) {
                    HBaseUtil.createTable(HBaseUtil.getConnection(), Constants.HTAB_GPS, Constants.DEFAULT_FAMILY);
                }
                for (ConsumerRecord<String, String> record : records) {
                    count++;
                    log.warn("Received message: (" + record.key() + ", " + record.value()
                            + ") at offset " + record.offset() + ",count:" + count);

                    String value = record.value();
                    if (value.contains(",")) {
                        order = new Order();
                        String[] split = value.split(",");
                        driverId = split[0];
                        orderId = split[1];
                        timestamp = split[2];
                        lng = split[3];
                        lat = split[4];
                        rowkey = orderId + "_" + timestamp;
                        gpsMap.put("CITYCODE", cityCode);
                        gpsMap.put("DRIVERID", driverId);
                        gpsMap.put("ORDERID", orderId);
                        gpsMap.put("TIMESTAMP", timestamp + "");
                        gpsMap.put("TIME", sdf.format(new Date(Long.parseLong(timestamp + "000"))));
                        gpsMap.put("LNG", lng);
                        gpsMap.put("LAT", lat);
                        order.setOrderId(orderId);

                        // ---------- HBase ----------
                        puts.add(HBaseUtil.createPut(rowkey, Constants.DEFAULT_FAMILY.getBytes(), gpsMap));

                        // ---------- Redis ----------
                        // 1. Add the order to the set of real-time orders
                        jedis.sadd(Constants.REALTIME_ORDERS, cityCode + "_" + orderId);

                        // 2. Prepend this GPS point to the order's track list
                        jedis.lpush(cityCode + "_" + orderId, lng + "," + lat);

                        // 3. Store the order's start/end time in a hash (field = order ID).
                        //    Declared per record so a previous order's object is never reused.
                        Object tmpOrderObj = null;
                        byte[] orderBytes = jedis.hget(Constants.ORDER_START_ENT_TIME.getBytes(), orderId.getBytes());
                        if (orderBytes != null) {
                            tmpOrderObj = ObjUtil.deserialize(orderBytes);
                        }
                        if (null != tmpOrderObj) {
                            startEndTimeOrder = (Order) tmpOrderObj;
                            startEndTimeOrder.setEndTime(Long.parseLong(timestamp + "000"));
                            jedis.hset(Constants.ORDER_START_ENT_TIME.getBytes(), orderId.getBytes(), ObjUtil.serialize(startEndTimeOrder));
                        } else {
                            // First record of this order: start time equals end time (milliseconds, as above)
                            order.setStartTime(Long.parseLong(timestamp + "000"));
                            order.setEndTime(Long.parseLong(timestamp + "000"));
                            jedis.hset(Constants.ORDER_START_ENT_TIME.getBytes(), orderId.getBytes(), ObjUtil.serialize(order));
                        }

                        hourOrderInfoGather(jedis, gpsMap);

                    } else if (value.contains("end")) {
                        jedis.lpush(cityCode + "_" + orderId, value);
                    }
                }

                table.put(puts);
                instance.returnJedis(jedis);
            }
            log.warn("Finished normally...");
        }

        /**
         * Counts each city's orders per hour.
         * @throws Exception
         */
        public void hourOrderInfoGather(Jedis jedis, Map<String, String> gpsMap) throws Exception {
            String time = gpsMap.get("TIME");
            String orderId = gpsMap.get("ORDERID");
            String day = time.substring(0, time.indexOf(" "));
            String hour = time.split(" ")[1].substring(0, 2);

            // Redis hash key: hourly order counts for this city and day
            String hourOrderCountTab = cityCode + "_" + day + "_hour_order_count";
            // Hash field: one per hour
            String hourOrderField = cityCode + "_" + day + "_" + hour;
            // Redis set of every order ID already counted for this city (not per hour)
            String hourOrder = cityCode + "_order";

            // SADD returns 1 only for a new member, so each order is counted once, in the
            // hour it is first seen; HINCRBY updates the hourly count atomically
            if (jedis.sadd(hourOrder, orderId) == 1) {
                jedis.hincrBy(hourOrderCountTab, hourOrderField, 1);
            }
        }

        public static void main(String[] args) {
            Logger.getLogger("org.apache.kafka").setLevel(Level.INFO);
            String topic = "cheng_du_gps_topic";          // Kafka topic
            String groupId = "cheng_du_gps_consumer_01";  // consumer group ID
            GpsConsumer gpsConsumer = new GpsConsumer(topic, groupId);
            Thread start = new Thread(gpsConsumer);
            start.start();
        }
    }
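The counting rule in hourOrderInfoGather() can be checked without Kafka, HBase or Redis. The sketch below is a hypothetical in-memory stand-in; `HourlyOrderCounter` is not part of the project. A per-city `HashSet` plays the role of the `<city>_order` set and a `HashMap` plays the role of the hourly-count hash, so an order ID is counted once, in the hour in which it first appears. The city code used in the usage note is also hypothetical.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical in-memory stand-in for hourOrderInfoGather() (no Redis involved)
final class HourlyOrderCounter {
    // Stands in for the Redis sets keyed "<city>_order"
    private final Map<String, Set<String>> seenOrders = new HashMap<>();
    // Stands in for the hourly-count hashes; key = field "<city>_<day>_<hour>"
    private final Map<String, Integer> countsByHour = new HashMap<>();

    // time uses the "yyyy-MM-dd HH:mm:ss" format stored under gpsMap's TIME key
    void record(String cityCode, String orderId, String time) {
        String day = time.substring(0, time.indexOf(' '));
        String hour = time.split(" ")[1].substring(0, 2);
        // Like SADD: true only the first time this order is seen for the city
        boolean firstSighting = seenOrders
                .computeIfAbsent(cityCode + "_order", k -> new HashSet<>())
                .add(orderId);
        if (firstSighting) {
            // Like HINCRBY <hash> <field> 1
            countsByHour.merge(cityCode + "_" + day + "_" + hour, 1, Integer::sum);
        }
    }

    int count(String hourField) {
        return countsByHour.getOrDefault(hourField, 0);
    }
}
```

For example, suppose GPS points for order o1 arrive at 08:05, 08:30 and 09:10 and order o2 first appears at 09:00. Then both hours end up with a count of 1: o1 counts only in hour 08, and o2 counts in hour 09.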

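Redis and business logic

The code above relies on the following operations. Together they show how several Redis data structures map onto the business logic:

jedis.sadd (set): the set of real-time orders, and the set of orders already counted per hour

jedis.lpush (list): one list per order holding its GPS points, newest first

jedis.hget

jedis.hset (hash, used together with hget): each order's start and end time, and the hourly order counts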

Tencent Classroom (腾讯课堂) course: The Essence of Redis: How Weibo and WeChat Cleverly Build on Redis

- String

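(1) Decrementing stock with a distributed lock:

Thread 1: SETNX product:1001 true

1. Query the stock of product 1001

2. Decrement the stock

3. Write it back to the database

DEL product:1001

If the lock holder crashes before the DEL, a lock taken with plain SETNX is never released. In practice, the lock is taken with an expiry and an owner-specific value, e.g. SET product:1001 {unique token} NX EX 30. It is then released only by the client whose token matches.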
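The lock hinges on set-if-absent semantics: only the first client to create the key proceeds. The in-process analogue below uses `ConcurrentHashMap.putIfAbsent` in place of SETNX, and an atomic compare-and-remove in place of a DEL that checks the owner's token. `ToySetNxLock` is a hypothetical name. Unlike Redis, it models neither key expiry nor multiple processes. In Redis itself, the compare-then-delete step is commonly done in a short Lua script so that it runs atomically.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// In-process analogue (no Redis) of a SETNX-style lock with owner tokens
final class ToySetNxLock {
    private final Map<String, String> keys = new ConcurrentHashMap<>();

    // Like SET key token NX: succeeds only if nobody holds the key
    boolean tryLock(String key, String token) {
        return keys.putIfAbsent(key, token) == null;
    }

    // Delete only if the stored value is our own token (atomic compare-and-remove)
    boolean unlock(String key, String token) {
        return keys.remove(key, token);
    }
}
```

With this guard, a client cannot release a lock that another client has taken in the meantime. `unlock` with the wrong token simply returns false.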

(2) Counting blog page views with atomic increments:

INCR article:readcount:1000

- Hash

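(1) Shopping cart (key cart:{user ID}, field = product ID, value = quantity):

Add a product: hset cart:1001 10088 1

Increase the quantity: hincrby cart:1001 10088 1

Number of distinct products in the cart: hlen cart:1001

Remove a product: hdel cart:1001 10088

Get all products in the cart: hgetall cart:1001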
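The cart commands map onto one hash per user, with product IDs as fields and quantities as values. The toy model below mirrors the replies of these commands. It is plain Java, not a Redis client; `ToyCartStore` is hypothetical, and so is the second product ID 10089 in the usage note.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Toy model of the cart hash commands (not a Redis client)
final class ToyCartStore {
    private final Map<String, Map<String, Long>> hashes = new HashMap<>();

    private Map<String, Long> hash(String key) {
        return hashes.computeIfAbsent(key, k -> new LinkedHashMap<>());
    }

    // HSET key field value: 1 if the field is new, 0 if it was overwritten
    int hset(String key, String field, long value) {
        return hash(key).put(field, value) == null ? 1 : 0;
    }

    // HINCRBY key field delta: a missing field starts at 0; returns the new value
    long hincrby(String key, String field, long delta) {
        return hash(key).merge(field, delta, Long::sum);
    }

    // HLEN key: number of fields, i.e. distinct products, not total quantity
    int hlen(String key) {
        return hash(key).size();
    }

    // HDEL key field: 1 if the field existed, 0 otherwise
    int hdel(String key, String field) {
        return hash(key).remove(field) != null ? 1 : 0;
    }

    // HGETALL key: a copy of all field/value pairs
    Map<String, Long> hgetall(String key) {
        return new LinkedHashMap<>(hash(key));
    }
}
```

Adding 10088, incrementing it once, and adding 10089 with quantity 3 leaves an HLEN of 2, even though the cart holds five items in total.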

- List

(1) Common data structures:

Stack = LPUSH + LPOP

Queue = LPUSH + RPOP

Blocking MQ = LPUSH + BRPOP
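Java's own deques show the same three idioms, with the head of each deque standing in for the left end of a Redis list. This is an analogy in plain Java rather than Redis code. `ListIdioms` is a hypothetical name, and `pollLast` with a timeout plays the role of BRPOP's timeout argument.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.BlockingDeque;
import java.util.concurrent.LinkedBlockingDeque;
import java.util.concurrent.TimeUnit;

// Plain-Java analogy (no Redis): deque head = left end of a Redis list, tail = right end
final class ListIdioms {
    private ListIdioms() {}

    // Stack = LPUSH + LPOP: push and pop at the same end (LIFO)
    static String stackDemo() {
        Deque<String> list = new ArrayDeque<>();
        list.offerFirst("a");          // LPUSH a
        list.offerFirst("b");          // LPUSH b
        list.offerFirst("c");          // LPUSH c
        return list.pollFirst();       // LPOP: the last element pushed
    }

    // Queue = LPUSH + RPOP: push at one end, pop at the other (FIFO)
    static String queueDemo() {
        Deque<String> list = new ArrayDeque<>();
        list.offerFirst("a");
        list.offerFirst("b");
        list.offerFirst("c");
        return list.pollLast();        // RPOP: the first element pushed
    }

    // Blocking MQ = LPUSH + BRPOP: the consumer waits until a producer pushes
    static String blockingDemo() {
        BlockingDeque<String> list = new LinkedBlockingDeque<>();
        Thread producer = new Thread(() -> {
            try {
                Thread.sleep(100);              // the producer arrives a little later
                list.offerFirst("job-1");       // LPUSH
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        try {
            // Like BRPOP key 5: wait up to 5 seconds; null on timeout
            String job = list.pollLast(5, TimeUnit.SECONDS);
            producer.join();
            return job;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }
}
```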

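(2) Weibo message feed (msg:18888 is the message list of user 18888):

1. MacTalk posts a message with ID 10018 --> LPUSH msg:18888 10018

2. 博猪二 posts a message with ID 10086 --> LPUSH msg:18888 10086

3. View the latest messages --> LRANGE msg:18888 0 5 (both indices are inclusive, so this returns up to six messages, newest first)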

- Set

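(1) WeChat lottery mini program

1. Click to join the draw, which adds the user to a set: SADD key {userID}

2. View all participants: SMEMBERS key

3. Draw count winners: SRANDMEMBER key [count] leaves the winners in the set, so they can win again in a later draw, while SPOP key [count] removes them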
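Both draw commands pick distinct random members when the count is positive. The difference lies in what happens to the set afterwards. `ToyLottery` below is a hypothetical in-memory model, not a Redis client. It uses a seeded `java.util.Random` only so that draws are reproducible.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Random;
import java.util.Set;

// Toy model (no Redis) of drawing winners from a set of participants
final class ToyLottery {
    final Set<String> participants = new LinkedHashSet<>();
    private final Random random;

    // Seeded so that draws are reproducible
    ToyLottery(long seed) {
        this.random = new Random(seed);
    }

    // SADD key userId
    void join(String userId) {
        participants.add(userId);
    }

    // SRANDMEMBER key count (count > 0): up to count distinct members; the set is unchanged
    List<String> srandmember(int count) {
        List<String> copy = new ArrayList<>(participants);
        Collections.shuffle(copy, random);
        return new ArrayList<>(copy.subList(0, Math.min(count, copy.size())));
    }

    // SPOP key count: the same kind of draw, but the winners are removed from the set
    List<String> spop(int count) {
        List<String> winners = srandmember(count);
        participants.removeAll(winners);
        return winners;
    }
}
```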

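(2) WeChat Moments (朋友圈) operations

1. Like: SADD like:{msg id} {user id}

2. Unlike: SREM like:{msg id} {user id}

3. Check whether a user has liked the post: SISMEMBER like:{msg id} {user id}

4. Get the list of users who liked it: SMEMBERS like:{msg id}

5. Get the number of likes: SCARD like:{msg id}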

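(3) Follow-relationship queries (zhugeSet, yangguoSet and so on hold the users that each person follows)

1. Common follows: SINTER zhugeSet yangguoSet

2. People I follow who also follow him (yangguo): SISMEMBER simaSet yangguo; SISMEMBER lubanSet yangguo

3. People I may know: SDIFF yangguoSet zhugeSet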
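The three queries are plain set algebra: `retainAll` corresponds to SINTER and `removeAll` to SDIFF. The sketch below works on `TreeSet` copies, so the inputs are left untouched and the results come out sorted. `FollowSets` is a hypothetical helper and no Redis is involved. The member names in the usage note are invented for illustration.

```java
import java.util.Set;
import java.util.TreeSet;

// Toy model (no Redis) of the set algebra behind the follow queries
final class FollowSets {
    private FollowSets() {}

    // SINTER a b: members present in both sets (common follows)
    static Set<String> sinter(Set<String> a, Set<String> b) {
        Set<String> result = new TreeSet<>(a);
        result.retainAll(b);
        return result;
    }

    // SDIFF a b: members of a that are not in b ("people you may know")
    static Set<String> sdiff(Set<String> a, Set<String> b) {
        Set<String> result = new TreeSet<>(a);
        result.removeAll(b);
        return result;
    }
}
```

Suppose zhuge follows {sima, luban, yangguo} and yangguo follows {sima, luban, guojing, xiaolongnv}. Then `sinter` gives [luban, sima], and `sdiff(yangguo, zhuge)` gives [guojing, xiaolongnv].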

- Zset

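(1) Leaderboard

1. A news item is clicked: ZINCRBY hotNews:20190819 1 守护地球

2. Show today's top ten: ZREVRANGE hotNews:20190819 0 9 WITHSCORES (both indices are inclusive)

3. Show the top ten for the past seven days: first merge the daily sets with ZUNIONSTORE hotNews:20190813-20190819 7 hotNews:20190813 hotNews:20190814 hotNews:20190815 hotNews:20190816 hotNews:20190817 hotNews:20190818 hotNews:20190819, then run ZREVRANGE hotNews:20190813-20190819 0 9 WITHSCORES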
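The leaderboard can be modelled in memory to check the orderings. ZINCRBY adds to a member's score, and ZUNIONSTORE sums scores across keys by default. ZREVRANGE lists members by descending score, with ties in reverse lexicographic order. `ToyLeaderboard` is a hypothetical class, not a Redis client, and its zrevrange accepts only non-negative indices.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model (no Redis) of the hot-news leaderboard
final class ToyLeaderboard {
    private final Map<String, Map<String, Double>> zsets = new HashMap<>();

    // ZINCRBY key increment member: returns the member's new score
    double zincrby(String key, double increment, String member) {
        return zsets.computeIfAbsent(key, k -> new HashMap<>())
                .merge(member, increment, Double::sum);
    }

    // ZUNIONSTORE dest numkeys key [key ...] with the default SUM aggregate
    void zunionstore(String dest, String... keys) {
        Map<String, Double> result = new HashMap<>();
        for (String key : keys) {
            zsets.getOrDefault(key, new HashMap<>())
                 .forEach((m, s) -> result.merge(m, s, Double::sum));
        }
        zsets.put(dest, result);
    }

    // ZREVRANGE key start stop: inclusive range over members sorted by descending score
    List<String> zrevrange(String key, int start, int stop) {
        Map<String, Double> z = zsets.getOrDefault(key, new HashMap<>());
        List<String> members = new ArrayList<>(z.keySet());
        // Highest score first; equal scores in reverse lexicographic order
        members.sort(Comparator.comparing((String m) -> z.get(m))
                .thenComparing(Comparator.<String>naturalOrder())
                .reversed());
        int end = Math.min(stop, members.size() - 1);
        if (start > end) return new ArrayList<>();
        return new ArrayList<>(members.subList(start, end + 1));
    }
}
```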

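Advanced topics

Heard that Redis runs into concurrency, cache avalanche and similar problems? I solved them in 10 minutes

2019 BATJ interview questions explained: MyBatis + MySQL + Spring + Redis + multithreading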

End.

浙公网安备 33010602011771号
