Chapter 2. Kafka Cluster Deployment

I. Environment Preparation

1.1 Cluster Planning

| broker0 | broker1 | broker2 |
| --- | --- | --- |
| zk | zk | zk |
| kafka | kafka | kafka |

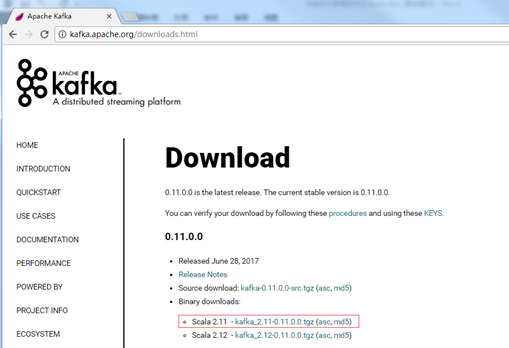
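The plan can be written out per host. The sketch below assumes the three IPs used in this chapter's commands. It also assumes that a host's position in the list is its broker number. The table does not state that mapping. `BROKERS` and `print_plan` are hypothetical names used only for illustration.

```shell
#!/usr/bin/env bash
# Assumed host list: these IPs appear in this chapter's commands, but mapping
# them to broker0..broker2 in this order is an assumption.
BROKERS=(192.168.86.132 192.168.86.133 192.168.86.134)

# Print the per-broker settings implied by the plan. broker.id is the
# position in the list, so every id is unique by construction.
print_plan() {
  local i
  for i in "${!BROKERS[@]}"; do
    printf 'broker%d %s broker.id=%d listeners=PLAINTEXT://%s:9092\n' \
      "$i" "${BROKERS[$i]}" "$i" "${BROKERS[$i]}"
  done
}

print_plan
```

Deriving `broker.id` from the list position means two brokers cannot end up with the same id by accident.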

1.2 Downloading the Kafka Package

http://kafka.apache.org/downloads.html

II. Kafka Cluster Deployment

- Extract the installation archive

tar -zxvf kafka_2.11-0.11.0.0.tgz -C /usr/local

- Rename the extracted directory

mv /usr/local/kafka_2.11-0.11.0.0/ /usr/local/kafka

- Create a logs directory under /opt/module/kafka (this is the path that log.dirs points to in server.properties)

mkdir -p /opt/module/kafka/logs
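The three steps above (extract, rename, create the log directory) can be wrapped in one function so that each broker is installed the same way. This is a minimal sketch. `install_kafka` is a hypothetical name, and the sketch assumes the archive's top-level directory matches the archive name without `.tgz`, as it does for `kafka_2.11-0.11.0.0.tgz`.

```shell
#!/usr/bin/env bash
# Sketch of the extract / rename / mkdir steps as one function.
# Defaults mirror this chapter: extract into /usr/local, logs in /opt/module/kafka/logs.
# Assumption: the archive unpacks into a directory named like the file minus ".tgz".
install_kafka() {
  local tgz=$1
  local prefix=${2:-/usr/local}
  local logdir=${3:-/opt/module/kafka/logs}
  local name
  name=$(basename "$tgz" .tgz)   # e.g. kafka_2.11-0.11.0.0

  # Refuse to run twice: mv would otherwise move the new tree *into* $prefix/kafka
  if [ -e "$prefix/kafka" ]; then
    echo "$prefix/kafka already exists; not overwriting" >&2
    return 1
  fi

  tar -zxf "$tgz" -C "$prefix"          # step 1: extract
  mv "$prefix/$name" "$prefix/kafka"    # step 2: rename to the version-less path
  mkdir -p "$logdir"                    # step 3: directory used by log.dirs
}
```

Usage on a broker: `install_kafka kafka_2.11-0.11.0.0.tgz`.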

- Edit the configuration file

# Back up the original file before editing

cp /usr/local/kafka/config/server.properties /usr/local/kafka/config/server.properties.bak

vim /usr/local/kafka/config/server.properties

Set the following content:

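# Globally unique broker number; must not be duplicated
broker.id=0
# Use each broker's own IP address
listeners=PLAINTEXT://192.168.86.134:9092
# Enable topic deletion
delete.topic.enable=true
# Number of threads that handle network requests
num.network.threads=3
# Number of threads that handle disk I/O
num.io.threads=8
# Send buffer size of the socket server
socket.send.buffer.bytes=102400
# Receive buffer size of the socket server
socket.receive.buffer.bytes=102400
# Maximum size of a request the socket server will accept
socket.request.max.bytes=104857600
# Directory where Kafka stores its message log (data) files
log.dirs=/opt/module/kafka/logs
# Default number of partitions per topic
num.partitions=1
# Threads per data directory used for log recovery at startup and flushing at shutdown
num.recovery.threads.per.data.dir=1
# Maximum time a segment file is retained before it is deleted
log.retention.hours=168
# ZooKeeper cluster connection string
zookeeper.connect=kafka01:2181,kafka02:2181,kafka03:2181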
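In a Java `.properties` file such as server.properties, `#` starts a comment only when it is the first non-blank character of a line. In a line like `listeners=PLAINTEXT://...:9092 #note`, the ` #note` becomes part of the value. The sketch below reports such lines before the broker is started. `find_inline_comments` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Report "lineno:text" for every non-comment line that still contains '#'.
# Java .properties only treats '#' or '!' as a comment at the start of a line,
# so anything matched here has a trailing "comment" glued onto its value.
# Exit status: 0 if such lines were found, 1 if the file is clean (grep semantics).
find_inline_comments() {
  grep -nE '^[[:space:]]*[^#![:space:]].*#' "$1"
}
```

Run `find_inline_comments /usr/local/kafka/config/server.properties` and move each reported comment onto its own line. A value that legitimately contains `#`, such as a password, would also be reported and can be ignored.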

- Once configured, copy the installation to each of the other brokers

scp -r /usr/local/kafka 192.168.86.133:/usr/local
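The copy has to be repeated for every other broker. A loop avoids retyping it. This is a sketch. `distribute` is a hypothetical function name, and the host list is supplied by the caller. With `DRY_RUN=1` the function only prints the `scp` commands, so the list can be checked before anything is copied.

```shell
#!/usr/bin/env bash
# Copy SRC to DEST on each given host with scp -r.
# With DRY_RUN=1 the commands are printed instead of executed.
distribute() {
  local src=$1 dest=$2
  shift 2
  local host
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp -r $src $host:$dest"
    else
      scp -r "$src" "$host:$dest"
    fi
  done
}
```

Example, assuming 192.168.86.132 and 192.168.86.133 are the other brokers: `distribute /usr/local/kafka /usr/local 192.168.86.132 192.168.86.133`. The same function can push kafka.service to `/lib/systemd/system/`.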

- Configure environment variables

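# KAFKA_HOME (configure this on every broker)

echo 'export KAFKA_HOME=/usr/local/kafka' >> /etc/profile

# Single quotes keep $PATH and $KAFKA_HOME unexpanded until /etc/profile is sourced
echo 'export PATH=$PATH:$KAFKA_HOME/bin' >> /etc/profile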

source /etc/profile
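Two details matter here. If the PATH line is written with double quotes, the current shell expands `$PATH` and `$KAFKA_HOME` when `echo` runs. Because KAFKA_HOME is not set yet at that point, the profile ends up with a frozen PATH plus `/bin`. Running the commands a second time also appends duplicate lines. The sketch below writes the lines literally and only once. `add_line_once` and `add_kafka_env` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Append a line to a file unless an identical line is already present.
add_line_once() {
  grep -qxF "$1" "$2" 2>/dev/null || echo "$1" >> "$2"
}

# Write the Kafka environment lines into a profile file (default /etc/profile).
# Single quotes are intentional: $PATH and $KAFKA_HOME must be expanded when
# the profile is sourced, not when this function runs.
add_kafka_env() {
  local profile=${1:-/etc/profile}
  add_line_once 'export KAFKA_HOME=/usr/local/kafka' "$profile"
  add_line_once 'export PATH=$PATH:$KAFKA_HOME/bin' "$profile"
}
```

Run `add_kafka_env` on each broker, then `source /etc/profile`.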

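- After installation, the remaining machines can be set up either by cloning the virtual machine or by distributing the files with a script.

Note: after distributing, remember to configure the environment variables on the other machines. On kafka02 and kafka03, edit /usr/local/kafka/config/server.properties and set broker.id=1 and broker.id=2 respectively, because broker.id values must not repeat. Also change listeners to each machine's own IP.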
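After copying, only `broker.id` and `listeners` need to change on each machine. They can be rewritten with `sed` instead of being edited by hand. This is a sketch. `set_broker_config` is a hypothetical name, it assumes port 9092 as in the configuration above, and it keeps a `.bak` copy of the file.

```shell
#!/usr/bin/env bash
# Rewrite broker.id and listeners in a copied server.properties.
# Usage: set_broker_config FILE BROKER_ID IP
# -i.bak keeps the previous version as FILE.bak (works with GNU and BSD sed).
set_broker_config() {
  local file=$1 id=$2 ip=$3
  sed -i.bak \
    -e "s/^broker\.id=.*/broker.id=${id}/" \
    -e "s|^listeners=.*|listeners=PLAINTEXT://${ip}:9092|" \
    "$file"
}
```

For example, on the second broker (IP assumed): `set_broker_config /usr/local/kafka/config/server.properties 1 192.168.86.133`.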

- Configure a systemd service for Kafka

cd /lib/systemd/system/

# Create the kafka service file

vim kafka.service

[Unit]

Description=broker(Apache Kafka server)

After=network.target zookeeper.service

[Service]

Type=simple

Environment="PATH=/usr/local/jdk/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin"

User=root

Group=root

ExecStart=/usr/local/kafka/bin/kafka-server-start.sh /usr/local/kafka/config/server.properties

ExecStop=/usr/local/kafka/bin/kafka-server-stop.sh

Restart=on-failure

[Install]

WantedBy=multi-user.target
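A unit with a wrong `ExecStart=` path only fails when systemd tries to start it. Before `systemctl daemon-reload`, a quick check that the programs named in the unit exist and are executable can catch typos. This is a sketch. `check_unit_exec` is a hypothetical name, and it does not handle systemd's special `-`, `@`, `+` or `!` prefixes on command lines.

```shell
#!/usr/bin/env bash
# Check that the program in every ExecStart=/ExecStop= line of a unit file
# exists and is executable. Prints one line per problem; returns 1 if any.
check_unit_exec() {
  local unit=$1 line prog status=0
  while IFS= read -r line; do
    case $line in
      ExecStart=*|ExecStop=*)
        prog=${line#*=}    # drop the "ExecStart=" / "ExecStop=" key
        prog=${prog%% *}   # keep the program path, drop its arguments
        if [ ! -x "$prog" ]; then
          echo "missing or not executable: $prog"
          status=1
        fi
        ;;
    esac
  done < "$unit"
  return "$status"
}
```

Usage: `check_unit_exec /lib/systemd/system/kafka.service`.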

# Reload systemd unit files
systemctl daemon-reload
# Enable the kafka service at boot
systemctl enable kafka
# Start kafka
systemctl start kafka
# Stop kafka
systemctl stop kafka
# Restart kafka
systemctl restart kafka
# Check the status of the kafka instance
systemctl status kafka

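Distribute the service file to each of the other brokers, then run systemctl daemon-reload on each of them: scp -r /lib/systemd/system/kafka.service 192.168.86.133:/lib/systemd/system/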

III. Kafka Command-Line Operations

- Common commands

# List topics
/usr/local/kafka/bin/kafka-topics.sh --zookeeper 192.168.86.132:2181,192.168.86.133:2181,192.168.86.134:2181 --list
# Create a topic
/usr/local/kafka/bin/kafka-topics.sh --zookeeper 192.168.86.132:2181,192.168.86.133:2181,192.168.86.134:2181 --create --replication-factor 2 --partitions 2 --topic first
# Delete a topic
/usr/local/kafka/bin/kafka-topics.sh --zookeeper 192.168.86.132:2181,192.168.86.133:2181,192.168.86.134:2181 --delete --topic first
# Send messages with the console producer
/usr/local/kafka/bin/kafka-console-producer.sh --broker-list 192.168.86.132:9092,192.168.86.133:9092,192.168.86.134:9092 --topic first
# Consume messages with the console consumer
/usr/local/kafka/bin/kafka-console-consumer.sh --bootstrap-server 192.168.86.132:9092,192.168.86.133:9092,192.168.86.134:9092 --topic first --from-beginning
# Show the details of a topic
/usr/local/kafka/bin/kafka-topics.sh --zookeeper 192.168.86.132:2181,192.168.86.133:2181,192.168.86.134:2181 --describe --topic first
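The ZooKeeper and broker lists in these commands are the same three hosts with different ports. Building them once keeps the command lines short. This is a sketch. `HOSTS`, `join_hosts`, `ZK` and `BOOTSTRAP` are hypothetical names, and the IPs are the ones used in the commands.

```shell
#!/usr/bin/env bash
# IPs taken from this chapter's commands.
HOSTS=(192.168.86.132 192.168.86.133 192.168.86.134)

# join_hosts PORT HOST... -> "HOST1:PORT,HOST2:PORT,..."
join_hosts() {
  local port=$1 out="" h
  shift
  for h in "$@"; do
    out+="${out:+,}$h:$port"   # add a comma only after the first entry
  done
  echo "$out"
}

ZK=$(join_hosts 2181 "${HOSTS[@]}")          # for --zookeeper
BOOTSTRAP=$(join_hosts 9092 "${HOSTS[@]}")   # for --broker-list / --bootstrap-server
```

The commands then become, for example, `/usr/local/kafka/bin/kafka-topics.sh --zookeeper "$ZK" --list` and `/usr/local/kafka/bin/kafka-console-consumer.sh --bootstrap-server "$BOOTSTRAP" --topic first --from-beginning`.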

Option descriptions:


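- --topic: the topic name

- --replication-factor: the number of replicas; it cannot exceed the number of brokers

- --partitions: the number of partitions

- --delete: requires delete.topic.enable=true in server.properties; otherwise the topic is only marked for deletion and is not actually removed until the setting is enabled and the brokers are restarted.

- --from-beginning: reads all existing data in the first topic from the start instead of only new messages. Whether to add it depends on the use case.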
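The rule that the replication factor cannot exceed the number of brokers can be checked before calling `kafka-topics.sh --create`. This is a sketch. `check_replication_factor` is a hypothetical name, and the broker count is passed in by hand rather than queried from the cluster.

```shell
#!/usr/bin/env bash
# Return 1 (with a message on stderr) if RF is below 1 or above the broker count.
# Usage: check_replication_factor RF BROKER_COUNT
check_replication_factor() {
  local rf=$1 brokers=$2
  if [ "$rf" -lt 1 ] || [ "$rf" -gt "$brokers" ]; then
    echo "replication-factor $rf is invalid for $brokers broker(s)" >&2
    return 1
  fi
}
```

For the three-broker cluster in this chapter: `check_replication_factor 2 3 && /usr/local/kafka/bin/kafka-topics.sh --zookeeper 192.168.86.132:2181 --create --replication-factor 2 --partitions 2 --topic first`.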