RKE High-Availability k8s Cluster: Installation and Implementation Guide

I. Basic Environment Requirements

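- Software environment: the table below lists the software needed to install a k8s cluster with rke.

| Software | Version |
| --- | --- |
| Operating system | CentOS 7.9 |
| Docker | 20.10.20 |
| k8s | 1.25.9 |
| rke | 1.4.5 |
| Docker Compose | v2.18.1 |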

- Hosts, IP addresses, and roles

| Hostname | IP address | Role |
| --- | --- | --- |
| master01 | 192.168.149.200 | Controlplane, rancher, rke |
| master02 | 192.168.149.201 | Controlplane |
| worker01 | 192.168.149.205 | Worker |
| worker02 | 192.168.149.206 | Worker |
| etcd01 | 192.168.149.210 | Etcd |

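- Hardware requirements

  - CPU: at least 2 cores; memory: at least 4 GB; disk: 100 GB or more
  - CPU-to-memory ratio (cores to GB): 1:2 or 1:4
  - Internet access is required
  - Swap must be disabled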
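The hardware requirements above can be checked on each host before installation. The following is a minimal sketch and not part of the original procedure: the function names `meets_minimum` and `preflight` are hypothetical, and the 3500 MB threshold is an assumption that leaves room for the memory a nominal 4 GB machine does not report as usable.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers; names and thresholds are assumptions.

# meets_minimum CORES MEM_MB SWAP_KB
# Succeeds with at least 2 cores, roughly 4 GB of RAM, and no active swap.
meets_minimum() {
  local cores=$1 mem_mb=$2 swap_kb=$3
  [ "$cores" -ge 2 ] && [ "$mem_mb" -ge 3500 ] && [ "$swap_kb" -eq 0 ]
}

# preflight: read the real values from the local host and report the result.
preflight() {
  local cores mem_mb swap_kb
  cores=$(nproc)
  mem_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
  swap_kb=$(awk '/^SwapTotal:/ {print $2}' /proc/meminfo)
  if meets_minimum "$cores" "$mem_mb" "$swap_kb"; then
    echo "OK: ${cores} cores, ${mem_mb} MB RAM, swap off"
  else
    echo "FAIL: ${cores} cores, ${mem_mb} MB RAM, swap ${swap_kb} kB"
    return 1
  fi
}
```

Sourcing the file and calling `preflight` on a host prints one status line; disk size and Internet access are not covered by this sketch.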

II. Basic Software Configuration

- Set the hostname on each cluster host (replace XXX with that host's name)

        # hostnamectl set-hostname XXX

- Configure a static IP address

        # vi /etc/sysconfig/network-scripts/ifcfg-ens33
        TYPE="Ethernet"
        PROXY_METHOD="none"
        BROWSER_ONLY="no"
        BOOTPROTO="none"
        DEFROUTE="yes"
        IPV4_FAILURE_FATAL="no"
        IPV6INIT="yes"
        IPV6_AUTOCONF="yes"
        IPV6_DEFROUTE="yes"
        IPV6_FAILURE_FATAL="no"
        IPV6_ADDR_GEN_MODE="stable-privacy"
        NAME="ens33"
        UUID="a80f81c1-928c-4fc8-83c1-73366be7d684"
        DEVICE="ens33"
        ONBOOT="yes"
        IPADDR="192.168.149.200"
        PREFIX="24"
        GATEWAY="192.168.149.2"
        DNS1="192.168.149.2"
        IPV6_PRIVACY="no"

- Map hostnames to IP addresses

        # vi /etc/hosts
        192.168.149.200 master01
        192.168.149.201 master02
        192.168.149.205 worker01
        192.168.149.206 worker02
        192.168.149.210 etcd01
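Editing `/etc/hosts` by hand on five machines is easy to get wrong, so the same entries can be added by a small script that is safe to run more than once. This is only a sketch: `add_host_entry` and `add_cluster_hosts` are hypothetical helper names, and the file path is passed as an argument so the function can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless some line already maps NAME.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# add_cluster_hosts FILE: add the five hosts from the table in section I.
add_cluster_hosts() {
  local file=$1 ip name
  while read -r ip name; do
    add_host_entry "$file" "$ip" "$name"
  done <<'EOF'
192.168.149.200 master01
192.168.149.201 master02
192.168.149.205 worker01
192.168.149.206 worker02
192.168.149.210 etcd01
EOF
}
```

Run as root, `add_cluster_hosts /etc/hosts` would add only the entries that are still missing.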

- Enable ip_forward and bridge traffic filtering

        # vi /etc/sysctl.conf
        net.ipv4.ip_forward=1
        net.bridge.bridge-nf-call-ip6tables=1
        net.bridge.bridge-nf-call-iptables=1
        # modprobe br_netfilter
        # sysctl -p
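A module loaded with `modprobe br_netfilter` is gone after a reboot, and the `net.bridge.*` keys only exist while that module is loaded. One way to make both persistent is a pair of drop-in files, sketched below. The helper name `write_k8s_kernel_config` and the file name `k8s.conf` are arbitrary choices made for this example; the function takes a root directory so it can be tried against a scratch directory before touching the real system.

```shell
#!/usr/bin/env bash
# write_k8s_kernel_config ROOT  (hypothetical helper)
# Writes the module-load and sysctl drop-ins under ROOT; pass / for the real system.
write_k8s_kernel_config() {
  local root=${1%/}
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # Ask systemd-modules-load to load br_netfilter at boot.
  echo "br_netfilter" > "$root/etc/modules-load.d/k8s.conf"
  # The same three keys as above, kept in their own drop-in file.
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

On a real host this would be followed by `modprobe br_netfilter` and `sysctl --system` to apply the settings immediately.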

- Disable the firewall

        # systemctl stop firewalld
        # systemctl disable firewalld
        # systemctl status firewalld
        # firewall-cmd --state

- Disable the swap partition

        # sed -ri 's/.*swap/#&/' /etc/fstab
        # swapoff -a
        # free -m
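The `sed` expression above comments out every line that contains the string swap, including lines that are already commented, so running it twice yields `##` prefixes. A slightly stricter variant is sketched here; `comment_swap_entries` is a hypothetical name, GNU sed is assumed (as on CentOS), and the file is an argument so the edit can be rehearsed on a copy of `/etc/fstab`.

```shell
#!/usr/bin/env bash
# comment_swap_entries FILE  (hypothetical helper, GNU sed assumed)
# Prefixes "#" to lines that contain a whitespace-delimited "swap" field,
# leaving lines that are already commented untouched.
comment_swap_entries() {
  sed -ri '/^[[:space:]]*#/! s/^.*[[:space:]]swap[[:space:]].*$/#&/' "$1"
}
```

For example, `cp /etc/fstab /tmp/fstab.test && comment_swap_entries /tmp/fstab.test` shows the effect without changing the live file.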

- Time synchronization

        # yum -y install ntpdate
        # crontab -e
        0 */1 * * * ntpdate ntp.aliyun.com
        # crontab -l

- Disable SELinux

        # sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
        # setenforce 0
        # sestatus

III. Docker Deployment

- Configure the Docker yum repository: the Tsinghua (TUNA) mirror is used here; do this on every cluster machine

If Docker was installed previously, remove it first.

        # yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine
        # yum install -y yum-utils device-mapper-persistent-data lvm2
        # yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
        # sed -i 's+https://download.docker.com+https://mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo
        # yum makecache fast

- Install Docker CE: do this on every cluster machine

        # yum install -y docker-ce-20.10.22 docker-ce-cli-20.10.22 containerd.io

- Enable and start Docker CE: do this on every cluster machine

        # systemctl enable docker
        # systemctl start docker
        # docker version

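- Configure a Docker registry mirror: do this on every cluster machine, then restart Docker so the change takes effect

        # vi /etc/docker/daemon.json
        {
          "registry-mirrors": ["https://81v7jdo5.mirror.aliyuncs.com"]
        }
        # systemctl restart docker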

- Install docker-compose:

On hosts without Internet access, download the binary from GitHub on another machine and upload it. Download URL: https://github.com/docker/compose/releases/download/v2.18.1/docker-compose-linux-x86_64

        # curl -L "https://github.com/docker/compose/releases/download/v2.18.1/docker-compose-linux-x86_64" -o /usr/local/bin/docker-compose
        # chmod +x /usr/local/bin/docker-compose
        # ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose
        # docker-compose --version

- Add the rancher user: create it on every cluster machine and add it to the docker group

        # useradd rancher
        # usermod -aG docker rancher
        # echo 123 | passwd --stdin rancher

- Generate an SSH key pair: do this on every cluster machine

        # ssh-keygen
        Generating public/private rsa key pair.
        Enter file in which to save the key (/root/.ssh/id_rsa):
        Created directory '/root/.ssh'.
        Enter passphrase (empty for no passphrase):
        Enter same passphrase again:
        Your identification has been saved in /root/.ssh/id_rsa.
        Your public key has been saved in /root/.ssh/id_rsa.pub.
        The key fingerprint is:
        SHA256:AgWS6I15+VqbrW70wI4qCP+G2r5hMbkqmq9lkbC66zQ root@master01
        The key's randomart image is:
        +---[RSA 2048]----+
        | ...... |
        |. .. . |
        |o + o |
        | * * . |
        |. B o . S |
        |o = * . |
        |+E*.* * |
        |=O++.= o |
        |&O*++o. |
        +----[SHA256]-----+

- Copy the public key to all hosts: do this only on the management machine

        # ssh-copy-id rancher@master01
        # ssh-copy-id rancher@master02
        # ssh-copy-id rancher@worker01
        # ssh-copy-id rancher@worker02
        # ssh-copy-id rancher@etcd01
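rke connects to every node over SSH as the rancher user and drives Docker there, which is why that user was added to the docker group. Both conditions can be verified before going further. The sketch below is an illustration only; `check_node` and `check_nodes` are hypothetical names, and the 5-second timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# check_node HOST  (hypothetical helper)
# Succeeds when key-based SSH as rancher works without a password prompt
# and that user can talk to the Docker daemon on HOST.
check_node() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "rancher@$1" docker ps >/dev/null 2>&1
}

# check_nodes HOST...: print one status line per host; fail if any host fails.
check_nodes() {
  local host rc=0
  for host in "$@"; do
    if check_node "$host"; then
      echo "$host ok"
    else
      echo "$host FAILED"
      rc=1
    fi
  done
  return "$rc"
}
```

On the management machine this would be called as `check_nodes master01 master02 worker01 worker02 etcd01`.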

- Download and install the rke tool

If this machine cannot download rke directly, fetch the binary manually from: https://github.com/rancher/rke/releases/download/v1.4.5/rke_linux-amd64

Kubernetes versions supported by this rke release: v1.25.9-rancher2-1 (default), v1.24.13-rancher2-1, v1.23.16-rancher2-2

        # wget https://github.com/rancher/rke/releases/download/v1.4.5/rke_linux-amd64
        # mv rke_linux-amd64 /usr/local/bin/rke
        # chmod +x /usr/local/bin/rke
        # ln -s /usr/local/bin/rke /usr/bin/rke
        # rke --version

- Generate the configuration file that rke uses to install the k8s cluster

        # mkdir -p /app/rancher
        # cd /app/rancher
        # rke config --name cluster.yml

The prompts and the answers given are shown below; the text after each # is an explanatory note, not input.

        [+] Cluster Level SSH Private Key Path [~/.ssh/id_rsa]:    # cluster private key path: ~/.ssh/id_rsa
        [+] Number of Hosts [1]: 3    # number of nodes in the cluster: 3
        [+] SSH Address of host (1) [none]: 192.168.149.200    # IP address of node 1
        [+] SSH Port of host (1) [22]: 22    # SSH port of node 1
        [+] SSH Private Key Path of host (192.168.149.200) [none]: ~/.ssh/id_rsa    # private key path for node 1
        [+] SSH User of host (192.168.149.200) [ubuntu]: rancher    # remote user name
        [+] Is host (192.168.149.200) a Control Plane host (y/n)? [y]: y    # control plane node: yes
        [+] Is host (192.168.149.200) a Worker host (y/n)? [n]: n    # worker node: no
        [+] Is host (192.168.149.200) an etcd host (y/n)? [n]: n    # etcd node: no
        [+] Override Hostname of host (192.168.149.200) [none]:    # do not override the hostname; press Enter
        [+] Internal IP of host (192.168.149.200) [none]:    # internal (LAN) address; unchanged, press Enter
        [+] Docker socket path on host (192.168.149.200) [/var/run/docker.sock]: /var/run/docker.sock    # path to docker.sock on the host
        [+] SSH Address of host (2) [none]: 192.168.149.205    # IP address of node 2
        [+] SSH Port of host (2) [22]: 22    # SSH port of node 2
        [+] SSH Private Key Path of host (192.168.149.205) [none]: ~/.ssh/id_rsa    # private key path for node 2
        [+] SSH User of host (192.168.149.205) [ubuntu]: rancher    # remote user name for node 2
        [+] Is host (192.168.149.205) a Control Plane host (y/n)? [y]: n    # control plane node: no
        [+] Is host (192.168.149.205) a Worker host (y/n)? [n]: y    # worker node: yes
        [+] Is host (192.168.149.205) an etcd host (y/n)? [n]: n    # etcd node: no
        [+] Override Hostname of host (192.168.149.205) [none]:    # do not override the hostname; press Enter
        [+] Internal IP of host (192.168.149.205) [none]:    # internal (LAN) address; unchanged, press Enter
        [+] Docker socket path on host (192.168.149.205) [/var/run/docker.sock]: /var/run/docker.sock    # path to docker.sock on the host
        [+] SSH Address of host (3) [none]: 192.168.149.210    # IP address of node 3
        [+] SSH Port of host (3) [22]: 22    # SSH port of node 3
        [+] SSH Private Key Path of host (192.168.149.210) [none]: ~/.ssh/id_rsa    # private key path for node 3
        [+] SSH User of host (192.168.149.210) [ubuntu]: rancher    # remote user name for node 3
        [+] Is host (192.168.149.210) a Control Plane host (y/n)? [y]: n    # control plane node: no
        [+] Is host (192.168.149.210) a Worker host (y/n)? [n]: n    # worker node: no
        [+] Is host (192.168.149.210) an etcd host (y/n)? [n]: y    # etcd node: yes
        [+] Override Hostname of host (192.168.149.210) [none]:    # do not override the hostname; press Enter
        [+] Internal IP of host (192.168.149.210) [none]:    # internal (LAN) address; unchanged, press Enter
        [+] Docker socket path on host (192.168.149.210) [/var/run/docker.sock]: /var/run/docker.sock    # path to docker.sock on the host
        [+] Network Plugin Type (flannel, calico, weave, canal, aci) [canal]: calico    # network plugin: your choice; calico is used here
        [+] Authentication Strategy [x509]:    # authentication strategy: x509
        [+] Authorization Mode (rbac, none) [rbac]: rbac    # authorization mode: rbac
        [+] Kubernetes Docker image [rancher/hyperkube:v1.25.9-rancher2]: rancher/hyperkube:v1.25.9-rancher2    # Docker image used by the k8s cluster
        [+] Cluster domain [cluster.local]: sbcinfo.com    # cluster domain; the default also works
        [+] Service Cluster IP Range [10.43.0.0/16]:    # Service cluster IP range: default
        [+] Enable PodSecurityPolicy [n]:    # enable the pod security policy: n
        [+] Cluster Network CIDR [10.42.0.0/16]:    # pod network CIDR: default
        [+] Cluster DNS Service IP [10.43.0.10]:    # cluster DNS service IP: default
        [+] Add addon manifest URLs or YAML files [no]:    # addon manifest URLs or YAML files: press Enter for the default, or enter no

IV. k8s Cluster Deployment

1. Deploy the cluster

Run rke up from the directory that contains cluster.yml (/app/rancher here).

        # rke up

2. Install the kubectl client

kubectl v1.27.2 is used here.

It can also be downloaded with: curl -LO https://dl.k8s.io/release/v1.27.2/bin/linux/amd64/kubectl

        # wget https://storage.googleapis.com/kubernetes-release/release/v1.27.2/bin/linux/amd64/kubectl
        # chmod +x kubectl
        # mv kubectl /usr/local/bin
        # kubectl version --client

3. Configure kubectl and verify the cluster

Creating the cluster produces two files: cluster.rkestate, the cluster state file, and kube_config_cluster.yml, the kubeconfig file used to access the cluster.

        [root@master01 rancher]# ll
        total 132
        -rw------- 1 root root 108380 May 27 12:19 cluster.rkestate
        -rw-r----- 1 root root   6579 May 27 12:09 cluster.yml
        -rw------- 1 root root   5504 May 27 12:16 kube_config_cluster.yml

Copy the kubeconfig file to the default location that kubectl reads, then query the cluster:

        # ls /app/rancher
        # mkdir -p /root/.kube
        # cp /app/rancher/kube_config_cluster.yml /root/.kube/config
        [root@master01 ~]# kubectl get nodes
        NAME            STATUS   ROLES          AGE   VERSION
        192.168.3.100   Ready    controlplane   38m   v1.25.9
        192.168.3.105   Ready    worker         38m   v1.25.9
        192.168.3.110   Ready    etcd           38m   v1.25.9
        [root@master01 ~]# kubectl get pods -n kube-system
        NAME                                       READY   STATUS      RESTARTS   AGE
        calico-kube-controllers-5b5d9f577c-lsnk8   1/1     Running     0          38m
        calico-node-gb9zt                          1/1     Running     0          38m
        calico-node-sk4vg                          1/1     Running     0          38m
        calico-node-whfzz                          1/1     Running     0          38m
        coredns-autoscaler-74d474f45c-g6sf6        1/1     Running     0          38m
        coredns-dfb7f8fd4-smpc4                    1/1     Running     0          38m
        metrics-server-c47f7c9bb-98th2             1/1     Running     0          38m
        rke-coredns-addon-deploy-job-rm8bt         0/1     Completed   0          38m
        rke-ingress-controller-deploy-job-v2x6d    0/1     Completed   0          38m
        rke-metrics-addon-deploy-job-m8lq2         0/1     Completed   0          38m
        rke-network-plugin-deploy-job-nm6kk        0/1     Completed   0          38m
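Reading the STATUS column by eye works for three nodes, but a scripted check is handy when the verification is repeated after every change. The sketch below counts nodes that are not ready; `count_not_ready` is a hypothetical helper that only parses text, so it makes no assumptions about the cluster beyond the column layout shown above.

```shell
#!/usr/bin/env bash
# count_not_ready  (hypothetical helper)
# Reads `kubectl get nodes --no-headers` output on stdin and prints how many
# nodes have a STATUS other than exactly "Ready". A cordoned node
# ("Ready,SchedulingDisabled") is therefore counted as not ready.
count_not_ready() {
  awk 'NF && $2 != "Ready" { n++ } END { print n + 0 }'
}
```

Typical use would be `kubectl get nodes --no-headers | count_not_ready`, treating any result other than 0 as a reason to inspect the cluster.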

4. Start Rancher with docker run

        # docker run -d --privileged -p 80:80 -p 443:443 -v /opt/data/rancher_data:/var/lib/rancher --restart=always --name rancher-2-7-0 rancher/rancher:v2.7.0
        # docker ps
        # ss -anput | grep ":80"
        tcp LISTEN 0 128 *:80 *:* users:(("docker-proxy",pid=59286,fd=4))
        tcp LISTEN 0 128 [::]:80 [::]:* users:(("docker-proxy",pid=59294,fd=4))

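4.1 Set the web login password: look up the bootstrap password in the Docker logs, then set your own password in the web UI

General form, followed by the actual command and its output:

        # docker logs container-id 2>&1 | grep "Bootstrap Password:"
        # docker logs 9190a38d7627 2>&1 | grep "Bootstrap Password:"
        2023/05/27 05:13:05 [INFO] Bootstrap Password: wknrt6mjxf8k8xgk7p5fcks66r8smhr9f7vqkmtsnzrrxx46skxc9b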
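The `grep` above returns the whole log line, so the password still has to be copied out of it by hand. A small filter can print just the password. This is a sketch under the assumption that the log line keeps the format shown above; `extract_bootstrap_password` is a hypothetical name.

```shell
#!/usr/bin/env bash
# extract_bootstrap_password  (hypothetical helper)
# Reads Rancher container logs on stdin and prints only the password
# from the first line that contains "Bootstrap Password:".
extract_bootstrap_password() {
  sed -n 's/.*Bootstrap Password: *//p' | head -n 1
}
```

With the container name used in the `docker run` command above, this would be called as `docker logs rancher-2-7-0 2>&1 | extract_bootstrap_password`.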

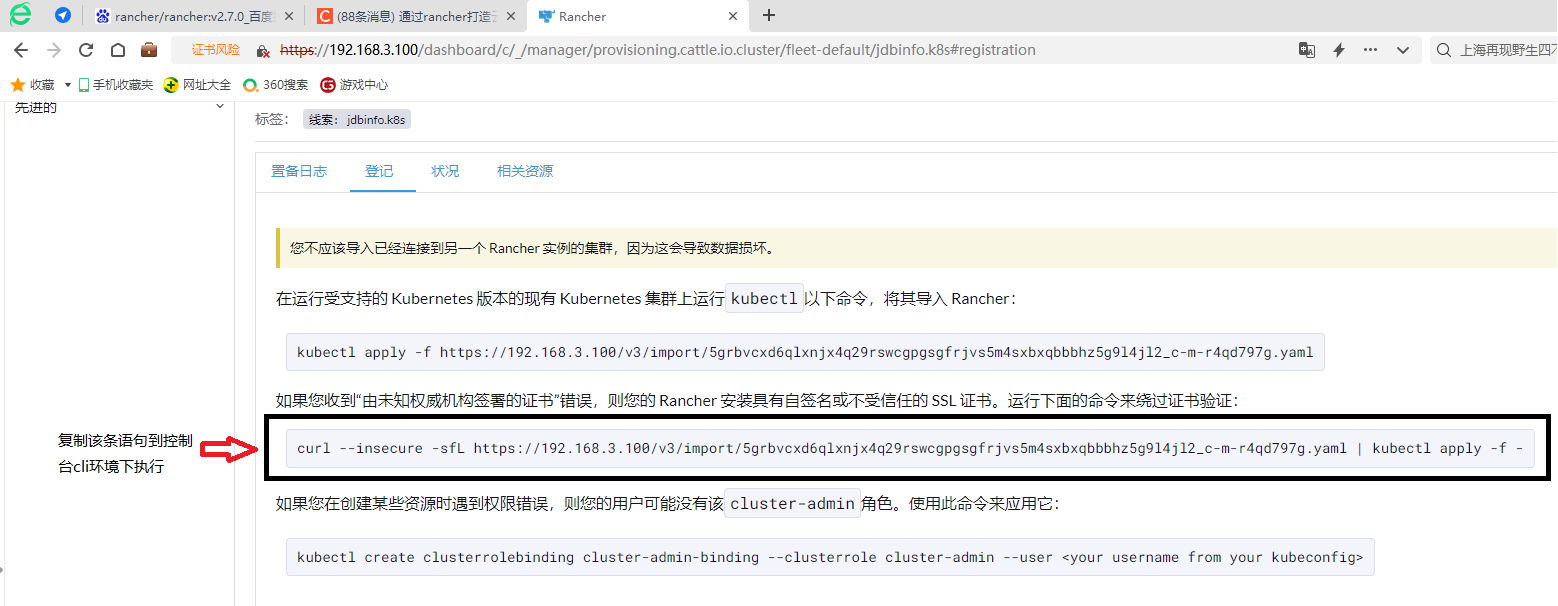

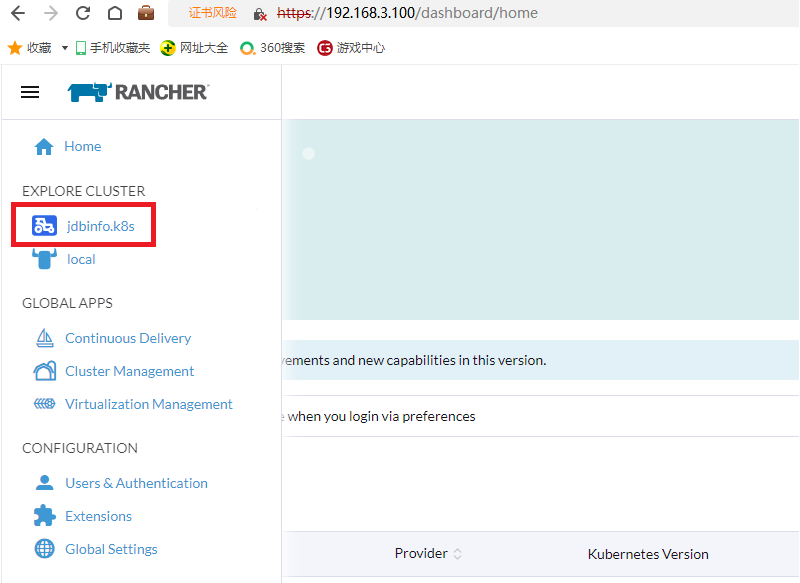

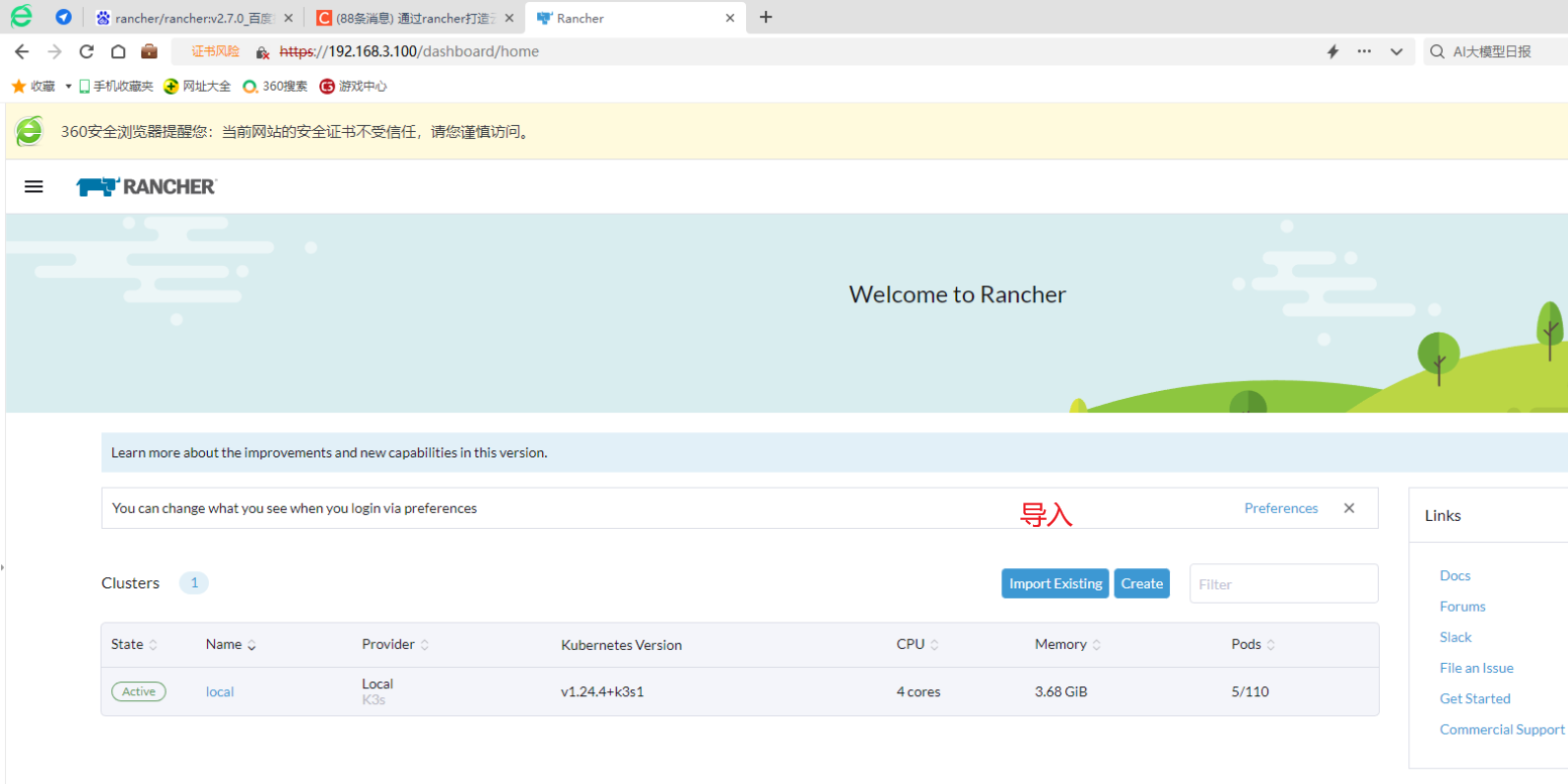

4.2 After logging in to the web UI, import the cluster

        # curl --insecure -sfL https://192.168.3.100/v3/import/5grbvcxd6qlxnjx4q29rswcgpgsgfrjvs5m4sxbxqbbbhz5g9l4jl2_c-m-r4qd797g.yaml | kubectl apply -f -
        # kubectl get ns

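5. Add a worker node

Repeat the preparation steps from sections II and III on the new host, then update the cluster:

① Set the hostname

② Configure the IP address

③ Configure hostname-to-IP resolution

④ Enable ip_forward and bridge traffic filtering

⑤ Disable the firewall

⑥ Disable SELinux

⑦ Disable the swap partition

⑧ Configure the Docker repository

⑨ Install and start Docker

⑩ Configure the Docker registry mirror

⑪ Install Docker Compose

⑫ Add the rancher user and add it to the docker group

⑬ Copy the SSH public key to the new machine and verify the login

⑭ Edit cluster.yml

⑮ Update the cluster with rke up --update-only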
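For step ⑭, the new host needs its own item under the `nodes:` list in cluster.yml. The sketch below prints such an item using the same user and key path as the existing nodes. `print_worker_node` is a hypothetical helper, and the address used in the example call (192.168.149.207) is an invented placeholder, not a host from this document.

```shell
#!/usr/bin/env bash
# print_worker_node ADDRESS  (hypothetical helper)
# Prints a cluster.yml list item for a worker node, to be pasted under
# the existing "nodes:" key with the same indentation as its siblings.
print_worker_node() {
  cat <<EOF
- address: $1
  port: "22"
  role:
  - worker
  user: rancher
  ssh_key_path: ~/.ssh/id_rsa
EOF
}

# Example with a placeholder address:
# print_worker_node 192.168.149.207
```

After the item is added to cluster.yml, `rke up --update-only` from step ⑮ applies the change to the running cluster.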

6. Deploy a test project to verify the cluster

        # vi nginx.yml
        apiVersion: apps/v1
        kind: Deployment
        metadata:
          name: nginx-test
        spec:
          selector:
            matchLabels:
              app: nginx
              env: test
              owner: rancher
          replicas: 2
          template:
            metadata:
              labels:
                app: nginx
                env: test
                owner: rancher
            spec:
              containers:
              - name: nginx-test
                image: nginx:1.19.9
                ports:
                - containerPort: 80

        # kubectl apply -f nginx.yml

        # vi nginx-service.yml
        apiVersion: v1
        kind: Service
        metadata:
          name: nginx-test
          labels:
            run: nginx
        spec:
          type: NodePort
          ports:
          - port: 80
            protocol: TCP
          selector:
            owner: rancher

        # kubectl apply -f nginx-service.yml

        # kubectl get pods -o wide
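Listing the pods shows that they are running, but not that the NodePort Service actually answers. Because the Service above does not pin a `nodePort`, Kubernetes assigns one, and it has to be looked up before testing. The sketch below builds the URL to test; `nginx_url` is a hypothetical helper, and the Service name `nginx-test` comes from the manifest above.

```shell
#!/usr/bin/env bash
# nginx_url NODE_IP  (hypothetical helper)
# Looks up the node port assigned to the nginx-test Service and prints
# the URL at which the Service should answer on the given node.
nginx_url() {
  local port
  port=$(kubectl get service nginx-test -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  echo "http://$1:${port}/"
}
```

For example, `curl -sI "$(nginx_url 192.168.149.205)"` should return an HTTP response from nginx if the Deployment and Service are working.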
