2020寒假生活学习日记(十五)

后来在用JAVA爬取北京信件内容过程中出现好多问题。

我该用python爬取。

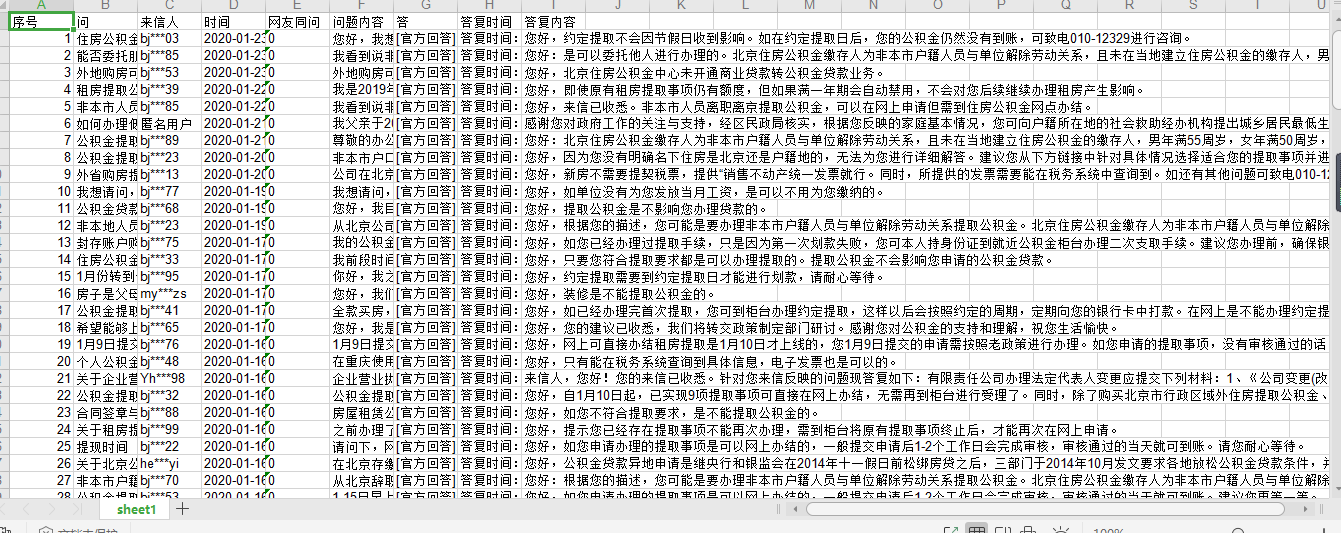

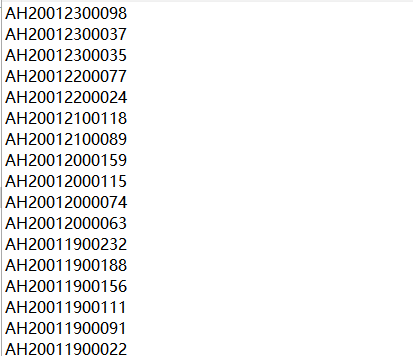

这个是我爬取出来的各个信件网址的后缀即(http://www.beijing.gov.cn/hudong/hdjl/com.web.suggest.suggesDetail.flow?originalId=AH20021200370)

然后编写代码:

import requests

import re

import xlwt

# #https://flightaware.com/live/flight/CCA101/history/80

url = 'http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId=AH20021300174'

headers = {

"user-agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36"

}

def get_page(url):

try:

response = requests.get(url, headers=headers)

if response.status_code == 200:

#print('获取网页成功')

return response.text

else:

print('获取网页失败')

except Exception as e:

print(e)

fopen = open('C:\\Users\\hp\\Desktop\\list.txt', 'r')//这个是存取信件内容网址后缀

lines = fopen.readlines()

urls = ['http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId={}'.format(line) for line in lines]

for url in urls:

print(url)

page = get_page(url)

items = re.findall('',page,re.S)

print(items)

print(len(items))

但是在用正则法爬取内容的时候出现了一些问题。

修改之后源代码:

我发现爬不出去的原因并不是正则表达式有错误,而是在遍历网址出现了换行符。所以网址本身就是不正确的。所以在url后面加上.replace("\n", ""),就OK了。

import requests

import re

import xlwt

# #https://flightaware.com/live/flight/CCA101/history/80

url = 'http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId=AH20021300174'

headers = {

"user-agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/77.0.3865.90 Safari/537.36"

}

def get_page(url):

try:

response = requests.get(url, headers=headers)

if response.status_code == 200:

print('获取网页成功')

return response.text

else:

print('获取网页失败')

except Exception as e:

print(e)

f = xlwt.Workbook(encoding='utf-8')

sheet01 = f.add_sheet(u'sheet1', cell_overwrite_ok=True)

sheet01.write(0, 0, '序号') # 第一行第一列

sheet01.write(0, 1, '问') # 第一行第二列

sheet01.write(0, 2, '来信人') # 第一行第三列

sheet01.write(0, 3, '时间') # 第一行第四列

sheet01.write(0, 4, '网友同问') # 第一行第五列

sheet01.write(0, 5, '问题内容') # 第一行第六列

sheet01.write(0, 6, '答') # 第一行第七列

sheet01.write(0, 7, '答复时间') # 第一行第八列

sheet01.write(0, 8, '答复内容') # 第一行第九列

fopen = open('C:\\Users\\hp\\Desktop\\list2.txt', 'r')

lines = fopen.readlines()

temp=0

urls = ['http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId={}'.format(line) for line in lines]

for url in urls:

print(url.replace("\n", ""))

page = get_page(url.replace("\n", ""))

items = re.findall('<div class="col-xs-10 col-sm-10 col-md-10 o-font4 my-2"><strong>(.*?)</strong></div>.*?<div class="col-xs-10 col-lg-3 col-sm-3 col-md-4 text-muted ">(.*?)</div>.*?<div class="col-xs-5 col-lg-3 col-sm-3 col-md-3 text-muted ">(.*?)</div>.*?<div class="col-xs-4 col-lg-3 col-sm-3 col-md-3 text-muted ">(.*?)<label.*?">(.*?)</label>.*? <div class="col-xs-12 col-md-12 column p-2 text-muted mx-2">(.*?)</div>.*?<div class="col-xs-9 col-sm-7 col-md-5 o-font4 my-2">.*?<strong>(.*?)</div>.*?<div class="col-xs-12 col-sm-3 col-md-3 my-2 ">(.*?)</div>.*?<div class="col-xs-12 col-md-12 column p-4 text-muted my-3">(.*?)</div>',page,re.S)

print(items)

print(len(items))

for i in range(len(items)):

sheet01.write(temp + i + 1, 0,temp+1)

sheet01.write(temp + i + 1, 1,items[i][0].replace( ' ','').replace(' ',''))

sheet01.write(temp + i + 1, 2, items[i][1].replace('来信人:\r\n\t \t\r\n\t \t','').replace( '\r\n \t\r\n \t\r\n ',''))

sheet01.write(temp + i + 1, 3, items[i][2].replace('时间:',''))

sheet01.write(temp + i + 1, 4, items[i][4].replace('\r\n\t\t \t\r\n\t \t','').replace('\r\n\t \r\n\t \r\n ',''))

sheet01.write(temp + i + 1, 5,items[i][5].replace('\r\n\t \t', '').replace('\r\n\t ', '').replace('<p>','').replace(' ','').replace(' ','').replace(' ','').replace(' ',''))

sheet01.write(temp + i + 1, 6,items[i][6].replace('</strong>', ''))

sheet01.write(temp + i + 1, 7,items[i][7])

sheet01.write(temp + i + 1, 8, items[i][8].replace('\r\n\t\t\t\t\t\t\t\t','').replace(' ','').replace('\r\n\t\t\t\t\t\t\t','').replace('<p>','').replace(' ','').replace(' ','').replace(' ',''))

temp+=len(items)

print("总爬取完毕数量:"+str(temp))

print("打印完!!!")

f.save('letter.xls')