LogisticRegression Algorithm: Reading Notes on Machine Learning (the "Watermelon Book")

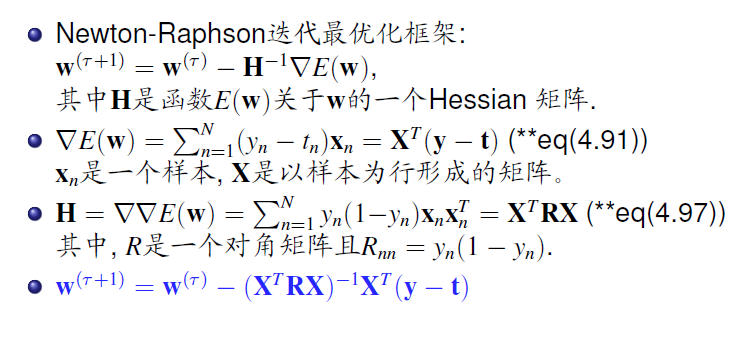

```python
import numpy as np
from numpy.linalg import inv  # numpy.linalg is NumPy's linear-algebra package; inv inverts a matrix
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression


# Sigmoid function
def sigmoid(x):
    return 1.0 / (1 + np.exp(-x))


# Gradient of the cross-entropy error: Phi^T (y - t)
def gradient(t, y, phi):
    return phi.T @ (y - t)


# Hessian of the cross-entropy error: Phi^T R Phi with R = diag(y_n * (1 - y_n))
def Hessian(t, y, phi):
    R = np.diagflat(y * (1 - y))
    return phi.T @ R @ phi


# Newton-Raphson iteration
def Newton_Raphson(t, w, phi, n_iter=100):
    for _ in range(n_iter):
        y = sigmoid(phi @ w)
        grad = gradient(t, y, phi)
        H = Hessian(t, y, phi)
        # The small ridge term 0.0001 * I keeps H invertible
        w = w - inv(H + 0.0001 * np.eye(H.shape[0])) @ grad
    return w


# Test the algorithm on an example: sklearn's breast cancer dataset
cancer = load_breast_cancer()
# Inspect the keys of the returned dataset object
print(cancer.keys())

# Standardize each feature to zero mean and unit variance
phi = np.asarray(cancer.data)
phi = (phi - np.mean(phi, axis=0)) / np.std(phi, axis=0)
t = cancer.target.reshape(-1, 1)  # targets as a column vector

# Split the data: first 200 samples for training, all remaining samples for testing
phi_train, t_train = phi[:200], t[:200]
phi_test, t_test = phi[200:], t[200:]

# Append a bias column of ones
phi_train_b = np.c_[phi_train, np.ones(len(phi_train))]
phi_test_b = np.c_[phi_test, np.ones(len(phi_test))]

# Initialize the weights
np.random.seed(666)  # fix the seed so the random initialization is reproducible
w = np.random.normal(0, 0.01, (phi_train_b.shape[1], 1))
W = Newton_Raphson(t_train, w, phi_train_b)

# Fraction of training samples predicted correctly
y_pred = sigmoid(phi_train_b @ W)
t_pred = np.where(y_pred > 0.5, 1, 0)
accuracy_train = np.mean(t_train == t_pred)
print('The accuracy of train set is:', accuracy_train)

# Fraction of test samples predicted correctly
y_pred = sigmoid(phi_test_b @ W)
t_pred = np.where(y_pred > 0.5, 1, 0)
accuracy_test = np.mean(t_test == t_pred)
print('The accuracy of test set is:', accuracy_test)

# Compare with sklearn's LogisticRegression (newton-cg solver); note that it
# applies L2 regularization by default, so its accuracy need not match exactly
model = LogisticRegression(solver='newton-cg')
model.fit(phi_train_b, t_train.ravel())
y_pred = model.predict(phi_test_b)
acc = np.mean(t_test == y_pred.reshape([-1, 1]))
print(acc)
```
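For reference, `gradient`, `Hessian` and `Newton_Raphson` implement the standard Newton step for the cross-entropy (negative log-likelihood) error of logistic regression. In the code's notation, Φ is the design matrix with the bias column appended, t holds the 0/1 targets, and y_n = σ(wᵀφ_n); λ = 10⁻⁴ is the small ridge term the code adds to H. The matrix R comes from the sigmoid derivative σ'(a) = σ(a)(1 − σ(a)).

```latex
\begin{aligned}
E(\mathbf{w}) &= -\sum_{n=1}^{N}\bigl[t_n \ln y_n + (1-t_n)\ln(1-y_n)\bigr],
\qquad y_n = \sigma(\mathbf{w}^\top\boldsymbol{\phi}_n) \\
\nabla E(\mathbf{w}) &= \Phi^\top(\mathbf{y}-\mathbf{t}) \\
H &= \nabla\nabla E(\mathbf{w}) = \Phi^\top R\,\Phi,
\qquad R = \operatorname{diag}\bigl(y_n(1-y_n)\bigr) \\
\mathbf{w}^{\text{new}} &= \mathbf{w}^{\text{old}} - (H + \lambda I)^{-1}\,\nabla E(\mathbf{w}^{\text{old}}),
\qquad \lambda = 10^{-4}
\end{aligned}
```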
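One way to convince yourself that `gradient` and `Hessian` use the right formulas is a finite-difference check. The sketch below is not part of the original notes: `cross_entropy`, `analytic_grad`, `analytic_hessian`, `numeric_grad`, `numeric_hessian` and the random toy data are hypothetical names introduced only for illustration. It compares Φᵀ(y − t) and ΦᵀRΦ against central differences of the cross-entropy error.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1 + np.exp(-x))


def cross_entropy(w, phi, t):
    # E(w) = -sum[t ln y + (1 - t) ln(1 - y)], with y = sigmoid(phi @ w)
    y = sigmoid(phi @ w)
    return -np.sum(t * np.log(y) + (1 - t) * np.log(1 - y))


def analytic_grad(w, phi, t):
    # Phi^T (y - t), the same formula as `gradient` in the notes
    return phi.T @ (sigmoid(phi @ w) - t)


def analytic_hessian(w, phi):
    # Phi^T R Phi with R = diag(y_n (1 - y_n)), the same formula as `Hessian`
    y = sigmoid(phi @ w)
    return phi.T @ np.diagflat(y * (1 - y)) @ phi


def numeric_grad(f, w, eps=1e-6):
    # Central finite differences of a scalar function, one coordinate at a time
    g = np.zeros_like(w)
    for i in range(w.shape[0]):
        e = np.zeros_like(w)
        e[i] = eps
        g[i] = (f(w + e) - f(w - e)) / (2 * eps)
    return g


def numeric_hessian(grad_fn, w, eps=1e-6):
    # Column i: central difference of the gradient along coordinate i
    d = w.shape[0]
    H = np.zeros((d, d))
    for i in range(d):
        e = np.zeros_like(w)
        e[i] = eps
        H[:, i] = ((grad_fn(w + e) - grad_fn(w - e)) / (2 * eps)).ravel()
    return H


# Hypothetical toy data: 20 samples, 3 features plus a bias column of ones
rng = np.random.default_rng(0)
phi = np.c_[rng.normal(size=(20, 3)), np.ones(20)]
t = rng.integers(0, 2, size=(20, 1)).astype(float)
w = rng.normal(scale=0.5, size=(4, 1))

g_num = numeric_grad(lambda v: cross_entropy(v, phi, t), w)
H_num = numeric_hessian(lambda v: analytic_grad(v, phi, t), w)

grad_ok = np.allclose(g_num, analytic_grad(w, phi, t), atol=1e-5)
hess_ok = np.allclose(H_num, analytic_hessian(w, phi), atol=1e-5)
print(grad_ok, hess_ok)  # expected: True True
```

Central differences are used because their truncation error shrinks with eps², so a fairly small eps gives agreement well within the tolerance. Since R has non-negative diagonal entries, ΦᵀRΦ is also positive semidefinite, which is why the Newton step is a descent direction for this convex error.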

Zhejiang Public Network Security Filing No. 33010602011771

浙公网安备 33010602011771号