Hadoop Development Cycle (Part 2): Writing the Mapper and Reducer Programs

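Writing a simple MapReduce program generally takes the following three steps:

1) Implement the Mapper, which processes the input key-value pairs and emits intermediate results;

2) Implement the Reducer, which aggregates the intermediate results and emits the final results;

3) Define the job in the main method and configure there how the job runs.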
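
As a rough illustration of how these three steps fit together, the following plain-Java sketch runs word count entirely in memory, with no Hadoop dependency. The class name LocalWordCountSketch and its methods are hypothetical; in particular, the shuffle method only imitates the grouping by key that the framework performs between the map and reduce phases.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical stand-in for the framework: runs map, shuffle, and reduce in memory.
class LocalWordCountSketch {

    // Map step: split one line into words and emit a (word, 1) pair for each.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line);
        while (itr.hasMoreTokens()) {
            pairs.add(new AbstractMap.SimpleEntry<>(itr.nextToken(), 1));
        }
        return pairs;
    }

    // Shuffle step: group the emitted values by key, with keys in sorted order.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce step: sum the values collected for one key.
    static int reduce(List<Integer> values) {
        int sum = 0;
        for (int v : values) {
            sum += v;
        }
        return sum;
    }

    // Driver: feed every line through map, then shuffle, then reduce.
    static Map<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(pairs).entrySet()) {
            counts.put(group.getKey(), reduce(group.getValue()));
        }
        return counts;
    }

    public static void main(String[] args) {
        // prints {hadoop=1, hello=2, world=1}
        System.out.println(run(Arrays.asList("hello world", "hello hadoop")));
    }
}
```

In a real job, the map and reduce bodies live in separate classes and the framework takes care of the grouping, the sorting, and the distribution across machines.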

This article demonstrates basic MapReduce programming through an example: word count.

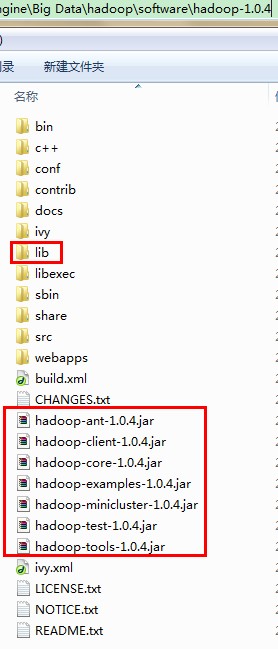

0 Import the Hadoop JAR files

Import the JAR files found in the Hadoop directory and in its lib directory.

1 Write the Mapper class

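Mapper is a generic class with four formal type parameters, which specify the input key, input value, output key, and output value types of the map function. In the word-count example the input key is not used: it is the position (byte offset) of the line within the file, which this job does not need, so the mapper ignores it. The input value is one line of text, the output key is a word, and the output value is the number of occurrences of that word (always 1 at this stage).

Instead of the built-in Java types, Hadoop provides its own set of basic types optimized for network serialization. They are defined in the org.apache.hadoop.io package: the Text type used here corresponds to Java's String, and IntWritable corresponds to Java's Integer.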
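
To make the serialization point more concrete, here is a minimal sketch of a type that follows the same write/readFields pattern as Hadoop's Writable types. IntBox and its helper methods are hypothetical and use only java.io, so this is not the actual IntWritable source; it just shows that such a value serializes to the 4 raw bytes of the int and that one object can be refilled repeatedly.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical class imitating the write/readFields contract of a Writable.
class IntBox {
    private int value;

    IntBox() { }

    IntBox(int value) {
        this.value = value;
    }

    int get() {
        return value;
    }

    // Serialize: only the 4 bytes of the int, with no class metadata.
    void write(DataOutput out) throws IOException {
        out.writeInt(value);
    }

    // Deserialize into this existing object, so one instance can be reused.
    void readFields(DataInput in) throws IOException {
        value = in.readInt();
    }

    // Helper: serialize a box into a byte array.
    static byte[] toBytes(IntBox box) {
        try {
            ByteArrayOutputStream buffer = new ByteArrayOutputStream();
            box.write(new DataOutputStream(buffer));
            return buffer.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Helper: overwrite the contents of an existing box from a byte array.
    static void fill(IntBox target, byte[] bytes) {
        try {
            target.readFields(new DataInputStream(new ByteArrayInputStream(bytes)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] bytes = toBytes(new IntBox(42));
        IntBox reused = new IntBox();
        fill(reused, bytes);
        System.out.println(bytes.length + " bytes, value " + reused.get()); // prints 4 bytes, value 42
        fill(reused, toBytes(new IntBox(7)));
        System.out.println("same object, new value " + reused.get()); // prints same object, new value 7
    }
}
```

This mutability is also why the mapper in this article can keep a single Text and a single IntWritable as fields and reuse them for every emitted pair.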

package cn.com.yz.mapreduce;

import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class WordCountMapper extends Mapper<Object, Text, Text, IntWritable> {

    // Every occurrence of a word is emitted with a count of 1.
    private static final IntWritable one = new IntWritable(1);

    // Reused output key holding the current word.
    private Text word = new Text();

    @Override
    public void map(Object key, Text value, Context context)
            throws IOException, InterruptedException {
        // Split the line on whitespace and emit (word, 1) for each token.
        StringTokenizer itr = new StringTokenizer(value.toString());
        while (itr.hasMoreTokens()) {
            word.set(itr.nextToken());
            context.write(word, one);
        }
    }
}
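
One detail of the mapper above is worth seeing in isolation: StringTokenizer with its default delimiters splits on whitespace only, so punctuation and letter case stay part of each token. The sketch below uses a hypothetical class, TokenizeSketch, to show that behavior next to one possible normalization. The normalizedTokens method is an illustrative assumption and not part of the original program.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.StringTokenizer;

class TokenizeSketch {

    // Splits a line the same way the mapper does: on whitespace only.
    static List<String> tokens(String line) {
        List<String> out = new ArrayList<>();
        StringTokenizer itr = new StringTokenizer(line);
        while (itr.hasMoreTokens()) {
            out.add(itr.nextToken());
        }
        return out;
    }

    // Hypothetical alternative: lowercase the line, then treat every run of
    // characters that are neither letters nor digits as a separator.
    static List<String> normalizedTokens(String line) {
        List<String> out = new ArrayList<>();
        for (String token : line.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{N}]+")) {
            if (!token.isEmpty()) {
                out.add(token);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(tokens("Hello, hello world."));           // prints [Hello,, hello, world.]
        System.out.println(normalizedTokens("Hello, hello world.")); // prints [hello, hello, world]
    }
}
```

With the whitespace-only split, "Hello," and "hello" are counted as two different words. Whether that matters depends on the input data and on what the counts are used for.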

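2 Write the Reducer class

The four formal type parameters of the Reducer class specify the input and output types of the reduce function; its input types must match the output types of the Mapper. In this example the input key is a word and the input values are the counts emitted for that word. The reducer adds these counts together and emits the word along with its total.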

package cn.com.yz.mapreduce;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    // Reused output value holding the total for the current word.
    private IntWritable result = new IntWritable();

    @Override
    public void reduce(Text key, Iterable<IntWritable> values, Context context)
            throws IOException, InterruptedException {
        // Add up every count received for this word.
        int sum = 0;
        for (IntWritable val : values) {
            sum += val.get();
        }
        result.set(sum);
        context.write(key, result);
    }
}

3 Write the main method

package cn.com.yz.mapreduce;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class WordCount {

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();

        // Strip the generic Hadoop options; what remains must be <in> and <out>.
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length != 2) {
            System.err.println("Usage: wordcount <in> <out>");
            System.exit(2);
        }

        // Set up the job. Job.getInstance replaces the constructor
        // new Job(conf, "word count"), which is deprecated in Hadoop 2 and later.
        Job job = Job.getInstance(conf, "word count");
        job.setJarByClass(WordCount.class);
        job.setMapperClass(WordCountMapper.class);
        job.setCombinerClass(WordCountReducer.class);
        job.setReducerClass(WordCountReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // Set the input and output paths.
        FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
        FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

        // Submit the job and wait for it to finish.
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
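
The driver registers WordCountReducer as the combiner as well as the reducer. This is safe for word count because addition is associative and commutative, so summing partial groups on the map side and then summing those partial sums gives the same total. The plain-Java sketch below illustrates the idea; CombinerSketch and the two "map task" lists are hypothetical and stand in for what the framework would do.

```java
import java.util.Arrays;
import java.util.List;

class CombinerSketch {

    // Same logic as the reduce step: sum a group of counts.
    static int sum(List<Integer> values) {
        int total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    public static void main(String[] args) {
        // Counts for one word, as emitted by two hypothetical map tasks.
        List<Integer> fromMapTask1 = Arrays.asList(1, 1, 1);
        List<Integer> fromMapTask2 = Arrays.asList(1, 1);

        // Without a combiner: the reducer receives all five values.
        int direct = sum(Arrays.asList(1, 1, 1, 1, 1));

        // With a combiner: each map task pre-sums its own values,
        // and the reducer receives only the two partial sums.
        int combined = sum(Arrays.asList(sum(fromMapTask1), sum(fromMapTask2)));

        System.out.println(direct + " " + combined); // prints 5 5
    }
}
```

An operation such as an average cannot be reused as a combiner in this way, because the average of partial averages is generally not the overall average. Hadoop also treats the combiner as an optimization that it may run zero, one, or several times, so the job must produce the same result in every case.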

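Much of Hadoop's complexity lies in job configuration, which exposes a large number of properties, such as the input split strategy, the sort strategy, the size of the in-memory buffer for map output, and the number of worker threads. A solid understanding of these parameters is needed to get the best performance from a MapReduce program on a cluster.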