Installing and Deploying a Local Flink Development Environment (Single Node)

I. Installation

1. Download location

https://archive.apache.org/dist/flink/

This article uses the following release: flink-1.13.0-bin-scala_2.12.tgz
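As a rough sketch, the download URL for this release can be assembled from the version numbers. The helper name flink_archive_url is hypothetical, and the flink-<version>/ subdirectory layout is an assumption about how the archive site is organized; check it in a browser before relying on it.

```shell
# Hypothetical helper: build the archive URL for a Flink binary release.
# Assumption: each release sits in a flink-<version>/ directory under the archive root.
flink_archive_url() {
  local version="$1" scala="$2"
  printf 'https://archive.apache.org/dist/flink/flink-%s/flink-%s-bin-scala_%s.tgz\n' \
    "$version" "$version" "$scala"
}
```

For example, wget "$(flink_archive_url 1.13.0 2.12)" would fetch the archive used in this article, assuming wget is installed.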

2. Extract the archive

tar -zxvf flink-1.13.0-bin-scala_2.12.tgz -C /usr/local/myroom/

3. Configure the environment variables by adding two export lines at the end of the file

vi /etc/profile

export FLINK_HOME=/usr/local/myroom/flink-1.13.0

export PATH=$PATH:$FLINK_HOME/bin
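If this step is scripted rather than done in vi, running it twice would append duplicate lines. The following is a minimal sketch of an idempotent variant; the function names append_once and add_flink_exports are hypothetical, and the profile path is passed in as an argument so the sketch can be tried on a scratch file first.

```shell
# Append a line to a file only if that exact line is not already present.
append_once() {
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Hypothetical helper: add the two Flink exports to the given profile file.
add_flink_exports() {
  local profile="$1" flink_home="$2"
  append_once "export FLINK_HOME=$flink_home" "$profile"
  # Single quotes keep $PATH and $FLINK_HOME literal so they expand at login time.
  append_once 'export PATH=$PATH:$FLINK_HOME/bin' "$profile"
}
```

A call such as add_flink_exports /etc/profile /usr/local/myroom/flink-1.13.0 would then produce the same two lines shown above, however many times it is run.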

4. Reload the profile so that the settings take effect immediately

source /etc/profile

5. Verify that the installation succeeded

flink --version

6. Start Flink

start-cluster.sh

Note: the command to stop the cluster is stop-cluster.sh

7. Check that the relevant processes have started

[root@single myroom]# jps
16931 TaskManagerRunner
16662 StandaloneSessionClusterEntrypoint
16952 Jps
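The check above can also be automated. The sketch below takes jps output as text and succeeds only when both process names seen in the listing are present; the function name flink_processes_running is hypothetical.

```shell
# Succeeds when the given jps output lists both Flink standalone processes.
flink_processes_running() {
  local jps_output="$1"
  printf '%s\n' "$jps_output" | grep -qw 'StandaloneSessionClusterEntrypoint' &&
    printf '%s\n' "$jps_output" | grep -qw 'TaskManagerRunner'
}
```

It could be used as: flink_processes_running "$(jps)" && echo "cluster is up"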

8. Open the web UI

http://192.168.23.190:8081/

II. Deployment

1. Start Flink, as in step 6 above

2. Prepare the program, as follows

package com.leiyuke.flink.datastream.demo.deploy;

import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

public class WordCount {

    public static void main(String[] args) throws Exception {
        final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // Read newline-delimited text from the socket opened by nc
        DataStream<String> dataStream = env.socketTextStream("192.168.23.190", 9000, "\n");

        DataStream<Tuple2<String, Integer>> countData = dataStream
                .flatMap(new FlatMapFunction<String, Tuple2<String, Integer>>() {
                    @Override
                    public void flatMap(String value, Collector<Tuple2<String, Integer>> out) throws Exception {
                        // Lowercase the line, split on non-word characters, emit (word, 1)
                        String[] words = value.toLowerCase().split("\\W+");
                        for (String word : words) {
                            if (word.length() > 0) {
                                out.collect(new Tuple2<>(word, 1));
                            }
                        }
                    }
                })
                .keyBy(value -> value.f0) // group by word
                .sum(1);                  // running sum of the count field

        countData.print();
        env.execute("CountSocketWord");
    }
}

Note: in pom.xml, set the scope of the Flink-related dependencies to provided, because those jars already exist in the lib directory on the server.

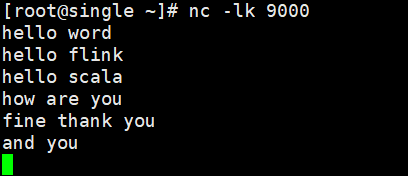

3. Start nc

nc -lk 9000

4. Package the program, upload it, and run the jar

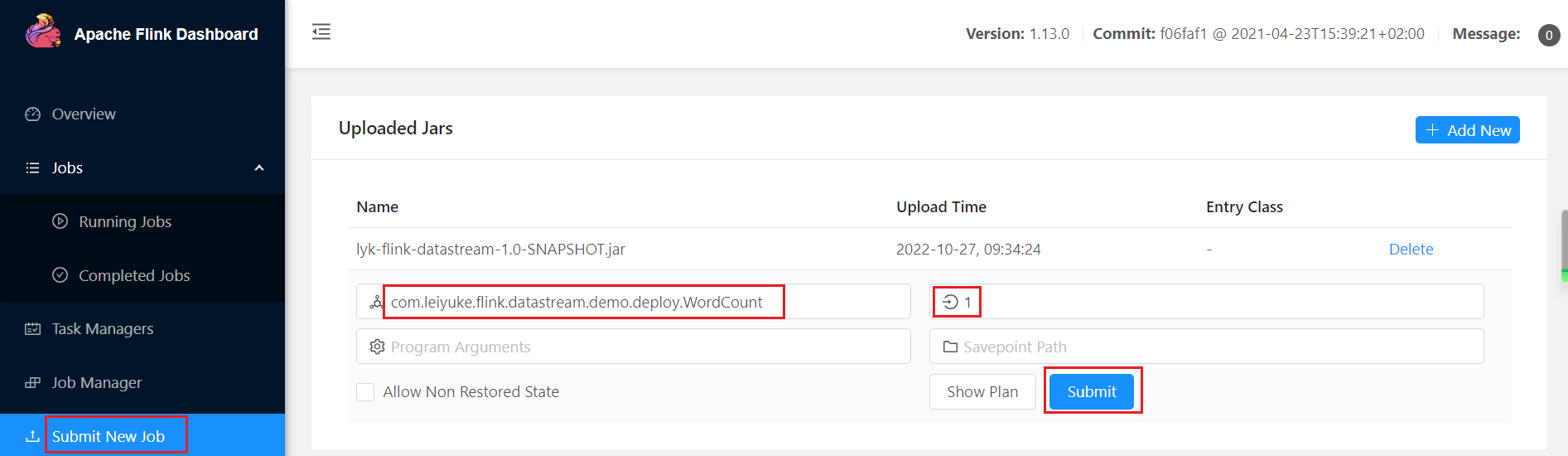

1) Method 1: use the web UI

2) Method 2: run a command

flink run -c com.leiyuke.flink.datastream.demo.deploy.WordCount lyk-flink-datastream-1.0-SNAPSHOT.jar

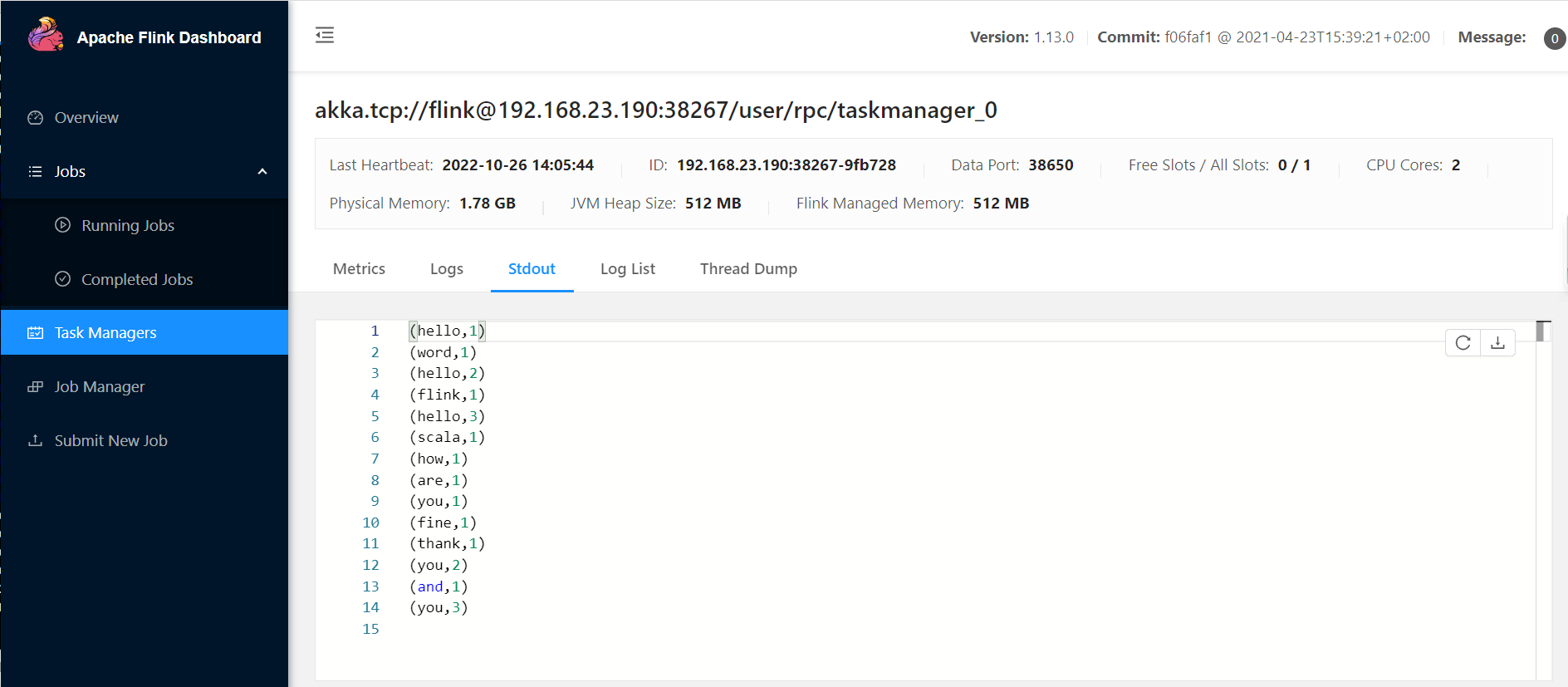

5. Test as follows:
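To get a feel for what the job should report for the lines typed into the nc session, the per-line logic of the WordCount program can be approximated offline. This is only a shell sketch of the same steps (lowercase, split on non-word characters, keep a running count per word), not Flink itself; the function name running_word_count is hypothetical, and the "(word,count)" shape assumes the usual Tuple2 text form. When the job runs on the cluster, the print() output typically ends up in the TaskManager's stdout rather than in the terminal that submitted the job.

```shell
# Approximate the job's per-line logic: lowercase, split on non-word characters,
# then print a running count per word in a Tuple2-like "(word,count)" form.
running_word_count() {
  LC_ALL=C tr '[:upper:]' '[:lower:]' |
    LC_ALL=C tr -cs '[:alnum:]_' '\n' |
    awk 'length($0) > 0 { count[$0]++; printf "(%s,%d)\n", $0, count[$0] }'
}
```

For example, printf 'Hello world\nhello Flink\n' | running_word_count prints (hello,1), (world,1), (hello,2) and (flink,1), one per line.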

6. Stop the job

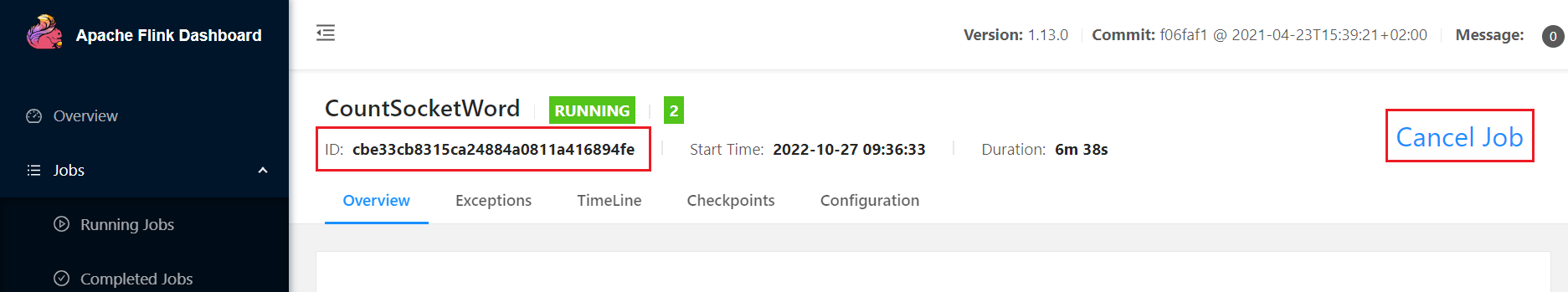

1) Method 1: Jobs -> Running Jobs -> click the job's name in the Job Name column -> click Cancel Job

2) Method 2: run a command

flink cancel cbe33cb8315ca24884a0811a416894fe

(The hexadecimal string is the JobId shown in the screenshot above.)
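The JobId can also be looked up from the command line with flink list instead of being copied from the web UI. The sketch below extracts it by job name; the helper name job_id_by_name is hypothetical, and it assumes each job line of the flink list output has the shape "<date> <time> : <jobId> : <jobName> (<STATUS>)", which may differ between Flink versions.

```shell
# Hypothetical helper: read `flink list` output on stdin and print the JobId
# of the first job whose name matches the argument.
# Assumed line shape: "<date> <time> : <jobId> : <jobName> (<STATUS>)"
job_id_by_name() {
  local name="$1"
  awk -F' : ' -v name="$name" '
    NF >= 3 && index($3, name " (") == 1 { print $2; exit }
  '
}
```

Under that assumption, the job started above could be cancelled with: flink cancel "$(flink list | job_id_by_name CountSocketWord)"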