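Gulimall Distributed Advanced (Part 2): Elasticsearch Full-Text Search

I. Elasticsearch: Full-Text Search

1. Introduction

https://www.elastic.co/cn/what-is/elasticsearch Full-text search is one of the most common requirements, and the open-source Elasticsearch is currently the first choice among full-text search engines. It can store, search, and analyze massive amounts of data quickly. Wikipedia, Stack Overflow, and GitHub all use it.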

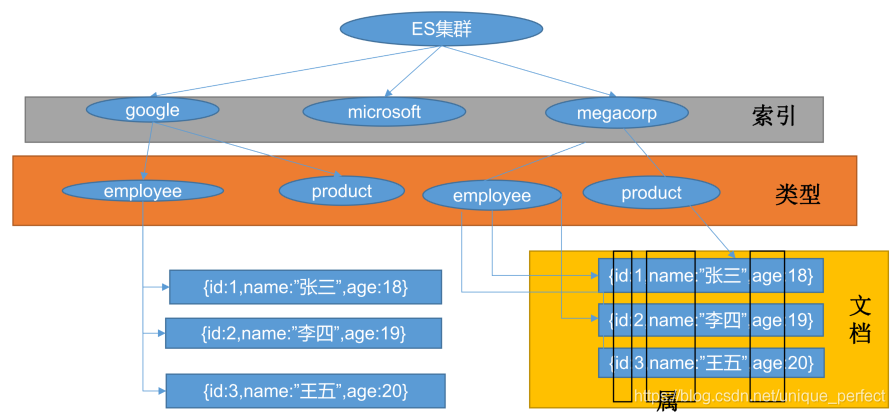

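2. Basic Concepts

1. Index

As a verb, it is comparable to insert in MySQL;

as a noun, it is comparable to a Database in MySQL.

2. Type

Within an Index, one or more types can be defined.

A Type is similar to a Table in MySQL; data of the same type is stored together.

3. Document

A Document is a single piece of data stored under a given Index and a given Type. Documents are in JSON format.

A Document is comparable to a row of content in a MySQL Table.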

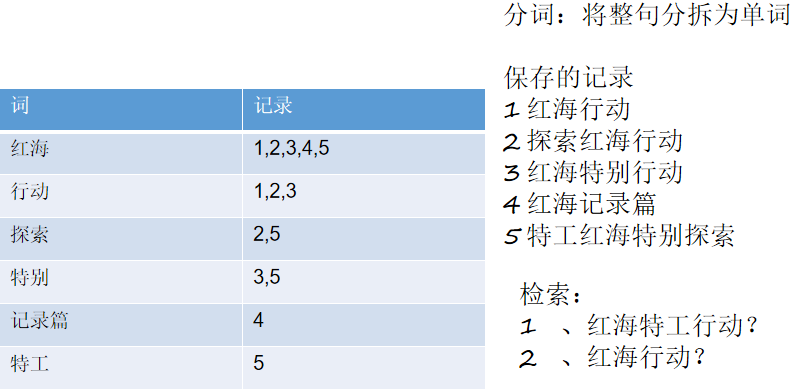

4. Inverted Index Mechanism

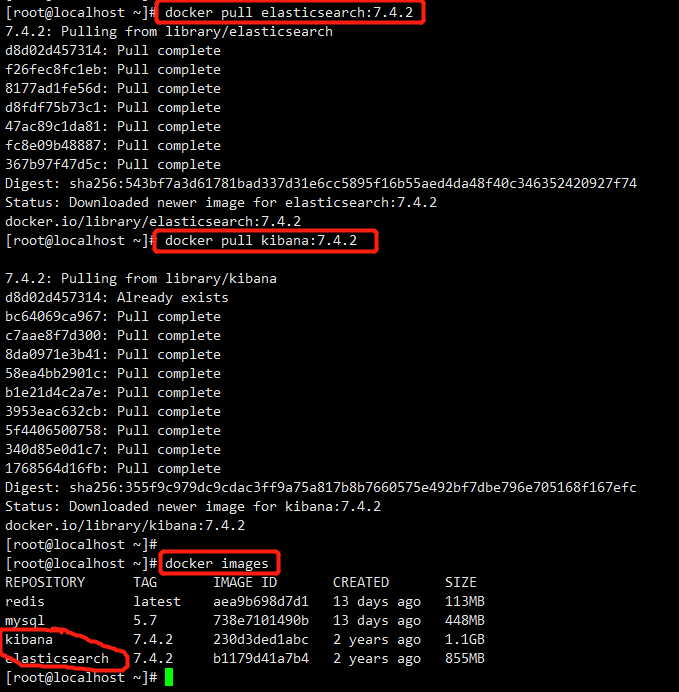

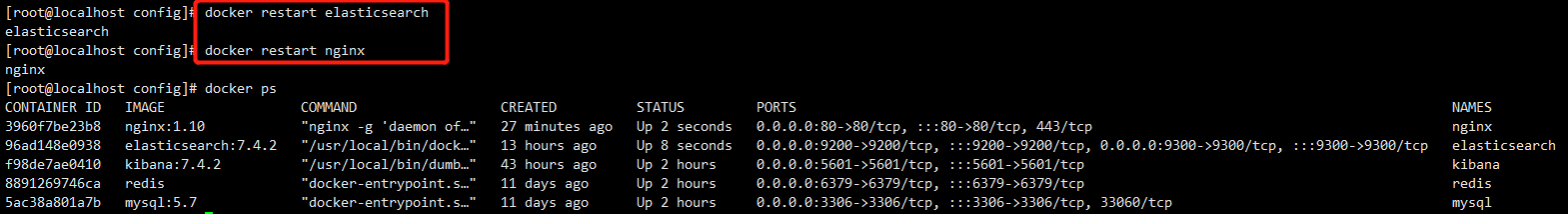
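The inverted index idea can be illustrated with a minimal sketch. It assumes naive lowercase, whitespace-based tokenization (Elasticsearch's real analyzers are far more sophisticated) and a relevance score that simply counts how many query terms a document contains. The function names and the sample documents are hypothetical.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return (doc_id, hits) pairs, documents matching the most terms first."""
    hits = defaultdict(int)
    for term in set(query.lower().split()):
        for doc_id in index.get(term, ()):
            hits[doc_id] += 1
    # Sort by descending hit count, then by id for a stable order.
    return sorted(hits.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical sample documents, keyed by document id.
docs = {
    1: "red sea action film",
    2: "explore the red sea",
    3: "red sea special operation",
    4: "special action film",
}
index = build_inverted_index(docs)
results = search(index, "red sea special action")
print(results)
```

The lookup never scans the document texts: each query term goes straight to its posting set, which is why this structure suits full-text search.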

3. Installing ES with Docker

1. Pull the image files

docker pull elasticsearch:7.4.2    # stores and retrieves data

docker pull kibana:7.4.2    # visualizes the retrieved data

Note: the two versions must match.

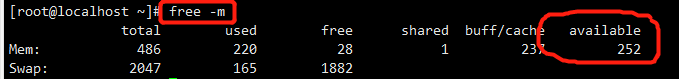

First check the virtual machine's available memory.

2. Install Elasticsearch

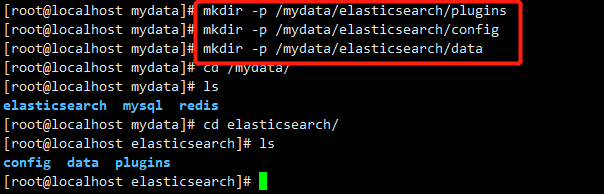

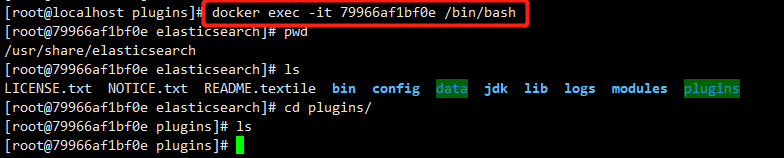

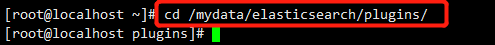

(1) Create the mount directories

mkdir -p /mydata/elasticsearch/plugins

mkdir -p /mydata/elasticsearch/config

mkdir -p /mydata/elasticsearch/data

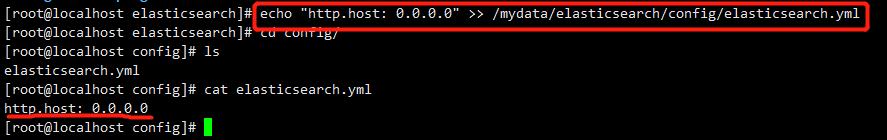

(2) Allow ES to be accessed from any remote machine

echo "http.host: 0.0.0.0" >> /mydata/elasticsearch/config/elasticsearch.yml

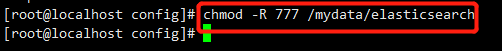

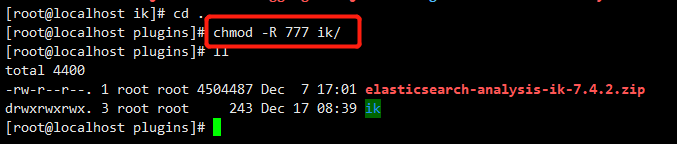

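(3) Recursively change the permissions so that ES can access the directories

chmod -R 777 /mydata/elasticsearch

Note: this permission must be granted; otherwise startup later fails with an access-denied error.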

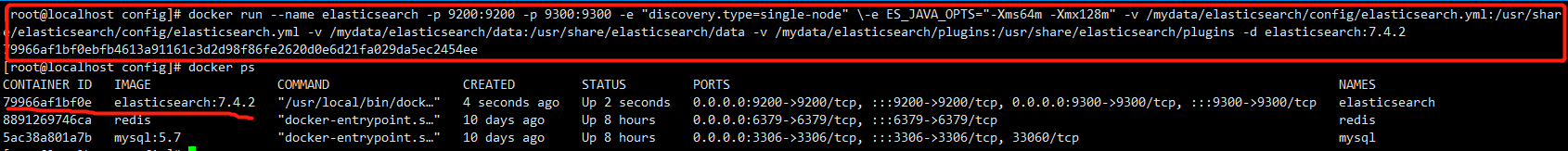

(4) Create the instance and start Elasticsearch

Note:

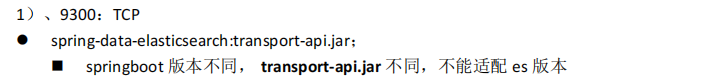

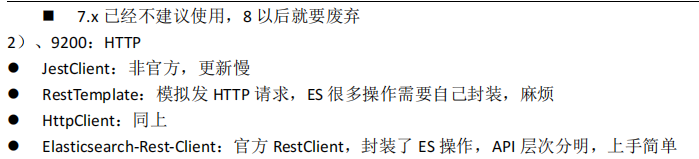

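# 9200 is the HTTP port for client requests; 9300 is the port for communication between cluster nodes

# one -e runs ES in single-node mode

# the other -e sets the memory ES may use; in production this can be set to 32G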

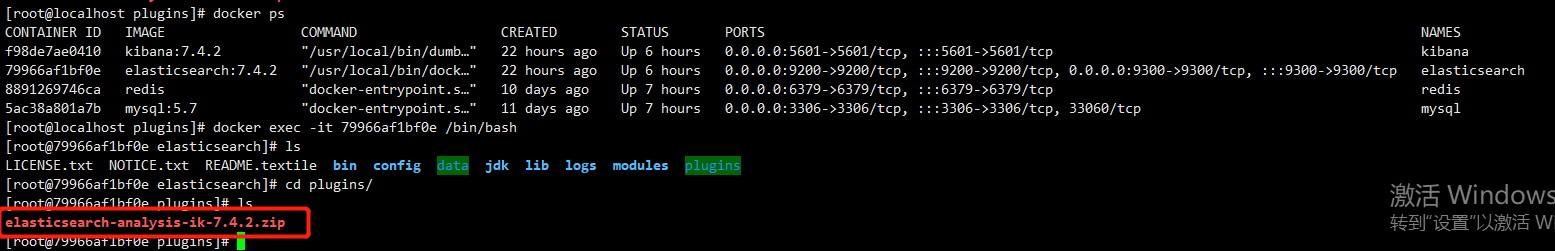

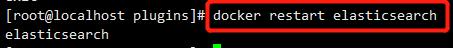

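From now on, plugins can be installed outside the container (in the mounted directory) and take effect after a restart.

Important:

-e ES_JAVA_OPTS="-Xms64m -Xmx128m" \

In a test environment, set the initial and maximum memory of ES this way; otherwise the default is too large and ES fails to start.

(5) Start ES automatically with Docker

docker update elasticsearch --restart=always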

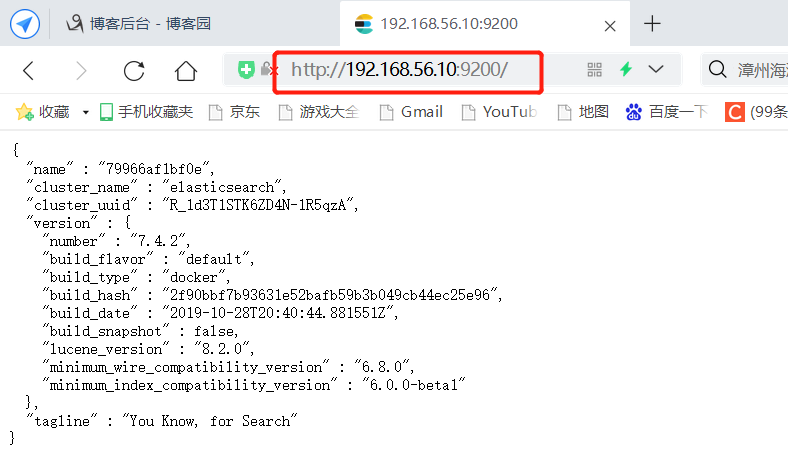

(6) Test access

View the Elasticsearch version information: http://192.168.56.10:9200/

Show the Elasticsearch node information: http://192.168.56.10:9200/_cat/nodes

127.0.0.1 69 99 9 1.07 0.78 0.56 dilm * 79966af1bf0e

79966af1bf0e is the name of the node above; * marks it as the master node.

3. Install Kibana

(1) Create and start the instance

docker run --name kibana -e ELASTICSEARCH_HOSTS=http://192.168.56.10:9200 -p 5601:5601 -d kibana:7.4.2

Note: be sure to change http://192.168.56.10:9200 to the address of your own virtual machine.

(2) Start Kibana automatically with Docker

docker update kibana --restart=always

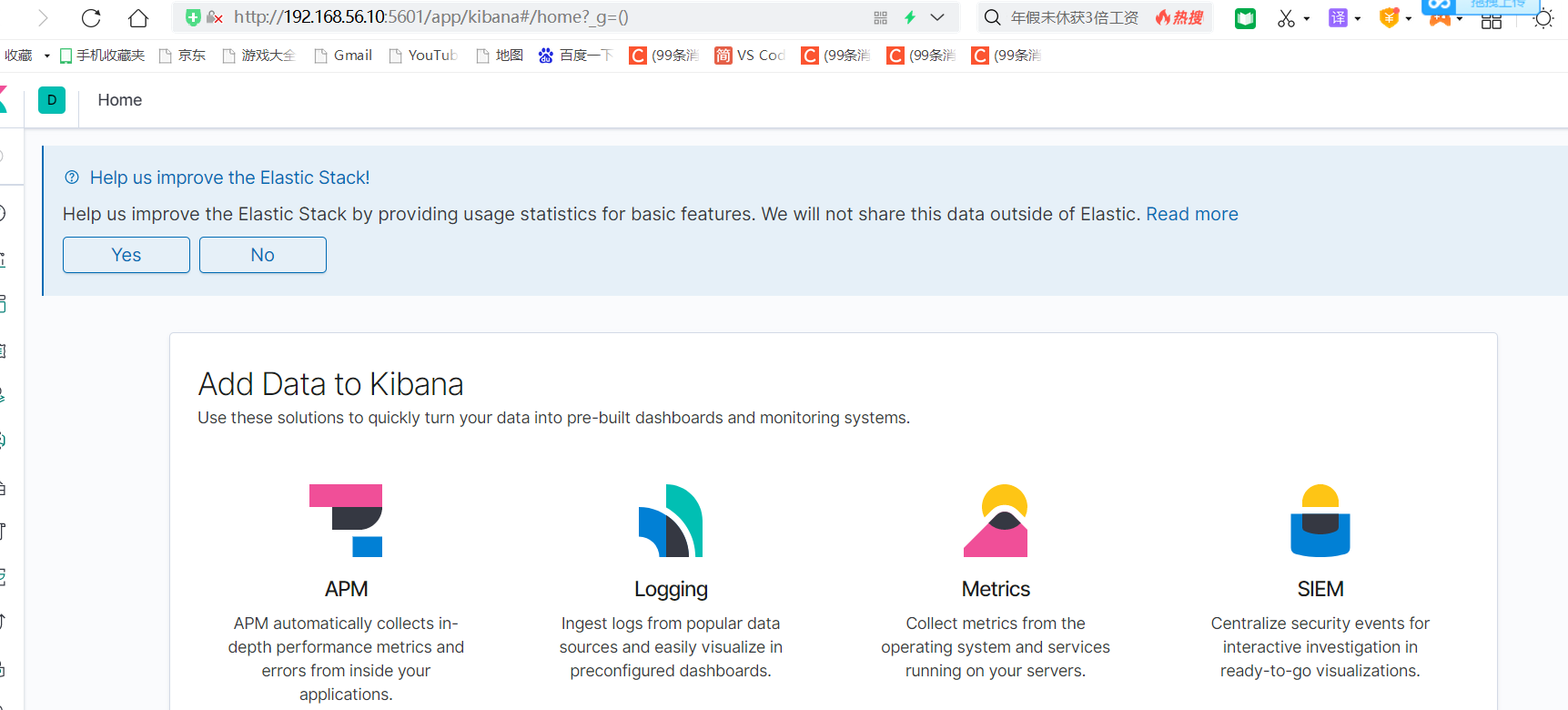

(3) Test

Visit: http://192.168.56.10:5601/app/kibana

4. Basic Retrieval

1. _cat

(1) GET /_cat/nodes: list all nodes

Request: http://192.168.56.10:9200/_cat/nodes

Response: 127.0.0.1 53 99 18 1.64 1.34 0.89 dilm * 79966af1bf0e

Note: 79966af1bf0e is the name of the node; * marks it as the master node.

(2) GET /_cat/health: check the health of ES

Request: http://192.168.56.10:9200/_cat/health

Response: 1639660246 13:10:46 elasticsearch green 1 1 2 2 0 0 0 0 - 100.0%

Note: green means the cluster is healthy.

(3) GET /_cat/master: show the master node

Request: http://192.168.56.10:9200/_cat/master

Response: yV_3GAgSRlCZRbsaZGmwvg 127.0.0.1 127.0.0.1 79966af1bf0e

Note: the response shows the master node's unique ID, followed by the virtual machine's address.

(4) GET /_cat/indices: list all indices, equivalent to MySQL's show databases;

Request: http://192.168.56.10:9200/_cat/indices

Response: green open .kibana_task_manager_1 TgtHkBPEQa27TRzx7csj_A 1 0 2 0 38.3kb 38.3kb

green open .kibana_1 WX3cdamiRh-ylxDECyU5qg 1 0 3 0 14.8kb 14.8kb

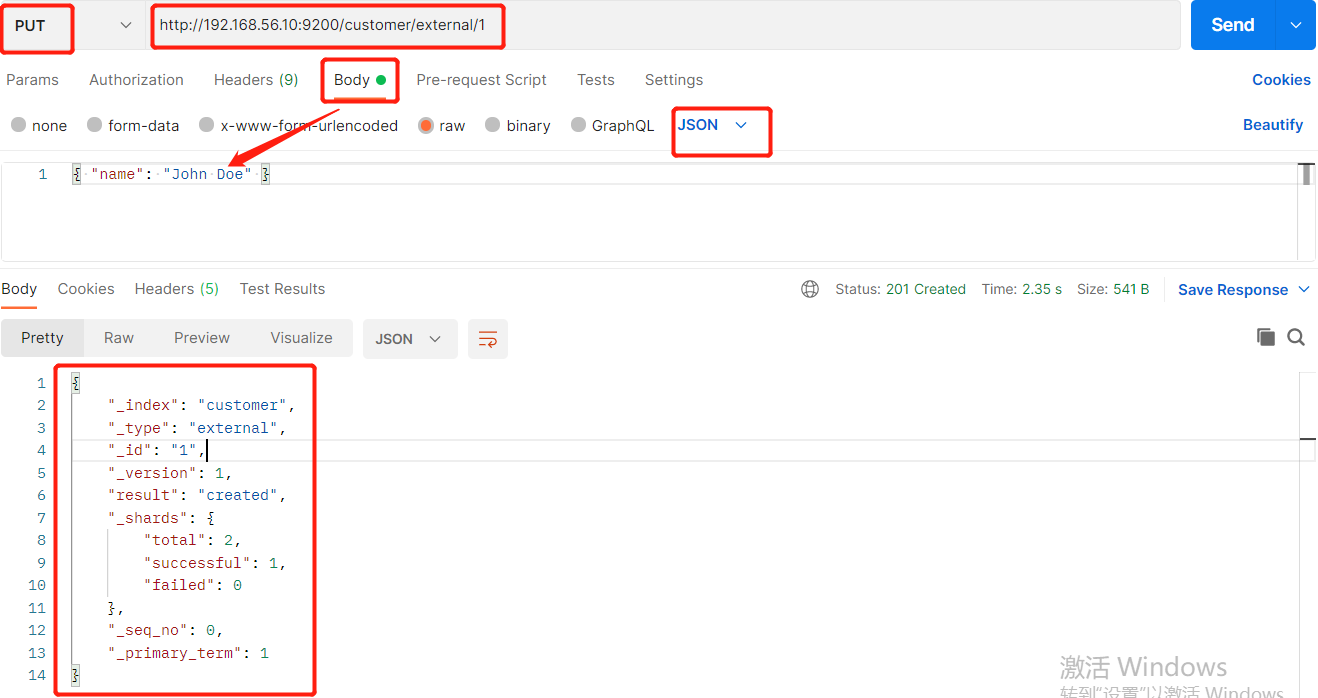
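The plain-text _cat output is easy to post-process. The following is a minimal sketch, assuming the default column order of _cat/indices in this version (health, status, index, uuid, pri, rep, docs.count, docs.deleted, store.size, pri.store.size); the helper name is hypothetical. Appending ?v to a _cat request prints the real header row, which is the safer way to confirm the columns.

```python
CAT_INDICES_COLUMNS = [
    "health", "status", "index", "uuid", "pri", "rep",
    "docs.count", "docs.deleted", "store.size", "pri.store.size",
]


def parse_cat_indices(text):
    """Turn whitespace-separated _cat/indices rows into dictionaries."""
    rows = []
    for line in text.strip().splitlines():
        fields = line.split()
        if len(fields) != len(CAT_INDICES_COLUMNS):
            continue  # skip blank or unexpected lines
        rows.append(dict(zip(CAT_INDICES_COLUMNS, fields)))
    return rows


# The two rows returned by the request above.
sample = """
green open .kibana_task_manager_1 TgtHkBPEQa27TRzx7csj_A 1 0 2 0 38.3kb 38.3kb
green open .kibana_1 WX3cdamiRh-ylxDECyU5qg 1 0 3 0 14.8kb 14.8kb
"""
indices = parse_cat_indices(sample)
for row in indices:
    print(row["index"], row["health"], row["docs.count"])
```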

2. Index a Document (Save)

After the data is created, a 201 Created response means the record was inserted successfully. In the returned data, fields that begin with an underscore are called metadata and describe the basic state of the stored document.

{
  "_index": "customer", // the index (database) the data belongs to
  "_type": "external", // the type the data belongs to
  "_id": "1", // the id of the saved document
  "_version": 1, // the version of the saved document
  "result": "created", // a document was created; if the same document is PUT again, this becomes "updated" and the version number changes
  "_shards": {
    "total": 2,
    "successful": 1,
    "failed": 0
  },
  "_seq_no": 0,
  "_primary_term": 1
}

Using POST instead:

When data is added without an ID, an id is generated automatically and the result is "created":

{
  "_index": "customer",
  "_type": "external",
  "_id": "5MIjvncBKdY1wAQm-wNZ",
  "_version": 1,
  "result": "created",
  "_shards": {
    "total": 2,
    "successful": 1,
    "failed": 0
  },
  "_seq_no": 11,
  "_primary_term": 6
}

POSTing again without an ID still creates a new document:

{
  "_index": "customer",
  "_type": "external",
  "_id": "5cIkvncBKdY1wAQmcQNk",
  "_version": 1,
  "result": "created",
  "_shards": {
    "total": 2,
    "successful": 1,
    "failed": 0
  },
  "_seq_no": 12,
  "_primary_term": 6
}

When data is added with an ID, that id is used and the result is "created":

{
  "_index": "customer",
  "_type": "external",
  "_id": "2",
  "_version": 1,
  "result": "created",
  "_shards": {
    "total": 2,
    "successful": 1,
    "failed": 0
  },
  "_seq_no": 13,
  "_primary_term": 6
}

POSTing again with the same ID gives the result "updated":

{
  "_index": "customer",
  "_type": "external",
  "_id": "2",
  "_version": 2,
  "result": "updated",
  "_shards": {
    "total": 2,
    "successful": 1,
    "failed": 0
  },
  "_seq_no": 14,
  "_primary_term": 6
}
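The requests behind these responses can be sketched with Python's standard library. This is only a sketch under stated assumptions: the base URL is the virtual machine address used in this tutorial, the body {"name": "John Doe"} is borrowed from the query response in the next section, and build_index_request is a hypothetical helper. The requests are constructed but not sent, since sending needs a reachable ES node.

```python
import json
import urllib.request

BASE_URL = "http://192.168.56.10:9200"  # assumption: the VM address used in this tutorial


def build_index_request(index, doc_type, body, doc_id=None):
    """Build (but do not send) a request that indexes one document.

    With an id the request is a PUT to /index/type/id; without one it is
    a POST to /index/type, and ES generates the id.
    """
    path = f"/{index}/{doc_type}"
    method = "POST"
    if doc_id is not None:
        path += f"/{doc_id}"
        method = "PUT"
    return urllib.request.Request(
        BASE_URL + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method=method,
    )


put_req = build_index_request("customer", "external", {"name": "John Doe"}, doc_id=1)
post_req = build_index_request("customer", "external", {"name": "John Doe"})
print(put_req.get_method(), put_req.full_url)
print(post_req.get_method(), post_req.full_url)

# To actually send a request (needs a running ES instance):
# with urllib.request.urlopen(put_req) as resp:
#     print(resp.status, json.loads(resp.read()))
```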

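3. Query a Document & Optimistic-Locking Fields

(1) GET /customer/external/1: query a document

Request: http://192.168.56.10:9200/customer/external/1

Response:

{
  "_index": "customer",
  "_type": "external",
  "_id": "1",
  "_version": 10,
  "_seq_no": 18, // concurrency-control field, incremented on every update; used for optimistic locking
  "_primary_term": 6, // likewise; changes when the primary shard is reassigned, for example after a restart
  "found": true,
  "_source": {
    "name": "John Doe"
  }
}

With the parameters "if_seq_no=1&if_primary_term=1", the modification is applied only when the sequence number and primary term match the document's current values; otherwise nothing is modified.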

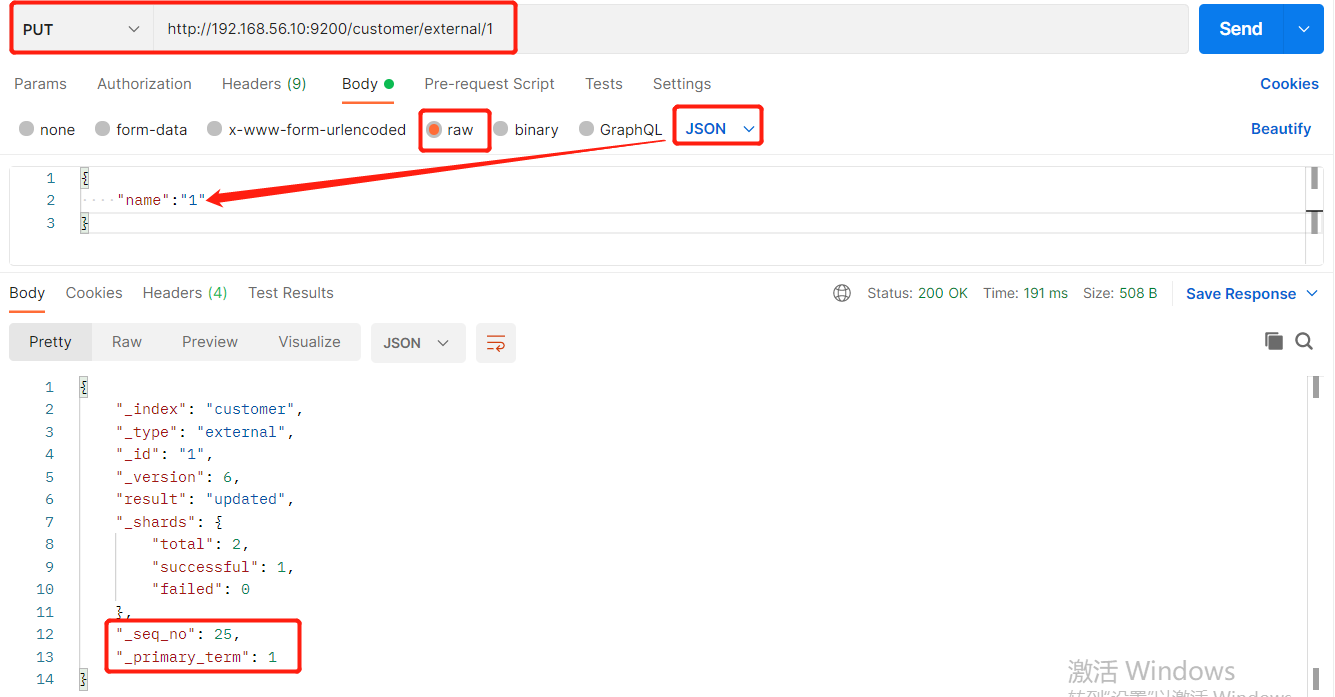

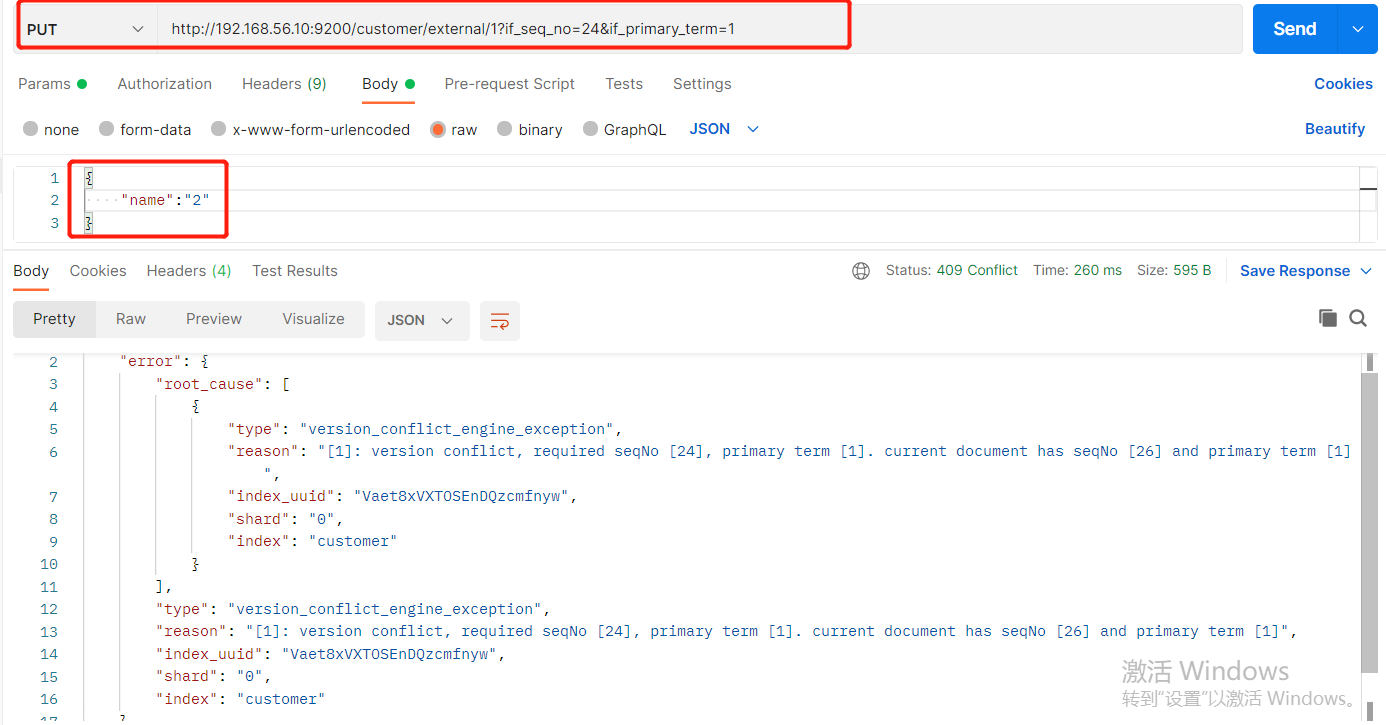

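(2) Example: update the document with id=1 to name=1, then update it again to name=2. Initially _seq_no=24 and _primary_term=1.

(a) Update name to 1

PUT http://192.168.56.10:9200/customer/external/1

(b) Update name to 2, passing seq_no=24 with the request

PUT http://192.168.56.10:9200/customer/external/1?if_seq_no=24&if_primary_term=1

The update fails with an error, because the first update has already advanced _seq_no.

Querying the document again shows _seq_no=25, the value left by the first update.

Retry the update with the current value, and it succeeds:

PUT http://192.168.56.10:9200/customer/external/1?if_seq_no=25&if_primary_term=1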

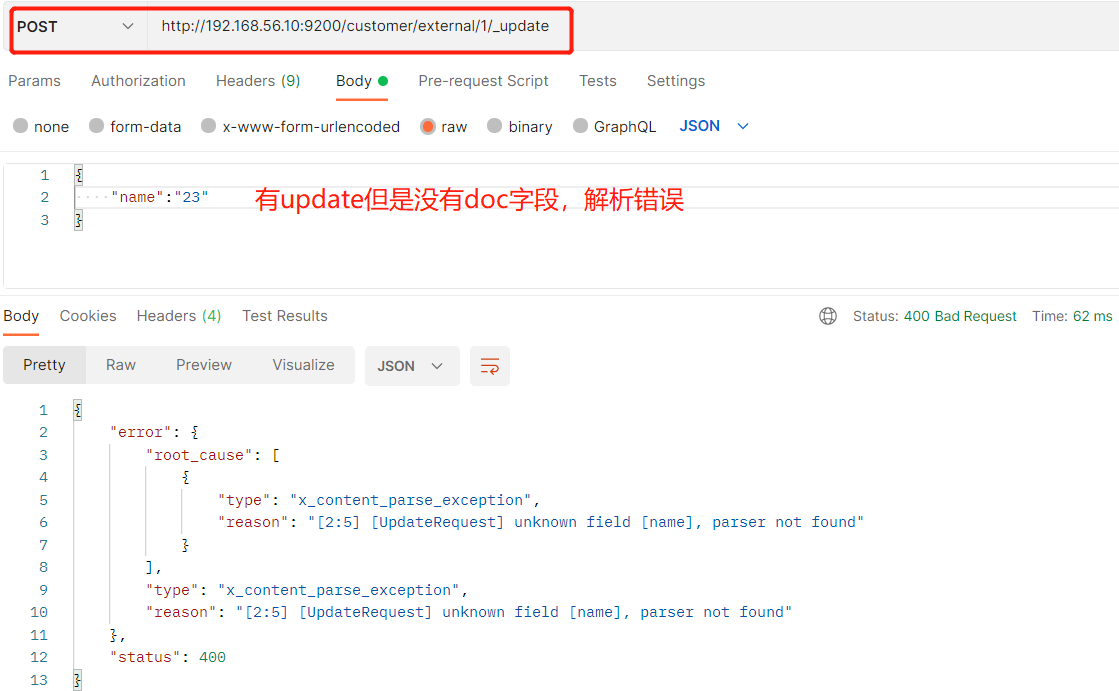

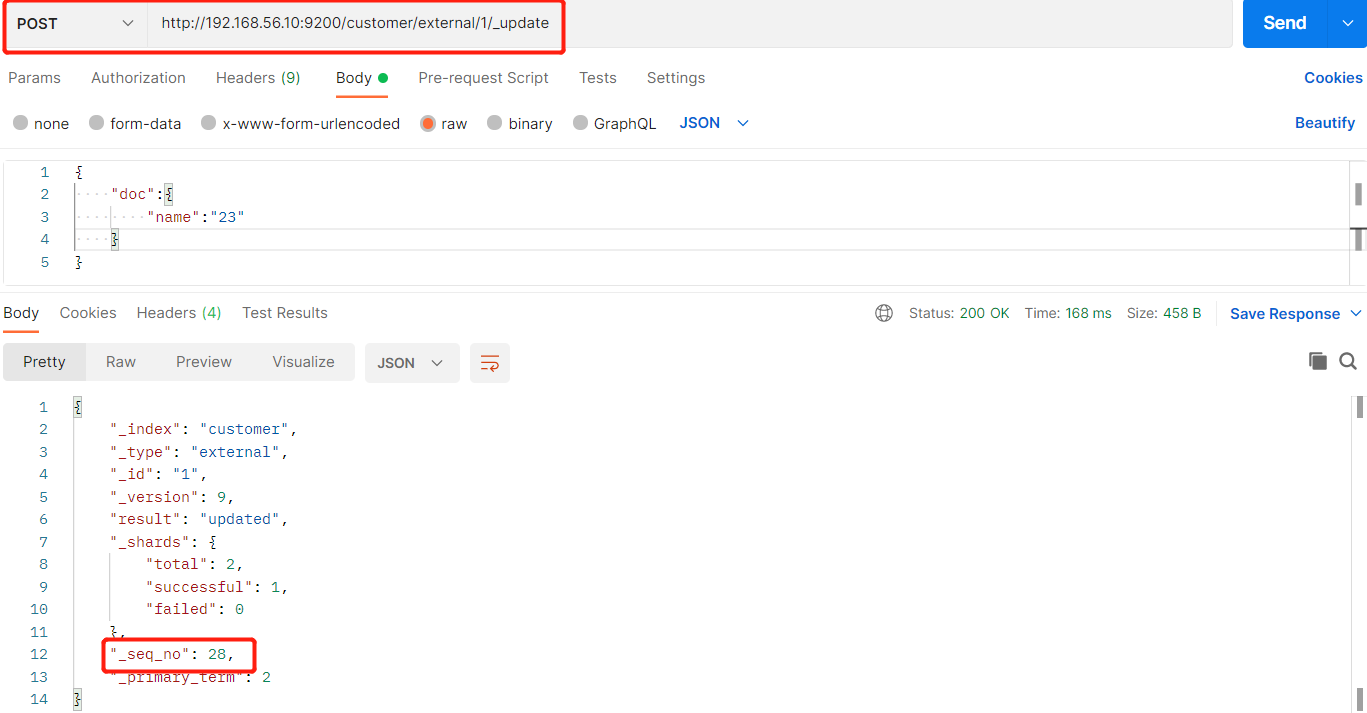
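The compare-and-set behaviour of if_seq_no and if_primary_term can be mimicked in memory. The sketch below is not an ES client: FakeDocument and VersionConflict are hypothetical names, and it assumes this document is the only one being written, so every successful write raises _seq_no by exactly one. It starts from the values used in the example (_seq_no=24, _primary_term=1).

```python
class VersionConflict(Exception):
    """Raised when if_seq_no / if_primary_term do not match the stored document."""


class FakeDocument:
    """In-memory stand-in for one ES document (not a real client)."""

    def __init__(self, source, seq_no, primary_term):
        self.source = source
        self.seq_no = seq_no
        self.primary_term = primary_term

    def put(self, source, if_seq_no=None, if_primary_term=None):
        """Replace the source, optionally guarded by the expected seq_no/term."""
        if if_seq_no is not None and (
            if_seq_no != self.seq_no or if_primary_term != self.primary_term
        ):
            raise VersionConflict(
                f"expected seq_no={if_seq_no}, current seq_no={self.seq_no}"
            )
        self.source = source
        self.seq_no += 1  # simplification: only this document writes to the shard
        return self.seq_no


doc = FakeDocument({"name": "John Doe"}, seq_no=24, primary_term=1)
doc.put({"name": "1"})  # unconditional update: seq_no 24 -> 25
try:
    doc.put({"name": "2"}, if_seq_no=24, if_primary_term=1)  # stale value
    conflict = False
except VersionConflict:
    conflict = True
# Retry with the value read back from the document.
doc.put({"name": "2"}, if_seq_no=doc.seq_no, if_primary_term=1)
print(conflict, doc.source, doc.seq_no)
```

The rejected write leaves the document untouched, so the caller can re-read the current _seq_no and decide whether to retry.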

4. Update a Document

(1) Update a document with _update

(a) POST with _update

POST customer/external/1/_update
{
  "doc": {
    "name": "111"
  }
}

or

(b) POST without _update

POST customer/external/1
{
  "name": "222"
}

or

(c) PUT without _update

PUT customer/external/1
{
  "name": "222"
}

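(2) Differences

(a) POST with _update compares the new data with the source document; if they are identical, nothing happens and the document version does not increase.

(b) PUT always re-saves the data and increments the version.

(c) In short, only POST with _update compares against the original data and does nothing when it is unchanged.

(3) Choosing by scenario

(a) For highly concurrent updates, omit _update.

(b) For highly concurrent queries with only occasional updates, use _update; the data is compared before updating, and the allocation rules are recomputed.

(4) Example

If the same POST with _update is executed again, no operation is performed and the sequence number does not change. Response: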

{

"_index": "customer",

"_type": "external",

"_id": "1",

"_version": 9,

"result": "noop",

"_shards": {

"total": 0,

"successful": 0,

"failed": 0

},

"_seq_no": 28,

"_primary_term": 2

}

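The POST _update approach compares against the existing data; if it is identical, no operation is performed (neither _version nor _seq_no changes).

POST update without _update

Repeating the same update still succeeds every time; the data is not compared with the existing document, and _seq_no changes.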

{

"_index": "customer",

"_type": "external",

"_id": "1",

"_version": 13,

"result": "updated",

"_shards": {

"total": 2,

"successful": 1,

"failed": 0

},

"_seq_no": 32,

"_primary_term": 2

}
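The difference between the two responses above can be summarized in a small simulation. This is a sketch rather than ES code: apply_write is a hypothetical function, the document is a plain dictionary, and it assumes the stored document already exists and that _update performs a shallow merge of the top-level fields.

```python
def apply_write(stored, incoming, use_update):
    """Return (new_stored, result) for a simulated write to an existing document.

    use_update=True  mimics POST .../_update with {"doc": incoming}: fields are
                     merged, and an unchanged document yields "noop".
    use_update=False mimics PUT/POST without _update: the source is replaced
                     and the result is always "updated".
    """
    if use_update:
        merged = {**stored["source"], **incoming}
        if merged == stored["source"]:
            return stored, "noop"  # nothing written, counters untouched
        new_source = merged
    else:
        new_source = dict(incoming)
    new_stored = {
        "source": new_source,
        "version": stored["version"] + 1,
        "seq_no": stored["seq_no"] + 1,
    }
    return new_stored, "updated"


doc = {"source": {"name": "111"}, "version": 9, "seq_no": 28}
doc, first = apply_write(doc, {"name": "111"}, use_update=True)
after_noop = (doc["version"], doc["seq_no"])
doc, second = apply_write(doc, {"name": "111"}, use_update=False)
print(first, after_noop)
print(second, doc["version"], doc["seq_no"])
```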

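5. Delete a Document & Index

(1) Syntax

DELETE customer/external/1

DELETE customer

Note: Elasticsearch provides no operation for deleting a type; only indices and documents can be deleted.

(2) Example

Delete the document with id=1, then query it again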

DELETE http://192.168.56.10:9200/customer/external/1

{

"_index": "customer",

"_type": "external",

"_id": "1",

"_version": 14,

"result": "deleted",

"_shards": {

"total": 2,

"successful": 1,

"failed": 0

},

"_seq_no": 33,

"_primary_term": 2

}

Run DELETE http://192.168.56.10:9200/customer/external/1 again

{

"_index": "customer",

"_type": "external",

"_id": "1",

"_version": 1,

"result": "not_found",

"_shards": {

"total": 2,

"successful": 1,

"failed": 0

},

"_seq_no": 34,

"_primary_term": 2

}

GET http://192.168.56.10:9200/customer/external/1

{

"_index": "customer",

"_type": "external",

"_id": "1",

"found": false

}

(3) Delete the entire customer index

Before deletion, all indices: http://192.168.56.10:9200/_cat/indices

green open .kibana_task_manager_1 TgtHkBPEQa27TRzx7csj_A 1 0 2 0 30.4kb 30.4kb

green open .kibana_1 WX3cdamiRh-ylxDECyU5qg 1 0 5 0 18.3kb 18.3kb

yellow open customer Vaet8xVXTOSEnDQzcmfnyw 1 1 6 4 13.9kb 13.9kb

Delete the "customer" index: DELETE http://192.168.56.10:9200/customer

Response

{

"acknowledged": true

}

After deletion, all indices: http://192.168.56.10:9200/_cat/indices

green open .kibana_task_manager_1 TgtHkBPEQa27TRzx7csj_A 1 0 2 0 30.4kb 30.4kb

green open .kibana_1 WX3cdamiRh-ylxDECyU5qg 1 0 5 0 18.3kb 18.3kb

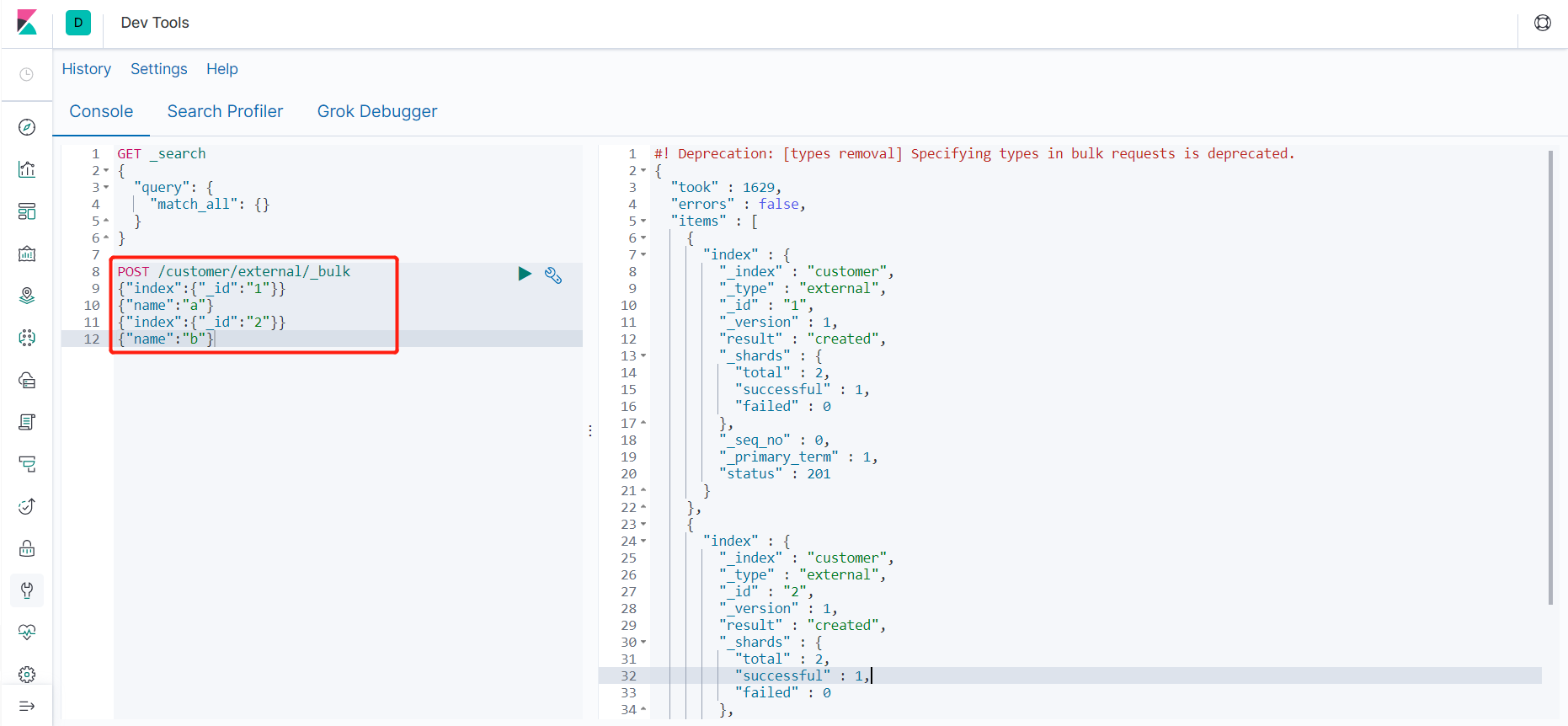

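6. bulk Batch API

(1) Batch-import data

POST http://192.168.56.10:9200/customer/external/_bulk

Every two lines form one unit:

{"index":{"_id":"1"}}
{"name":"a"}
{"index":{"_id":"2"}}
{"name":"b"}

Note: neither the json nor the text body format works for this request; run it in Dev Tools in Kibana instead.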

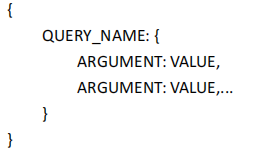

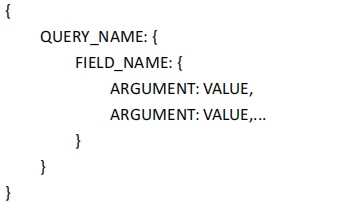

Syntax format:

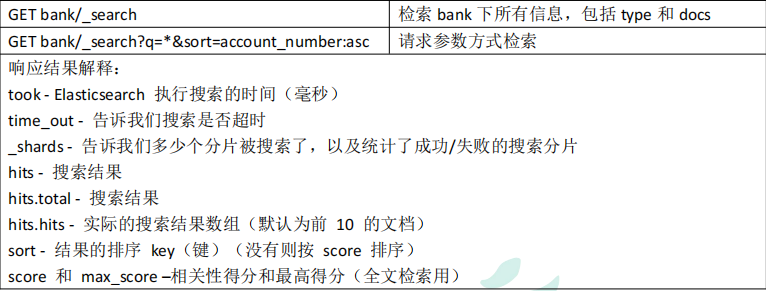
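The two-lines-per-document layout can be generated programmatically. The sketch below covers only the index action used in this section; build_bulk_body is a hypothetical helper. The bulk endpoint expects newline-delimited JSON (Content-Type application/x-ndjson or application/json), and the body must end with a newline.

```python
import json


def build_bulk_body(docs):
    """Serialize (doc_id, source) pairs as newline-delimited JSON.

    Each document becomes two lines: an action line ({"index": ...})
    followed by the source line. The body ends with a newline.
    """
    lines = []
    for doc_id, source in docs:
        action = {"index": {"_id": str(doc_id)}}
        lines.append(json.dumps(action, separators=(",", ":")))
        lines.append(json.dumps(source, separators=(",", ":")))
    return "\n".join(lines) + "\n"


body = build_bulk_body([(1, {"name": "a"}), (2, {"name": "b"})])
print(body, end="")
```

Because each line must be a complete JSON value, the source documents cannot be pretty-printed across several lines inside a bulk body.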

7. Sample Test Data

A fictitious sample of JSON documents containing customer bank account information has been prepared. Each document has the following schema.

{
  "account_number": 1,
  "balance": 39225,
  "firstname": "Amber",
  "lastname": "Duke",
  "age": 32,
  "gender": "M",
  "address": "880 Holmes Lane",
  "employer": "Pyrami",
  "email": "amberduke@pyrami.com",
  "city": "Brogan",
  "state": "IL"
}

Import the data from https://gitee.com/xlh_blog/common_content/blob/master/es%E6%B5%8B%E8%AF%95%E6%95%B0%E6%8D%AE.json

POST bank/account/_bulk, with the data above as the request body

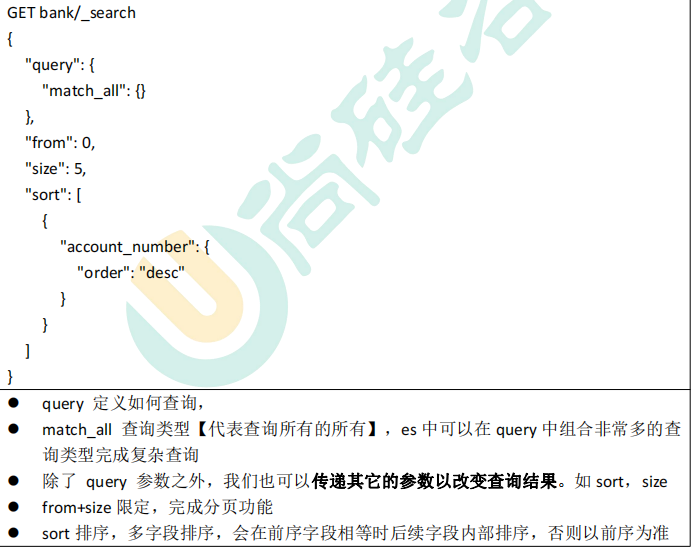

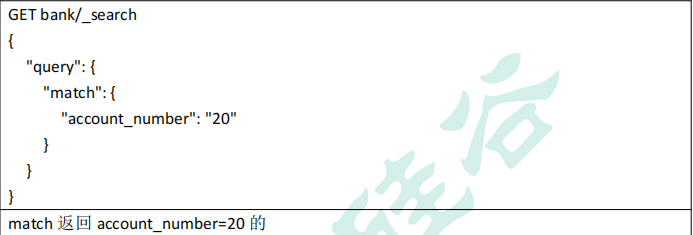

http://192.168.56.10:9200/_cat/indices shows the 1000 documents that were just imported:

yellow open bank GAry5upkQga9NZ6gY2Gyfw 1 1 1000 0 427.7kb 427.7kb

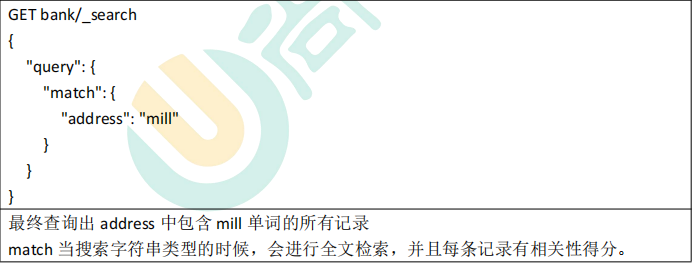

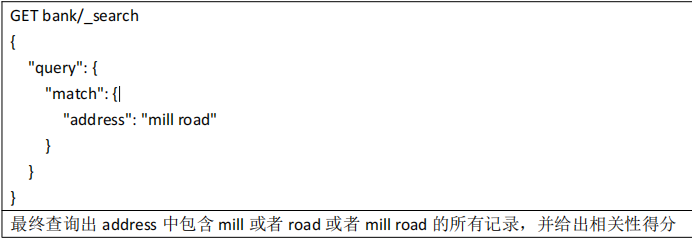

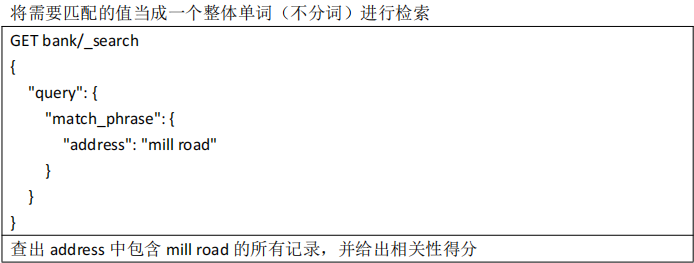

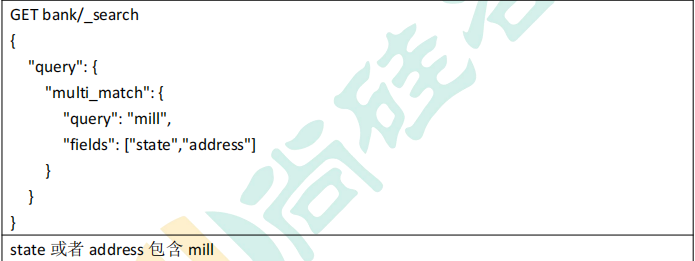

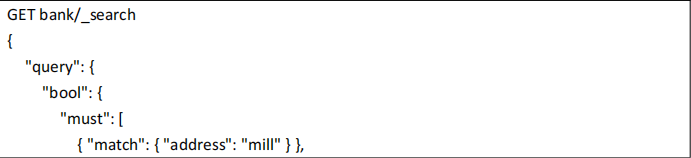

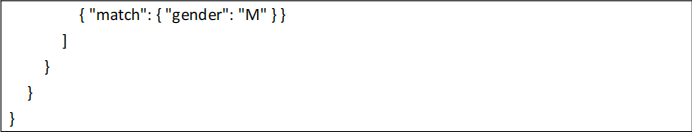

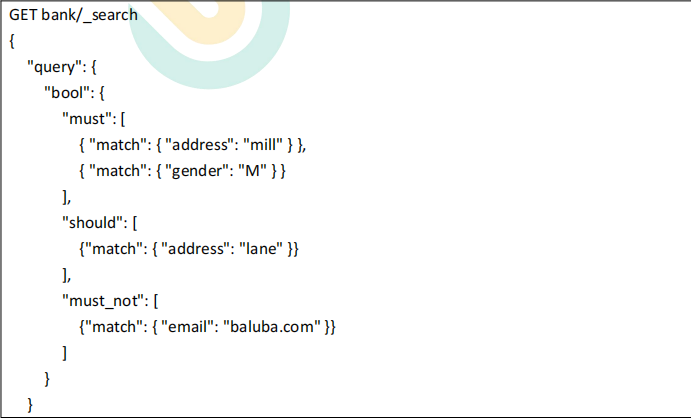

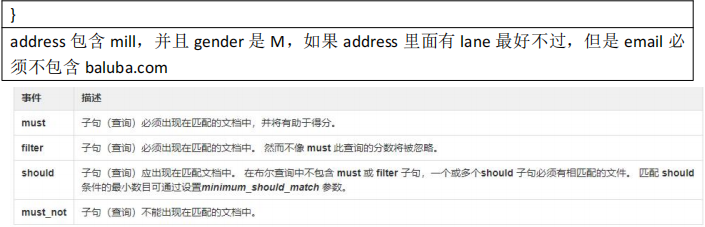

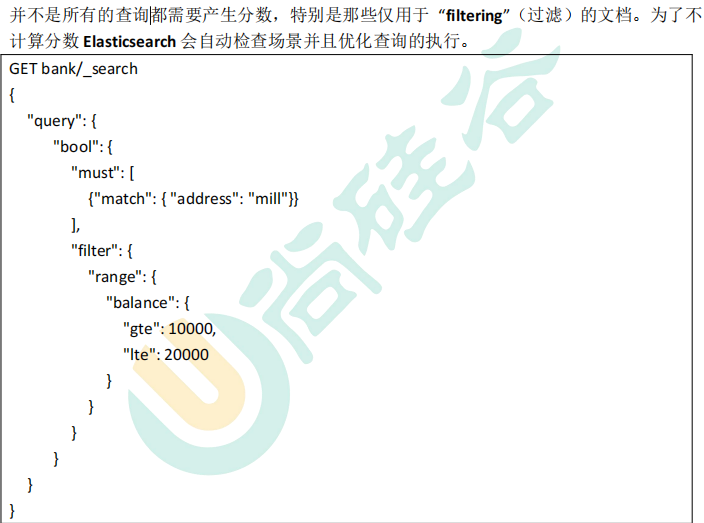

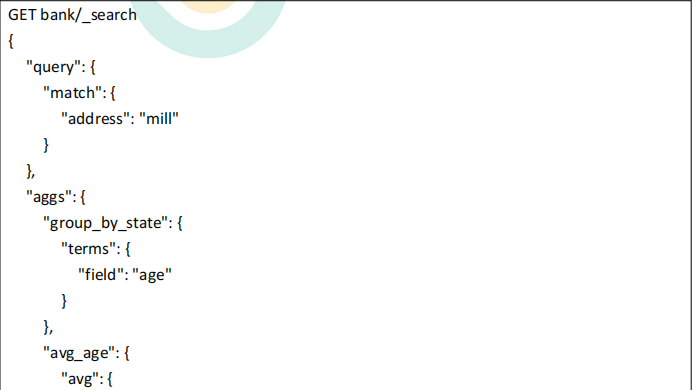

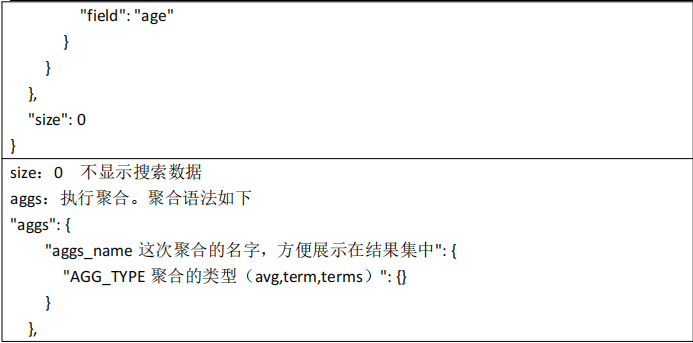

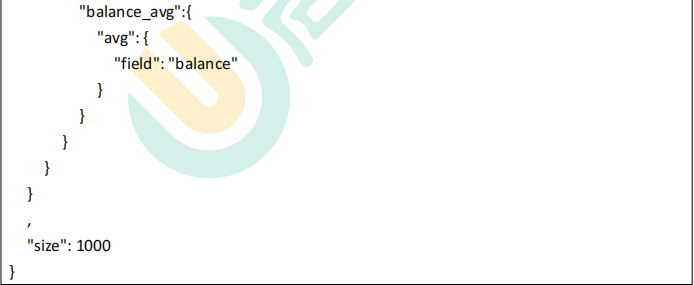

Data content