ECK Deployment

References:

ECK configuration:

https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-elasticsearch-specification.html

ECK setup:

https://medium.com/@kishorechandran41/elasticsearch-cluster-on-google-kubernetes-engine-71bedd71fa85

https://hmj2088.medium.com/elastic-cloud-on-kubernetes-b5a69339e920

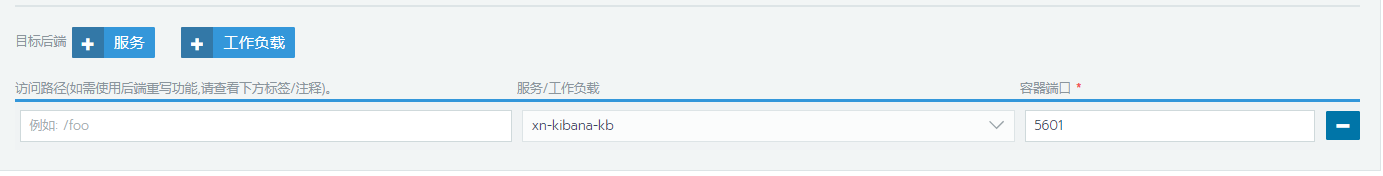

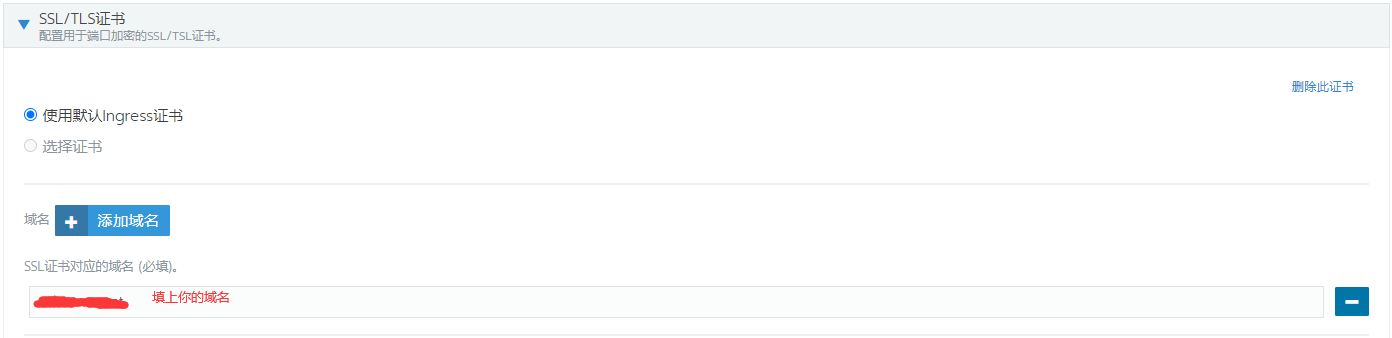

Deployment: 3 nodes (1 master node, 2 data nodes)

Versions:

elastic-operator: 1.5.0

ELK: 7.12.0

1. Preparation

a) Install all-in-one (elastic-operator)

Download URL: https://download.elastic.co/downloads/eck/1.5.0/all-in-one.yaml

Image: docker.elastic.co/eck/eck-operator:1.5.0

    # Create the operator
    kubectl apply -f all-in-one.yaml

b) Create the storage classes

    # Used by the master node
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: eck-master   # storage class name; the ES volume claim refers to it later
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    reclaimPolicy: Retain
    ---
    # Used by the data nodes
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: eck-node     # must match storageClassName in the data nodeSet's volume claim
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    reclaimPolicy: Retain

c) Create the PVs, using local PVs

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: master                            # PV name
    spec:
      capacity:
        storage: 30Gi                         # capacity
      volumeMode: Filesystem
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain   # reclaim policy
      storageClassName: eck-master            # associated storage class
      local:
        path: /mnt/disks/ssd1                 # host path; create this directory manually on the host
      nodeAffinity:
        required:
          nodeSelectorTerms:                  # node selection
            - matchExpressions:
                - key: kubernetes.io/hostname
                  operator: In
                  values:
                    - node01

Create the PVs for the two data nodes the same way. They need the data storage class (eck-node) and enough capacity for the 50Gi claims made below.

2. Deploy Elasticsearch

    apiVersion: elasticsearch.k8s.elastic.co/v1
    kind: Elasticsearch
    metadata:
      name: elk-k8s                 # cluster name; Kibana uses it later to connect to ES
      namespace: elastic-system
    spec:
      version: 7.12.0               # ES version
      nodeSets:
        - name: master              # any name
          count: 1                  # number of nodes
          config:
            node.roles: ["master", "ingest"]   # master node; ingest matters, otherwise enabling xpack.monitoring raises errors
            node.store.allow_mmap: true
            xpack.monitoring.enabled: true
            xpack.monitoring.collection.enabled: true
            indices.breaker.total.use_real_memory: false   # key setting, affects ES performance
            indices.fielddata.cache.size: 40%              # key setting, affects ES performance
            indices.query.bool.max_clause_count: 4096
            indices.memory.index_buffer_size: "25%"
          podTemplate:
            metadata:
              labels:               # labels; unused, shown only as an example
                foo: bar
            spec:
              initContainers:
                - name: sysctl
                  securityContext:
                    privileged: true
                  command: ['sh', '-c', 'sysctl -w vm.max_map_count=262144']
              containers:
                - name: elasticsearch   # do not change this name
                  env:
                    - name: ES_JAVA_OPTS
                      value: -Xms4g -Xmx4g
                  resources:
                    requests:
                      memory: 6Gi
                      cpu: 2
                    limits:
                      memory: 6Gi
                      cpu: 2
                  image: hub.example.com/base/elasticsearch:7.12.0   # pulled from the private registry
                  readinessProbe:       # health-check timings increased
                    exec:
                      command:
                        - bash
                        - -c
                        - /mnt/elastic-internal/scripts/readiness-probe-script.sh
                    failureThreshold: 3
                    initialDelaySeconds: 30
                    periodSeconds: 20
                    successThreshold: 1
                    timeoutSeconds: 20
          volumeClaimTemplates:
            - metadata:
                name: elasticsearch-data   # do not change this name
              spec:
                accessModes:
                  - ReadWriteOnce
                resources:
                  requests:
                    storage: 30Gi          # requested PV size
                storageClassName: eck-master   # associated storage class
        - name: datas
          count: 2
          podTemplate:
            spec:
              initContainers:
                - name: sysctl
                  securityContext:
                    privileged: true
                  command: ['sh', '-c', 'sysctl -w vm.max_map_count=262144']
              containers:
                - name: elasticsearch   # do not change this name
                  env:
                    - name: ES_JAVA_OPTS
                      value: -Xms6g -Xmx6g
                  resources:
                    requests:
                      memory: 8Gi
                      cpu: 4
                    limits:
                      memory: 8Gi
                      cpu: 4
                  image: hub.example.com/base/elasticsearch:7.12.0
                  readinessProbe:
                    exec:
                      command:
                        - bash
                        - -c
                        - /mnt/elastic-internal/scripts/readiness-probe-script.sh
                    failureThreshold: 3
                    initialDelaySeconds: 30
                    periodSeconds: 20
                    successThreshold: 1
                    timeoutSeconds: 20
          config:
            node.roles: ["data"]    # data node
            node.store.allow_mmap: true
            xpack.monitoring.enabled: true
            xpack.monitoring.collection.enabled: true
            indices.breaker.total.use_real_memory: false
            indices.fielddata.cache.size: 40%
            indices.query.bool.max_clause_count: 4096
            indices.memory.index_buffer_size: "25%"
          volumeClaimTemplates:
            - metadata:
                name: elasticsearch-data   # do not change this name
              spec:
                accessModes:
                  - ReadWriteOnce
                resources:
                  requests:
                    storage: 50Gi          # requested PV size
                storageClassName: eck-node   # associated storage class

Create the ES cluster, then check the logs for errors:

    kubectl apply -f elastic.yaml

Get the default Elasticsearch password:

    PASSWORD=$(kubectl -n elastic-system get secrets elk-k8s-es-elastic-user -o go-template='{{.data.elastic | base64decode}}')

Username: elastic

Password: $PASSWORD

    curl -k -u "elastic:$PASSWORD" "https://10.43.123.78:9200/_cat/nodes?v"

3. Adjust settings through the Elasticsearch API

    PUT _cluster/settings
    {
      "persistent": {
        "xpack.monitoring.collection.enabled": true
      }
    }

    PUT /_cluster/settings
    {
      "persistent": {
        "cluster": {
          "max_shards_per_node": 15000
        }
      }
    }

    PUT /_cluster/settings
    {
      "persistent": {
        "indices.breaker.fielddata.limit": "60%",
        "indices.breaker.request.limit": "40%",
        "indices.breaker.total.limit": "70%"
      }
    }

4. Deploy Kibana

    apiVersion: kibana.k8s.elastic.co/v1
    kind: Kibana
    metadata:
      name: xn-kibana
      namespace: elastic-system
    spec:
      version: 7.12.0             # Kibana version
      count: 1
      elasticsearchRef:
        name: "elk-k8s"           # ES cluster name
        namespace: elastic-system
      podTemplate:
        spec:
          containers:
            - name: kibana
              env:
                - name: NODE_OPTIONS
                  value: "--max-old-space-size=2048"
              resources:
                requests:
                  memory: 1Gi
                  cpu: 0.5
                limits:
                  memory: 3Gi
                  cpu: 2
              image: hub.example.com/base/kibana:7.12.0

Deploy Kibana:

    kubectl apply -f kibana.yaml

5. Configure proxy access to Kibana

My Kubernetes cluster was deployed with Rancher, so the nginx-ingress configuration for Kibana was created in Rancher.


Add this annotation to the ingress: nginx.ingress.kubernetes.io/backend-protocol=HTTPS
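For clusters managed without the Rancher UI, the same ingress can be written as a manifest. The sketch below is only an approximation under several assumptions: the hostname `kibana.example.com`, the ingress class `nginx`, and the `networking.k8s.io/v1` API (Kubernetes 1.19 or later) are all hypothetical choices, and the helper name `kibana_ingress_manifest` is made up for illustration. The backend Service name follows ECK's `<kibana-name>-kb-http` naming on port 5601.

```shell
#!/bin/sh
# Hypothetical helper: prints an Ingress manifest for the Kibana Service
# that ECK creates for the "xn-kibana" resource.
# $1 = external hostname (assumed value, adjust to your DNS)
kibana_ingress_manifest() {
  host="${1:-kibana.example.com}"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kibana
  namespace: elastic-system
  annotations:
    # Kibana deployed by ECK serves HTTPS, so nginx must talk TLS to the backend
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
spec:
  ingressClassName: nginx
  rules:
    - host: ${host}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: xn-kibana-kb-http
                port:
                  number: 5601
EOF
}

# Example (requires cluster access):
#   kibana_ingress_manifest kibana.example.com | kubectl apply -f -
```

Printing the manifest rather than applying it directly makes it easy to review the output before piping it to `kubectl apply -f -`.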

Check the cluster status in Kibana:

    GET _cat/nodes
    GET _cluster/settings
    GET _cat/health
    GET _cat/thread_pool?v

Adjust the relevant index template as needed:

    {
      "index": {
        "mapping": {
          "total_fields": {
            "limit": "10000"
          }
        },
        "refresh_interval": "15s",
        "number_of_shards": "1",
        "translog": {
          "flush_threshold_size": "1024mb",
          "sync_interval": "120s",
          "durability": "async"
        },
        "max_docvalue_fields_search": "500",
        "query": {
          "default_field": [
            "message",
            "tags",
            "agent.ephemeral_id",
            "agent.id",
            ......

6. Deploy Filebeat

    apiVersion: beat.k8s.elastic.co/v1beta1
    kind: Beat
    metadata:
      name: filebeat
      namespace: elastic-system
    spec:
      type: filebeat
      version: 7.11.1
      elasticsearchRef:
        name: elk-k8s               # name of the Elasticsearch resource from step 2
        namespace: elastic-system
      kibanaRef:
        name: xn-kibana
        namespace: elastic-system
      config:
        filebeat:
          autodiscover:
            providers:
              - type: kubernetes
                node: ${NODE_NAME}
                hints:
                  enabled: true
                  default_config:
                    type: container
                    paths:
                      - /var/log/containers/*${data.kubernetes.container.id}.log
                    tail_files: true
                    harvester_buffer_size: 65536
                    exclude_lines: ['SqlSession', '<== Total: 0', 'JDBC Connection', '<== Columns']
                    #multiline:
                    #  pattern: ^\d{4}-\d{1,2}-\d{1,2}\s\d{1,2}:\d{1,2}:\d{1,2}
                    #  negate: true
                    #  match: after
        filebeat.config:
          modules:
            path: ${path.config}/modules.d/*.yml
            reload.enabled: false
        filebeat.modules:
          - module: system
            syslog:
              enabled: true
              var.paths: ["/var/log/messages"]
              var.convert_timezone: true
            auth:
              enabled: true
              var.paths: ["/var/log/secure"]
              var.convert_timezone: true
        filebeat.shutdown_timeout: 10s
        logging.level: info         # debug
        #logging.selectors: ["prospector","harvester"]
        output.elasticsearch:
          worker: 4
          bulk_max_size: 22000
          flush_interval: 1s
          indices:
            - index: "k8s-syslog-%{+yyyy.MM.dd}"
              when.equals:
                event.module: "system"
            - index: "k8s-%{[kubernetes.namespace]}-%{+yyyy.MM.dd}"
          username: ${ELASTICSEARCH_USERNAME}
          password: ${ELASTICSEARCH_PASSWORD}
        ##setup.template.enabled: true
        setup.template.name: "k8s"
        setup.template.pattern: "k8s-*"
        setup.ilm.enabled: false
        processors:
          - add_cloud_metadata: {}
          - add_host_metadata: {}
          - drop_fields:
              fields: ["host.ip", "host.mac", "host.id", "host.name", "host.architecture", "host.os.family", "host.os.name", "host.os.version", "host.os.codename", "host.os.kernel", "ecs.version", "agent.name", "agent.version", "kubernetes.node.labels.beta_kubernetes_io/arch", "kubernetes.node.labels.beta_kubernetes_io/os"]
        queue.mem:
          events: 16000
          flush.min_events: 9000
          flush.timeout: 1s
      daemonSet:
        podTemplate:
          spec:
            serviceAccountName: filebeat
            automountServiceAccountToken: true
            terminationGracePeriodSeconds: 30
            dnsPolicy: ClusterFirstWithHostNet
            hostNetwork: true       # Allows to provide richer host metadata
            containers:
              - name: filebeat
                securityContext:
                  runAsUser: 0
                  # If using Red Hat OpenShift uncomment this:
                  #privileged: true
                image: registry.cqxndb.com/base/filebeat:7.11.1
                resources:
                  limits:
                    cpu: 300m
                    memory: 300Mi
                  requests:
                    cpu: 300m
                    memory: 300Mi
                volumeMounts:
                  - name: varlogcontainers
                    mountPath: /var/log/containers
                  - name: varlogpods
                    mountPath: /var/log/pods
                  - name: varlibdockercontainers
                    mountPath: /var/lib/docker/containers
                  - name: varlogmessages
                    mountPath: /var/log/messages
                  - name: varlogsecure
                    mountPath: /var/log/secure
                env:
                  - name: ELASTICSEARCH_USERNAME
                    value: elastic
                  - name: ELASTICSEARCH_PASSWORD
                    valueFrom:
                      secretKeyRef:
                        key: elastic
                        name: elk-k8s-es-elastic-user   # <ES resource name>-es-elastic-user
                  - name: NODE_NAME
                    valueFrom:
                      fieldRef:
                        fieldPath: spec.nodeName
            volumes:
              - name: varlogcontainers
                hostPath:
                  path: /var/log/containers
              - name: varlogpods
                hostPath:
                  path: /var/log/pods
              - name: varlibdockercontainers
                hostPath:
                  path: /var/lib/docker/containers
              - name: varlogmessages
                hostPath:
                  path: /var/log/messages
              - name: varlogsecure
                hostPath:
                  path: /var/log/secure
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: filebeat
    rules:
      - apiGroups: [""]             # "" indicates the core API group
        resources:
          - namespaces
          - nodes
          - pods
        verbs:
          - get
          - watch
          - list
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: filebeat
      namespace: elastic-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: filebeat
    subjects:
      - kind: ServiceAccount
        name: filebeat
        namespace: elastic-system
    roleRef:
      kind: ClusterRole
      name: filebeat
      apiGroup: rbac.authorization.k8s.io

7. Deploy APM Server

    apiVersion: apm.k8s.elastic.co/v1
    kind: ApmServer
    metadata:
      name: xn-apmserver
      namespace: elastic-system
    spec:
      version: 7.11.1
      count: 1
      elasticsearchRef:
        name: "elk-k8s"             # name of the Elasticsearch resource from step 2
      kibanaRef:
        name: "xn-kibana"           # name of the Kibana resource
      config:
        apm-server:
          rum.enabled: true
          ilm.enabled: true
          rum.event_rate.limit: 300
          rum.event_rate.lru_size: 1000
          rum.allow_origins: ['*']
        logging:
          level: warning
        queue.mem:
          events: 10240
        output.elasticsearch:
          worker: 2
          bulk_max_size: 500
      podTemplate:
        spec:
          containers:
            - name: apm-server
              resources:
                requests:
                  memory: 2Gi
                  cpu: 2
                limits:
                  memory: 4Gi
                  cpu: 4
              image: registry.example.com/base/apm-server:7.11.1
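Once all the manifests are applied, it can help to look at every ECK-managed resource at once, since the operator reports health and phase in the `kubectl get` output. A minimal sketch, assuming everything lives in `elastic-system` as in this guide; the function name `eck_status` and the `KUBECTL` override variable are hypothetical conveniences, not part of ECK.

```shell
#!/bin/sh
# Hypothetical helper: lists the ECK custom resources deployed in this guide.
# $1 = namespace (defaults to elastic-system)
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the command.
eck_status() {
  ns="${1:-elastic-system}"
  ${KUBECTL:-kubectl} get elasticsearch,kibana,beat,apmserver -n "$ns"
}

# Example (requires cluster access):
#   eck_status
# Preview without touching the cluster:
#   KUBECTL=echo eck_status
```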

Connecting applications to APM Server:

Reference: https://github.com/maieve/Eck-apm-example
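An application's APM agent needs the server URL and the secret token. The sketch below shows one way to obtain both for the `xn-apmserver` resource above. It relies on ECK's naming conventions: the Service is `<name>-apm-http` on port 8200, and the token is stored in the Secret `<name>-apm-token` under the key `secret-token`. The helper names and the `KUBECTL` override are hypothetical. `ELASTIC_APM_SERVER_URL` and `ELASTIC_APM_SECRET_TOKEN` are the environment variables read by several Elastic APM agents; check your agent's documentation before relying on them.

```shell
#!/bin/sh
# Hypothetical helper: in-cluster URL of the APM Server Service created by ECK.
# $1 = ApmServer resource name, $2 = namespace (defaults to elastic-system)
apm_server_url() {
  name="$1"
  ns="${2:-elastic-system}"
  printf 'https://%s-apm-http.%s.svc:8200\n' "$name" "$ns"
}

# Hypothetical helper: reads the APM secret token from the Secret ECK creates.
# Requires cluster access; not called when this file is sourced.
apm_secret_token() {
  name="$1"
  ns="${2:-elastic-system}"
  ${KUBECTL:-kubectl} get secret "${name}-apm-token" -n "$ns" \
    -o go-template='{{index .data "secret-token" | base64decode}}'
}

# Example (requires cluster access):
#   export ELASTIC_APM_SERVER_URL="$(apm_server_url xn-apmserver)"
#   export ELASTIC_APM_SECRET_TOKEN="$(apm_secret_token xn-apmserver)"
```

ECK serves APM Server over HTTPS with a self-signed certificate by default, so the agent must either trust the ECK-generated CA or have certificate verification disabled.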