Fully Distributed Hadoop 2.3 Installation and Configuration

    [root@master-hadoop ~]# tar zxvf jdk-7u17-linux-x64.tar.gz
    [root@master-hadoop ~]# mv jdk1.7.0_17/ /usr/local/jdk1.7
    [root@slave1-hadoop ~]# vi /etc/profile    # append these variables at the end of the file
    JAVA_HOME=/usr/local/jdk1.7
    PATH=$PATH:$JAVA_HOME/bin
    CLASSPATH=$JAVA_HOME/lib:$JAVA_HOME/jre/lib
    export JAVA_HOME CLASSPATH PATH
    [root@slave1-hadoop ~]# source /etc/profile
    [root@slave1-hadoop ~]# java -version    # version output means the configuration succeeded
    java version "1.7.0_17"
    Java(TM) SE Runtime Environment (build 1.7.0_17-b02)
    Java HotSpot(TM) 64-Bit Server VM (build 23.7-b01, mixed mode)
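The profile changes above can be sanity-checked before moving on. The following is a minimal sketch and not part of the original walkthrough; the helper name `path_contains` is hypothetical. It tests whether a directory appears as one entry of a colon-separated PATH-style string.

```shell
# Hypothetical helper (not from the original post): succeeds when directory $1
# is one of the colon-separated entries of $2 (defaults to the current PATH).
path_contains() {
    case ":${2-$PATH}:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}
```

After `source /etc/profile`, something like `path_contains "$JAVA_HOME/bin" && echo "JDK is on PATH"` should print the message if the variables were exported as shown.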

    [root@master-hadoop ~]# useradd -u 600 hadoop
    [root@master-hadoop ~]# passwd hadoop
    Changing password for user hadoop.
    New password:
    Retype new password:
    passwd: all authentication tokens updated successfully.

    [root@master-hadoop ~]# su - hadoop
    [hadoop@master-hadoop ~]$ ssh-keygen -t rsa    # press Enter at every prompt to generate the key pair
    [hadoop@master-hadoop ~]$ cd /home/hadoop/.ssh/
    [hadoop@master-hadoop .ssh]$ ls
    id_rsa  id_rsa.pub
    [hadoop@slave1-hadoop ~]$ mkdir /home/hadoop/.ssh    # log in to both slaves and create the .ssh directory
    [hadoop@slave2-hadoop ~]$ mkdir /home/hadoop/.ssh
    [hadoop@master-hadoop .ssh]$ scp id_rsa.pub hadoop@slave1-hadoop:/home/hadoop/.ssh/
    [hadoop@master-hadoop .ssh]$ scp id_rsa.pub hadoop@slave2-hadoop:/home/hadoop/.ssh/
    [hadoop@slave1-hadoop ~]$ cd /home/hadoop/.ssh/
    [hadoop@slave1-hadoop .ssh]$ cat id_rsa.pub >> authorized_keys
    [hadoop@slave1-hadoop .ssh]$ chmod 600 authorized_keys
    [hadoop@slave1-hadoop .ssh]$ chmod 700 ../.ssh/    # the directory mode must be 700
    [root@slave1-hadoop ~]# vi /etc/ssh/sshd_config    # enable RSA authentication
    RSAAuthentication yes
    PubkeyAuthentication yes
    AuthorizedKeysFile .ssh/authorized_keys
    [root@slave1-hadoop ~]# service sshd restart
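The listing above notes that the `.ssh` directory must be mode 700 and sets `authorized_keys` to 600. As a rough sketch (the helper names `mode_string` and `check_ssh_perms` are hypothetical and not from the original post), those two modes could be verified on each slave like this:

```shell
# Hypothetical helpers (not from the original post).

# Prints the type-and-permission string of a path, e.g. "drwx------".
mode_string() {
    ls -ld "$1" 2>/dev/null | cut -c1-10
}

# Succeeds and prints "ok" when directory $1 is mode 700 and its
# authorized_keys file is mode 600; otherwise reports the offending path.
check_ssh_perms() {
    dir=$1
    [ "$(mode_string "$dir")" = "drwx------" ] \
        || { echo "unexpected mode on $dir"; return 1; }
    [ "$(mode_string "$dir/authorized_keys")" = "-rw-------" ] \
        || { echo "unexpected mode on $dir/authorized_keys"; return 1; }
    echo "ok"
}
```

Running `check_ssh_perms /home/hadoop/.ssh` as the `hadoop` user on a slave should print `ok` once the `chmod` steps have been applied.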

    [root@master-hadoop ~]# tar zxvf hadoop-2.3.0.tar.gz -C /home/hadoop/
    [root@master-hadoop ~]# chown hadoop.hadoop -R /home/hadoop/hadoop-2.3.0/
    [root@master-hadoop ~]# vi /etc/profile    # add the Hadoop variables for convenience
    HADOOP_HOME=/home/hadoop/hadoop-2.3.0/
    PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
    export HADOOP_HOME PATH
    [root@master-hadoop ~]# source /etc/profile

    [hadoop@master-hadoop ~]$ cd hadoop-2.3.0/etc/hadoop/
    [hadoop@master-hadoop hadoop]$ vi hadoop-env.sh
    export JAVA_HOME=/usr/local/jdk1.7/
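Since `hadoop-env.sh` hard-codes `JAVA_HOME`, a wrong path there only shows up when the daemons start. The sketch below (the helper name `java_home_ok` is hypothetical, not from the original post) checks that a candidate directory actually contains an executable `bin/java`.

```shell
# Hypothetical helper (not from the original post): succeeds when the given
# directory contains an executable bin/java, as a JAVA_HOME should.
# A trailing slash on the argument is tolerated.
java_home_ok() {
    [ -x "${1%/}/bin/java" ]
}
```

For the value used above, `java_home_ok /usr/local/jdk1.7/ || echo "JAVA_HOME looks wrong"` would flag a mistyped path.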

    [hadoop@master-hadoop hadoop]$ vi slaves
    slave1-hadoop
    slave2-hadoop


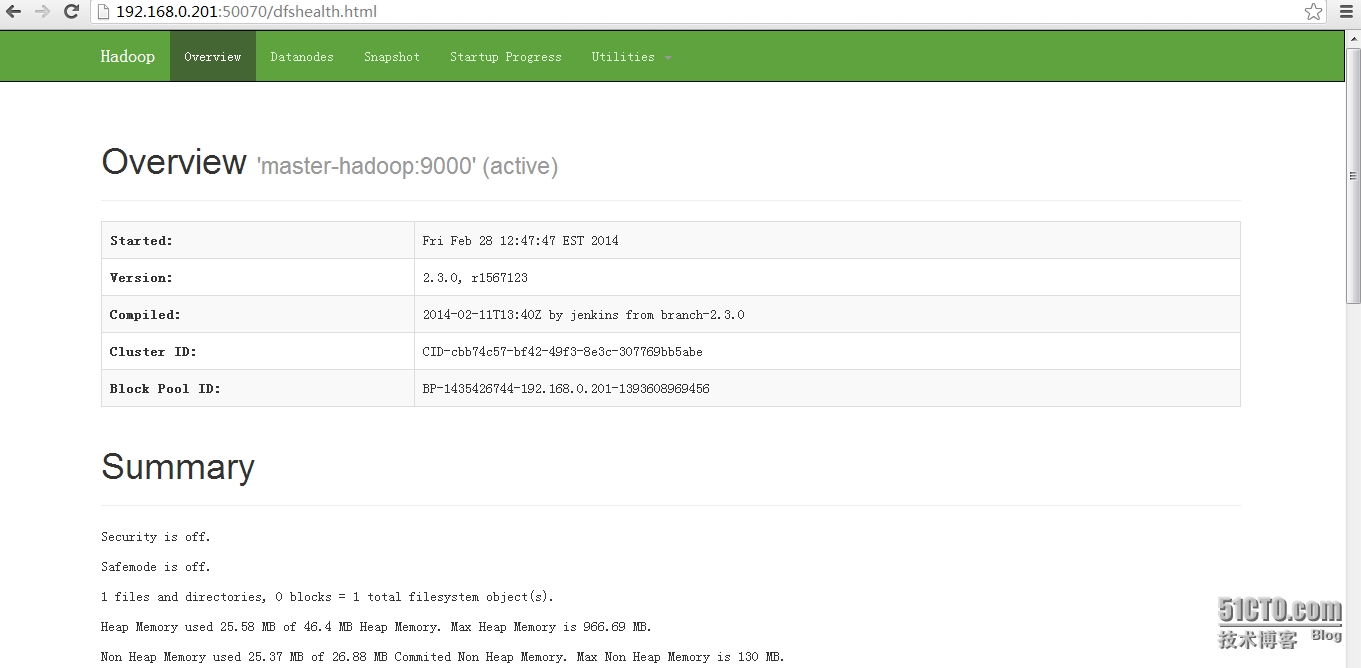

    <!-- core-site.xml -->
    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master-hadoop:9000</value>
      </property>
      <property>
        <name>io.file.buffer.size</name>
        <value>131072</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/home/hadoop/tmp</value>
      </property>
    </configuration>

4. hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/home/hadoop/dfs/name</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/home/hadoop/dfs/data</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <!-- number of block replicas; the default is 3, set to 2 here because there are two slaves -->
        <value>2</value>
      </property>
    </configuration>
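The `dfs.replication` value above is tied to the number of slaves: each replica of a block lives on a different DataNode, so a factor larger than the DataNode count cannot be satisfied. As a minimal sketch (the helper names `count_slaves` and `replication_ok` are hypothetical and not from the original post), the relationship can be checked against the `slaves` file:

```shell
# Hypothetical helpers (not from the original post).

# Prints the number of non-blank lines (one hostname per line) in a slaves file.
count_slaves() {
    grep -c '[^[:space:]]' "$1"
}

# Succeeds when replication factor $1 does not exceed the number of hosts
# listed in slaves file $2.
replication_ok() {
    [ "$1" -le "$(count_slaves "$2")" ]
}
```

With the two-host `slaves` file from this setup, `replication_ok 2 slaves` succeeds while `replication_ok 3 slaves` fails.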


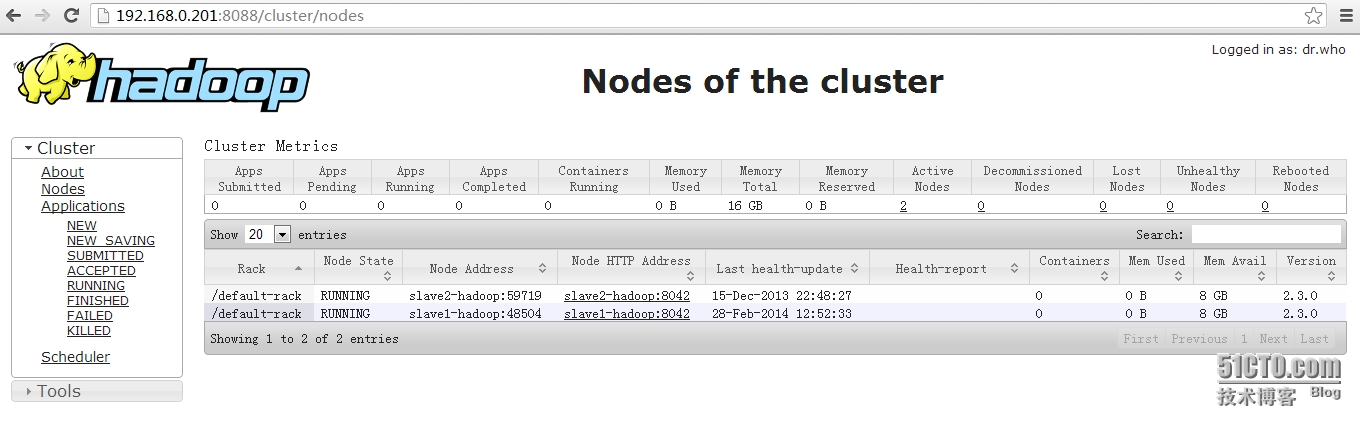

    <!-- yarn-site.xml -->
    <configuration>
      <property>
        <name>yarn.resourcemanager.address</name>
        <value>master-hadoop:8032</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>master-hadoop:8030</value>
      </property>
      <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>master-hadoop:8031</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>master-hadoop:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>master-hadoop:8088</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
    </configuration>

    <!-- mapred-site.xml -->
    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>master-hadoop:10020</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>master-hadoop:19888</value>
      </property>
    </configuration>
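The listings above edit the configuration on master-hadoop only; this section does not show how the slaves receive the same files. One plausible approach, given the passwordless SSH set up earlier, is to copy the Hadoop directory to each host in the `slaves` file. The sketch below is an assumption rather than the original author's procedure: the helper name `print_sync_commands` is hypothetical, and it only prints the `scp` commands so they can be reviewed before anything is run.

```shell
# Hypothetical helper (not from the original post): prints, without running
# them, the scp commands that would copy Hadoop directory $1 to the hadoop
# user's home on every host listed in slaves file $2. Blank lines are skipped.
print_sync_commands() {
    hadoop_dir=$1
    slaves_file=$2
    while IFS= read -r host || [ -n "$host" ]; do
        [ -n "$host" ] || continue
        echo "scp -r $hadoop_dir hadoop@$host:/home/hadoop/"
    done < "$slaves_file"
}
```

For example, `print_sync_commands /home/hadoop/hadoop-2.3.0 /home/hadoop/hadoop-2.3.0/etc/hadoop/slaves` would list one command per slave; piping the output to `sh` would then execute them.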

