Configuring Docker and Kubernetes (K8s) on CentOS 7.6

Method 1:

| Role | Hostname | IP |
| --- | --- | --- |
| Master, etcd, registry | k8s-01 | 10.8.8.31 |
| Node1 | k8s-02 | 10.8.8.32 |
| Node2 | k8s-03 | 10.8.8.33 |

Part 1: Environment setup (required on every node)

1.1: Create the virtual machines (preferably install each one from scratch instead of cloning from an image)

Edit the NIC configuration file: vi /etc/sysconfig/network-scripts/ifcfg-ens33

```
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
#BOOTPROTO=dhcp
BOOTPROTO=static
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=ens33
#UUID=1bc6ef33-bdb7-4f3d-8021-b138426828ed
DEVICE=ens33
#ONBOOT=no
ONBOOT=yes
IPADDR=10.8.8.31
NETMASK=255.255.255.0
GATEWAY=10.8.8.2
DNS1=8.8.8.8
DNS2=1.1.1.1
```

On k8s-02 and k8s-03, set IPADDR to 10.8.8.32 and 10.8.8.33 respectively.
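Only IPADDR changes from one VM to the next, so a small helper that prints the IPv4 part of this file for a given address can prevent copy-paste mistakes. This is a sketch under stated assumptions. The function name `gen_ifcfg` is hypothetical. It keeps only the IPv4-related lines, so copy the remaining lines from the full file above if you want an exact match. It also assumes every node uses gateway 10.8.8.2 and the same DNS servers.

```shell
# Print the IPv4 settings of ifcfg-ens33 for one node (hypothetical helper).
# Assumption: all nodes share 10.8.8.0/24, gateway 10.8.8.2 and the same DNS;
# only IPADDR differs.
gen_ifcfg() {
    ip="$1"
    cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=ens33
DEVICE=ens33
ONBOOT=yes
IPADDR=${ip}
NETMASK=255.255.255.0
GATEWAY=10.8.8.2
DNS1=8.8.8.8
DNS2=1.1.1.1
EOF
}

# Usage on k8s-02 (writes the file, then restarts the legacy network service):
#   gen_ifcfg 10.8.8.32 > /etc/sysconfig/network-scripts/ifcfg-ens33
#   systemctl restart network
```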

1.2: Set the hostname

hostnamectl set-hostname k8s-01 (use k8s-02 and k8s-03 on the other two nodes)

1.3: Install Ansible (on k8s-01, which runs the Ansible commands for the whole cluster)

yum install -y ansible

vi /etc/ansible/hosts

```
# This is the default ansible 'hosts' file.
#
# It should live in /etc/ansible/hosts
#
#   - Comments begin with the '#' character
#   - Blank lines are ignored
#   - Groups of hosts are delimited by [header] elements
#   - You can enter hostnames or ip addresses
#   - A hostname/ip can be a member of multiple groups

[k8s]
10.8.8.31
10.8.8.32
10.8.8.33

[master]
10.8.8.31

[node]
10.8.8.32
10.8.8.33
```

1.4: Set up SSH trust (passwordless login); run this on every node

ssh-keygen -t rsa

```
[root@localhost ~]# ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:NFGl8BmAOW6ch93oiRuBLzNS1jY5dcIU6bGpwLUyUeQ root@k8s-01
The key's randomart image is:
+---[RSA 2048]----+
| oo=*=o.. |
| ..= *+.+ |
| . BEXoO+ |
| O #.B.. |
| o B OS. |
| . + = o |
| . + o |
| . |
| |
+----[SHA256]-----+
```

ssh-copy-id -i /root/.ssh/id_rsa.pub 10.8.8.31

ssh-copy-id -i /root/.ssh/id_rsa.pub 10.8.8.32

ssh-copy-id -i /root/.ssh/id_rsa.pub 10.8.8.33

```
[root@localhost .ssh]# ssh-copy-id -i /root/.ssh/id_rsa.pub 10.8.8.33
/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
The authenticity of host '10.8.8.33 (10.8.8.33)' can't be established.
ECDSA key fingerprint is SHA256:ozAbIXZWFBIwjiypTD23hQ9ioBr81+MZd1TGCQcc0o8.
ECDSA key fingerprint is MD5:9d:0c:48:4f:c4:50:7c:08:71:33:9e:86:13:46:b3:12.
Are you sure you want to continue connecting (yes/no)? yes
/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
root@10.8.8.33's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '10.8.8.33'"
and check to make sure that only the key(s) you wanted were added.
```

1.5: Reboot the cluster with Ansible

ansible all -a 'reboot'

1.6: Update the system, install common tools and synchronize the time

ansible all -a 'yum update -y'

ansible all -a 'yum install -y net-tools.x86_64'

ansible all -a 'yum install -y vim-enhanced.x86_64'

ansible all -a 'yum install -y wget'

ansible all -a 'yum install -y tree'

ansible all -a 'yum install -y ntp ntpdate'

echo '*/10 * * * * root ntpdate cn.pool.ntp.org' >> /etc/crontab

ansible all -a 'ntpdate cn.pool.ntp.org'
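The `echo ... >> /etc/crontab` line above adds the entry only on the node where it runs. Running it a second time adds a duplicate. Below is a minimal sketch of an idempotent alternative to run on each node. `append_once` is a hypothetical helper name, not part of the original setup.

```shell
# Append a line to a file only if that exact line is not already present,
# so repeating this setup step never duplicates the cron entry.
append_once() {
    file="$1"
    line="$2"
    touch "$file"
    # -x: whole-line match, -F: treat the line as a fixed string (the '*' stays literal)
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage on each node:
#   append_once /etc/crontab '*/10 * * * * root ntpdate cn.pool.ntp.org'
```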

Edit the hosts file and copy it to every node

vim /etc/hosts

```
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
10.8.8.31 k8s-01
10.8.8.32 k8s-02
10.8.8.33 k8s-03
```

scp /etc/hosts root@10.8.8.32:/etc/

scp /etc/hosts root@10.8.8.33:/etc/
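The three node entries can be generated from the node table instead of typed by hand. This is a sketch. `NODES` and `gen_hosts_entries` are hypothetical names, and the list mirrors the table at the top of this post. Once the file is ready on k8s-01, Ansible's `copy` module can push it to the nodes in one step.

```shell
# Node list in "hostname:ip" form, taken from the table at the top of the post.
NODES="k8s-01:10.8.8.31 k8s-02:10.8.8.32 k8s-03:10.8.8.33"

# Print one "ip hostname" line per node, ready to append to /etc/hosts.
gen_hosts_entries() {
    for entry in $NODES; do
        name="${entry%%:*}"   # text before the colon
        ip="${entry#*:}"      # text after the colon
        printf '%s %s\n' "$ip" "$name"
    done
}

# Usage on k8s-01, then push the file to both nodes:
#   gen_hosts_entries >> /etc/hosts
#   ansible node -m copy -a 'src=/etc/hosts dest=/etc/hosts'
```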

1.7: Disable the firewall

[root@k8s-01 ~]# ansible all -a 'systemctl stop firewalld'

[root@k8s-01 ~]# ansible all -a 'systemctl disable firewalld'

[root@k8s-01 ~]# ansible all -a 'systemctl mask firewalld'

1.8: Disable SELinux

https://www.cnblogs.com/liwei0526vip/p/5644163.html (sed usage)

getenforce

vim /etc/selinux/config

SELINUX=disabled

ansible all -a "sed -i '7s/.*/#&/' /etc/selinux/config"

ansible all -a "sed -i '7a SELINUX=disabled' /etc/selinux/config"
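The two `sed` commands above depend on `SELINUX=` sitting on line 7 of /etc/selinux/config. `7s/.*/#&/` comments out that line, and `7a` appends `SELINUX=disabled` after it. The sketch below matches the key instead of the line number, so it still works if the file layout differs. `disable_selinux_in` is a hypothetical helper. `setenforce 0` switches the running system to permissive mode right away. The `disabled` setting itself only takes effect after the reboot at the end of 1.9.

```shell
# Set SELINUX=disabled in an SELinux config file by matching the key,
# not by assuming it is on line 7. SELINUXTYPE= is left untouched.
disable_selinux_in() {
    file="$1"
    if grep -q '^SELINUX=' "$file"; then
        sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
    else
        printf 'SELINUX=disabled\n' >> "$file"
    fi
}

# Usage on a node:
#   disable_selinux_in /etc/selinux/config
#   setenforce 0    # permissive immediately; "disabled" applies after reboot
```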

1.9: Disable the swap partition

swapoff -a

rm /dev/mapper/centos-swap

sed -i 's/.*swap.*/#&/' /etc/fstab

ansible all -a 'swapoff -a'

ansible all -a 'rm /dev/mapper/centos-swap'

ansible all -a "sed -i 's/.*swap.*/#&/' /etc/fstab"

ansible all -a 'reboot'
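`sed -i 's/.*swap.*/#&/'` comments out every line that contains "swap" anywhere, and each re-run adds another `#`. The sketch below is slightly stricter. It only touches active lines with a whitespace-delimited `swap` field, so running it again changes nothing. The helper name `comment_out_swap` is hypothetical.

```shell
# Comment out active swap entries in an fstab-style file.
# Lines already starting with '#' are skipped, so the edit is idempotent.
comment_out_swap() {
    sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Usage on each node:
#   swapoff -a
#   comment_out_swap /etc/fstab
```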

Part 2: Deploy etcd (installed with yum)

https://blog.csdn.net/xiaozhangdetuzi/article/details/81302405

https://www.jianshu.com/p/e892997b387b

2.1: Install etcd on all nodes

ansible all -a 'yum install -y etcd'

2.2: Configure etcd.conf

vim /etc/etcd/etcd.conf

```
[root@k8s-01 ~]# vim /etc/etcd/etcd.conf   (original file)
#[Member]
#ETCD_CORS=""
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
#ETCD_LISTEN_PEER_URLS="http://localhost:2380"
ETCD_LISTEN_CLIENT_URLS="http://localhost:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
ETCD_NAME="default"
#ETCD_SNAPSHOT_COUNT="100000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
#ETCD_QUOTA_BACKEND_BYTES="0"
#ETCD_MAX_REQUEST_BYTES="1572864"
#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"
#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"
#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"
#
#[Clustering]
#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_INITIAL_CLUSTER="default=http://localhost:2380"
#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_STRICT_RECONFIG_CHECK="true"
#ETCD_ENABLE_V2="true"
#
#[Proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT="5000"
#ETCD_PROXY_REFRESH_INTERVAL="30000"
#ETCD_PROXY_DIAL_TIMEOUT="1000"
#ETCD_PROXY_WRITE_TIMEOUT="5000"
#ETCD_PROXY_READ_TIMEOUT="0"
#
#[Security]
#ETCD_CERT_FILE=""
#ETCD_KEY_FILE=""
#ETCD_CLIENT_CERT_AUTH="false"
#ETCD_TRUSTED_CA_FILE=""
#ETCD_AUTO_TLS="false"
#ETCD_PEER_CERT_FILE=""
#ETCD_PEER_KEY_FILE=""
#ETCD_PEER_CLIENT_CERT_AUTH="false"
#ETCD_PEER_TRUSTED_CA_FILE=""
#ETCD_PEER_AUTO_TLS="false"
#
#[Logging]
#ETCD_DEBUG="false"
#ETCD_LOG_PACKAGE_LEVELS=""
#ETCD_LOG_OUTPUT="default"
#
#[Unsafe]
#ETCD_FORCE_NEW_CLUSTER="false"
#
#[Version]
#ETCD_VERSION="false"
#ETCD_AUTO_COMPACTION_RETENTION="0"
#
#[Profiling]
#ETCD_ENABLE_PPROF="false"
#ETCD_METRICS="basic"
#
#[Auth]
#ETCD_AUTH_TOKEN="simple"
```

On k8s-01:

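```
[root@k8s-01 ~]# vim /etc/etcd/etcd.conf
#[Member]
# Node name
ETCD_NAME="k8s-01"
# Data directory of this node
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
# URL(s) this node listens on for traffic from the other members
ETCD_LISTEN_PEER_URLS="http://10.8.8.31:2380"
# URL(s) this node listens on for clients; clients connect here to talk to etcd
ETCD_LISTEN_CLIENT_URLS="http://10.8.8.31:2379,http://127.0.0.1:2379"
#[Clustering]
# Peer URL of this node, advertised to the other members of the cluster
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.8.8.31:2380"
# Client URL of this node, advertised to the other members of the cluster
ETCD_ADVERTISE_CLIENT_URLS="http://10.8.8.31:2379"
# All members of the cluster, in the form node1=http://ip1:2380,node2=http://ip2:2380,...
# Note: node1 is the name set with --name, and ip1:2380 is that member's
# --initial-advertise-peer-urls value
ETCD_INITIAL_CLUSTER="k8s-01=http://10.8.8.31:2380,k8s-02=http://10.8.8.32:2380,k8s-03=http://10.8.8.33:2380"
# Token used to bootstrap the cluster; keep it unique per cluster.
# With a distinct token, re-creating a cluster (even with an identical configuration)
# generates new cluster and member IDs; otherwise clusters can clash with each
# other and cause unpredictable errors
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
# "new" when bootstrapping a new cluster; "existing" when joining an existing one
ETCD_INITIAL_CLUSTER_STATE="new"
```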

On k8s-02:

```
#[Member]
ETCD_NAME="k8s-02"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://10.8.8.32:2380"
ETCD_LISTEN_CLIENT_URLS="http://10.8.8.32:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://10.8.8.32:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://10.8.8.32:2379"
ETCD_INITIAL_CLUSTER="k8s-01=http://10.8.8.31:2380,k8s-02=http://10.8.8.32:2380,k8s-03=http://10.8.8.33:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```

k8s-03 is configured the same way, with ETCD_NAME="k8s-03" and its own IP, 10.8.8.33.
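The members' files differ only in ETCD_NAME and the IP addresses, so all three can come from one template. This is a sketch that assumes the values used in this post. `CLUSTER` and `gen_etcd_conf` are hypothetical names.

```shell
# ETCD_INITIAL_CLUSTER is identical on every member.
CLUSTER="k8s-01=http://10.8.8.31:2380,k8s-02=http://10.8.8.32:2380,k8s-03=http://10.8.8.33:2380"

# Print the etcd.conf used in this post for one member (name + IP).
gen_etcd_conf() {
    name="$1"
    ip="$2"
    cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="http://${ip}:2379,http://127.0.0.1:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://${ip}:2379"
ETCD_INITIAL_CLUSTER="${CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Usage on k8s-03:
#   gen_etcd_conf k8s-03 10.8.8.33 > /etc/etcd/etcd.conf
```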

2.3: Start the etcd cluster

Run on each node:

systemctl start etcd

or start it on all nodes at once with Ansible:

ansible all -a 'systemctl start etcd'

Check that the cluster is up:

etcdctl member list

```
[root@k8s-01 etcd]# etcdctl member list
21a69e29ab8d1218: name=k8s-02 peerURLs=http://10.8.8.32:2380 clientURLs=http://10.8.8.32:2379 isLeader=true
3df47f4e2d43b21a: name=k8s-03 peerURLs=http://10.8.8.33:2380 clientURLs=http://10.8.8.33:2379 isLeader=false
5b118d787e1ab5d3: name=k8s-01 peerURLs=http://10.8.8.31:2380 clientURLs=http://10.8.8.31:2379 isLeader=false
```

isLeader=true shows that k8s-02 is currently the leader.

etcdctl -C http://10.8.8.31:2379 cluster-health

```
[root@k8s-01 etcd]# etcdctl -C http://10.8.8.31:2379 cluster-health
member 21a69e29ab8d1218 is healthy: got healthy result from http://10.8.8.32:2379
member 3df47f4e2d43b21a is healthy: got healthy result from http://10.8.8.33:2379
member 5b118d787e1ab5d3 is healthy: got healthy result from http://10.8.8.31:2379
cluster is healthy
```
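If the health check runs from a script, for example before moving on to flannel, a small filter over the `cluster-health` output can turn it into an exit status. This is a sketch that assumes the etcd v2 output format shown above. `check_etcd_health` is a hypothetical helper.

```shell
# Read `etcdctl cluster-health` output on stdin, print the number of healthy
# members, and return non-zero unless the verdict line is "cluster is healthy".
check_etcd_health() {
    awk '
        /^member .* is healthy/ { healthy++ }
        /^cluster is healthy/   { ok = 1 }
        END { print healthy + 0; exit (ok ? 0 : 1) }
    '
}

# Usage:
#   etcdctl -C http://10.8.8.31:2379 cluster-health | check_etcd_health
```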

Enable etcd at boot:

ansible all -a 'systemctl enable etcd'

Part 3: Install Docker

3.1: Install Docker with yum (on every node)

ansible all -a 'yum install -y docker'

ansible all -a 'docker version' (this fails as shown below, because the Docker daemon has not been started yet)

```
[root@k8s-01 etcd]# ansible all -a 'docker version'
10.8.8.31 | FAILED | rc=1 >>
Client:
 Version:         1.13.1
 API version:     1.26
 Package version:
Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
non-zero return code

10.8.8.32 | FAILED | rc=1 >>
Client:
 Version:         1.13.1
 API version:     1.26
 Package version:
Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
non-zero return code

10.8.8.33 | FAILED | rc=1 >>
Client:
 Version:         1.13.1
 API version:     1.26
 Package version:
Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?
non-zero return code
```

ansible all -a 'systemctl daemon-reload'

ansible all -a 'systemctl restart docker'

ansible all -a 'docker version'

```
[root@k8s-01 etcd]# ansible all -a 'docker version'
10.8.8.31 | SUCCESS | rc=0 >>
Client:
 Version:         1.13.1
 API version:     1.26
 Package version: docker-1.13.1-91.git07f3374.el7.centos.x86_64
 Go version:      go1.10.3
 Git commit:      07f3374/1.13.1
 Built:           Wed Feb 13 17:10:12 2019
 OS/Arch:         linux/amd64

Server:
 Version:         1.13.1
 API version:     1.26 (minimum version 1.12)
 Package version: docker-1.13.1-91.git07f3374.el7.centos.x86_64
 Go version:      go1.10.3
 Git commit:      07f3374/1.13.1
 Built:           Wed Feb 13 17:10:12 2019
 OS/Arch:         linux/amd64
 Experimental:    false

10.8.8.33 | SUCCESS | rc=0 >>
Client:
 Version:         1.13.1
 API version:     1.26
 Package version: docker-1.13.1-91.git07f3374.el7.centos.x86_64
 Go version:      go1.10.3
 Git commit:      07f3374/1.13.1
 Built:           Wed Feb 13 17:10:12 2019
 OS/Arch:         linux/amd64

Server:
 Version:         1.13.1
 API version:     1.26 (minimum version 1.12)
 Package version: docker-1.13.1-91.git07f3374.el7.centos.x86_64
 Go version:      go1.10.3
 Git commit:      07f3374/1.13.1
 Built:           Wed Feb 13 17:10:12 2019
 OS/Arch:         linux/amd64
 Experimental:    false

10.8.8.32 | SUCCESS | rc=0 >>
Client:
 Version:         1.13.1
 API version:     1.26
 Package version: docker-1.13.1-91.git07f3374.el7.centos.x86_64
 Go version:      go1.10.3
 Git commit:      07f3374/1.13.1
 Built:           Wed Feb 13 17:10:12 2019
 OS/Arch:         linux/amd64

Server:
 Version:         1.13.1
 API version:     1.26 (minimum version 1.12)
 Package version: docker-1.13.1-91.git07f3374.el7.centos.x86_64
 Go version:      go1.10.3
 Git commit:      07f3374/1.13.1
 Built:           Wed Feb 13 17:10:12 2019
 OS/Arch:         linux/amd64
 Experimental:    false
```

ifconfig

[root@k8s-01 etcd]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 0.0.0.0

ether 02:42:7f:71:21:01 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.8.8.31 netmask 255.255.255.0 broadcast 10.8.8.255

inet6 fe80::4e95:1400:1371:99a4 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:0b:69:ff txqueuelen 1000 (Ethernet)

RX packets 83459 bytes 43293262 (41.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 60528 bytes 7960462 (7.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 1358 bytes 731784 (714.6 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1358 bytes 731784 (714.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

3.2: Enable Docker at boot:

ansible all -a 'systemctl enable docker'

Part 4: Install Kubernetes

4.1: Install Kubernetes (on every node)

ansible all -a 'yum install -y kubernetes'

4.2: The Kubernetes master runs the following components:

Kubernetes API Server

Kubernetes Controller Manager

Kubernetes Scheduler

4.3: Configure and start the master (edit these files on the master)

4.3.1:apiserver

https://segmentfault.com/a/1190000002920092

vim /etc/kubernetes/apiserver

Original apiserver file:

```
[root@k8s-01 ~]# vim /etc/kubernetes/apiserver   (original file)
###
# kubernetes system config
#
# The following values are used to configure the kube-apiserver
#

# The address on the local server to listen to.
KUBE_API_ADDRESS="--insecure-bind-address=127.0.0.1"

# The port on the local server to listen on.
# KUBE_API_PORT="--port=8080"

# Port minions listen on
# KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster
KUBE_ETCD_SERVERS="--etcd-servers=http://127.0.0.1:2379"

# Address range to use for services
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

# Add your own!
KUBE_API_ARGS=""
```

Modified apiserver file:

```
[root@k8s-01 ~]# vim /etc/kubernetes/apiserver
###
# kubernetes system config
#
# The following values are used to configure the kube-apiserver
#

# The address on the local server to listen to.
# --insecure-bind-address: address the apiserver's insecure port binds to; 0.0.0.0 binds all IP addresses
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# The port on the local server to listen on.
# --insecure-port: the apiserver's insecure port number, 8080 by default
KUBE_API_PORT="--port=8080"

# Port minions listen on
# KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster
KUBE_ETCD_SERVERS="--etcd-servers=http://10.8.8.31:2379,http://10.8.8.32:2379,http://10.8.8.33:2379"

# Address range to use for services
# --service-cluster-ip-range: virtual IP range (CIDR) for Services in the cluster;
# it must not overlap with the real IP ranges of the hosts
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies
# --admission-control: the cluster's admission controllers; each one is a plugin, applied in order
# NamespaceExists: checks every request and rejects requests that refer to a namespace that does not exist
# LimitRanger: checks every request against the constraints defined in the namespace's LimitRange objects;
#   required if you use LimitRange objects
# SecurityContextDeny: rejects pods that try to set certain SecurityContext options
# ServiceAccount: adds the matching credentials to processes running inside pods
# ResourceQuota: checks every request against the namespace's ResourceQuota objects so that quotas are not exceeded;
#   required if you use ResourceQuota objects, and it is recommended to put it last in the list
#KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"
# ServiceAccount is removed here because this example does not set up authentication
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

# Add your own!
KUBE_API_ARGS=""
```
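The same edits can be applied non-interactively with sed, which helps if the master ever has to be rebuilt. This is a sketch that assumes the stock file shown above. It rewrites only the four settings this post changes and leaves every other line alone. `edit_apiserver` and `ETCD_SERVERS` are hypothetical names. Back up the file before running it.

```shell
# etcd client URLs of the three members.
ETCD_SERVERS="http://10.8.8.31:2379,http://10.8.8.32:2379,http://10.8.8.33:2379"

# Apply this step's changes to an apiserver config file in place.
edit_apiserver() {
    file="$1"
    sed -i \
        -e 's|^KUBE_API_ADDRESS=.*|KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"|' \
        -e 's|^# *KUBE_API_PORT=.*|KUBE_API_PORT="--port=8080"|' \
        -e "s|^KUBE_ETCD_SERVERS=.*|KUBE_ETCD_SERVERS=\"--etcd-servers=${ETCD_SERVERS}\"|" \
        -e 's|^KUBE_ADMISSION_CONTROL=.*|KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"|' \
        "$file"
}

# Usage on k8s-01:
#   cp /etc/kubernetes/apiserver /etc/kubernetes/apiserver.bak
#   edit_apiserver /etc/kubernetes/apiserver
```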

4.3.2:config

vim /etc/kubernetes/config

Original config file:

```
[root@k8s-01 ~]# vim /etc/kubernetes/config
###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://127.0.0.1:8080"
```

Modified config file:

```
[root@k8s-01 ~]# vim /etc/kubernetes/config
###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://10.8.8.31:8080"
```

4.3.3: Start the services on the master and enable them at boot

[root@k8s-01 ~]# systemctl start kube-apiserver

[root@k8s-01 ~]# systemctl enable kube-apiserver

[root@k8s-01 ~]# systemctl start kube-controller-manager

[root@k8s-01 ~]# systemctl enable kube-controller-manager

[root@k8s-01 ~]# systemctl start kube-scheduler

[root@k8s-01 ~]# systemctl enable kube-scheduler

4.4: Configure and start the nodes (run on the node machines)

4.4.1: Each Kubernetes node runs the following components:

Kubelet

Kubernetes Proxy

4.4.2:config

vim /etc/kubernetes/config

Original config file:

```
[root@k8s-02 ~]# vim /etc/kubernetes/config
###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://127.0.0.1:8080"
```

Modified config file:

```
[root@k8s-02 etcd]# vim /etc/kubernetes/config
###
# kubernetes system config
#
# The following values are used to configure various aspects of all
# kubernetes services, including
#
#   kube-apiserver.service
#   kube-controller-manager.service
#   kube-scheduler.service
#   kubelet.service
#   kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://10.8.8.31:8080"
```
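The master and both nodes need the same one-line change to KUBE_MASTER, so a sed-based helper avoids three manual edits. This is a sketch. The helper name `set_kube_master` is hypothetical. It replaces whatever value KUBE_MASTER currently has and leaves the rest of the file alone.

```shell
# Point KUBE_MASTER in a kubernetes "config" file at the master's apiserver.
set_kube_master() {
    file="$1"
    url="$2"   # e.g. http://10.8.8.31:8080
    sed -i "s|^KUBE_MASTER=.*|KUBE_MASTER=\"--master=${url}\"|" "$file"
}

# Usage on k8s-01, k8s-02 and k8s-03:
#   set_kube_master /etc/kubernetes/config http://10.8.8.31:8080
```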

4.4.3:kubelet

vim /etc/kubernetes/kubelet

Original kubelet file:

```
[root@k8s-02 ~]# vim /etc/kubernetes/kubelet
###
# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=127.0.0.1"

# The port for the info server to serve on
# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=127.0.0.1"

# location of the api-server
KUBELET_API_SERVER="--api-servers=http://127.0.0.1:8080"

# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!
KUBELET_ARGS=""
```

Modified kubelet file (see the pitfall below: --hostname-override should end up as the node's hostname, k8s-02):

```
[root@k8s-02 etcd]# vim /etc/kubernetes/kubelet
###
# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on
# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=10.8.8.32"

# location of the api-server
KUBELET_API_SERVER="--api-servers=http://10.8.8.31:8080"

# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!
KUBELET_ARGS=""
```

4.4.4: Start the services and enable them at boot

[root@k8s-02 ~]# systemctl start kubelet

[root@k8s-02 ~]# systemctl enable kubelet

[root@k8s-02 ~]# systemctl start kube-proxy

[root@k8s-02 ~]# systemctl enable kube-proxy

Pitfall!

In /etc/kubernetes/kubelet, KUBELET_HOSTNAME="--hostname-override=10.8.8.32" is not the node's hostname. As a result, the kube-proxy service status shows an error:

Mar 20 11:19:56 k8s-02 kube-proxy[29412]: E0320 11:19:56.256315 29412 server.go:421] Can't get Node "k8s-02", assuming iptables proxy, err: nodes "k8s-02" not found

Change the setting to: KUBELET_HOSTNAME="--hostname-override=k8s-02"

Restart the services so that the kubelet picks up the new setting: systemctl restart kubelet kube-proxy
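Combining 4.4.3 with the fix above, the node-side kubelet edits can be scripted so that --hostname-override always equals the node's own hostname, which is the name kube-proxy looks up. This is a sketch that assumes the stock kubelet file shown earlier. The helper name `edit_kubelet` is hypothetical.

```shell
# Apply the 4.4.3 kubelet edits in place, using the given node name for
# --hostname-override so it matches the name kube-proxy searches for.
edit_kubelet() {
    file="$1"
    node="$2"     # e.g. k8s-02
    master="$3"   # e.g. http://10.8.8.31:8080
    sed -i \
        -e 's|^KUBELET_ADDRESS=.*|KUBELET_ADDRESS="--address=0.0.0.0"|' \
        -e "s|^KUBELET_HOSTNAME=.*|KUBELET_HOSTNAME=\"--hostname-override=${node}\"|" \
        -e "s|^KUBELET_API_SERVER=.*|KUBELET_API_SERVER=\"--api-servers=${master}\"|" \
        "$file"
}

# Usage on k8s-02 and k8s-03:
#   edit_kubelet /etc/kubernetes/kubelet "$(hostname)" http://10.8.8.31:8080
#   systemctl restart kubelet kube-proxy
```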

4.5: On the master, check the node status

kubectl -s http://10.8.8.31:8080 get node

```
[root@k8s-01 ~]# kubectl -s http://10.8.8.31:8080 get node
NAME        STATUS     AGE
10.8.8.32   NotReady   1h
k8s-02      Ready      2m
k8s-03      Ready      7m
```
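The NotReady entry 10.8.8.32 in the listing above is most likely the registration left over from before the hostname fix, since the same machine now reports as k8s-02. A small filter that lists nodes that are not Ready makes such entries easy to spot. This is a sketch that assumes the NAME STATUS AGE column layout shown. The helper name `not_ready_nodes` is hypothetical. Once you are sure a stale entry is unused, you can remove it with `kubectl -s http://10.8.8.31:8080 delete node 10.8.8.32`.

```shell
# From `kubectl get node` output on stdin, print the names of nodes whose
# STATUS column is not exactly "Ready" (the header line is skipped).
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl -s http://10.8.8.31:8080 get node | not_ready_nodes
```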

Part 5: Configure the flannel network

5.1: Install flannel (on every node)

[root@k8s-01 ~]# ansible all -a 'yum install -y flannel'

5.2: Configure flannel

vim /etc/sysconfig/flanneld

```
[root@k8s-01 ~]# vim /etc/sysconfig/flanneld
# Flanneld configuration options

# etcd url location.  Point this to the server where etcd runs
FLANNEL_ETCD_ENDPOINTS="http://10.8.8.31:2379,http://10.8.8.32:2379,http://10.8.8.33:2379"

# etcd config key.  This is the configuration key that flannel queries
# For address range assignment
FLANNEL_ETCD_PREFIX="/atomic.io/network"

# Any additional options that you want to pass
#FLANNEL_OPTIONS=""
```

5.3: Configure the flannel network range

etcdctl mk /atomic.io/network/config '{ "Network":"10.10.0.0/16" }'

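Flannel stores its configuration in etcd, which keeps multiple flannel instances consistent, so the following key must be created in etcd. The key '/atomic.io/network/config' corresponds to FLANNEL_ETCD_PREFIX in /etc/sysconfig/flanneld above, with /config appended. If the two do not match, flanneld fails to start.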

[root@k8s-01 ~]# etcdctl mk /atomic.io/network/config '{ "Network":"10.10.0.0/16" }'

{ "Network":"10.10.0.0/16" }
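Because a mismatch between the etcd key and FLANNEL_ETCD_PREFIX stops flanneld from starting, it can help to derive the key from the sysconfig file instead of typing it. This is a sketch. The helper name `flannel_config_key` is hypothetical, and it assumes the value is written in double quotes as shown above.

```shell
# Print the etcd key flanneld will read: FLANNEL_ETCD_PREFIX + "/config".
# Returns non-zero if the prefix is not found in the file.
flannel_config_key() {
    file="$1"
    prefix=$(sed -n 's/^FLANNEL_ETCD_PREFIX="\(.*\)"$/\1/p' "$file")
    [ -n "$prefix" ] || return 1
    printf '%s/config\n' "$prefix"
}

# Usage on k8s-01: write the network config under exactly that key, then read it back.
#   key=$(flannel_config_key /etc/sysconfig/flanneld)
#   etcdctl mk "$key" '{ "Network":"10.10.0.0/16" }'
#   etcdctl get "$key"
```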

5.4: Start flannel and restart the Kubernetes services

5.4.1: Start on the master

[root@k8s-01 ~]# ansible master -a 'systemctl start flanneld'

[root@k8s-01 ~]# ansible master -a 'systemctl enable flanneld'

ifconfig now shows the flannel0 interface:

[root@k8s-01 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 0.0.0.0

ether 02:42:7f:71:21:01 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.8.8.31 netmask 255.255.255.0 broadcast 10.8.8.255

inet6 fe80::4e95:1400:1371:99a4 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:0b:69:ff txqueuelen 1000 (Ethernet)

RX packets 900960 bytes 259734166 (247.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 843207 bytes 139504742 (133.0 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 10.10.43.0 netmask 255.255.0.0 destination 10.10.43.0

inet6 fe80::da51:4e1c:3fdb:4c90 prefixlen 64 scopeid 0x20<link>

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3 bytes 144 (144.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 245215 bytes 80894269 (77.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 245215 bytes 80894269 (77.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@k8s-01 ~]# ansible master -a 'systemctl restart docker'

[root@k8s-01 ~]# ansible master -a 'systemctl restart kube-apiserver'

[root@k8s-01 ~]# ansible master -a 'systemctl restart kube-controller-manager'

[root@k8s-01 ~]# ansible master -a 'systemctl restart kube-scheduler'

5.4.2: Start on the nodes

[root@k8s-01 ~]# ansible node -a 'systemctl start flanneld'

[root@k8s-01 ~]# ansible node -a 'systemctl enable flanneld'

[root@k8s-01 ~]# ansible node -a 'systemctl restart docker'

[root@k8s-01 ~]# ansible node -a 'systemctl restart kubelet'

[root@k8s-01 ~]# ansible node -a 'systemctl restart kube-proxy'

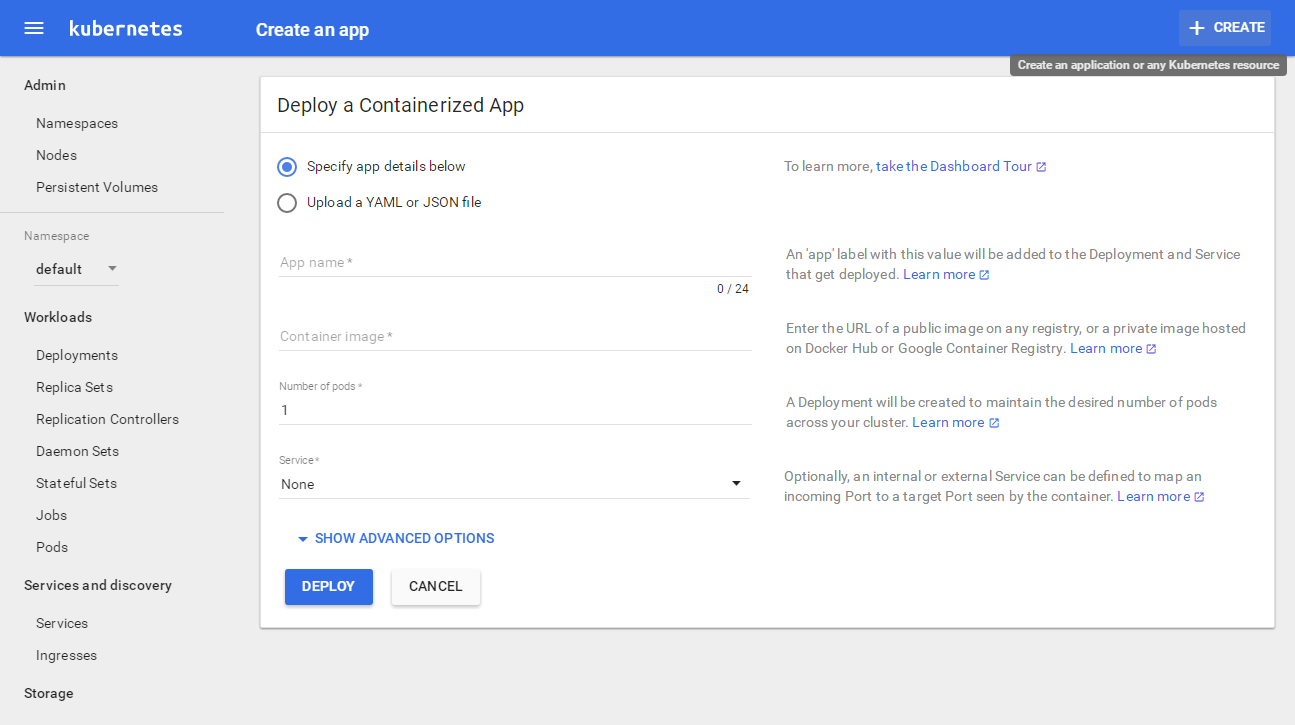

Part 6: Install kubernetes-dashboard

https://www.cnblogs.com/zhenyuyaodidiao/p/6500897.html

https://blog.csdn.net/qq1083062043/article/details/84949924

https://www.cnblogs.com/fengzhihai/p/9851470.html

https://www.cnblogs.com/yy-cxd/p/6650573.html

6.1: Prepare registry.access.redhat.com/rhel7/pod-infrastructure:latest (pull it on every node)

wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm

rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem

Open /etc/rhsm/ca/redhat-uep.pem (e.g. with vim) and confirm that it now contains the certificate data.

docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

6.2: Pull kubernetes-dashboard-amd64:v1.5.1 (gcr.io must be reachable, which from mainland China usually means going through a proxy)

docker pull gcr.io/google_containers/kubernetes-dashboard-amd64:v1.5.1

6.3: Save the Docker images as tar files (run on the master)

docker save gcr.io/google_containers/kubernetes-dashboard-amd64:v1.5.1 > dashboard.tar

docker save registry.access.redhat.com/rhel7/pod-infrastructure:latest > podinfrastructure.tar

6.4: Load a tar file back into Docker

docker load < dashboard.tar

6.5: Prepare the YAML files

mkdir -p /etc/kubernetes/yamlfile

cd /etc/kubernetes/yamlfile

wget https://rawgit.com/kubernetes/kubernetes/master/cluster/addons/dashboard/dashboard-controller.yaml

wget https://rawgit.com/kubernetes/kubernetes/master/cluster/addons/dashboard/dashboard-service.yaml

vim dashboard.yaml

```
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  # Keep the name in sync with image version and
  # gce/coreos/kube-manifests/addons/dashboard counterparts
  name: kubernetes-dashboard-latest
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        version: latest
        kubernetes.io/cluster-service: "true"
    spec:
      containers:
      - name: kubernetes-dashboard
        image: gcr.io/google_containers/kubernetes-dashboard-amd64:v1.5.1
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090
        args:
        - --apiserver-host=http://10.8.8.31:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
```

vim dashboardsvc.yaml

```
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090
```

6.6: Create the resources from the YAML files

kubectl create -f dashboard.yaml

kubectl create -f dashboardsvc.yaml

```
[root@k8s-01 yamlfail]# kubectl create -f dashboard.yaml
deployment "kubernetes-dashboard-latest" created
[root@k8s-01 yamlfail]# kubectl create -f dashboardsvc.yaml
service "kubernetes-dashboard" created
```

To delete them:

kubectl delete -f xxx.yaml

kubectl delete deployment kubernetes-dashboard-latest --namespace=kube-system

kubectl delete svc kubernetes-dashboard --namespace=kube-system

Note:

kubectl get deployment --all-namespaces

Do not delete the pod directly; use kubectl to delete the Deployment that owns it. If you delete only the pod, the Deployment recreates it.

6.7: Check the pod status

kubectl get pod --all-namespaces

```
[root@k8s-01 yamlfail]# kubectl get pod --all-namespaces
NAMESPACE     NAME                                          READY     STATUS    RESTARTS   AGE
kube-system   kubernetes-dashboard-latest-190610294-c027r   1/1       Running   0          1h
```

kubectl get svc --all-namespaces

```
[root@k8s-01 yamlfail]# kubectl get svc --all-namespaces
NAMESPACE     NAME                   CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
default       kubernetes             10.254.0.1      <none>        443/TCP   2d
kube-system   kubernetes-dashboard   10.254.112.86   <none>        80/TCP    1h
```

kubectl get pod -o wide --all-namespaces

```
[root@k8s-01 yamlfail]# kubectl get pod -o wide --all-namespaces
NAMESPACE     NAME                                          READY     STATUS    RESTARTS   AGE       IP           NODE
kube-system   kubernetes-dashboard-latest-190610294-c027r   1/1       Running   0          1h        10.10.49.2   k8s-02
```
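The troubleshooting that follows depends on knowing the dashboard pod's IP and the node it landed on. Querying the pod from that node versus from the others is what separates a broken pod from a broken overlay network. This is a sketch that assumes the `-o wide` column layout above, where IP and NODE are the last two columns. The helper name `pod_location` is hypothetical.

```shell
# From `kubectl get pod -o wide --all-namespaces` output on stdin, print
# "<pod IP> <node>" for every pod whose name starts with the given prefix.
pod_location() {
    prefix="$1"
    awk -v p="$prefix" 'NR > 1 && index($2, p) == 1 { print $(NF-1), $NF }'
}

# Usage:
#   kubectl get pod -o wide --all-namespaces | pod_location kubernetes-dashboard
```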

6.8: Access the dashboard in a browser

http://10.8.8.31:8080/ui

Error: 'dial tcp 10.10.49.2:9090: getsockopt: connection timed out' Trying to reach: 'http://10.10.49.2:9090/'

6.9: Query the pod directly from the node it runs on: curl 10.10.49.2:9090

```
[root@k8s-02 ~]# curl 10.10.49.2:9090
<!doctype html>
<html ng-app="kubernetesDashboard">
<head>
<meta charset="utf-8">
<title>Kubernetes Dashboard</title>
<link rel="icon" type="image/png" href="assets/images/kubernetes-logo.png">
<meta name="viewport" content="width=device-width">
<link rel="stylesheet" href="static/vendor.a0fa0655.css">
<link rel="stylesheet" href="static/app.968d5cf5.css">
</head>
<body>
<!--[if lt IE 10]>
<p class="browsehappy">You are using an <strong>outdated</strong> browser.
Please <a href="http://browsehappy.com/">upgrade your browser</a> to improve your
experience.</p>
<![endif]-->
<kd-chrome layout="column" layout-fill>
</kd-chrome>
<script src="static/vendor.89dbb771.js"></script>
<script src="api/appConfig.json"></script>
<script src="static/app.50ef120b.js"></script>
</body>
</html>
```

The NIC information shows that k8s-03 is on the 10.10.80.0 subnet:

[root@k8s-03 zm]# ifconfig

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 10.10.80.0 netmask 255.255.0.0 destination 10.10.80.0

inet6 fe80::3624:5df7:a344:fc0e prefixlen 64 scopeid 0x20<link>

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3 bytes 144 (144.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

The dashboard pod (10.10.80.2) answers ping from k8s-03, but not from the other machines:

```
[root@k8s-03 zm]# ping 10.10.80.2
PING 10.10.80.2 (10.10.80.2) 56(84) bytes of data.
64 bytes from 10.10.80.2: icmp_seq=1 ttl=64 time=0.058 ms
64 bytes from 10.10.80.2: icmp_seq=2 ttl=64 time=0.043 ms
```

Show the container IP addresses:

docker inspect -f '{{.Name}} - {{.NetworkSettings.IPAddress }}' $(docker ps -aq)

[root@k8s-03 zz]# docker inspect -f '{{.Name}} - {{.NetworkSettings.IPAddress }}' $(docker ps -aq)

/k8s_kubernetes-dashboard.88d5a45d_kubernetes-dashboard-latest-190610294-zxgtw_kube-system_9ba7a9b3-4bbc-11e9-958a-000c290b69ff_e5226d0a -

/k8s_POD.28c50bab_kubernetes-dashboard-latest-190610294-zxgtw_kube-system_9ba7a9b3-4bbc-11e9-958a-000c290b69ff_c5434807 - 10.10.80.2

/k8s_kubernetes-dashboard.88d5a45d_kubernetes-dashboard-latest-190610294-zxgtw_kube-system_9ba7a9b3-4bbc-11e9-958a-000c290b69ff_443e86fe -

/k8s_POD.28c50bab_kubernetes-dashboard-latest-190610294-zxgtw_kube-system_9ba7a9b3-4bbc-11e9-958a-000c290b69ff_618335e7 -

kubectl cluster-info

```
[root@k8s-01 yamlfail]# kubectl cluster-info
Kubernetes master is running at http://localhost:8080
kubernetes-dashboard is running at http://localhost:8080/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
```

Solution:

Enable IP forwarding on every node:

echo "net.ipv4.ip_forward = 1" >>/usr/lib/sysctl.d/50-default.conf
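Files under /usr/lib/sysctl.d belong to packages and can be replaced on upgrade. Local overrides normally go in /etc/sysctl.d instead. Also, appending the line does not apply it until the sysctl files are reloaded or the machine reboots. Below is a minimal idempotent sketch. `enable_ip_forward` and the file name 99-ip-forward.conf are hypothetical choices. If cross-node pod traffic still fails afterwards, another frequently reported cause with Docker 1.13 is that it sets the iptables FORWARD chain's default policy to DROP, and `iptables -P FORWARD ACCEPT` on each node is the usual workaround. That is an assumption beyond the original fix and was not verified in this setup.

```shell
# Persistently enable IPv4 forwarding via a drop-in under /etc/sysctl.d.
# The target file can be passed as $1 (handy for testing); the default
# name 99-ip-forward.conf is a hypothetical choice.
enable_ip_forward() {
    conf="${1:-/etc/sysctl.d/99-ip-forward.conf}"
    line="net.ipv4.ip_forward = 1"
    touch "$conf"
    grep -qxF -- "$line" "$conf" || printf '%s\n' "$line" >> "$conf"
}

# Usage on each node (as root), then load it without rebooting:
#   enable_ip_forward
#   sysctl --system
#   sysctl net.ipv4.ip_forward
```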

Edit the flannel configuration file on every node:

vim /etc/sysconfig/flanneld

Working through the remaining problems:

[root@k8s-01 yamlfile]# kubectl create -f dashboard-controller.yaml

```
[root@k8s-01 yamlfile]# kubectl create -f dashboard-controller.yaml
Error from server (AlreadyExists): error when creating "dashboard-controller.yaml": serviceaccounts "kubernetes-dashboard" already exists
yaml: line 50: did not find expected key
```

Delete the existing objects as follows:

kubectl delete -f kubernetes-dashboard.yaml

```
[root@k8s-01 yamlfile]# kubectl delete -f kubernetes-dashboard.yaml
secret "kubernetes-dashboard-certs" deleted
serviceaccount "kubernetes-dashboard" deleted
```

Create them again:

kubectl create -f dashboard-controller.yaml

```
[root@k8s-01 yamlfile]# kubectl create -f dashboard-controller.yaml
serviceaccount "kubernetes-dashboard" created
error: yaml: line 50: did not find expected key
```

kubectl create -f dashboard-service.yaml

```
[root@k8s-01 yamlfile]# kubectl create -f dashboard-service.yaml
service "kubernetes-dashboard" created
```

Check:

kubectl get svc --all-namespaces

```
[root@k8s-01 yamlfile]# kubectl get svc --all-namespaces
NAMESPACE     NAME                   CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
default       kubernetes             10.254.0.1      <none>        443/TCP   23h
kube-system   kubernetes-dashboard   10.254.227.33   <none>        443/TCP   4m
```

Web access:

http://10.8.8.31:8080/ui (still does not work)

Pitfall!

https://www.cnblogs.com/guyeshanrenshiwoshifu/p/9147238.html

Check the pods:

kubectl get pods --all-namespaces

```
[root@k8s-01 yamlfile]# kubectl get pods --all-namespaces
NAMESPACE     NAME                                    READY     STATUS              RESTARTS   AGE
kube-system   kubernetes-dashboard-1468570674-zxgtw   0/1       ContainerCreating   0          7m
```

Inspect the pod details:

kubectl describe pod kubernetes-dashboard-2498798083-tgwsn --namespace=kube-system

[root@k8s-01 yamlfile]# kubectl describe pod kubernetes-dashboard-2498798083-tgwsn --namespace=kube-system

Name: kubernetes-dashboard-2498798083-tgwsn

Namespace: kube-system

Node: k8s-03/10.8.8.33

Start Time: Thu, 21 Mar 2019 12:04:12 +0800

Labels: app=kubernetes-dashboard

pod-template-hash=2498798083

Status: Pending

IP:

Controllers: ReplicaSet/kubernetes-dashboard-2498798083

Containers:

kubernetes-dashboard:

Container ID:

Image: gcr.io/google_containers/kubernetes-dashboard-amd64:v1.5.1

Image ID:

Port: 9090/TCP

Args:

--apiserver-host=http://10.8.8.31:8080

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Liveness: http-get http://:9090/ delay=30s timeout=30s period=10s #success=1 #failure=3

Volume Mounts: <none>

Environment Variables: <none>

Conditions:

Type Status

Initialized True

Ready False

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: dedicated=master:Equal:NoSchedule

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

1h 1m 18 {kubelet k8s-03} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

1h 2s 296 {kubelet k8s-03} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

cd /etc/docker/certs.d/registry.access.redhat.com/

[root@k8s-01 ~]# cd /etc/docker/certs.d/registry.access.redhat.com/
[root@k8s-01 registry.access.redhat.com]# ll
total 0
lrwxrwxrwx 1 root root 27 Mar 20 09:06 redhat-ca.crt -> /etc/rhsm/ca/redhat-uep.pem

[root@k8s-01 registry.access.redhat.com]# cd /etc/rhsm/ca/

[root@k8s-01 ca]# ll

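total 0

The directory is empty, so the redhat-ca.crt symlink points to a file that does not exist.

Create redhat-uep.pem by extracting it from the python-rhsm-certificates package: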

wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm

rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem

vim /etc/rhsm/ca/redhat-uep.pem (the file now contains data)

docker pull registry.access.redhat.com/rhel7/pod-infrastructure:latest

Delete and recreate:

cd /etc/kubernetes/yamlfile/

kubectl delete -f dashboard-controller.yaml

kubectl delete -f dashboard-service.yaml

kubectl create -f dashboard-controller.yaml

kubectl create -f dashboard-service.yaml

7: Continue testing kube-ui

7.1: Web access:

http://10.8.8.31:8080/ui

It redirects automatically to:

http://10.8.8.31:8080/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard/

Error:

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "endpoints \"kubernetes-dashboard\" not found",

"reason": "NotFound",

"details": {

"name": "kubernetes-dashboard",

"kind": "endpoints"

},

"code": 404

}

7.2: Restart all nodes and recreate the dashboard

Test network connectivity: the master must be able to ping the Docker network addresses on every node.

cd /etc/kubernetes/yamlfail

kubectl create -f dashboard.yaml

kubectl create -f dashboardsvc.yaml

[root@k8s-01 yamlfail]# kubectl create -f dashboard.yaml
deployment "kubernetes-dashboard-latest" created
[root@k8s-01 yamlfail]# kubectl create -f dashboardsvc.yaml
service "kubernetes-dashboard" created

7.3: Check the status

kubectl get deployment --all-namespaces

kubectl get svc --all-namespaces

kubectl get pod -o wide --all-namespaces

[root@k8s-01 yamlfail]# kubectl get deployment --all-namespaces
NAMESPACE     NAME                          DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
kube-system   kubernetes-dashboard-latest   1         1         1            1           22s
[root@k8s-01 yamlfail]# kubectl get svc --all-namespaces
NAMESPACE     NAME                   CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
default       kubernetes             10.254.0.1       <none>        443/TCP   14d
kube-system   kubernetes-dashboard   10.254.157.175   <none>        80/TCP    38s
[root@k8s-01 yamlfail]# kubectl get pod -o wide --all-namespaces
NAMESPACE     NAME                                          READY   STATUS    RESTARTS   AGE   IP          NODE
kube-system   kubernetes-dashboard-latest-190610294-nf0jc   1/1     Running   0          59s   10.10.7.2   k8s-03

7.4: Access from a browser again:

http://10.8.8.31:8080/ui

http://10.8.8.31:8080/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard/#/workload?namespace=default

Thanks to:

Clearly structured! (Method 1)

https://www.cnblogs.com/zhenyuyaodidiao/p/6500830.html

https://www.cnblogs.com/zhenyuyaodidiao/p/6500897.html

Somewhat more involved: (Method 2)

https://www.cnblogs.com/netsa/p/8279045.html

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~ 方法二 ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

Method 2:

1: Environment preparation

1.1: Remove any existing go/golang installation

whereis go
whereis golang
whereis gocode    # if needed
# delete whatever is found: rm -rf xxx

1.2: Download

https://studygolang.com/dl

wget https://studygolang.com/dl/golang/go1.12.linux-amd64.tar.gz

1.3: Extract to the target directory

tar -C /usr/local/ -zxvf go1.12.linux-amd64.tar.gz

cd /usr/local/go

1.4: Create the GOPATH directory

mkdir -p /home/gocode

1.5: Add the environment variables

vim /etc/profile

export GOROOT=/usr/local/go
export GOPATH=/home/gocode
export PATH=$PATH:$GOROOT/bin:$GOPATH/bin

source /etc/profile

Verify the installation:

go version

1.6: Install git

yum install -y git

1.7: Download packages

go get -v github.com/gin-gonic/gin
go get -v github.com/go-sql-driver/mysql
go get -v github.com/robfig/cron

1.8: Test

vim helloworld.go

package main

import "fmt"

func main() {

fmt.Printf("Hello, world.\n")

}

Run: go run helloworld.go

Build: go build helloworld.go

go install

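Run the compiled binary with ./helloworld

Run in the background:

On Linux, append & to the command line, or use nohup ./example & so the program keeps running after you log out.

1.9: Passwordless SSH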

https://blog.csdn.net/wangganggang3168/article/details/80568049

https://blog.csdn.net/wang704987562/article/details/78904350

ssh-keygen -t rsa (run on every node)

[root@docker-01 ~]# ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:UhHoFCQ/SyuQdw61fWVPkQn/jhY59HwTvG/SpfC4CXk root@docker-01
The key's randomart image is:
+---[RSA 2048]----+
| ..+oo. +.o+ |
| . +oo . o ++ |
| o oo* o . +o |
| o =.= . . =o|
| . = S . +o*|
| . . . + B=|
| o E * =|
| o + o |
| o |
+----[SHA256]-----+

Collect the contents of every node's id_rsa.pub into a single authorized_keys file:

vim /root/.ssh/id_rsa.pub

vim /root/.ssh/authorized_keys

scp authorized_keys root@docker-01:/root/.ssh/

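Copy authorized_keys to /root/.ssh/ on every node in the same way. Then log in to each node and ssh once to every other node: the first connection asks you to confirm the host key, and answering yes adds the host to known_hosts so the prompt does not appear again.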

[root@docker-02 .ssh]# ssh docker-04
The authenticity of host 'docker-04 (10.8.8.24)' can't be established.
ECDSA key fingerprint is SHA256:8UK41mz0DDPjzQ7UPH9ADOFYBN34cMFJVXaOJ5gADx0.
ECDSA key fingerprint is MD5:15:63:19:03:ad:fb:a6:e8:3d:74:01:0b:ab:88:88:0b.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'docker-04,10.8.8.24' (ECDSA) to the list of known hosts.
Last login: Wed Mar 13 21:02:52 2019 from docker-01

2: Generate certificates

2.1:

Reference: https://kubernetes.io/zh/docs/concepts/cluster-administration/certificates/#创建证书

The CFSSL method:

https://kubernetes.io/zh/docs/concepts/cluster-administration/certificates/#cfssl

Download and install (the binaries are saved directly to /bin):

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /bin/cfssl
curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /bin/cfssl-certinfo
curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /bin/cfssljson
chmod +x /bin/cfssl*

2.2: Create ca-config.json:

mkdir -p /opt/ssl && cd /opt/ssl

You can generate a template with cfssl print-defaults config > ca-config.json and then adjust it as needed.

vim ca-config.json

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

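The expiry is set to 87600h, i.e. 10 years.

ca-config.json can define several profiles, each with its own expiry, usages and other parameters; a specific profile is then selected when signing a certificate (here -profile=kubernetes).

signing: the certificate can be used to sign other certificates; the generated ca.pem has CA=TRUE.

server auth: a client can use this CA to verify the certificate presented by a server.

client auth: a server can use this CA to verify the certificate presented by a client.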

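2.3: Create ca-csr.json

You can generate a template with cfssl print-defaults csr > ca-csr.json and then edit it as needed.

vim ca-csr.json

General form (replace the placeholders in angle brackets):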

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names":[{

"C": "<country>",

"ST": "<state>",

"L": "<city>",

"O": "<organization>",

"OU": "<organization unit>"

}]

}

The values used for this cluster:

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

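CN: Common Name. kube-apiserver extracts this field from the certificate as the user name of the request.

O: Organization. kube-apiserver extracts this field from the certificate as the group the requesting user belongs to.

2.4: Generate the CA certificate and key: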

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

[root@docker-01 ssl]# vim ca-csr.json
[root@docker-01 ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca
2019/03/13 11:01:36 [INFO] generating a new CA key and certificate from CSR
2019/03/13 11:01:36 [INFO] generate received request
2019/03/13 11:01:36 [INFO] received CSR
2019/03/13 11:01:36 [INFO] generating key: rsa-2048
2019/03/13 11:01:36 [INFO] encoded CSR
2019/03/13 11:01:36 [INFO] signed certificate with serial number 377680744285591674329230033735744500343528771314
[root@docker-01 ssl]# ll
total 20
-rw-r--r--. 1 root root  284 Mar 12 21:33 ca-config.json
-rw-r--r--. 1 root root 1001 Mar 13 11:01 ca.csr
-rw-r--r--. 1 root root  208 Mar 13 11:01 ca-csr.json
-rw-------. 1 root root 1679 Mar 13 11:01 ca-key.pem
-rw-r--r--. 1 root root 1359 Mar 13 11:01 ca.pem

2.5: Create the kubernetes certificate request

vim kubernetes-csr.json

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"10.8.8.21",

"10.8.8.22",

"10.8.8.23",

"10.8.8.24",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

2.6: Generate the kubernetes certificate and key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

[root@docker-01 ssl]# vim kubernetes-csr.json

[root@docker-01 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

2019/03/13 11:21:38 [INFO] generate received request

2019/03/13 11:21:38 [INFO] received CSR

2019/03/13 11:21:38 [INFO] generating key: rsa-2048

2019/03/13 11:21:38 [INFO] encoded CSR

2019/03/13 11:21:38 [INFO] signed certificate with serial number 466577397722502141135271666270895637824536137432

2019/03/13 11:21:38 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

The WARNING above can be ignored for now.

2.7: Create the admin certificate request

vim admin-csr.json

{

"CN": "kubernetes-admin",

"hosts": [

"10.8.8.21",

"10.8.8.22",

"10.8.8.23",

"10.8.8.24"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

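kube-apiserver extracts CN as the client's user name (here kubernetes-admin) and O as the group the user belongs to (here system:masters). kube-apiserver predefines several ClusterRoleBindings used by RBAC; for example, cluster-admin binds the group system:masters to the ClusterRole cluster-admin, and cluster-admin has full access to kube-apiserver. The kubernetes-admin user therefore acts as the cluster's super administrator.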

2.8: Generate the admin certificate and key

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

[root@docker-01 ssl]# vim admin-csr.json

[root@docker-01 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2019/03/13 13:19:32 [INFO] generate received request

2019/03/13 13:19:32 [INFO] received CSR

2019/03/13 13:19:32 [INFO] generating key: rsa-2048

2019/03/13 13:19:33 [INFO] encoded CSR

2019/03/13 13:19:33 [INFO] signed certificate with serial number 542875374330312060082808070092917596528046572224

2019/03/13 13:19:33 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

2.9: Create the kube-proxy certificate request (kube-proxy-csr.json)

vim kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

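]

}

The CN sets the certificate's User to system:kube-proxy.

The ClusterRoleBinding system:node-proxier, predefined by kube-apiserver, binds User system:kube-proxy to ClusterRole system:node-proxier, which grants permission to call the kube-apiserver APIs that kube-proxy needs.

Generate the kube-proxy certificate and key: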

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

[root@docker-01 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2019/03/13 13:30:08 [INFO] generate received request

2019/03/13 13:30:08 [INFO] received CSR

2019/03/13 13:30:08 [INFO] generating key: rsa-2048

2019/03/13 13:30:08 [INFO] encoded CSR

2019/03/13 13:30:08 [INFO] signed certificate with serial number 567732124973226627997281945626780290685046730115

2019/03/13 13:30:08 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

Verify a certificate: check that the output matches the JSON request.

cfssl-certinfo -cert kubernetes.pem
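If cfssl-certinfo is not at hand, the same check can be sketched in Go with crypto/x509. The program prints the CN, O, DNS and IP SANs and the expiry date so they can be compared with kubernetes-csr.json. The default file name kubernetes.pem comes from the command above; the rest of the sketch is an assumption.

```go
package main

import (
	"crypto/x509"
	"encoding/pem"
	"errors"
	"fmt"
	"io/ioutil"
	"os"
)

// certSummary holds the fields worth comparing against the CSR JSON.
type certSummary struct {
	CN       string
	Orgs     []string
	DNSNames []string
	IPs      []string
	NotAfter string
}

// summarize decodes the first PEM block and extracts the certificate fields.
func summarize(pemBytes []byte) (certSummary, error) {
	block, _ := pem.Decode(pemBytes)
	if block == nil || block.Type != "CERTIFICATE" {
		return certSummary{}, errors.New("no CERTIFICATE PEM block found")
	}
	cert, err := x509.ParseCertificate(block.Bytes)
	if err != nil {
		return certSummary{}, err
	}
	s := certSummary{
		CN:       cert.Subject.CommonName,
		Orgs:     cert.Subject.Organization,
		DNSNames: cert.DNSNames,
		NotAfter: cert.NotAfter.UTC().Format("2006-01-02"),
	}
	for _, ip := range cert.IPAddresses {
		s.IPs = append(s.IPs, ip.String())
	}
	return s, nil
}

func main() {
	path := "kubernetes.pem"
	if len(os.Args) > 1 {
		path = os.Args[1]
	}
	data, err := ioutil.ReadFile(path)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	s, err := summarize(data)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Printf("CN=%s O=%v\nDNS=%v\nIP=%v\nNotAfter=%s\n", s.CN, s.Orgs, s.DNSNames, s.IPs, s.NotAfter)
}
```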

| Component | Certificates | Notes |
|---|---|---|
| etcd | ca.pem, kubernetes-key.pem, kubernetes.pem | Shared with kube-apiserver |
| kube-apiserver | ca.pem, kubernetes-key.pem, kubernetes.pem | kube-controller-manager, kube-scheduler and the apiserver all run on the master and can use the insecure port, so they get no separate certificates. |
| kube-proxy | ca.pem, kube-proxy-key.pem, kube-proxy.pem | |
| kubectl | ca.pem, admin-key.pem, admin.pem | |

3: Set up etcd

https://www.jianshu.com/p/98b8fa3e3596

Run the following on every node!!!

3.1: Disable SELinux

getenforce

vim /etc/selinux/config

SELINUX=disabled

(This takes effect after a reboot; setenforce 0 switches SELinux to permissive mode immediately.)

3.2: Disable swap

swapoff -a

rm /dev/mapper/centos-swap

sed -i 's/.*swap.*/#&/' /etc/fstab

3.3: Kernel parameters

vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1

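modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf

(On CentOS 7 the net.bridge.* keys only exist once the br_netfilter module is loaded.)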

3.4: Environment variables

vim /root/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export NODE_NAME=docker-01

export NODE_IP=10.8.8.21

export NODE_IPS="10.8.8.21 10.8.8.22 10.8.8.23 10.8.8.24"

export ETCD_NODES=docker-01=https://10.8.8.21:2380,docker-02=https://10.8.8.22:2380,docker-03=https://10.8.8.23:2380,docker-04=https://10.8.8.24:2380


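3.5: etcd certificates

cd /etc/kubernetes/ssl

(The commands below expect ca.pem, ca-key.pem and ca-config.json in this directory, so copy the files generated in /opt/ssl here first.)

Create the etcd certificate signing request: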

vim etcd-csr.json

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"10.8.8.21",

"10.8.8.22",

"10.8.8.23",

"10.8.8.24"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

Generate the etcd certificate and key:

[root@docker-01 ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

2019/03/13 16:09:32 [INFO] generate received request

2019/03/13 16:09:32 [INFO] received CSR

2019/03/13 16:09:32 [INFO] generating key: rsa-2048

2019/03/13 16:09:33 [INFO] encoded CSR

2019/03/13 16:09:33 [INFO] signed certificate with serial number 398364810642443697380742999828998753293408212966

2019/03/13 16:09:33 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").


3.6: Install etcd

https://coreos.com/etcd/docs/latest/dl_build.html

tar -zxvf etcd-v3.3.12-linux-amd64.tar.gz

cd etcd-v3.3.12-linux-amd64

cp etcd* /usr/local/bin/

export ETCDCTL_API=3

env

Copy to the other nodes:

scp /usr/local/bin/etcd* root@docker-02:/usr/local/bin/

scp /usr/local/bin/etcd* root@docker-03:/usr/local/bin/

scp /usr/local/bin/etcd* root@docker-04:/usr/local/bin/

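Create the etcd working directory:

mkdir -p /data/etcd

This must be the directory set by WorkingDirectory= (and --data-dir) in etcd.service, which is /data/etcd in the unit files below. If it does not exist, startup fails with Failed at step CHDIR spawning /usr/local/bin/etcd: No such file or directory.

Create the configuration directory: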

mkdir -p /etc/etcd

3.7: Create the etcd configuration files

/etc/etcd/etcd-key.conf: holds the certificate settings

/etc/etcd/etcd.conf: holds the etcd cluster settings

vim /etc/etcd/etcd-key.conf

ETCD_KEY='--cert-file=/etc/kubernetes/ssl/etcd.pem --key-file=/etc/kubernetes/ssl/etcd-key.pem --peer-cert-file=/etc/kubernetes/ssl/etcd.pem --peer-key-file=/etc/kubernetes/ssl/etcd-key.pem --trusted-ca-file=/etc/kubernetes/ssl/ca.pem --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem'

vim /etc/etcd/etcd.conf

Master configuration:

ETCD_NAME='--name=k8s-master'
ETCD_DATA_DIR='--data-dir=/data/etcd'
ETCD_INITIAL_CLUSTER_STATE='--initial-cluster-state=new'
ETCD_INITIAL_CLUSTER_TOKEN='--initial-cluster-token=etcd-cluster-0'
ETCD_INITIAL_ADVERTISE_PEER_URLS='--initial-advertise-peer-urls=http://10.8.8.21:2380'
ETCD_LISTEN_PEER_URLS='--listen-peer-urls=http://10.8.8.21:2380'
ETCD_LISTEN_CLIENT_URLS='--listen-client-urls=http://10.8.8.21:2379,http://127.0.0.1:2379'
ETCD_ADVERTISE_CLIENT_URLS='--advertise-client-urls=http://10.8.8.21:2379'
ETCD_INITIAL_CLUSTER='--initial-cluster=k8s-master=http://10.8.8.21:2380,k8s-node02=http://10.8.8.22:2380,k8s-node03=http://10.8.8.23:2380,k8s-node04=http://10.8.8.24:2380'
#ETCD_KEY='/etc/kubernetes/ssl/'

Node configuration (docker-02 shown):

ETCD_NAME='--name=k8s-node02'
ETCD_DATA_DIR='--data-dir=/data/etcd'
ETCD_INITIAL_CLUSTER_STATE='--initial-cluster-state=new'
ETCD_INITIAL_CLUSTER_TOKEN='--initial-cluster-token=etcd-cluster-0'
ETCD_INITIAL_ADVERTISE_PEER_URLS='--initial-advertise-peer-urls=http://10.8.8.22:2380'
ETCD_LISTEN_PEER_URLS='--listen-peer-urls=http://10.8.8.22:2380'
ETCD_LISTEN_CLIENT_URLS='--listen-client-urls=http://10.8.8.22:2379,http://127.0.0.1:2379'
ETCD_ADVERTISE_CLIENT_URLS='--advertise-client-urls=http://10.8.8.22:2379'
ETCD_INITIAL_CLUSTER='--initial-cluster=k8s-master=http://10.8.8.21:2380,k8s-node02=http://10.8.8.22:2380,k8s-node03=http://10.8.8.23:2380,k8s-node04=http://10.8.8.24:2380'
#ETCD_KEY='/etc/kubernetes/ssl/'

The keys to the left of = in /etc/etcd/etcd.conf must match the variable names after $ in /usr/lib/systemd/system/etcd.service.

Inside the single quotes on the right, the flag name before = must be spelled exactly as in etcd --help; otherwise the cluster fails to start, as /var/log/messages shows:

vim /var/log/messages

Mar 14 13:53:22 docker-01 systemd: Starting Etcd Server...
Mar 14 13:53:22 docker-01 etcd: error verifying flags, 'k8s_master' is not a valid flag. See 'etcd --help'.
Mar 14 13:53:22 docker-01 systemd: etcd.service: main process exited, code=exited, status=1/FAILURE
Mar 14 13:53:22 docker-01 systemd: Failed to start Etcd Server.
Mar 14 13:53:22 docker-01 systemd: Unit etcd.service entered failed state.
Mar 14 13:53:22 docker-01 systemd: etcd.service failed.
Mar 14 13:53:23 docker-01 systemd: Stopped Etcd Server.

3.8: Add the systemd service

vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

#Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/data/etcd/

EnvironmentFile=-/etc/etcd/etcd.conf

EnvironmentFile=-/etc/etcd/etcd-key.conf

ExecStart=/usr/local/bin/etcd \

$ETCD_NAME \

$ETCD_DATA_DIR \

$ETCD_INITIAL_CLUSTER_STATE \

$ETCD_INITIAL_CLUSTER_TOKEN \

$ETCD_INITIAL_ADVERTISE_PEER_URLS \

$ETCD_LISTEN_PEER_URLS \

$ETCD_LISTEN_CLIENT_URLS \

$ETCD_ADVERTISE_CLIENT_URLS \

$ETCD_INITIAL_CLUSTER \

$ETCD_KEY

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

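name: the node name.

data-dir: the directory where the node stores its data.

listen-peer-urls: URLs to listen on for communication with the other members.

listen-client-urls: addresses that serve clients, e.g. http://ip:2379,http://127.0.0.1:2379; clients connect here to interact with etcd.

initial-advertise-peer-urls: this node's peer URLs, advertised to the other members of the cluster.

initial-cluster: all members of the cluster, in the form node1=http://ip1:2380,node2=http://ip2:2380,…. Note: node1 is the name given by --name, and ip1:2380 is the value given by --initial-advertise-peer-urls.

initial-cluster-state: new when creating a new cluster; existing when joining a cluster that already exists.

initial-cluster-token: the token of the cluster being created; keep it unique per cluster. Then, if you recreate a cluster, new cluster and member UUIDs are generated even when the configuration is identical; otherwise several clusters may conflict and cause unpredictable errors.

advertise-client-urls: this node's client URLs, advertised to the other members of the cluster.

On each machine, replace the name and IP in name, initial-advertise-peer-urls, listen-peer-urls, listen-client-urls and advertise-client-urls.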

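Copy it to the other servers:

scp etcd.service root@docker-02:/usr/lib/systemd/system/

(and likewise for docker-03 and docker-04)

Then edit etcd.service on each server.

3.9: Start the etcd cluster (start it on every node; the master hangs for a long time during startup because the other nodes are not up yet)

After editing /usr/lib/systemd/system/etcd.service, reload systemd: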

systemctl daemon-reload

systemctl start etcd.service

systemctl stop etcd.service

Troubleshooting:

3.9.1:connection refused

Mar 14 14:32:46 docker-01 etcd: health check for peer 7d8eee4f1e1ab8e9 could not connect: dial tcp 10.8.8.22:2380: connect: connection refused (prober "ROUND_TRIPPER_SNAPSHOT")

The peer port cannot be reached (probed here with ssh):

[root@docker-01 system]# ssh 10.8.8.24 -p 2380
ssh: connect to host 10.8.8.24 port 2380: Connection refused

Solution: start the node machines first, then the master.

3.9.2: The directory set by WorkingDirectory= in etcd.service must already exist; otherwise /var/log/messages shows:

Mar 14 15:25:21 docker-03 systemd: Starting Etcd Server...
Mar 14 15:25:21 docker-03 systemd: Failed at step CHDIR spawning /usr/local/bin/etcd: No such file or directory
Mar 14 15:25:21 docker-03 systemd: etcd.service: main process exited, code=exited, status=200/CHDIR
Mar 14 15:25:21 docker-03 systemd: Failed to start Etcd Server.
Mar 14 15:25:21 docker-03 systemd: Unit etcd.service entered failed state.
Mar 14 15:25:21 docker-03 systemd: etcd.service failed.
Mar 14 15:25:23 docker-03 systemd: Stopped Etcd Server.

3.9.3:request cluster ID mismatch

https://blog.51cto.com/1666898/2156165

Mar 15 08:38:22 docker-01 etcd: request cluster ID mismatch (got ce8738a43379cfa0 want 25c4c375d3f1f1e)
Mar 15 08:38:22 docker-01 etcd: rejected connection from "10.8.8.22:57202" (error "tls: first record does not look like a TLS handshake", ServerName "")

Remove the --data-dir option from the configuration!

Solution: delete the contents of the --data-dir directory on every member of the etcd cluster.

Analysis: while the cluster was being built, a single etcd instance had been started on its own as a test. When the other etcd services in the cluster started for the first time, they were bootstrapped through the discovery service, so the old member information has to be removed.

Reference: One of the member was bootstrapped via discovery service. You must remove the previous data-dir to clean up the member information. Or the member will ignore the new configuration and start with the old configuration. That is why you see the mismatch.

3.9.4: Below is the etcd.service that finally started successfully. On the node machines, change only the node-specific values (--name and the IPs in --listen-peer-urls, --initial-advertise-peer-urls, --listen-client-urls and --advertise-client-urls) to that node's own.

vim /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
#Documentation=https://github.com/coreos

[Service]
User=root
Type=notify
WorkingDirectory=/data/etcd/
ExecStart=/usr/local/bin/etcd \
  --name=k8s-master \
  --cert-file=/etc/kubernetes/ssl/etcd.pem \
  --key-file=/etc/kubernetes/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --peer-cert-file=/etc/kubernetes/ssl/etcd.pem \
  --peer-key-file=/etc/kubernetes/ssl/etcd-key.pem \
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --peer-client-cert-auth \
  --client-cert-auth \
  --listen-peer-urls=https://10.8.8.21:2380 \
  --initial-advertise-peer-urls=https://10.8.8.21:2380 \
  --listen-client-urls=https://10.8.8.21:2379,https://127.0.0.1:2379 \
  --advertise-client-urls=https://10.8.8.21:2379 \
  --initial-cluster-token=etcd-cluster-0 \
  --initial-cluster=k8s-master=https://10.8.8.21:2380,k8s-node02=https://10.8.8.22:2380,k8s-node03=https://10.8.8.23:2380,k8s-node04=https://10.8.8.24:2380 \
  --initial-cluster-state=new
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

3.9.5:检查各节点情况

With the etcdctl v2 API (etcdctl 2.2.1, or etcdctl 3.3.12 without ETCDCTL_API=3):

etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  --endpoints=https://10.8.8.21:2379,https://10.8.8.22:2379,https://10.8.8.23:2379,https://10.8.8.24:2379 \
  cluster-health

With etcdctl 3.3.12 and the v3 API (export ETCDCTL_API=3):

etcdctl --cacert=/etc/kubernetes/ssl/ca.pem \
  --cert=/etc/kubernetes/ssl/kubernetes.pem \
  --key=/etc/kubernetes/ssl/kubernetes-key.pem \
  --endpoints=https://10.8.8.21:2379,https://10.8.8.22:2379,https://10.8.8.23:2379,https://10.8.8.24:2379 \
  endpoint health

Output:

[root@docker-02 network]# etcdctl --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem --endpoints=https://10.8.8.21:2379,https://10.8.8.22:2379,https://10.8.8.23:2379,https://10.8.8.24:2379 cluster-health

member 9464641f79dde42 is healthy: got healthy result from https://10.8.8.23:2379

member 250662a51b30eed5 is healthy: got healthy result from https://10.8.8.24:2379

member 3255ddeea7f12617 is healthy: got healthy result from https://10.8.8.21:2379

member b488eb3b12837d51 is healthy: got healthy result from https://10.8.8.22:2379

cluster is healthy
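The same health check can be run without etcdctl by calling each member's /health endpoint over HTTPS with the client certificate used above. Below is a minimal Go sketch under two assumptions. First, etcd 3.3 answers GET /health with a JSON body such as {"health":"true"}. Second, kubernetes.pem is accepted as a client certificate because it is signed by the same ca.pem.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"encoding/json"
	"fmt"
	"io/ioutil"
	"net/http"
	"time"
)

// healthy parses the body returned by etcd's GET /health, e.g. {"health":"true"}.
func healthy(body []byte) (bool, error) {
	var h struct {
		Health string `json:"health"`
	}
	if err := json.Unmarshal(body, &h); err != nil {
		return false, err
	}
	return h.Health == "true", nil
}

// newClient returns an HTTPS client that trusts caFile and presents certFile/keyFile.
func newClient(caFile, certFile, keyFile string) (*http.Client, error) {
	caPEM, err := ioutil.ReadFile(caFile)
	if err != nil {
		return nil, err
	}
	pool := x509.NewCertPool()
	if !pool.AppendCertsFromPEM(caPEM) {
		return nil, fmt.Errorf("no certificates found in %s", caFile)
	}
	cert, err := tls.LoadX509KeyPair(certFile, keyFile)
	if err != nil {
		return nil, err
	}
	tr := &http.Transport{TLSClientConfig: &tls.Config{RootCAs: pool, Certificates: []tls.Certificate{cert}}}
	return &http.Client{Transport: tr, Timeout: 5 * time.Second}, nil
}

func main() {
	const dir = "/etc/kubernetes/ssl/"
	client, err := newClient(dir+"ca.pem", dir+"kubernetes.pem", dir+"kubernetes-key.pem")
	if err != nil {
		fmt.Println(err)
		return
	}
	for _, ip := range []string{"10.8.8.21", "10.8.8.22", "10.8.8.23", "10.8.8.24"} {
		url := "https://" + ip + ":2379/health"
		resp, err := client.Get(url)
		if err != nil {
			fmt.Println(url, "error:", err)
			continue
		}
		body, err := ioutil.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			fmt.Println(url, "error:", err)
			continue
		}
		ok, err := healthy(body)
		fmt.Println(url, "healthy:", ok, err)
	}
}
```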

3.9.6: Remember to set export ETCDCTL_API=3!!! Otherwise you get errors:

[root@docker-02 ~]# etcdctl mkdir /test-etcd
Error: x509: certificate signed by unknown authority

[root@docker-02 ~]# export ETCDCTL_API=3
[root@docker-02 ~]# systemctl restart etcd
[root@docker-02 ~]# etcdctl member list
9464641f79dde42, started, k8s-node03, https://10.8.8.23:2380, https://10.8.8.23:2379
250662a51b30eed5, started, k8s-node04, https://10.8.8.24:2380, https://10.8.8.24:2379
3255ddeea7f12617, started, k8s-master, https://10.8.8.21:2380, https://10.8.8.21:2379
b488eb3b12837d51, started, k8s-node02, https://10.8.8.22:2380, https://10.8.8.22:2379

4: Install Flannel

4.1: Download and install flannel

wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

tar -zxvf flannel-v0.11.0-linux-amd64.tar.gz -C /zm/flannel

[root@docker-01 zm]# tar -zxvf flannel-v0.11.0-linux-amd64.tar.gz
flanneld
mk-docker-opts.sh
README.md

mv flanneld /usr/bin/

mv mk-docker-opts.sh /usr/bin/

Create the service file:

https://blog.csdn.net/bbwangj/article/details/81205244

vim /usr/lib/systemd/system/flanneld.service

Minimal unit:

[Unit]
Description=flannel
Before=docker.service

[Service]
ExecStart=/usr/bin/flanneld

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service

Fuller unit that reads its settings from /etc/sysconfig/flanneld:

[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/etc/sysconfig/flanneld
EnvironmentFile=-/etc/sysconfig/docker-network
ExecStart=/usr/bin/flanneld -etcd-endpoints=${FLANNEL_ETCD} -etcd-prefix=${FLANNEL_ETCD_KEY} $FLANNEL_OPTIONS
ExecStartPost=/usr/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=on-failure

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service

mkdir -p /etc/systemd/system/flanneld.service.d/ && vim /etc/systemd/system/flanneld.service.d/flannel.conf

[Service]
Environment="FLANNELD_ETCD_ENDPOINTS=http://10.8.8.21:2379"
Environment="FLANNELD_ETCD_PREFIX=/usr/local/flannel/network"

Check that the settings took effect:

[root@docker-01 system]# systemctl daemon-reload
[root@docker-01 system]# systemctl show flanneld --property Environment
Environment=FLANNELD_ETCD_ENDPOINTS=http://10.8.8.21:2379 FLANNELD_ETCD_PREFIX=/usr/local/flannel/network

Start flannel:

systemctl start flanneld

Set the IP range:

Error (1):

[root@docker-02 ~]# etcdctl mk /usr/local/flannel/network/config '{"Network":"10.9.0.0/16","SubnetMin":"10.9.1.0","SubnetMax":"10.9.254.0"}'

Error: dial tcp 127.0.0.1:4001: connect: connection refused

Edit the configuration file:

vim /usr/lib/systemd/system/etcd.service

--listen-client-urls=https://10.8.8.22:2379,https://127.0.0.1:2379 \

Change it to:

--listen-client-urls=https://10.8.8.22:2379,http://127.0.0.1:2379 \

Error (2):

[root@docker-02 ~]# systemctl daemon-reload

[root@docker-02 ~]# systemctl stop etcd

[root@docker-02 ~]# systemctl start etcd

[root@docker-02 ~]# etcdctl mk /usr/local/flannel/network/config '{"Network":"10.9.0.0/16","SubnetMin":"10.9.1.0","SubnetMax":"10.9.254.0"}'

Error: x509: certificate signed by unknown authority

Run the following two commands:

etcdctl --endpoints=https://10.8.8.21:2379,https://10.8.8.22:2379,https://10.8.8.23:2379,https://10.8.8.24:2379 \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  mkdir /usr/local/flannel/network

etcdctl --endpoints=https://10.8.8.21:2379,https://10.8.8.22:2379,https://10.8.8.23:2379,https://10.8.8.24:2379 \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  mk /usr/local/flannel/network/config '{"Network":"10.9.0.0/16","SubnetLen":24,"Backend":{"Type":"host-gw"}}'

[root@docker-02 network]# etcdctl --endpoints=https://10.8.8.21:2379,https://10.8.8.22:2379,https://10.8.8.23:2379,https://10.8.8.24:2379 --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem set /usr/local/flannel/network/config '{"Network":"10.9.0.0/16","Backend":{"Type":"vxlan"}}'

Verify:

Declare a variable:

ETCD_ENDPOINTS='https://10.8.8.21:2379,https://10.8.8.22:2379,https://10.8.8.23:2379,https://10.8.8.24:2379'

etcdctl --endpoints=${ETCD_ENDPOINTS} \
  --ca-file=/etc/kubernetes/ssl/ca.pem \
  --cert-file=/etc/kubernetes/ssl/kubernetes.pem \
  --key-file=/etc/kubernetes/ssl/kubernetes-key.pem \
  ls /usr/local/flannel/network/subnets

[root@docker-02 /]# etcdctl --endpoints=https://10.8.8.21:2379,https://10.8.8.22:2379,https://10.8.8.23:2379,https://10.8.8.24:2379 --ca-file=/etc/kubernetes/ssl/ca.pem --cert-file=/etc/kubernetes/ssl/kubernetes.pem --key-file=/etc/kubernetes/ssl/kubernetes-key.pem get /usr/local/flannel/network/config

{"Network":"10.9.0.0/16","SubnetLen":24,"Backend":{"Type":"host-gw"}}

Thanks to:

https://www.cnblogs.com/zhenyuyaodidiao/p/6500830.html

GO:

https://blog.csdn.net/xianchanghuang/article/details/82722064

k8s:

https://www.cnblogs.com/netsa/p/8126155.html

https://blog.csdn.net/qq_36207775/article/details/82343807

https://www.cnblogs.com/xuchenCN/p/9479737.html

etcd:

https://www.jianshu.com/p/98b8fa3e3596

flannel:

https://www.cnblogs.com/ZisZ/p/9212820.html

docker:

https://www.cnblogs.com/ZisZ/p/8962194.html

Zhejiang Public Security Network Registration No. 33010602011771
