Kubernetes binary deployment, CoreDNS and Dashboard


Binary deployment of a highly available Kubernetes cluster

Host plan

| Host | IP | Role |
|---|---|---|
| k8s-deploy | 192.168.44.10 | deploy |
| k8s-harbor | 192.168.44.11 | harbor/etcd |
| k8s-master1 | 192.168.44.12 | master/etcd |
| k8s-master2 | 192.168.44.13 | master/etcd |
| k8s-master3 | 192.168.44.14 | master |
| k8s-node1 | 192.168.44.15 | node |
| k8s-node2 | 192.168.44.16 | node |
| k8s-node3 | 192.168.44.17 | node |

Operations on the k8s-deploy host

- Install Ansible

apt install ansible

- Set up SSH trust between the deploy host and every host

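ssh-keygen #generate the public/private key pair

apt install sshpass #tool that supplies the SSH password non-interactively

#distribute the public key to every host with a script for passwordless login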

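root@k8s-deploy:~/scripts# cat key.sh
#!/bin/bash
# hosts that should trust the deploy host's SSH key
IP="
192.168.44.10
192.168.44.11
192.168.44.12
192.168.44.13
192.168.44.14
192.168.44.15
192.168.44.16
192.168.44.17
"
for node in ${IP}; do
  # push the key using the login password 123456 (replace with your own);
  # ssh-copy-id options go before the host name
  sshpass -p 123456 ssh-copy-id -o StrictHostKeyChecking=no ${node}
  echo "${node}: key copied"
  # provide a "python" interpreter for Ansible
  ssh ${node} ln -sv /usr/bin/python3 /usr/bin/python
  echo "${node}: /usr/bin/python3 symlink created"
done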
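Before you run kubeasz, confirm that every host now accepts key-based login. The sketch below assumes the same host list as key.sh. `check_ssh_trust` is a hypothetical helper. It uses `ssh -o BatchMode=yes`, which fails instead of prompting for a password when the key is not installed.

```shell
# check_ssh_trust HOST... : print "OK host" or "FAIL host" for each host and
# return non-zero if any host still needs a password (hypothetical helper).
check_ssh_trust() {
  local host rc=0
  for host in "$@"; do
    # BatchMode=yes: never prompt, just fail when key auth is not possible
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true >/dev/null 2>&1; then
      echo "OK ${host}"
    else
      echo "FAIL ${host}"
      rc=1
    fi
  done
  return "$rc"
}

# Example (brace expansion yields 192.168.44.10 ... 192.168.44.17):
#   check_ssh_trust 192.168.44.1{0..7}
```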

Binary installation with kubeasz

- Download the components

apt install git

export release=3.3.1

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

chmod +x ./ezdown

./ezdown -D

- Pre-installation configuration

cd /etc/kubeasz/

./ezctl new k8s-cluster1 #create the cluster k8s-cluster1 and generate its hosts and config.yml files

Edit /etc/kubeasz/clusters/k8s-cluster1/hosts and fill in the host information:

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd]

192.168.44.11

192.168.44.12

192.168.44.13

# master node(s)

[kube_master]

192.168.44.12

192.168.44.13

# work node(s)

[kube_node]

192.168.44.15

192.168.44.16

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb]

#192.168.1.6 LB_ROLE=backup EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

#192.168.1.7 LB_ROLE=master EX_APISERVER_VIP=192.168.1.250 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.1.1

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="calico"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.68.0.0/16"

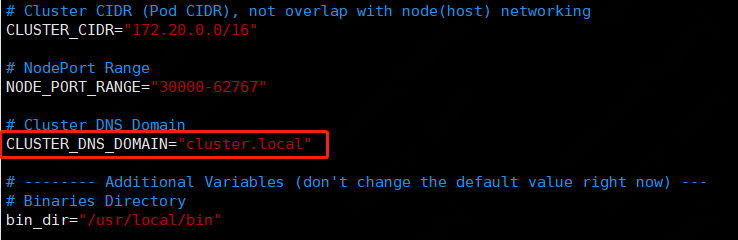

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="172.20.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-62767"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/local/bin"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-cluster1"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"
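The hosts file requires that SERVICE_CIDR and CLUSTER_CIDR overlap neither each other nor the host network. A small pure-bash check can catch such mistakes before installation. This is a sketch. The helper names are hypothetical, and the host network 192.168.44.0/24 in the example is an assumption based on the host plan above.

```shell
# ip_to_int A.B.C.D : print the IPv4 address as a 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_range A.B.C.D/N : print "first last" address of the block as integers
cidr_range() {
  local ip=${1%/*} len=${1#*/} addr mask first
  addr=$(ip_to_int "$ip")
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  first=$(( addr & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

# cidrs_overlap CIDR1 CIDR2 : exit status 0 if the two ranges share any address
cidrs_overlap() {
  local f1 l1 f2 l2
  read -r f1 l1 <<< "$(cidr_range "$1")"
  read -r f2 l2 <<< "$(cidr_range "$2")"
  (( f1 <= l2 && f2 <= l1 ))
}

# Example with the values above (host network assumed to be 192.168.44.0/24):
#   for c in 172.20.0.0/16 192.168.44.0/24; do
#     cidrs_overlap 10.68.0.0/16 "$c" && echo "SERVICE_CIDR overlaps $c"
#   done
```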

Edit the config.yml file:

/etc/kubeasz/clusters/k8s-cluster1/config.yml

############################

# prepare

############################

# optionally install system packages offline (offline|online)

INSTALL_SOURCE: "online"

# optional OS security hardening, see github.com/dev-sec/ansible-collection-hardening

OS_HARDEN: false

############################

# role:deploy

############################

# default: ca will expire in 100 years

# default: certs issued by the ca will expire in 50 years

CA_EXPIRY: "876000h"

CERT_EXPIRY: "438000h"

# kubeconfig parameters

CLUSTER_NAME: "cluster1"

CONTEXT_NAME: "context-{{ CLUSTER_NAME }}"

# k8s version

K8S_VER: "1.24.2"

############################

# role:etcd

############################

# a separate WAL directory avoids disk I/O contention and improves performance

ETCD_DATA_DIR: "/var/lib/etcd"

ETCD_WAL_DIR: ""

############################

# role:runtime [containerd,docker]

############################

# ------------------------------------------- containerd

# [.] enable registry mirrors

ENABLE_MIRROR_REGISTRY: true

# [containerd] sandbox (pause) image

SANDBOX_IMAGE: "harbor.jackedu.cn/baseimage/pause:3.7"

# [containerd] persistent storage directory

CONTAINERD_STORAGE_DIR: "/var/lib/containerd"

# ------------------------------------------- docker

# [docker] storage directory

DOCKER_STORAGE_DIR: "/var/lib/docker"

# [docker] enable the RESTful API

ENABLE_REMOTE_API: false

# [docker] trusted plain-HTTP registries

INSECURE_REG: '["http://harbor.jackedu.cn"]'

############################

# role:kube-master

############################

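# certificate hosts for the k8s master nodes; several IPs and domain names can be added (e.g. a public IP and domain)

#the addresses below are written into the certificate,

#so the cluster can be reached through an HA address or a domain name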

MASTER_CERT_HOSTS:

- "10.1.1.1"

- "k8s.easzlab.io"

#- "www.test.com"

# prefix length of each node's pod subnet (determines the maximum number of pod IPs per node)

# if flannel runs with --kube-subnet-mgr, it reads this setting to allocate each node's pod subnet

# https://github.com/coreos/flannel/issues/847

NODE_CIDR_LEN: 24

############################

# role:kube-node

############################

# kubelet root directory

KUBELET_ROOT_DIR: "/var/lib/kubelet"

# maximum number of pods per node

MAX_PODS: 110

# reserve resources for Kubernetes components (kubelet, kube-proxy, dockerd, etc.)

# see templates/kubelet-config.yaml.j2 for the values

KUBE_RESERVED_ENABLED: "no"

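# Kubernetes advises against enabling system-reserved casually, unless long-term monitoring tells you how the system uses resources;

# the reservation should also grow as the system keeps running; see templates/kubelet-config.yaml.j2 for the values

# the system reservation assumes a 4c/8g VM with a minimal OS install; increase it on high-end physical machines

# also, apiserver and others briefly use a lot of resources during cluster installation, so reserve at least 1g of memory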

SYS_RESERVED_ENABLED: "no"

############################

# role:network [flannel,calico,cilium,kube-ovn,kube-router]

############################

# ------------------------------------------- flannel

# [flannel] flannel backend: "host-gw", "vxlan", etc.

FLANNEL_BACKEND: "vxlan"

DIRECT_ROUTING: false

# [flannel] flanneld_image: "quay.io/coreos/flannel:v0.10.0-amd64"

flannelVer: "v0.15.1"

flanneld_image: "easzlab.io.local:5000/easzlab/flannel:{{ flannelVer }}"

# ------------------------------------------- calico

# [calico] setting CALICO_IPV4POOL_IPIP="off" improves network performance (see docs/setup/calico.md for the constraints); with "off", pods cannot communicate across subnets

CALICO_IPV4POOL_IPIP: "Always"

# [calico] host IP used by calico-node, over which BGP peers connect; set it manually or let it be auto-detected

IP_AUTODETECTION_METHOD: "can-reach={{ groups['kube_master'][0] }}"

# [calico] calico networking backend: brid, vxlan, none

CALICO_NETWORKING_BACKEND: "brid"

# [calico] whether calico uses route reflectors

# recommended when the cluster has more than 50 nodes

CALICO_RR_ENABLED: false

# CALICO_RR_NODES: nodes acting as route reflectors; defaults to the cluster's master nodes if unset

# CALICO_RR_NODES: ["192.168.1.1", "192.168.1.2"]

CALICO_RR_NODES: []

# [calico] supported calico versions: [v3.3.x] [v3.4.x] [v3.8.x] [v3.15.x]

calico_ver: "v3.19.4"

# [calico] calico major.minor version

calico_ver_main: "{{ calico_ver.split('.')[0] }}.{{ calico_ver.split('.')[1] }}"

# ------------------------------------------- cilium

# [cilium] image version

cilium_ver: "1.11.6"

cilium_connectivity_check: true

cilium_hubble_enabled: false

cilium_hubble_ui_enabled: false

# ------------------------------------------- kube-ovn

# [kube-ovn] node for the OVN DB and OVN control plane; defaults to the first master node

OVN_DB_NODE: "{{ groups['kube_master'][0] }}"

# [kube-ovn] offline image tarball

kube_ovn_ver: "v1.5.3"

# ------------------------------------------- kube-router

# [kube-router] public clouds impose restrictions and usually require ipinip to stay on; in your own environment it can be set to "subnet"

OVERLAY_TYPE: "full"

# [kube-router] enable NetworkPolicy support

FIREWALL_ENABLE: true

# [kube-router] kube-router image version

kube_router_ver: "v0.3.1"

busybox_ver: "1.28.4"

############################

# role:cluster-addon

############################

# coredns automatic installation

#do not install DNS through kubeasz (CoreDNS is deployed manually later)

dns_install: "no"

corednsVer: "1.9.3"

#do not use the local DNS cache

ENABLE_LOCAL_DNS_CACHE: false

dnsNodeCacheVer: "1.21.1"

# local DNS cache address

LOCAL_DNS_CACHE: "169.254.20.10"

# metrics-server automatic installation

#do not install metrics-server

metricsserver_install: "no"

metricsVer: "v0.5.2"

# dashboard automatic installation

dashboard_install: "yes"

dashboardVer: "v2.5.1"

dashboardMetricsScraperVer: "v1.0.8"

# prometheus automatic installation

prom_install: "no"

prom_namespace: "monitor"

prom_chart_ver: "35.5.1"

# nfs-provisioner automatic installation

nfs_provisioner_install: "no"

nfs_provisioner_namespace: "kube-system"

nfs_provisioner_ver: "v4.0.2"

nfs_storage_class: "managed-nfs-storage"

nfs_server: "192.168.1.10"

nfs_path: "/data/nfs"

# network-check automatic installation

network_check_enabled: false

network_check_schedule: "*/5 * * * *"

############################

# role:harbor

############################

# harbor version, full version number

HARBOR_VER: "v2.1.3"

HARBOR_DOMAIN: "harbor.easzlab.io.local"

HARBOR_TLS_PORT: 8443

# if set 'false', you need to put certs named harbor.pem and harbor-key.pem in directory 'down'

HARBOR_SELF_SIGNED_CERT: true

# install extra component

HARBOR_WITH_NOTARY: false

HARBOR_WITH_TRIVY: false

HARBOR_WITH_CLAIR: false

HARBOR_WITH_CHARTMUSEUM: true

Use Ansible to add the Harbor hostname to /etc/hosts on every host:

ansible k8s-hosts -m shell -a 'echo "192.168.44.11 harbor.jackedu.cn" >> /etc/hosts'
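Appending with `echo >>` adds a duplicate line every time the command is rerun. Ansible's `lineinfile` module is idempotent, for example `ansible k8s-hosts -m lineinfile -a 'path=/etc/hosts line="192.168.44.11 harbor.jackedu.cn"'`. The hypothetical shell helper below shows the same idea for a single file: it adds the entry only when the name is not mapped yet.

```shell
# ensure_hosts_entry FILE IP NAME : append "IP NAME" unless NAME already
# appears as a host name on a non-comment line of FILE (hypothetical helper).
ensure_hosts_entry() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 when NAME is found in field 2 or later of a non-comment line
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  echo "${ip} ${name}" >> "$file"
}

# Example:
#   ensure_hosts_entry /etc/hosts 192.168.44.11 harbor.jackedu.cn
```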

Install Kubernetes

- Initialize the environment

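./ezctl setup k8s-cluster1 01 #initialize the environment

#ezctl runs each step as the equivalent ansible-playbook command, for example:

ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/01.prepare.yml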

./ezctl setup k8s-cluster1 02 #install etcd

ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/02.etcd.yml

#check the health of every etcd member (run on an etcd host that has the certificates)
export NODE_IPS="192.168.44.11 192.168.44.12 192.168.44.13"
for ip in ${NODE_IPS}; do
  ETCDCTL_API=3 etcdctl \
    --endpoints=https://${ip}:2379 \
    --cacert=/etc/kubernetes/ssl/ca.pem \
    --cert=/etc/kubernetes/ssl/etcd.pem \
    --key=/etc/kubernetes/ssl/etcd-key.pem \
    endpoint health
done
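The loop above checks one member at a time. etcdctl also accepts a comma-separated endpoint list, so `endpoint status --write-out=table` can show all members in one table, including which member is the leader. The hypothetical helper `etcd_endpoints` builds that list and assumes the default client port 2379.

```shell
# etcd_endpoints IP... : print a comma-separated value for --endpoints
etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    # ${out:+,} adds a comma only after the first entry
    out+="${out:+,}https://${ip}:2379"
  done
  echo "$out"
}

# Example (on a host with the etcd certificates):
#   ETCDCTL_API=3 etcdctl --endpoints="$(etcd_endpoints 192.168.44.11 192.168.44.12 192.168.44.13)" \
#     --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/kubernetes/ssl/etcd.pem \
#     --key=/etc/kubernetes/ssl/etcd-key.pem endpoint status --write-out=table
```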

- Deploy the container runtime

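Both the master and the node hosts need a container runtime (containerd or cri-dockerd). Kubernetes 1.24 no longer supports Docker directly. You can also set up the runtime with your own tooling, in which case this step is optional.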

#check the sandbox (pause) image setting

root@k8s-deploy:/etc/kubeasz# grep SANDBOX_IMAGE ./clusters/* -R

./clusters/k8s-cluster1/config.yml:SANDBOX_IMAGE: "harbor.jackedu.cn/baseimage/pause:3.7"

./ezctl setup k8s-cluster1 03

ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/03.runtime.yml

#verify

root@k8s-master1:~# containerd -v

containerd github.com/containerd/containerd v1.6.4 212e8b6fa2f44b9c21b2798135fc6fb7c53efc16

Configure containerd to trust the local Harbor registry

In /etc/containerd/config.toml, add the harbor.jackedu.cn mirror after the existing mirror entry shown in the first line:

        endpoint = ["https://quay.mirrors.ustc.edu.cn"]
      [plugins."io.containerd.grpc.v1.cri".registry.mirrors."harbor.jackedu.cn"]
        endpoint = ["http://harbor.jackedu.cn"]

After saving the file, distribute it to every host with Ansible and restart containerd:

ansible all -m copy -a 'src=/root/config.toml dest=/etc/containerd/config.toml force=yes'

ansible all -m systemd -a 'name=containerd state=restarted'

So that nodes added later get the same setting, also add it to the containerd config template in the kubeasz playbook roles:

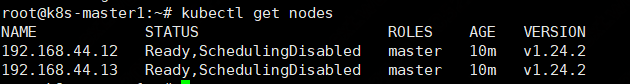

- Deploy the masters

#optional: customize the tasks

vim roles/kube-master/tasks/main.yml

./ezctl setup k8s-cluster1 04

- Deploy the nodes

vim roles/kube-node/tasks/main.yml

./ezctl setup k8s-cluster1 05

ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/05.kube-node.yml

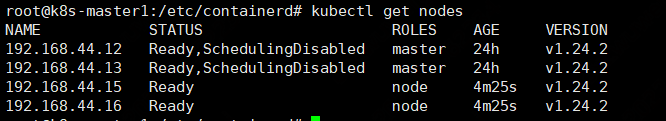

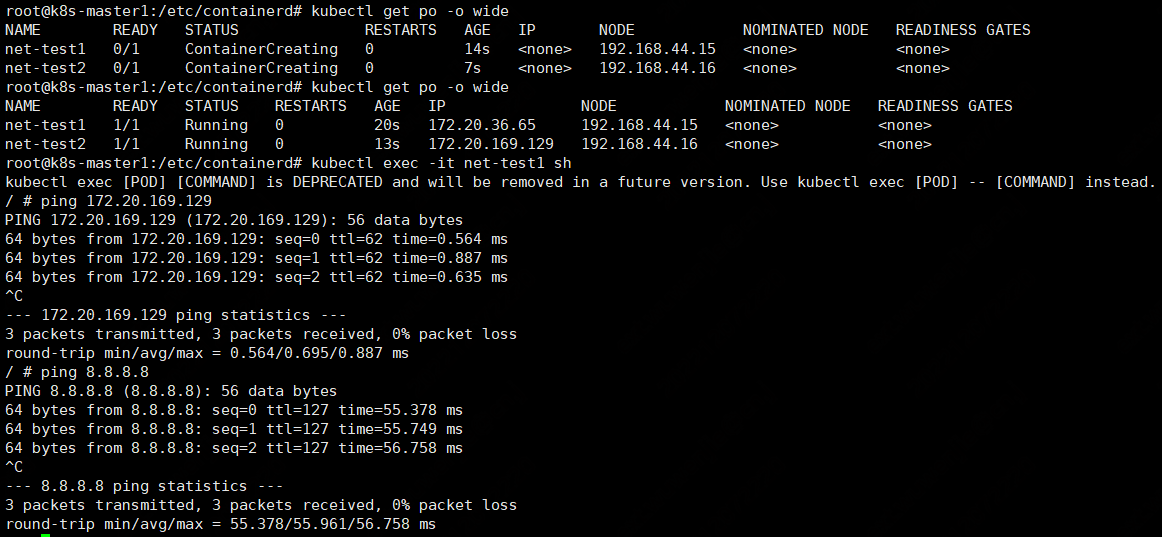

- Deploy Calico

Retag the Calico images for the Harbor registry, push them, and update the image references in the playbook files.

Run:

./ezctl setup k8s-cluster1 06

ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/06.network.yml

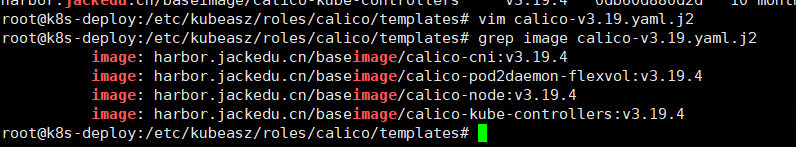

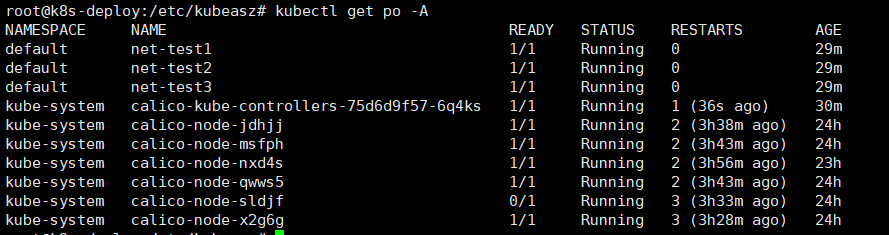

Verify:

root@k8s-master1:/etc/containerd# calicoctl node status
Calico process is running.

IPv4 BGP status
+---------------+-------------------+-------+----------+-------------+
| PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+---------------+-------------------+-------+----------+-------------+
| 192.168.44.13 | node-to-node mesh | up    | 14:12:22 | Established |
| 192.168.44.15 | node-to-node mesh | up    | 14:12:24 | Established |
| 192.168.44.16 | node-to-node mesh | up    | 14:12:24 | Established |
+---------------+-------------------+-------+----------+-------------+

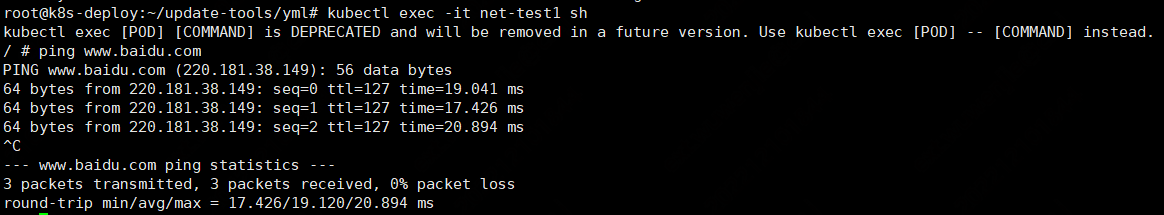

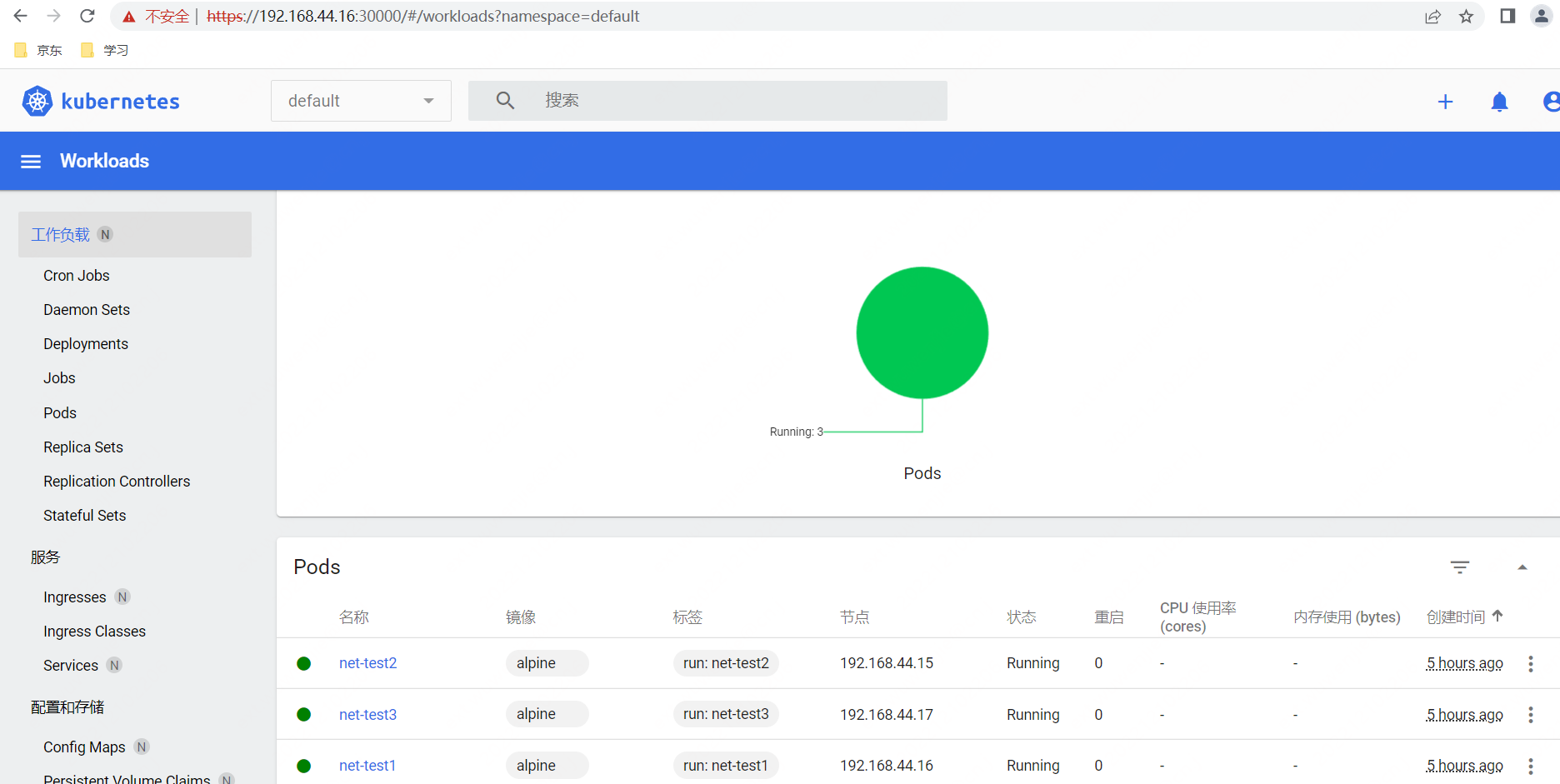

root@k8s-master1:/etc/containerd# kubectl run net-test1 --image=alpine sleep 360000

pod/net-test1 created

root@k8s-master1:/etc/containerd# kubectl run net-test2 --image=alpine sleep 360000

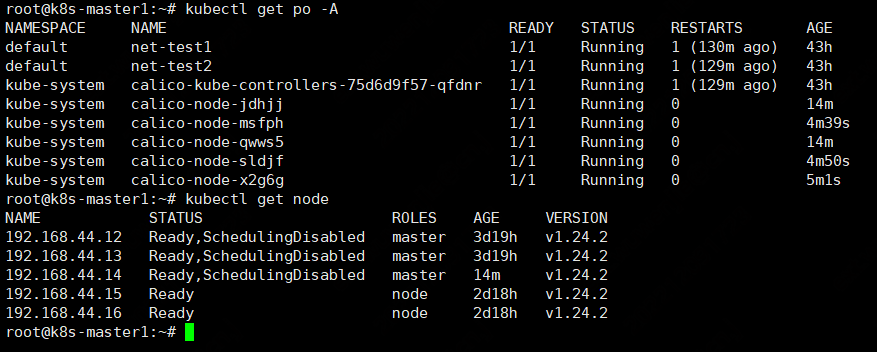

- Add a master node

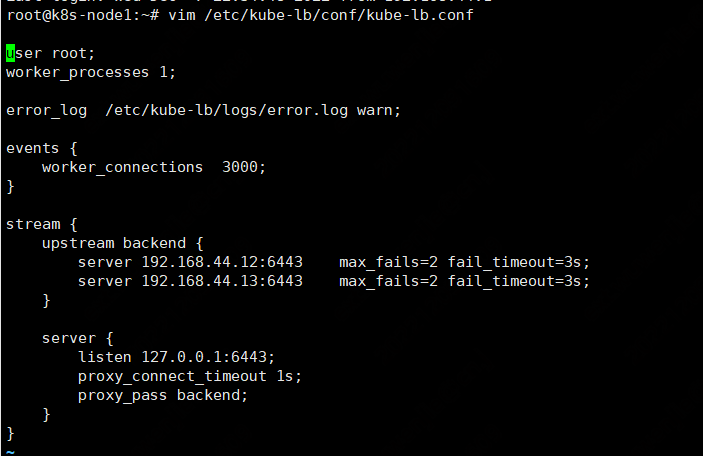

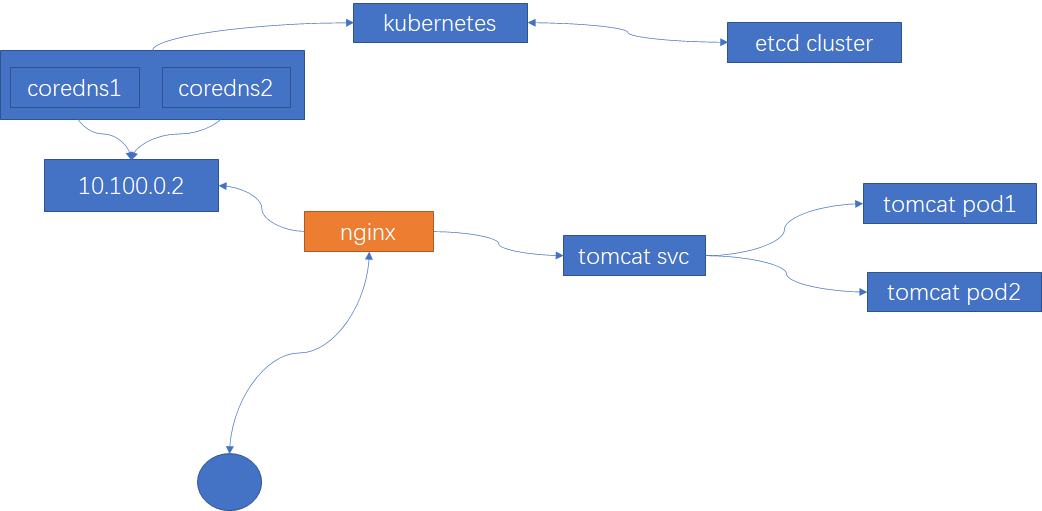

kubeasz load-balances API traffic across the masters through kube-lb (actually nginx), which runs locally on every node, as shown in the figures.

./ezctl add-master k8s-cluster1 192.168.44.14

Verify:

kube-lb.conf is updated automatically to include the new master.
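To confirm which masters a node's kube-lb is balancing across, list the active `server` lines of the nginx upstream. The sketch assumes that kube-lb.conf uses nginx `server IP:PORT ...;` lines, as the sed commands in the upgrade section suggest, and that disabled entries are commented out with `#`. `lb_servers` is a hypothetical helper name.

```shell
# lb_servers FILE : print the address of every active "server" line,
# skipping entries commented out with '#' (hypothetical helper).
lb_servers() {
  # "#server ..." has "#server" as its first field, so it is not matched
  awk '$1 == "server" { sub(/;$/, "", $2); print $2 }' "$1"
}

# Example, on any node:
#   lb_servers /etc/kube-lb/conf/kube-lb.conf
```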

- Add a node

./ezctl add-node k8s-cluster1 192.168.44.17

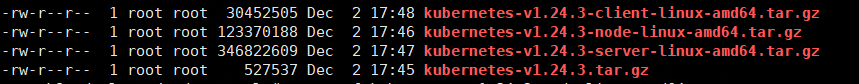

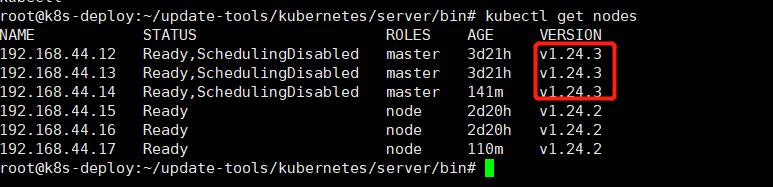

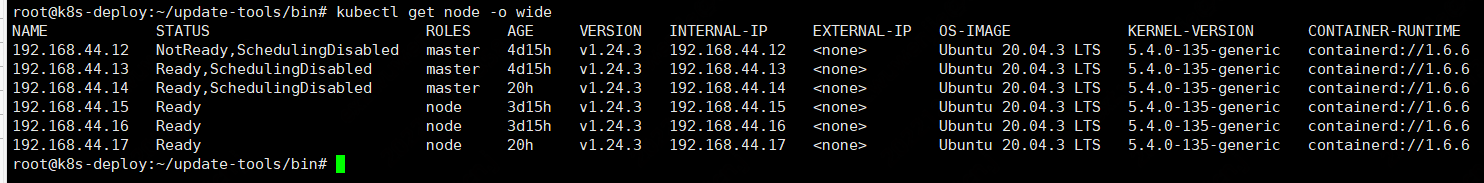

Upgrade Kubernetes to 1.24.3

Download the four packages shown below from the Kubernetes project on GitHub,

then extract all of them.

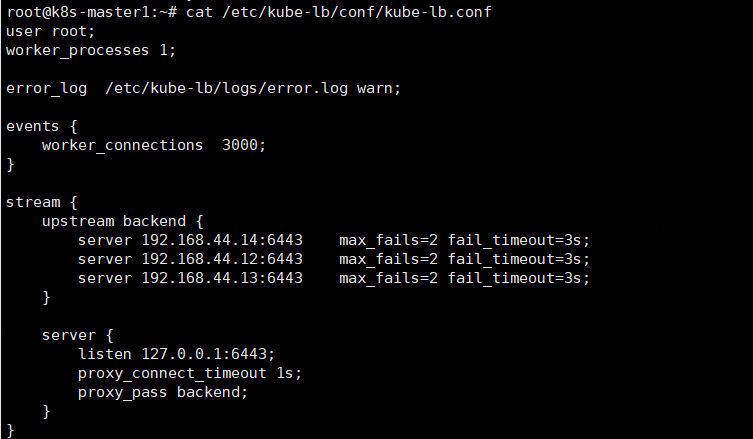

First take one master out of the load balancer. It has to be removed from the kube-lb configuration on every host.

Comment it out in kube-lb.conf on all hosts with Ansible:

ansible all -m shell -a "sed -i 's/server 192.168.44.12/#server 192.168.44.12/g' /etc/kube-lb/conf/kube-lb.conf"

ansible all -m systemd -a "name=kube-lb state=restarted"

On master 192.168.44.12, stop these services:

systemctl stop kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet

From the deploy host, copy the new binaries to that master:

scp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl 192.168.44.12:/usr/local/bin/

Start all the services:

systemctl start kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet

Verify:
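One way to verify the upgrade is to compare the version that each upgraded binary reports with `--version`. The Kubernetes server binaries print `Kubernetes vX.Y.Z`. `kubectl get nodes` also shows the kubelet version of each node. The helper below is a sketch with a hypothetical name. It skips kubectl, which uses `kubectl version --client` instead.

```shell
# check_k8s_versions DIR EXPECTED : compare "<binary> --version" of the
# Kubernetes binaries found in DIR with EXPECTED, e.g. v1.24.3.
check_k8s_versions() {
  local dir=$1 want=$2 bin got rc=0
  for bin in kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet; do
    [ -x "${dir}/${bin}" ] || continue   # not every host has every binary
    got=$("${dir}/${bin}" --version 2>/dev/null | awk '{print $2}')
    if [ "$got" = "$want" ]; then
      echo "OK   ${bin} ${got}"
    else
      echo "DIFF ${bin} ${got:-unknown}"
      rc=1
    fi
  done
  return "$rc"
}

# Example, on the upgraded master:
#   check_k8s_versions /usr/local/bin v1.24.3
```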

Re-enable the entry in kube-lb.conf and restart kube-lb on every host:

ansible all -m shell -a "sed -i 's/#server 192.168.44.12/server 192.168.44.12/g' /etc/kube-lb/conf/kube-lb.conf"

ansible all -m systemd -a "name=kube-lb state=restarted"
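The sed pattern above is loose. The dots are regex wildcards, and `192.168.44.12` also matches `192.168.44.120` to `.129`. Running the disable command twice produces `##server`, which the enable command then only half undoes. The stricter sketch below assumes that the upstream lines have the form `server IP:6443 ...`, where 6443 is SECURE_PORT from the hosts file. `lb_toggle` is a hypothetical helper to run on each host, followed by the kube-lb restart.

```shell
# lb_toggle FILE IP on|off [PORT] : comment out (off) or restore (on) the
# kube-lb upstream line "server IP:PORT"; PORT defaults to 6443.
lb_toggle() {
  local file=$1 ip=$2 action=$3 port=${4:-6443} re
  # escape the dots so they match literally; the ":PORT" suffix keeps
  # 192.168.44.12 from also matching 192.168.44.120
  re="$(printf '%s' "$ip" | sed 's/\./\\./g'):${port}"
  case "$action" in
    off) sed -i -E "s/^([[:space:]]*)server ${re}/\1#server ${ip}:${port}/" "$file" ;;
    on)  sed -i -E "s/^([[:space:]]*)#+server ${re}/\1server ${ip}:${port}/" "$file" ;;
    *)   echo "usage: lb_toggle FILE IP on|off [PORT]" >&2; return 2 ;;
  esac
}

# Example:
#   lb_toggle /etc/kube-lb/conf/kube-lb.conf 192.168.44.12 off
```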

Upgrade the remaining masters the same way.

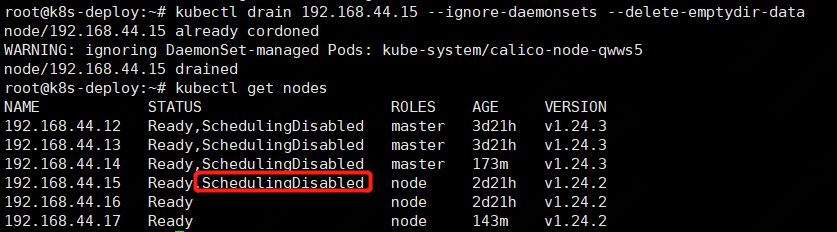

- Upgrade the nodes

Drain the node first:

kubectl drain 192.168.44.15 --ignore-daemonsets --delete-emptydir-data

Draining also marks the node as unschedulable (cordoned).

Stop kubelet and kube-proxy:

systemctl stop kubelet kube-proxy

Copy the binaries to the node being upgraded:

scp kubelet kube-proxy 192.168.44.15:/usr/local/bin

Start the services:

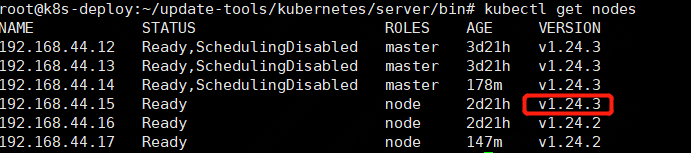

systemctl start kubelet kube-proxy

Make the node schedulable again:

kubectl uncordon 192.168.44.15
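The node upgrade steps can be collected into one function that runs from the deploy host. This sketch makes three assumptions: passwordless SSH works from the deploy host, the node name equals its IP (as with `kubectl drain 192.168.44.15` above), and the new kubelet and kube-proxy are in SRC_DIR. `upgrade_node`, `run` and the `DRY_RUN` switch are hypothetical.

```shell
# run CMD... : execute CMD, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# upgrade_node NODE [SRC_DIR] : drain, stop, replace binaries, start, uncordon
upgrade_node() {
  local node=$1 src=${2:-.}
  run kubectl drain "$node" --ignore-daemonsets --delete-emptydir-data || return 1
  run ssh "$node" systemctl stop kubelet kube-proxy || return 1
  run scp "${src}/kubelet" "${src}/kube-proxy" "${node}:/usr/local/bin/" || return 1
  run ssh "$node" systemctl start kubelet kube-proxy || return 1
  run kubectl uncordon "$node"
}

# Example: preview first, then run for real
#   DRY_RUN=1 upgrade_node 192.168.44.16 ./kubernetes/server/bin
```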

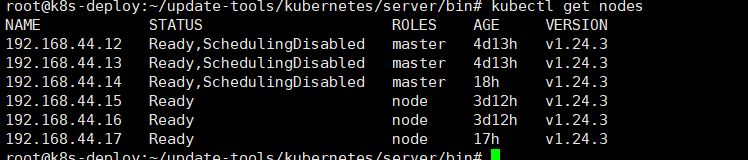

Upgrade the other nodes with the same steps.

Finally, copy the upgraded binaries into kubeasz's bin directory so that nodes added later get the new version:

\cp kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl /etc/kubeasz/bin/

- Upgrade containerd

Download:

https://github.com/containerd/containerd/releases/tag/v1.6.6

In production, follow the same procedure as for the node upgrade: drain the pods from the node first, then upgrade.

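Stop kubelet and kube-proxy first. In this example, kubelet, kube-proxy and containerd are disabled and the host is rebooted, so that none of them is running while the binaries are replaced: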

systemctl disable kubelet kube-proxy containerd

reboot

scp * 192.168.44.17:/usr/local/bin #run from the directory that holds the extracted containerd binaries

Start the services and enable them at boot again:

systemctl start kubelet kube-proxy containerd

systemctl enable kubelet kube-proxy containerd
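After the upgrade, `containerd -v` should report the new version (see the verification output earlier). The sketch below checks that from a script. `containerd_version` and `version_ge` are hypothetical helpers, and `version_ge` relies on GNU `sort -V` for version ordering.

```shell
# containerd_version : read `containerd -v` output on stdin, print the version field
containerd_version() {
  awk '{ print $3; exit }'
}

# version_ge A B : exit status 0 if version A >= version B (leading "v" ignored)
version_ge() {
  [ "$(printf '%s\n%s\n' "${2#v}" "${1#v}" | sort -V | head -n 1)" = "${2#v}" ]
}

# Example:
#   v=$(ssh 192.168.44.17 containerd -v | containerd_version)
#   version_ge "$v" v1.6.6 && echo "192.168.44.17 upgraded to $v"
```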

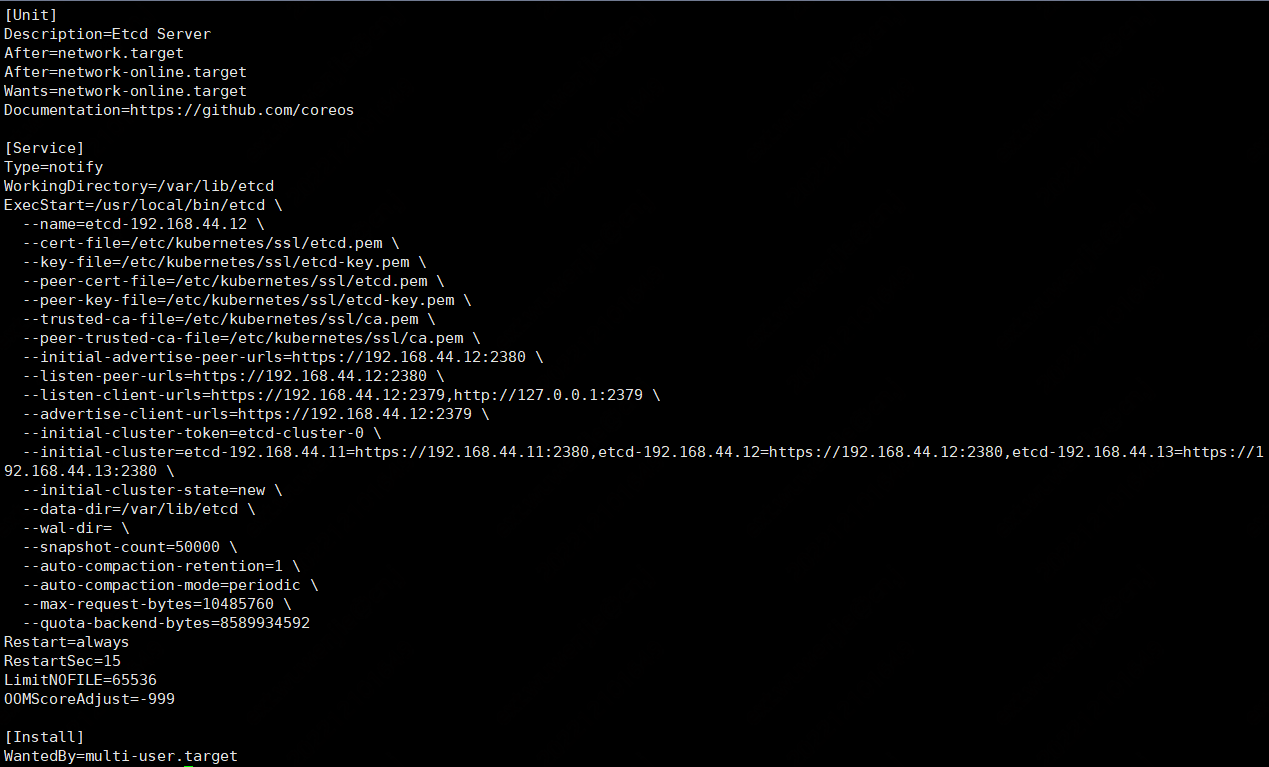

etcd backup and restore (snapshot based)

Backup and restore with etcdctl

etcdctl snapshot save saveshot.db

etcdctl snapshot restore saveshot.db --data-dir=/tmp/etcd/ #the target directory must not already exist or contain data

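With etcd stopped, you can either restore into a new directory and point etcd's data directory at it, or empty etcd's data directory and restore the data into it.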
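etcdctl refuses to restore into a directory that already holds data, so a guard helps avoid a half-finished restore. The sketch below uses a hypothetical `restore_snapshot` helper. Stop etcd before you restore, and point its data directory at the restored path afterwards.

```shell
# restore_snapshot SNAPSHOT DATA_DIR : restore an etcd snapshot into DATA_DIR,
# refusing to touch a directory that already has content (hypothetical helper).
restore_snapshot() {
  local snap=$1 dir=$2
  [ -f "$snap" ] || { echo "snapshot not found: $snap" >&2; return 1; }
  if [ -d "$dir" ]; then
    if [ -n "$(ls -A "$dir")" ]; then
      echo "refusing to restore: $dir is not empty" >&2
      return 1
    fi
    # some etcdctl versions also reject an existing empty directory
    rmdir "$dir"
  fi
  ETCDCTL_API=3 etcdctl snapshot restore "$snap" --data-dir="$dir"
}

# Example:
#   restore_snapshot saveshot.db /tmp/etcd
```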

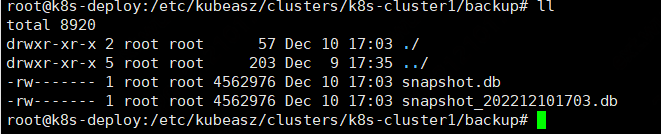

Backup and restore with ezctl

./ezctl backup k8s-cluster1

It also keeps a copy of the latest backup named snapshot.db.

Restore:

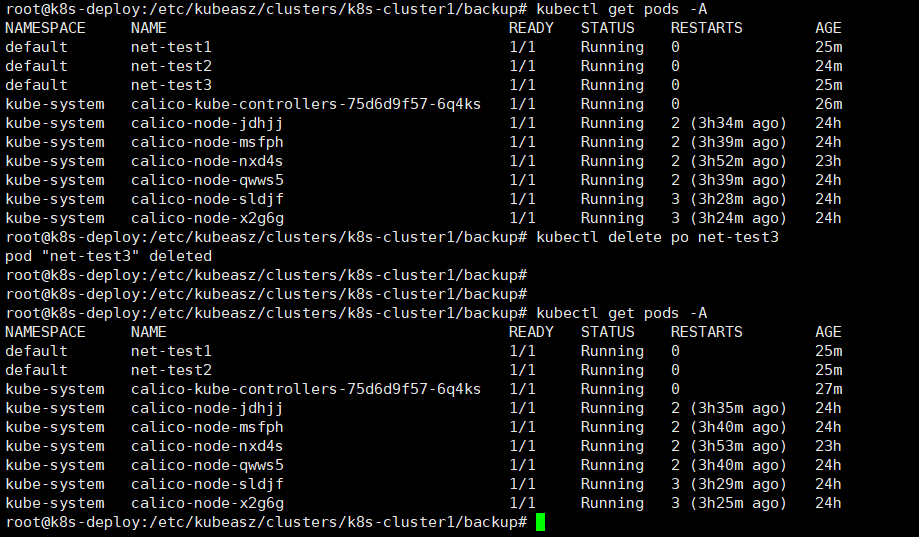

To test it, delete the net-test1 pod, then restore from the earlier backup:

./ezctl restore k8s-cluster1

Note: the restore uses snapshot.db. If there are several backups, first copy the one from the date you want to restore over snapshot.db.
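Picking a backup can be scripted. This sketch makes two assumptions: the backups sit in the cluster's backup directory (for this cluster presumably /etc/kubeasz/clusters/k8s-cluster1/backup), and their file names contain a timestamp such as 20220801. Check the actual names on your deploy host. `use_backup` is a hypothetical helper.

```shell
# use_backup BACKUP_DIR PATTERN : copy the newest backup whose name contains
# PATTERN (e.g. a date) to BACKUP_DIR/snapshot.db and print its name.
use_backup() {
  local dir=$1 pattern=$2 pick
  # ls -t lists newest first; never pick snapshot.db itself
  pick=$(ls -1t "$dir" | grep -F -- "$pattern" | grep -vx 'snapshot.db' | head -n 1)
  [ -n "$pick" ] || { echo "no backup matching '$pattern' in $dir" >&2; return 1; }
  cp -f "${dir}/${pick}" "${dir}/snapshot.db"
  echo "$pick"
}

# Example:
#   use_backup /etc/kubeasz/clusters/k8s-cluster1/backup 20220801 && ./ezctl restore k8s-cluster1
```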

CoreDNS name resolution flow

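Suppose a client reaches the tomcat service through nginx. The nginx pod resolves the tomcat Service name by querying the kube-dns Service, which forwards the query to the CoreDNS pods. CoreDNS answers from its cache when it can. Otherwise it answers from the Service data it watches through the kubernetes Service (the API server), which stores that data in the etcd cluster. nginx then connects to the tomcat Service IP, and the Service forwards the traffic to the backend tomcat pods.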

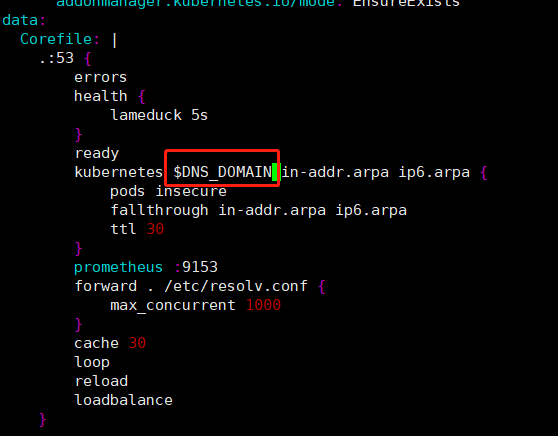

Corefile configuration

Take the DNS addon manifest from the Kubernetes source tree, modify it and deploy it:

kubernetes/cluster/addons/dns/coredns/coredns.yaml.base

The cluster domain in this manifest must match CLUSTER_DNS_DOMAIN in the kubeasz hosts file (cluster.local).

Change the image to coredns 1.9.3. The gcr image could not be pulled, so pull it from Docker Hub and push it to the private registry.
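coredns.yaml.base contains placeholders that must be filled in before it can be applied: `__DNS__DOMAIN__`, `__DNS__SERVER__` and `__DNS__MEMORY__LIMIT__`. A sed sketch follows. The DNS service IP 10.68.0.2 in the example is an assumption (the second address of SERVICE_CIDR), so confirm it against the clusterDNS setting of the kubelets. The 256Mi memory limit is only an example value. The image still has to be changed separately, as described above.

```shell
# render_coredns DOMAIN DNS_IP MEM_LIMIT : fill the placeholders of
# coredns.yaml.base read on stdin and write the result to stdout.
render_coredns() {
  sed -e "s/__DNS__DOMAIN__/$1/g" \
      -e "s/__DNS__SERVER__/$2/g" \
      -e "s/__DNS__MEMORY__LIMIT__/$3/g"
}

# Example:
#   render_coredns cluster.local 10.68.0.2 256Mi < coredns.yaml.base > coredns.yaml
```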

Verify:

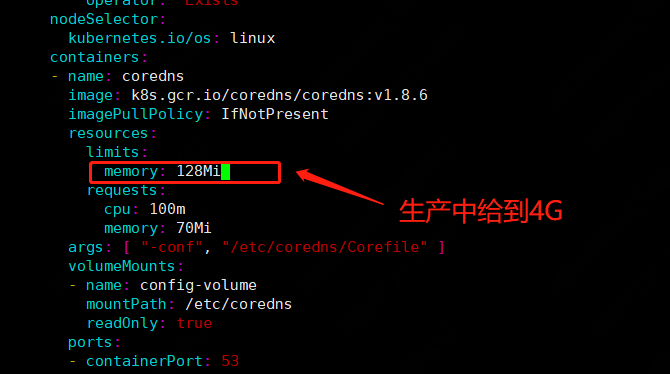

Notes for running CoreDNS in production

1. Raise the memory limit in the manifest.

2. Run multiple replicas.

Corefile explained

data:
  Corefile: |
    .:53 {
        errors #write errors to standard output
        health { #serve a CoreDNS health report at http://localhost:8080/health
            lameduck 5s
        }
        ready #listen on port 8181; returns 200 OK once all CoreDNS plugins are ready
        #kubernetes: answer DNS queries based on Kubernetes Service names and return the records to the client
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            fallthrough in-addr.arpa ip6.arpa
            ttl 30
        }
        #prometheus: expose CoreDNS metrics in Prometheus key-value format at http://localhost:9153/metrics
        prometheus :9153
        #forward: queries for any domain outside the Kubernetes cluster go to the configured upstream, such as /etc/resolv.conf or an IP (e.g. 8.8.8.8)
        #forward . 8.8.8.8 {}
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        #cache responses, TTL in seconds
        cache 30
        #detect resolution loops, e.g. CoreDNS forwards to an internal DNS server that forwards back to CoreDNS; if a loop is found the CoreDNS process exits (Kubernetes recreates it)
        loop
        #watch the Corefile for changes; after the ConfigMap is edited, the new config is reloaded gracefully, by default within about 2 minutes
        reload
        #round-robin: shuffle the order of records when a name has several
        loadbalance
    }
    #company-internal domain that a dedicated DNS server resolves
    myserver.online {
        forward . 172.16.16.16:53
    }
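With the `kubernetes cluster.local` zone above, CoreDNS answers names of the form `<service>.<namespace>.svc.cluster.local`. Because of `pods insecure`, it also answers `<dashed-pod-ip>.<namespace>.pod.cluster.local`. The small sketch below builds those names for testing resolution from a pod, for example the alpine pod net-test1 created earlier. The helper names are hypothetical.

```shell
# svc_fqdn NAME NAMESPACE [DOMAIN] : DNS name of a Service
svc_fqdn() {
  echo "${1}.${2}.svc.${3:-cluster.local}"
}

# pod_fqdn POD_IP NAMESPACE [DOMAIN] : DNS name of a pod (dots become dashes)
pod_fqdn() {
  echo "${1//./-}.${2}.pod.${3:-cluster.local}"
}

# Example:
#   kubectl exec net-test1 -- nslookup "$(svc_fqdn kubernetes default)"
```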

Using the Dashboard

- Deploy

Pull the images, then push them to the local registry:

docker pull kubernetesui/dashboard:v2.6.0

docker pull kubernetesui/metrics-scraper:v1.0.8
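The pulled images need a Harbor tag before they can be pushed. The sketch below assumes the `baseimage` project on harbor.jackedu.cn that the manifest below references, and a prior `docker login harbor.jackedu.cn`. `harbor_ref` and `push_to_harbor` are hypothetical helpers.

```shell
# harbor_ref IMAGE [REGISTRY/PROJECT] : keep the last path component of IMAGE
# and put it under the Harbor project, e.g.
# kubernetesui/dashboard:v2.6.0 -> harbor.jackedu.cn/baseimage/dashboard:v2.6.0
harbor_ref() {
  local prefix=${2:-harbor.jackedu.cn/baseimage}
  echo "${prefix}/${1##*/}"
}

# push_to_harbor IMAGE... : tag each pulled image for Harbor and push it
push_to_harbor() {
  local img dst
  for img in "$@"; do
    dst=$(harbor_ref "$img")
    docker tag "$img" "$dst" && docker push "$dst" || return 1
  done
}

# Example:
#   push_to_harbor kubernetesui/dashboard:v2.6.0 kubernetesui/metrics-scraper:v1.0.8
```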

Modify the YAML file (excerpt):

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: kubernetes-dashboard
          image: harbor.jackedu.cn/baseimage/dashboard:v2.6.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  type: NodePort #added: expose through a NodePort
  ports:
    - port: 8000
      targetPort: 8000
      nodePort: 30000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: harbor.jackedu.cn/baseimage/metrics-scraper:v1.0.8
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

kubectl apply -f dashboard-v2.6.0.yaml
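In the upstream manifest, the Dashboard UI is served by the kubernetes-dashboard Service (port 443 to container port 8443, HTTPS). The dashboard-metrics-scraper Service serves plain HTTP on 8000. If the UI should be reachable through a NodePort, you can apply a Service like the one printed below. This is a sketch. `dashboard_nodeport_svc` is a hypothetical helper, and nodePort 30443 is an arbitrary example within the NODE_PORT_RANGE from the hosts file.

```shell
# dashboard_nodeport_svc NODEPORT : print a Service manifest that exposes the
# Dashboard UI (HTTPS, container port 8443) on the given NodePort.
dashboard_nodeport_svc() {
  cat <<EOF
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: ${1}
  selector:
    k8s-app: kubernetes-dashboard
EOF
}

# Example, then browse to https://<node-ip>:30443:
#   dashboard_nodeport_svc 30443 | kubectl apply -f -
```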

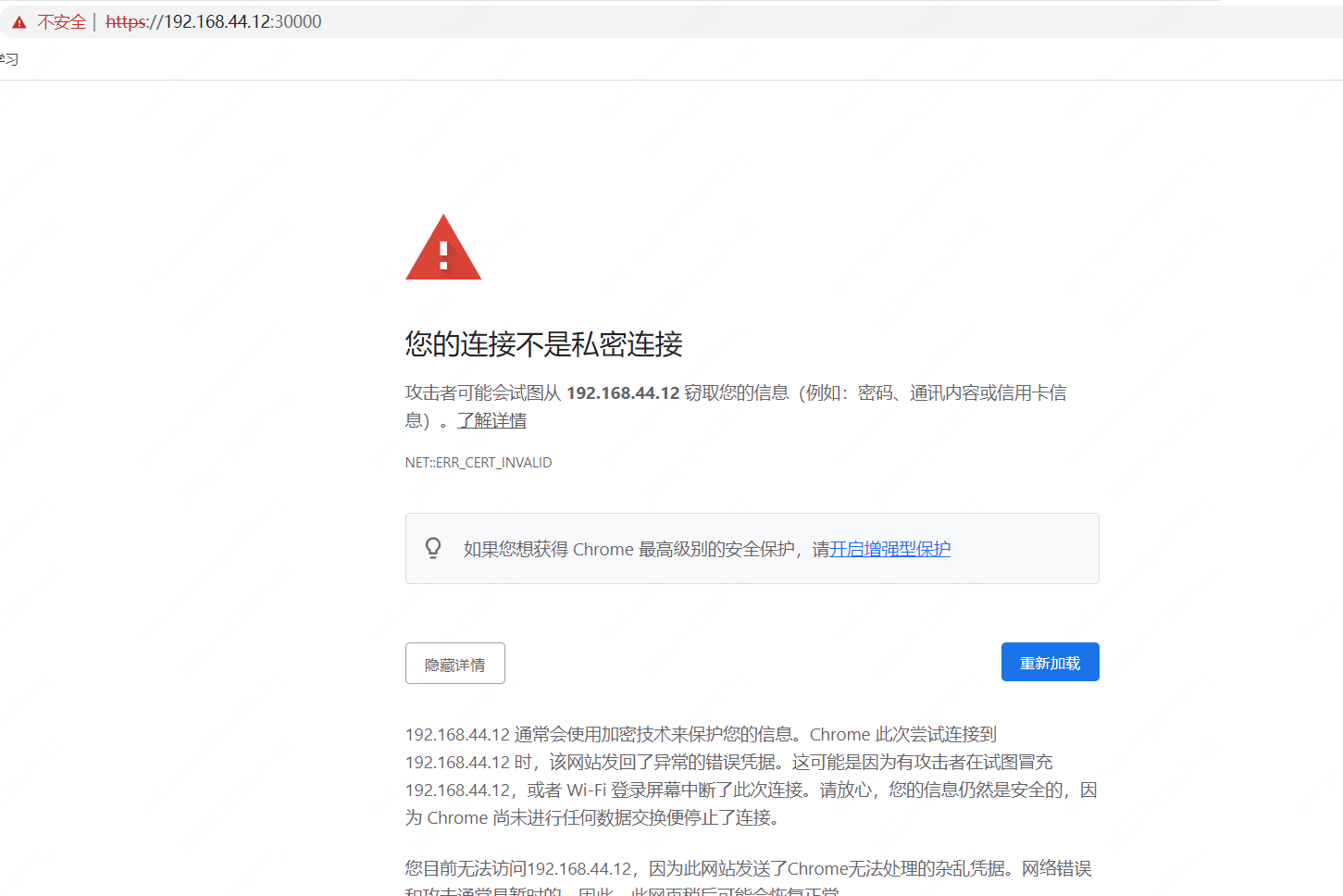

When the browser (Chrome) shows this certificate warning page, type thisisunsafe on the page to continue.

The login page then asks for a token, which you have to create yourself.

Create admin-user.yaml:

root@k8s-deploy:~/update-tools/yml# vim admin-user.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Create admin-secret.yaml:

apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: dashboard-admin-user
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: "admin-user"

root@k8s-deploy:~/update-tools/yml# kubectl apply -f admin-user.yaml
serviceaccount/admin-user created
clusterrolebinding.rbac.authorization.k8s.io/admin-user created
root@k8s-deploy:~/update-tools/yml# kubectl apply -f admin-secret.yaml

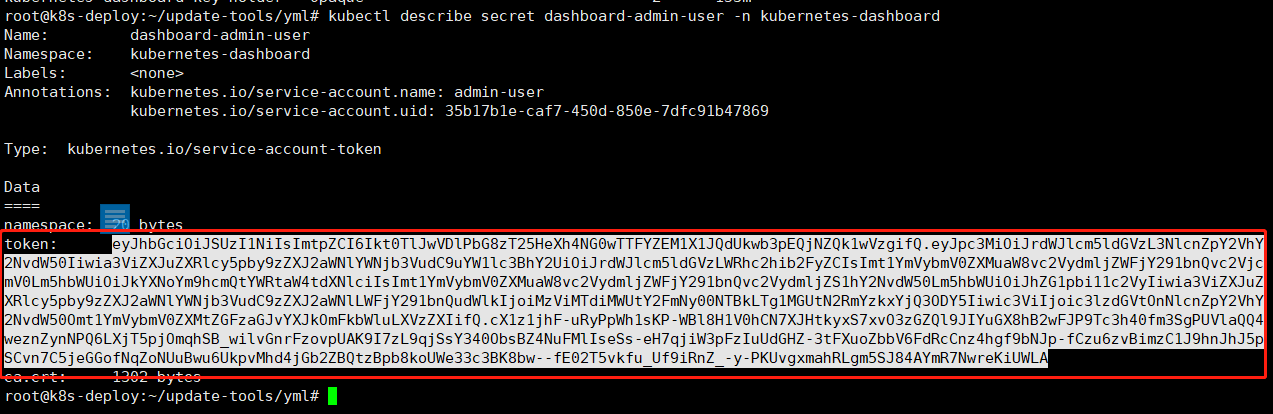

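Kubernetes 1.23 and earlier automatically create a token Secret for each ServiceAccount. Kubernetes 1.24 no longer does this, so you have to create the Secret yourself.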

Get the token:

kubectl describe secret dashboard-admin-user -n kubernetes-dashboard

Paste the token value into the login page to sign in.
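A jsonpath query retrieves and decodes the token in one step. The sketch below uses a hypothetical `dashboard_token` helper whose defaults match admin-secret.yaml. On 1.24 and later, `kubectl -n kubernetes-dashboard create token admin-user` can also issue a short-lived token without any Secret.

```shell
# dashboard_token [SECRET] [NAMESPACE] : print the decoded token stored in a
# service-account-token Secret (defaults match admin-secret.yaml).
dashboard_token() {
  local secret=${1:-dashboard-admin-user} ns=${2:-kubernetes-dashboard}
  # .data.token is base64-encoded in the Secret object
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}

# Example:
#   dashboard_token; echo
```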

浙公网安备 33010602011771号
