1-ZooKeeper: basic principles and usage

1 Distributed applications

1.1 How distributed systems work

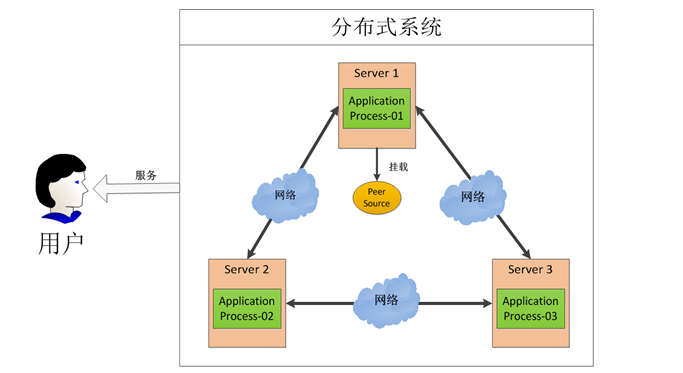

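In a network, each server runs one application, and the applications are connected to one another to form a single system. For example, a complete game service may need an authentication application, an item application, a points application and the main game application. These applications do not all run on one server; they are spread across the network and together provide the service.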

1.2 Distributed coordination

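Distributed coordination solves the problem of scheduling resources in a distributed environment, and the distributed lock is its core technique. Suppose three processes are spread across the network and share one disk. Left alone, they would contend for the disk and interfere with one another. With distributed scheduling, a scheduler lets process 1 access the disk first and places a distributed lock on the resource so that the other processes cannot access it. When process 1 has finished with the disk, the lock is released. The scheduler then assigns the resource to another process, which locks it in turn for exclusive use. In this way, access to the resource is ordered across the distributed environment.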
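ZooKeeper's documented lock recipe realizes this ordering without a central scheduler process: each contender creates an ephemeral sequential node under a lock directory, the contender with the lowest sequence number holds the lock, and deleting that node passes the lock to the next one. The sketch below only simulates that recipe in memory; `FakeLockDir`, `acquire` and `release` are hypothetical names, and no real ZooKeeper client is involved.

```python
from __future__ import annotations


class FakeLockDir:
    """In-memory stand-in for a lock parent znode such as /locks/disk (hypothetical)."""

    def __init__(self) -> None:
        self.counter = 0                    # per-parent sequence counter
        self.children: dict[str, str] = {}  # child node name -> owning process

    def create_ephemeral_sequential(self, owner: str) -> str:
        # Sequential nodes get a 10-digit, zero-padded suffix.
        name = f"lock-{self.counter:010d}"
        self.counter += 1
        self.children[name] = owner
        return name

    def delete(self, name: str) -> None:
        # Deleting the node (or the owner's session ending) releases it.
        del self.children[name]

    def holder(self) -> str | None:
        # Whoever owns the lowest sequence number holds the lock.
        if not self.children:
            return None
        return self.children[min(self.children)]


def acquire(lock_dir: FakeLockDir, owner: str) -> str:
    """Join the queue for the lock; returns the node created for `owner`."""
    return lock_dir.create_ephemeral_sequential(owner)


def release(lock_dir: FakeLockDir, node: str) -> None:
    """Give up the lock (or leave the queue) by deleting our node."""
    lock_dir.delete(node)


if __name__ == "__main__":
    disk = FakeLockDir()
    first = acquire(disk, "process1")
    acquire(disk, "process2")
    acquire(disk, "process3")
    print(disk.holder())  # process1
    release(disk, first)
    print(disk.holder())  # process2
```

A real client would not poll `holder()`: it would set a watch on the node immediately before its own, so that releasing the lock wakes only the next waiter instead of every waiter.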

1.3 Distributed lock implementations

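Google developed Chubby, a closed-source implementation. Yahoo later built ZooKeeper along the same lines, open-sourced it and donated it to Apache. ZooKeeper performs very well in terms of availability, performance and consistency.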

ZooKeeper is now widely used, and companies building distributed systems can use it as their foundation.

2 ZooKeeper configuration

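CentOS 7 installs OpenJDK by default; uninstall it, then install the JDK and configure the environment variables. (In PATH, the new Java path must come first; otherwise the old OpenJDK is picked up.)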

```
[root@yhzk02 ~]# rpm -ivh jdk-8u171-linux-x64.rpm    # install the JDK
[root@yhzk02 latest]# rpm -qa | grep openjdk
java-1.8.0-openjdk-1.8.0.131-11.b12.el7.x86_64
java-1.8.0-openjdk-headless-1.8.0.131-11.b12.el7.x86_64
[root@yhzk02 latest]# rpm -e java-1.8.0-openjdk java-1.8.0-openjdk-headless --nodeps    # uninstall OpenJDK
vim /etc/profile.d/java.sh
export JAVA_HOME=/usr/java/latest
export JRE_HOME=/usr/java/latest/jre
export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib
[root@yhzk02 latest]# . /etc/profile.d/java.sh    # load the environment and check it
[root@yhzk02 latest]# java -version
java version "1.8.0_171"
Java(TM) SE Runtime Environment (build 1.8.0_171-b11)
Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)
```

The ZooKeeper package needs no installation: put it in /usr/local and unpack it there. Then write a conf/zoo.cfg file.

```
[root@yhzk02 zookeeper-3.4.11]# cat /etc/hosts    # set up /etc/hosts first
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.10.12 yhzk01
192.168.10.13 yhzk02
192.168.10.14 yhzk03
```

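```
# then write conf/zoo.cfg
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/zookeeperdata
clientPort=2181
server.1=yhzk01:2888:3888
server.2=yhzk02:2888:3888
server.3=yhzk03:2888:3888

# tickTime: basic time unit in milliseconds, used for heartbeats; the minimum session timeout is twice tickTime.
# initLimit: ticks a follower may take to connect to and sync with the leader (10 x 2000 ms = 20 s here).
# syncLimit: ticks a follower may fall behind the leader before it is dropped (5 x 2000 ms = 10 s here).
# dataDir: where snapshots of the in-memory database are stored (the myid file also lives here).
# clientPort: port that listens for client connections.
# server.id=hostname:port1:port2: port1 (2888) is for follower-to-leader communication, port2 (3888) is for leader election.
```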
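Because most of these limits are counted in ticks, it can help to convert them into wall-clock times. The sketch below parses zoo.cfg-style text with plain Python; `parse_zoo_cfg`, `derived_timeouts` and `quorum_servers` are hypothetical helpers, and the session-timeout bounds assume ZooKeeper's documented defaults (2 x tickTime minimum, 20 x tickTime maximum) when minSessionTimeout and maxSessionTimeout are not set, which is what "set to -1" in the startup log indicates.

```python
from __future__ import annotations

# The zoo.cfg used in this article, as a string for the example.
SAMPLE_CFG = """\
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/zookeeperdata
clientPort=2181
server.1=yhzk01:2888:3888
server.2=yhzk02:2888:3888
server.3=yhzk03:2888:3888
"""


def parse_zoo_cfg(text: str) -> dict[str, str]:
    """Parse key=value lines, ignoring blank lines and # comments."""
    cfg: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if line:
            key, _, value = line.partition("=")
            cfg[key.strip()] = value.strip()
    return cfg


def derived_timeouts(cfg: dict[str, str]) -> dict[str, int]:
    """Convert tick-based limits into milliseconds."""
    tick = int(cfg["tickTime"])
    return {
        "initLimit_ms": int(cfg["initLimit"]) * tick,  # follower connect + sync
        "syncLimit_ms": int(cfg["syncLimit"]) * tick,  # max follower lag
        # Assumed defaults when not configured: 2x and 20x tickTime.
        "minSessionTimeout_ms": int(cfg.get("minSessionTimeout", 2 * tick)),
        "maxSessionTimeout_ms": int(cfg.get("maxSessionTimeout", 20 * tick)),
    }


def quorum_servers(cfg: dict[str, str]) -> dict[int, tuple[str, int, int]]:
    """Map server id -> (host, quorum port, election port) from server.N lines."""
    servers: dict[int, tuple[str, int, int]] = {}
    for key, value in cfg.items():
        if key.startswith("server."):
            host, quorum_port, election_port = value.split(":")[:3]
            servers[int(key.split(".", 1)[1])] = (host, int(quorum_port), int(election_port))
    return servers


if __name__ == "__main__":
    cfg = parse_zoo_cfg(SAMPLE_CFG)
    print(derived_timeouts(cfg))
    print(quorum_servers(cfg)[2])
```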

```
[root@yhzk01 /]# cat /zookeeperdata/myid    # create a myid file in dataDir on every cluster node
1
```
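The number in myid has to match the N of the server.N line that names the host. A minimal sketch that derives it from zoo.cfg text and writes the file; `myid_for_host` and `write_myid` are hypothetical helpers, and the dataDir path is whatever your zoo.cfg sets (/zookeeperdata in this article).

```python
from __future__ import annotations

import re
from pathlib import Path

# Matches "server.N=host:..." and captures N and host.
SERVER_LINE = re.compile(r"^\s*server\.(\d+)\s*=\s*([^:\s]+):")


def myid_for_host(cfg_text: str, hostname: str) -> int:
    """Return N from the server.N line that names `hostname`."""
    for line in cfg_text.splitlines():
        match = SERVER_LINE.match(line)
        if match and match.group(2) == hostname:
            return int(match.group(1))
    raise ValueError(f"{hostname} is not listed in zoo.cfg")


def write_myid(data_dir: str, myid: int) -> Path:
    """Write the id into <dataDir>/myid, e.g. /zookeeperdata/myid."""
    path = Path(data_dir) / "myid"
    path.write_text(f"{myid}\n")
    return path
```

On yhzk02, for example, `write_myid("/zookeeperdata", myid_for_host(cfg_text, "yhzk02"))` would write 2.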

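Then start each node with zkServer.sh start, in myid order (1 -> 2 -> 3). Strictly speaking the order does not matter: a leader is elected as soon as a majority of the servers is running.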
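The startup log reports "Defaulting to majority quorums", meaning the ensemble can elect a leader and serve requests only while more than half of its servers are up. The arithmetic, as a small sketch (the function names are illustrative):

```python
def quorum_size(ensemble: int) -> int:
    """Smallest number of servers that forms a strict majority."""
    return ensemble // 2 + 1


def tolerated_failures(ensemble: int) -> int:
    """How many servers can fail while a majority still remains."""
    return ensemble - quorum_size(ensemble)


for n in (3, 4, 5):
    print(n, quorum_size(n), tolerated_failures(n))
# 3 2 1
# 4 3 1
# 5 3 2
```

With the three servers used here, any two form a quorum and one failure is tolerated; a fourth server would raise the quorum to three without tolerating any extra failure, which is why odd-sized ensembles are the usual choice.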

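After startup, a zookeeper.out file appears in the directory zkServer.sh was run from (bin/ here); it is ZooKeeper's log. With a correct configuration the output looks like this: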

```
2018-04-20 09:47:31,682 [myid:] - INFO [main:QuorumPeerConfig@136] - Reading configuration from: /usr/local/zookeeper-3.4.11/bin/../conf/zoo.cfg
2018-04-20 09:47:31,703 [myid:] - INFO [main:QuorumPeer$QuorumServer@184] - Resolved hostname: yhzk01 to address: yhzk01/192.168.10.12
2018-04-20 09:47:31,703 [myid:] - INFO [main:QuorumPeer$QuorumServer@184] - Resolved hostname: yhzk03 to address: yhzk03/192.168.10.14
2018-04-20 09:47:31,704 [myid:] - INFO [main:QuorumPeer$QuorumServer@184] - Resolved hostname: yhzk02 to address: yhzk02/192.168.10.13
2018-04-20 09:47:31,704 [myid:] - INFO [main:QuorumPeerConfig@398] - Defaulting to majority quorums
2018-04-20 09:47:31,707 [myid:2] - INFO [main:DatadirCleanupManager@78] - autopurge.snapRetainCount set to 3
2018-04-20 09:47:31,707 [myid:2] - INFO [main:DatadirCleanupManager@79] - autopurge.purgeInterval set to 0
2018-04-20 09:47:31,707 [myid:2] - INFO [main:DatadirCleanupManager@101] - Purge task is not scheduled.
2018-04-20 09:47:31,726 [myid:2] - INFO [main:QuorumPeerMain@130] - Starting quorum peer
2018-04-20 09:47:31,739 [myid:2] - INFO [main:ServerCnxnFactory@117] - Using org.apache.zookeeper.server.NIOServerCnxnFactory as server connection factory
2018-04-20 09:47:31,743 [myid:2] - INFO [main:NIOServerCnxnFactory@89] - binding to port 0.0.0.0/0.0.0.0:2181
2018-04-20 09:47:31,751 [myid:2] - INFO [main:QuorumPeer@1158] - tickTime set to 2000
2018-04-20 09:47:31,751 [myid:2] - INFO [main:QuorumPeer@1204] - initLimit set to 10
2018-04-20 09:47:31,752 [myid:2] - INFO [main:QuorumPeer@1178] - minSessionTimeout set to -1
2018-04-20 09:47:31,752 [myid:2] - INFO [main:QuorumPeer@1189] - maxSessionTimeout set to -1
2018-04-20 09:47:31,763 [myid:2] - INFO [main:QuorumPeer@1467] - QuorumPeer communication is not secured!
2018-04-20 09:47:31,764 [myid:2] - INFO [main:QuorumPeer@1496] - quorum.cnxn.threads.size set to 20
2018-04-20 09:47:31,779 [myid:2] - INFO [ListenerThread:QuorumCnxManager$Listener@736] - My election bind port: yhzk02/192.168.10.13:3888
2018-04-20 09:47:31,797 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:QuorumPeer@909] - LOOKING
2018-04-20 09:47:31,798 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:FastLeaderElection@820] - New election. My id = 2, proposed zxid=0x0

2018-04-20 10:02:15,286 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:QuorumPeer$QuorumServer@184] - Resolved hostname: yhzk03 to address: yhzk03/192.168.10.14
2018-04-20 10:02:15,286 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:FastLeaderElection@854] - Notification time out: 60000
2018-04-20 10:02:44,463 [myid:2] - INFO [yhzk02/192.168.10.13:3888:QuorumCnxManager$Listener@743] - Received connection request /192.168.10.12:33318
2018-04-20 10:02:44,561 [myid:2] - INFO [WorkerReceiver[myid=2]:FastLeaderElection@602] - Notification: 1 (message format version), 1 (n.leader), 0x0 (n.zxid), 0x1 (n.round), LOOKING (n.state), 1 (n.sid), 0x0 (n.peerEpoch) LOOKING (my state)
2018-04-20 10:02:44,561 [myid:2] - INFO [WorkerReceiver[myid=2]:FastLeaderElection@602] - Notification: 1 (message format version), 3 (n.leader), 0x0 (n.zxid), 0x1 (n.round), LOOKING (n.state), 1 (n.sid), 0x0 (n.peerEpoch) LOOKING (my state)
2018-04-20 10:02:44,577 [myid:2] - INFO [WorkerSender[myid=2]:QuorumCnxManager@347] - Have smaller server identifier, so dropping the connection: (3, 2)
2018-04-20 10:02:44,578 [myid:2] - INFO [WorkerReceiver[myid=2]:FastLeaderElection@602] - Notification: 1 (message format version), 3 (n.leader), 0x0 (n.zxid), 0x1 (n.round), LOOKING (n.state), 2 (n.sid), 0x0 (n.peerEpoch) LOOKING (my state)
2018-04-20 10:02:44,624 [myid:2] - INFO [yhzk02/192.168.10.13:3888:QuorumCnxManager$Listener@743] - Received connection request /192.168.10.14:56120
2018-04-20 10:02:44,680 [myid:2] - INFO [WorkerReceiver[myid=2]:FastLeaderElection@602] - Notification: 1 (message format version), 3 (n.leader), 0x0 (n.zxid), 0x1 (n.round), LOOKING (n.state), 3 (n.sid), 0x0 (n.peerEpoch) LOOKING (my state)
2018-04-20 10:02:44,888 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:QuorumPeer@979] - FOLLOWING
2018-04-20 10:02:44,901 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:Learner@86] - TCP NoDelay set to: true
2018-04-20 10:02:44,927 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:Environment@100] - Server environment:zookeeper.version=3.4.11-37e277162d567b55a07d1755f0b31c32e93c01a0, built on 11/01/2017 18:06 GMT
2018-04-20 10:02:44,927 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:Environment@100] - Server environment:host.name=yhzk02
2018-04-20 10:02:44,927 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:Environment@100] - Server environment:java.version=1.8.0_171
2018-04-20 10:02:44,928 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:Environment@100] - Server environment:java.vendor=Oracle Corporation
2018-04-20 10:02:44,928 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:Environment@100] - Server environment:java.home=/usr/java/jdk1.8.0_171-amd64/jre
2018-04-20 10:02:44,928 [myid:2] - INFO [QuorumPeer[myid=2]/0:0:0:0:0:0:0:0:2181:Environment@100] - Server environment:java.class.path=/usr/local/zookeeper-3.4.11/bin/../build/classes:/usr/local/zookeeper-3.4.11/bin/../build/lib/*.jar:/usr/local/zookeeper-3.4.11/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/zookeeper-3.4.11/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/zookeeper-3.4.11/bin/../lib/netty-3.10.5.Final.jar:/usr/local/zookeeper-3.4.11/bin/../lib/log4j-1.2.16.jar:/usr/local/zookeeper-3.4.11/bin/../lib/jline-0.9.94.jar:/usr/local/zookeeper-3.4.11/bin/../lib/audience-annotations-0.5.0.jar:/usr/local/zookeeper-3.4.11/bin/../zookeeper-3.4.11.jar:/usr/local/zookeeper-3.4.11/bin/../src/java/lib/*.jar:/usr/local/zookeeper-3.4.11/bin/../conf:.:/usr/java/latest/lib/dt.jar:/usr/java/latest/lib/tools.jar:/usr/java/latest/jre/lib
```
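In the Notification lines above, every server proposes zxid 0x0, the votes converge on server 3 (n.leader = 3), and myid 2 then switches to FOLLOWING. That matches how FastLeaderElection ranks two votes: higher epoch first, then higher zxid, then higher server id. The sketch below shows only that ordering; `Vote`, `better_vote` and `converge` are illustrative names, not ZooKeeper classes, and it ignores election rounds and the requirement that a majority agree on the winner.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    leader: int      # proposed leader's server id (n.leader)
    zxid: int        # proposed leader's last zxid (n.zxid)
    peer_epoch: int  # proposed leader's epoch (n.peerEpoch)


def better_vote(new: Vote, cur: Vote) -> bool:
    """True if `new` outranks `cur`: compare epoch, then zxid, then server id."""
    return (new.peer_epoch, new.zxid, new.leader) > (cur.peer_epoch, cur.zxid, cur.leader)


def converge(votes: list[Vote]) -> Vote:
    """Start from the first vote and adopt every better vote that arrives."""
    best = votes[0]
    for vote in votes[1:]:
        if better_vote(vote, best):
            best = vote
    return best


if __name__ == "__main__":
    # All three servers start empty (zxid 0x0), as in the log above.
    fresh = [Vote(1, 0x0, 0), Vote(2, 0x0, 0), Vote(3, 0x0, 0)]
    print(converge(fresh).leader)  # 3
```

If one server had seen more transactions, its higher zxid would outrank a higher server id, so the most up-to-date server wins.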

4 ZooKeeper tools

4.1 Start, stop and status:

zkServer.sh {start|stop|status}

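Connecting with telnet and sending the stat command shows more information.

Clients lists the clients that hold long-lived TCP connections, together with their queued, received and sent packet counts.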

```
[root@yhzk01 bin]# telnet 127.0.0.1 2181
stat
Zookeeper version: 3.4.11-37e277162d567b55a07d1755f0b31c32e93c01a0, built on 11/01/2017 18:06 GMT
Clients:
 /127.0.0.1:56962[0](queued=0,recved=1,sent=0)
Latency min/avg/max: 0/0/0
Received: 5
Sent: 4
Connections: 1
Outstanding: 0
Zxid: 0x0
Mode: follower
Node count: 4
```
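The same stat request can be scripted without telnet: the four-letter command is written to the client port as plain bytes, and the server replies with text and closes the connection. A sketch using Python's standard socket module; `send_4lw` and `parse_stat` are hypothetical helpers. Newer ZooKeeper releases restrict four-letter commands by default, so stat may need to be added to 4lw.commands.whitelist there.

```python
from __future__ import annotations

import socket


def send_4lw(host: str, port: int, cmd: str = "stat", timeout: float = 5.0) -> str:
    """Send a four-letter word such as 'stat' and return the whole reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd.encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_stat(reply: str) -> dict[str, str]:
    """Collect 'Key: value' lines from a stat reply; client lines are skipped."""
    fields: dict[str, str] = {}
    for line in reply.splitlines():
        if line.startswith((" ", "/")) or ": " not in line:
            continue
        key, _, value = line.partition(": ")
        fields[key.strip()] = value.strip()
    return fields
```

For example, `parse_stat(send_4lw("127.0.0.1", 2181))["Mode"]` run on yhzk01 would return the same Mode value as the telnet session (follower).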

4.2 The zkCli tool

The servers in the cluster stay in sync: a change made through one ZooKeeper server is replicated to the other two.

./zkCli.sh -server 127.0.0.1:2181 #specify the server and port

4.2.1 Listing the tree, checking node status and setting a WATCHER

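```
[zk: localhost:2181(CONNECTED) 0] ls /
[zookeeper]
# list the children of /

[zk: localhost:2181(CONNECTED) 27] ls /worker
[process3, process1, process2]
# list the children of /worker

[zk: localhost:2181(CONNECTED) 30] ls /worker/process1 true
[121]
[zk: localhost:2181(CONNECTED) 31]
WATCHER::
WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/worker/process1
# watch the children of /worker/process1: when a child is created through
# another server (another myid), this session receives the WATCHER event

[zk: localhost:2181(CONNECTED) 18] stat /yinhe    # show a node's status
cZxid = 0x500000003
ctime = Thu Apr 26 11:06:17 CST 2018    # node creation time
mZxid = 0x500000003
mtime = Thu Apr 26 11:06:17 CST 2018    # node modification time
pZxid = 0x500000003
cversion = 0
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 0
numChildren = 0    # number of direct children (the tree can have several levels, like a directory tree)
# Appending true (stat path true) also sets a watch, which is useful for ephemeral
# nodes: in the cluster, a client on id2 can watch a node created through id1, and
# when the client that created it goes away, the node is removed and id2 is notified.
```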
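A watch like the one set with ls /worker/process1 true is a one-time trigger: ZooKeeper delivers the event once and then drops the watch, so a client that wants to keep observing the node must set the watch again (for instance by running ls ... true again). A minimal in-memory sketch of that behaviour; `FakeTree`, `get_children` and `create` are hypothetical names, not the ZooKeeper client API.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable

Watcher = Callable[[str, str], None]  # (event type, path) -> None


class FakeTree:
    """Children and child watches per path, kept in memory."""

    def __init__(self) -> None:
        self.children: dict[str, set[str]] = defaultdict(set)
        self.child_watches: dict[str, list[Watcher]] = defaultdict(list)

    def get_children(self, path: str, watcher: Watcher | None = None) -> list[str]:
        # Like "ls path true": read the children and optionally leave a watch.
        if watcher is not None:
            self.child_watches[path].append(watcher)
        return sorted(self.children[path])

    def create(self, parent: str, name: str) -> None:
        self.children[parent].add(name)
        # Fire and remove the watches, so each one triggers at most once.
        for watcher in self.child_watches.pop(parent, []):
            watcher("NodeChildrenChanged", parent)
```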

4.2.2 Create

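```
[zk: localhost:2181(CONNECTED) 42] create -s /worker/process1/123 'i am auth process'
Created /worker/process1/1230000000003
# create a sequential child node: ZooKeeper appends a unique, increasing number,
# e.g. for a process named 123 that needs many threads, each getting its own node

[zk: localhost:2181(CONNECTED) 0] create -e /worker/process1/124 ''
Created /worker/process1/124
# create an ephemeral node: the client registers it with ZooKeeper, and the node
# disappears as soon as the client's session is lost
```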
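The suffix in 1230000000003 is a 10-digit, zero-padded counter kept by the parent node, and an ephemeral node lives only as long as the session that created it. Both rules in a small in-memory sketch; `FakeParent`, `create` and `close_session` are hypothetical names, and the counter is simplified to advance on every child create (in ZooKeeper it follows the parent's child version, which also changes on deletes).

```python
from __future__ import annotations


class FakeParent:
    """Children of one znode, e.g. /worker/process1 (in-memory stand-in)."""

    def __init__(self) -> None:
        self.counter = 0  # simplified sequence counter for this parent
        # child name -> owning session id, or None for a persistent node
        self.nodes: dict[str, int | None] = {}

    def create(self, name: str, sequential: bool = False,
               ephemeral_session: int | None = None) -> str:
        if sequential:
            name = f"{name}{self.counter:010d}"  # e.g. 123 -> 1230000000003
        self.counter += 1
        self.nodes[name] = ephemeral_session
        return name

    def close_session(self, session: int) -> None:
        # Ephemeral nodes vanish when the session that owns them ends.
        self.nodes = {n: s for n, s in self.nodes.items() if s != session}
```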

4.2.3 Delete

[zk: localhost:2181(CONNECTED) 40] delete /worker/process1/123 #delete a node
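delete only removes a node that has no children, and it accepts an optional expected version (delete path [version] in the 3.4 zkCli, with rmr for recursive removal). A sketch of those two checks against a hypothetical in-memory tree; `delete_node` and the exception classes are illustrative, modeled on ZooKeeper's NotEmpty and BadVersion errors, and version -1 means "any version" as in ZooKeeper.

```python
from __future__ import annotations


class NotEmptyError(Exception):
    """Raised when deleting a node that still has children."""


class BadVersionError(Exception):
    """Raised when the expected version does not match dataVersion."""


def delete_node(tree: dict[str, int], path: str, version: int = -1) -> None:
    """Delete `path` from `tree`, a mapping of node path -> dataVersion."""
    if path not in tree:
        raise KeyError(path)  # NoNode in ZooKeeper
    prefix = path.rstrip("/") + "/"
    if any(other.startswith(prefix) for other in tree):
        raise NotEmptyError(path)
    if version != -1 and tree[path] != version:
        raise BadVersionError(f"{path}: expected {version}, found {tree[path]}")
    del tree[path]
```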

4.2.4 Read

```
[zk: localhost:2181(CONNECTED) 3] get /worker/process1/1250000000005
wip="60.123.44.30";lip="192.168.10.3"
cZxid = 0x600000008
ctime = Fri Apr 27 10:12:33 CST 2018
mZxid = 0x600000008
mtime = Fri Apr 27 10:12:33 CST 2018
pZxid = 0x600000008
cversion = 0
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 37
numChildren = 0
# read a node's content; note dataLength = 37, the size of the data in bytes
```
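Two fields in this output can be checked by hand. A zxid packs the leader epoch into its high 32 bits and a per-epoch counter into its low 32 bits, so cZxid 0x600000008 is transaction 8 of epoch 6; dataLength is the byte length of the stored data. A small sketch (`split_zxid` is a hypothetical helper):

```python
def split_zxid(zxid: int) -> tuple[int, int]:
    """Return (epoch, counter): epoch in the high 32 bits, counter in the low 32."""
    return zxid >> 32, zxid & 0xFFFFFFFF


data = 'wip="60.123.44.30";lip="192.168.10.3"'
print(split_zxid(0x600000008))    # (6, 8)
print(len(data.encode("utf-8")))  # 37, matching dataLength = 37
```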

4.2.5 Update

[zk: localhost:2181(CONNECTED) 4] set /worker/process1/1250000000005 'wip="60.123.44.30";lip="192.168.10.4"'
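Each successful set increments the node's dataVersion (running get again after the set above should show dataVersion = 1), and set also takes an optional expected version (set path data [version] in the 3.4 zkCli), so a write fails if someone else changed the node first. A sketch of that compare-and-set behaviour on a hypothetical in-memory node; `Node`, `set_data` and `BadVersionError` are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass


class BadVersionError(Exception):
    """Raised when the expected version does not match dataVersion."""


@dataclass
class Node:
    data: bytes
    data_version: int = 0


def set_data(node: Node, data: bytes, version: int = -1) -> int:
    """Replace node.data; version=-1 skips the check. Returns the new dataVersion."""
    if version != -1 and version != node.data_version:
        raise BadVersionError(f"expected {version}, found {node.data_version}")
    node.data = data
    node.data_version += 1
    # ZooKeeper also updates mZxid and mtime here; omitted in this sketch.
    return node.data_version
```

Passing the version read from a previous get makes concurrent updates safe: the slower writer gets a version error and can re-read and retry instead of silently overwriting.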

浙公网安备 33010602011771号
