Creating RAID10 on Linux: A Hands-On Walkthrough

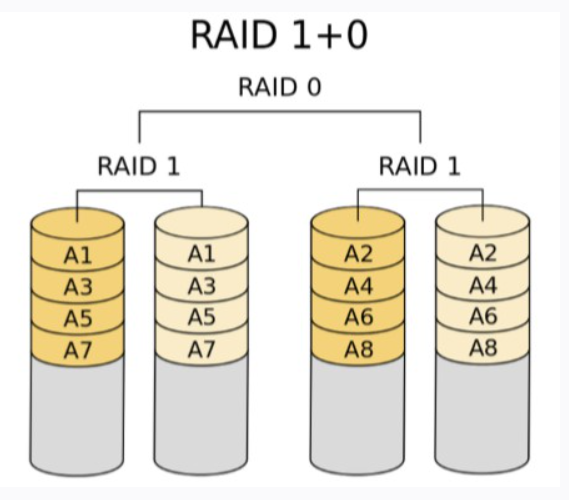

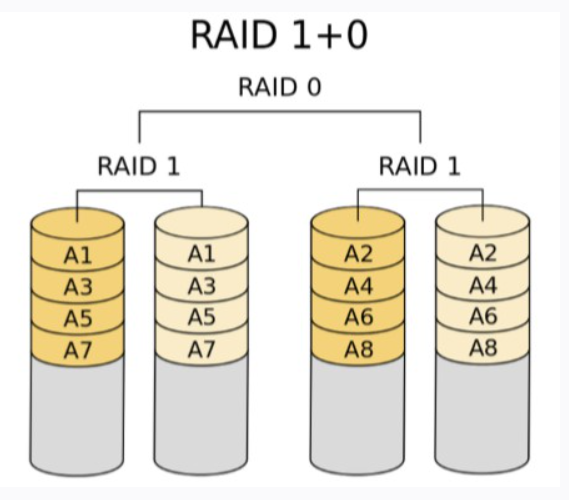

- RAID10 first mirrors the data and then stripes it: the RAID1 layer provides redundancy, while the RAID0 layer spreads reads and writes across the mirrors

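- It needs at least four disks: pair them up into RAID1 mirrors, then build a RAID0 stripe across the mirrors. Like RAID1, RAID10 has low storage efficiency, with only 50% of the raw capacity usable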

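- RAID10 gives up 50% of the raw disk capacity. In return it offers roughly twice the throughput of a single mirror (an idealized figure for a stripe across two mirrors) and survives the loss of any single disk. It also survives several simultaneous failures, as long as the failed disks do not belong to the same RAID1 pair. RAID10 generally performs better than RAID5, particularly for writes, because there is no parity to compute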
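The capacity and fault-tolerance rules in the list above can be checked with a little shell arithmetic. This is only an illustrative sketch: the function names `raid10_usable` and `raid10_survives` are made up for this example, and it assumes equal-sized disks paired in order (disk 0 with disk 1, disk 2 with disk 3), which matches the layout built later in this walkthrough.

```shell
#!/bin/sh
# Usable capacity of a RAID10 built from N equal disks: half the raw total.
# (Hypothetical helper, for illustration only.)
raid10_usable() {
    disks=$1; size_gib=$2
    echo $(( disks / 2 * size_gib ))
}

# Decide whether the array survives a given set of failed disks.
# Disks are numbered from 0 and paired in order: (0,1), (2,3), ...
# Data is lost only when both members of one pair have failed.
raid10_survives() {
    seen=""
    for d in "$@"; do
        pair=$(( d / 2 ))
        case " $seen " in
            *" $pair "*) echo "lost"; return 1 ;;
        esac
        seen="$seen $pair"
    done
    echo "ok"
}

raid10_usable 4 10            # four 10 GiB disks
raid10_survives 0 2           # one disk from each mirror has failed
raid10_survives 0 1 || true   # both disks of the first mirror have failed
```

With four 10 GiB disks the usable capacity is 20 GiB, which is the size the finished array reports at the end of this walkthrough.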

Create a RAID10 array, format it, and mount it

- Add four virtual disks and partition each one, setting the partition type ID to fd (Linux raid autodetect)

[root@localhost ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 10G 0 disk

sdb 8:16 0 10G 0 disk

sdc 8:32 0 10G 0 disk

sdd 8:48 0 10G 0 disk

sr0 11:0 1 7.3G 0 rom

nvme0n1 259:0 0 80G 0 disk

├─nvme0n1p1 259:1 0 1G 0 part /boot

└─nvme0n1p2 259:2 0 79G 0 part

├─rhel-root 253:0 0 50G 0 lvm /

├─rhel-swap 253:1 0 2G 0 lvm [SWAP]

└─rhel-home 253:2 0 27G 0 lvm /home

[root@localhost ~]# fdisk -l | grep raid

/dev/sda1 2048 20971519 20969472 10G fd Linux raid autodetect

/dev/sdb1 2048 20971519 20969472 10G fd Linux raid autodetect

/dev/sdc1 2048 20971519 20969472 10G fd Linux raid autodetect

/dev/sdd1 2048 20971519 20969472 10G fd Linux raid autodetect
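The transcript shows the partitions after they were created, but not the commands that created them. One possible way to script that step is with `sfdisk` from util-linux. The sketch below only prints the commands rather than running them, since they are destructive. The helper name `print_partition_cmds` is hypothetical, and the choice of `sfdisk` (rather than interactive `fdisk`) is an assumption, not what the original author necessarily used.

```shell
#!/bin/sh
# Print (do not run) one sfdisk command per disk. Each printed command would
# write an MBR (dos) label with a single partition spanning the whole disk,
# typed fd (Linux raid autodetect). Review carefully before executing any of
# them: they overwrite the existing partition table.
print_partition_cmds() {
    for disk in "$@"; do
        printf "echo 'type=fd' | sfdisk --label dos %s\n" "$disk"
    done
}

print_partition_cmds /dev/sda /dev/sdb /dev/sdc /dev/sdd
```

Afterwards, `fdisk -l | grep raid` should list one `fd` partition per disk, as in the output above.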

- Create two RAID1 arrays, without hot spares

[root@localhost ~]# mdadm -C -v /dev/md101 -l1 -n2 /dev/sd{a,b}1

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 10475520K

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md101 started.

[root@localhost ~]# mdadm -C -v /dev/md102 -l1 -n2 /dev/sd{c,d}1

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

mdadm: size set to 10475520K

Continue creating array? y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md102 started.
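The short flags used above are compact but easy to misread: `-C` is `--create`, `-v` is `--verbose`, `-l1` is `--level=1`, and `-n2` is `--raid-devices=2`. As a minimal sketch, the hypothetical helper `mirror_create_cmd` below prints the equivalent long-option command for each mirror without executing it.

```shell
#!/bin/sh
# Build the long-option form of the mirror-creation commands used above.
# The command is printed, not executed, so this is safe to try anywhere.
mirror_create_cmd() {
    md=$1; shift
    # "$#" is the number of member devices left after the array name.
    printf 'mdadm --create --verbose %s --level=1 --raid-devices=%d' "$md" "$#"
    for dev in "$@"; do
        printf ' %s' "$dev"
    done
    printf '\n'
}

mirror_create_cmd /dev/md101 /dev/sda1 /dev/sdb1
mirror_create_cmd /dev/md102 /dev/sdc1 /dev/sdd1
```

For unattended runs, the mdadm manual describes `--run` as suppressing the confirmation question; whether that is appropriate depends on how sure you are about the member devices.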

- Check the array status in /proc/mdstat

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1]

md102 : active raid1 sdd1[1] sdc1[0]

10475520 blocks super 1.2 [2/2] [UU]

md101 : active raid1 sdb1[1] sda1[0]

10475520 blocks super 1.2 [2/2] [UU]

unused devices: <none>
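In the output above, `[2/2] [UU]` means both members of each mirror are up; a missing member shows as `_` in place of a `U`. A small sketch of how that check could be automated follows. The helper name `degraded_arrays` is hypothetical, and the degraded sample text is invented for the demonstration (it is not output from this system).

```shell
#!/bin/sh
# Read /proc/mdstat-style text on stdin and print the name of every array
# whose member map (for example [UU]) contains "_", i.e. a missing member.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }
        match($0, /\[[U_]+\]/) {
            if (index(substr($0, RSTART, RLENGTH), "_") > 0)
                print name
        }
    '
}

# Invented sample: md102 is healthy, md101 has lost its first member.
sample='md102 : active raid1 sdd1[1] sdc1[0]
      10475520 blocks super 1.2 [2/2] [UU]
md101 : active raid1 sdb1[1]
      10475520 blocks super 1.2 [2/1] [_U]'

printf '%s\n' "$sample" | degraded_arrays
```

On a live system the same function can be fed the real file with `degraded_arrays < /proc/mdstat`; no output means no array is degraded.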

- View the details of the two RAID1 arrays

[root@localhost ~]# mdadm -D /dev/md101

/dev/md101:

Version : 1.2

Creation Time : Mon Dec 21 23:17:00 2020

Raid Level : raid1

Array Size : 10475520 (9.99 GiB 10.73 GB)

Used Dev Size : 10475520 (9.99 GiB 10.73 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Mon Dec 21 23:17:53 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:101 (local to host localhost)

UUID : 6edfc41b:6bb36e51:e0f130aa:d279835f

Events : 17

Number Major Minor RaidDevice State

0 8 1 0 active sync /dev/sda1

1 8 17 1 active sync /dev/sdb1

[root@localhost ~]# mdadm -D /dev/md102

/dev/md102:

Version : 1.2

Creation Time : Mon Dec 21 23:17:47 2020

Raid Level : raid1

Array Size : 10475520 (9.99 GiB 10.73 GB)

Used Dev Size : 10475520 (9.99 GiB 10.73 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Mon Dec 21 23:18:40 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Consistency Policy : resync

Name : localhost:102 (local to host localhost)

UUID : 0652afc7:eef07cc0:f27498fc:375620b9

Events : 17

Number Major Minor RaidDevice State

0 8 33 0 active sync /dev/sdc1

1 8 49 1 active sync /dev/sdd1
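The `mdadm -D` report is a list of `Key : value` lines, so individual fields such as `State` or `Raid Level` are easy to pull out in a script. The following is a minimal sketch; `detail_field` is a hypothetical helper, and the sample lines are copied from the report above.

```shell
#!/bin/sh
# Extract one "Key : value" field from `mdadm -D` output read on stdin.
# Leading and trailing whitespace is trimmed from both key and value.
detail_field() {
    awk -F ' : ' -v key="$1" '
        {
            k = $1; v = $2
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
        }
        k == key { print v; exit }
    '
}

# A few lines taken from the md101 report above.
printf '%s\n' \
    '        Raid Level : raid1' \
    '             State : clean' \
    '    Active Devices : 2' | detail_field State
```

On a live system this would be used as `mdadm -D /dev/md101 | detail_field State`.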

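- Create the RAID10 by building a RAID0 stripe across the two RAID1 arrays (a nested RAID 1+0, which is why mdadm reports the level of md10 as raid0)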

[root@localhost ~]# mdadm -C -v /dev/md10 -l0 -n2 /dev/md10{1,2}

mdadm: chunk size defaults to 512K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md10 started.
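mdadm picked a default chunk size of 512K for the stripe. As a rough illustration of what that means, the sketch below maps an offset within md10 to the mirror that stores it. It assumes plain round-robin striping across two equal-sized members, and the helper name `member_for_offset_kib` is hypothetical.

```shell
#!/bin/sh
CHUNK_KIB=512   # chunk size reported by mdadm above
MEMBERS=2       # md101 (member 0) and md102 (member 1)

# Print which member holds the data at a given offset (in KiB) of the
# striped device, assuming simple round-robin placement of chunks.
member_for_offset_kib() {
    echo $(( $1 / CHUNK_KIB % MEMBERS ))
}

member_for_offset_kib 0      # first chunk
member_for_offset_kib 512    # second chunk
member_for_offset_kib 1024   # third chunk wraps back to the first member
```

Consecutive 512 KiB chunks alternate between the two mirrors, which is where the throughput gain of the RAID0 layer comes from.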

- Check the array status in /proc/mdstat again

[root@localhost ~]# cat /proc/mdstat

Personalities : [raid1] [raid0]

md10 : active raid0 md102[1] md101[0]

20932608 blocks super 1.2 512k chunks

md102 : active raid1 sdd1[1] sdc1[0]

10475520 blocks super 1.2 [2/2] [UU]

md101 : active raid1 sdb1[1] sda1[0]

10475520 blocks super 1.2 [2/2] [UU]

unused devices: <none>

- View the details of the RAID10 array

[root@localhost ~]# mdadm -D /dev/md10

/dev/md10:

Version : 1.2

Creation Time : Mon Dec 21 23:28:37 2020

Raid Level : raid0

Array Size : 20932608 (19.96 GiB 21.43 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Mon Dec 21 23:28:37 2020

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Layout : original

Chunk Size : 512K

Consistency Policy : none

Name : localhost:10 (local to host localhost)

UUID : 7c1db36f:4346f40e:07419940:4423e366

Events : 0

Number Major Minor RaidDevice State

0 9 101 0 active sync /dev/md101

1 9 102 1 active sync /dev/md102
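The `Array Size` values are given in KiB, followed by the same size in GiB and GB. The conversion can be reproduced as below; this is a sketch with hypothetical helper names (`kib_to_gib`, `kib_to_gb`), using the figures from the reports above.

```shell
#!/bin/sh
# Convert a size in KiB (the unit mdadm prints for Array Size) to GiB and GB.
kib_to_gib() { awk -v k="$1" 'BEGIN { printf "%.2f\n", k / 1048576 }'; }
kib_to_gb()  { awk -v k="$1" 'BEGIN { printf "%.2f\n", k * 1024 / 1e9 }'; }

kib_to_gib 20932608   # md10, in GiB
kib_to_gb  20932608   # md10, in GB
kib_to_gib 10475520   # each mirror, in GiB

# KiB by which md10 is smaller than the two mirrors combined.
echo $(( 2 * 10475520 - 20932608 ))
```

The striped array comes out slightly smaller than the sum of its two members; presumably that space is taken by the md metadata and alignment on each member, although the reports above do not break it down.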

- Format the RAID10 array with an xfs filesystem

[root@localhost ~]# mkfs.xfs /dev/md10

meta-data=/dev/md10 isize=512 agcount=16, agsize=327040 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=5232640, imaxpct=25

= sunit=128 swidth=256 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0
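The `sunit` and `swidth` values in the mkfs.xfs output are expressed in filesystem blocks, and they line up with the md geometry: the stripe unit equals the 512K chunk, and the stripe width covers both members. The arithmetic can be checked with the sketch below; the variable and function names are chosen for this example only.

```shell
#!/bin/sh
BSIZE=4096        # bsize from the mkfs.xfs output, in bytes
SUNIT_BLKS=128    # sunit, in filesystem blocks
SWIDTH_BLKS=256   # swidth, in filesystem blocks
AGCOUNT=16        # number of allocation groups
AGSIZE=327040     # blocks per allocation group

# Convert a count of filesystem blocks to KiB.
blks_to_kib() { echo $(( $1 * BSIZE / 1024 )); }

echo "stripe unit (KiB):  $(blks_to_kib "$SUNIT_BLKS")"
echo "stripe width (KiB): $(blks_to_kib "$SWIDTH_BLKS")"
echo "striped members:    $(( SWIDTH_BLKS / SUNIT_BLKS ))"
echo "data blocks:        $(( AGCOUNT * AGSIZE ))"
```

The last line reproduces the `blocks=5232640` figure from the data section, since the allocation groups together make up the data area.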

- Mount the RAID10 array at /raid10

[root@localhost ~]# mkdir /raid10

[root@localhost ~]# mount /dev/md10 /raid10/

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 887M 0 887M 0% /dev

tmpfs 904M 0 904M 0% /dev/shm

tmpfs 904M 8.7M 895M 1% /run

tmpfs 904M 0 904M 0% /sys/fs/cgroup

/dev/mapper/rhel-root 50G 1.8G 49G 4% /

/dev/nvme0n1p1 1014M 173M 842M 17% /boot

/dev/mapper/rhel-home 27G 225M 27G 1% /home

tmpfs 181M 0 181M 0% /run/user/0

/dev/md10 20G 176M 20G 1% /raid10
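Reading `df -h` by eye works for a one-off check. In a script, the same question ("is /dev/md10 mounted on /raid10?") can be answered from `/proc/mounts`. The sketch below is one way to do that; `is_mounted_on` is a hypothetical helper, and the sample mounts line (including its mount options) is invented so the example can run on a machine without the array.

```shell
#!/bin/sh
# Succeed if device $1 is mounted on directory $2, according to a
# /proc/mounts-style file ($3, defaulting to /proc/mounts).
is_mounted_on() {
    awk -v dev="$1" -v dir="$2" '
        $1 == dev && $2 == dir { found = 1 }
        END { exit !found }
    ' "${3:-/proc/mounts}"
}

# Demonstration against an invented sample file instead of the real one.
sample=$(mktemp)
printf '%s\n' '/dev/md10 /raid10 xfs rw,relatime 0 0' > "$sample"
if is_mounted_on /dev/md10 /raid10 "$sample"; then
    echo "mounted"
fi
rm -f "$sample"
```

On the system from this walkthrough, `is_mounted_on /dev/md10 /raid10` with no third argument would consult the live `/proc/mounts`.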