k8s worker node status NotReady

Background:

After running join (kubeadm join) on the worker node, the node stays NotReady.

```
$ kubectl get node
NAME          STATUS     ROLES           AGE     VERSION
k8s-master2   Ready      control-plane   2d21h   v1.29.0
k8s-node1     NotReady   <none>          2d21h   v1.29.0
```
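On a larger cluster it is easier to filter for problem nodes than to read the table. This is a minimal sketch that assumes the default `kubectl get node` layout, with NAME in column 1 and STATUS in column 2. The function name `not_ready_nodes` is hypothetical and is not part of kubectl.

```shell
# Print the names of nodes whose STATUS is not "Ready".
# Reads `kubectl get node` output on stdin and skips the header row.
# STATUS may carry extra flags (e.g. "Ready,SchedulingDisabled"), so only the
# first comma-separated part is compared.
not_ready_nodes() {
  awk 'NR > 1 { split($2, st, ","); if (st[1] != "Ready") print $1 }'
}
```

For the output above, `kubectl get node | not_ready_nodes` prints `k8s-node1`. After that, `kubectl describe node k8s-node1` shows the node's conditions.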

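Listing the pods shows that the flannel pod on 192.168.56.11 (k8s-node1) has not started. The kube-proxy pod on the same node is also stuck in ContainerCreating.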

```
$ kubectl get pod -A -owide
NAMESPACE      NAME                                  READY   STATUS              RESTARTS   AGE     IP               NODE          NOMINATED NODE   READINESS GATES
kube-flannel   kube-flannel-ds-j2q5w                 1/1     Running             2          2d21h   192.168.56.100   k8s-master2   <none>           <none>
kube-flannel   kube-flannel-ds-n5h7t                 0/1     Init:0/2            0          14h     192.168.56.11    k8s-node1     <none>           <none>
kube-system    coredns-857d9ff4c9-9vw86              1/1     Running             2          2d21h   10.244.0.7       k8s-master2   <none>           <none>
kube-system    coredns-857d9ff4c9-fxglw              1/1     Running             2          2d21h   10.244.0.6       k8s-master2   <none>           <none>
kube-system    etcd-k8s-master2                      1/1     Running             6          2d21h   192.168.56.100   k8s-master2   <none>           <none>
kube-system    kube-apiserver-k8s-master2            1/1     Running             8          2d21h   192.168.56.100   k8s-master2   <none>           <none>
kube-system    kube-controller-manager-k8s-master2   1/1     Running             2          2d21h   192.168.56.100   k8s-master2   <none>           <none>
kube-system    kube-proxy-p528v                      0/1     ContainerCreating   0          14h     192.168.56.11    k8s-node1     <none>           <none>
kube-system    kube-proxy-vckjv                      1/1     Running             2          2d21h   192.168.56.100   k8s-master2   <none>           <none>
kube-system    kube-scheduler-k8s-master2            1/1     Running             7          2d21h   192.168.56.100   k8s-master2   <none>           <none>
```

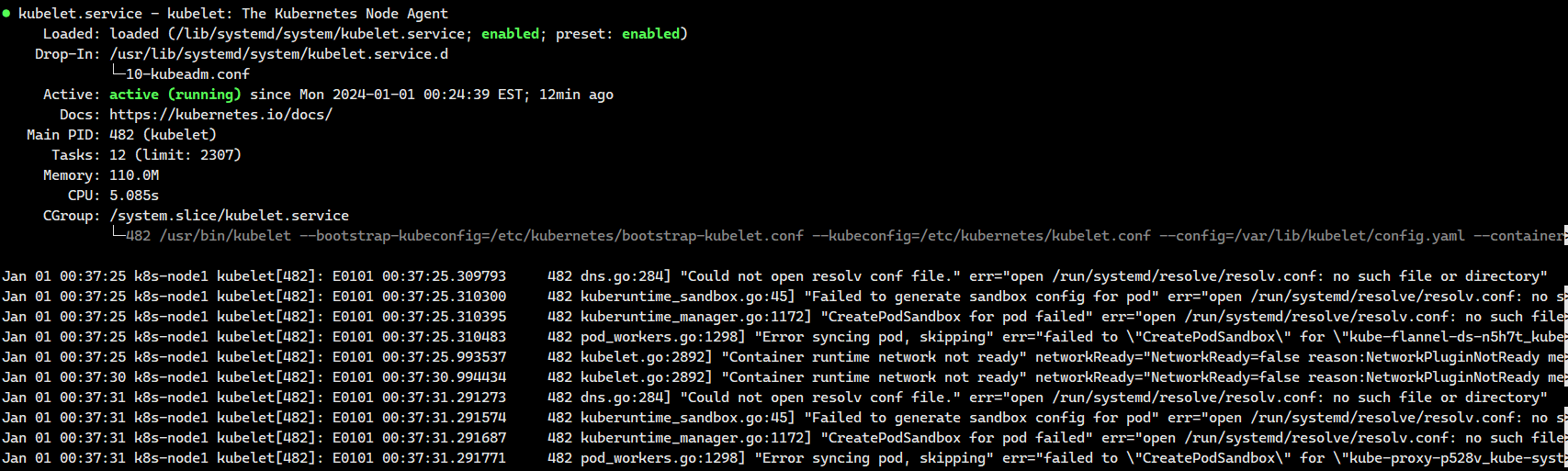
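The same idea narrows the pod list to the broken node. This is a minimal sketch that assumes the `kubectl get pod -A -owide` column layout shown above. `pending_pods` is a hypothetical helper name.

```shell
# List pods whose STATUS is not "Running", optionally only those on one node.
# Reads `kubectl get pod -A -owide` output on stdin.
#   $1 (optional): node name to keep; empty means all nodes.
# STATUS is column 4, before RESTARTS, so "3 (84s ago)" cannot shift it.
# NODE is the third column from the end (before NOMINATED NODE, READINESS GATES).
pending_pods() {
  awk -v node="${1:-}" '
    NR == 1 { next }
    $4 == "Running" { next }
    node != "" && $(NF - 2) != node { next }
    { print $1 "/" $2 " " $4 }
  '
}
```

Usage: `kubectl get pod -A -owide | pending_pods k8s-node1`. kubectl can also filter on the server side with `--field-selector spec.nodeName=k8s-node1`.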

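Next, check the kubelet status on the worker node. It reports this error:

```
sandbox config for pod \\\"kube-flannel-ds-n5h7t_kube-flannel(7410979a-2b8c-4bae-852d-49ee657fe9cb)\\\": open /run/systemd/resolve/resolv.conf: no such file or directory\""
```

The pod kube-flannel-ds-n5h7t (namespace kube-flannel) fails to start because /run/systemd/resolve/resolv.conf does not exist on the node. That missing file is what needs fixing.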
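To see where that path comes from, look at the kubelet config file passed via `--config`. In the kubelet command line in this post, that is /var/lib/kubelet/config.yaml. The sketch below assumes the path is set by a top-level `resolvConf` field in that file. This is an assumption about this setup, not something the logs show. `check_resolv_conf` is a hypothetical helper.

```shell
# Show which resolv.conf the kubelet config points to and whether it exists.
#   $1: kubelet config file, e.g. /var/lib/kubelet/config.yaml
# Assumes a top-level "resolvConf: <path>" line (quotes optional).
# Returns 0 if the file exists, 1 if it is missing, 2 if the field is absent.
check_resolv_conf() {
  local config=$1 path
  path=$(awk '$1 == "resolvConf:" { gsub(/"/, "", $2); print $2; exit }' "$config")
  if [ -z "$path" ]; then
    echo "resolvConf not set in $config"
    return 2
  fi
  if [ -e "$path" ]; then
    echo "ok: $path"
  else
    echo "missing: $path"
    return 1
  fi
}
```

Usage on k8s-node1: `check_resolv_conf /var/lib/kubelet/config.yaml`.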

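Solution

Install and enable systemd-resolved on the worker node. The service maintains /run/systemd/resolve/resolv.conf: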

- sudo apt update

- sudo apt install systemd-resolved

- sudo systemctl start systemd-resolved.service

- sudo systemctl enable systemd-resolved.service
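The steps above can be wrapped in a small script that also waits for the file to appear. This is only a sketch. `systemctl enable --now` combines the separate start and enable steps. `DRY_RUN` is a hypothetical switch for previewing the commands. The 30-second default timeout is arbitrary.

```shell
# Print commands instead of running them when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# The install steps from the list above.
install_systemd_resolved() {
  run sudo apt update
  run sudo apt install -y systemd-resolved
  # "enable --now" enables the unit at boot and starts it immediately.
  run sudo systemctl enable --now systemd-resolved.service
}

# Poll once per second until a file exists; give up after $2 seconds (default 30).
wait_for_file() {
  local path=$1 timeout=${2:-30} waited=0
  until [ -e "$path" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $path" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

Usage: `install_systemd_resolved && wait_for_file /run/systemd/resolve/resolv.conf`. With `DRY_RUN=1`, the script only prints the commands.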

Related Debian documentation:

https://manpages.debian.org/testing/systemd/systemd-resolved.service.8.en.html

https://packages.debian.org/unstable/systemd-resolved

After that, the kubelet error changed to:

```
● kubelet.service - kubelet: The Kubernetes Node Agent
     Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; preset: enabled)
    Drop-In: /usr/lib/systemd/system/kubelet.service.d
             └─10-kubeadm.conf
     Active: active (running) since Mon 2024-01-01 06:26:04 EST; 14h ago
       Docs: https://kubernetes.io/docs/
   Main PID: 2298 (kubelet)
      Tasks: 11 (limit: 2307)
     Memory: 36.7M
        CPU: 34.151s
     CGroup: /system.slice/kubelet.service
             └─2298 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --contain>

Jan 01 20:46:24 k8s-node1 kubelet[2298]: E0101 20:46:24.591540 2298 kubelet.go:2892] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady >
Jan 01 20:46:25 k8s-node1 kubelet[2298]: E0101 20:46:25.955155 2298 remote_runtime.go:193] "RunPodSandbox from runtime service failed" err="rpc error: code = Unknown desc = failed to ge>
Jan 01 20:46:25 k8s-node1 kubelet[2298]: E0101 20:46:25.955477 2298 kuberuntime_sandbox.go:72] "Failed to create sandbox for pod" err="rpc error: code = Unknown desc = failed to get san>
Jan 01 20:46:25 k8s-node1 kubelet[2298]: E0101 20:46:25.955577 2298 kuberuntime_manager.go:1172] "CreatePodSandbox for pod failed" err="rpc error: code = Unknown desc = failed to get sa>
```
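`systemctl status` cuts off long lines (the trailing `>`). `systemctl status kubelet --no-pager -l` or `journalctl -u kubelet --no-pager` shows them in full. To see which errors dominate, here is a sketch that groups kubelet error lines by their quoted message. It assumes the klog format shown above: an `E` level prefix, then `file.go:line] "message"`. `summarize_kubelet_errors` is a hypothetical name.

```shell
# Count distinct kubelet error messages in journal output read from stdin.
# Keeps only E-level klog lines (" E0101 ..."), extracts the quoted message
# that follows "file.go:NNN]", and prints counts, most frequent first.
summarize_kubelet_errors() {
  grep -E ' E[0-9]{4} ' |
    sed -n 's/.*\.go:[0-9]*] "\([^"]*\)".*/\1/p' |
    sort | uniq -c | sort -rn
}
```

Usage: `journalctl -u kubelet --no-pager -n 200 | summarize_kubelet_errors`.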

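Next I checked containerd and found that /etc/containerd/config.toml did not exist. I generated a default configuration with containerd config default > /etc/containerd/config.toml. Run this as root, because the redirection needs write access to /etc/containerd. Then I restarted containerd and kubelet.

It is also a good idea to change the sandbox (pause) image registry in config.toml, for example: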

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"

sudo systemctl restart containerd && sudo systemctl restart kubelet
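The containerd steps can be scripted as below. This sketch makes three assumptions. First, the config is in containerd 1.x style, where the key is literally `sandbox_image`; other config layouts may name it differently, in which case the sed leaves the file unchanged. Second, GNU sed is available for `-i`. Third, the script runs as root. The function names are hypothetical.

```shell
# $1: config path (default /etc/containerd/config.toml).
# Writes `containerd config default` there only if the file does not exist yet.
ensure_containerd_config() {
  local file=${1:-/etc/containerd/config.toml}
  if [ ! -f "$file" ]; then
    mkdir -p "$(dirname "$file")"
    containerd config default > "$file"
  fi
}

# $1: config path, $2: image reference.
# Rewrites the value of an existing sandbox_image line in place, keeping its
# indentation; does nothing if no such line exists.
set_sandbox_image() {
  local file=$1 image=$2
  sed -i -E "s|^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*|\1\"${image}\"|" "$file"
}
```

Usage: `ensure_containerd_config /etc/containerd/config.toml && set_sandbox_image /etc/containerd/config.toml registry.aliyuncs.com/google_containers/pause:3.6 && systemctl restart containerd kubelet`.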

Final result:

```
master@k8s-master2:~$ kubectl get pods -A -owide
NAMESPACE      NAME                                  READY   STATUS    RESTARTS      AGE     IP               NODE          NOMINATED NODE   READINESS GATES
kube-flannel   kube-flannel-ds-flfgs                 1/1     Running   3 (84s ago)   50m     192.168.56.11    k8s-node1     <none>           <none>
kube-flannel   kube-flannel-ds-j2q5w                 1/1     Running   2             3d18h   192.168.56.100   k8s-master2   <none>           <none>
kube-system    coredns-857d9ff4c9-9vw86              1/1     Running   2             3d18h   10.244.0.7       k8s-master2   <none>           <none>
kube-system    coredns-857d9ff4c9-fxglw              1/1     Running   2             3d18h   10.244.0.6       k8s-master2   <none>           <none>
kube-system    etcd-k8s-master2                      1/1     Running   6             3d18h   192.168.56.100   k8s-master2   <none>           <none>
kube-system    kube-apiserver-k8s-master2            1/1     Running   8             3d18h   192.168.56.100   k8s-master2   <none>           <none>
kube-system    kube-controller-manager-k8s-master2   1/1     Running   2             3d18h   192.168.56.100   k8s-master2   <none>           <none>
kube-system    kube-proxy-p528v                      1/1     Running   4 (83s ago)   35h     192.168.56.11    k8s-node1     <none>           <none>
kube-system    kube-proxy-vckjv                      1/1     Running   2             3d18h   192.168.56.100   k8s-master2   <none>           <none>
kube-system    kube-scheduler-k8s-master2            1/1     Running   7             3d18h   192.168.56.100   k8s-master2   <none>           <none>
master@k8s-master2:~$ kubectl get node
NAME          STATUS   ROLES           AGE     VERSION
k8s-master2   Ready    control-plane   3d18h   v1.29.0
k8s-node1     Ready    <none>          3d18h   v1.29.0
```

kubelet status afterwards:

```
● kubelet.service - kubelet: The Kubernetes Node Agent
     Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; preset: enabled)
    Drop-In: /usr/lib/systemd/system/kubelet.service.d
             └─10-kubeadm.conf
     Active: active (running) since Mon 2024-01-01 21:51:40 EST; 6min ago
       Docs: https://kubernetes.io/docs/
   Main PID: 1676 (kubelet)
      Tasks: 10 (limit: 2307)
     Memory: 34.1M
        CPU: 3.454s
     CGroup: /system.slice/kubelet.service
             └─1676 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config.yaml --c>
```
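To check the end state without reading the tables, here is a sketch that fails if any pod is not fully ready. It assumes the `kubectl get pod -A` column order, with READY third and STATUS fourth. `all_pods_ready` is a hypothetical name. Completed job pods (0/1 Completed) would also be flagged.

```shell
# Exit 0 only if every pod row has READY n/n (n > 0) and STATUS "Running".
# Reads `kubectl get pod -A [-owide]` output on stdin and prints offending rows
# as "namespace/name READY STATUS".
all_pods_ready() {
  awk '
    NR == 1 { next }
    {
      split($3, r, "/")
      if (r[1] != r[2] || r[2] == 0 || $4 != "Running") {
        print $1 "/" $2 " " $3 " " $4
        bad = 1
      }
    }
    END { exit bad + 0 }
  '
}
```

Usage: `kubectl get pod -A | all_pods_ready && kubectl wait --for=condition=Ready node/k8s-node1 --timeout=120s`.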