RLlib Reinforcement Learning Framework Tutorial 003: Using the Training APIs (Part 2), the Basic Python API

Contents

Basic Python API overview

Computing Actions

Accessing Policy State

Accessing Model State

Example: Preprocessing observations for feeding into a model

Example: Querying a policy's action distribution

Example: Getting Q-values from a DQN model

References

Basic Python API overview

The Python API gives you the flexibility needed to apply RLlib to new problems. You will need it whenever you want to use custom environments, preprocessors, or models with RLlib.

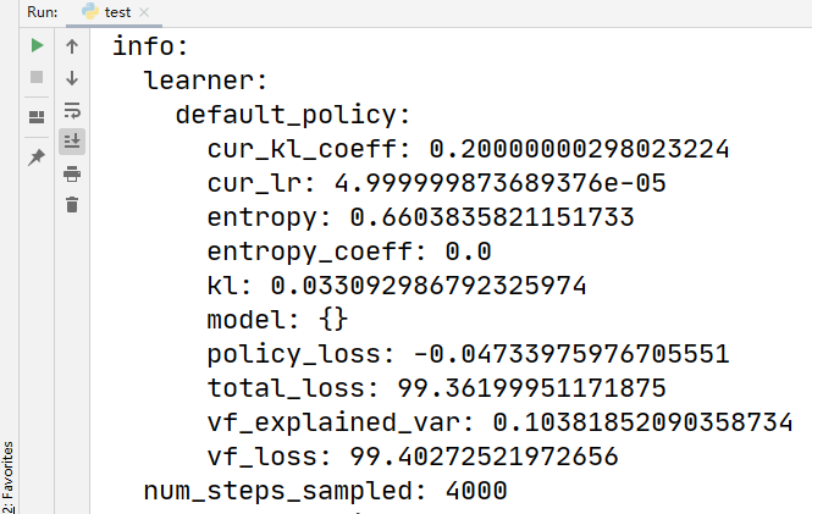

Here is a basic usage example (for a more complete example, see custom_env.py):

```python
import os
# Make only GPU 3 visible; set this before Ray/TensorFlow are imported.
os.environ["CUDA_VISIBLE_DEVICES"] = '3'

import ray
import ray.rllib.agents.ppo as ppo
from ray.tune.logger import pretty_print

ray.init()
config = ppo.DEFAULT_CONFIG.copy()
config["num_gpus"] = 1
config["num_workers"] = 2
config["eager"] = False
trainer = ppo.PPOTrainer(config=config, env="CartPole-v0")

# Can optionally call trainer.restore(path) to load a checkpoint.

for i in range(1000):
    # Perform one iteration of training the policy with PPO
    result = trainer.train()
    print(pretty_print(result))

    if i % 100 == 0:
        checkpoint = trainer.save()
        print("checkpoint saved at", checkpoint)

# Also, in case you have trained a model outside of ray/RLlib and have created
# an h5-file with weight values in it, e.g.
# my_keras_model_trained_outside_rllib.save_weights("model.h5")
# (see: https://keras.io/models/about-keras-models/)

# ... you can load the h5-weights into your Trainer's Policy's ModelV2
# (tf or torch) by uncommenting the line below:
# trainer.import_model("model.h5")
# NOTE: In order for this to work, your (custom) model needs to implement
# the `import_from_h5` method.
# See https://github.com/ray-project/ray/blob/master/rllib/tests/test_model_imports.py
# for detailed examples for tf- and torch trainers/models.
```

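Note: It is recommended to run RLlib trainers with Tune, which makes experiments easy to manage and visualize. Simply set "run": ALG_NAME and "env": ENV_NAME in the experiment configuration.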

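All RLlib trainers are compatible with the Tune API, which makes them simple to use in experiments. For example, the following code runs a grid search over PPO's learning rate (one trial per value):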

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = '3'

import ray
from ray import tune

ray.init()
tune.run(
    "PPO",
    stop={"episode_reward_mean": 200},
    config={
        "env": "CartPole-v0",
        "num_gpus": 0,
        "num_workers": 1,
        # One trial per learning rate: three trials in total.
        "lr": tune.grid_search([0.01, 0.001, 0.0001]),
        "eager": False,
    },
)
```
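Conceptually, a `tune.grid_search` entry expands into one trial per value, and several grid-searched keys combine as a Cartesian product. The sketch below only illustrates that idea in plain Python; `expand_grid` is a hypothetical helper, not Tune's implementation (Tune also handles nested configs and random sampling).

```python
import itertools


def expand_grid(base_config, grid):
    """Hypothetical helper: expand {key: [values]} into one config per combination."""
    keys = sorted(grid)
    trials = []
    for values in itertools.product(*(grid[k] for k in keys)):
        trial = dict(base_config)        # copy the fixed settings
        trial.update(zip(keys, values))  # fill in the searched keys
        trials.append(trial)
    return trials


base = {"env": "CartPole-v0", "num_gpus": 0, "num_workers": 1}
trials = expand_grid(base, {"lr": [0.01, 0.001, 0.0001]})
for t in trials:
    print(t["lr"])
```

With the single searched key this yields the three learning-rate trials of the Tune example; adding a second key with two values, such as a hypothetical `gamma` list, would give six trials.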

tune.run() returns an ExperimentAnalysis object, which can be used to analyze the training results further and to retrieve checkpoints for restoring a trained agent, for example:

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = '3'

import ray
from ray import tune
import ray.rllib.agents.ppo as ppo

ray.init()
config = {
    "env": "CartPole-v0",
    "num_gpus": 0,
    "num_workers": 1,
    "eager": False,
}

# tune.run() allows setting a custom log directory (other than ``~/ray-results``)
# and automatically saving the trained agent
analysis = tune.run(
    ppo.PPOTrainer,
    config=config,
    local_dir="./",
    stop={"episode_reward_mean": 30},
    checkpoint_at_end=True)

# list of lists: one list per checkpoint; each checkpoint list contains
# 1st the path, 2nd the metric value
checkpoints = analysis.get_trial_checkpoints_paths(
    trial=analysis.get_best_trial("episode_reward_mean"),
    metric="episode_reward_mean")

# Loading and restoring a trained agent from a checkpoint is simple.
# With checkpoint_at_end=True and no checkpoint_freq there is a single
# checkpoint, so checkpoints[0][0] is its path.
agent = ppo.PPOTrainer(config=config)
agent.restore(checkpoints[0][0])
print(agent)
```
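The example above simply takes the first (path, metric) entry. When a trial keeps several checkpoints (for example with `checkpoint_freq` set in `tune.run()`), you might instead pick the entry with the best metric value. A minimal sketch over a hand-made list; the paths and rewards below are made-up placeholders, not real RLlib output, and `best_checkpoint` is a hypothetical helper.

```python
def best_checkpoint(checkpoints, mode="max"):
    """Return the path whose metric value is best.

    `checkpoints` is a list of [path, metric_value] pairs, the shape described
    for get_trial_checkpoints_paths(); `mode` is "max" or "min".
    """
    if not checkpoints:
        raise ValueError("no checkpoints to choose from")
    chooser = max if mode == "max" else min
    path, _value = chooser(checkpoints, key=lambda pair: pair[1])
    return path


# Hypothetical example data: placeholder paths and reward values.
example = [
    ["./PPO/trial_0/checkpoint_10/checkpoint-10", 21.5],
    ["./PPO/trial_0/checkpoint_20/checkpoint-20", 35.0],
    ["./PPO/trial_0/checkpoint_30/checkpoint-30", 30.2],
]
print(best_checkpoint(example))  # ./PPO/trial_0/checkpoint_20/checkpoint-20
```

The returned path is what you would hand to `agent.restore()`.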

Computing Actions

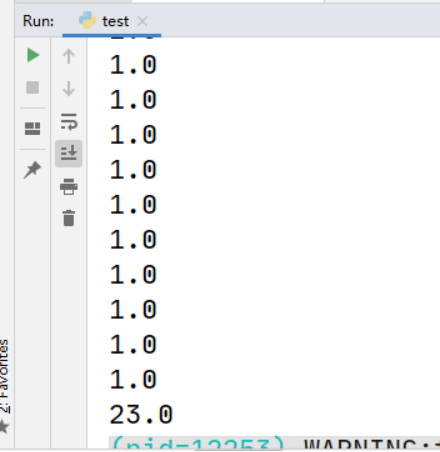

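The simplest way to compute actions from a trained agent is trainer.compute_action(). This method preprocesses and filters the observation before passing it to the agent's policy. Here is a simple example of testing a trained agent for one episode: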

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = '3'

import gym
import ray
import ray.rllib.agents.ppo as ppo

ray.init()
config = ppo.DEFAULT_CONFIG.copy()
config["num_gpus"] = 0
config["num_workers"] = 1
config["eager"] = False
agent = ppo.PPOTrainer(config=config, env="CartPole-v0")
# This agent is untrained; to evaluate a trained one, restore a checkpoint
# first, e.g. agent.restore(path) with a path returned by trainer.save().

# instantiate env class
env = gym.make("CartPole-v0")

# run until episode ends
episode_reward = 0
done = False
obs = env.reset()
while not done:
    action = agent.compute_action(obs)
    obs, reward, done, info = env.step(action)
    episode_reward += reward
    print(reward)
print(episode_reward)
```
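To make "filters the observation" concrete: RLlib's config has an `observation_filter` key (default `"NoFilter"`, with `"MeanStdFilter"` as an alternative). The class below is a hypothetical running mean/standard-deviation normaliser written with Welford's algorithm, in the spirit of a mean-std filter; it is a sketch, not RLlib's code.

```python
import math


class RunningMeanStd:
    """Hypothetical per-dimension observation normaliser (Welford's algorithm)."""

    def __init__(self, size):
        self.n = 0
        self.mean = [0.0] * size
        self.m2 = [0.0] * size  # running sums of squared deviations

    def std(self, i):
        # Population std; use 1.0 until there are two samples to estimate from.
        return math.sqrt(self.m2[i] / self.n) if self.n >= 2 else 1.0

    def __call__(self, obs, update=True):
        if update:
            self.n += 1
            for i, x in enumerate(obs):
                delta = x - self.mean[i]
                self.mean[i] += delta / self.n
                self.m2[i] += delta * (x - self.mean[i])
        # Normalise each dimension; the epsilon avoids division by zero.
        return [(x - self.mean[i]) / (self.std(i) + 1e-8) for i, x in enumerate(obs)]


obs_filter = RunningMeanStd(size=2)
for raw in ([0.0, 0.0], [2.0, 4.0]):   # observations seen while collecting experience
    obs_filter(raw)                    # update=True: the statistics move
print(obs_filter([3.0, 6.0], update=False))  # frozen statistics, approximately [2.0, 2.0]
```

The `update` flag is a design choice of this sketch: when only evaluating a policy, keeping the statistics frozen means the normalisation matches what the policy saw during training.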

Accessing Policy State

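It is common to need access to a trainer's internal state, for example to get or set its weights. In RLlib, the trainer state is replicated across the rollout workers (Ray actors) in the cluster. You can still get and update this state easily between calls to train(), via trainer.workers.foreach_worker() or trainer.workers.foreach_worker_with_index(): both apply a function to every worker (the second also passes the worker's index) and return the results as a list.

You can also access just the "master" copy of the trainer state through trainer.get_policy() or trainer.workers.local_worker(), but note that updates made there may not be immediately reflected in the remote replicas if you have configured num_workers > 0.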

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = '3'

import ray
import ray.rllib.agents.ppo as ppo

ray.init()
config = ppo.DEFAULT_CONFIG.copy()
config["num_workers"] = 2
trainer = ppo.PPOTrainer(config=config, env="CartPole-v0")

# Get weights of the default local policy
trainer.get_policy().get_weights()

# Same as above
trainer.workers.local_worker().policy_map["default_policy"].get_weights()

# Get list of weights of each worker, including remote replicas
trainer.workers.foreach_worker(lambda ev: ev.get_policy().get_weights())

# Same as above
trainer.workers.foreach_worker_with_index(lambda ev, i: ev.get_policy().get_weights())
```
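The warning about remote replicas can be made concrete with a toy model: each worker holds its own copy of the weights, so changing the local copy leaves the remote ones stale until the new weights are pushed to every worker. `ToyWorker` and `ToyWorkerSet` are hypothetical classes that only mimic the shape of `foreach_worker()`. In RLlib itself, one way to do the push is `weights = trainer.get_policy().get_weights()` followed by `trainer.workers.foreach_worker(lambda ev: ev.get_policy().set_weights(weights))`.

```python
import copy


class ToyWorker:
    """Hypothetical stand-in for a rollout worker holding its own weight copy."""

    def __init__(self, weights):
        self.weights = copy.deepcopy(weights)

    def get_weights(self):
        return copy.deepcopy(self.weights)

    def set_weights(self, weights):
        self.weights = copy.deepcopy(weights)


class ToyWorkerSet:
    """Hypothetical local worker plus remote replicas, mimicking foreach_worker()."""

    def __init__(self, weights, num_workers):
        self.local = ToyWorker(weights)
        self.remotes = [ToyWorker(weights) for _ in range(num_workers)]

    def foreach_worker(self, func):
        # Local worker first, then the replicas; results come back as a list.
        return [func(w) for w in [self.local] + self.remotes]


workers = ToyWorkerSet({"fc_1": [0.0, 0.0]}, num_workers=2)

# Update only the local ("master") copy ...
workers.local.set_weights({"fc_1": [1.0, 1.0]})
print(workers.foreach_worker(lambda w: w.get_weights()["fc_1"]))
# [[1.0, 1.0], [0.0, 0.0], [0.0, 0.0]]  (the replicas are stale)

# ... then broadcast the local weights to every worker.
new_weights = workers.local.get_weights()
workers.foreach_worker(lambda w: w.set_weights(new_weights))
print(workers.foreach_worker(lambda w: w.get_weights()["fc_1"]))
# [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
```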

Accessing Model State

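Similar to accessing policy state, you may want to get a reference to the underlying neural network model being trained, for example to pre-train it separately or to update its weights outside of RLlib. To do this, access the policy's model (policy.model).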
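One way to use weights pre-trained outside RLlib is to copy them into the policy model variable by variable, matching on name and shape (the h5 route via `trainer.import_model()` in the first example is another). The sketch below uses plain nested lists and a hypothetical `load_matching_weights` helper; with a real TF model you would work with `model.variables()` or the Keras base model's `get_weights()`/`set_weights()` instead. The variable names are illustrative only.

```python
def shape_of(value):
    """Shape of a nested-list tensor, e.g. [[1, 2, 3]] -> (1, 3)."""
    shape = []
    while isinstance(value, list):
        shape.append(len(value))
        value = value[0] if value else None
    return tuple(shape)


def load_matching_weights(target, pretrained):
    """Hypothetical helper: copy pretrained[name] into target[name] when shapes agree.

    Returns the names that were copied and the names that were skipped.
    """
    copied, skipped = [], []
    for name, value in pretrained.items():
        if name in target and shape_of(target[name]) == shape_of(value):
            target[name] = value
            copied.append(name)
        else:
            skipped.append(name)
    return copied, skipped


# Hypothetical weights: the output layer was pre-trained for a different action count.
model_weights = {"fc_1/kernel": [[0.0, 0.0]] * 4, "fc_out/kernel": [[0.0]] * 2}
pretrained = {"fc_1/kernel": [[0.5, -0.5]] * 4, "fc_out/kernel": [[1.0, 1.0]] * 2}
copied, skipped = load_matching_weights(model_weights, pretrained)
print(copied, skipped)  # ['fc_1/kernel'] ['fc_out/kernel']
```

Skipping mismatched shapes rather than failing lets you reuse a pre-trained trunk while the task-specific head keeps its fresh initialisation.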

Example: Preprocessing observations for feeding into a model

```python
import gym
# RLlib uses preprocessors to implement transforms such as one-hot encoding
# and flattening of tuple and dict observations.
from ray.rllib.models.preprocessors import get_preprocessor

env = gym.make("Pong-v0")  # Atari environments need gym's Atari dependencies
prep = get_preprocessor(env.observation_space)(env.observation_space)
print(prep)
# <ray.rllib.models.preprocessors.GenericPixelPreprocessor object at 0x7fc4d049de80>

# Observations should be preprocessed prior to feeding into a model
obs = env.reset()
print(obs.shape)
# (210, 160, 3)
print(prep.transform(obs).shape)
# (84, 84, 3)
```
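The shape change above can be sketched without Gym or RLlib: shrink a (210, 160, 3) frame to (84, 84, 3) and rescale the pixel values. The function below is a simplified, hypothetical version that samples the nearest source pixel and scales to [0, 1]; RLlib's `GenericPixelPreprocessor` is driven by model options such as `dim`, `grayscale` and `zero_mean`, so its exact output differs.

```python
def resize_and_scale(frame, out_h=84, out_w=84):
    """Nearest-pixel resize of an H x W x C nested list, values scaled to [0, 1]."""
    in_h, in_w = len(frame), len(frame[0])
    out = []
    for r in range(out_h):
        src_r = r * in_h // out_h          # source row for this output row
        row = []
        for c in range(out_w):
            src_c = c * in_w // out_w      # source column for this output column
            row.append([v / 255.0 for v in frame[src_r][src_c]])
        out.append(row)
    return out


def shape3(x):
    """Shape of a 3-level nested list."""
    return (len(x), len(x[0]), len(x[0][0]))


# A fake all-white Atari-sized frame: 210 rows, 160 columns, 3 channels.
frame = [[[255, 255, 255] for _ in range(160)] for _ in range(210)]
small = resize_and_scale(frame)
print(shape3(frame), shape3(small))  # (210, 160, 3) (84, 84, 3)
```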

Example: Querying a policy's action distribution

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = '3'

import numpy as np
import ray
from ray.rllib.agents.ppo import PPOTrainer

ray.init()

# Get a reference to the policy
trainer = PPOTrainer(env="CartPole-v0", config={"eager": True, "num_workers": 0})
policy = trainer.get_policy()
# <ray.rllib.policy.eager_tf_policy.PPOTFPolicy_eager object at 0x7fd020165470>

# Run a forward pass to get model output logits. Note that complex observations
# must be preprocessed as in the above code block.
logits, _ = policy.model.from_batch({"obs": np.array([[0.1, 0.2, 0.3, 0.4]])})
# (<tf.Tensor: id=1274, shape=(1, 2), dtype=float32, numpy=...>, [])

# Compute action distribution given logits
policy.dist_class
# <class_object 'ray.rllib.models.tf.tf_action_dist.Categorical'>
dist = policy.dist_class(logits, policy.model)
# <ray.rllib.models.tf.tf_action_dist.Categorical object at 0x7fd02301d710>

# Query the distribution for samples, sample logps
dist.sample()
# <tf.Tensor: id=661, shape=(1,), dtype=int64, numpy=..>
dist.logp([1])
# <tf.Tensor: id=1298, shape=(1,), dtype=float32, numpy=...>

# Get the estimated values for the most recent forward pass
policy.model.value_function()
# <tf.Tensor: id=670, shape=(1,), dtype=float32, numpy=...>

policy.model.base_model.summary()
# Model: "model"
# _____________________________________________________________________
# Layer (type)               Output Shape   Param #   Connected to
# =====================================================================
# observations (InputLayer)  [(None, 4)]    0
# _____________________________________________________________________
# fc_1 (Dense)               (None, 256)    1280      observations[0][0]
# _____________________________________________________________________
# fc_value_1 (Dense)         (None, 256)    1280      observations[0][0]
# _____________________________________________________________________
# fc_2 (Dense)               (None, 256)    65792     fc_1[0][0]
# _____________________________________________________________________
# fc_value_2 (Dense)         (None, 256)    65792     fc_value_1[0][0]
# _____________________________________________________________________
# fc_out (Dense)             (None, 2)      514       fc_2[0][0]
# _____________________________________________________________________
# value_out (Dense)          (None, 1)      257       fc_value_2[0][0]
# =====================================================================
# Total params: 134,915
# Trainable params: 134,915
# Non-trainable params: 0
# _____________________________________________________________________
```
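For a discrete action space such as CartPole's, the `Categorical` distribution built from the logits is a softmax over the actions. A dependency-free sketch of the three operations used above (probabilities, sampling, log-probability) with a hypothetical `ToyCategorical` class; RLlib's TF version works on batched tensors rather than Python lists.

```python
import math
import random


class ToyCategorical:
    """Hypothetical single-observation categorical distribution over action logits."""

    def __init__(self, logits):
        self.logits = list(logits)
        top = max(self.logits)  # subtract the max for numerical stability
        self.log_norm = top + math.log(sum(math.exp(l - top) for l in self.logits))

    def logp(self, action):
        # Log-softmax: the action's logit minus log-sum-exp of all logits.
        return self.logits[action] - self.log_norm

    def probs(self):
        return [math.exp(self.logp(a)) for a in range(len(self.logits))]

    def sample(self, rng=random):
        return rng.choices(range(len(self.logits)), weights=self.probs(), k=1)[0]


dist = ToyCategorical([0.0, math.log(3.0)])  # action 1 is three times as likely
print([round(p, 3) for p in dist.probs()])   # [0.25, 0.75]
print(dist.sample(random.Random(0)))
```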

Example: Getting Q-values from a DQN model

```python
import os
os.environ["CUDA_VISIBLE_DEVICES"] = '3'

import numpy as np
import ray
from ray.rllib.agents.dqn import DQNTrainer

ray.init()

# Get a reference to the model through the policy
trainer = DQNTrainer(env="CartPole-v0", config={"eager": True})
model = trainer.get_policy().model
# <ray.rllib.models.catalog.FullyConnectedNetwork_as_DistributionalQModel ...>

# List of all model variables
model.variables()
# [<tf.Variable 'default_policy/fc_1/kernel:0' shape=(4, 256) dtype=float32>, ...]

# Run a forward pass to get base model output. Note that complex observations
# must be preprocessed. An example of preprocessing is examples/saving_experiences.py
# As in the PPO example, from_batch() returns (output, state).
model_out, _ = model.from_batch({"obs": np.array([[0.1, 0.2, 0.3, 0.4]])})
# model_out: <tf.Tensor: id=832, shape=(1, 256), dtype=float32, numpy=...>

# Access the base Keras models (all default models have a base)
model.base_model.summary()
# Model: "model"
# _______________________________________________________________________
# Layer (type)               Output Shape   Param #   Connected to
# =======================================================================
# observations (InputLayer)  [(None, 4)]    0
# _______________________________________________________________________
# fc_1 (Dense)               (None, 256)    1280      observations[0][0]
# _______________________________________________________________________
# fc_out (Dense)             (None, 256)    65792     fc_1[0][0]
# _______________________________________________________________________
# value_out (Dense)          (None, 1)      257       fc_1[0][0]
# =======================================================================
# Total params: 67,329
# Trainable params: 67,329
# Non-trainable params: 0
# _______________________________________________________________________

# Access the Q value model (specific to DQN)
model.get_q_value_distributions(model_out)
# [<tf.Tensor: id=891, shape=(1, 2)>, <tf.Tensor: id=896, shape=(1, 2, 1)>]

model.q_value_head.summary()
# Model: "model_1"
# _________________________________________________________________
# Layer (type)                 Output Shape               Param #
# =================================================================
# model_out (InputLayer)       [(None, 256)]              0
# _________________________________________________________________
# lambda (Lambda)              [(None, 2), (None, 2, 1),  66306
# =================================================================
# Total params: 66,306
# Trainable params: 66,306
# Non-trainable params: 0
# _________________________________________________________________

# Access the state value model (specific to DQN)
model.get_state_value(model_out)
# <tf.Tensor: id=913, shape=(1, 1), dtype=float32>

model.state_value_head.summary()
# Model: "model_2"
# _________________________________________________________________
# Layer (type)                 Output Shape               Param #
# =================================================================
# model_out (InputLayer)       [(None, 256)]              0
# _________________________________________________________________
# lambda_1 (Lambda)            (None, 1)                  66049
# =================================================================
# Total params: 66,049
# Trainable params: 66,049
# Non-trainable params: 0
# _________________________________________________________________
```
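The separate state-value head is there because RLlib's DQN uses a dueling architecture by default (`"dueling": True` in its config). In the standard dueling formulation, Q-values are assembled as Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a'). A plain-Python sketch of that aggregation and the greedy action choice; the numbers are invented, and `dueling_q_values` is a hypothetical helper rather than RLlib's code (which also supports distributional Q-learning via `num_atoms`).

```python
def dueling_q_values(state_value, advantages):
    """Combine V(s) and A(s, .) into Q(s, .) using mean-subtracted advantages."""
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + a - mean_adv for a in advantages]


def greedy_action(q_values):
    # Index of the largest Q-value (ties go to the lower index).
    return max(range(len(q_values)), key=lambda a: q_values[a])


q = dueling_q_values(1.0, [1.0, 3.0])  # invented numbers for CartPole's two actions
print(q, greedy_action(q))             # [0.0, 2.0] 1
```

Without the mean subtraction, adding a constant to V and subtracting it from every advantage would produce the same Q-values, so the two heads would not be identifiable.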

References

https://docs.ray.io/en/latest/rllib.html
