Codis 3 installation and testing

Environment

Machine: cld-tmp-ops-06

Cluster mode: pseudo-cluster

Dependencies: Java (for ZooKeeper), Go

codis-dashboard:192.168.233.135:18080

codis-fe:192.168.233.135:18090

codis-proxy:192.168.233.135:19000,192.168.233.138:19000,192.168.233.139:19000

redis:192.168.233.135:6379,192.168.233.135:6380 (maxmemory 2G each)

redis:192.168.233.138:6379,192.168.233.138:6380 (maxmemory 2G each)

redis:192.168.233.139:6379,192.168.233.139:6380 (maxmemory 2G each)

sentinel:192.168.233.135:26379,192.168.233.138:26379,192.168.233.139:26379

Download the packages

wget http://golangtc.com/static/go/1.8/go1.8.linux-amd64.tar.gz

wget http://mirrors.hust.edu.cn/apache/zookeeper/zookeeper-3.4.10/zookeeper-3.4.10.tar.gz

wget https://github.com/CodisLabs/codis/archive/3.1.5.tar.gz

Install dependencies

Add the following to /etc/profile:

```
export JAVA_HOME=/usr/local/java
CLASSPATH=/usr/local/java/lib/dt.jar:/usr/local/java/lib/tools.jar
PATH=/usr/local/java/bin:$PATH
export PATH JAVA_HOME CLASSPATH
export GOROOT=/usr/local/go
export GOPATH=/usr/local/gopkg
export PATH=$GOROOT/bin:$PATH
```

Then run source /etc/profile.

Install ZooKeeper

Extract the tarball to /home/service.

zoo.cfg

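```
# egrep -v "(^$|^#)" /usr/local/zookeeper-3.4.10/conf/zoo.cfg
tickTime=2000
initLimit=10
syncLimit=5
dataDir=/export/zookeeper/data
dataLogDir=/export/zookeeper/log
clientPort=2181
server.135=192.168.233.135:2888:3888
server.138=192.168.233.138:2888:3888
server.139=192.168.233.139:2888:3888
```

clientPort is the port clients use to connect to the ZooKeeper server.

server.N=YYY:A:B has these fields:

- N is the server ID, which is the value in that host's myid file.
- YYY is the server's IP address.
- A is the leader/follower communication port, which the server uses to exchange data with the cluster leader.
- B is the election port, which servers use to talk to each other when electing a new leader.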
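All three ZooKeeper hosts share the same zoo.cfg, so it can be generated instead of edited by hand. The function below is a minimal sketch. `gen_zoo_cfg` is a hypothetical helper name. Like the config above, it assumes that each server ID is the last octet of the host's IP and that ports 2181/2888/3888 are used.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a zoo.cfg for the given ensemble.
# Usage: gen_zoo_cfg DATA_DIR LOG_DIR IP [IP ...]
# Assumption: server ID N = last octet of the IP (server.135, server.138, ...).
gen_zoo_cfg() {
  local data_dir=$1 log_dir=$2 ip
  shift 2
  cat <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=${data_dir}
dataLogDir=${log_dir}
clientPort=2181
EOF
  for ip in "$@"; do
    # server.N=IP:leader/follower port:election port
    echo "server.${ip##*.}=${ip}:2888:3888"
  done
}
```

For example: `gen_zoo_cfg /export/zookeeper/data /export/zookeeper/log 192.168.233.135 192.168.233.138 192.168.233.139 > /usr/local/zookeeper-3.4.10/conf/zoo.cfg`, run on each host.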

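Configure myid (run the matching line on each host)

```
echo "135" > /export/zookeeper/data/myid   # on 192.168.233.135
echo "138" > /export/zookeeper/data/myid   # on 192.168.233.138
echo "139" > /export/zookeeper/data/myid   # on 192.168.233.139
```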
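The myid value must equal the N in that host's server.N line. Here is a small sketch that derives it from the host's IP, using the same last-octet assumption. `write_myid` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write ZooKeeper's myid file for the host with IP $1.
# Assumption: myid = last octet of the IP, matching server.N in zoo.cfg.
write_myid() {
  local ip=$1 data_dir=$2
  mkdir -p "$data_dir"
  echo "${ip##*.}" > "${data_dir}/myid"
}
```

For example, on 192.168.233.138: `write_myid 192.168.233.138 /export/zookeeper/data`.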

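Start ZooKeeper

```
/usr/local/zookeeper-3.4.10/bin/zkServer.sh start     # on every node
/usr/local/zookeeper-3.4.10/bin/zkServer.sh status    # shows whether this node is leader or follower
/usr/local/zookeeper-3.4.10/bin/zkCli.sh -server 192.168.233.138:2181   # connect with the CLI
```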

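Install Codis

Create the build directory

mkdir -pv /usr/local/gopkg/src/github.com/CodisLabs

Build

Extract the Codis source into that directory, rename it to codis and run make:

mv codis.xxx codis && cd codis && make

The build produces a bin directory. Copy it to the Codis runtime directory, which here is /export/codis/.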

Install the dashboard

- Generate the dashboard config file, then edit it for this cluster. The resulting non-comment settings are:

```
/export/codis/bin/codis-dashboard --default-config | tee /export/codis/conf/dashboard.conf

# egrep -v "^$|^#" /export/codis/conf/dashboard.conf
coordinator_name = "zookeeper"
coordinator_addr = "192.168.233.135:2181,192.168.233.138:2181,192.168.233.139:2181"
product_name = "codis-test"
product_auth = "xukq"
admin_addr = "0.0.0.0:18080"
migration_method = "semi-async"
migration_parallel_slots = 100
migration_async_maxbulks = 200
migration_async_maxbytes = "32mb"
migration_async_numkeys = 500
migration_timeout = "30s"
sentinel_quorum = 2
sentinel_parallel_syncs = 1
sentinel_down_after = "30s"
sentinel_failover_timeout = "5m"
sentinel_notification_script = ""
sentinel_client_reconfig_script = ""
```

Start the dashboard

nohup /export/codis/bin/codis-dashboard --ncpu=2 --config=/export/codis/conf/dashboard.conf --log=/export/codis/log/dashboard.log --log-level=WARN &

Shutdown command

/export/codis/bin/codis-admin --dashboard=192.168.233.135:18080 --shutdown

Install codis-fe

Generate the config file (codis-admin reads the list of dashboards from ZooKeeper)

```
# /export/codis/bin/codis-admin --dashboard-list --zookeeper=192.168.233.138:2181 | tee /export/codis/conf/codis-fe.json
[
    {
        "name": "codis-test",
        "dashboard": "192.168.233.135:18080"
    }
]
```

Start codis-fe

nohup /export/codis/bin/codis-fe --ncpu=2 --log=/export/codis/log/codis-fe.log --log-level=WARN --dashboard-list=/export/codis/conf/codis-fe.json --listen=0.0.0.0:18090 &

Install codis-proxy

Generate the config file (the other two proxies use the same config and are started the same way)

```
/export/codis/bin/codis-proxy --default-config | tee /export/codis/conf/codis-proxy.conf

# egrep -v "(^$|^#)" /export/codis/conf/codis-proxy.conf
product_name = "codis-test"
product_auth = "xukq"
session_auth = ""
admin_addr = "0.0.0.0:11080"
proto_type = "tcp4"
proxy_addr = "0.0.0.0:19000"
jodis_name = "zookeeper"
jodis_addr = "192.168.233.135:2181,192.168.233.138:2181,192.168.233.139:2181"
jodis_timeout = "20s"
jodis_compatible = false
proxy_datacenter = ""
proxy_max_clients = 1000
proxy_max_offheap_size = "1024mb"
proxy_heap_placeholder = "256mb"
backend_ping_period = "5s"
backend_recv_bufsize = "128kb"
backend_recv_timeout = "30s"
backend_send_bufsize = "128kb"
backend_send_timeout = "30s"
backend_max_pipeline = 1024
backend_primary_only = false
backend_primary_parallel = 1
backend_replica_parallel = 1
backend_keepalive_period = "75s"
backend_number_databases = 16
session_recv_bufsize = "128kb"
session_recv_timeout = "30m"
session_send_bufsize = "64kb"
session_send_timeout = "30s"
session_max_pipeline = 10000
session_keepalive_period = "75s"
session_break_on_failure = false
metrics_report_server = ""
metrics_report_period = "1s"
metrics_report_influxdb_server = ""
metrics_report_influxdb_period = "1s"
metrics_report_influxdb_username = ""
metrics_report_influxdb_password = ""
metrics_report_influxdb_database = ""
metrics_report_statsd_server = ""
metrics_report_statsd_period = "1s"
metrics_report_statsd_prefix = ""
```
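The proxies are managed by the dashboard as one product, so product_name and, in this setup, product_auth should be identical in dashboard.conf and codis-proxy.conf. Here product_auth also equals the requirepass of the codis-server instances. Below is a minimal sketch that compares the two files before anything is started. `conf_value` and `check_product` are hypothetical helpers, and they only understand the `key = "value"` lines shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of a `key = "value"` line in a file.
conf_value() {
  local key=$1 file=$2
  sed -n "s/^[[:space:]]*${key}[[:space:]]*=[[:space:]]*\"\(.*\)\"[[:space:]]*\$/\1/p" "$file" | head -n 1
}

# Hypothetical helper: compare product_name/product_auth between
# dashboard.conf ($1) and codis-proxy.conf ($2); prints OK or the mismatches.
check_product() {
  local dash=$1 proxy=$2 key a b rc=0
  for key in product_name product_auth; do
    a=$(conf_value "$key" "$dash")
    b=$(conf_value "$key" "$proxy")
    if [ "$a" != "$b" ]; then
      echo "mismatch $key: dashboard='$a' proxy='$b'"
      rc=1
    fi
  done
  if [ "$rc" -eq 0 ]; then
    echo "OK"
  fi
  return "$rc"
}
```

For example: `check_product /export/codis/conf/dashboard.conf /export/codis/conf/codis-proxy.conf`.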

Start

nohup /export/codis/bin/codis-proxy --ncpu=2 --config=/export/codis/conf/codis-proxy.conf --log=/export/codis/log/codis-proxy.log --log-level=WARN &

Shutdown command

/export/codis/bin/codis-admin --proxy=192.168.233.135:11080 --auth='xukq' --shutdown

Install codis-server

Config file

```
# cat /export/codis/conf/redis6379.conf
daemonize yes
pidfile "/usr/local/codis/run/redis6379.pid"
port 6379
timeout 86400
tcp-keepalive 60
loglevel notice
logfile "/export/codis/log/redis6379.log"
databases 16
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error no
rdbcompression yes
dbfilename "dump6379.rdb"
dir "/export/codis/redis_data_6379"
masterauth "xukq"
slave-serve-stale-data yes
repl-disable-tcp-nodelay no
slave-priority 100
requirepass "xukq"
maxmemory 2gb
maxmemory-policy allkeys-lru
appendonly no
appendfsync everysec
no-appendfsync-on-rewrite yes
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
lua-time-limit 5000
slowlog-log-slower-than 10000
slowlog-max-len 128
hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-entries 512
list-max-ziplist-value 64
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit slave 0 0 0
client-output-buffer-limit pubsub 0 0 0
hz 10
aof-rewrite-incremental-fsync yes
repl-backlog-size 32mb
# Generated by CONFIG REWRITE
```

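The 6380 config is identical to the 6379 config except for the port-specific values (port, pidfile, logfile, dbfilename, dir).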
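The two instances differ only in their port-specific values, so the 6380 file can be derived from the 6379 one with sed. This is a minimal sketch that assumes the string 6379 appears nowhere else in the template. `derive_redis_conf` is a hypothetical helper. Redis will not start if its `dir` does not exist, so the new data directory has to be created separately.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive redis<PORT>.conf from the 6379 template.
# Assumption: "6379" only appears in port-specific settings
# (port, pidfile, logfile, dbfilename, dir), as in redis6379.conf above.
derive_redis_conf() {
  local src=$1 new_port=$2 dst
  dst="$(dirname "$src")/redis${new_port}.conf"
  sed "s/6379/${new_port}/g" "$src" > "$dst"
  echo "$dst"   # print the path of the generated file
}
```

For example: `derive_redis_conf /export/codis/conf/redis6379.conf 6380`, followed by `mkdir -p /export/codis/redis_data_6380`.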

Start

/export/codis/bin/codis-server /export/codis/conf/redis6379.conf

Start 6380, and the instances on the other machines, the same way.

Start Sentinel

```
cp /usr/local/gopkg/src/github.com/CodisLabs/codis/extern/redis-3.2.8/src/redis-sentinel /export/codis/bin

# cat /export/codis/conf/sentinel.conf
bind 0.0.0.0
protected-mode no
port 26379
dir "/tmp"
```

No master/node information needs to be specified here. The monitored groups are configured by codis-dashboard once the sentinels are added to it.

nohup /export/codis/bin/redis-sentinel /export/codis/conf/sentinel.conf &

Check the processes

```
# ps -ef | grep codis
root   29475      1  0 May14 ?      00:01:48 /export/codis/bin/codis-fe --ncpu=2 --log=/export/codis/log/codis-fe.log --log-level=WARN --dashboard-list=/export/codis/conf/codis-fe.json --listen=0.0.0.0:18090
root   31454      1  0 May14 ?      00:09:03 /export/codis/bin/codis-server *:6379
root   31503      1  0 May14 ?      00:14:15 /export/codis/bin/codis-server *:6380
root   91651      1  1 May14 ?      00:36:33 /export/codis/bin/codis-dashboard --ncpu=2 --config=/export/codis/conf/dashboard.conf --log=/export/codis/log/dashboard.log --log-level=WARN
root   93306      1  0 May14 ?      00:07:15 /export/codis/bin/codis-proxy --ncpu=2 --config=/export/codis/conf/codis-proxy.conf --log=/export/codis/log/codis-proxy.log --log-level=WARN
root  105017      1  0 May14 ?      00:11:43 /export/codis/bin/redis-sentinel 0.0.0.0:26379 [sentinel]
root  127452 117312  0 11:55 pts/1  00:00:00 grep --color=auto codis
```
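The expected components can be checked in one pass instead of reading ps output by eye. This is a minimal sketch. `check_codis_procs` is a hypothetical helper that reads ps output on stdin and takes one grep pattern per expected process. The dashboard and codis-fe run only on 192.168.233.135, so on .138 and .139 only the proxy, the two codis-server instances and sentinel should be listed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which expected Codis processes are missing.
# Reads `ps -ef` style output on stdin; each argument is a grep pattern.
check_codis_procs() {
  local ps_out name missing=0
  ps_out=$(cat)
  for name in "$@"; do
    if ! printf '%s\n' "$ps_out" | grep -q -- "$name"; then
      echo "missing: $name"
      missing=1
    fi
  done
  if [ "$missing" -eq 0 ]; then
    echo "all running"
  fi
  return "$missing"
}
```

For example, on 192.168.233.135: `ps -ef | check_codis_procs codis-dashboard codis-fe codis-proxy 'codis-server.*:6379' 'codis-server.*:6380' redis-sentinel`.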

codis-fe web console

Open http://192.168.233.135:18090/ in a browser. The remaining configuration is done in the web UI.

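Codis availability tests:

The following tests were run on a different test cluster.

redis-port currently cannot read from twemproxy, so data can only be synced with a Redis instance as the source.

How it works:

In short, redis-port statically parses the RDB file and writes the data into the target.

According to the official description, it has four functions: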

DECODE dumped payload to human readable format (hex-encoding)

RESTORE rdb file to target redis

DUMP rdb file from master redis

SYNC data from master to slave
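Since redis-port works on RDB files, it helps to know that every RDB file starts with a 9-byte header: the ASCII string REDIS followed by a four-digit format version. The sketch below only inspects that header, as a cheap sanity check on a dump before handing it to redis-port. It does not parse the rest of the file. `rdb_version` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the RDB format version of a dump file.
# Only the 9-byte header ("REDIS" + 4 ASCII digits) is checked.
rdb_version() {
  local header
  header=$(head -c 9 "$1") || return 1
  case "$header" in
    REDIS[0-9][0-9][0-9][0-9]) echo "${header#REDIS}" ;;
    *) echo "not an RDB file: $1" >&2; return 1 ;;
  esac
}
```

For example: `rdb_version /export/codis/redis_data_6379/dump6379.rdb`.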

线下导入测试

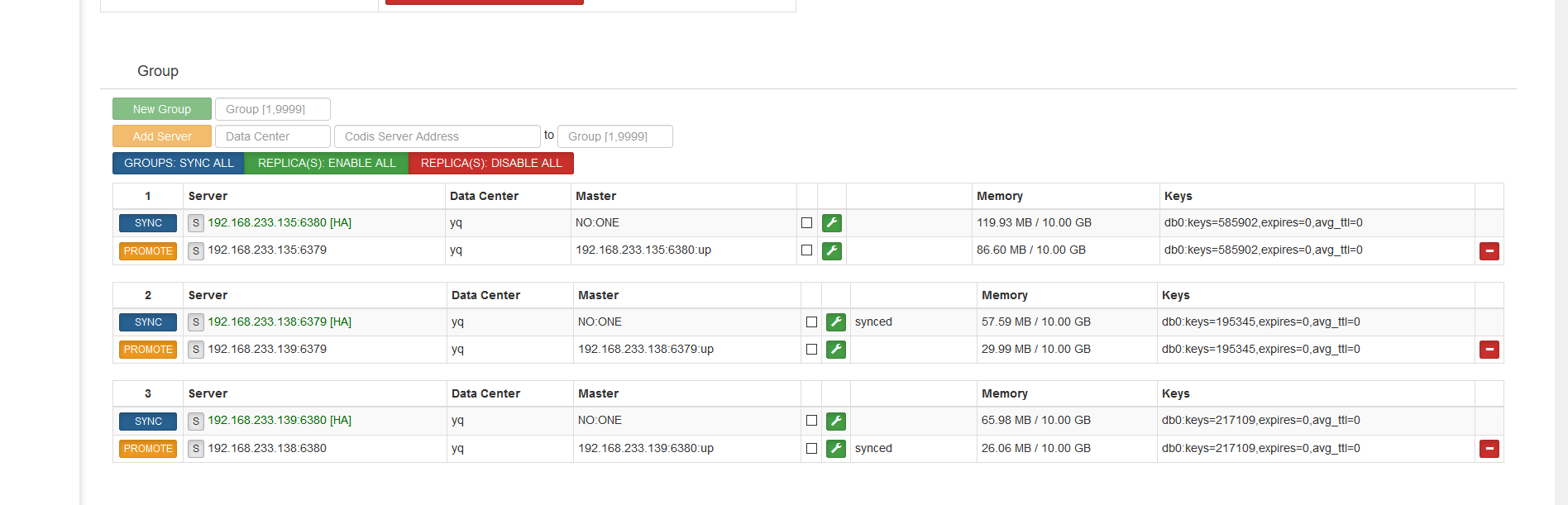

先查看codis数据量

进行导入:

# cat daoruredis.sh#!/bin/bashfor port in {6379,6380}; do/home/service/redis-port/redis-port sync -n 1 --parallel=10 -f 192.168.233.140:${port} --password="91eb221c" -t 192.168.233.138:19000 >${port}.log 2>&1 &sleep 5done2017/05/15 15:00:25 main.go:189: [INFO] set ncpu = 1, parallel = 102017/05/15 15:00:25 sync.go:56: [INFO] sync from '192.168.233.140:6379' to '192.168.233.138:19000'2017/05/15 15:00:26 sync.go:73: [INFO] rdb file = 71543382017/05/15 15:00:27 sync.go:235: [INFO] total=7154338 - 20700 [ 0%] entry=6272017/05/15 15:00:28 sync.go:235: [INFO] total=7154338 - 46112 [ 0%] entry=17942017/05/15 15:00:29 sync.go:235: [INFO] total=7154338 - 103262 [ 1%] entry=44172017/05/15 15:00:30 sync.go:235: [INFO] total=7154338 - 165345 [ 2%] entry=72712017/05/15 15:00:31 sync.go:235: [INFO] total=7154338 - 207198 [ 2%] entry=91932017/05/15 15:00:32 sync.go:235: [INFO] total=7154338 - 249386 [ 3%] entry=111322017/05/15 15:00:33 sync.go:235: [INFO] total=7154338 - 294590 [ 4%] entry=132092017/05/15 15:00:34 sync.go:235: [INFO] total=7154338 - 339400 [ 4%] entry=152672017/05/15 15:00:35 sync.go:235: [INFO] total=7154338 - 372815 [ 5%] entry=168042017/05/15 15:00:36 sync.go:235: [INFO] total=7154338 - 411493 [ 5%] entry=185822017/05/15 15:00:37 sync.go:235: [INFO] total=7154338 - 453199 [ 6%] entry=204982017/05/15 15:00:38 sync.go:235: [INFO] total=7154338 - 494151 [ 6%] entry=223792017/05/15 15:00:39 sync.go:235: [INFO] total=7154338 - 540217 [ 7%] entry=244972017/05/15 15:00:40 sync.go:235: [INFO] total=7154338 - 583806 [ 8%] entry=26499......2017/05/15 15:32:32 sync.go:291: [INFO] sync: +forward=0 +nbypass=0 +nbytes=02017/05/15 15:32:33 sync.go:291: [INFO] sync: +forward=1 +nbypass=0 +nbytes=142017/05/15 15:32:34 sync.go:291: [INFO] sync: +forward=0 +nbypass=0 +nbytes=0
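The RDB phase takes a while, so progress can be followed from the per-port log files the script writes. This is a minimal sketch that prints the most recent percentage from the `[ n%]` progress lines shown above. `sync_progress` is a hypothetical helper and relies only on that log format.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the last "[ n%]" progress value from a redis-port log.
# Prints nothing if no progress line has been written yet.
sync_progress() {
  grep -o '\[ *[0-9]*%]' "$1" | tail -n 1 | sed 's/[^0-9%]//g'
}
```

For example: `for port in 6379 6380; do echo "$port: $(sync_progress ${port}.log)"; done`.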

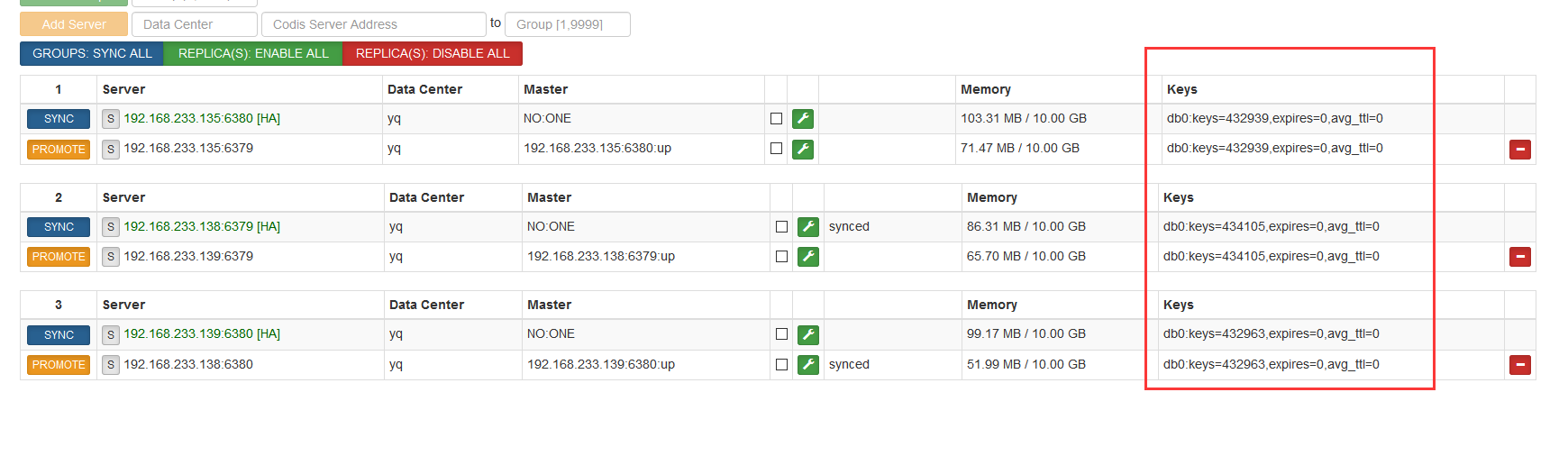

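Check the keys in Codis again.

The screenshot shows that the keys have been imported.

Note: the source can keep accepting writes during the import, which keeps key loss to a minimum. After the RDB phase, redis-port keeps forwarding new writes, as the +forward counters in the log show.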

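Master/slave failover and data import test

So far there is only one group with a single instance, which is a single point of failure.

Next, we add nodes and then test data migration and host failure.

As the test shows, data that has not finished replicating when the copy is interrupted is lost.

Next, start the Redis process on port 20002 and promote 20002 to master.

Taking one instance offline while synchronization is in progress shows that the keys being synced are permanently lost.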

浙公网安备 33010602011771号
