Kubernetes Deployment

Host Plan

| Hostname | SSH address | Roles |
|---|---|---|
| master.kube.test | ssh://root@172.23.107.18:60028 | docker-ce, Harbor, etcdkeeper, kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy, flannel |
| node1.kube.test | ssh://root@172.23.101.47:60028 | docker-ce, etcd, kubelet, kube-proxy, flannel |
| node2.kube.test | ssh://root@172.23.101.48:60028 | docker-ce, etcd, kubelet, kube-proxy, flannel |
| node3.kube.test | ssh://root@172.23.101.49:60028 | docker-ce, etcd, kubelet, kube-proxy, flannel |

Base Environment Preparation

Basic Configuration

###############################################################

### Run on all hosts

###############################################################

systemctl stop firewalld

systemctl disable firewalld

vim /etc/selinux/config

###############################################################

SELINUX=disabled

###############################################################

setenforce 0

getenforce # prints Permissive or Disabled if the change took effect

# Tune kernel parameters

cat > /etc/sysctl.d/kubernetes.conf <<EOF

# Pass bridged traffic to the iptables/ip6tables chains

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

vm.swappiness=0

# Disable the unused IPv6 stack to avoid triggering a Docker bug

net.ipv6.conf.all.disable_ipv6=1

EOF

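# The net.bridge.* keys only exist once the br_netfilter module is loaded

modprobe br_netfilter

echo br_netfilter > /etc/modules-load.d/br_netfilter.conf # load the module again after a reboot

sysctl --system # apply the settings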

# NTP time synchronization

yum -y install ntp

systemctl daemon-reload

systemctl enable ntpd.service

systemctl start ntpd.service

Configure yum Repositories

###############################################################

### Run on all hosts

###############################################################

cd /etc/yum.repos.d/

# Add the docker-ce repository (Aliyun mirror)

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# or the official Docker repository

wget https://download.docker.com/linux/centos/docker-ce.repo

# Rebuild the local yum cache

yum clean all && yum makecache

# Verify the repository

yum list | grep docker-ce

Configure Hostnames, /etc/hosts and Passwordless SSH

###############################################################

### Run on all hosts

###############################################################

echo "master.kube.test" > /etc/hostname # on 172.23.107.18

echo "node1.kube.test" > /etc/hostname # on 172.23.101.47

echo "node2.kube.test" > /etc/hostname # on 172.23.101.48

echo "node3.kube.test" > /etc/hostname # on 172.23.101.49

echo "HOSTNAME=master.kube.test" >> /etc/sysconfig/network # on 172.23.107.18

echo "HOSTNAME=node1.kube.test" >> /etc/sysconfig/network # on 172.23.101.47

echo "HOSTNAME=node2.kube.test" >> /etc/sysconfig/network # on 172.23.101.48

echo "HOSTNAME=node3.kube.test" >> /etc/sysconfig/network # on 172.23.101.49

hostname master.kube.test # on 172.23.107.18

hostname node1.kube.test # on 172.23.101.47

hostname node2.kube.test # on 172.23.101.48

hostname node3.kube.test # on 172.23.101.49

###############################################################

### Log in to 172.23.107.18 via SSH

###############################################################

# Generate an RSA key pair

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

# Set up passwordless login

ssh-copy-id -p 60028 172.23.107.18 # enter the root password of 172.23.107.18 when prompted

ssh-copy-id -p 60028 172.23.101.47 # enter the root password of 172.23.101.47 when prompted

ssh-copy-id -p 60028 172.23.101.48 # enter the root password of 172.23.101.48 when prompted

ssh-copy-id -p 60028 172.23.101.49 # enter the root password of 172.23.101.49 when prompted

# Local name resolution (/etc/hosts format: IP address first, then hostname)

echo "172.23.107.18 master.kube.test" >> /etc/hosts

echo "172.23.101.47 node1.kube.test" >> /etc/hosts

echo "172.23.101.48 node2.kube.test" >> /etc/hosts

echo "172.23.101.49 node3.kube.test" >> /etc/hosts

# Copy the hosts file to the other hosts

scp -P 60028 /etc/hosts root@172.23.101.47:/etc/

scp -P 60028 /etc/hosts root@172.23.101.48:/etc/

scp -P 60028 /etc/hosts root@172.23.101.49:/etc/

Install Docker

###############################################################

### Run on all hosts

###############################################################

mkdir -p /opt/{src,app,data/docker}

# Install Docker

yum install -y docker-ce

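# Configure the Docker daemon. JSON does not allow comments, so the keys are explained here:

#   registry-mirrors: Docker Hub mirror for mainland China

#   insecure-registries: LAN registry (Harbor) that may be pushed to over plain HTTP

#   exec-opts: use systemd as the cgroup driver

#   data-root: where images and containers are stored (replaces the deprecated "graph" key)

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://registry.docker-cn.com"],

"insecure-registries": ["172.23.107.18:1080"],

"exec-opts": ["native.cgroupdriver=systemd"],

"data-root": "/opt/data/docker"

}

EOF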

systemctl daemon-reload

systemctl start docker

systemctl enable docker

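Notes:

- Using systemd as Docker's cgroup driver keeps nodes more stable when resources are tight.

- You could also set the bip option here to define the docker0 gateway and netmask, e.g. 172.17.0.1/24. However, once flannel is configured later, that value is passed to dockerd as --bip from the systemd unit, and configuring it in both places prevents Docker from starting.

- Changes to some options (registry-mirrors, insecure-registries, ...) take effect after a reload (systemctl reload docker), without restarting the daemon.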

Installing Harbor

Installing docker-compose

Harbor consists of many components, and configuring its containers one by one is tedious, so docker-compose is used to install them all at once.

###############################################################

### Log in to 172.23.107.18 via SSH

###############################################################

wget https://github.com/docker/compose/releases/download/1.27.4/docker-compose-Linux-x86_64 -O /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

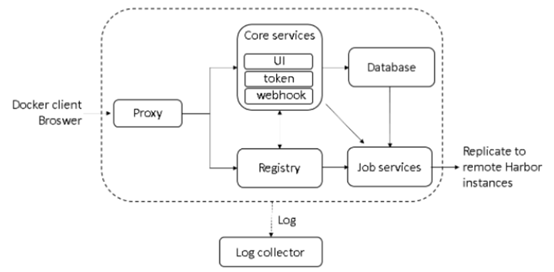

Overview

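Harbor is an enterprise-grade registry server for storing and distributing Docker images. It extends the open-source Docker Distribution with features that enterprises need, such as security, identity and management. As a private enterprise registry, Harbor offers better performance and security, and it makes transferring images between build and runtime environments more efficient. Harbor can replicate images across multiple registry nodes, and all images stay in the private registry, so data and intellectual property remain under control inside the company network. Harbor also provides advanced security features such as user management, access control and activity auditing.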

Components

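- Proxy: Harbor's registry, UI, token and other services sit behind a reverse proxy that receives all requests from browsers and Docker clients and forwards them to the appropriate backend service.

- Registry: stores Docker images and handles docker push/pull commands. Because Harbor enforces access control (different users have different read/write permissions on images), the Registry is pointed at a token service and requires every docker pull/push request to carry a valid token, which the Registry verifies with a public key.

- Core services: Harbor's core functionality, mainly providing the following services:

  - UI: a graphical interface that helps users manage the images in the registry and grants permissions to users.

  - webhook: a webhook configured on the Registry passes image status changes to the UI module so that it stays up to date.

  - token service: issues a token for each docker push/pull command according to the user's permissions. A request from a Docker client to the Registry without a token is redirected here; after obtaining a token, the client retries the request against the Registry.

- Database: provides database services to the core services, storing user permissions, audit logs, image grouping information and other data.

- Job Services: provides remote image replication, synchronizing local images to other Harbor instances.

- Log collector: collects the logs of the other components to help monitor Harbor and support later analysis.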


Installation

###############################################################

### Log in to 172.23.107.18 via SSH

###############################################################

mkdir /opt/data/harbor

wget https://github.com/goharbor/harbor/releases/download/v2.1.2/harbor-offline-installer-v2.1.2.tgz -P /opt/src/

tar -zxvf /opt/src/harbor-offline-installer-v2.1.2.tgz -C /opt/app/ && cd /opt/app/harbor

cp harbor.yml.tmpl harbor.yml

vim harbor.yml

hostname: 172.23.107.18

http:

  port: 1080 # must match insecure-registries in daemon.json and the docker login address below

# Comment out the https section

#https:

#  port: 443

#  certificate: /opt/data/harbor/cert/server.crt

#  private_key: /opt/data/harbor/cert/server.key

harbor_admin_password: Harbor123

data_volume: /opt/data/harbor

# Install

./install.sh

docker-compose ps

# Manage the services (run in /opt/app/harbor)

docker-compose up -d # start

docker-compose stop # stop

docker-compose restart # restart

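# Note: install.sh generates the docker-compose configuration file, where settings can be adjusted; the nginx configuration under common/ can also be modified. Alternatively, edit harbor.yml and run install.sh again.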

# Test the registry (use the harbor_admin_password set in harbor.yml)

docker login 172.23.107.18:1080 --username admin --password Harbor123

docker pull busybox

docker tag busybox:latest 172.23.107.18:1080/library/busybox:latest

docker push 172.23.107.18:1080/library/busybox:latest

docker rmi 172.23.107.18:1080/library/busybox:latest

docker rmi busybox:latest

docker pull 172.23.107.18:1080/library/busybox:latest

Certificates

Environment Preparation

###############################################################

### Log in to 172.23.107.18 via SSH

###############################################################

mkdir -p /opt/app/cfssl-r1.2/{bin,ca}

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /opt/app/cfssl-r1.2/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /opt/app/cfssl-r1.2/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /opt/app/cfssl-r1.2/bin/cfssl-certinfo

ln -s /opt/app/cfssl-r1.2 /usr/local/cfssl && cd /usr/local/cfssl

chmod +x /usr/local/cfssl/bin/{cfssl,cfssljson,cfssl-certinfo}

vim /etc/profile

###############################################################

# System Env

export PATH=$PATH:/usr/local/cfssl/bin

###############################################################

source /etc/profile

# Create the signing configuration used when issuing certificates

cat > ca/ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"etcd": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

},

"kubernetes": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

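Notes:

- ca-config.json can define multiple profiles, each with its own expiry, usages and other parameters; a specific profile is chosen later when a certificate is signed;

- signing: the certificate may be used to create digital signatures. This usage does not make it a CA; the root certificate gets CA=TRUE because it is generated with cfssl gencert -initca;

- server auth: the certificate may be used by a TLS server, i.e. clients use it to verify the certificate presented by the server;

- client auth: the certificate may be used by a TLS client, i.e. servers use it to verify the certificate presented by the client;

- expiry: validity period; 175200h is 20 years.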
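The expiry values are given in hours. This small shell sketch (bash or dash) checks the 20-year figure by converting between hours and years. It assumes 365-day years and ignores leap days. The helper names are hypothetical and not part of cfssl.

```shell
#!/bin/sh
# Hypothetical helpers for reading cfssl expiry values.
# Assumes 365-day years (leap days ignored) and whole-hour values like "175200h".

hours_to_years() {
  local hours=${1%h}            # strip the trailing "h"
  echo $(( hours / 24 / 365 ))
}

years_to_hours() {
  echo "$(( $1 * 365 * 24 ))h"
}

hours_to_years 175200h   # expiry used in ca-config.json and ca-csr.json
years_to_hours 20
```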

Self-Signed Root CA Certificate

# Create the certificate signing request (CSR) file

cat > ca/ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "k8s",

"OU": "System"

}],

"ca": {

"expiry": "175200h"

}

}

EOF

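Notes:

- "CN": Common Name. kube-apiserver extracts this field from a certificate and uses it as the request's user name (User Name); browsers use it to check whether a website is legitimate;

- "O": Organization. kube-apiserver extracts this field and uses it as the group (Group) of the requesting user.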

# Generate the certificate

cfssl gencert -initca ca/ca-csr.json | cfssljson -bare /usr/local/cfssl/ca/ca

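Note: this produces a CSR file ca.csr, a certificate file ca.pem and a private key file ca-key.pem. cfssl prints the certificate and key as JSON, and cfssljson reads that JSON from the pipe and writes the files using the name prefix given by -bare.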

Installing etcd

etcd Installation

###############################################################

### Log in to 172.23.101.47, 172.23.101.48 and 172.23.101.49 via SSH

###############################################################

wget https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz -P /opt/src

tar -zxvf /opt/src/etcd-v3.4.13-linux-amd64.tar.gz -C /opt/app/

ln -s /opt/app/etcd-v3.4.13-linux-amd64 /usr/local/etcd && cd /usr/local/etcd

mkdir -p /usr/local/etcd/{bin,conf} /opt/data/etcd/ca && mv /usr/local/etcd/{etcd,etcdctl} /usr/local/etcd/bin/

vim /etc/profile

#####################################################################

# Etcd Env

export ETCD_HOME=/usr/local/etcd

export PATH=$PATH:$ETCD_HOME/bin

#####################################################################

source /etc/profile

etcd -version

# Write the configuration file

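cat > conf/etcd.conf <<EOF

# Three members are planned, named etcd1, etcd2 and etcd3

# This file is read as a systemd EnvironmentFile, which only treats lines starting with # as comments, so keep comments on their own lines

# [member]

# Member name: use the name assigned to this host in ETCD_INITIAL_CLUSTER

ETCD_NAME=etcd1

# Data directory

ETCD_DATA_DIR="/opt/data/etcd"

# Address for communication between cluster members

ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"

# Address serving client requests

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

# [cluster]

# Peer URL of this host as advertised to the other members (replace with this host's IP)

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://[xxx.xxx.xxx.xxx]:2380"

# Cluster token

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

# Client URL of this host as advertised to clients (replace with this host's IP)

ETCD_ADVERTISE_CLIENT_URLS="http://[xxx.xxx.xxx.xxx]:2379"

ETCD_INITIAL_CLUSTER="etcd1=http://172.23.101.47:2380,etcd2=http://172.23.101.48:2380,etcd3=http://172.23.101.49:2380"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_ENABLE_V2="true"

EOF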
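ETCD_NAME and the two advertise URLs differ on every member. Rendering the file from the host's IP may therefore be less error-prone than editing it by hand. The shell sketch below uses a hypothetical helper, not part of etcd. It looks up the member name in ETCD_INITIAL_CLUSTER and prints an etcd.conf with the same settings as above, assuming the three member IPs used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: render etcd.conf for one member of the static cluster.
# The member name is looked up in the ETCD_INITIAL_CLUSTER string by peer URL.
CLUSTER="etcd1=http://172.23.101.47:2380,etcd2=http://172.23.101.48:2380,etcd3=http://172.23.101.49:2380"

render_etcd_conf() {
  local ip=$1 name
  # One "name=url" pair per line; print the name whose URL is this host's peer URL.
  name=$(printf '%s\n' "$CLUSTER" | tr ',' '\n' |
    awk -F= -v url="http://$ip:2380" '$2 == url { print $1 }')
  if [ -z "$name" ]; then
    echo "no etcd member is defined for $ip" >&2
    return 1
  fi
  # Comments stay on their own lines because the file is read as a systemd EnvironmentFile.
  cat <<EOF
# [member]
ETCD_NAME=$name
ETCD_DATA_DIR="/opt/data/etcd"
ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
# [cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$ip:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://$ip:2379"
ETCD_INITIAL_CLUSTER="$CLUSTER"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_ENABLE_V2="true"
EOF
}

# Example (on node2): render_etcd_conf 172.23.101.48 > /usr/local/etcd/conf/etcd.conf
```

The helper refuses IPs that are not in the cluster list, so a typo fails loudly instead of producing a member with a wrong name.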

cat > /usr/lib/systemd/system/etcd.service <<EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

WorkingDirectory=/opt/data/etcd

EnvironmentFile=-/usr/local/etcd/conf/etcd.conf

ExecStart=/usr/local/etcd/bin/etcd

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable etcd.service

systemctl start etcd.service

# Verify

etcdctl member list

etcdctl --endpoints="http://172.23.101.47:2379,http://172.23.101.48:2379,http://172.23.101.49:2379" endpoint health

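Notes:

- Start all members in quick succession when bringing the cluster up. The unit uses Type=notify, so systemctl start blocks until the member has joined a cluster with a quorum. Only then does systemctl return and systemctl status etcd.service report the service as running; if the other members are not started in time, the command blocks until it times out.

- flannel talks to etcd through the v2 API, while Kubernetes uses the v3 API. To stay compatible with flannel, the v2 API is enabled by setting ETCD_ENABLE_V2="true" in the configuration file.

- As of etcd 3.4, ETCDCTL_API=3 (for etcdctl) and --enable-v2=false (for etcd) are the defaults. To use the v2 API, set the ETCDCTL_API environment variable when running etcdctl, e.g. ETCDCTL_API=2 etcdctl.

- Data can be written to etcd 3 through both the v2 and the v3 API, but it must be read back through the same API version it was written with:

  - ETCDCTL_API=2 etcdctl ls /

  - ETCDCTL_API=3 etcdctl get / --prefix --keys-only

- etcd 3.4 reads its parameters from environment variables automatically, so a parameter set in the EnvironmentFile must not be repeated as a flag in ExecStart; use one or the other. Setting both triggers an error similar to: "etcd: conflicting environment variable "ETCD_NAME" is shadowed by corresponding command-line flag (either unset environment variable or disable flag)"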


Installing etcdkeeper (Recommended)

###############################################################

### Log in to 172.23.107.18 via SSH

###############################################################

wget https://github.com/evildecay/etcdkeeper/releases/download/v0.7.6/etcdkeeper-v0.7.6-linux_x86_64.zip -P /opt/src

unzip /opt/src/etcdkeeper-v0.7.6-linux_x86_64.zip -d /opt/app/

ln -s /opt/app/etcdkeeper /usr/local/etcdkeeper

cd /usr/local/etcdkeeper && chmod +x etcdkeeper

cat > /usr/lib/systemd/system/etcdkeeper.service <<EOF

[Unit]

Description=etcdkeeper service

After=network.target

[Service]

Type=simple

ExecStart=/usr/local/etcdkeeper/etcdkeeper -h 172.23.107.18 -p 8800

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

PrivateTmp=true

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl start etcdkeeper.service

systemctl enable etcdkeeper.service

Access URL: http://172.23.107.18:8800/etcdkeeper

Installing Flannel

Log in to each host

ssh -p 60028 root@172.23.107.18

ssh -p 60028 root@172.23.101.47

ssh -p 60028 root@172.23.101.48

ssh -p 60028 root@172.23.101.49

Prepare the Environment

mkdir -p /opt/app/flannel-v0.13.0-linux-amd64/{bin,conf,ca}

wget https://github.com/coreos/flannel/releases/download/v0.13.0/flannel-v0.13.0-linux-amd64.tar.gz -P /opt/src

tar -zxvf /opt/src/flannel-v0.13.0-linux-amd64.tar.gz -C /opt/app/flannel-v0.13.0-linux-amd64

ln -s /opt/app/flannel-v0.13.0-linux-amd64 /usr/local/flannel && cd /usr/local/flannel

mv ./{flanneld,mk-docker-opts.sh} ./bin

Register the Network Range in etcd

ETCDCTL_API=2 etcdctl set /coreos.com/network/config '{"Network": "172.17.0.0/16", "SubnetMin": "172.17.0.0", "SubnetMax": "172.17.255.0", "SubnetLen": 24, "Backend": {"Type": "vxlan", "DirectRouting": true}}'

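flannel uses network addresses in CIDR notation to configure networking for Pods:

- Network: the flannel address pool. The configuration above makes the whole flannel network 172.17.0.0 with netmask 255.255.0.0 (172.17.0.0/16);

- SubnetMin, SubnetMax: the range from which per-host subnets are allocated. With SubnetMin=172.17.0.0 and SubnetMax=172.17.255.0, each host leases one subnet inside this range, and allocation never goes beyond it, for example:

  - node(1): docker0 subnet 172.17.0.0/24

  - node(2): docker0 subnet 172.17.1.0/24

  - ...

  - node(n): docker0 subnet 172.17.255.0/24

- SubnetLen: the prefix length of the subnet assigned to each host's docker0. With 24, each node hands out Pod IPs from 172.17.x.0/24;

- Backend: how packets are forwarded between hosts. The default is udp; host-gw performs best but cannot cross host networks.

  - VxLAN: a tunnel protocol that carries packets over UDP. flannel has to encapsulate and decapsulate every packet, so performance is somewhat lower.

  - Host-gw: uses plain layer-3 routing directly, but cannot cross host networks (all hosts must be on the same layer-2 network).

  - DirectRouting: when set to true, hosts on the same subnet talk over direct routes, and traffic between different subnets automatically falls back to VxLAN.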
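To make the numbers concrete, a /16 Network split into /24 subnets between SubnetMin and SubnetMax yields 256 per-node subnets. Each of them has 254 usable addresses. The shell sketch below checks that arithmetic using only shell arithmetic. The helper names are hypothetical and not part of flannel.

```shell
#!/bin/sh
# Worked arithmetic for the flannel network config above (illustrative helpers).

# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Number of subnets of prefix length $3 between SubnetMin ($1) and SubnetMax ($2), inclusive.
subnet_count() {
  local min max
  min=$(ip_to_int "$1")
  max=$(ip_to_int "$2")
  echo $(( (max - min) / (1 << (32 - $3)) + 1 ))
}

# Addresses in one subnet minus the network and broadcast addresses.
hosts_per_subnet() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

subnet_count 172.17.0.0 172.17.255.0 24   # maximum number of node subnets
hosts_per_subnet 24                       # addresses per node; docker0 takes one of them
```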

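The cluster therefore contains three networks:

- node network: the network over which the "physical" nodes of the Kubernetes cluster (master and minions) communicate;

- service network: the "network" formed by the cluster's Services. Traffic is routed only on the local node through iptables; Pods on the same node need no further translation, while cross-node traffic ultimately goes through flannel;

- flannel network: the Pod network, over which Pods in the cluster communicate with each other.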
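These three networks must not overlap, and the same rule applies to --service-cluster-ip-range further below. The shell sketch below checks this for the ranges used in this guide. The node network 172.23.0.0/16 is an assumption, since the guide only lists host IPs, and the helpers are hypothetical.

```shell
#!/bin/sh
# Illustrative check that the node, Service and Pod CIDRs do not overlap.
# Assumption: the node network is 172.23.0.0/16 (this guide only lists host IPs).

ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print the first and last address of a CIDR block as integers.
cidr_range() {
  local ip=${1%/*} len=${1#*/} size start
  size=$(( 1 << (32 - len) ))
  start=$(( $(ip_to_int "$ip") / size * size ))   # clear the host bits
  echo "$start $(( start + size - 1 ))"
}

# Exit status 0 if the two CIDR blocks share at least one address.
cidr_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

NODE_CIDR=172.23.0.0/16      # assumed
SERVICE_CIDR=192.168.0.0/16  # --service-cluster-ip-range
POD_CIDR=172.17.0.0/16       # flannel Network / --cluster-cidr

for pair in "$NODE_CIDR $SERVICE_CIDR" "$NODE_CIDR $POD_CIDR" "$SERVICE_CIDR $POD_CIDR"; do
  if cidr_overlap $pair; then
    echo "overlap: $pair"
  else
    echo "ok: $pair"
  fi
done
```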

Write the Configuration File

cat > conf/flannel.conf <<EOF

FLANNELD_FLAGS="\

--etcd-endpoints=http://172.23.101.47:2379,http://172.23.101.48:2379,http://172.23.101.49:2379 \

--etcd-prefix=/coreos.com/network \

"

EOF

Write the Unit File

cat > /usr/lib/systemd/system/flanneld.service <<EOF

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=-/usr/local/flannel/conf/flannel.conf

ExecStart=/usr/local/flannel/bin/flanneld --ip-masq \$FLANNELD_FLAGS

ExecStartPost=/usr/local/flannel/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

# Start the service

systemctl daemon-reload

systemctl start flanneld.service

systemctl enable flanneld.service

Configure Docker to Use the flannel Subnet

vim /usr/lib/systemd/system/docker.service

####################################################################################

[Unit]

# Leave unrelated settings unchanged

# ...

[Service]

# Leave unrelated settings unchanged

# ...

EnvironmentFile=-/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock $DOCKER_NETWORK_OPTIONS

# Leave unrelated settings unchanged

# ...

[Install]

# Leave unrelated settings unchanged

# ...

####################################################################################

systemctl daemon-reload

systemctl restart docker.service
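The shell sketch below mimics the core of mk-docker-opts.sh in simplified form, to show what ends up in DOCKER_NETWORK_OPTIONS. It is an illustration, not the real script, which supports more options. The sample subnet values are hypothetical. FLANNEL_SUBNET, FLANNEL_MTU and FLANNEL_IPMASQ are the variables flanneld writes to its subnet file.

```shell
#!/bin/sh
# Simplified illustration of what mk-docker-opts.sh derives from flannel's
# subnet file; NOT the real script.
# The function body runs in a subshell so sourced variables do not leak.
flannel_to_docker_opts() (
  . "$1"                                  # defines FLANNEL_SUBNET, FLANNEL_MTU, ...
  opts="--bip=${FLANNEL_SUBNET}"          # docker0 gets this node's flannel subnet
  if [ "${FLANNEL_IPMASQ:-false}" = "true" ]; then
    opts="$opts --ip-masq=false"          # flanneld already masquerades (--ip-masq)
  fi
  if [ -n "${FLANNEL_MTU:-}" ]; then
    opts="$opts --mtu=${FLANNEL_MTU}"     # match the overlay MTU
  fi
  echo "DOCKER_NETWORK_OPTIONS=\"$opts\""
)

# Example with a hypothetical subnet file
sample=$(mktemp)
cat > "$sample" <<'EOF'
FLANNEL_NETWORK=172.17.0.0/16
FLANNEL_SUBNET=172.17.5.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
EOF
flannel_to_docker_opts "$sample"
rm -f "$sample"
```

After the restart, docker0 should carry an address inside the subnet that flanneld leased for this node.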

Installing Kubernetes Services

Base Installation

###############################################################

### Run on all hosts

###############################################################

# Note: kubernetes-server-linux-amd64.tar.gz contains every file a Kubernetes cluster needs, so the node and client packages do not have to be downloaded separately

wget https://dl.k8s.io/v1.18.12/kubernetes-server-linux-amd64.tar.gz -P /opt/src

tar -zxvf /opt/src/kubernetes-server-linux-amd64.tar.gz -C /opt/app/

ln -s /opt/app/kubernetes /usr/local/kubernetes && cd /usr/local/kubernetes

mkdir /usr/local/kubernetes/{bin,conf,ca,logs} /opt/data/kubelet

cp server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubelet,kube-proxy,kubectl} bin/

cp /usr/local/cfssl/ca/ca.pem ./ca/

# Configure environment variables

vim /etc/profile

####################################################################################

export PATH=$PATH:/usr/local/kubernetes/bin

####################################################################################

source /etc/profile

Deploying kube-apiserver on the Master

Log in to the host

ssh -p 60028 root@172.23.107.18

cd /usr/local/kubernetes

Issue the API Server's Server Certificate

Create the CSR file

cat > ca/kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.0.1",

"172.23.107.18",

"localhost",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "k8s",

"OU": "System"

}]

}

EOF

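Note: when the hosts field is not empty, it lists the IPs or domain names authorized to use this certificate; a node or service outside this list that uses the certificate gets a certificate mismatch error. Because this certificate will be used by the Kubernetes master cluster, list the IPs of all master nodes (if there are several) as well as the first IP of the service network (normally the first IP of the service-cluster-ip-range passed to kube-apiserver, e.g. 192.168.0.1).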
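The first Service IP is the network address of the Service CIDR plus one. The in-cluster `kubernetes` Service gets this address, which is why it has to appear in hosts. The shell sketch below computes it. The helper names are hypothetical.

```shell
#!/bin/sh
# Illustrative helper: the first usable IP of the Service CIDR, which must be
# listed in the apiserver certificate's "hosts".

ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Network address of the CIDR (host bits cleared) plus one.
first_service_ip() {
  local ip=${1%/*} len=${1#*/} size net
  size=$(( 1 << (32 - len) ))
  net=$(( $(ip_to_int "$ip") / size * size ))
  int_to_ip $(( net + 1 ))
}

first_service_ip 192.168.0.0/16   # value of --service-cluster-ip-range
```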

Issue the certificate

cfssl gencert -ca=/usr/local/cfssl/ca/ca.pem -ca-key=/usr/local/cfssl/ca/ca-key.pem -config=/usr/local/cfssl/ca/ca-config.json -profile=kubernetes ca/kubernetes-csr.json | cfssljson -bare ca/kubernetes

Generate the Service Account Token Key Pair

Services, resources and programs acting as clients prove their identity with a Service Account token. In Kubernetes, the Controller Manager signs Service Account tokens, which requires an RSA private key configured in the Controller Manager with --service-account-private-key-file. When a client presents a token signed with that key to the apiserver, the apiserver verifies it with the matching public key, configured with --service-account-key-file.

Generate the key pair used to sign ServiceAccount tokens

# Generate the private key

openssl genrsa -out ca/service-account-pri.pem 2048

# Derive the public key from the private key

openssl rsa -pubout -in ca/service-account-pri.pem -out ca/service-account-pub.pem

Create the Token User for TLS Bootstrapping

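The apiserver exposes a certificate-signing API to components across the cluster. The service is actually provided by the Controller Manager, but the apiserver exposes the interface. Using it requires a user with permission to request certificates, and that user authenticates to the apiserver with a token (token auth).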

# Token used by kubelet for TLS bootstrapping

export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

cat > ca/token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:bootstrappers"

EOF
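token.csv uses the column layout token,user,uid,"group". The shell sketch below generates a token with the same pipeline as above and sanity-checks a line before it is written. check_token_line is a hypothetical helper. It only validates the first three columns, against this guide's user kubelet-bootstrap and uid 10001.

```shell
#!/bin/sh
# Same pipeline as above: 16 random bytes printed as 32 hex characters.
make_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Hypothetical check: 32 lowercase hex characters, then the user and uid used in this guide.
check_token_line() {
  printf '%s\n' "$1" | awk -F, '
    $1 ~ /^[0-9a-f]+$/ && length($1) == 32 && $2 == "kubelet-bootstrap" && $3 == "10001" { ok = 1 }
    END { exit !ok }'
}

token=$(make_token)
line="${token},kubelet-bootstrap,10001,\"system:bootstrappers\""
if check_token_line "$line"; then
  echo "token.csv line looks valid"
fi
```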

Create the Bootstrap kubeconfig

# Set the cluster entry

kubectl config set-cluster kubernetes --certificate-authority=/usr/local/cfssl/ca/ca.pem --embed-certs=true --server=https://172.23.107.18:6443 --kubeconfig=conf/bootstrap.kubeconfig

# Set the user entry

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=conf/bootstrap.kubeconfig

# Set the context

kubectl config set-context kubelet-bootstrap --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=conf/bootstrap.kubeconfig

# Switch to the context

kubectl config use-context kubelet-bootstrap --kubeconfig=conf/bootstrap.kubeconfig

# Show the result

kubectl config view --kubeconfig=conf/bootstrap.kubeconfig

Write the API Server Configuration File

cat > conf/kube-apiserver.conf <<EOF

KUBE_APISERVER_FLAGS="\

--insecure-bind-address=127.0.0.1 \

--insecure-port=8080 \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--advertise-address=172.23.107.18 \

--allow-privileged=true \

--service-cluster-ip-range=192.168.0.0/16 \

--service-node-port-range=30000-32767 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,DefaultIngressClass,ResourceQuota \

--alsologtostderr=true \

--log-dir=/usr/local/kubernetes/logs \

--v=2 \

--token-auth-file=/usr/local/kubernetes/ca/token.csv \

--cert-dir=/usr/local/kubernetes/ca \

--tls-cert-file=/usr/local/kubernetes/ca/kubernetes.pem \

--tls-private-key-file=/usr/local/kubernetes/ca/kubernetes-key.pem \

--client-ca-file=/usr/local/cfssl/ca/ca.pem \

--service-account-key-file=/usr/local/kubernetes/ca/service-account-pub.pem \

--kubelet-certificate-authority=/usr/local/cfssl/ca/ca.pem \

--kubelet-client-certificate=/usr/local/kubernetes/ca/kubernetes.pem \

--kubelet-client-key=/usr/local/kubernetes/ca/kubernetes-key.pem \

--kubelet-https=true \

--storage-backend=etcd3 \

--etcd-servers=http://172.23.101.47:2379,http://172.23.101.48:2379,http://172.23.101.49:2379 \

--etcd-prefix=/registry \

"

EOF

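- --insecure-bind-address: IP address for insecure (non-HTTPS) serving; may be removed in a future release; default 127.0.0.1

- --insecure-port: port for insecure (non-HTTPS) serving; may be removed in a future release; default 8080

- --bind-address: IP address for secure HTTPS serving; default 0.0.0.0

- --secure-port: port for secure HTTPS serving; default 6443

- --advertise-address: IP address on which the apiserver is advertised to cluster members; it must be reachable by the rest of the cluster. Defaults to --bind-address

- --allow-privileged: if true, allow privileged containers; default false

- --service-cluster-ip-range: CIDR range from which Service cluster IPs are allocated; must not overlap with any Pod range

- --service-node-port-range: port range reserved for NodePort Services (inclusive on both ends); default 30000-32767

- --enable-admission-plugins: admission plugins enabled in addition to the default ones. The default set is NamespaceLifecycle, LimitRanger, ServiceAccount, TaintNodesByCondition, Priority, DefaultTolerationSeconds, DefaultStorageClass, StorageObjectInUseProtection, PersistentVolumeClaimResize, RuntimeClass, CertificateApproval, CertificateSigning, CertificateSubjectRestriction, DefaultIngressClass, MutatingAdmissionWebhook, ValidatingAdmissionWebhook, ResourceQuota (it varies between versions; check kube-apiserver -h)

- --alsologtostderr: log to standard error as well as to files

- --log-dir: directory for log files

- --v: log verbosity level

- --token-auth-file: if set, the file used to secure the API server's secure port via token authentication

- --cert-dir: directory holding the TLS certificates; ignored if --tls-cert-file and --tls-private-key-file are provided; default /var/run/kubernetes

- --tls-cert-file: path to the HTTPS x509 certificate, used together with --tls-private-key-file. If HTTPS serving is enabled but --tls-cert-file and --tls-private-key-file are not provided, a self-signed certificate and its private key are generated in the directory set by --cert-dir

- --tls-private-key-file: path to the private key of the certificate set by --tls-cert-file

- --client-ca-file: if set, client certificates are verified against this CA certificate

- --service-account-key-file: public key file used to verify Service Account tokens. It must pair with the private key set by kube-controller-manager's --service-account-private-key-file, which kube-controller-manager uses to sign the tokens. The file may contain multiple keys, and the flag may be given multiple times with different files. Defaults to the file set by --tls-private-key-file

- --kubelet-certificate-authority: path to the root certificate of the CA that issued the kubelets' serving certificates

- --kubelet-client-certificate: path to the client certificate the apiserver uses for the kubelet's secure port

- --kubelet-client-key: path to the client private key the apiserver uses for the kubelet's secure port

- --kubelet-https: whether to use HTTPS to connect to kubelets; default true

- --storage-backend: persistent storage backend; default etcd3

- --etcd-servers: etcd endpoints

- --etcd-prefix: prefix for all resource paths in etcd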
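Most flags above point at certificate and key files, and a single typo in a path keeps the service from starting. The shell sketch below is a hypothetical pre-flight check, not part of Kubernetes. It sources a *_FLAGS configuration file such as conf/kube-apiserver.conf and reports every `--flag=/absolute/path` whose path does not exist. It assumes the file is a shell-style VAR="..." assignment, as in this guide.

```shell
#!/bin/sh
# Hypothetical pre-flight check for the *.conf files used in this guide.
# Usage: check_flag_paths FILE VARIABLE
#   e.g. check_flag_paths /usr/local/kubernetes/conf/kube-apiserver.conf KUBE_APISERVER_FLAGS
# Exit status: 0 all paths exist, 1 some are missing, 2 the file could not be read.
check_flag_paths() {
  local conf=$1 var=$2 value flag path missing=0
  # Source the file in a subshell and print the named variable's value.
  value=$( . "$conf" && eval "printf '%s\n' \"\$$var\"" ) || return 2
  for flag in $value; do
    case $flag in
      --*=/*)                              # only flags whose value is an absolute path
        path=${flag#*=}
        if [ ! -e "$path" ]; then
          echo "missing: ${flag%%=*} -> $path"
          missing=1
        fi
        ;;
    esac
  done
  return $missing
}
```

The same check works for the controller manager and scheduler configuration files with KUBE_CONTROLLER_MANAGER_FLAGS and KUBE_SCHEDULER_FLAGS.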

Write the API Server Unit File

cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

After=etcd.service

Wants=etcd.service

[Service]

Type=notify

EnvironmentFile=-/usr/local/kubernetes/conf/kube-apiserver.conf

ExecStart=/usr/local/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_FLAGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Start the service

systemctl daemon-reload

systemctl start kube-apiserver.service

systemctl enable kube-apiserver.service

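# Verify on the master. The insecure port is bound to 127.0.0.1 (--insecure-bind-address), so it is not reachable at 172.23.107.18:8080; a healthy apiserver answers "ok"

curl http://127.0.0.1:8080/healthz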

Deploying kube-controller-manager on the Master

Log in to the host

ssh -p 60028 root@172.23.107.18

cd /usr/local/kubernetes

Issue the Controller Manager Certificate

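The certificate issued here serves both as the Controller Manager's server certificate and as its client certificate for authenticating to the apiserver, because both are issued by the same CA.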

Create the CSR file

cat > ca/kube-controller-manager-csr.json <<EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"172.23.107.18",

"localhost"

],

"names": [{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "system:kube-controller-manager",

"OU": "System"

}]

}

EOF

Note: the hosts list contains the IPs of all kube-controller-manager nodes. With CN set to system:kube-controller-manager and O set to system:kube-controller-manager, the built-in ClusterRoleBinding system:kube-controller-manager grants kube-controller-manager the permissions it needs.

Issue the certificate

cfssl gencert -ca=/usr/local/cfssl/ca/ca.pem -ca-key=/usr/local/cfssl/ca/ca-key.pem -config=/usr/local/cfssl/ca/ca-config.json -profile=kubernetes ca/kube-controller-manager-csr.json | cfssljson -bare ca/kube-controller-manager

Create the kubeconfig File

# Set the cluster entry

kubectl config set-cluster kubernetes --certificate-authority=/usr/local/cfssl/ca/ca.pem --embed-certs=true --server=https://127.0.0.1:6443 --kubeconfig=conf/kube-controller-manager.kubeconfig

# Set the user entry

kubectl config set-credentials system:kube-controller-manager --client-certificate=/usr/local/kubernetes/ca/kube-controller-manager.pem --client-key=/usr/local/kubernetes/ca/kube-controller-manager-key.pem --embed-certs=true --kubeconfig=conf/kube-controller-manager.kubeconfig

# Set the context

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=conf/kube-controller-manager.kubeconfig

# Switch to the context

kubectl config use-context system:kube-controller-manager --kubeconfig=conf/kube-controller-manager.kubeconfig

# Show the configuration

kubectl config view --kubeconfig=conf/kube-controller-manager.kubeconfig

Note: --embed-certs=true embeds the certificate contents directly in the kubeconfig file.

Write the Controller Manager Configuration File

cat > conf/kube-controller-manager.conf <<EOF

KUBE_CONTROLLER_MANAGER_FLAGS="\

--address=127.0.0.1 \

--port=10252 \

--bind-address=0.0.0.0 \

--secure-port=10257 \

--kubeconfig=/usr/local/kubernetes/conf/kube-controller-manager.kubeconfig \

--allocate-node-cidrs=true \

--cluster-cidr=172.17.0.0/16 \

--service-cluster-ip-range=192.168.0.0/16 \

--cluster-name=kubernetes \

--leader-elect=true \

--alsologtostderr=true \

--log-dir=/usr/local/kubernetes/logs \

--v=2 \

--cert-dir=/usr/local/kubernetes/ca/ \

--tls-cert-file=/usr/local/kubernetes/ca/kube-controller-manager.pem \

--tls-private-key-file=/usr/local/kubernetes/ca/kube-controller-manager-key.pem \

--client-ca-file=/usr/local/cfssl/ca/ca.pem \

--cluster-signing-cert-file=/usr/local/cfssl/ca/ca.pem \

--cluster-signing-key-file=/usr/local/cfssl/ca/ca-key.pem \

--experimental-cluster-signing-duration=87600h0m0s \

--feature-gates=RotateKubeletServerCertificate=true \

--service-account-private-key-file=/usr/local/kubernetes/ca/service-account-pri.pem \

--root-ca-file=/usr/local/cfssl/ca/ca.pem \

"

EOF

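- --address: IP address for insecure (non-HTTPS) serving; may be removed in a future release; default 0.0.0.0

- --port: port for insecure (non-HTTPS) serving; may be removed in a future release; default 10252

- --bind-address: IP address for secure HTTPS serving; default 0.0.0.0

- --secure-port: port for secure HTTPS serving; default 10257

- --kubeconfig: path to a kubeconfig file with authorization and master location information

- --master: overrides the master address in --kubeconfig (not set above)

- --allocate-node-cidrs: whether Pod CIDRs should be allocated and set on the cloud provider

- --cluster-cidr: CIDR range for Pods in the cluster; requires --allocate-node-cidrs to be true

- --service-cluster-ip-range: CIDR range for Services in the cluster; requires --allocate-node-cidrs to be true

- --cluster-name: instance prefix for the cluster; default kubernetes

- --leader-elect: when several controller-manager instances run, elect a leader before executing the main loop; default true

- --alsologtostderr: log to standard error as well as to files

- --log-dir: directory for log files

- --v: log verbosity level

- --cert-dir: directory holding the TLS certificates; ignored if --tls-cert-file and --tls-private-key-file are provided

- --tls-cert-file: path to the HTTPS x509 certificate, used together with --tls-private-key-file. If HTTPS serving is enabled but --tls-cert-file and --tls-private-key-file are not provided, a self-signed certificate and its private key are generated in the directory set by --cert-dir

- --tls-private-key-file: path to the private key of the certificate set by --tls-cert-file

- --client-ca-file: if set, any request presenting a client certificate signed by one of the authorities in this file is authenticated with an identity corresponding to the certificate's CommonName

- --cluster-signing-cert-file: CA root certificate used to issue certificates to other cluster components (kubelet); both the kubelet's serving certificate and its client certificate for the apiserver can be issued by this CA; default /etc/kubernetes/ca/ca.pem

- --cluster-signing-key-file: private key of that CA root certificate; default /etc/kubernetes/ca/ca.key

- --experimental-cluster-signing-duration: validity period of certificates issued to other components (kubelet); default 8760h0m0s

- --feature-gates=RotateKubeletServerCertificate=true: enables automatic rotation of kubelet serving certificates

- --service-account-private-key-file: private key used to sign Service Account tokens; must pair with the public key set by kube-apiserver's --service-account-key-file

- --root-ca-file: CA certificate placed into the Service Account token Secret, used to verify kube-apiserver's certificate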

Write the Controller Manager Unit File

cat > /usr/lib/systemd/system/kube-controller-manager.service <<EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=kube-apiserver.service

Requires=kube-apiserver.service

[Service]

EnvironmentFile=-/usr/local/kubernetes/conf/kube-controller-manager.conf

ExecStart=/usr/local/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_FLAGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Start the service

systemctl daemon-reload

systemctl start kube-controller-manager.service

systemctl enable kube-controller-manager.service

# Verify the service

kubectl get cs controller-manager -o yaml

Deploying kube-scheduler on the Master

Log in to the host

ssh -p 60028 root@172.23.107.18

cd /usr/local/kubernetes

Issue the Scheduler Certificate

Create the CSR file

cat > ca/kube-scheduler-csr.json <<EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"172.23.107.18",

"localhost"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "system:kube-scheduler",

"OU": "System"

}]

}

EOF

Note: the hosts list contains the IPs of all kube-scheduler nodes. With CN set to system:kube-scheduler and O set to system:kube-scheduler, the built-in ClusterRoleBinding system:kube-scheduler grants kube-scheduler the permissions it needs.

Issue the certificate

cfssl gencert -ca=/usr/local/cfssl/ca/ca.pem -ca-key=/usr/local/cfssl/ca/ca-key.pem -config=/usr/local/cfssl/ca/ca-config.json -profile=kubernetes ca/kube-scheduler-csr.json | cfssljson -bare ca/kube-scheduler

Create the kubeconfig File

# Set the cluster entry

kubectl config set-cluster kubernetes --certificate-authority=/usr/local/cfssl/ca/ca.pem --embed-certs=true --server=https://127.0.0.1:6443 --kubeconfig=conf/kube-scheduler.kubeconfig

# Set the user entry

kubectl config set-credentials system:kube-scheduler --client-certificate=/usr/local/kubernetes/ca/kube-scheduler.pem --client-key=/usr/local/kubernetes/ca/kube-scheduler-key.pem --embed-certs=true --kubeconfig=conf/kube-scheduler.kubeconfig

# Set the context

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=conf/kube-scheduler.kubeconfig

# Switch to the context

kubectl config use-context system:kube-scheduler --kubeconfig=conf/kube-scheduler.kubeconfig

# Show the configuration

kubectl config view --kubeconfig=conf/kube-scheduler.kubeconfig

Write the Scheduler Configuration File

cat > conf/kube-scheduler.conf <<EOF

KUBE_SCHEDULER_FLAGS="\

--address=127.0.0.1 \

--port=10251 \

--bind-address=0.0.0.0 \

--secure-port=10259 \

--kubeconfig=/usr/local/kubernetes/conf/kube-scheduler.kubeconfig \

--leader-elect=true \

--alsologtostderr=true \

--log-dir=/usr/local/kubernetes/logs \

--v=2 \

--cert-dir=/usr/local/kubernetes/ca \

--client-ca-file=/usr/local/cfssl/ca/ca.pem \

--tls-cert-file=/usr/local/kubernetes/ca/kube-scheduler.pem \

--tls-private-key-file=/usr/local/kubernetes/ca/kube-scheduler-key.pem \

"

EOF

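- --address: IP address for insecure (non-HTTPS) serving; may be removed in a future release; default 0.0.0.0

- --port: port for insecure (non-HTTPS) serving; may be removed in a future release; default 10251

- --bind-address: IP address for secure HTTPS serving; default 0.0.0.0

- --secure-port: port for secure HTTPS serving; default 10259

- --kubeconfig: path to a kubeconfig file with authorization and master location information

- --leader-elect: when several kube-scheduler instances run, elect a leader before executing the main loop; default true

- --alsologtostderr: log to standard error as well as to files

- --log-dir: directory for log files

- --v: log verbosity level

- --cert-dir: directory holding the TLS certificates; ignored if --tls-cert-file and --tls-private-key-file are provided

- --client-ca-file: if set, any request presenting a client certificate signed by one of the authorities in client-ca-file is authenticated with an identity corresponding to the certificate's CommonName

- --tls-cert-file: file containing the default HTTPS x509 certificate (the CA certificate, if any, concatenated after the server certificate). If HTTPS serving is enabled and --tls-cert-file and --tls-private-key-file are not provided, a self-signed certificate and key are generated for the public address and saved to the directory set by --cert-dir

- --tls-private-key-file: file containing the default x509 private key matching --tls-cert-file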

Write the Scheduler Unit File

cat > /usr/lib/systemd/system/kube-scheduler.service <<EOF

[Unit]

Description=Kubernetes Scheduler Plugin

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=kube-apiserver.service

Requires=kube-apiserver.service

[Service]

EnvironmentFile=-/usr/local/kubernetes/conf/kube-scheduler.conf

ExecStart=/usr/local/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_FLAGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Start the service

systemctl daemon-reload

systemctl start kube-scheduler.service

systemctl enable kube-scheduler.service

# Verify the service

kubectl get cs scheduler -o yaml
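Besides `kubectl get cs`, you can probe the scheduler's health endpoints directly on the two ports configured above: 10251 for plain HTTP and 10259 for HTTPS. The sketch below assumes curl is installed on the master, and the helper name `check_healthz` is hypothetical. Whether the HTTPS endpoint answers without credentials depends on the scheduler's authentication and authorization flags, so treat that usage line as an assumption.

```bash
# Hypothetical helper: succeed only if the endpoint answers with the body "ok".
check_healthz() {
  local url="$1" body
  # -s: silent, -k: skip TLS verification (local check only), --max-time: never hang
  body="$(curl -sk --max-time 5 "$url")" || return 1
  [ "$body" = "ok" ]
}

# Usage on the master (ports taken from conf/kube-scheduler.conf):
# check_healthz http://127.0.0.1:10251/healthz && echo "insecure port healthy"
# check_healthz https://127.0.0.1:10259/healthz && echo "secure port healthy"
```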

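Permission-related configuration

Log in to the host

ssh -p 60028 root@172.23.107.18

cd /usr/local/kubernetes

Issue the cluster administrator certificate

Edit the certificate signing request configuration file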

cat > ca/admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "HuBei",

"L": "WuHan",

"O": "system:masters",

"OU": "System"

}]

}

EOF

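Notes:

kube-apiserver then uses RBAC to authorize client requests (from kubelet, kube-proxy, Pods and so on);

kube-apiserver predefines several RoleBindings for RBAC. For example, the ClusterRoleBinding cluster-admin binds the Group system:masters to the ClusterRole cluster-admin, which grants permission to call every kube-apiserver API;

O sets the certificate's Group to system:masters. When kubectl uses this certificate to access kube-apiserver, authentication succeeds because the certificate is signed by the CA. Because the certificate's group is the pre-authorized system:masters, kubectl is also granted access to all APIs;

Note: this admin certificate is used later to generate the administrator's kubeconfig file. RBAC is now the recommended way to control roles and permissions in Kubernetes. Kubernetes takes the certificate's CN field as the User and the O field as the Group

Sign the certificate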

cfssl gencert -ca=/usr/local/cfssl/ca/ca.pem -ca-key=/usr/local/cfssl/ca/ca-key.pem -config=/usr/local/cfssl/ca/ca-config.json -profile=kubernetes ca/admin-csr.json | cfssljson -bare ca/admin
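Kubernetes reads the User from the certificate's CN and the Group from its O. It is therefore worth confirming that the signed certificate really carries CN=admin and O=system:masters before building a kubeconfig from it. The sketch below assumes the openssl CLI is available. `show_cert_identity` is a hypothetical helper name.

```bash
# Print the subject in RFC 2253 form (e.g. "CN=admin,O=system:masters")
# and the expiry date of a PEM certificate.
show_cert_identity() {
  openssl x509 -in "$1" -noout -subject -enddate -nameopt RFC2253
}

# Usage on the master, after cfssl has written ca/admin.pem:
# show_cert_identity ca/admin.pem
```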

Generate the cluster administrator kubeconfig file

# Set the cluster

kubectl config set-cluster kubernetes --certificate-authority=/usr/local/cfssl/ca/ca.pem --embed-certs=true --server=https://127.0.0.1:6443 --kubeconfig=conf/admin.kubeconfig

# Set the user credentials

kubectl config set-credentials admin --client-certificate=/usr/local/kubernetes/ca/admin.pem --client-key=/usr/local/kubernetes/ca/admin-key.pem --embed-certs=true --kubeconfig=conf/admin.kubeconfig

# Set the context

kubectl config set-context admin --cluster=kubernetes --user=admin --kubeconfig=conf/admin.kubeconfig

# Switch to the context

kubectl config use-context admin --kubeconfig=conf/admin.kubeconfig

# View the resulting configuration

kubectl config view --kubeconfig=conf/admin.kubeconfig

# Set the environment variable

export KUBECONFIG=/usr/local/kubernetes/conf/admin.kubeconfig
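Every component kubeconfig in this guide is built with the same four `kubectl config` steps. The only differences are the user name, the certificate pair, the output file and the server address. Below is a minimal sketch of a helper that wraps them. The function name `make_kubeconfig` and the `KUBECTL` and `CA_FILE` override variables are hypothetical, and the default server and CA path are the ones used for admin above.

```bash
# Hypothetical helper wrapping the four "kubectl config" steps used for every component.
# KUBECTL may be set to "echo" to print the commands instead of running them.
make_kubeconfig() {
  local user="$1" cert="$2" key="$3" out="$4"
  local server="${5:-https://127.0.0.1:6443}"
  local ca="${CA_FILE:-/usr/local/cfssl/ca/ca.pem}"
  local kubectl="${KUBECTL:-kubectl}"

  # Cluster entry with the embedded CA certificate
  $kubectl config set-cluster kubernetes --certificate-authority="$ca" \
    --embed-certs=true --server="$server" --kubeconfig="$out" || return 1
  # User entry with the embedded client certificate and key
  $kubectl config set-credentials "$user" --client-certificate="$cert" \
    --client-key="$key" --embed-certs=true --kubeconfig="$out" || return 1
  # Context named after the user, then make it current
  $kubectl config set-context "$user" --cluster=kubernetes --user="$user" \
    --kubeconfig="$out" || return 1
  $kubectl config use-context "$user" --kubeconfig="$out"
}

# Usage, equivalent to the admin commands above:
# make_kubeconfig admin /usr/local/kubernetes/ca/admin.pem \
#   /usr/local/kubernetes/ca/admin-key.pem conf/admin.kubeconfig
```

Running it once with `KUBECTL=echo` shows the exact commands before anything is written.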

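Authorize the TLS bootstrap users

Permissions to request and renew certificates

The ClusterRoles that TLS bootstrapping uses to request the initial kubelet client certificate and to renew it already exist. They are system:certificates.k8s.io:certificatesigningrequests:nodeclient and system:certificates.k8s.io:certificatesigningrequests:selfnodeclient, so they do not need to be created. The ClusterRole for requesting and renewing the kubelet serving certificate, however, must be created manually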

cat > requests/approve-nodecert-requests.yaml <<EOF
# Define the permissions kubelets need to request certificates
########################################################################################
# Permission to request and renew the kubelet serving certificate; Kubernetes has no built-in role for this, so it is created manually
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeserver
rules:
- apiGroups:
  - "certificates.k8s.io"
  resources:
  - "certificatesigningrequests/selfnodeserver"
  verbs:
  - create
---
# Bind certificate request permissions to kubelet users
########################################################################################
# Allow bootstrap users to request the initial kubelet client certificate
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-client-auto-approve-csr
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
---
# Allow the system:nodes group to renew kubelet client certificates
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-client-auto-renewal-crt
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
---
# Allow the system:nodes group to renew kubelet serving certificates
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-server-auto-renewal-crt
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeserver
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
EOF

# Create the permissions

kubectl apply -f requests/approve-nodecert-requests.yaml

# Check

kubectl get ClusterRole,ClusterRoleBinding --all-namespaces

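Note: the system:nodes group was granted the selfnodeserver permission above. Even so, when testing renewal of an expired kubelet serving certificate, the CSR still had to be approved manually. Kubelet client certificate requests and renewals, by contrast, were approved automatically. This matches upstream behavior. The CSR approver built into kube-controller-manager only auto-approves kubelet client certificates, never serving certificates, so serving-certificate CSRs need manual approval (or a separate approver).

Manually approve CSRs

# List all CSRs

kubectl get csr

# Approve a CSR that has not been Issued yet

kubectl certificate approve [csr-name]

# Deny a CSR that has not been Issued yet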

kubectl certificate deny [csr-name]
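With three nodes, serving-certificate CSRs pile up quickly. Below is a minimal sketch that extracts the names of pending CSRs from the default `kubectl get csr` table so they can be reviewed and approved in one go. It assumes CONDITION is the last column, which holds for the default table output. The helper name `pending_csrs` is hypothetical. Only approve CSRs whose requestor you recognize.

```bash
# Read "kubectl get csr" output on stdin and print the names of CSRs still Pending.
# CONDITION is the last column, so the header line ("... CONDITION") never matches.
pending_csrs() {
  awk '$NF == "Pending" { print $1 }'
}

# Usage on the master: review the list first, then approve every pending CSR.
# kubectl get csr | pending_csrs
# kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```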

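Deploy kubelet on the nodes

Log in to the hosts

# Log in to 172.23.107.18 and copy the bootstrap kubeconfig and the CA certificate to each node

ssh -p 60028 root@172.23.107.18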

scp -P 60028 /usr/local/kubernetes/conf/bootstrap.kubeconfig root@172.23.101.47:/usr/local/kubernetes/conf/

scp -P 60028 /usr/local/cfssl/ca/ca.pem root@172.23.101.47:/usr/local/kubernetes/ca/

scp -P 60028 /usr/local/kubernetes/conf/bootstrap.kubeconfig root@172.23.101.48:/usr/local/kubernetes/conf/

scp -P 60028 /usr/local/cfssl/ca/ca.pem root@172.23.101.48:/usr/local/kubernetes/ca/

scp -P 60028 /usr/local/kubernetes/conf/bootstrap.kubeconfig root@172.23.101.49:/usr/local/kubernetes/conf/

scp -P 60028 /usr/local/cfssl/ca/ca.pem root@172.23.101.49:/usr/local/kubernetes/ca/
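The six scp commands above differ only in the node IP, so a loop is less error-prone when a node is added later. Below is a minimal sketch. The `NODES`, `SSH_PORT` and `RUN` variables and the function name are hypothetical, and like the original commands it assumes the target directories already exist on every node.

```bash
# Copy the bootstrap kubeconfig and the CA certificate to every worker node.
# Set RUN=echo for a dry run that only prints the scp commands.
NODES="${NODES:-172.23.101.47 172.23.101.48 172.23.101.49}"
SSH_PORT="${SSH_PORT:-60028}"

distribute_bootstrap_files() {
  local ip
  for ip in $NODES; do
    ${RUN:-} scp -P "$SSH_PORT" /usr/local/kubernetes/conf/bootstrap.kubeconfig \
      "root@${ip}:/usr/local/kubernetes/conf/" || return 1
    ${RUN:-} scp -P "$SSH_PORT" /usr/local/cfssl/ca/ca.pem \
      "root@${ip}:/usr/local/kubernetes/ca/" || return 1
  done
}

# Usage on the master:
# RUN=echo distribute_bootstrap_files   # dry run
# distribute_bootstrap_files            # copy for real
```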

# Log in to 172.23.101.47

ssh -p 60028 root@172.23.101.47

cd /usr/local/kubernetes

# Log in to 172.23.101.48

ssh -p 60028 root@172.23.101.48

cd /usr/local/kubernetes

# Log in to 172.23.101.49

ssh -p 60028 root@172.23.101.49

cd /usr/local/kubernetes

Edit the kubelet config file

cat > conf/kubelet.config <<EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
healthzBindAddress: 0.0.0.0
healthzPort: 10248
cgroupDriver: systemd
clusterDNS:
- 192.168.0.2
clusterDomain: cluster.local
resolvConf: /etc/resolv.conf
failSwapOn: false
featureGates:
  RotateKubeletServerCertificate: true
  RotateKubeletClientCertificate: true
rotateCertificates: true
serverTLSBootstrap: true
authentication:
  x509:
    clientCAFile: /usr/local/kubernetes/ca/ca.pem
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
EOF

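- address: IP address the kubelet serves on (0.0.0.0 for all IPv4 interfaces, "::" for all IPv6 interfaces); default 0.0.0.0

- port: local port the kubelet listens on; default 10250

- readOnlyPort: port on which the kubelet serves a read-only API without authentication or authorization (0 disables it); default 10255

- anonymousAuth (authentication.anonymous.enabled in the config file): when true, the kubelet server accepts anonymous requests. Requests not rejected by any other authentication method are treated as anonymous, with username system:anonymous and group system:unauthenticated (default true)

- cgroupDriver: driver the kubelet uses to manipulate cgroups on the host, either cgroupfs or systemd; default cgroupfs. It must match Docker's cgroup driver

- clientCaFile (authentication.x509.clientCAFile in the config file): if a client CA file is set, any request presenting a client certificate signed by one of the authorities in that file is authenticated with an identity corresponding to the certificate's CommonName

- clusterDNS: list of IP addresses of the in-cluster DNS servers, used only for Pods with "dnsPolicy=ClusterFirst". Note: all DNS servers in the list must serve the same set of records, otherwise name resolution in the cluster may not work correctly, and there is no guarantee which server is contacted. The address must also equal the clusterIP of the kube-dns Service created in the CoreDNS section, which lies inside the kube-apiserver Service CIDR

- clusterDomain: the cluster's domain. If set, the kubelet configures all containers to search this domain in addition to the host's search domains

- resolvConf: resolver configuration file used as the basis for container DNS configuration; default /etc/resolv.conf

- failSwapOn: when true, the kubelet refuses to start if swap is enabled on the host; default true

- featureGates: enables automatic rotation of the certificates the kubelet requests

- healthzBindAddress: IP address of the healthz server (0.0.0.0 for all IPv4 interfaces, :: for all IPv6 interfaces); default 127.0.0.1

- healthzPort: port of the local healthz endpoint (0 disables it); default 10248

- rotateCertificates: when the client certificate is about to expire, the kubelet automatically requests a new one from kube-apiserver and rotates to it

- rotateServerCertificates: automatically request a new serving certificate from kube-apiserver when the current one is about to expire. Requires the RotateKubeletServerCertificate feature gate and approval of the submitted CertificateSigningRequest objects; default false

- serverTLSBootstrap: enables serving-certificate bootstrapping. Instead of self-signing its serving certificate, the kubelet requests one from the certificates.k8s.io API, which requires an approver to approve the CSR. The RotateKubeletServerCertificate feature must be enabled; default false

- authentication:

  - x509.clientCAFile: path to a PEM-encoded certificate bundle. If set, any request presenting a client certificate signed by one of the authorities in the bundle is authenticated with a username taken from the certificate's CommonName and groups taken from its Organization.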
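As the cgroupDriver note says, the kubelet and Docker must use the same cgroup driver, and a mismatch typically shows up as a kubelet that keeps failing at startup. Below is a minimal pre-start check. It assumes the top-level `cgroupDriver: value` layout of the kubelet.config written above and uses Docker's `docker info --format '{{.CgroupDriver}}'` output. The function names are hypothetical.

```bash
# Print the cgroupDriver value from a KubeletConfiguration file (top-level "key: value" line).
kubelet_cgroup_driver() {
  awk -F': *' '$1 == "cgroupDriver" { print $2; exit }' "$1"
}

# Compare the kubelet setting with Docker's driver; both must match (systemd here).
check_cgroup_driver() {
  local kubelet_driver docker_driver
  kubelet_driver="$(kubelet_cgroup_driver "$1")"
  docker_driver="$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)"
  if [ -n "$kubelet_driver" ] && [ "$kubelet_driver" = "$docker_driver" ]; then
    echo "cgroup driver OK: $kubelet_driver"
  else
    echo "mismatch: kubelet=${kubelet_driver:-unset} docker=${docker_driver:-unknown}" >&2
    return 1
  fi
}

# Usage on each node: check_cgroup_driver /usr/local/kubernetes/conf/kubelet.config
```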

Edit the kubelet service startup configuration file

cat > conf/kubelet.conf <<EOF
KUBELET_FLAGS="\
--register-node=true \
--bootstrap-kubeconfig=/usr/local/kubernetes/conf/bootstrap.kubeconfig \
--cert-dir=/usr/local/kubernetes/ca \
--config=/usr/local/kubernetes/conf/kubelet.config \
--enable-server=true \
--hostname-override=172.23.101.47 \
--kubeconfig=/usr/local/kubernetes/conf/node.kubeconfig \
--log-dir=/usr/local/kubernetes/logs \
--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest \
--root-dir=/opt/data/kubelet \
--alsologtostderr=true \
--v=2 \
"
EOF

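- --register-node: register this node with the apiserver. Irrelevant if --kubeconfig is not provided, since the kubelet then has no apiserver to register with; default true

- --bootstrap-kubeconfig: path to a kubeconfig file used to obtain the kubelet's client certificate. If the file given by --kubeconfig does not exist, the bootstrap kubeconfig is used to request a client certificate from the API server. On success, a kubeconfig file referencing the generated client certificate and key is written to the path given by --kubeconfig. The client certificate and key files are stored in the directory given by --cert-dir.

- --cert-dir: directory holding the TLS certificates. Ignored if --tls-cert-file and --tls-private-key-file are set; default /var/lib/kubelet/pki

- --config: the kubelet loads its initial configuration from this file. The path may be absolute or relative; relative paths start from the kubelet's current working directory. Omit this flag to use the built-in defaults. Command-line flags override settings in this file

- --enable-server: enable the kubelet server; default true

- --hostname-override: if non-empty, use this string instead of the actual hostname as the node identity. If --cloud-provider is set, the cloud provider determines the node name (consult the provider's documentation on whether and how hostnames are used). Each kubelet's value must be globally unique, so the node's own IP address is used here. On 172.23.101.48 and 172.23.101.49, replace 172.23.101.47 with that node's IP

- --kubeconfig: path to a kubeconfig file specifying how to connect to the API server. Providing --kubeconfig enables API server mode; omitting it enables standalone mode

- --log-dir: if non-empty, write log files in this directory

- --pod-infra-container-image: the infrastructure image whose network and IPC namespaces are shared by all containers in a Pod. Only effective when the container runtime is docker; default k8s.gcr.io/pause:3.1

- --root-dir: root directory for kubelet files (such as mounted volumes); default /var/lib/kubelet

- --alsologtostderr: when true, log to stderr as well as to files

- --v: log verbosity level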
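Because `--hostname-override` must be unique per node, the kubelet.conf written above is only correct as-is on 172.23.101.47. Below is a minimal sketch that rewrites the flag in place on each node. By default it takes the first address reported by `hostname -I`, which is an assumption: set `NODE_IP` explicitly on hosts with several interfaces. The function name is hypothetical, and the same call works for any other flag file that carries `--hostname-override`.

```bash
# Rewrite --hostname-override in the given flag files to this node's IP.
# NODE_IP defaults to the first address from "hostname -I" (assumption; set it explicitly if unsure).
set_hostname_override() {
  local ip="${NODE_IP:-$(hostname -I | awk '{print $1}')}"
  [ -n "$ip" ] || { echo "cannot determine node IP" >&2; return 1; }
  # GNU sed in-place edit: replace whatever IP currently follows the flag
  sed -i "s/--hostname-override=[0-9.]*/--hostname-override=${ip}/" "$@"
}

# Usage on 172.23.101.48, for example:
# NODE_IP=172.23.101.48 set_hostname_override conf/kubelet.conf
```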

Edit the kubelet unit file

cat > /usr/lib/systemd/system/kubelet.service <<EOF

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=-/opt/data/kubelet

EnvironmentFile=-/usr/local/kubernetes/conf/kubelet.conf

ExecStart=/usr/local/kubernetes/bin/kubelet \$KUBELET_FLAGS

Restart=on-failure

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

# Start the service

systemctl daemon-reload

systemctl start kubelet.service

systemctl enable kubelet.service

# Verify the service (run on the master, where kubectl is configured)

kubectl get no -o yaml

Deploy kube-proxy on the nodes

Log in to the hosts

# Log in to 172.23.101.47

ssh -p 60028 root@172.23.101.47

cd /usr/local/kubernetes

# Log in to 172.23.101.48

ssh -p 60028 root@172.23.101.48

cd /usr/local/kubernetes

# Log in to 172.23.101.49

ssh -p 60028 root@172.23.101.49

cd /usr/local/kubernetes

Edit the kube-proxy service startup configuration file

cat > conf/kube-proxy.conf <<EOF
KUBE_PROXY_FLAGS="\
--bind-address=0.0.0.0 \
--healthz-bind-address=0.0.0.0:10256 \
--metrics-bind-address=0.0.0.0:10249 \
--cluster-cidr=172.17.0.0/16 \
--hostname-override=172.23.101.47 \
--kubeconfig=/usr/local/kubernetes/conf/node.kubeconfig \
--alsologtostderr=true \
--log-dir=/opt/data/kubernetes/kube-proxy/logs \
--v=2 \
"
EOF

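- --bind-address: IP address for the proxy server (0.0.0.0 for all IPv4 interfaces, :: for all IPv6 interfaces)

- --healthz-bind-address: IP address and port of the health check server ("0.0.0.0:10256" for all IPv4 interfaces, "[::]:10256" for all IPv6 interfaces); set empty to disable; default 0.0.0.0:10256

- --metrics-bind-address: IP address and port of the metrics server ("0.0.0.0:10249" for all IPv4 interfaces, "[::]:10249" for all IPv6 interfaces); set empty to disable; default 127.0.0.1:10249

- --cleanup: if true, clean up iptables and ipvs rules and exit

- --cleanup-ipvs: if true and --cleanup is specified, kube-proxy also flushes IPVS rules in addition to the normal cleanup; default true

- --cluster-cidr: CIDR range of the Pods in the cluster. When set, traffic sent to a Service cluster IP from outside this range is masqueraded, and traffic sent from Pods to an external LoadBalancer IP is redirected to the corresponding cluster IP

- --hostname-override: if non-empty, use this string as the identity instead of the actual hostname. Set it to the host's actual IP here, and change it on each node as for the kubelet

- --kubeconfig: path to the kubeconfig file with authorization information (the master location is set by the master flag)

- --master: address of the Kubernetes API server (overrides any value in kubeconfig)

Edit the kube-proxy unit file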

cat > /usr/lib/systemd/system/kube-proxy.service <<EOF

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.service

Requires=network.service

[Service]

EnvironmentFile=-/usr/local/kubernetes/conf/kube-proxy.conf

ExecStart=/usr/local/kubernetes/bin/kube-proxy \$KUBE_PROXY_FLAGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Start the service

systemctl daemon-reload

systemctl start kube-proxy.service

systemctl enable kube-proxy.service

Configure the K8s cluster

Configure node labels and taints

# Give each node a role label. Roles are special labels whose names must start with the prefix 'node-role.kubernetes.io/'

kubectl label node 172.23.107.18 node-role.kubernetes.io/master=

kubectl label node 172.23.101.47 node-role.kubernetes.io/node=

kubectl label node 172.23.101.48 node-role.kubernetes.io/node=

kubectl label node 172.23.101.49 node-role.kubernetes.io/node=

# Show the roles and all labels

kubectl get nodes --show-labels

kubectl describe node 172.23.107.18

# Taint the master node

kubectl taint node 172.23.107.18 node-role.kubernetes.io/master=:NoSchedule

# View the taints

kubectl describe node 172.23.107.18 | grep Taints
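The label commands above can be collapsed into a loop. Adding `--overwrite`, a standard `kubectl label` flag, makes the loop safe to re-run on nodes that are already labeled. Below is a minimal sketch. The function name and the `KUBECTL` override, which can be set to `echo` to preview the commands, are hypothetical.

```bash
# Apply the role labels used above; re-running is harmless thanks to --overwrite.
label_nodes() {
  local kubectl="${KUBECTL:-kubectl}" ip
  $kubectl label node 172.23.107.18 node-role.kubernetes.io/master= --overwrite || return 1
  for ip in 172.23.101.47 172.23.101.48 172.23.101.49; do
    $kubectl label node "$ip" node-role.kubernetes.io/node= --overwrite || return 1
  done
}

# Usage on the master: KUBECTL=echo label_nodes   (preview), then label_nodes
```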

Set the image registry account for the Service Account

# Create the registry credentials (username and password) in the given namespace

kubectl create secret docker-registry image-repo-test --docker-server=172.23.107.18:1080 --docker-username=testdev --docker-password=xxxx --docker-email=test@admin.com --namespace=default

# Set the default secret used to pull images in the given namespace

kubectl patch sa default --namespace=default -p '{"imagePullSecrets": [{"name": "image-repo-test"}]}'

# Alternatively, add the following to the Pod spec of the workload

imagePullSecrets:

- name: image-repo-test
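When a Pod still reports ImagePullBackOff, it helps to know what the secret actually holds. The sketch below rebuilds a `.dockerconfigjson` document offline. It assumes the layout kubectl uses for docker-registry secrets: `{"auths":{SERVER:{username,password,email,auth}}}`, where `auth` is base64 of `username:password`. The function name is hypothetical, and it does no JSON escaping, so it only suits credentials without quotes or backslashes.

```bash
# Build a .dockerconfigjson document offline (assumed kubectl layout; no JSON escaping).
dockerconfigjson() {
  local server="$1" user="$2" pass="$3" email="$4" auth
  # auth = base64("username:password"), without the line wrapping base64 may add
  auth="$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')"
  printf '{"auths":{"%s":{"username":"%s","password":"%s","email":"%s","auth":"%s"}}}\n' \
    "$server" "$user" "$pass" "$email" "$auth"
}

# Usage: dockerconfigjson 172.23.107.18:1080 testdev xxxx test@admin.com
# To see what kubectl itself would store, without touching the cluster:
# kubectl create secret docker-registry image-repo-test --docker-server=172.23.107.18:1080 \
#   --docker-username=testdev --docker-password=xxxx --docker-email=test@admin.com \
#   --dry-run=client -o yaml
```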

Deploy CoreDNS

ssh -p 60028 root@172.23.107.18

cd /usr/local/kubernetes/

# Edit the manifest for the CoreDNS objects

cat > requests/coredns.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations: # taint tolerations
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      - effect: "NoSchedule"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity: # affinity rules
        nodeAffinity: # node affinity
          requiredDuringSchedulingIgnoredDuringExecution: # hard (required) rule
            nodeSelectorTerms:
            - matchExpressions:
              - key: "node-role.kubernetes.io/master" # the node must carry this label key
                operator: "Exists"
        podAntiAffinity: # inter-Pod anti-affinity
          preferredDuringSchedulingIgnoredDuringExecution: # soft (preferred) rule
          - weight: 100 # rule weight
            podAffinityTerm:
              labelSelector:
                matchExpressions: # prefer nodes that do not already run a Pod labeled k8s-app=kube-dns
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.8.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.20.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
EOF

# Deploy CoreDNS

kubectl apply -f requests/coredns.yaml

# Check

kubectl get pods -n kube-system

kubectl get pods --all-namespaces

# Verify the DNS service

# First start a Pod

kubectl run busyboxplus-curl --image=radial/busyboxplus:curl -it

# Then resolve a name inside it

nslookup kubernetes.default

# Output like the following means CoreDNS is working. The Server address should be the kube-dns Service clusterIP, and both addresses depend on your cluster's Service CIDR

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local
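Pods only resolve cluster names if the kubelet's `clusterDNS` points at the kube-dns Service's `clusterIP`. As written, this guide's kubelet.config uses 192.168.0.2 while coredns.yaml uses 10.20.0.2, so pick one address inside the kube-apiserver Service CIDR and use it in both files. Below is a minimal sketch that compares the two files before rolling out. It assumes the YAML layout used in this guide (a `- IP` line right after `clusterDNS:`, and a single `clusterIP:` line). The function names are hypothetical.

```bash
# First clusterDNS entry of a KubeletConfiguration file.
kubelet_cluster_dns() {
  awk '/^clusterDNS:/ { found = 1; next }
       found && /^[ \t]*-/ { sub(/^[ \t]*-[ \t]*/, ""); print; exit }' "$1"
}

# clusterIP of the first Service in a manifest that sets one.
service_cluster_ip() {
  awk '$1 == "clusterIP:" { print $2; exit }' "$1"
}

# Succeed only if both files name the same DNS address.
check_dns_ip() {
  local dns ip
  dns="$(kubelet_cluster_dns "$1")"
  ip="$(service_cluster_ip "$2")"
  if [ -n "$dns" ] && [ "$dns" = "$ip" ]; then
    echo "OK: kubelet clusterDNS and kube-dns clusterIP are both $dns"
  else
    echo "MISMATCH: kubelet clusterDNS=${dns:-none}, kube-dns clusterIP=${ip:-none}" >&2
    return 1
  fi
}

# Usage: check_dns_ip conf/kubelet.config requests/coredns.yaml
```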

Deploy Ingress

Create the Namespace

cat > requests/ingress-namespaces.yaml <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
EOF

kubectl apply -f requests/ingress-namespaces.yaml

Deploy the Default Backend

The Default Backend receives every request that matches no Ingress rule and answers it with a 404 page

ssh -p 60028 root@172.23.107.18

cd /usr/local/kubernetes/

# Edit the workload manifest

cat > requests/ingress-defaultbackend.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: default-http-backend
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
spec:
  replicas: 1
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: default-http-backend
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: default-http-backend
        app.kubernetes.io/part-of: ingress-nginx
    spec:
      terminationGracePeriodSeconds: 60
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - name: default-http-backend
        # Any image is permissible as long as:
        # 1. It serves a 404 page at /
        # 2. It serves 200 on a /healthz endpoint
        image: fungitive/defaultbackend-amd64:latest
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
spec:
  selector:
    app.kubernetes.io/name: default-http-backend
    app.kubernetes.io/part-of: ingress-nginx
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
  - name: https
    port: 443
    protocol: TCP
    targetPort: 8080
EOF

# Deploy the workload

kubectl apply -f requests/ingress-defaultbackend.yaml

kubectl get pods,svc -n ingress-nginx -o wide

Deploy the Ingress controller

ssh -p 60028 root@172.23.107.18

cd /usr/local/kubernetes/

# Edit the workload manifest

cat > requests/ingress-controller.yaml <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
- apiGroups:
  - ''
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  verbs:
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ''
  resources:
  - services
  verbs:
  - get
  - list
  - update
  - watch
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - extensions
  - networking.k8s.io # k8s 1.14+
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  - networking.k8s.io # k8s 1.14+
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io # k8s 1.14+
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
rules:
- apiGroups:
  - ''
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ''
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - services
  verbs:
  - get
  - list
  - update
  - watch
- apiGroups:
  - extensions
  - networking.k8s.io # k8s 1.14+
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - extensions
  - networking.k8s.io # k8s 1.14+
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io # k8s 1.14+
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - configmaps
  resourceNames:
  - ingress-controller-leader-nginx
  verbs:
  - get
  - update
- apiGroups:
  - ''
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - ''
  resources:
  - endpoints
  verbs:
  - create
  - get
  - update
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-binding
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
- kind: ServiceAccount
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: ingress-nginx-configmap
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: ingress-nginx-tcp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: ingress-nginx-udp-services
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # wait up to five minutes for the drain of connections
      terminationGracePeriodSeconds: 300
      serviceAccountName: nginx-ingress-serviceaccount
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      - effect: "NoSchedule"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - name: nginx-ingress-controller
        image: lizhenliang/nginx-ingress-controller:0.30.0
        args:
        - /nginx-ingress-controller
        - --default-backend-service=\$(POD_NAMESPACE)/default-http-backend
        - --configmap=\$(POD_NAMESPACE)/ingress-nginx-configmap
        - --tcp-services-configmap=\$(POD_NAMESPACE)/ingress-nginx-tcp-services
        - --udp-services-configmap=\$(POD_NAMESPACE)/ingress-nginx-udp-services
        - --publish-service=\$(POD_NAMESPACE)/nginx-ingress-controller
        - --annotations-prefix=nginx.ingress.kubernetes.io
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
          # www-data -> 101
          runAsUser: 101
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 10
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 10
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
  type: NodePort
  ports:
  - name: http
    nodePort: 30080
    port: 80
    protocol: TCP
    targetPort: 80
  - name: https
    nodePort: 30443
    port: 443
    protocol: TCP
    targetPort: 443
---
apiVersion: v1
kind: LimitRange
metadata:
  name: ingress-nginx
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  limits:
  - min:
      memory: 90Mi
      cpu: 100m
    type: Container
EOF

# Deploy the workloads

kubectl apply -f requests/ingress-controller.yaml

kubectl get all -n ingress-nginx -o wide
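A quick end-to-end check is to send a request that matches no Ingress rule to the controller's NodePort (30080). If the controller and the Default Backend are wired up, the response should be the backend's 404. Below is a minimal sketch assuming curl is available. The helper names are hypothetical, and the node IP in the usage line is just one of this guide's nodes.

```bash
# Print the HTTP status code for a URL ("000" if the connection fails).
http_status() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# Succeed if the URL returns the expected status code.
expect_status() {
  local got
  got="$(http_status "$1")"
  [ "$got" = "$2" ] || { echo "$1: expected $2, got ${got:-none}" >&2; return 1; }
}

# Usage: a request with no matching Ingress rule should reach default-http-backend (404)
# expect_status http://172.23.101.47:30080/ 404
```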

Deploy Rancher

Log in to the host

# Log in to 172.23.107.18

ssh -p 60028 root@172.23.107.18

cd /usr/local/kubernetes

Deploy the Rancher service

Start the container

mkdir -p /opt/data/runcher/data

# Pull the image

docker pull rancher/rancher:stable

# Start the container

# Option 1: use an automatically generated self-signed certificate

docker run --name=runcher -d --restart=unless-stopped --privileged -p 10080:80 -p 10443:443 -v /opt/data/runcher/data:/var/lib/rancher -v /opt/data/runcher/auditlog:/var/log/auditlog -e AUDIT_LEVEL=3 rancher/rancher:stable

# Option 2: use your own self-signed certificate; the CA certificate that signed it must also be mounted

docker run --name=runcher -d --restart=unless-stopped --privileged -p 10080:80 -p 10443:443 -v /opt/data/runcher/data:/var/lib/rancher -v /opt/data/runcher/auditlog:/var/log/auditlog -e AUDIT_LEVEL=3 -v /usr/local/kubernetes/ca/runcher.pem:/etc/rancher/ssl/cert.pem -v /usr/local/kubernetes/ca/runcher-key.pem:/etc/rancher/ssl/key.pem -v /usr/local/kubernetes/ca/ca.pem:/etc/rancher/ssl/cacerts.pem rancher/rancher:stable

# Option 3: use a certificate issued by a trusted certificate authority

docker run --name=runcher -d --restart=unless-stopped --privileged -p 10080:80 -p 10443:443 -v /opt/data/runcher/data:/var/lib/rancher -v /opt/data/runcher/auditlog:/var/log/auditlog -e AUDIT_LEVEL=3 -v /usr/local/kubernetes/ca/runcher.pem:/etc/rancher/ssl/cert.pem -v /usr/local/kubernetes/ca/runcher-key.pem:/etc/rancher/ssl/key.pem rancher/rancher:stable --no-cacerts

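Add the cluster

Access URL: https://172.23.107.18:10443/

Click Add Cluster and import the existing Kubernetes cluster

The import page asks you to deploy the Rancher agent Pod in Kubernetes; here the third command shown on that page is run directly

That command simply applies a YAML manifest of Kubernetes objects, so you can also save the manifest, edit it as appropriate, and then run kubectl apply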

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: proxy-clusterrole-kubeapiserver
rules:
- apiGroups: [""]
  resources:
  - nodes/metrics
  - nodes/proxy
  - nodes/stats
  - nodes/log
  - nodes/spec
  verbs: ["get", "list", "watch", "create"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: proxy-role-binding-kubernetes-master
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: proxy-clusterrole-kubeapiserver
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: kube-apiserver
---
apiVersion: v1
kind: Namespace
metadata:
  name: cattle-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cattle
  namespace: cattle-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cattle-admin-binding
  namespace: cattle-system
  labels:
    cattle.io/creator: "norman"
subjects:
- kind: ServiceAccount
  name: cattle
  namespace: cattle-system
roleRef:
  kind: ClusterRole
  name: cattle-admin
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: v1
kind: Secret
metadata:
  name: cattle-credentials-471f14c
  namespace: cattle-system
type: Opaque
data:
  url: "aHR0cHM6Ly8xNzIuMjMuMTA3LjE4OjEwNDQz" # base64 of the Rancher HTTPS address; use your actual value
  token: "Z3NtNHQ4dmt2ZnBxbDc3aG16YmJzdmJxNGp3ZHdzdHh3NHdnY2M3ODd4emI5Znhjd3RscmI3" # use your actual value
  namespace: ""
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: cattle-admin
  labels:
    cattle.io/creator: "norman"
rules:
- apiGroups:
  - '*'
  resources:
  - '*'
  verbs:
  - '*'
- nonResourceURLs:
  - '*'
  verbs:
  - '*'
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cattle-cluster-agent
  namespace: cattle-system
spec:
  selector:
    matchLabels:
      app: cattle-cluster-agent
  template:
    metadata:
      labels:
        app: cattle-cluster-agent
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: beta.kubernetes.io/os
                operator: NotIn
                values:
                - windows
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            preference:
              matchExpressions:
              - key: node-role.kubernetes.io/controlplane
                operator: In
                values:
                - "true"
          - weight: 1
            preference:
              matchExpressions:
              - key: node-role.kubernetes.io/etcd
                operator: In
                values:
                - "true"
      serviceAccountName: cattle
      tolerations:
      - operator: Exists
      - key: "master" # the following tolerates taints with key=master and value=master
        operator: "Equal"
        value: "master"
        effect: "NoSchedule"
      containers:
      - name: cluster-register
        imagePullPolicy: IfNotPresent
        env:
        - name: CATTLE_FEATURES
          value: ""
        - name: CATTLE_IS_RKE
          value: "false"
        - name: CATTLE_SERVER
          value: "https://172.23.107.18:10443" # use your actual value
        - name: CATTLE_CA_CHECKSUM
          value: "3487b721e945d451c73542df6bd5c9fe143400b9688cf94340d1a783b5fcda6b" # use your actual value
        - name: CATTLE_CLUSTER
          value: "true"
        - name: CATTLE_K8S_MANAGED
          value: "true"
        image: rancher/rancher-agent:v2.5.3
        volumeMounts:
        - name: cattle-credentials
          mountPath: /cattle-credentials
          readOnly: true
        readinessProbe:
          initialDelaySeconds: 2
          periodSeconds: 5
          httpGet:
            path: /health
            port: 8080
      volumes:
      - name: cattle-credentials
        secret:
          secretName: cattle-credentials-471f14c
          defaultMode: 320
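If you edit the manifest by hand, keep two things in mind. The values under the Secret's `data:` must be base64-encoded, and the url above decodes to the Rancher address https://172.23.107.18:10443. `CATTLE_CA_CHECKSUM` is assumed here to be the SHA-256 of the exact CA certificate bytes Rancher serves, so a copy with different line endings gives a different hash. Below is a minimal sketch of helpers for checking these values. All function names are hypothetical.

```bash
# Base64-encode a value for a Secret's data: section (no trailing newline is encoded).
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Decode a value copied from the manifest, e.g. to confirm which Rancher URL it points at.
b64d() {
  printf '%s' "$1" | base64 -d
}

# SHA-256 of a CA certificate file (assumed form of CATTLE_CA_CHECKSUM).
ca_checksum() {
  sha256sum "$1" | awk '{ print $1 }'
}

# Usage:
# b64d aHR0cHM6Ly8xNzIuMjMuMTA3LjE4OjEwNDQz   # prints the Rancher URL
# b64 https://172.23.107.18:10443
```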

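Note: adding the cluster requires pulling many images, so it can take a long time; please be patient

prometheus, grafana and similar tools can be installed directly from the Rancher UI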

浙公网安备 33010602011771号
