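My thinking starts from practical needs. As the microservice idea spread, infrastructure moved from single machines to containers and then to Kubernetes. Having enjoyed the benefits of a cluster, what is still missing? Why deploy Istio on top of Kubernetes? In my view, Istio provides more secure and finer-grained traffic governance, more convenient internal and external access, better multi-cluster integration, and more.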

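As I understand it, there are five levels of use:

1. The most basic level is using VirtualService/DestinationRule/Gateway in the cluster for basic traffic control, and ServiceEntry/WorkloadEntry to manage external services: https://www.cnblogs.com/it-worker365/p/17030958.html

2. For companies with multiple clusters, using Istio for multi-cluster management: https://istio.io/v1.9/zh/docs/setup/install/multicluster/

3. For scenarios the transparent proxy cannot cover, such as cross-cluster access to Redis in cluster mode or configuration changes that must take effect dynamically, the transparent proxy has to be extended, either through EnvoyFilter configuration or through custom development.

4. Proxyless: parse and execute the xDS protocol in your own code, to improve performance and gain flexibility across protocols and scenarios.

5. Envoy performance tuning and custom feature development.

Of these, 1 and 2 already have mature solutions, 3 is what I want to discuss today, and 4 is left for a later article.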

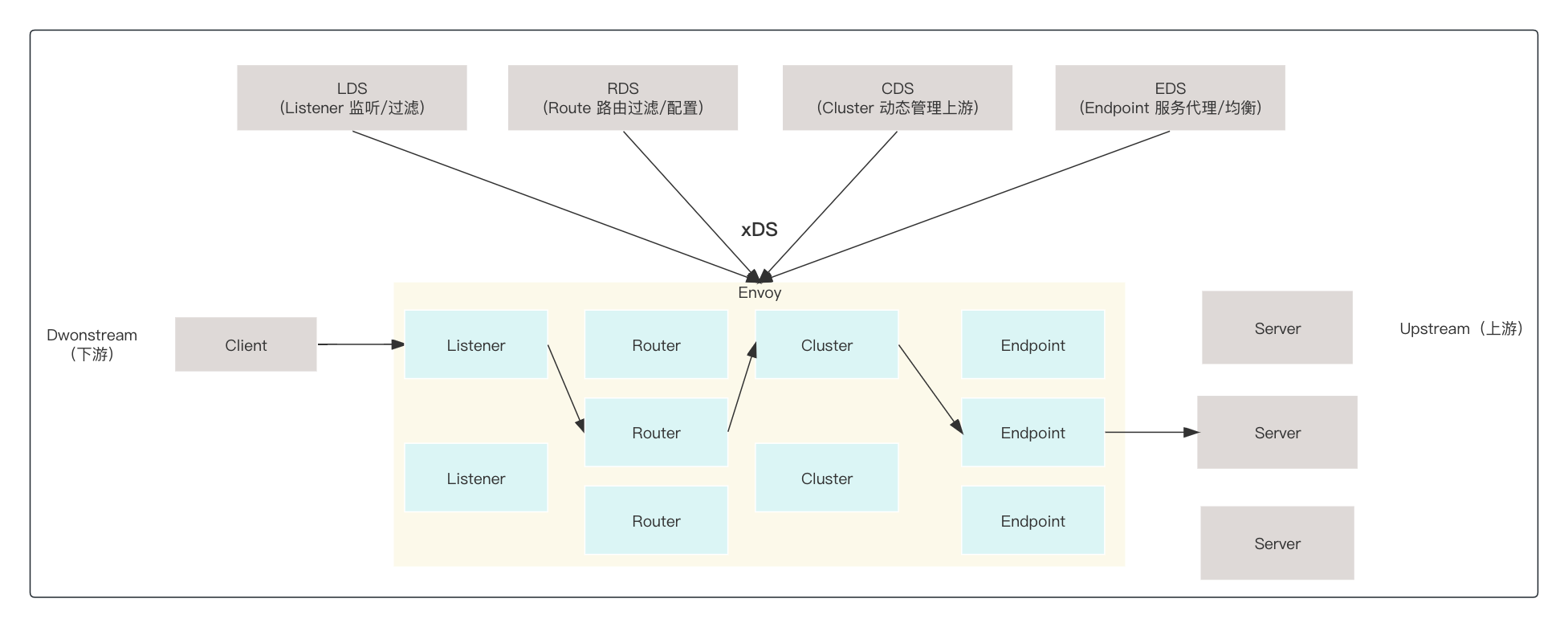

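Use the diagram to understand a few concepts. In Istio, whoever connects to Envoy and sends it requests is the downstream; the service that Envoy connects out to and forwards requests to is the upstream.

A request passes through listener (the port Envoy listens on, waiting for incoming connections) -> route (routing) -> cluster (load-balances across its endpoints) -> endpoint (the backend service instance that is finally selected).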
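To make the listener -> route -> cluster -> endpoint chain concrete, here is a minimal sketch in Go. It is not Envoy code: the `Listener`, `Route`, `Cluster` and `Endpoint` types, the prefix matching and the round-robin selection are all simplified assumptions of mine, chosen only to show how one request is resolved step by step.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// Endpoint is one backend address (hypothetical simplified type).
type Endpoint struct{ Addr string }

// Cluster groups endpoints and picks one in round-robin order.
type Cluster struct {
	Name      string
	Endpoints []Endpoint
	next      int
}

// Pick returns the next endpoint of the cluster.
func (c *Cluster) Pick() (Endpoint, error) {
	if len(c.Endpoints) == 0 {
		return Endpoint{}, errors.New("cluster " + c.Name + " has no endpoints")
	}
	ep := c.Endpoints[c.next%len(c.Endpoints)]
	c.next++
	return ep, nil
}

// Route maps a path prefix to a cluster name.
type Route struct {
	Prefix  string
	Cluster string
}

// Listener owns a port, an ordered route table and the known clusters.
type Listener struct {
	Port     int
	Routes   []Route
	Clusters map[string]*Cluster
}

// Handle walks listener -> route -> cluster -> endpoint for one request path
// and returns the address of the selected endpoint.
func (l *Listener) Handle(path string) (string, error) {
	for _, r := range l.Routes {
		if !strings.HasPrefix(path, r.Prefix) {
			continue
		}
		c, ok := l.Clusters[r.Cluster]
		if !ok {
			return "", errors.New("unknown cluster " + r.Cluster)
		}
		ep, err := c.Pick()
		if err != nil {
			return "", err
		}
		return ep.Addr, nil
	}
	return "", errors.New("no route for " + path)
}

// newDemoListener builds a listener with one route and two endpoints.
func newDemoListener() *Listener {
	return &Listener{
		Port:   15001,
		Routes: []Route{{Prefix: "/reviews", Cluster: "reviews"}},
		Clusters: map[string]*Cluster{
			"reviews": {Name: "reviews", Endpoints: []Endpoint{{"10.0.0.1:9080"}, {"10.0.0.2:9080"}}},
		},
	}
}

func main() {
	l := newDemoListener()
	for _, p := range []string{"/reviews/1", "/reviews/2", "/ratings/1"} {
		addr, err := l.Handle(p)
		fmt.Println(p, addr, err)
	}
}
```

The first two paths alternate between the two endpoints, and the third fails because no route matches it.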

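How do these pieces interact? Reading the Istio code alongside the deployed system, you can see that istiod starts pilot-discovery (the control-plane logic: it watches Kubernetes resources and CRDs, and pushes xDS configuration to Envoy).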

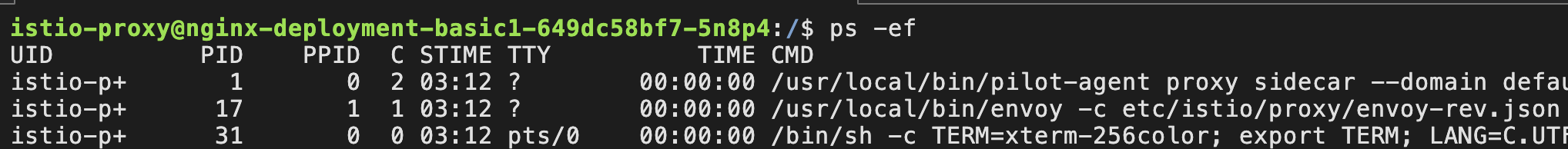

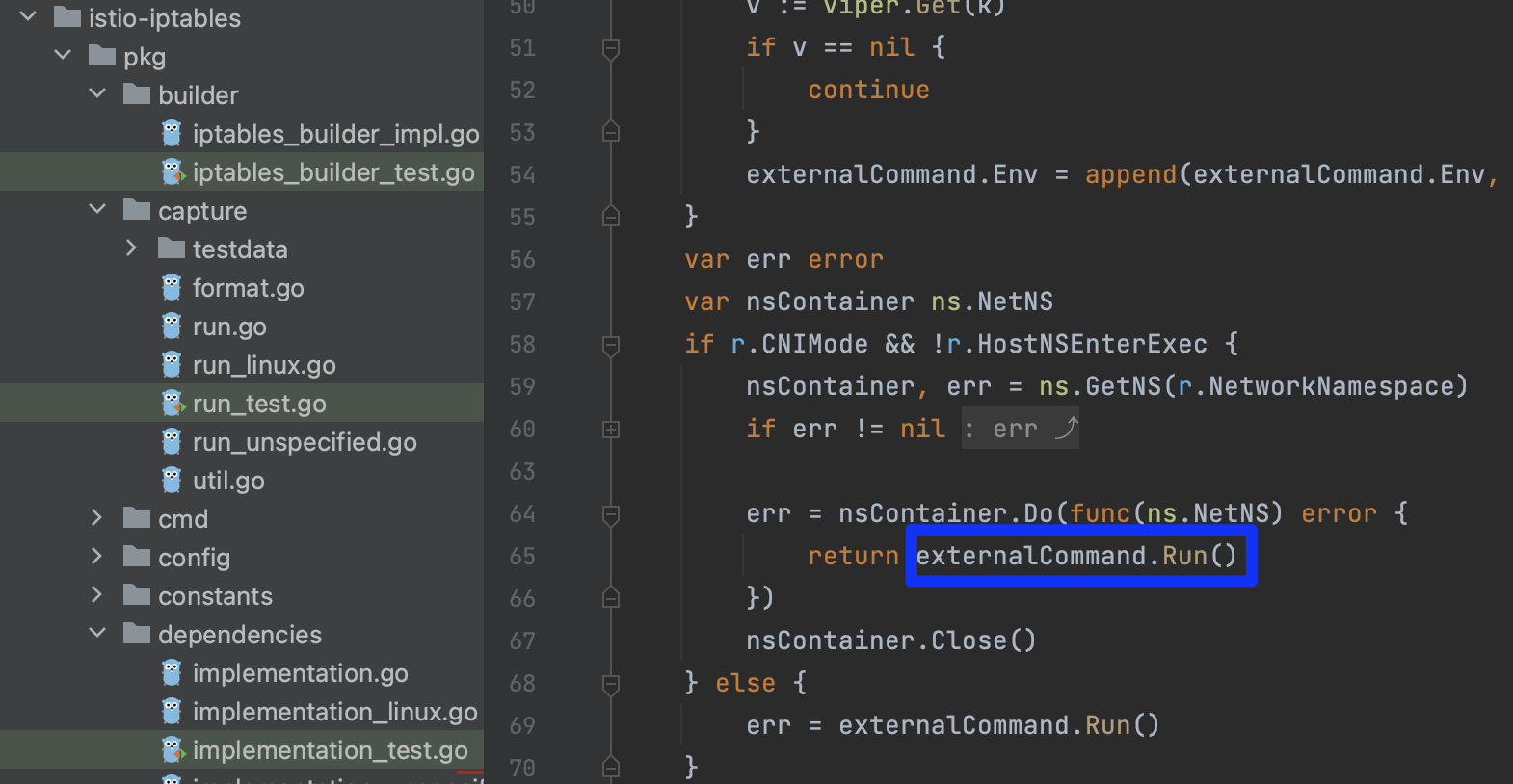

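The service sidecar, on the other hand, runs pilot-agent (which generates the Envoy configuration, manages the Envoy lifecycle, watches and manages certificate updates, and so on) plus the envoy process (which takes over the traffic).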

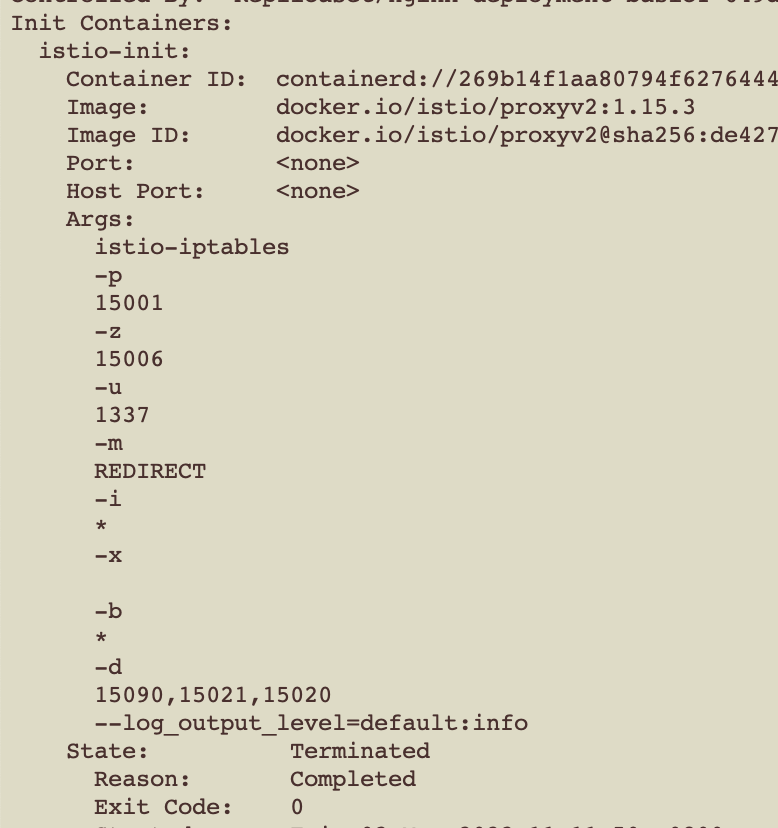

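Looking at the containers of a business service, there are two groups: one in the Terminated state, and the running ones that hold the business application and the sidecar.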

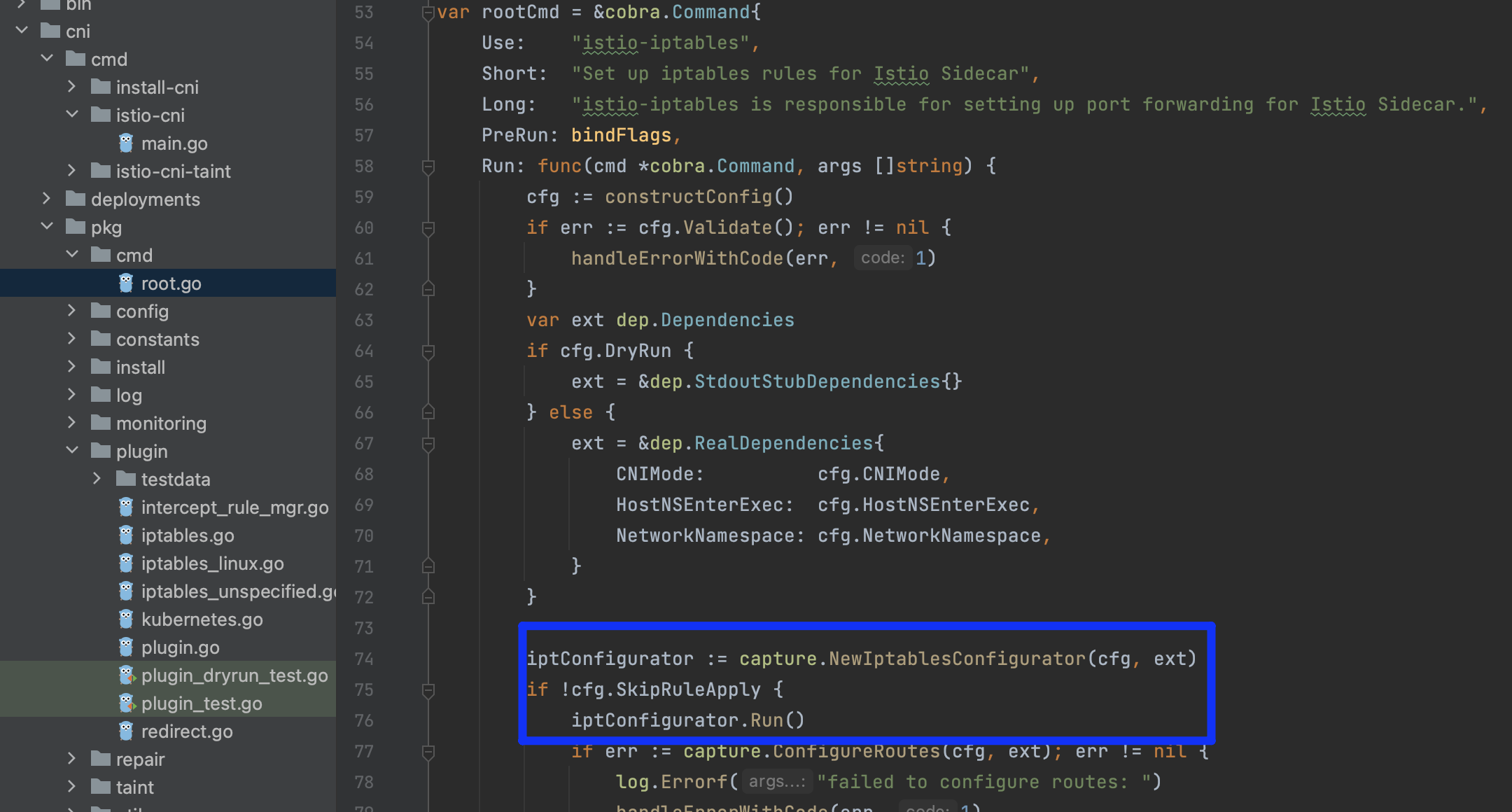

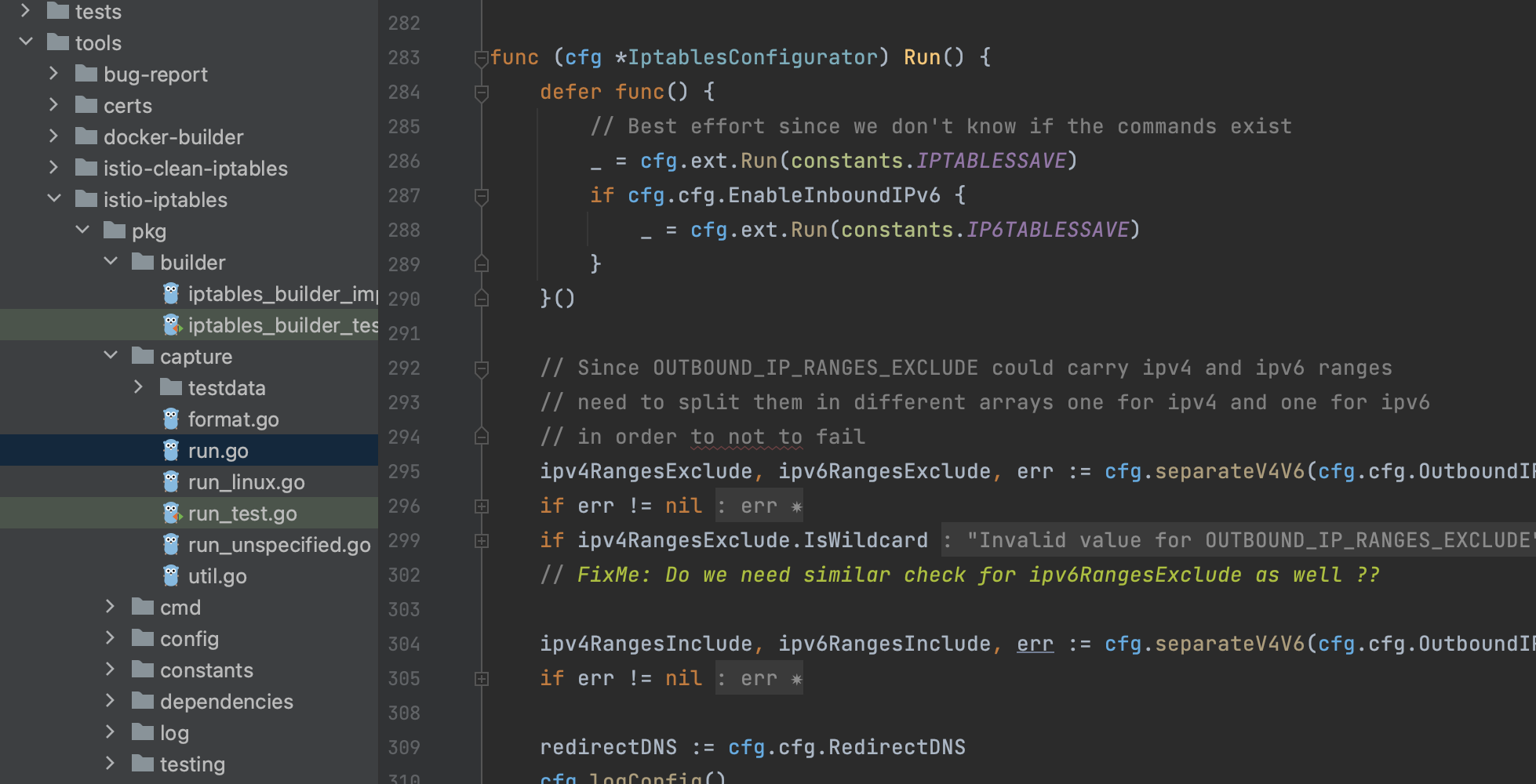

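istio-init initializes the iptables rules so that traffic interception can be enabled; the source is in the cni part of the repository.

It also uses the iptables helpers in the tools package. If you are not familiar with the rules, see https://www.cnblogs.com/it-worker365/p/17031120.html

The command that is finally executed: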

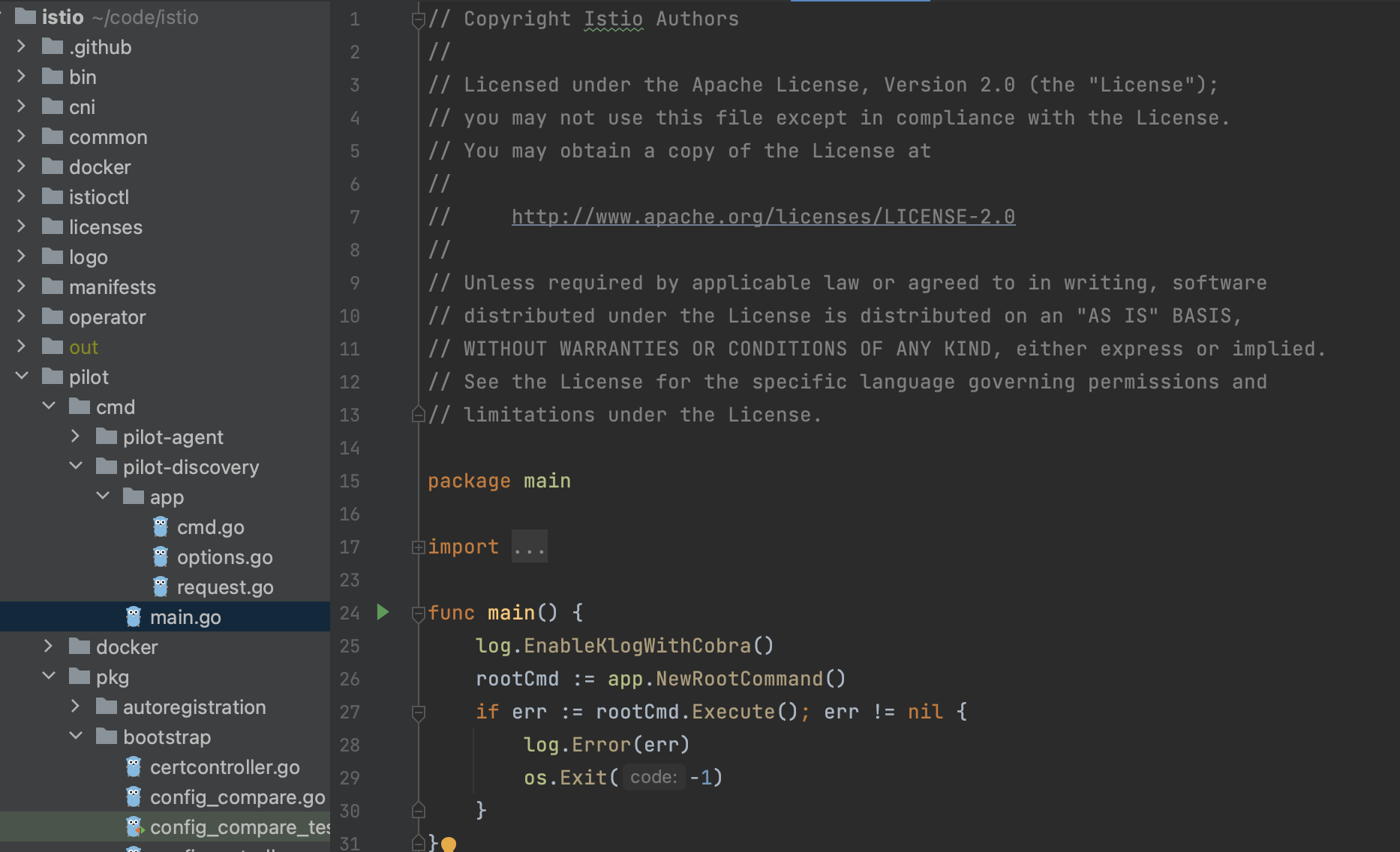
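As a rough illustration of the kind of nat-table rules this interception relies on, the sketch below builds a small rule list in Go. It is a simplified assumption of mine, not the output of the Istio tooling: the function `redirectRules` is hypothetical, the real rule set contains many more exclusions, and the values passed in `main` (15001 for outbound, 15006 for inbound, UID 1337 for the proxy user) are the commonly seen defaults rather than anything taken from the screenshot above.

```go
package main

import (
	"fmt"
	"strconv"
)

// redirectRules returns an illustrative subset of iptables nat rules that
// redirect inbound and outbound TCP traffic to the sidecar ports while
// letting traffic sent by the proxy's own user pass through untouched.
func redirectRules(outboundPort, inboundPort, proxyUID int) []string {
	out := strconv.Itoa(outboundPort)
	in := strconv.Itoa(inboundPort)
	uid := strconv.Itoa(proxyUID)
	return []string{
		// Chains that perform the actual port redirection.
		"-t nat -N ISTIO_REDIRECT",
		"-t nat -A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports " + out,
		"-t nat -N ISTIO_IN_REDIRECT",
		"-t nat -A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports " + in,
		// Inbound: everything arriving at the pod goes to the inbound port.
		"-t nat -N ISTIO_INBOUND",
		"-t nat -A PREROUTING -p tcp -j ISTIO_INBOUND",
		"-t nat -A ISTIO_INBOUND -p tcp -j ISTIO_IN_REDIRECT",
		// Outbound: skip the proxy's own traffic, redirect the rest.
		"-t nat -N ISTIO_OUTPUT",
		"-t nat -A OUTPUT -p tcp -j ISTIO_OUTPUT",
		"-t nat -A ISTIO_OUTPUT -m owner --uid-owner " + uid + " -j RETURN",
		"-t nat -A ISTIO_OUTPUT -j ISTIO_REDIRECT",
	}
}

func main() {
	for _, r := range redirectRules(15001, 15006, 1337) {
		fmt.Println("iptables " + r)
	}
}
```

The UID exclusion is the important detail: without it, traffic leaving Envoy would be redirected back into Envoy in a loop.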

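istiod startup

Startup begins in pilot-discovery's main.go, which calls into cmd.go.

cmd.go initializes and starts the bootstrap server.

Keeping only the key parts: the initialization in Server.go wraps security and authentication, monitoring, and the initialization and startup of the XDSServer.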

```go
func NewServer(args *PilotArgs, initFuncs ...func(*Server)) (*Server, error) {
	// ......
	s := &Server{
		clusterID:               getClusterID(args),
		environment:             e,
		fileWatcher:             filewatcher.NewWatcher(),
		httpMux:                 http.NewServeMux(),
		monitoringMux:           http.NewServeMux(),
		readinessProbes:         make(map[string]readinessProbe),
		workloadTrustBundle:     tb.NewTrustBundle(nil),
		server:                  server.New(),
		shutdownDuration:        args.ShutdownDuration,
		internalStop:            make(chan struct{}),
		istiodCertBundleWatcher: keycertbundle.NewWatcher(),
	}

	// ......

	s.XDSServer = xds.NewDiscoveryServer(e, args.PodName, args.RegistryOptions.KubeOptions.ClusterAliases)
	s.XDSServer.InitGenerators(e, args.Namespace, s.internalDebugMux)

	// Secure gRPC Server must be initialized after CA is created as may use a Citadel generated cert.
	if err := s.initSecureDiscoveryService(args); err != nil {
		return nil, fmt.Errorf("error initializing secure gRPC Listener: %v", err)
	}

	// This should be called only after controllers are initialized.
	s.initRegistryEventHandlers()
	s.initDiscoveryService()
	s.initSDSServer()

	// ......

	return s, nil
}
```

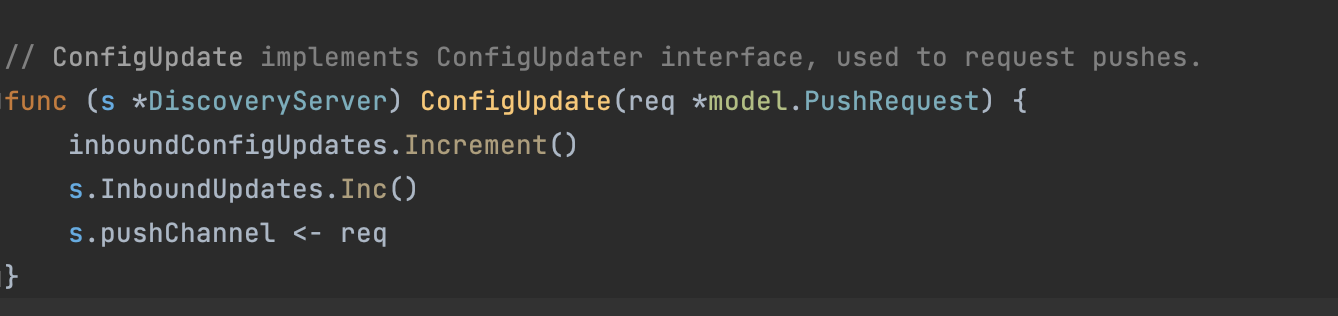

Handlers are registered here; in the end they all call XDSServer.ConfigUpdate(pushReq).

```go
// initRegistryEventHandlers sets up event handlers for config and service updates
func (s *Server) initRegistryEventHandlers() {
	log.Info("initializing registry event handlers")
	// Flush cached discovery responses whenever services configuration change.
	serviceHandler := func(svc *model.Service, _ model.Event) {
		pushReq := &model.PushRequest{
			Full:           true,
			ConfigsUpdated: sets.New(model.ConfigKey{Kind: kind.ServiceEntry, Name: string(svc.Hostname), Namespace: svc.Attributes.Namespace}),
			Reason:         []model.TriggerReason{model.ServiceUpdate},
		}
		s.XDSServer.ConfigUpdate(pushReq)
	}
	s.ServiceController().AppendServiceHandler(serviceHandler)

	if s.configController != nil {
		configHandler := func(prev config.Config, curr config.Config, event model.Event) {
			defer func() {
				if event != model.EventDelete {
					s.statusReporter.AddInProgressResource(curr)
				} else {
					s.statusReporter.DeleteInProgressResource(curr)
				}
			}()
			log.Debugf("Handle event %s for configuration %s", event, curr.Key())
			// For update events, trigger push only if spec has changed.
			if event == model.EventUpdate && !needsPush(prev, curr) {
				log.Debugf("skipping push for %s as spec has not changed", prev.Key())
				return
			}
			pushReq := &model.PushRequest{
				Full:           true,
				ConfigsUpdated: sets.New(model.ConfigKey{Kind: kind.FromGvk(curr.GroupVersionKind), Name: curr.Name, Namespace: curr.Namespace}),
				Reason:         []model.TriggerReason{model.ConfigUpdate},
			}
			s.XDSServer.ConfigUpdate(pushReq)
		}
		schemas := collections.Pilot.All()
		if features.EnableGatewayAPI {
			schemas = collections.PilotGatewayAPI.All()
		}
		for _, schema := range schemas {
			// This resource type was handled in external/servicediscovery.go, no need to rehandle here.
			if schema.Resource().GroupVersionKind() == collections.IstioNetworkingV1Alpha3Serviceentries.
				Resource().GroupVersionKind() {
				continue
			}
			if schema.Resource().GroupVersionKind() == collections.IstioNetworkingV1Alpha3Workloadentries.
				Resource().GroupVersionKind() {
				continue
			}
			if schema.Resource().GroupVersionKind() == collections.IstioNetworkingV1Alpha3Workloadgroups.
				Resource().GroupVersionKind() {
				continue
			}
			s.configController.RegisterEventHandler(schema.Resource().GroupVersionKind(), configHandler)
		}
		if s.environment.GatewayAPIController != nil {
			s.environment.GatewayAPIController.RegisterEventHandler(gvk.Namespace, func(config.Config, config.Config, model.Event) {
				s.XDSServer.ConfigUpdate(&model.PushRequest{
					Full:   true,
					Reason: []model.TriggerReason{model.NamespaceUpdate},
				})
			})
		}
	}
}
```

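ConfigUpdate records metrics and puts the request into pushChannel.

DiscoveryServer is Pilot's gRPC implementation of Envoy's xDS protocol. Excerpting the more important fields of the type: the model it needs, the config generator, the debounce channel, the xDS push buffer queue, and the set of connections to the Envoy data plane.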

```go
// DiscoveryServer is Pilot's gRPC implementation for Envoy's xds APIs
type DiscoveryServer struct {
	// Env is the model environment.
	Env *model.Environment

	// ConfigGenerator is responsible for generating data plane configuration using Istio networking
	// APIs and service registry info
	ConfigGenerator core.ConfigGenerator

	// pushChannel is the buffer used for debouncing.
	// after debouncing the pushRequest will be sent to pushQueue
	pushChannel chan *model.PushRequest

	// pushQueue is the buffer that used after debounce and before the real xds push.
	pushQueue *PushQueue

	// adsClients reflect active gRPC channels, for both ADS and EDS.
	adsClients map[string]*Connection

	// ...
}
```

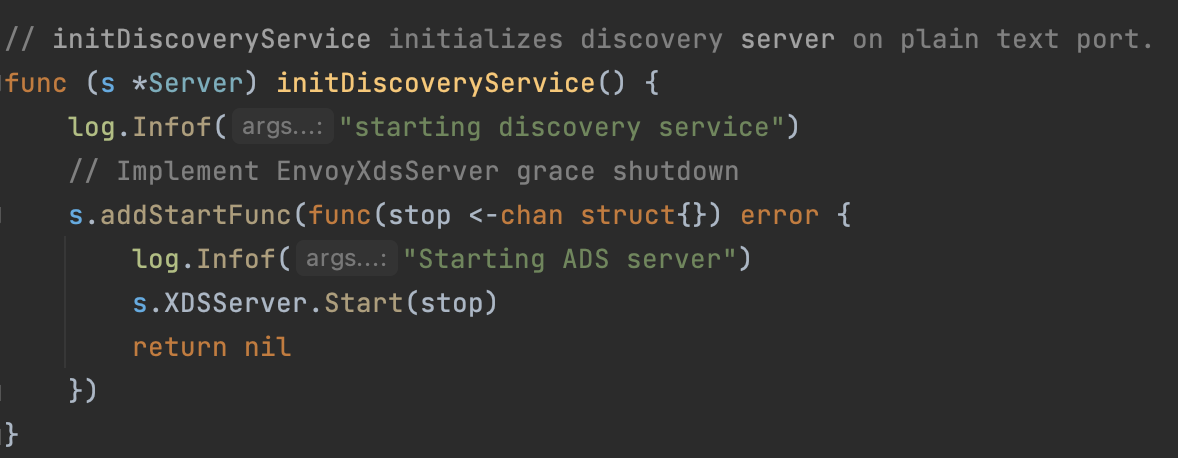
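The idea behind the pushChannel debounce step can be sketched in a few lines of Go. This is my own minimal version under stated assumptions, not the Istio implementation: `PushRequest` and `Merge` here are stripped-down stand-ins, `debounce` is a hypothetical function, and it only waits for a quiet period, leaving out any upper bound on the total delay that a production version would want.

```go
package main

import (
	"fmt"
	"time"
)

// PushRequest is a simplified stand-in for the real push request type.
type PushRequest struct {
	Full    bool
	Configs map[string]struct{}
}

// Merge folds other into r so that one push covers both requests.
// It is safe to call on a nil receiver.
func (r *PushRequest) Merge(other *PushRequest) *PushRequest {
	if r == nil {
		return other
	}
	merged := &PushRequest{Full: r.Full || other.Full, Configs: map[string]struct{}{}}
	for k := range r.Configs {
		merged.Configs[k] = struct{}{}
	}
	for k := range other.Configs {
		merged.Configs[k] = struct{}{}
	}
	return merged
}

// debounce reads requests from in and emits one merged request on out
// once no new request has arrived for the quiet period.
func debounce(in <-chan *PushRequest, out chan<- *PushRequest, quiet time.Duration) {
	var pending *PushRequest
	var timer <-chan time.Time // nil until something is pending
	for {
		select {
		case req, ok := <-in:
			if !ok {
				if pending != nil {
					out <- pending
				}
				close(out)
				return
			}
			pending = pending.Merge(req)
			timer = time.After(quiet) // restart the quiet window
		case <-timer:
			out <- pending
			pending, timer = nil, nil
		}
	}
}

// runDemo sends three updates in quick succession and returns how many
// configs ended up in the single merged push.
func runDemo() int {
	in := make(chan *PushRequest)
	out := make(chan *PushRequest)
	go debounce(in, out, 100*time.Millisecond)
	for _, name := range []string{"VirtualService/a", "DestinationRule/b", "VirtualService/c"} {
		in <- &PushRequest{Configs: map[string]struct{}{name: {}}}
	}
	merged := <-out
	close(in)
	return len(merged.Configs)
}

func main() {
	fmt.Println(runDemo(), "configs merged into one push")
}
```

The point of the pattern is that a burst of config events results in one push to the proxies instead of one push per event.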

Looking back at Server.go, initDiscoveryService() is what actually starts the XDSServer.

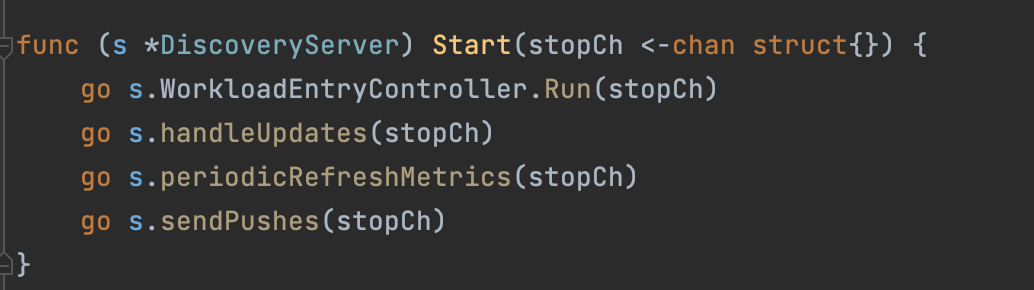

It starts goroutines that handle the sending.

The loop reads from the queue and hands each push to the client through client.pushChannel.

```go
func doSendPushes(stopCh <-chan struct{}, semaphore chan struct{}, queue *PushQueue) {
	for {
		select {
		case <-stopCh:
			return
		default:
			// We can send to it until it is full, then it will block until a pushes finishes and reads from it.
			// This limits the number of pushes that can happen concurrently
			semaphore <- struct{}{}

			// Get the next proxy to push. This will block if there are no updates required.
			client, push, shuttingdown := queue.Dequeue()
			if shuttingdown {
				return
			}
			recordPushTriggers(push.Reason...)
			// Signals that a push is done by reading from the semaphore, allowing another send on it.
			doneFunc := func() {
				queue.MarkDone(client)
				<-semaphore
			}

			proxiesQueueTime.Record(time.Since(push.Start).Seconds())
			var closed <-chan struct{}
			if client.stream != nil {
				closed = client.stream.Context().Done()
			} else {
				closed = client.deltaStream.Context().Done()
			}
			go func() {
				pushEv := &Event{
					pushRequest: push,
					done:        doneFunc,
				}

				select {
				case client.pushChannel <- pushEv:
					return
				case <-closed: // grpc stream was closed
					doneFunc()
					log.Infof("Client closed connection %v", client.conID)
				}
			}()
		}
	}
}
```
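The semaphore in doSendPushes is a buffered channel used as a counting semaphore. The sketch below isolates that pattern so it can be run on its own; `pushAll` and its parameters are hypothetical names of mine, and the peak counter exists only to make the limit observable.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// pushAll runs one push per client but never more than limit at a time,
// using a buffered channel as a counting semaphore.
// It returns the highest number of pushes observed in flight.
func pushAll(clients []string, limit int, push func(string)) int32 {
	semaphore := make(chan struct{}, limit)
	var wg sync.WaitGroup
	var inFlight, peak int32
	for _, c := range clients {
		semaphore <- struct{}{} // blocks while limit pushes are in flight
		wg.Add(1)
		go func(client string) {
			defer wg.Done()
			defer func() { <-semaphore }() // release the slot when done
			n := atomic.AddInt32(&inFlight, 1)
			// Record the peak with a compare-and-swap loop.
			for {
				p := atomic.LoadInt32(&peak)
				if n <= p || atomic.CompareAndSwapInt32(&peak, p, n) {
					break
				}
			}
			push(client)
			atomic.AddInt32(&inFlight, -1)
		}(c)
	}
	wg.Wait()
	return atomic.LoadInt32(&peak)
}

func main() {
	clients := []string{"sidecar-a", "sidecar-b", "sidecar-c", "sidecar-d", "sidecar-e"}
	peak := pushAll(clients, 2, func(string) { time.Sleep(10 * time.Millisecond) })
	fmt.Println("peak concurrent pushes:", peak)
}
```

Because a slot is acquired before the goroutine starts and released only after the push returns, the number of pushes in flight cannot exceed the channel capacity.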

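client is defined in ads.go and represents the actual xDS client. It holds the xDS gRPC stream and connection information, along with the related operations.

The other path is the gRPC receiving side, which accepts requests through the StreamAggregatedResources method: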

```go
// StreamAggregatedResources implements the ADS interface.
func (s *DiscoveryServer) StreamAggregatedResources(stream DiscoveryStream) error {
	return s.Stream(stream)
}

func (s *DiscoveryServer) Stream(stream DiscoveryStream) error {
	// ...... authentication, connection setup
	con := newConnection(peerAddr, stream)

	// Do not call: defer close(con.pushChannel). The push channel will be garbage collected
	// when the connection is no longer used. Closing the channel can cause subtle race conditions
	// with push. According to the spec: "It's only necessary to close a channel when it is important
	// to tell the receiving goroutines that all data have been sent."

	// Block until either a request is received or a push is triggered.
	// We need 2 go routines because 'read' blocks in Recv().
	go s.receive(con, ids)

	// Wait for the proxy to be fully initialized before we start serving traffic. Because
	// initialization doesn't have dependencies that will block, there is no need to add any timeout
	// here. Prior to this explicit wait, we were implicitly waiting by receive() not sending to
	// reqChannel and the connection not being enqueued for pushes to pushChannel until the
	// initialization is complete.
	<-con.initialized

	for {
		// If there wasn't already a request, poll for requests and pushes. Note: if we have a huge
		// amount of incoming requests, we may still send some pushes, as we do not `continue` above;
		// however, requests will be handled ~2x as much as pushes. This ensures a wave of requests
		// cannot completely starve pushes. However, this scenario is unlikely.
		select {
		case req, ok := <-con.reqChan:
			if ok {
				if err := s.processRequest(req, con); err != nil { // envoy -> istiod
					return err
				}
			} else {
				// Remote side closed connection or error processing the request.
				return <-con.errorChan
			}
		case pushEv := <-con.pushChannel:
			err := s.pushConnection(con, pushEv) // istiod -> envoy
			pushEv.done()
			if err != nil {
				return err
			}
		case <-con.stop:
			return nil
		}
	}
}
```
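The core of Stream is a single goroutine that multiplexes two directions with select: requests coming from the proxy and pushes going to it. Here is a minimal, runnable sketch of that shape. The `connection` type and `serve` function are hypothetical reductions of mine that record what they handled instead of doing real xDS work.

```go
package main

import "fmt"

// connection is a trimmed-down stand-in for the per-proxy connection.
type connection struct {
	reqChan     chan string   // requests read from the proxy
	pushChannel chan string   // pushes triggered by config changes
	stop        chan struct{} // closed to end the stream
}

// serve handles both directions on one goroutine and returns what it saw.
func serve(con *connection) []string {
	var handled []string
	for {
		select {
		case req, ok := <-con.reqChan:
			if !ok {
				return handled // remote side closed the stream
			}
			handled = append(handled, "request:"+req) // proxy -> control plane
		case ev := <-con.pushChannel:
			handled = append(handled, "push:"+ev) // control plane -> proxy
		case <-con.stop:
			return handled
		}
	}
}

// runDemo feeds the loop from a second goroutine. The channels are
// unbuffered, so each send completes only after serve has received it,
// which keeps the order deterministic.
func runDemo() []string {
	con := &connection{
		reqChan:     make(chan string),
		pushChannel: make(chan string),
		stop:        make(chan struct{}),
	}
	go func() {
		con.reqChan <- "cds"
		con.pushChannel <- "config-update"
		con.reqChan <- "eds"
		close(con.stop)
	}()
	return serve(con)
}

func main() {
	fmt.Println(runDemo()) // prints [request:cds push:config-update request:eds]
}
```

Keeping both cases in one select means a connection never processes a request and a push at the same time, so the per-connection state needs no extra locking in this sketch.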

pilot-agent startup

main.go -> cmd.go -> agent.go

```go
// Run is a non-blocking call which returns either an error or a function to await for completion.
func (a *Agent) Run(ctx context.Context) (func(), error) {
	// ......
	a.xdsProxy, err = initXdsProxy(a)
	// ......
	if a.cfg.GRPCBootstrapPath != "" {
		if err := a.generateGRPCBootstrap(); err != nil {
			return nil, fmt.Errorf("failed generating gRPC XDS bootstrap: %v", err)
		}
	}
	if !a.EnvoyDisabled() {
		err = a.initializeEnvoyAgent(ctx)
		if err != nil {
			return nil, fmt.Errorf("failed to initialize envoy agent: %v", err)
		}

		a.wg.Add(1)
		go func() {
			defer a.wg.Done()
			// This is a blocking call for graceful termination.
			a.envoyAgent.Run(ctx)
		}()
	} else if a.WaitForSigterm() {
		// wait for SIGTERM and perform graceful shutdown
		a.wg.Add(1)
		go func() {
			defer a.wg.Done()
			<-ctx.Done()
		}()
	}
	return a.wg.Wait, nil
}
```
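The shape of Run, a non-blocking call that hands back a function to wait on, is worth isolating. Below is a minimal sketch under my own assumptions: the `agent` type is a hypothetical miniature, and the `runProxy` callback stands in for the blocking Envoy run loop.

```go
package main

import (
	"context"
	"fmt"
	"sync"
)

// agent is a hypothetical miniature of the sidecar agent.
type agent struct {
	wg sync.WaitGroup
}

// Run starts the proxy in the background and returns immediately with a
// function that blocks until the proxy goroutine has exited.
func (a *agent) Run(ctx context.Context, runProxy func(context.Context)) func() {
	a.wg.Add(1)
	go func() {
		defer a.wg.Done()
		runProxy(ctx) // blocks until ctx is cancelled
	}()
	return a.wg.Wait
}

// runDemo starts a fake proxy, cancels the context and reports whether the
// proxy had finished shutting down by the time wait() returned.
func runDemo() bool {
	ctx, cancel := context.WithCancel(context.Background())
	stopped := false
	a := &agent{}
	wait := a.Run(ctx, func(ctx context.Context) {
		<-ctx.Done() // stand-in for the blocking proxy run loop
		stopped = true
	})
	cancel() // in a real process this would come from SIGTERM handling
	wait()   // returns only after the proxy goroutine is done
	return stopped
}

func main() {
	fmt.Println("proxy stopped before exit:", runDemo())
}
```

The caller decides when to block: it can finish its own setup first, then call the returned function to wait for graceful termination.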

Recommended reading:

https://cloud.tencent.com/developer/article/2177603

