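From an application point of view, why are there so many Operator use cases? Why do so many middleware projects and vendors ship Operator-based deployments, and what value does an Operator actually bring?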

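Enterprise deployment environments have evolved from physical machines to virtual machines, then to containers, and then to clusters. Kubernetes is now commonplace, and more and more attention goes to what it can do as the platform enterprise applications run on. In my view, running enterprise applications on a cluster falls into three directions: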

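1. Application deployment: use the basic capabilities of containers to deploy applications and enjoy the benefits of containerization and cluster management.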

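2. Operator-managed applications: deploying an application on a cluster often takes more than one object. You may need to:
   - deploy a group of resources together;
   - run several deployment steps in order;
   - depend on several other objects;
   - customize and transform parameters;
   - watch for failures;
   - type commands by hand to recover when something breaks.

   Done manually, this takes many steps. An Operator can define and manage the object's resources, watch its lifecycle and handle its abnormal events for you. That makes things much easier, because it lowers both the barrier to using the component and the difficulty of operating it.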

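3. Extending cluster capabilities: the cluster's built-in capabilities target general scenarios and do not reflect the specific needs of enterprise applications. You therefore extend them or add new ones, for example by writing your own scheduler or building your own custom autoscaling.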

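Since we are talking about Operators, let's first define one. If you are not familiar with how Kubernetes extensions are developed, a web search or other blog posts will fill in the background. In short, Kubernetes offers several extension frameworks. The most basic is client-go. The more convenient kubebuilder (from the Kubernetes SIGs) and operator-sdk (from CoreOS) came later and have gradually converged. You only need to follow their predefined conventions to scaffold a project, and this animated demo makes the process clear at a glance: https://github.com/kubernetes-sigs/kubebuilder/blob/master/docs/gif/kb-demo.v2.0.1.svg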

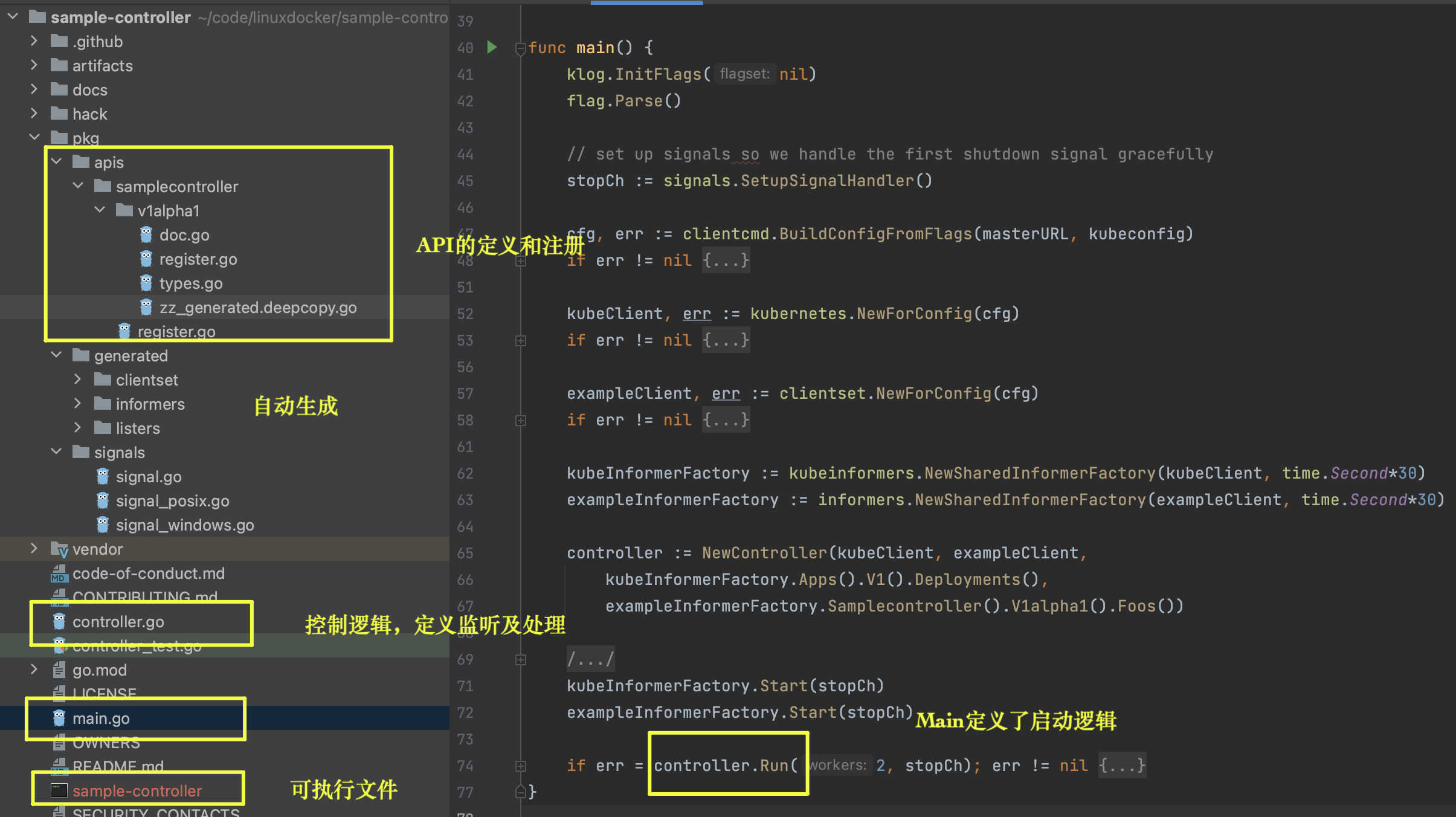

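So what does developing an Operator take? An Operator registers its own logic with the cluster. You therefore define your own CRD (optional), decide what to watch, and write the handling logic for each event you receive. Start with the simplest implementation: https://github.com/kubernetes/sample-controller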

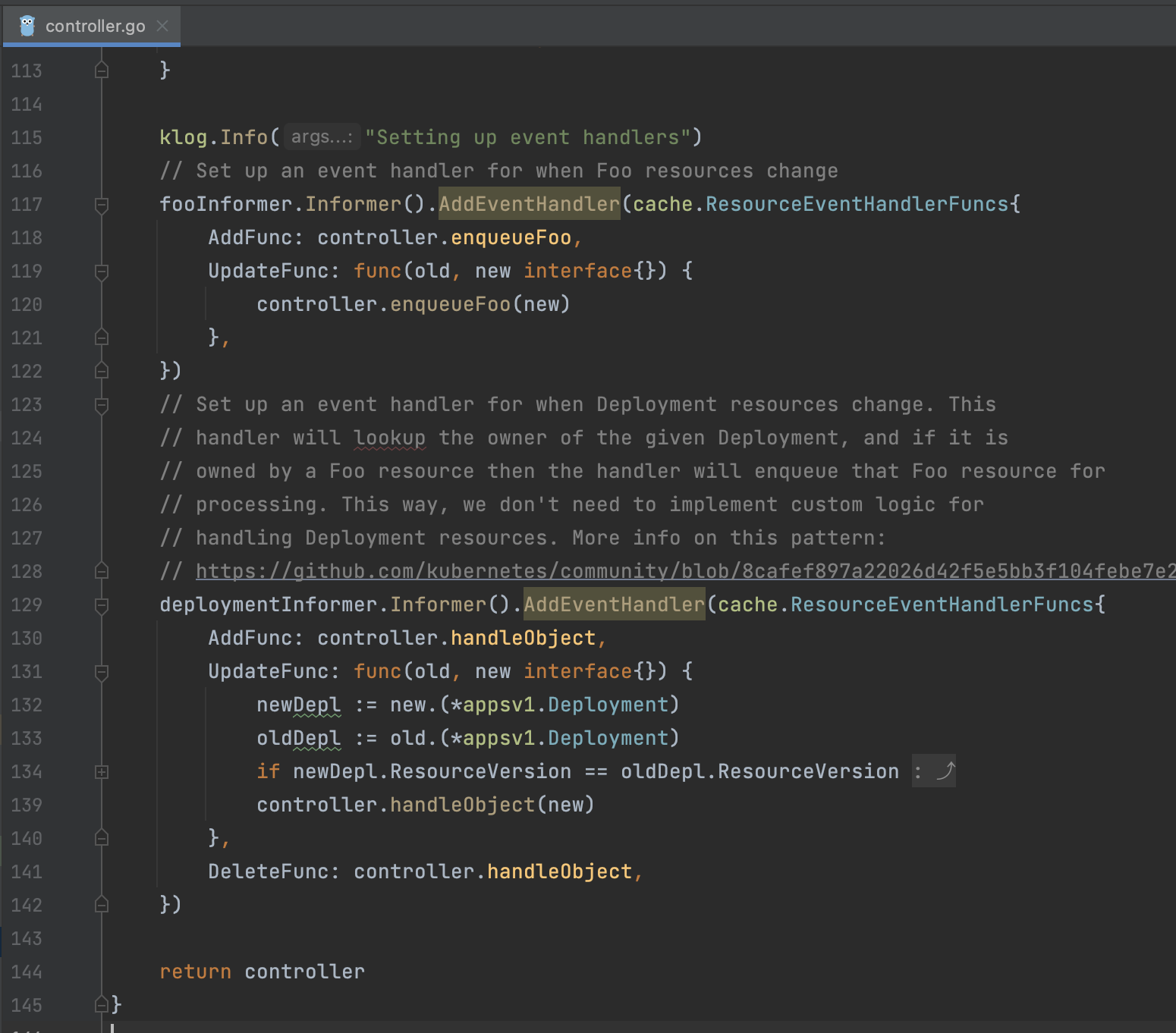

The controller registers event handlers for AddFunc, UpdateFunc and DeleteFunc. Each handler puts the affected object's key onto a work queue.

Workers consume the queue, and the actual business logic lives in the handler (`syncHandler`).
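The sketch below models the handler → queue → worker flow without client-go, using only the Go standard library. `Object`, `queue`, `handlers` and `runWorker` are hypothetical stand-ins. In sample-controller the real pieces are `cache.ResourceEventHandlerFuncs`, a rate-limited `workqueue` and `syncHandler`. The model shows two things:
- Event handlers only enqueue a `namespace/name` key.
- Repeated events for the same object collapse into one pending key.

```go
package main

import (
	"fmt"
	"sync"
)

// Object is a hypothetical stand-in for a Kubernetes object; only the
// fields needed to build a queue key are modelled.
type Object struct {
	Namespace, Name string
}

// keyFunc follows the "namespace/name" format of client-go's
// cache.MetaNamespaceKeyFunc (cluster-scoped objects have no namespace).
func keyFunc(o Object) string {
	if o.Namespace == "" {
		return o.Name
	}
	return o.Namespace + "/" + o.Name
}

// queue is a tiny FIFO that, like client-go's workqueue, ignores a key
// that is already waiting, so bursts of events for one object collapse.
type queue struct {
	mu    sync.Mutex
	items []string
	dirty map[string]bool
}

func newQueue() *queue { return &queue{dirty: map[string]bool{}} }

func (q *queue) Add(key string) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if q.dirty[key] {
		return
	}
	q.dirty[key] = true
	q.items = append(q.items, key)
}

// Get pops the next key; ok is false when the queue is empty.
func (q *queue) Get() (key string, ok bool) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if len(q.items) == 0 {
		return "", false
	}
	key, q.items = q.items[0], q.items[1:]
	delete(q.dirty, key)
	return key, true
}

// handlers plays the role of AddFunc/UpdateFunc/DeleteFunc: each one only
// turns the event into a key and enqueues it, with no business logic.
type handlers struct{ q *queue }

func (h handlers) OnAdd(o Object) { h.q.Add(keyFunc(o)) }

func (h handlers) OnUpdate(oldObj, newObj Object) { h.q.Add(keyFunc(newObj)) }

func (h handlers) OnDelete(o Object) { h.q.Add(keyFunc(o)) }

// runWorker drains the queue and calls syncHandler for each key. A real
// controller would re-add a failed key with rate limiting; here we only log.
func runWorker(q *queue, syncHandler func(key string) error) []string {
	var processed []string
	for {
		key, ok := q.Get()
		if !ok {
			return processed
		}
		if err := syncHandler(key); err != nil {
			fmt.Println("sync failed:", key, err)
		}
		processed = append(processed, key)
	}
}

func main() {
	q := newQueue()
	h := handlers{q: q}
	foo := Object{Namespace: "default", Name: "foo"}
	h.OnAdd(foo)
	h.OnUpdate(foo, foo) // collapses into the pending "default/foo" key
	h.OnAdd(Object{Namespace: "default", Name: "bar"})
	done := runWorker(q, func(key string) error {
		fmt.Println("syncing", key)
		return nil
	})
	fmt.Println(done) // [default/foo default/bar]
}
```

Only keys are queued, so the worker always re-reads the latest state of the object instead of replaying individual events.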

That is about as simple as an example gets, but it is enough to understand the full process of defining a typical Operator.

The CRD definition and example usage are at https://github.com/kubernetes/sample-controller/tree/master/artifacts/examples

The Operators that middleware projects provide are much the same. Let's take two Redis Operators as examples and see what they actually do.

First, Redis Cluster. What are the core steps to create one by hand?

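Configure and start the servers -> `CLUSTER MEET IP PORT` so the nodes discover each other -> `CLUSTER ADDSLOTS` to assign slots by hand -> `CLUSTER REPLICATE` to set up master-replica relationships -> detect and recover from all kinds of failures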
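To show how much bookkeeping these manual steps involve, the Go program below prints the `redis-cli` commands for a hypothetical cluster with 3 masters and 3 replicas. It rests on a few assumptions:
- The node addresses are made up.
- The `{a..b}` brace expansion and the `$(...)` command substitution assume a bash shell.
- The slot split is modelled on the rounding used by `redis-cli --cluster create`. For three masters it yields 0-5460, 5461-10922 and 10923-16383.

The last step, failure detection and recovery, is not covered at all.

```go
package main

import (
	"fmt"
	"math"
	"strings"
)

const totalSlots = 16384 // a Redis Cluster always has 16384 hash slots

// Node is a hypothetical address of one redis-server running in cluster mode.
type Node struct {
	IP   string
	Port int
}

// splitSlots divides the slot space into n contiguous ranges, modelled on
// the rounding used by redis-cli --cluster create.
func splitSlots(n int) [][2]int {
	per := float64(totalSlots) / float64(n)
	ranges := make([][2]int, 0, n)
	first, cursor := 0, 0.0
	for i := 0; i < n; i++ {
		last := int(math.Round(cursor + per - 1))
		if last > totalSlots-1 || i == n-1 {
			last = totalSlots - 1 // the last master takes whatever is left
		}
		if last < first {
			last = first
		}
		ranges = append(ranges, [2]int{first, last})
		first = last + 1
		cursor += per
	}
	return ranges
}

// cli formats one redis-cli invocation against node n.
func cli(n Node, args string) string {
	return fmt.Sprintf("redis-cli -h %s -p %d %s", n.IP, n.Port, args)
}

// bootstrapCommands lists the manual steps in order: MEET, ADDSLOTS, REPLICATE.
// Replica i is attached to master i (modulo the number of masters).
func bootstrapCommands(masters, replicas []Node) []string {
	if len(masters) == 0 {
		return nil
	}
	all := append(append([]Node{}, masters...), replicas...)
	var cmds []string
	// 1. Introduce every node to the first one; gossip spreads the rest.
	for _, n := range all[1:] {
		cmds = append(cmds, cli(all[0], fmt.Sprintf("CLUSTER MEET %s %d", n.IP, n.Port)))
	}
	// 2. Give each master one contiguous slot range (bash brace expansion).
	for i, r := range splitSlots(len(masters)) {
		cmds = append(cmds, cli(masters[i], fmt.Sprintf("CLUSTER ADDSLOTS {%d..%d}", r[0], r[1])))
	}
	// 3. Point each replica at its master's node ID, looked up with CLUSTER MYID.
	for i, r := range replicas {
		m := masters[i%len(masters)]
		cmds = append(cmds, cli(r, "CLUSTER REPLICATE $("+cli(m, "CLUSTER MYID")+")"))
	}
	return cmds
}

func main() {
	masters := []Node{{"10.0.0.1", 6379}, {"10.0.0.2", 6379}, {"10.0.0.3", 6379}}
	replicas := []Node{{"10.0.0.4", 6379}, {"10.0.0.5", 6379}, {"10.0.0.6", 6379}}
	fmt.Println(strings.Join(bootstrapCommands(masters, replicas), "\n"))
}
```

In practice you also have to wait between steps until gossip has spread the new nodes to every member, and you must repeat the whole process whenever a node is replaced. That is exactly the work an Operator takes over.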

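These steps are obviously tedious. Letting the cluster handle the operations automatically would be far more convenient, and that is what Operators like the following do: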

https://github.com/ucloud/redis-cluster-operator

It is built on operator-sdk: https://github.com/operator-framework/operator-sdk

Deploying it according to its documentation fails because of a version mismatch:

```text
error: error validating "redis.kun_redisclusterbackups_crd.yaml": error validating data: [ValidationError(CustomResourceDefinition.spec): unknown field "additionalPrinterColumns" in io.k8s.apiextensions-apiserver.pkg.apis.apiextensions.v1.CustomResourceDefinitionSpec, ValidationError(CustomResourceDefinition.spec): unknown field "subresources" in io.k8s.apiextensions-apiserver.pkg.apis.apiextensions.v1.CustomResourceDefinitionSpec, ValidationError(CustomResourceDefinition.spec): unknown field "validation" in io.k8s.apiextensions-apiserver.pkg.apis.apiextensions.v1.CustomResourceDefinitionSpec]; if you choose to ignore these errors, turn validation off with --validate=false
```

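The cluster serves CustomResourceDefinitions as `apiextensions.k8s.io/v1` (`v1beta1` was removed in Kubernetes 1.22), while the operator's manifests were written for `apiVersion: apiextensions.k8s.io/v1beta1`. In `v1`, `validation`, `subresources` and `additionalPrinterColumns` are no longer fields of `spec`. They are set on each entry of `spec.versions`, and `validation.openAPIV3Schema` becomes `schema.openAPIV3Schema`. Hence the errors above. So what can be done?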

Convert the manifests with kubectl-convert (https://kubernetes.io/docs/tasks/tools/install-kubectl-linux/)? That did not work.

Back on GitHub, the issues turned out to cover exactly this problem: https://github.com/ucloud/redis-cluster-operator/issues/95. Applying the manifest posted there by a contributor worked:

```yaml
# Thus this yaml has been adapted for k8s v1.22 as per:
# https://kubernetes.io/docs/reference/using-api/deprecation-guide/#customresourcedefinition-v122
#
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: distributedredisclusters.redis.kun
spec:
  group: redis.kun
  names:
    kind: DistributedRedisCluster
    listKind: DistributedRedisClusterList
    plural: distributedredisclusters
    singular: distributedrediscluster
    shortNames:
      - drc
  scope: Namespaced
  versions:
    - name: v1alpha1
      served: true
      storage: true
      subresources:
        status: {}
      additionalPrinterColumns:
        - name: MasterSize
          jsonPath: .spec.masterSize
          description: The number of redis master node in the ensemble
          type: integer
        - name: Status
          jsonPath: .status.status
          description: The status of redis cluster
          type: string
        - name: Age
          jsonPath: .metadata.creationTimestamp
          type: date
        - name: CurrentMasters
          jsonPath: .status.numberOfMaster
          priority: 1
          description: The current master number of redis cluster
          type: integer
        - name: Images
          jsonPath: .spec.image
          priority: 1
          description: The image of redis cluster
          type: string
      schema:
        openAPIV3Schema:
          description: DistributedRedisCluster is the Schema for the distributedredisclusters API
          type: object
          properties:
            apiVersion:
              description: 'APIVersion defines the versioned schema of this representation
                of an object. Servers should convert recognized schemas to the latest
                internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#resources'
              type: string
            kind:
              description: 'Kind is a string value representing the REST resource this
                object represents. Servers may infer this from the endpoint the client
                submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#types-kinds'
              type: string
            metadata:
              type: object
            spec:
              description: DistributedRedisClusterSpec defines the desired state of DistributedRedisCluster
              type: object
              properties:
                masterSize:
                  format: int32
                  type: integer
                  minimum: 1
                  maximum: 3
                clusterReplicas:
                  format: int32
                  type: integer
                  minimum: 1
                  maximum: 3
                serviceName:
                  type: string
                  pattern: '[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*'
                annotations:
                  additionalProperties:
                    type: string
                  type: object
                config:
                  additionalProperties:
                    type: string
                  type: object
                passwordSecret:
                  additionalProperties:
                    type: string
                  type: object
                storage:
                  type: object
                  properties:
                    type:
                      type: string
                    size:
                      type: string
                    class:
                      type: string
                    deleteClaim:
                      type: boolean
                image:
                  type: string
                securityContext:
                  description: 'SecurityContext defines the security options the container should be run with'
                  type: object
                  properties:
                    allowPrivilegeEscalation:
                      type: boolean
                    privileged:
                      type: boolean
                    readOnlyRootFilesystem:
                      type: boolean
                    capabilities:
                      type: object
                      properties:
                        add:
                          items:
                            type: string
                          type: array
                        drop:
                          items:
                            type: string
                          type: array
                    sysctls:
                      items:
                        type: object
                        properties:
                          name:
                            type: string
                          value:
                            type: string
                        required:
                          - name
                          - value
                      type: array
                resources:
                  type: object
                  properties:
                    requests:
                      type: object
                      additionalProperties:
                        type: string
                    limits:
                      type: object
                      additionalProperties:
                        type: string
                tolerations:
                  type: array
                  items:
                    type: object
                    properties:
                      effect:
                        type: string
                      key:
                        type: string
                      operator:
                        type: string
                      tolerationSeconds:
                        type: integer
                        format: int64
                      value:
                        type: string
            status:
              description: DistributedRedisClusterStatus defines the observed state of DistributedRedisCluster
              type: object
              properties:
                numberOfMaster:
                  type: integer
                  format: int32
                reason:
                  type: string
                status:
                  type: string
                maxReplicationFactor:
                  type: integer
                  format: int32
                minReplicationFactor:
                  type: integer
                  format: int32
---
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: redisclusterbackups.redis.kun
spec:
  group: redis.kun
  names:
    kind: RedisClusterBackup
    listKind: RedisClusterBackupList
    plural: redisclusterbackups
    singular: redisclusterbackup
    shortNames:
      - drcb
  scope: Namespaced
  versions:
    - name: v1alpha1
      # Each version can be enabled/disabled by Served flag.
      served: true
      # One and only one version must be marked as the storage version.
      storage: true
      subresources:
        status: {}
      additionalPrinterColumns:
        - jsonPath: .metadata.creationTimestamp
          name: Age
          type: date
        - jsonPath: .status.phase
          description: The phase of redis cluster backup
          name: Phase
          type: string
      schema:
        openAPIV3Schema:
          description: RedisClusterBackup is the Schema for the redisclusterbackups API
          properties:
            apiVersion:
              description: 'APIVersion defines the versioned schema of this representation
                of an object. Servers should convert recognized schemas to the latest
                internal value, and may reject unrecognized values. More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#resources'
              type: string
            kind:
              description: 'Kind is a string value representing the REST resource this
                object represents. Servers may infer this from the endpoint the client
                submits requests to. Cannot be updated. In CamelCase. More info: https://git.k8s.io/community/contributors/devel/api-conventions.md#types-kinds'
              type: string
            metadata:
              type: object
            spec:
              description: RedisClusterBackupSpec defines the desired state of RedisClusterBackup
              type: object
            status:
              description: RedisClusterBackupStatus defines the observed state of RedisClusterBackup
              type: object
          type: object
```

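Now let's look at the overall logic of the code, skipping the less important parts. The entry point is `main.go`. It creates a manager, registers the custom types' Scheme and the controllers with it, and then starts the manager.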

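The directory layout largely follows common Kubernetes project conventions:
- `cmd` holds the entry point.
- `pkg` holds the main program.
- `api` holds the API type definitions.
- `controller` holds the concrete watches and event handling.
- The remaining utility packages make it easier to operate on Kubernetes, talk to Redis, create the various resources, and so on.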

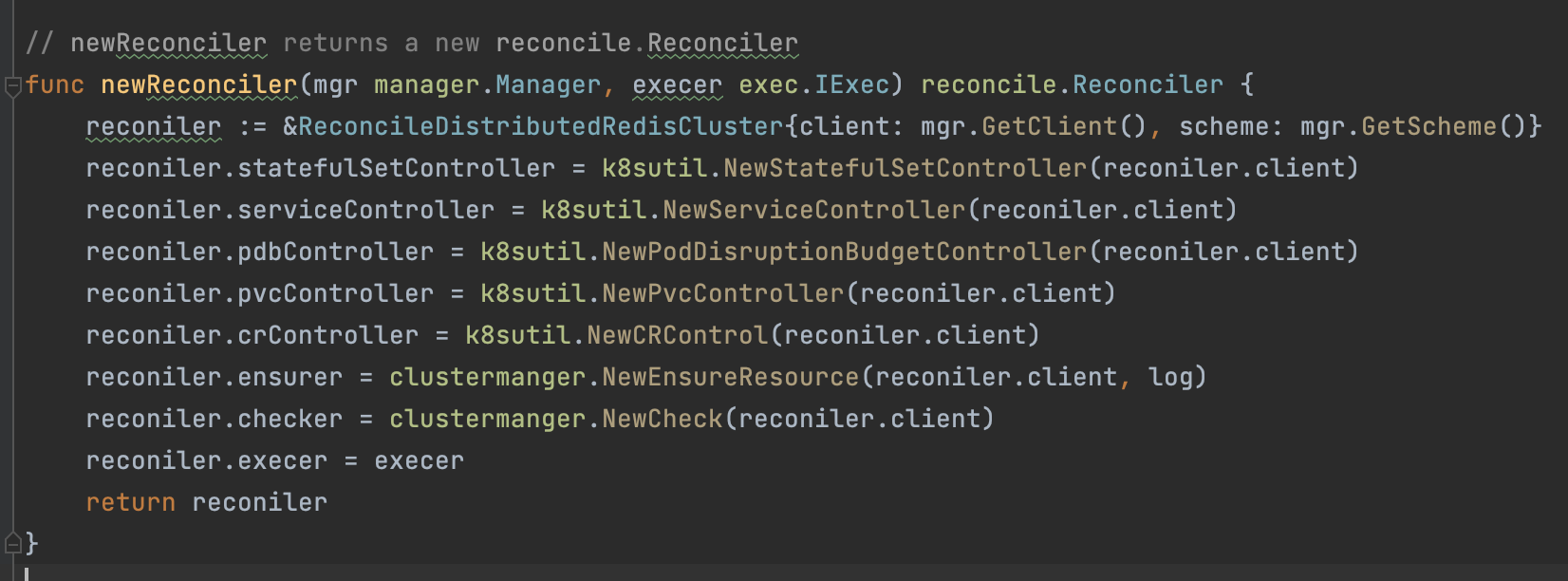

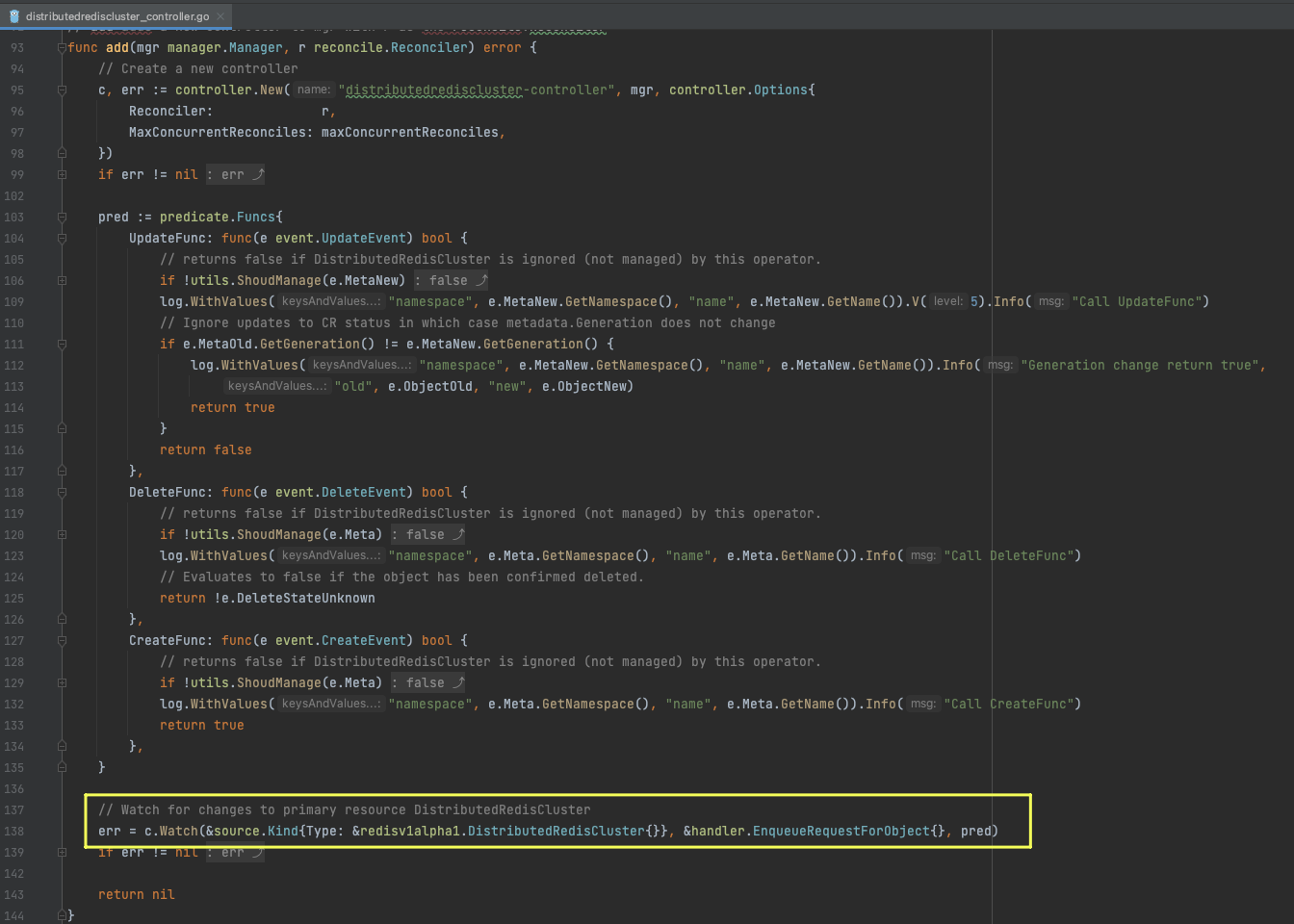

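Take the cluster controller as an example. It adds a Reconciler.

It watches create, update and delete events and registers the corresponding handling logic.

The reconcile logic fetches the cluster definition and compares the actual state with the desired state. It then builds the cluster, and it detects anomalies and recovers from them automatically.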
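The compare-then-act idea fits in a few lines. The sketch below is hypothetical: `Spec`, `Observed` and `reconcile` are illustrative only, and their field names loosely follow the CRD's `masterSize` and `clusterReplicas`. It assumes each master has `clusterReplicas` replicas, so the desired pod count is `masterSize * (1 + clusterReplicas)`. It returns the next action instead of performing it.

```go
package main

import "fmt"

// Spec is a hypothetical, trimmed-down desired state (names follow the CRD).
type Spec struct {
	MasterSize      int
	ClusterReplicas int
}

// Observed is a hypothetical snapshot of what the cluster looks like now.
type Observed struct {
	ReadyPods  int  // pods that are running and ready
	Masters    int  // masters that currently own slots
	Consistent bool // every node reports the same cluster view
}

// reconcile is level-triggered: it looks only at desired vs. observed state,
// never at which event triggered it, and returns the next action to take.
func reconcile(spec Spec, obs Observed) string {
	wantPods := spec.MasterSize * (1 + spec.ClusterReplicas)
	switch {
	case obs.ReadyPods < wantPods:
		return fmt.Sprintf("wait for pods: %d/%d ready", obs.ReadyPods, wantPods)
	case !obs.Consistent:
		return "requeue: cluster view is inconsistent"
	case obs.Masters < spec.MasterSize:
		return fmt.Sprintf("scale up: assign slots to %d new master(s)", spec.MasterSize-obs.Masters)
	case obs.Masters > spec.MasterSize:
		return fmt.Sprintf("scale down: move slots off %d master(s)", obs.Masters-spec.MasterSize)
	default:
		return "OK"
	}
}

func main() {
	spec := Spec{MasterSize: 3, ClusterReplicas: 1}
	fmt.Println(reconcile(spec, Observed{ReadyPods: 4}))                             // wait for pods: 4/6 ready
	fmt.Println(reconcile(spec, Observed{ReadyPods: 6, Masters: 3}))                 // requeue: cluster view is inconsistent
	fmt.Println(reconcile(spec, Observed{ReadyPods: 6, Masters: 3, Consistent: true})) // OK
}
```

The decision depends only on the current desired and observed state. Calling it again after a missed or duplicated event therefore gives the same answer, which is why a Reconcile can safely be retried.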

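In an Operator, Reconcile is called whenever a watched resource changes. With controller-runtime (the library underneath kubebuilder and operator-sdk), Reconcile can return `reconcile.Result{RequeueAfter: SyncBuildStatusInterval}` to set when the next run happens. The controller puts the request for that resource back on the work queue, takes it out again once the `RequeueAfter` interval has elapsed, and calls Reconcile again. Spacing out retries this way avoids hot loops and frequent writes to etcd, so the Kubernetes cluster itself is not put under pressure.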

```go
// Reconcile reads that state of the cluster for a DistributedRedisCluster object and makes changes based on the state read
// and what is in the DistributedRedisCluster.Spec
// Note:
// The Controller will requeue the Request to be processed again if the returned error is non-nil or
// Result.Requeue is true, otherwise upon completion it will remove the work from the queue.
func (r *ReconcileDistributedRedisCluster) Reconcile(request reconcile.Request) (reconcile.Result, error) {
	reqLogger := log.WithValues("Request.Namespace", request.Namespace, "Request.Name", request.Name)
	reqLogger.Info("Reconciling DistributedRedisCluster")

	// Fetch the DistributedRedisCluster instance
	instance := &redisv1alpha1.DistributedRedisCluster{}
	err := r.client.Get(context.TODO(), request.NamespacedName, instance)
	if err != nil {
		if errors.IsNotFound(err) {
			return reconcile.Result{}, nil
		}
		return reconcile.Result{}, err
	}

	ctx := &syncContext{
		cluster:   instance,
		reqLogger: reqLogger,
	}

	err = r.ensureCluster(ctx)
	if err != nil {
		switch GetType(err) {
		case StopRetry:
			reqLogger.Info("invalid", "err", err)
			return reconcile.Result{}, nil
		}
		reqLogger.WithValues("err", err).Info("ensureCluster")
		newStatus := instance.Status.DeepCopy()
		SetClusterScaling(newStatus, err.Error())
		r.updateClusterIfNeed(instance, newStatus, reqLogger)
		return reconcile.Result{RequeueAfter: requeueAfter}, nil
	}

	matchLabels := getLabels(instance)
	redisClusterPods, err := r.statefulSetController.GetStatefulSetPodsByLabels(instance.Namespace, matchLabels)
	if err != nil {
		return reconcile.Result{}, Kubernetes.Wrap(err, "GetStatefulSetPods")
	}

	ctx.pods = clusterPods(redisClusterPods.Items)
	reqLogger.V(6).Info("debug cluster pods", "", ctx.pods)
	ctx.healer = clustermanger.NewHealer(&heal.CheckAndHeal{
		Logger:     reqLogger,
		PodControl: k8sutil.NewPodController(r.client),
		Pods:       ctx.pods,
		DryRun:     false,
	})
	err = r.waitPodReady(ctx)
	if err != nil {
		switch GetType(err) {
		case Kubernetes:
			return reconcile.Result{}, err
		}
		reqLogger.WithValues("err", err).Info("waitPodReady")
		newStatus := instance.Status.DeepCopy()
		SetClusterScaling(newStatus, err.Error())
		r.updateClusterIfNeed(instance, newStatus, reqLogger)
		return reconcile.Result{RequeueAfter: requeueAfter}, nil
	}

	password, err := statefulsets.GetClusterPassword(r.client, instance)
	if err != nil {
		return reconcile.Result{}, Kubernetes.Wrap(err, "getClusterPassword")
	}

	admin, err := newRedisAdmin(ctx.pods, password, config.RedisConf(), reqLogger)
	if err != nil {
		return reconcile.Result{}, Redis.Wrap(err, "newRedisAdmin")
	}
	defer admin.Close()

	clusterInfos, err := admin.GetClusterInfos()
	if err != nil {
		if clusterInfos.Status == redisutil.ClusterInfosPartial {
			return reconcile.Result{}, Redis.Wrap(err, "GetClusterInfos")
		}
	}

	requeue, err := ctx.healer.Heal(instance, clusterInfos, admin)
	if err != nil {
		return reconcile.Result{}, Redis.Wrap(err, "Heal")
	}
	if requeue {
		return reconcile.Result{RequeueAfter: requeueAfter}, nil
	}

	ctx.admin = admin
	ctx.clusterInfos = clusterInfos
	err = r.waitForClusterJoin(ctx)
	if err != nil {
		switch GetType(err) {
		case Requeue:
			reqLogger.WithValues("err", err).Info("requeue")
			return reconcile.Result{RequeueAfter: requeueAfter}, nil
		}
		newStatus := instance.Status.DeepCopy()
		SetClusterFailed(newStatus, err.Error())
		r.updateClusterIfNeed(instance, newStatus, reqLogger)
		return reconcile.Result{}, err
	}

	// mark .Status.Restore.Phase = RestorePhaseRestart, will
	// remove init container and restore volume that referenced in stateulset for
	// dump RDB file from backup, then the redis master node will be restart.
	if instance.IsRestoreFromBackup() && instance.IsRestoreRunning() {
		reqLogger.Info("update restore redis cluster cr")
		instance.Status.Restore.Phase = redisv1alpha1.RestorePhaseRestart
		if err := r.crController.UpdateCRStatus(instance); err != nil {
			return reconcile.Result{}, err
		}
		if err := r.ensurer.UpdateRedisStatefulsets(instance, getLabels(instance)); err != nil {
			return reconcile.Result{}, err
		}
		waiter := &waitStatefulSetUpdating{
			name:                  "waitMasterNodeRestarting",
			timeout:               60 * time.Second,
			tick:                  5 * time.Second,
			statefulSetController: r.statefulSetController,
			cluster:               instance,
		}
		if err := waiting(waiter, ctx.reqLogger); err != nil {
			return reconcile.Result{}, err
		}
		return reconcile.Result{Requeue: true}, nil
	}

	// restore succeeded, then update cr and wait for the next Reconcile loop
	if instance.IsRestoreFromBackup() && instance.IsRestoreRestarting() {
		reqLogger.Info("update restore redis cluster cr")
		instance.Status.Restore.Phase = redisv1alpha1.RestorePhaseSucceeded
		if err := r.crController.UpdateCRStatus(instance); err != nil {
			return reconcile.Result{}, err
		}
		// set ClusterReplicas = Backup.Status.ClusterReplicas,
		// next Reconcile loop the statefulSet's replicas will increase by ClusterReplicas, then start the slave node
		instance.Spec.ClusterReplicas = instance.Status.Restore.Backup.Status.ClusterReplicas
		if err := r.crController.UpdateCR(instance); err != nil {
			return reconcile.Result{}, err
		}
		return reconcile.Result{}, nil
	}

	if err := admin.SetConfigIfNeed(instance.Spec.Config); err != nil {
		return reconcile.Result{}, Redis.Wrap(err, "SetConfigIfNeed")
	}

	status := buildClusterStatus(clusterInfos, ctx.pods, instance, reqLogger)
	if is := r.isScalingDown(instance, reqLogger); is {
		SetClusterRebalancing(status, "scaling down")
	}
	reqLogger.V(4).Info("buildClusterStatus", "status", status)
	r.updateClusterIfNeed(instance, status, reqLogger)

	instance.Status = *status
	if needClusterOperation(instance, reqLogger) {
		reqLogger.Info(">>>>>> clustering")
		err = r.syncCluster(ctx)
		if err != nil {
			newStatus := instance.Status.DeepCopy()
			SetClusterFailed(newStatus, err.Error())
			r.updateClusterIfNeed(instance, newStatus, reqLogger)
			return reconcile.Result{}, err
		}
	}

	newClusterInfos, err := admin.GetClusterInfos()
	if err != nil {
		if clusterInfos.Status == redisutil.ClusterInfosPartial {
			return reconcile.Result{}, Redis.Wrap(err, "GetClusterInfos")
		}
	}
	newStatus := buildClusterStatus(newClusterInfos, ctx.pods, instance, reqLogger)
	SetClusterOK(newStatus, "OK")
	r.updateClusterIfNeed(instance, newStatus, reqLogger)
	return reconcile.Result{RequeueAfter: time.Duration(reconcileTime) * time.Second}, nil
}
```

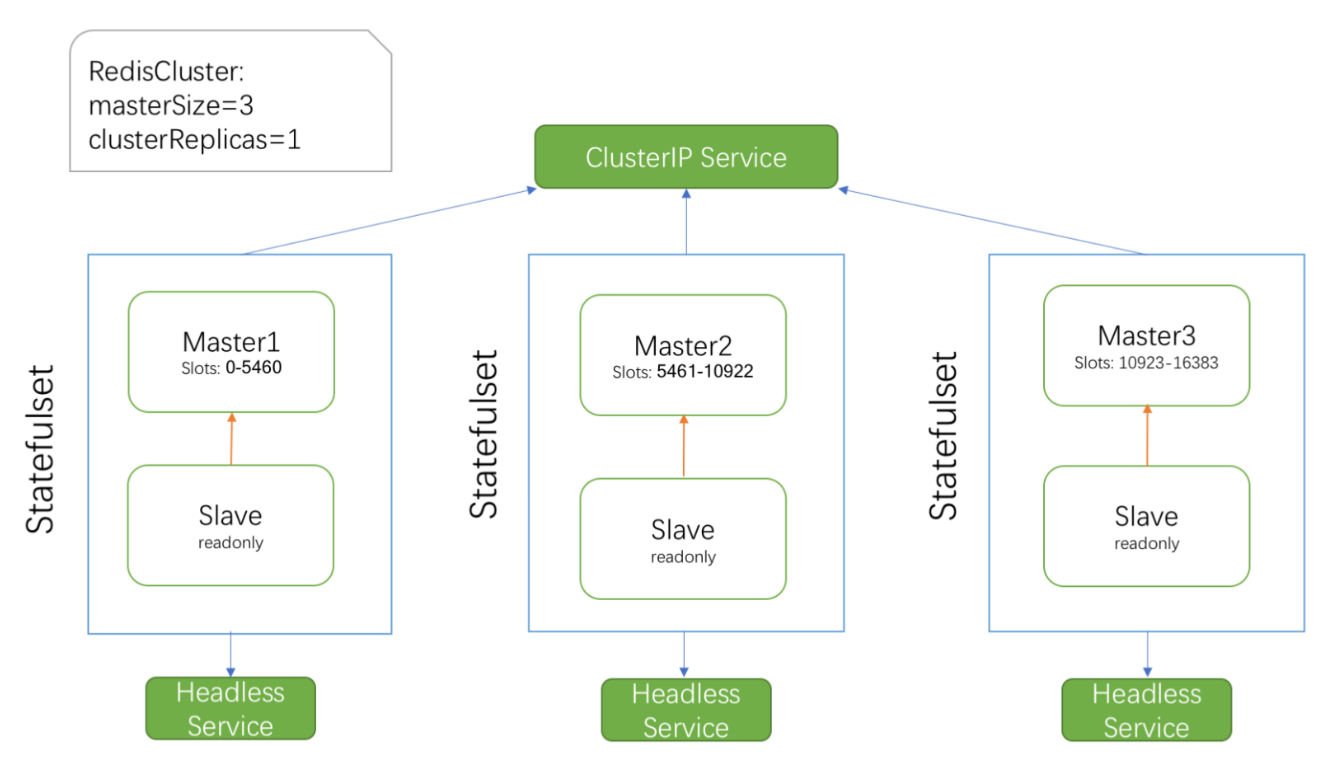

Create a cluster with the following CR:

```yaml
apiVersion: redis.kun/v1alpha1
kind: DistributedRedisCluster
metadata:
  name: example-distributedrediscluster
  namespace: default
spec:
  image: redis:5.0.4-alpine
  imagePullPolicy: IfNotPresent
  masterSize: 3
  clusterReplicas: 1
  serviceName: redis-svc
  # resources config
  resources:
    limits:
      cpu: 300m
      memory: 200Mi
    requests:
      cpu: 200m
      memory: 150Mi
  # pv storage
  storage:
    type: persistent-claim
    size: 1Gi
    class: disk
    deleteClaim: true
```
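What does this CR turn into? The sketch below rests on an assumption suggested by the StatefulSet name `drc-example-distributedrediscluster-2` in the log below: the operator creates one StatefulSet per master, named `drc-<name>-<index>`, with `1 + clusterReplicas` pods each. Under that assumption, the spec above yields 3 StatefulSets and 6 Redis pods. The sketch also re-applies the 1..3 bounds from the CRD schema, which the API server already enforces when the CR is applied. `ClusterSpec`, `StatefulSetPlan`, `validate` and `plan` are hypothetical helpers.

```go
package main

import "fmt"

// ClusterSpec holds the hypothetical subset of the CR needed here.
type ClusterSpec struct {
	Name            string
	MasterSize      int
	ClusterReplicas int
}

// StatefulSetPlan is one shard: a master plus its replicas.
type StatefulSetPlan struct {
	Name     string
	Replicas int
}

// validate re-applies the minimum/maximum bounds declared in the CRD schema.
func validate(s ClusterSpec) error {
	if s.MasterSize < 1 || s.MasterSize > 3 {
		return fmt.Errorf("masterSize %d not in [1,3]", s.MasterSize)
	}
	if s.ClusterReplicas < 1 || s.ClusterReplicas > 3 {
		return fmt.Errorf("clusterReplicas %d not in [1,3]", s.ClusterReplicas)
	}
	return nil
}

// plan assumes one StatefulSet per master named drc-<name>-<index>,
// each running 1 master plus ClusterReplicas replicas.
func plan(s ClusterSpec) ([]StatefulSetPlan, error) {
	if err := validate(s); err != nil {
		return nil, err
	}
	out := make([]StatefulSetPlan, 0, s.MasterSize)
	for i := 0; i < s.MasterSize; i++ {
		out = append(out, StatefulSetPlan{
			Name:     fmt.Sprintf("drc-%s-%d", s.Name, i),
			Replicas: 1 + s.ClusterReplicas,
		})
	}
	return out, nil
}

func main() {
	sets, err := plan(ClusterSpec{Name: "example-distributedrediscluster", MasterSize: 3, ClusterReplicas: 1})
	if err != nil {
		fmt.Println("invalid:", err)
		return
	}
	total := 0
	for _, s := range sets {
		fmt.Println(s.Name, s.Replicas)
		total += s.Replicas
	}
	fmt.Println("pods:", total)
}
```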

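The resulting deployment matches the diagram in the project's official documentation. On creation, the Operator takes care of:

- configuring and starting the servers;
- `CLUSTER MEET IP PORT`, so the nodes discover each other;
- `CLUSTER ADDSLOTS`, to assign slots;
- `CLUSTER REPLICATE`, to set up master-replica relationships;
- creating the Service and other resources;
- monitoring the resources and their status.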
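The `wait for cluster join: Cluster view is inconsistent` requeues in the log below come from waiting, after `CLUSTER MEET`, until all nodes agree on the cluster layout. One way to sketch such a check is to build a per-node "configuration signature" and compare them. A signature lists the node IDs a node knows about plus the slots it believes each one owns. This is similar in spirit to the consistency check `redis-cli --cluster check` performs. `NodeView`, `signature` and `consistent` are hypothetical, and the operator's own check may differ.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// NodeView is what one node reports (e.g. parsed from CLUSTER NODES):
// node ID -> the slot ranges it believes that node owns ("" for none).
type NodeView map[string]string

// signature renders a view in a canonical order so views can be compared.
func signature(v NodeView) string {
	ids := make([]string, 0, len(v))
	for id := range v {
		ids = append(ids, id)
	}
	sort.Strings(ids)
	var b strings.Builder
	for _, id := range ids {
		b.WriteString(id + "=" + v[id] + ";")
	}
	return b.String()
}

// consistent reports whether every node has the same view of the cluster.
func consistent(views map[string]NodeView) bool {
	want, first := "", true
	for _, v := range views {
		s := signature(v)
		if first {
			want, first = s, false
			continue
		}
		if s != want {
			return false
		}
	}
	return true
}

func main() {
	full := NodeView{"a": "0-8191", "b": "8192-16383"}
	views := map[string]NodeView{"a": full, "b": full}
	fmt.Println(consistent(views)) // true

	// Node b has not yet learned about a via gossip after CLUSTER MEET.
	views["b"] = NodeView{"b": "8192-16383"}
	fmt.Println(consistent(views)) // false: an operator would requeue and check again
}
```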

Operator reconcile log (the last rounds end with a `split brain` error that asks for a manual fix):

```text
{"level":"info","ts":1674011374.0957515,"logger":"cmd","msg":"Go Version: go1.13.3"}
{"level":"info","ts":1674011374.095774,"logger":"cmd","msg":"Go OS/Arch: linux/amd64"}
{"level":"info","ts":1674011374.095777,"logger":"cmd","msg":"Version of operator-sdk: v0.13.0"}
{"level":"info","ts":1674011374.0957801,"logger":"cmd","msg":"Version of operator: v0.2.0-62+12f703c"}
{"level":"info","ts":1674011374.0959022,"logger":"leader","msg":"Trying to become the leader."}
{"level":"info","ts":1674011374.720209,"logger":"leader","msg":"Found existing lock","LockOwner":"redis-cluster-operator-7467976bc5-87f89"}
{"level":"info","ts":1674011374.7400174,"logger":"leader","msg":"Not the leader. Waiting."}
{"level":"info","ts":1674011375.8324194,"logger":"leader","msg":"Not the leader. Waiting."}
{"level":"info","ts":1674011378.1799858,"logger":"leader","msg":"Became the leader."}
{"level":"info","ts":1674011378.7850115,"logger":"controller-runtime.metrics","msg":"metrics server is starting to listen","addr":"0.0.0.0:8383"}
{"level":"info","ts":1674011378.7851572,"logger":"cmd","msg":"Registering Components."}
{"level":"info","ts":1674011381.2693467,"logger":"metrics","msg":"Metrics Service object updated","Service.Name":"redis-cluster-operator-metrics","Service.Namespace":"default"}
{"level":"info","ts":1674011381.873133,"logger":"cmd","msg":"Could not create ServiceMonitor object","error":"no ServiceMonitor registered with the API"}
{"level":"info","ts":1674011381.8731618,"logger":"cmd","msg":"Install prometheus-operator in your cluster to create ServiceMonitor objects","error":"no ServiceMonitor registered with the API"}
{"level":"info","ts":1674011381.873167,"logger":"cmd","msg":"Starting the Cmd."}
{"level":"info","ts":1674011381.8733594,"logger":"controller-runtime.manager","msg":"starting metrics server","path":"/metrics"}
{"level":"info","ts":1674011381.8734646,"logger":"controller-runtime.controller","msg":"Starting EventSource","controller":"redisclusterbackup-controller","source":"kind source: /, Kind="}
{"level":"info","ts":1674011381.8735168,"logger":"controller-runtime.controller","msg":"Starting EventSource","controller":"distributedrediscluster-controller","source":"kind source: /, Kind="}
{"level":"info","ts":1674011381.9737544,"logger":"controller-runtime.controller","msg":"Starting EventSource","controller":"redisclusterbackup-controller","source":"kind source: /, Kind="}
{"level":"info","ts":1674011381.9740582,"logger":"controller-runtime.controller","msg":"Starting Controller","controller":"distributedrediscluster-controller"}
{"level":"info","ts":1674011381.9740942,"logger":"controller_distributedrediscluster","msg":"Call CreateFunc","namespace":"default","name":"example-distributedrediscluster"}
{"level":"info","ts":1674011382.074047,"logger":"controller-runtime.controller","msg":"Starting Controller","controller":"redisclusterbackup-controller"}
{"level":"info","ts":1674011382.0740838,"logger":"controller-runtime.controller","msg":"Starting workers","controller":"redisclusterbackup-controller","worker count":2}
{"level":"info","ts":1674011382.0741792,"logger":"controller-runtime.controller","msg":"Starting workers","controller":"distributedrediscluster-controller","worker count":4}
{"level":"info","ts":1674011382.0742629,"logger":"controller_distributedrediscluster","msg":"Reconciling DistributedRedisCluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011382.617124,"logger":"controller_distributedrediscluster","msg":">>> Sending CLUSTER MEET messages to join the cluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011382.6185405,"logger":"controller_distributedrediscluster.redis_util","msg":"node 10.43.0.198:6379 attached properly","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011383.6385891,"logger":"controller_distributedrediscluster","msg":"requeue","Request.Namespace":"default","Request.Name":"example-distributedrediscluster","err":"wait for cluster join: Cluster view is inconsistent"}
{"level":"info","ts":1674011393.6389103,"logger":"controller_distributedrediscluster","msg":"Reconciling DistributedRedisCluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011393.6871004,"logger":"controller_distributedrediscluster","msg":">>> Sending CLUSTER MEET messages to join the cluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011393.688571,"logger":"controller_distributedrediscluster.redis_util","msg":"node 10.43.0.198:6379 attached properly","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011394.7059786,"logger":"controller_distributedrediscluster","msg":"requeue","Request.Namespace":"default","Request.Name":"example-distributedrediscluster","err":"wait for cluster join: Cluster view is inconsistent"}
{"level":"info","ts":1674011404.7062836,"logger":"controller_distributedrediscluster","msg":"Reconciling DistributedRedisCluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011404.7589452,"logger":"controller_distributedrediscluster","msg":">>> Sending CLUSTER MEET messages to join the cluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011404.76041,"logger":"controller_distributedrediscluster.redis_util","msg":"node 10.43.0.68:6379 attached properly","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011405.7788498,"logger":"controller_distributedrediscluster","msg":"requeue","Request.Namespace":"default","Request.Name":"example-distributedrediscluster","err":"wait for cluster join: Cluster view is inconsistent"}
{"level":"info","ts":1674011415.7791476,"logger":"controller_distributedrediscluster","msg":"Reconciling DistributedRedisCluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011415.8322582,"logger":"controller_distributedrediscluster","msg":">>> Sending CLUSTER MEET messages to join the cluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011415.8336947,"logger":"controller_distributedrediscluster.redis_util","msg":"node 10.43.0.134:6379 attached properly","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011416.8505604,"logger":"controller_distributedrediscluster","msg":"requeue","Request.Namespace":"default","Request.Name":"example-distributedrediscluster","err":"wait for cluster join: Cluster view is inconsistent"}
{"level":"info","ts":1674011426.850888,"logger":"controller_distributedrediscluster","msg":"Reconciling DistributedRedisCluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011426.9262946,"logger":"controller_distributedrediscluster","msg":">>>>>> clustering","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"error","ts":1674011426.9263313,"logger":"controller_distributedrediscluster","msg":"fix manually","Request.Namespace":"default","Request.Name":"example-distributedrediscluster","statefulSet":"drc-example-distributedrediscluster-2","masters":"{Redis ID: feb151649216937e13721cb36a671ca8b1425ed8, role: Master, master: , link: connected, status: [], addr: 10.43.1.4:6379, slots: [5461-10922], len(migratingSlots): 0, len(importingSlots): 0},{Redis ID: e0129438837cd65796a88997848a660b8e73b9b8, role: Master, master: , link: connected, status: [], addr: 10.43.0.15:6379, slots: [10923-16383], len(migratingSlots): 0, len(importingSlots): 0}","error":"split brain","stacktrace":"github.com/go-logr/zapr.(*zapLogger).Error\n\t/go/pkg/mod/github.com/go-logr/zapr@v0.1.1/zapr.go:128\ngithub.com/ucloud/redis-cluster-operator/pkg/controller/clustering.(*Ctx).DispatchMasters\n\t/src/pkg/controller/clustering/placement_v2.go:77\ngithub.com/ucloud/redis-cluster-operator/pkg/controller/distributedrediscluster.(*ReconcileDistributedRedisCluster).syncCluster\n\t/src/pkg/controller/distributedrediscluster/sync_handler.go:200\ngithub.com/ucloud/redis-cluster-operator/pkg/controller/distributedrediscluster.(*ReconcileDistributedRedisCluster).Reconcile\n\t/src/pkg/controller/distributedrediscluster/distributedrediscluster_controller.go:326\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\t/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.4.0/pkg/internal/controller/controller.go:256\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\t/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.4.0/pkg/internal/controller/controller.go:232\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).worker\n\t/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.4.0/pkg/internal/controller/controller.go:211\nk8s.io/apimachinery/pkg/util/wait.JitterUntil.func1\n\t/go/pkg/mod/k8s.io/apimachinery@v0.0.0-20191004115801-a2eda9f80ab8/pkg/util/wait/wait.go:152\nk8s.io/apimachinery/pkg/util/wait.JitterUntil\n\t/go/pkg/mod/k8s.io/apimachinery@v0.0.0-20191004115801-a2eda9f80ab8/pkg/util/wait/wait.go:153\nk8s.io/apimachinery/pkg/util/wait.Until\n\t/go/pkg/mod/k8s.io/apimachinery@v0.0.0-20191004115801-a2eda9f80ab8/pkg/util/wait/wait.go:88"}
{"level":"error","ts":1674011426.9368975,"logger":"controller-runtime.controller","msg":"Reconciler error","controller":"distributedrediscluster-controller","request":"default/example-distributedrediscluster","error":"DispatchMasters: split brain: drc-example-distributedrediscluster-2","stacktrace":"github.com/go-logr/zapr.(*zapLogger).Error\n\t/go/pkg/mod/github.com/go-logr/zapr@v0.1.1/zapr.go:128\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).reconcileHandler\n\t/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.4.0/pkg/internal/controller/controller.go:258\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).processNextWorkItem\n\t/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.4.0/pkg/internal/controller/controller.go:232\nsigs.k8s.io/controller-runtime/pkg/internal/controller.(*Controller).worker\n\t/go/pkg/mod/sigs.k8s.io/controller-runtime@v0.4.0/pkg/internal/controller/controller.go:211\nk8s.io/apimachinery/pkg/util/wait.JitterUntil.func1\n\t/go/pkg/mod/k8s.io/apimachinery@v0.0.0-20191004115801-a2eda9f80ab8/pkg/util/wait/wait.go:152\nk8s.io/apimachinery/pkg/util/wait.JitterUntil\n\t/go/pkg/mod/k8s.io/apimachinery@v0.0.0-20191004115801-a2eda9f80ab8/pkg/util/wait/wait.go:153\nk8s.io/apimachinery/pkg/util/wait.Until\n\t/go/pkg/mod/k8s.io/apimachinery@v0.0.0-20191004115801-a2eda9f80ab8/pkg/util/wait/wait.go:88"}
{"level":"info","ts":1674011426.9420218,"logger":"controller_distributedrediscluster","msg":"Reconciling DistributedRedisCluster","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
{"level":"info","ts":1674011426.997737,"logger":"controller_distributedrediscluster","msg":">>>>>> clustering","Request.Namespace":"default","Request.Name":"example-distributedrediscluster"}
```

The Sentinel Operator works on the same principles, so I won't repeat the details:

https://github.com/ucloud/redis-operator
