Iptables

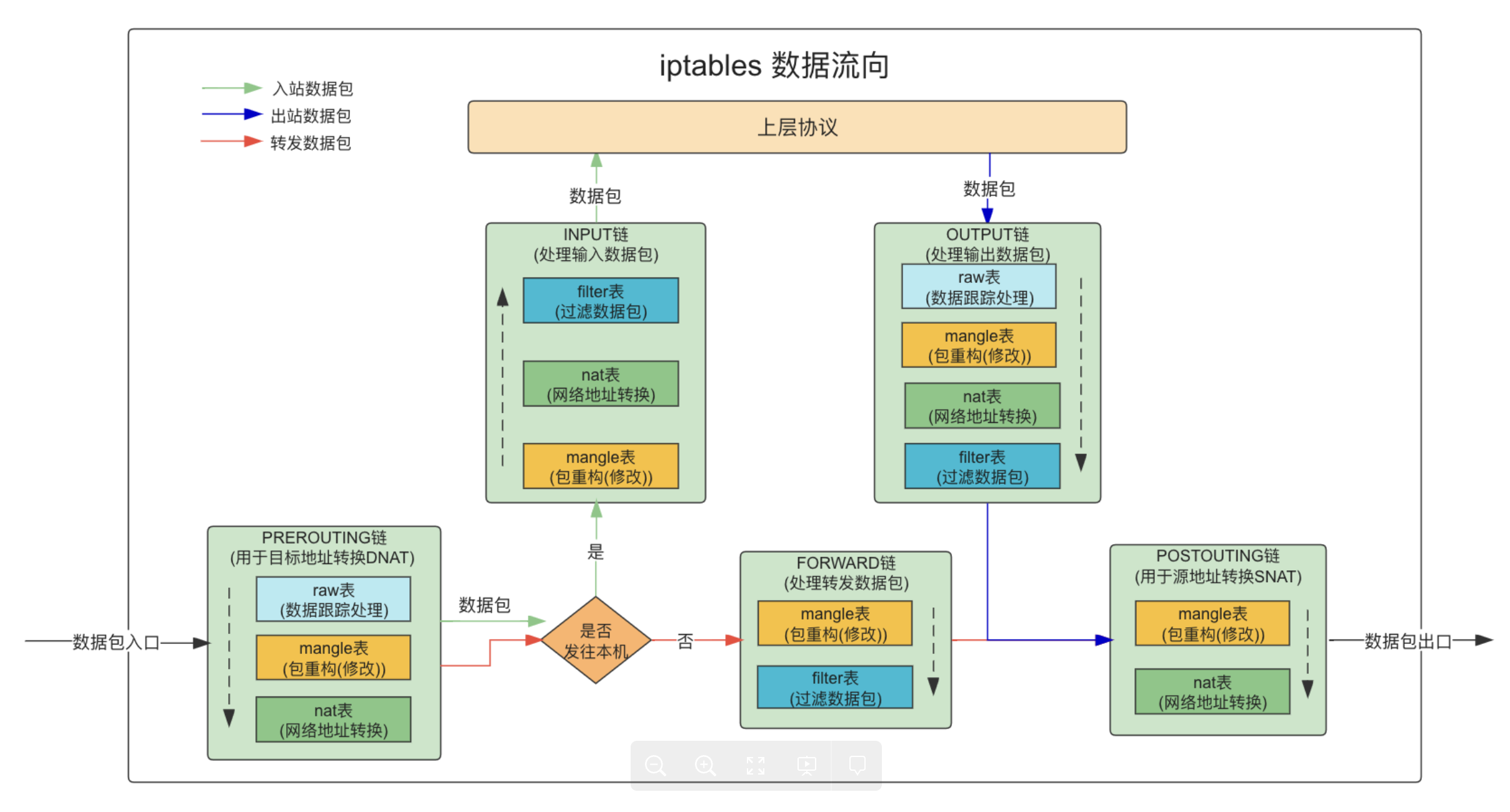

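The figure above shows how the five chains and four tables fit together. In container environments the most heavily used tables are filter and nat, plus various custom chains inserted at each stage to intercept traffic and apply different controls.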

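- filter table: matches packets in order to filter them

- nat table: rewrites packets to translate addresses or ports

- mangle table: modifies IP header fields such as the TTL, or marks packets to implement QoS or policy-based routing

- raw table: lets packets bypass iptables' connection tracking (conntrack runs after the raw table and before the mangle table). Once a packet matches a NOTRACK rule in raw, the later connection tracking and address/port translation are all skipped for it, which can speed up the handling of specific kinds of traffic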

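Key interception points: PREROUTING is the first chain for traffic arriving from outside, OUTPUT is the first chain for traffic sent by local processes, and POSTROUTING is the last chain a packet passes through before it leaves the host's network stack.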
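The traversal order in the figure can also be written down as data. Below is a minimal Python sketch, assuming the standard netfilter hook priorities (raw, then connection tracking, then mangle, nat, filter) and leaving out the security table; `CHAIN_TABLES`, `PATHS` and `traversal` are illustrative names, not part of any tool.

```python
# Tables consulted at each built-in chain, in the order netfilter runs them
# (lower hook priority first). Connection tracking runs right after "raw".
# The security table is left out, matching the "four tables" view above.
CHAIN_TABLES = {
    "PREROUTING": ["raw", "mangle", "nat"],        # nat here = DNAT
    "INPUT": ["mangle", "filter", "nat"],          # nat INPUT exists on recent kernels
    "FORWARD": ["mangle", "filter"],
    "OUTPUT": ["raw", "mangle", "nat", "filter"],  # nat here = DNAT for local traffic
    "POSTROUTING": ["mangle", "nat"],              # nat here = SNAT / MASQUERADE
}

# The three basic packet paths through a host.
PATHS = {
    "inbound": ["PREROUTING", "INPUT"],                   # destined to a local process
    "forward": ["PREROUTING", "FORWARD", "POSTROUTING"],  # routed through the host
    "outbound": ["OUTPUT", "POSTROUTING"],                # sent by a local process
}


def traversal(path: str) -> list:
    """Return the (chain, table) pairs a packet on `path` visits, in order."""
    return [(chain, table) for chain in PATHS[path] for table in CHAIN_TABLES[chain]]


if __name__ == "__main__":
    for name in PATHS:
        print(name, "->", " > ".join(f"{c}/{t}" for c, t in traversal(name)))
```

A packet from a local process, for instance, visits OUTPUT/raw first and POSTROUTING/nat last, which is why SNAT and MASQUERADE rules sit in POSTROUTING.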

Common operations

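iptables -t TABLE COMMAND CHAIN MATCHES TARGET (COMMAND adds, deletes, lists, or modifies rules)

-A (append); -D (delete); -R (replace); -I (insert); -S (print rules); -F (flush); -N (create a user-defined chain); -X (delete a user-defined chain); -P (set the default policy);

-p protocol; -s source address; -d destination address; --sport source port; --dport destination port; -i incoming interface; -o outgoing interface;

-j jump to a target; ACCEPT accepts; REJECT rejects (DROP discards silently); SNAT --to-source IP: for internal hosts reaching the outside, rewrites the source address in the POSTROUTING chain; DNAT --to-destination IP: in the PREROUTING chain, rewrites the destination of an external request to an address inside the cluster; MASQUERADE: address masquerading;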

A few small examples

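iptables -t filter -I INPUT -s 10.0.0.1 -d 10.0.0.5 -p tcp --dport 80 -j ACCEPT # allow TCP traffic from source IP 10.0.0.1 to destination IP 10.0.0.5, port 80

iptables -t filter -A INPUT -p tcp -j DROP # append a rule that drops all TCP connections

iptables -t nat -A POSTROUTING -s 192.168.1.0/24 -j SNAT --to-source 1.2.3.4 # append a POSTROUTING rule: when many internal addresses reach the outside through one shared egress, the internal addresses are not visible from outside, so SNAT at the egress rewrites the source address to the gateway address and external hosts can send their responses back

iptables -t nat -A PREROUTING -d 1.2.3.4 -p tcp --dport 80 -j DNAT --to-destination 192.168.1.x # append a PREROUTING rule: when an external client needs to reach an address inside the cluster, rewrite the destination to that internal address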
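Rules in a chain are checked top-down, and the first matching rule with a terminating target (ACCEPT, DROP, REJECT) decides; -I puts a rule at the top and -A at the bottom. A rough Python sketch of how the two filter examples above interact, assuming a simplified rule model (the `Rule` class and `evaluate` function are hypothetical and only model the fields used here):

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    target: str                  # ACCEPT / DROP / REJECT
    proto: Optional[str] = None  # -p
    src: Optional[str] = None    # -s (exact IP, for simplicity)
    dst: Optional[str] = None    # -d
    dport: Optional[int] = None  # --dport

    def matches(self, pkt: dict) -> bool:
        # A field left as None behaves like an omitted option: it matches anything.
        return all(
            want is None or pkt.get(key) == want
            for key, want in (("proto", self.proto), ("src", self.src),
                              ("dst", self.dst), ("dport", self.dport))
        )


def evaluate(chain: list, pkt: dict, policy: str = "ACCEPT") -> str:
    """First match wins; fall back to the chain policy (-P) if nothing matches."""
    for rule in chain:
        if rule.matches(pkt):
            return rule.target
    return policy


INPUT: list = []
# iptables -t filter -I INPUT -s 10.0.0.1 -d 10.0.0.5 -p tcp --dport 80 -j ACCEPT
INPUT.insert(0, Rule("ACCEPT", proto="tcp", src="10.0.0.1", dst="10.0.0.5", dport=80))
# iptables -t filter -A INPUT -p tcp -j DROP
INPUT.append(Rule("DROP", proto="tcp"))
```

Because the ACCEPT rule sits above the blanket DROP, 10.0.0.1 can still reach port 80; inserting the DROP rule with -I instead would shadow it.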

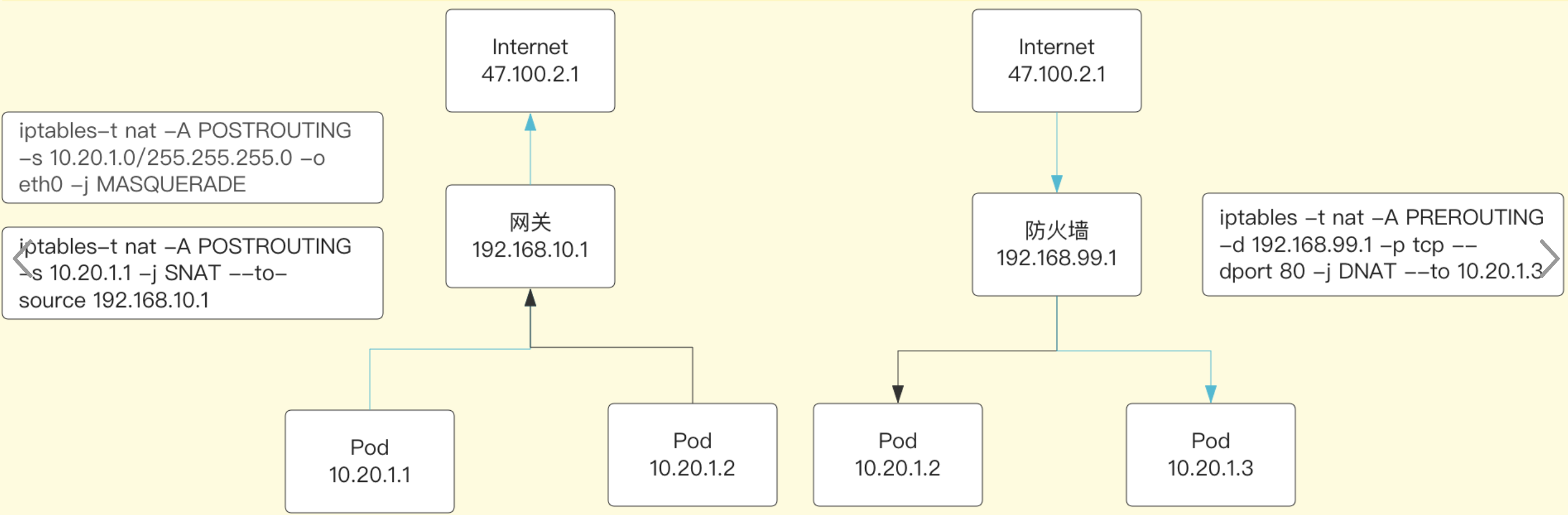

SNAT/DNAT/MASQUERADE

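SNAT is source network address translation. For example, when several PCs share an internet connection through an ADSL router, each PC has a private IP. When a PC accesses the external network, the router replaces the source address in the packet header with the router's own IP. When an external server, say a web server, receives the request, its log records the router's IP rather than the PC's private IP, because the "source address" in the header of the packet it received has already been replaced. Hence the name SNAT: address translation based on the source address.

DNAT is destination network address translation. A typical use: a web server sits on the internal network with a private IP, and a firewall in front of it has a public IP. Internet users reach the site via the public IP, so the packet a client sends carries the firewall's public IP as its destination address. The firewall rewrites the packet header, changing the destination address to the web server's private IP, and then forwards the packet to the internal web server. The packet thus passes through the firewall, and a request to the public IP has become a request to an internal address. That is DNAT: address translation based on the destination.

MASQUERADE (address masquerading) is a special case of SNAT that performs SNAT automatically. SNAT requires the egress IP to be specified up front, which is inconvenient if the link is dial-up or the address changes often; MASQUERADE instead takes the current IP address of the outgoing interface and uses it for SNAT.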
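All three rely on connection tracking: NAT is decided on the first packet of a connection, and conntrack remembers the mapping so that reply packets are translated back automatically. A toy Python sketch of that idea, assuming a simplified model (the `Conntrack` class and `masquerade_ip` helper are hypothetical; real conntrack also tracks protocol and state, and may rewrite source ports so that many hosts can share one SNAT address):

```python
from typing import Dict, Tuple

Tuple4 = Tuple[str, int, str, int]  # (src_ip, src_port, dst_ip, dst_port)


class Conntrack:
    """Toy NAT table: remembers how a first packet was rewritten so replies can be undone."""

    def __init__(self) -> None:
        self.reply_map: Dict[Tuple4, Tuple4] = {}

    def snat(self, pkt: Tuple4, to_source: str) -> Tuple4:
        # POSTROUTING: rewrite the source address (SNAT --to-source / MASQUERADE).
        src, sport, dst, dport = pkt
        out = (to_source, sport, dst, dport)
        self._remember(pkt, out)
        return out

    def dnat(self, pkt: Tuple4, to_destination: str, to_port: int) -> Tuple4:
        # PREROUTING: rewrite the destination (DNAT --to-destination ip:port).
        src, sport, dst, dport = pkt
        out = (src, sport, to_destination, to_port)
        self._remember(pkt, out)
        return out

    def _remember(self, original: Tuple4, translated: Tuple4) -> None:
        # A reply to the translated packet must leave looking like a reply to the original.
        reply_seen = (translated[2], translated[3], translated[0], translated[1])
        reply_sent = (original[2], original[3], original[0], original[1])
        self.reply_map[reply_seen] = reply_sent

    def translate_reply(self, reply: Tuple4) -> Tuple4:
        return self.reply_map.get(reply, reply)


def masquerade_ip(interface_addrs: Dict[str, str], oif: str) -> str:
    # MASQUERADE = SNAT to whatever address the outgoing interface has right now.
    return interface_addrs[oif]
```

The web server in the SNAT case only ever sees and answers the router's address; the router's conntrack entry is what maps the answer back to the right PC.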

Marking (MARK --set-xmark): packets passing through are tagged with a mark so that later rules can DROP, ACCEPT, or SNAT them based on it.
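The iptables-extensions man page defines `--set-xmark value/mask` as: zero out the bits given by mask, then XOR value into the packet mark; the mark match `--mark value/mask` succeeds when the mark ANDed with mask equals value. A small Python sketch of both operations (function names are illustrative), applied to the 0x4000 and 0x8000 marks that kube-proxy uses later in this section:

```python
MASK32 = 0xFFFFFFFF


def set_xmark(nfmark: int, value: int, mask: int = MASK32) -> int:
    """MARK --set-xmark value/mask: clear the bits in mask, then XOR value in."""
    return ((nfmark & ~mask) ^ value) & MASK32


def mark_matches(nfmark: int, value: int, mask: int = MASK32) -> bool:
    """-m mark --mark value/mask: true when (nfmark & mask) == value."""
    return (nfmark & mask) == value


MASQ_BIT = 0x4000  # set by KUBE-MARK-MASQ
DROP_BIT = 0x8000  # set by KUBE-MARK-DROP

mark = set_xmark(0, MASQ_BIT, MASQ_BIT)              # KUBE-MARK-MASQ: --set-xmark 0x4000/0x4000
needs_snat = mark_matches(mark, MASQ_BIT, MASQ_BIT)  # KUBE-POSTROUTING goes on only if this matches
dropped = mark_matches(mark, DROP_BIT, DROP_BIT)     # KUBE-FIREWALL: --mark 0x8000/0x8000 -j DROP
mark = set_xmark(mark, MASQ_BIT, 0x0)                # KUBE-POSTROUTING: --set-xmark 0x4000/0x0
```

With a zero mask nothing is cleared, so the last XOR simply flips the already-set 0x4000 bit back off; kube-proxy does this before MASQUERADE so the packet is not masqueraded again if it re-traverses the stack.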

So what exactly is the relationship between the cluster and iptables?

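A Kubernetes Service is implemented by kube-proxy on each node, which watches kube-apiserver for Service and Endpoint information; requests to a Service are load-balanced and forwarded to the corresponding Endpoints.

Istio's service discovery also takes Service and Endpoint information from kube-apiserver and converts it into the service and serviceinstance of Istio's service model, but the data plane moves from kube-proxy to the sidecar. Because the sidecar intercepts the Pod's inbound and outbound traffic, Istio can support fine-grained, application-layer-aware traffic management, monitoring, and so on.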

Kubernetes

iptables

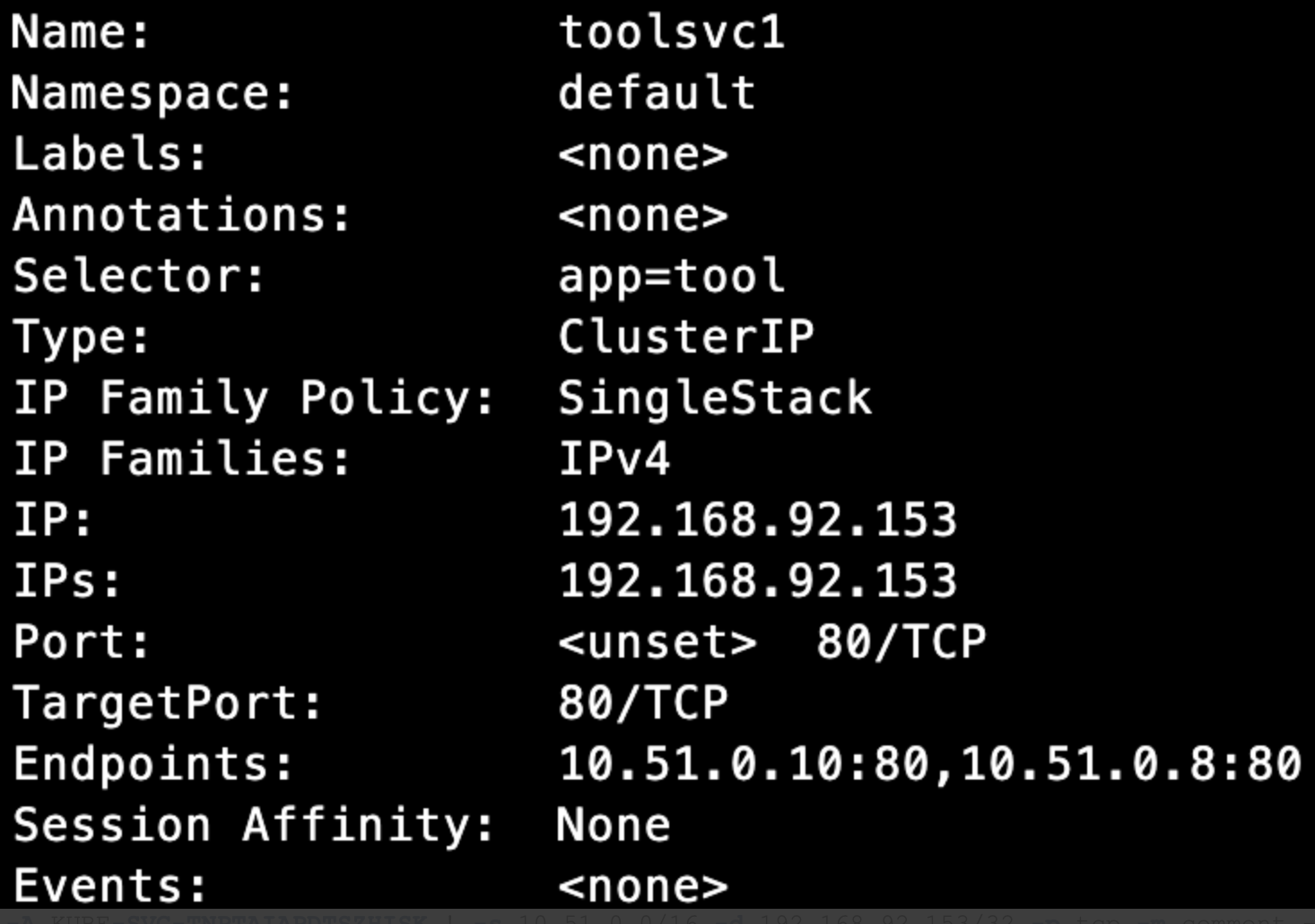

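Take the ClusterIP Service toolsvc1 as an example

Inspect the rules on the node with iptables-save

###The output is long; here we only look at what it takes to serve a request to this Service

###Two chains are added

:KUBE-SERVICES - [0:0]

:KUBE-SVC-TNPTAIAPDTSZHISK - [0:0]

###Rules that wire the chains together

### PREROUTING intercepts traffic and jumps to KUBE-SERVICES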

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

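###Marking requests

###Packets that should be discarded are sent to KUBE-MARK-DROP, which sets mark 0x8000; packets carrying this mark are dropped by KUBE-FIREWALL in the filter table

###Packets that need SNAT are sent to KUBE-MARK-MASQ, which sets mark 0x4000; packets carrying this mark are MASQUERADEd together in the KUBE-POSTROUTING chain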

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

### KUBE-SERVICES catches TCP requests to the ClusterIP on port 80 and jumps to KUBE-SVC-xxx

-A KUBE-SERVICES -d 192.168.92.153/32 -p tcp -m comment --comment "default/toolsvc1 cluster IP" -m tcp --dport 80 -j KUBE-SVC-TNPTAIAPDTSZHISK

### KUBE-SVC-TNPTAIAPDTSZHISK sends TCP requests to the ClusterIP on port 80 that do not come from the Pod network (! -s 10.51.0.0/16) to KUBE-MARK-MASQ to be marked

-A KUBE-SVC-TNPTAIAPDTSZHISK ! -s 10.51.0.0/16 -d 192.168.92.153/32 -p tcp -m comment --comment "default/toolsvc1 cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

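###The next two rules choose the Service -> Pod backend. There are currently two Pods: the first rule jumps with probability 0.5, and the second rule has no probability and catches everything that falls through, so each Pod gets about half of the new connections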

-A KUBE-SVC-TNPTAIAPDTSZHISK -m comment --comment "default/toolsvc1 -> 10.51.0.10:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-43S7UU6B7UNRH37P

-A KUBE-SVC-TNPTAIAPDTSZHISK -m comment --comment "default/toolsvc1 -> 10.51.0.8:80" -j KUBE-SEP-3PPBKWHSZ4NNVQY5
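Why 0.5 on the first rule and nothing on the second gives an even split: with n endpoints, kube-proxy attaches probability 1/(n - i) to rule i (counting from 0) and leaves the last rule unconditional, so endpoint i is picked with probability (n - i)/n × 1/(n - i) = 1/n. A Python sketch of that selection, assuming this scheme (function names are illustrative):

```python
import random
from collections import Counter
from typing import List, Optional


def rule_probabilities(n: int) -> List[Optional[float]]:
    """Probability on each of n KUBE-SEP jump rules, top to bottom.

    Rule i (0-based) gets 1/(n - i); the last rule carries no statistic
    match (None) and catches whatever falls through.
    """
    return [1.0 / (n - i) if i < n - 1 else None for i in range(n)]


def pick_endpoint(endpoints: List[str], rng: random.Random) -> str:
    """Walk the rules like iptables does: the first rule that fires wins."""
    for ep, p in zip(endpoints, rule_probabilities(len(endpoints))):
        if p is None or rng.random() < p:
            return ep
    raise ValueError("no endpoints")


if __name__ == "__main__":
    rng = random.Random(0)
    eps = ["10.51.0.10:80", "10.51.0.8:80"]
    print(rule_probabilities(2))  # [0.5, None], i.e. the 0.50000000000 in the dump
    print(Counter(pick_endpoint(eps, rng) for _ in range(10000)))
```

Each rule draws its own random number, which is why the later rules need larger probabilities than 1/n to keep the overall split even.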

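###Requests whose source is the backend Pod itself (a Pod reaching itself through its own Service) jump to KUBE-MARK-MASQ; all other TCP requests are DNATed to the Pod. The DNAT target below is garbled because of an iptables version mismatch; with a matching iptables version it reads --to-destination IP:port (for KUBE-SEP-3PPBKWHSZ4NNVQY5, 10.51.0.8:80)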

-A KUBE-SEP-3PPBKWHSZ4NNVQY5 -s 10.51.0.8/32 -m comment --comment "default/toolsvc1" -j KUBE-MARK-MASQ

-A KUBE-SEP-3PPBKWHSZ4NNVQY5 -p tcp -m comment --comment "default/toolsvc1" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-43S7UU6B7UNRH37P -s 10.51.0.10/32 -m comment --comment "default/toolsvc1" -j KUBE-MARK-MASQ

-A KUBE-SEP-43S7UU6B7UNRH37P -p tcp -m comment --comment "default/toolsvc1" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination
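Putting the toolsvc1 rules together, the Python sketch below follows one TCP packet through KUBE-SERVICES, KUBE-SVC-TNPTAIAPDTSZHISK and the KUBE-SEP chains, and returns the DNAT destination plus whether the packet was marked for masquerade. It hard-codes values from this dump (ClusterIP 192.168.92.153:80, Pod network 10.51.0.0/16, endpoints 10.51.0.10:80 and 10.51.0.8:80, which the DNAT target would show with a matching iptables version); `walk` is a hypothetical helper and `roll` stands in for the statistic module's random draw.

```python
import ipaddress
from typing import Optional, Tuple

POD_CIDR = ipaddress.ip_network("10.51.0.0/16")
CLUSTER_IP, SVC_PORT = "192.168.92.153", 80
# (endpoint IP, port, probability of its jump rule; None = last, unconditional rule)
ENDPOINTS = [("10.51.0.10", 80, 0.5), ("10.51.0.8", 80, None)]


def walk(src: str, dst: str, dport: int, roll: float) -> Optional[Tuple[str, int, bool]]:
    """Return (new_dst_ip, new_dst_port, masq_marked), or None if no Service rule matched."""
    # KUBE-SERVICES: -d 192.168.92.153/32 -p tcp --dport 80 -j KUBE-SVC-...
    if dst != CLUSTER_IP or dport != SVC_PORT:
        return None
    masq = False
    # KUBE-SVC-...: ! -s 10.51.0.0/16 ... -j KUBE-MARK-MASQ (only sets a mark, then continues)
    if ipaddress.ip_address(src) not in POD_CIDR:
        masq = True
    for ep_ip, ep_port, prob in ENDPOINTS:
        # -m statistic --mode random --probability p -j KUBE-SEP-...
        if prob is None or roll < prob:
            # KUBE-SEP-...: -s <pod IP>/32 -j KUBE-MARK-MASQ (hairpin: Pod reaching itself)
            if src == ep_ip:
                masq = True
            # KUBE-SEP-...: -j DNAT --to-destination <pod IP>:<port>
            return ep_ip, ep_port, masq
        # iptables draws a fresh number per rule; rescaling keeps this single roll uniform.
        roll = (roll - prob) / (1 - prob)
    return None
```

A client outside the Pod network ends up both DNATed and marked, so KUBE-POSTROUTING later masquerades it and the Pod's reply returns through this node.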

Now take a LoadBalancer Service as an example

filter

###The Service sets healthCheckNodePort: 30807 for health checks, and the filter table accepts TCP requests to that port

:KUBE-NODEPORTS - [0:0]

:KUBE-SEP-5KICMAHZVZC4YBZJ - [0:0]

-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

-A KUBE-NODEPORTS -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https health check node port" -m tcp --dport 30807 -j ACCEPT

-A KUBE-NODEPORTS -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http health check node port" -m tcp --dport 30807 -j ACCEPT

nat table: custom chains; PREROUTING jumps to KUBE-SERVICES

:KUBE-SERVICES - [0:0]

:KUBE-EXT-J4ENLV444DNEMLR3 - [0:0]

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

###Requests to the ClusterIP on port 443 jump to KUBE-SVC-J4ENLV444DNEMLR3; requests to the LoadBalancer IP on port 443 jump to KUBE-EXT-J4ENLV444DNEMLR3

-A KUBE-SERVICES -d 192.168.19.208/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-J4ENLV444DNEMLR3

-A KUBE-SERVICES -d 47.100.127.59/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https loadbalancer IP" -m tcp --dport 443 -j KUBE-EXT-J4ENLV444DNEMLR3

###Requests to the ClusterIP on port 80 jump to KUBE-SVC-KCMUPQBA6BMT5PWB; requests to the LoadBalancer IP on port 80 jump to KUBE-EXT-KCMUPQBA6BMT5PWB

-A KUBE-SERVICES -d 192.168.19.208/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http cluster IP" -m tcp --dport 80 -j KUBE-SVC-KCMUPQBA6BMT5PWB

-A KUBE-SERVICES -d 47.100.127.59/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http loadbalancer IP" -m tcp --dport 80 -j KUBE-EXT-KCMUPQBA6BMT5PWB

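###Requests to ClusterIP port 443 from outside the Pod network are marked; every request to port 443 is then sent to one of the Pods' KUBE-SEP-xxx chains with the configured probability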

-A KUBE-SVC-J4ENLV444DNEMLR3 ! -s 10.51.0.0/16 -d 192.168.19.208/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-J4ENLV444DNEMLR3 -m comment --comment "kube-system/nginx-ingress-lb:https -> 10.51.0.74:443" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-MES6WWRAPAKGDAQB

-A KUBE-SVC-J4ENLV444DNEMLR3 -m comment --comment "kube-system/nginx-ingress-lb:https -> 10.51.0.75:443" -j KUBE-SEP-5KICMAHZVZC4YBZJ

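###Handling for the two backend Pods: traffic from the Pod itself is marked, and everything else is DNATed to the Pod IP:port (the target is again garbled by the iptables version mismatch)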

-A KUBE-SEP-MES6WWRAPAKGDAQB -s 10.51.0.74/32 -m comment --comment "kube-system/nginx-ingress-lb:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-MES6WWRAPAKGDAQB -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0

-A KUBE-SEP-5KICMAHZVZC4YBZJ -s 10.51.0.75/32 -m comment --comment "kube-system/nginx-ingress-lb:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-5KICMAHZVZC4YBZJ -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0

The rest follows the same pattern

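Core chains: KUBE-SERVICES, KUBE-SVC-XXX, KUBE-SEP-XXX

- KUBE-SERVICES chain: the entry point for packets addressed to in-cluster Services; it matches the Service IP:port and jumps to the corresponding KUBE-SVC-XXX chain

- KUBE-SVC-XXX chain: one per Service (port); uses the statistic module's random mode to load-balance traffic across endpoints

- KUBE-SEP-XXX chain: one per endpoint; DNATs the Service IP:port to the backend Pod IP:port, so the traffic is forwarded to that Pod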
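How the rule count grows is easiest to see by generating the rules. A Python sketch that emits nat rules for one ClusterIP Service port in the same shape as the toolsvc1 rules above; `service_rules` is hypothetical, and its `chain_name` helper only imitates the look of kube-proxy's hashed names, so it is not expected to reproduce the real ones.

```python
import base64
import hashlib
from typing import List, Tuple


def chain_name(prefix: str, key: str) -> str:
    # Hypothetical naming: hash the key and keep 16 base32 characters,
    # giving names shaped like KUBE-SVC-TNPTAIAPDTSZHISK.
    digest = hashlib.sha256(key.encode()).digest()
    return f"{prefix}-{base64.b32encode(digest).decode()[:16]}"


def service_rules(name: str, cluster_ip: str, port: int, pod_cidr: str,
                  endpoints: List[Tuple[str, int]]) -> List[str]:
    svc = chain_name("KUBE-SVC", f"{name}:tcp")
    rules = [
        f"-A KUBE-SERVICES -d {cluster_ip}/32 -p tcp -m tcp --dport {port} -j {svc}",
        f"-A {svc} ! -s {pod_cidr} -d {cluster_ip}/32 -p tcp -m tcp --dport {port} -j KUBE-MARK-MASQ",
    ]
    n = len(endpoints)
    for i, (ip, ep_port) in enumerate(endpoints):
        sep = chain_name("KUBE-SEP", f"{name}:tcp:{ip}:{ep_port}")
        # Every rule but the last gets a probability of 1/(n - i).
        stat = (f" -m statistic --mode random --probability {1 / (n - i):.11f}"
                if i < n - 1 else "")
        rules.append(f"-A {svc}{stat} -j {sep}")
        rules.append(f"-A {sep} -s {ip}/32 -j KUBE-MARK-MASQ")
        rules.append(f"-A {sep} -p tcp -m tcp -j DNAT --to-destination {ip}:{ep_port}")
    return rules


if __name__ == "__main__":
    for r in service_rules("default/toolsvc1", "192.168.92.153", 80, "10.51.0.0/16",
                           [("10.51.0.10", 80), ("10.51.0.8", 80)]):
        print(r)
```

In this shape a Service port with n endpoints costs 2 + 3n rules and n + 1 chains, so the total grows linearly with Services and endpoints; the catch is that KUBE-SERVICES is scanned rule by rule for every new connection.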

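Looking at iptables mode side by side with IPVS mode, the difference in the number of chains and rules is obvious: iptables mode adds chains and rules for every Service and every endpoint, so even a cluster running almost no workloads already has quite a lot of iptables rules just for its base components. The node's filter and nat tables: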

*filter

:INPUT ACCEPT [497:48750]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [733:194554]

:KUBE-EXTERNAL-SERVICES - [0:0]

:KUBE-FIREWALL - [0:0]

:KUBE-FORWARD - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-NODEPORTS - [0:0]

:KUBE-PROXY-CANARY - [0:0]

:KUBE-SERVICES - [0:0]

-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A FORWARD -s 10.51.0.0/16 -j ACCEPT

-A FORWARD -d 10.51.0.0/16 -j ACCEPT

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

-A KUBE-FORWARD -m conntrack --ctstate INVALID -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A KUBE-NODEPORTS -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https health check node port" -m tcp --dport 30807 -j ACCEPT

-A KUBE-NODEPORTS -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http health check node port" -m tcp --dport 30807 -j ACCEPT

-A KUBE-SERVICES -d 192.168.132.118/32 -p tcp -m comment --comment "kube-system/cnfs-cache-ds-service has no endpoints" -m tcp --dport 6500 -j REJECT --reject-with icmp-port-unreachable

COMMIT

*nat

:PREROUTING ACCEPT [5:300]

:INPUT ACCEPT [5:300]

:OUTPUT ACCEPT [14:876]

:POSTROUTING ACCEPT [36:2196]

:KUBE-EXT-J4ENLV444DNEMLR3 - [0:0]

:KUBE-EXT-KCMUPQBA6BMT5PWB - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-MARK-DROP - [0:0]

:KUBE-MARK-MASQ - [0:0]

:KUBE-NODEPORTS - [0:0]

:KUBE-POSTROUTING - [0:0]

:KUBE-PROXY-CANARY - [0:0]

:KUBE-SEP-3PPBKWHSZ4NNVQY5 - [0:0]

:KUBE-SEP-43S7UU6B7UNRH37P - [0:0]

:KUBE-SEP-5IZZOFXRSL34IXKY - [0:0]

:KUBE-SEP-5KICMAHZVZC4YBZJ - [0:0]

:KUBE-SEP-65X7VKXKEK6L2MVH - [0:0]

:KUBE-SEP-BPPU64W3NOPYWNM5 - [0:0]

:KUBE-SEP-DPWZLIL5O4NMP6QK - [0:0]

:KUBE-SEP-HW3YZVOH4F72H5TO - [0:0]

:KUBE-SEP-JY27P56VLVTUUZQL - [0:0]

:KUBE-SEP-KXH7SF53VIJDUG2X - [0:0]

:KUBE-SEP-MES6WWRAPAKGDAQB - [0:0]

:KUBE-SEP-RBMGN2LZOACKQS7P - [0:0]

:KUBE-SEP-T6LJWGOAVHKCB7NO - [0:0]

:KUBE-SEP-TMAFKYTFOVHXDWSM - [0:0]

:KUBE-SEP-U3TDJ74FPMZMR74G - [0:0]

:KUBE-SEP-VK2DGRTHDYRV4MDD - [0:0]

:KUBE-SEP-VXA3PP5XCRB3EPQV - [0:0]

:KUBE-SEP-WVOWR4FK2264LKHY - [0:0]

:KUBE-SEP-ZNQ7DYIUMNU2QORP - [0:0]

:KUBE-SERVICES - [0:0]

:KUBE-SVC-66KSR7NWIH4YX2IH - [0:0]

:KUBE-SVC-DXG5JUVGCECFEJJO - [0:0]

:KUBE-SVC-ERIFXISQEP7F7OF4 - [0:0]

:KUBE-SVC-GOW3TA4S46OYR677 - [0:0]

:KUBE-SVC-J4ENLV444DNEMLR3 - [0:0]

:KUBE-SVC-JD5MR3NA4I4DYORP - [0:0]

:KUBE-SVC-KCMUPQBA6BMT5PWB - [0:0]

:KUBE-SVC-NPX46M4PTMTKRN6Y - [0:0]

:KUBE-SVC-QMWWTXBG7KFJQKLO - [0:0]

:KUBE-SVC-RCWFORW73ETJ6YXB - [0:0]

:KUBE-SVC-TCOU7JCQXEZGVUNU - [0:0]

:KUBE-SVC-TNPTAIAPDTSZHISK - [0:0]

:KUBE-SVL-J4ENLV444DNEMLR3 - [0:0]

:KUBE-SVL-KCMUPQBA6BMT5PWB - [0:0]

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 10.51.0.0/16 -d 10.51.0.0/16 -j RETURN

-A POSTROUTING -s 10.51.0.0/16 ! -d 224.0.0.0/4 -j MASQUERADE

-A POSTROUTING ! -s 10.51.0.0/16 -d 10.51.0.64/26 -j RETURN

-A POSTROUTING ! -s 10.51.0.0/16 -d 10.51.0.0/16 -j MASQUERADE

-A KUBE-EXT-J4ENLV444DNEMLR3 -s 10.51.0.0/16 -m comment --comment "pod traffic for kube-system/nginx-ingress-lb:https external destinations" -j KUBE-SVC-J4ENLV444DNEMLR3

-A KUBE-EXT-J4ENLV444DNEMLR3 -m comment --comment "masquerade LOCAL traffic for kube-system/nginx-ingress-lb:https external destinations" -m addrtype --src-type LOCAL -j KUBE-MARK-MASQ

-A KUBE-EXT-J4ENLV444DNEMLR3 -m comment --comment "route LOCAL traffic for kube-system/nginx-ingress-lb:https external destinations" -m addrtype --src-type LOCAL -j KUBE-SVC-J4ENLV444DNEMLR3

-A KUBE-EXT-J4ENLV444DNEMLR3 -j KUBE-SVL-J4ENLV444DNEMLR3

-A KUBE-EXT-KCMUPQBA6BMT5PWB -s 10.51.0.0/16 -m comment --comment "pod traffic for kube-system/nginx-ingress-lb:http external destinations" -j KUBE-SVC-KCMUPQBA6BMT5PWB

-A KUBE-EXT-KCMUPQBA6BMT5PWB -m comment --comment "masquerade LOCAL traffic for kube-system/nginx-ingress-lb:http external destinations" -m addrtype --src-type LOCAL -j KUBE-MARK-MASQ

-A KUBE-EXT-KCMUPQBA6BMT5PWB -m comment --comment "route LOCAL traffic for kube-system/nginx-ingress-lb:http external destinations" -m addrtype --src-type LOCAL -j KUBE-SVC-KCMUPQBA6BMT5PWB

-A KUBE-EXT-KCMUPQBA6BMT5PWB -j KUBE-SVL-KCMUPQBA6BMT5PWB

-A KUBE-MARK-DROP -j MARK --set-xmark 0x8000/0x8000

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-NODEPORTS -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https" -m tcp --dport 30330 -j KUBE-EXT-J4ENLV444DNEMLR3

-A KUBE-NODEPORTS -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http" -m tcp --dport 32659 -j KUBE-EXT-KCMUPQBA6BMT5PWB

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE

-A KUBE-SEP-3PPBKWHSZ4NNVQY5 -s 10.51.0.8/32 -m comment --comment "default/toolsvc1" -j KUBE-MARK-MASQ

-A KUBE-SEP-3PPBKWHSZ4NNVQY5 -p tcp -m comment --comment "default/toolsvc1" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-43S7UU6B7UNRH37P -s 10.51.0.10/32 -m comment --comment "default/toolsvc1" -j KUBE-MARK-MASQ

-A KUBE-SEP-43S7UU6B7UNRH37P -p tcp -m comment --comment "default/toolsvc1" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-5IZZOFXRSL34IXKY -s 10.51.0.74/32 -m comment --comment "kube-system/ingress-nginx-controller-admission:https-webhook" -j KUBE-MARK-MASQ

-A KUBE-SEP-5IZZOFXRSL34IXKY -p tcp -m comment --comment "kube-system/ingress-nginx-controller-admission:https-webhook" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-5KICMAHZVZC4YBZJ -s 10.51.0.75/32 -m comment --comment "kube-system/nginx-ingress-lb:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-5KICMAHZVZC4YBZJ -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0

-A KUBE-SEP-65X7VKXKEK6L2MVH -s 10.51.0.7/32 -m comment --comment "kube-system/storage-monitor-service" -j KUBE-MARK-MASQ

-A KUBE-SEP-65X7VKXKEK6L2MVH -p tcp -m comment --comment "kube-system/storage-monitor-service" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination --random --persistent

-A KUBE-SEP-BPPU64W3NOPYWNM5 -s 10.51.0.67/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-BPPU64W3NOPYWNM5 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-DPWZLIL5O4NMP6QK -s 10.51.0.2/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-DPWZLIL5O4NMP6QK -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-HW3YZVOH4F72H5TO -s 10.51.0.67/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-HW3YZVOH4F72H5TO -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-JY27P56VLVTUUZQL -s 10.51.0.75/32 -m comment --comment "kube-system/ingress-nginx-controller-admission:https-webhook" -j KUBE-MARK-MASQ

-A KUBE-SEP-JY27P56VLVTUUZQL -p tcp -m comment --comment "kube-system/ingress-nginx-controller-admission:https-webhook" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-KXH7SF53VIJDUG2X -s 10.51.0.67/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-KXH7SF53VIJDUG2X -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0

-A KUBE-SEP-MES6WWRAPAKGDAQB -s 10.51.0.74/32 -m comment --comment "kube-system/nginx-ingress-lb:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-MES6WWRAPAKGDAQB -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0

-A KUBE-SEP-RBMGN2LZOACKQS7P -s 10.51.0.2/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-RBMGN2LZOACKQS7P -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-T6LJWGOAVHKCB7NO -s 10.51.0.66/32 -m comment --comment "kube-system/storage-crd-validate-service" -j KUBE-MARK-MASQ

-A KUBE-SEP-T6LJWGOAVHKCB7NO -p tcp -m comment --comment "kube-system/storage-crd-validate-service" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0

-A KUBE-SEP-TMAFKYTFOVHXDWSM -s 10.51.0.2/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-TMAFKYTFOVHXDWSM -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0

-A KUBE-SEP-U3TDJ74FPMZMR74G -s 10.51.0.73/32 -m comment --comment "kube-system/metrics-server" -j KUBE-MARK-MASQ

-A KUBE-SEP-U3TDJ74FPMZMR74G -p tcp -m comment --comment "kube-system/metrics-server" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0

-A KUBE-SEP-VK2DGRTHDYRV4MDD -s 10.51.0.74/32 -m comment --comment "kube-system/nginx-ingress-lb:http" -j KUBE-MARK-MASQ

-A KUBE-SEP-VK2DGRTHDYRV4MDD -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-VXA3PP5XCRB3EPQV -s 10.51.0.75/32 -m comment --comment "kube-system/nginx-ingress-lb:http" -j KUBE-MARK-MASQ

-A KUBE-SEP-VXA3PP5XCRB3EPQV -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination

-A KUBE-SEP-WVOWR4FK2264LKHY -s 172.28.112.16/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-WVOWR4FK2264LKHY -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination --random --persistent --to-destination --random --persistent --to-destination 0.0.0.0 --persistent

-A KUBE-SEP-ZNQ7DYIUMNU2QORP -s 10.51.0.73/32 -m comment --comment "kube-system/heapster" -j KUBE-MARK-MASQ

-A KUBE-SEP-ZNQ7DYIUMNU2QORP -p tcp -m comment --comment "kube-system/heapster" -m tcp -j DNAT --to-destination :0 --persistent --to-destination :0 --persistent --to-destination 0.0.0.0:0 --random --persistent

-A KUBE-SERVICES -d 192.168.145.68/32 -p tcp -m comment --comment "kube-system/metrics-server cluster IP" -m tcp --dport 443 -j KUBE-SVC-QMWWTXBG7KFJQKLO

-A KUBE-SERVICES -d 192.168.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -d 192.168.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 192.168.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -d 192.168.19.208/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-J4ENLV444DNEMLR3

-A KUBE-SERVICES -d 47.100.127.59/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https loadbalancer IP" -m tcp --dport 443 -j KUBE-EXT-J4ENLV444DNEMLR3

-A KUBE-SERVICES -d 192.168.227.109/32 -p tcp -m comment --comment "kube-system/ingress-nginx-controller-admission:https-webhook cluster IP" -m tcp --dport 443 -j KUBE-SVC-66KSR7NWIH4YX2IH

-A KUBE-SERVICES -d 192.168.119.90/32 -p tcp -m comment --comment "kube-system/heapster cluster IP" -m tcp --dport 80 -j KUBE-SVC-RCWFORW73ETJ6YXB

-A KUBE-SERVICES -d 192.168.92.153/32 -p tcp -m comment --comment "default/toolsvc1 cluster IP" -m tcp --dport 80 -j KUBE-SVC-TNPTAIAPDTSZHISK

-A KUBE-SERVICES -d 192.168.135.228/32 -p tcp -m comment --comment "kube-system/storage-crd-validate-service cluster IP" -m tcp --dport 443 -j KUBE-SVC-GOW3TA4S46OYR677

-A KUBE-SERVICES -d 192.168.19.208/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http cluster IP" -m tcp --dport 80 -j KUBE-SVC-KCMUPQBA6BMT5PWB

-A KUBE-SERVICES -d 47.100.127.59/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http loadbalancer IP" -m tcp --dport 80 -j KUBE-EXT-KCMUPQBA6BMT5PWB

-A KUBE-SERVICES -d 192.168.173.131/32 -p tcp -m comment --comment "kube-system/storage-monitor-service cluster IP" -m tcp --dport 11280 -j KUBE-SVC-DXG5JUVGCECFEJJO

-A KUBE-SERVICES -d 192.168.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-66KSR7NWIH4YX2IH ! -s 10.51.0.0/16 -d 192.168.227.109/32 -p tcp -m comment --comment "kube-system/ingress-nginx-controller-admission:https-webhook cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-66KSR7NWIH4YX2IH -m comment --comment "kube-system/ingress-nginx-controller-admission:https-webhook -> 10.51.0.74:8443" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-5IZZOFXRSL34IXKY

-A KUBE-SVC-66KSR7NWIH4YX2IH -m comment --comment "kube-system/ingress-nginx-controller-admission:https-webhook -> 10.51.0.75:8443" -j KUBE-SEP-JY27P56VLVTUUZQL

-A KUBE-SVC-DXG5JUVGCECFEJJO ! -s 10.51.0.0/16 -d 192.168.173.131/32 -p tcp -m comment --comment "kube-system/storage-monitor-service cluster IP" -m tcp --dport 11280 -j KUBE-MARK-MASQ

-A KUBE-SVC-DXG5JUVGCECFEJJO -m comment --comment "kube-system/storage-monitor-service -> 10.51.0.7:11280" -j KUBE-SEP-65X7VKXKEK6L2MVH

-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 10.51.0.0/16 -d 192.168.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.51.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-DPWZLIL5O4NMP6QK

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.51.0.67:53" -j KUBE-SEP-BPPU64W3NOPYWNM5

-A KUBE-SVC-GOW3TA4S46OYR677 ! -s 10.51.0.0/16 -d 192.168.135.228/32 -p tcp -m comment --comment "kube-system/storage-crd-validate-service cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-GOW3TA4S46OYR677 -m comment --comment "kube-system/storage-crd-validate-service -> 10.51.0.66:443" -j KUBE-SEP-T6LJWGOAVHKCB7NO

-A KUBE-SVC-J4ENLV444DNEMLR3 ! -s 10.51.0.0/16 -d 192.168.19.208/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-J4ENLV444DNEMLR3 -m comment --comment "kube-system/nginx-ingress-lb:https -> 10.51.0.74:443" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-MES6WWRAPAKGDAQB

-A KUBE-SVC-J4ENLV444DNEMLR3 -m comment --comment "kube-system/nginx-ingress-lb:https -> 10.51.0.75:443" -j KUBE-SEP-5KICMAHZVZC4YBZJ

-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.51.0.0/16 -d 192.168.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.51.0.2:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-TMAFKYTFOVHXDWSM

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.51.0.67:9153" -j KUBE-SEP-KXH7SF53VIJDUG2X

-A KUBE-SVC-KCMUPQBA6BMT5PWB ! -s 10.51.0.0/16 -d 192.168.19.208/32 -p tcp -m comment --comment "kube-system/nginx-ingress-lb:http cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

-A KUBE-SVC-KCMUPQBA6BMT5PWB -m comment --comment "kube-system/nginx-ingress-lb:http -> 10.51.0.74:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-VK2DGRTHDYRV4MDD

-A KUBE-SVC-KCMUPQBA6BMT5PWB -m comment --comment "kube-system/nginx-ingress-lb:http -> 10.51.0.75:80" -j KUBE-SEP-VXA3PP5XCRB3EPQV

-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.51.0.0/16 -d 192.168.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 172.28.112.16:6443" -j KUBE-SEP-WVOWR4FK2264LKHY

-A KUBE-SVC-QMWWTXBG7KFJQKLO ! -s 10.51.0.0/16 -d 192.168.145.68/32 -p tcp -m comment --comment "kube-system/metrics-server cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-QMWWTXBG7KFJQKLO -m comment --comment "kube-system/metrics-server -> 10.51.0.73:443" -j KUBE-SEP-U3TDJ74FPMZMR74G

-A KUBE-SVC-RCWFORW73ETJ6YXB ! -s 10.51.0.0/16 -d 192.168.119.90/32 -p tcp -m comment --comment "kube-system/heapster cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

-A KUBE-SVC-RCWFORW73ETJ6YXB -m comment --comment "kube-system/heapster -> 10.51.0.73:8082" -j KUBE-SEP-ZNQ7DYIUMNU2QORP

-A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 10.51.0.0/16 -d 192.168.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.51.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-RBMGN2LZOACKQS7P

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.51.0.67:53" -j KUBE-SEP-HW3YZVOH4F72H5TO

-A KUBE-SVC-TNPTAIAPDTSZHISK ! -s 10.51.0.0/16 -d 192.168.92.153/32 -p tcp -m comment --comment "default/toolsvc1 cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ

-A KUBE-SVC-TNPTAIAPDTSZHISK -m comment --comment "default/toolsvc1 -> 10.51.0.10:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-43S7UU6B7UNRH37P

-A KUBE-SVC-TNPTAIAPDTSZHISK -m comment --comment "default/toolsvc1 -> 10.51.0.8:80" -j KUBE-SEP-3PPBKWHSZ4NNVQY5

-A KUBE-SVL-J4ENLV444DNEMLR3 -m comment --comment "kube-system/nginx-ingress-lb:https -> 10.51.0.74:443" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-MES6WWRAPAKGDAQB

-A KUBE-SVL-J4ENLV444DNEMLR3 -m comment --comment "kube-system/nginx-ingress-lb:https -> 10.51.0.75:443" -j KUBE-SEP-5KICMAHZVZC4YBZJ

-A KUBE-SVL-KCMUPQBA6BMT5PWB -m comment --comment "kube-system/nginx-ingress-lb:http -> 10.51.0.74:80" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-VK2DGRTHDYRV4MDD

-A KUBE-SVL-KCMUPQBA6BMT5PWB -m comment --comment "kube-system/nginx-ingress-lb:http -> 10.51.0.75:80" -j KUBE-SEP-VXA3PP5XCRB3EPQV

COMMIT
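To put numbers on that, iptables-save output can be tallied per table and per chain prefix. A small Python sketch (the `summarize` and `prefix` helpers are hypothetical; the prefix regex assumes the 16-character hash suffix seen in the chain names above):

```python
import re
from collections import Counter, defaultdict
from typing import Dict


def prefix(chain: str) -> str:
    # KUBE-SEP-43S7UU6B7UNRH37P -> KUBE-SEP; other chain names are kept as they are.
    m = re.match(r"(KUBE-[A-Z]+)-[A-Z0-9]{16}$", chain)
    return m.group(1) if m else chain


def summarize(dump: str) -> Dict[str, Counter]:
    """Count declared chains and appended rules per table, grouped by chain prefix."""
    stats: Dict[str, Counter] = defaultdict(Counter)
    table = None
    for line in dump.splitlines():
        line = line.strip()
        if line.startswith("*"):                # "*nat", "*filter", ...
            table = line[1:]
        elif line.startswith(":") and table:    # ":KUBE-SEP-XXX - [0:0]"
            stats[table]["chains:" + prefix(line[1:].split()[0])] += 1
        elif line.startswith("-A ") and table:  # "-A KUBE-SVC-XXX ... -j ..."
            stats[table]["rules:" + prefix(line.split()[1])] += 1
    return stats
```

On a node you could feed it the live rules, for example `summarize(subprocess.run(["iptables-save"], capture_output=True, text=True).stdout)`, which needs root.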

ipvs

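In this mode every node has a virtual (dummy) interface named kube-ipvs0. When a Service is created, its Service IP address is bound to this interface, and an IPVS virtual server is created for each Service IP address.

IPVS is only for load balancing and cannot handle kube-proxy's other tasks, such as packet filtering, hairpin-masquerade tricks, and SNAT; in those cases the IPVS proxier still relies on iptables.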

4: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default

link/ether b2:5a:5f:70:36:dd brd ff:ff:ff:ff:ff:ff

inet 192.168.0.10/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

......

inet 192.168.69.4/32 scope global kube-ipvs0

valid_lft forever preferred_lft forever

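Only one real server appears here because only one Pod is ready; the other is not Ready and therefore receives no traffic. The ClusterIP serves as the virtual IP, and requests reach the Pods through the rr (round-robin) scheduler.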

[root@iZuf634qce0653xtqgbxxmZ ~]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

......

TCP 192.168.69.4:80 rr

-> 10.16.0.16:80 Masq 1 0 0

......

UDP 192.168.0.10:53 rr

-> 10.16.0.24:53 Masq 1 0 209
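In the ipvsadm output, Masq in the Forward column means IPVS NAT mode, and rr cycles through the real servers in turn. A toy Python model of one virtual server, assuming (as described above) that only ready endpoints become real servers; the `VirtualServer` class is hypothetical and ignores weights and connection counts:

```python
from itertools import cycle
from typing import Iterator, List, Tuple

Endpoint = Tuple[str, int, bool]  # (pod IP, port, ready)


class VirtualServer:
    """Toy IPVS virtual server: VIP:port plus round-robin over ready real servers."""

    def __init__(self, vip: str, port: int, endpoints: List[Endpoint]) -> None:
        self.vip, self.port = vip, port
        # Only ready endpoints are added as real servers.
        self.real_servers = [(ip, p) for ip, p, ready in endpoints if ready]
        self._rr: Iterator[Tuple[str, int]] = cycle(self.real_servers)

    def schedule(self) -> Tuple[str, int]:
        """Pick the next real server in round-robin order."""
        if not self.real_servers:
            raise RuntimeError(f"no real servers for {self.vip}:{self.port}")
        return next(self._rr)
```

With one ready Pod behind 192.168.69.4:80 every connection goes to 10.16.0.16:80, matching the single real server listed above.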

The node's rules as shown by iptables-save

......

*filter

:INPUT ACCEPT [2757:358050]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [2777:546727]

:KUBE-FIREWALL - [0:0]

:KUBE-FORWARD - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-NODE-PORT - [0:0]

-A INPUT -d 169.254.20.10/32 -p udp -m udp --dport 53 -j ACCEPT

-A INPUT -d 169.254.20.10/32 -p tcp -m tcp --dport 53 -j ACCEPT

-A INPUT -m comment --comment "kubernetes health check rules" -j KUBE-NODE-PORT

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -s 10.16.0.0/16 -j ACCEPT

-A FORWARD -d 10.16.0.0/16 -j ACCEPT

-A OUTPUT -s 169.254.20.10/32 -p udp -m udp --sport 53 -j ACCEPT

-A OUTPUT -s 169.254.20.10/32 -p tcp -m tcp --sport 53 -j ACCEPT

-A OUTPUT -j KUBE-FIREWALL

-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A KUBE-NODE-PORT -m comment --comment "Kubernetes health check node port" -m set --match-set KUBE-HEALTH-CHECK-NODE-PORT dst -j ACCEPT

COMMIT

*nat

:PREROUTING ACCEPT [488:29306]

:INPUT ACCEPT [5:300]

:OUTPUT ACCEPT [186:11256]

:POSTROUTING ACCEPT [539:33114]

:KUBE-FIREWALL - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

:KUBE-LOAD-BALANCER - [0:0]

:KUBE-MARK-DROP - [0:0]

:KUBE-MARK-MASQ - [0:0]

:KUBE-NODE-PORT - [0:0]

:KUBE-POSTROUTING - [0:0]

:KUBE-SERVICES - [0:0]

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -s 10.16.0.0/16 -d 10.16.0.0/16 -j RETURN

-A POSTROUTING -s 10.16.0.0/16 ! -d 224.0.0.0/4 -j MASQUERADE

-A POSTROUTING ! -s 10.16.0.0/16 -d 10.16.0.0/26 -j RETURN

-A POSTROUTING ! -s 10.16.0.0/16 -d 10.16.0.0/16 -j MASQUERADE

-A KUBE-FIREWALL -j KUBE-MARK-DROP

-A KUBE-LOAD-BALANCER -m comment --comment "Kubernetes service load balancer ip + port with externalTrafficPolicy=local" -m set --match-set KUBE-LOAD-BALANCER-LOCAL dst,dst -j RETURN

-A KUBE-LOAD-BALANCER -j KUBE-MARK-MASQ

-A KUBE-MARK-DROP -j MARK --set-xmark 0x8000/0x8000

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-NODE-PORT -p tcp -m comment --comment "Kubernetes nodeport TCP port with externalTrafficPolicy=local" -m set --match-set KUBE-NODE-PORT-LOCAL-TCP dst -j RETURN

-A KUBE-NODE-PORT -p tcp -m comment --comment "Kubernetes nodeport TCP port for masquerade purpose" -m set --match-set KUBE-NODE-PORT-TCP dst -j KUBE-MARK-MASQ

-A KUBE-POSTROUTING -m comment --comment "Kubernetes endpoints dst ip:port, source ip for solving hairpin purpose" -m set --match-set KUBE-LOOP-BACK dst,dst,src -j MASQUERADE

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE

-A KUBE-SERVICES -m comment --comment "Kubernetes service lb portal" -m set --match-set KUBE-LOAD-BALANCER dst,dst -j KUBE-LOAD-BALANCER

-A KUBE-SERVICES ! -s 10.16.0.0/16 -m comment --comment "Kubernetes service cluster ip + port for masquerade purpose" -m set --match-set KUBE-CLUSTER-IP dst,dst -j KUBE-MARK-MASQ

-A KUBE-SERVICES -m addrtype --dst-type LOCAL -j KUBE-NODE-PORT

-A KUBE-SERVICES -m set --match-set KUBE-CLUSTER-IP dst,dst -j ACCEPT

-A KUBE-SERVICES -m set --match-set KUBE-LOAD-BALANCER dst,dst -j ACCEPT

COMMIT
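The mark bits in these rules can be checked with a little arithmetic. According to the iptables MARK documentation, --set-xmark value/mask first zeroes the bits in mask and then XORs in value. The match -m mark --mark value/mask succeeds when mark AND mask equals value. This is why --set-xmark 0x4000/0x0 clears the masquerade bit again. The Python sketch below only models that bit arithmetic and a simplified KUBE-POSTROUTING, with the KUBE-LOOP-BACK hairpin rule left out. The function names are hypothetical.

```python
# Bit arithmetic of the MARK target / mark match as used by kube-proxy (illustrative sketch).
MASQ_BIT = 0x4000   # KUBE-MARK-MASQ
DROP_BIT = 0x8000   # KUBE-MARK-DROP


def set_xmark(mark: int, value: int, mask: int) -> int:
    """-j MARK --set-xmark value/mask: zero the bits in mask, then XOR in value."""
    return (mark & ~mask & 0xFFFFFFFF) ^ value


def mark_matches(mark: int, value: int, mask: int) -> bool:
    """-m mark --mark value/mask: match when (mark AND mask) == value."""
    return (mark & mask) == value


def kube_postrouting(mark: int) -> tuple:
    """Simplified KUBE-POSTROUTING (hairpin rule omitted); returns (verdict, new mark)."""
    if not mark_matches(mark, MASQ_BIT, MASQ_BIT):
        return "RETURN", mark                    # -m mark ! --mark 0x4000/0x4000 -j RETURN
    mark = set_xmark(mark, MASQ_BIT, 0x0)        # --set-xmark 0x4000/0x0: pure XOR, clears the bit
    return "MASQUERADE", mark


if __name__ == "__main__":
    m = set_xmark(0, MASQ_BIT, MASQ_BIT)         # -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
    print(hex(m))                                # 0x4000
    print(kube_postrouting(m))                   # ('MASQUERADE', 0)
    print(kube_postrouting(0))                   # ('RETURN', 0)
```

A packet that passed through KUBE-MARK-MASQ is masqueraded and leaves with the bit cleared. An unmarked packet simply returns.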

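For the same Service, IPVS mode leaves very few iptables rules. They follow a completely different pattern, and they contain no information tied to any particular Service. This is where IPVS shows its advantage. The whole path combines three pieces: iptables for interception at the entry points, ipset for dynamically managed match data, and IPVS virtual IPs for load-balanced access.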

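The many names after --match-set are sets stored in ipset, and that is how the rules are linked to concrete Services. In effect, the iptables rules stay static while the dynamic data lives in ipset.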
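To make the split between static rules and dynamic data concrete, here is a minimal Python sketch of the cluster-IP part of KUBE-SERVICES. It makes several assumptions:

- An in-memory dict stands in for ipset.
- It uses the 10.16.0.0/16 cluster CIDR from the rules above and the 192.168.69.4,tcp:80 member from the ipset list.
- It omits the load-balancer and NodePort rules.

The dst,dst flags mean that both the IP and the port are taken from the packet's destination.

```python
# Sketch: static iptables rules reading dynamic set data (kube-proxy IPVS mode, simplified).
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

CLUSTER_CIDR = ip_network("10.16.0.0/16")   # from "! -s 10.16.0.0/16" in the rules
MASQ_BIT = 0x4000                            # what KUBE-MARK-MASQ sets


@dataclass
class Packet:
    src: str
    dst: str
    proto: str
    dport: int
    mark: int = 0


# In-memory stand-in for the hash:ip,port set KUBE-CLUSTER-IP (member from the ipset list).
ipsets = {"KUBE-CLUSTER-IP": {("192.168.69.4", "tcp", 80)}}


def match_set(name: str, pkt: Packet) -> bool:
    """--match-set NAME dst,dst: IP and port are both taken from the destination."""
    return (pkt.dst, pkt.proto, pkt.dport) in ipsets[name]


def kube_services(pkt: Packet) -> str:
    """Cluster-IP rules of KUBE-SERVICES only; LB and NodePort rules are omitted."""
    # ! -s 10.16.0.0/16 ... --match-set KUBE-CLUSTER-IP dst,dst -j KUBE-MARK-MASQ
    if ip_address(pkt.src) not in CLUSTER_CIDR and match_set("KUBE-CLUSTER-IP", pkt):
        pkt.mark |= MASQ_BIT                 # same effect as --set-xmark 0x4000/0x4000
    # --match-set KUBE-CLUSTER-IP dst,dst -j ACCEPT: leave the nat chain; IPVS does the real DNAT
    if match_set("KUBE-CLUSTER-IP", pkt):
        return "ACCEPT"
    return "RETURN"                          # end of chain: back to PREROUTING/OUTPUT


if __name__ == "__main__":
    from_node = Packet("172.16.0.10", "192.168.69.4", "tcp", 80)   # made-up node IP
    print(kube_services(from_node), hex(from_node.mark))           # ACCEPT 0x4000
    from_pod = Packet("10.16.0.16", "192.168.69.4", "tcp", 80)
    print(kube_services(from_pod), hex(from_pod.mark))             # ACCEPT 0x0
    # A new Service only adds a set member; kube_services() itself does not change.
    ipsets["KUBE-CLUSTER-IP"].add(("192.168.69.5", "tcp", 8080))
```

A packet from a node address (172.16.0.10 is a made-up example) gets the 0x4000 mark, and a packet from a pod does not. Adding a Service only adds a set member, just as kube-proxy only updates ipset entries.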

[root@iZuf634qce0653xtqgbxxmZ ~]# ipset list

Name: KUBE-NODE-PORT-LOCAL-UDP

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 0

Number of entries: 0

Members:

Name: KUBE-LOOP-BACK

Type: hash:ip,port,ip

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 2912

References: 1

Number of entries: 34

Members:

10.16.0.16,tcp:80,10.16.0.16

......

Name: KUBE-NODE-PORT-TCP

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 1

Number of entries: 19

Members:

30008

30143

......

Name: KUBE-NODE-PORT-LOCAL-TCP

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 1

Number of entries: 12

Members:

30008

30279

......

Name: KUBE-NODE-PORT-UDP

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 0

Number of entries: 0

Members:

Name: KUBE-NODE-PORT-SCTP-HASH

Type: hash:ip,port

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 104

References: 0

Number of entries: 0

Members:

Name: KUBE-HEALTH-CHECK-NODE-PORT

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 1

Number of entries: 5

Members:

30848

31360

31493

32147

32743

Name: KUBE-CLUSTER-IP

Type: hash:ip,port

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 3688

References: 2

Number of entries: 58

Members:

192.168.69.4,tcp:80

...

Name: KUBE-EXTERNAL-IP

Type: hash:ip,port

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 104

References: 0

Number of entries: 0

Members:

Name: KUBE-LOAD-BALANCER-FW

Type: hash:ip,port

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 104

References: 0

Number of entries: 0

Members:

Name: KUBE-LOAD-BALANCER-SOURCE-IP

Type: hash:ip,port,ip

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 112

References: 0

Number of entries: 0

Members:

Name: KUBE-LOAD-BALANCER-SOURCE-CIDR

Type: hash:ip,port,net

Revision: 7

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 368

References: 0

Number of entries: 0

Members:

Name: KUBE-NODE-PORT-LOCAL-SCTP-HASH

Type: hash:ip,port

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 104

References: 0

Number of entries: 0

Members:

Name: KUBE-EXTERNAL-IP-LOCAL

Type: hash:ip,port

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 104

References: 0

Number of entries: 0

Members:

Name: KUBE-LOAD-BALANCER

Type: hash:ip,port

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 1192

References: 2

Number of entries: 17

Members:

......

47.117.65.242,tcp:80

Name: KUBE-LOAD-BALANCER-LOCAL

Type: hash:ip,port

Revision: 5

Header: family inet hashsize 1024 maxelem 65536

Size in memory: 872

References: 1

Number of entries: 12

Members:

47.117.65.xxx,tcp:80

......

Name: KUBE-6-LOAD-BALANCER

Type: hash:ip,port

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 120

References: 0

Number of entries: 0

Members:

Name: KUBE-6-LOAD-BALANCER-SOURCE-IP

Type: hash:ip,port,ip

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 136

References: 0

Number of entries: 0

Members:

Name: KUBE-6-NODE-PORT-TCP

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 0

Number of entries: 0

Members:

Name: KUBE-6-NODE-PORT-LOCAL-SCTP-HAS

Type: hash:ip,port

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 120

References: 0

Number of entries: 0

Members:

Name: KUBE-6-LOOP-BACK

Type: hash:ip,port,ip

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 136

References: 0

Number of entries: 0

Members:

Name: KUBE-6-CLUSTER-IP

Type: hash:ip,port

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 120

References: 0

Number of entries: 0

Members:

Name: KUBE-6-EXTERNAL-IP

Type: hash:ip,port

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 120

References: 0

Number of entries: 0

Members:

Name: KUBE-6-LOAD-BALANCER-FW

Type: hash:ip,port

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 120

References: 0

Number of entries: 0

Members:

Name: KUBE-6-LOAD-BALANCER-LOCAL

Type: hash:ip,port

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 120

References: 0

Number of entries: 0

Members:

Name: KUBE-6-NODE-PORT-LOCAL-TCP

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 0

Number of entries: 0

Members:

Name: KUBE-6-NODE-PORT-LOCAL-UDP

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 0

Number of entries: 0

Members:

Name: KUBE-6-HEALTH-CHECK-NODE-PORT

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 1

Number of entries: 0

Members:

Name: KUBE-6-EXTERNAL-IP-LOCAL

Type: hash:ip,port

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 120

References: 0

Number of entries: 0

Members:

Name: KUBE-6-LOAD-BALANCER-SOURCE-CID

Type: hash:ip,port,net

Revision: 7

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 1160

References: 0

Number of entries: 0

Members:

Name: KUBE-6-NODE-PORT-UDP

Type: bitmap:port

Revision: 3

Header: range 0-65535

Size in memory: 8284

References: 0

Number of entries: 0

Members:

Name: KUBE-6-NODE-PORT-SCTP-HASH

Type: hash:ip,port

Revision: 5

Header: family inet6 hashsize 1024 maxelem 65536

Size in memory: 120

References: 0

Number of entries: 0

Members:
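The dump shows two storage layouts:

- bitmap:port: a set over range 0-65535 needs one bit per port, so 65536 / 8 = 8192 bytes. This fits the "Size in memory: 8284" reported for every such set. Treating the remaining bytes as set bookkeeping is my assumption.
- hash:ip,port,ip: KUBE-LOOP-BACK uses this type and is matched as dst,dst,src in KUBE-POSTROUTING. A member such as 10.16.0.16,tcp:80,10.16.0.16 therefore catches a pod whose Service request was balanced back to itself (hairpin), and that traffic is masqueraded.

Here is a Python sketch of both lookups. It is illustrative only and is not ipset's implementation.

```python
# Illustrative models of two ipset types from the dump (not ipset's real code).


class BitmapPortSet:
    """Like bitmap:port with range 0-65535: one bit per port, no protocol stored."""

    def __init__(self, first: int = 0, last: int = 65535) -> None:
        self.first, self.last = first, last
        self.bits = bytearray((last - first + 1 + 7) // 8)   # 65536 ports -> 8192 bytes

    def add(self, port: int) -> None:
        i = port - self.first
        self.bits[i // 8] |= 1 << (i % 8)

    def test(self, port: int) -> bool:
        if not self.first <= port <= self.last:
            return False                      # outside the set's range never matches
        i = port - self.first
        return bool(self.bits[i // 8] & (1 << (i % 8)))


class HashIpPortIp(set):
    """Like hash:ip,port,ip; members are (ip, proto, port, ip2) tuples."""

    def match_dst_dst_src(self, src: str, dst: str, proto: str, dport: int) -> bool:
        # --match-set NAME dst,dst,src: destination IP, destination port, source IP
        return (dst, proto, dport, src) in self


node_port_tcp = BitmapPortSet()               # KUBE-NODE-PORT-TCP (TCP ports only)
for p in (30008, 30143):
    node_port_tcp.add(p)

loop_back = HashIpPortIp({("10.16.0.16", "tcp", 80, "10.16.0.16")})   # KUBE-LOOP-BACK member
```

Because bitmap:port stores no protocol, TCP and UDP NodePorts need separate sets, which matches the separate -TCP and -UDP sets in the dump.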

Istio

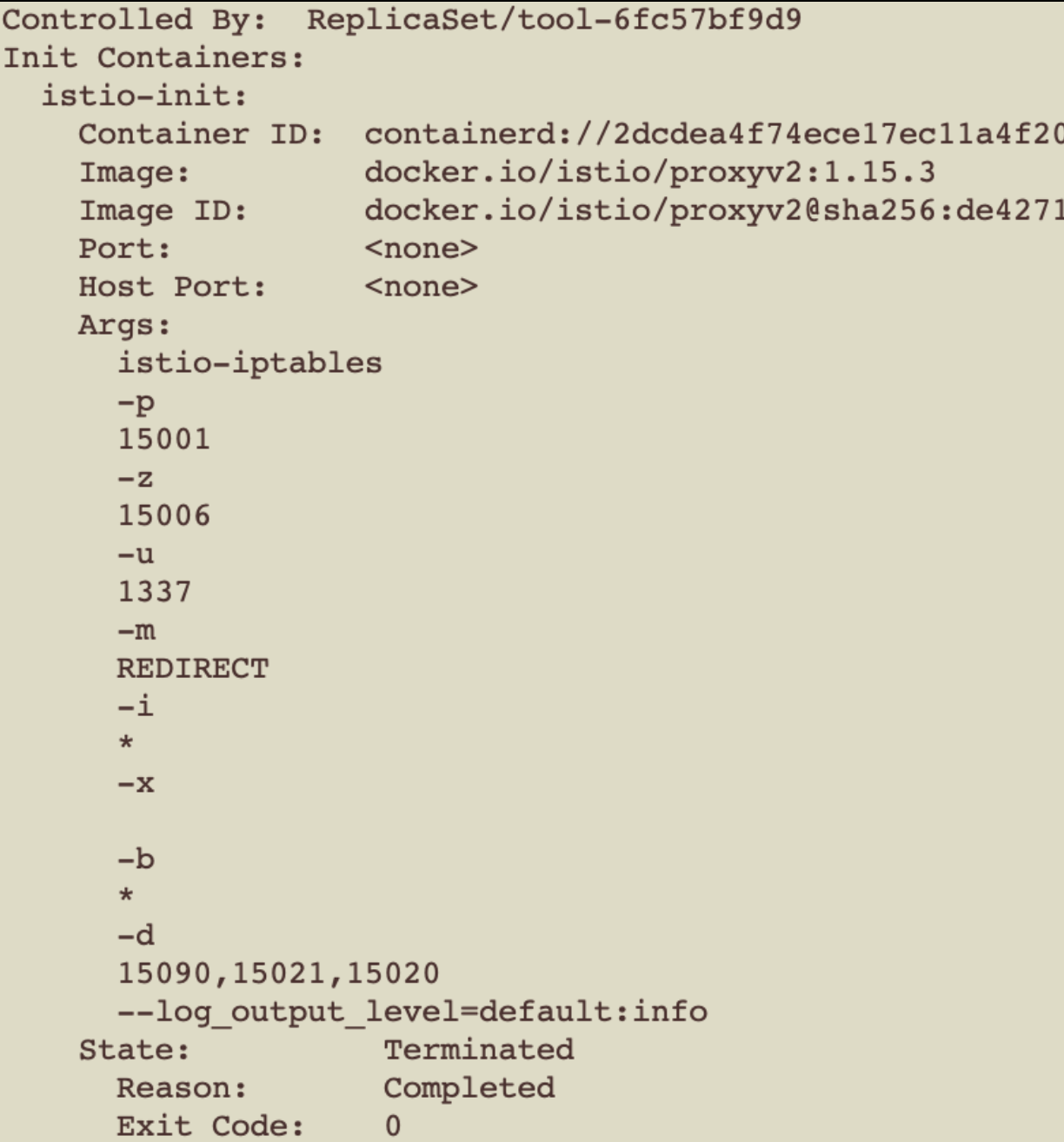

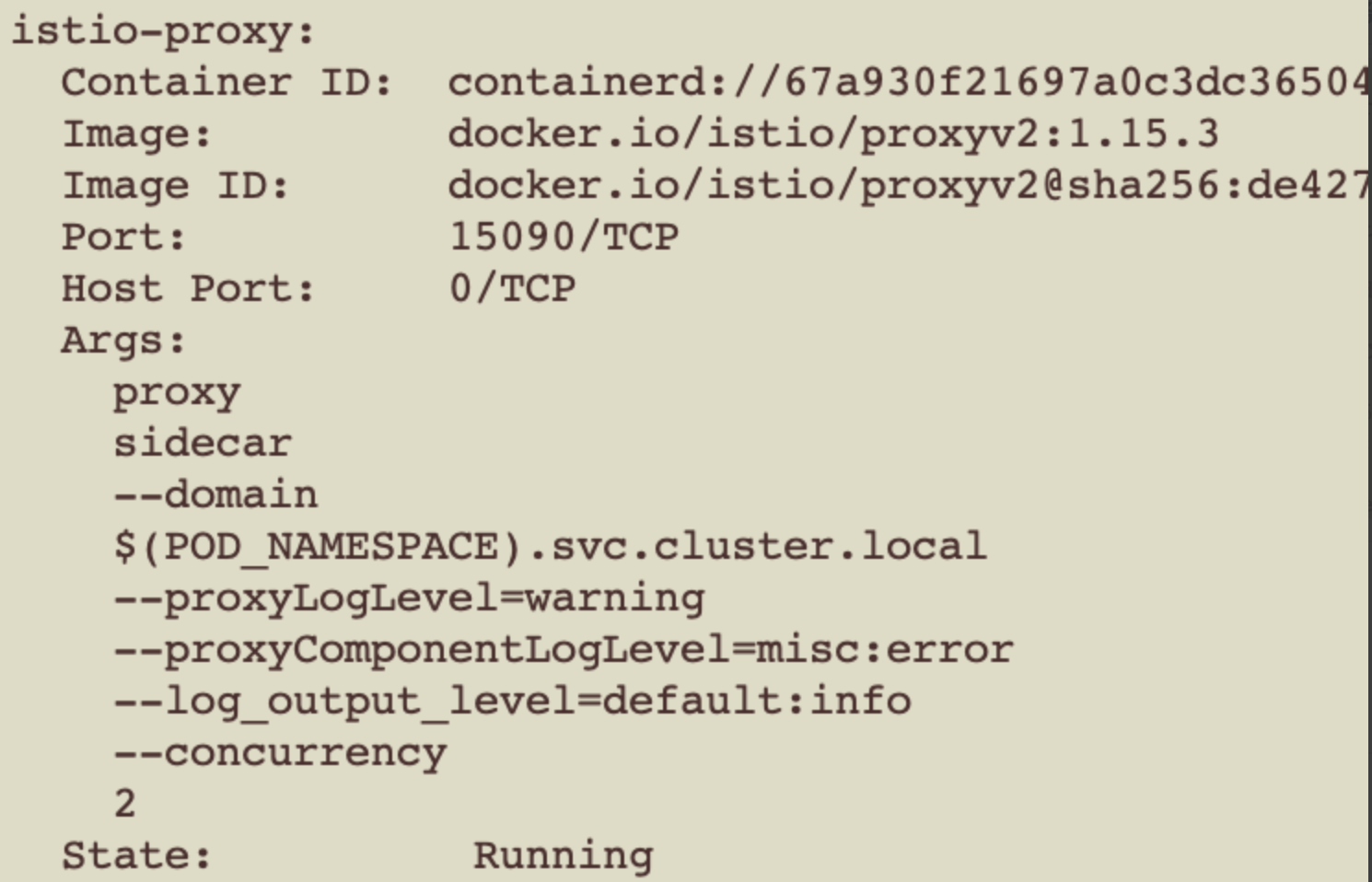

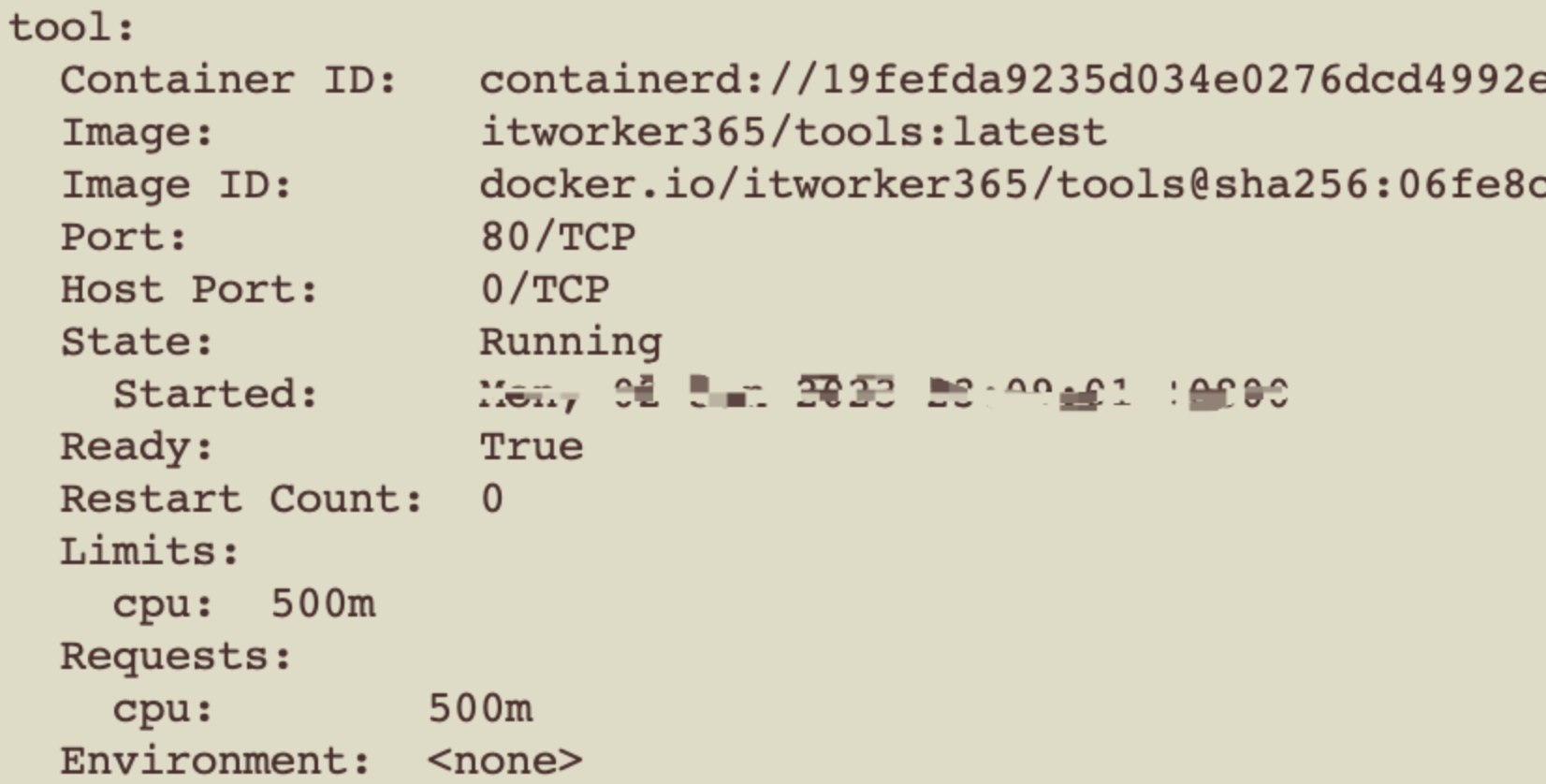

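Describing the pod (kubectl describe pod) shows three containers:

| Terminated container (init container) | Proxy container | Application container |
| --- | --- | --- |
| Initializes the sidecar setup. It installs iptables forwarding rules that redirect inbound traffic to the sidecar and intercept the application container's outbound traffic so that it passes through the sidecar before leaving. Once created, the rules apply to both the application container and the sidecar container, because all containers in a pod share one network namespace. | Pilot watches the Kubernetes configuration, generates the xDS rules, and pushes Listeners, Routes, Clusters and Endpoints to each sidecar instance. After traffic is hijacked to the sidecar, Envoy dispatches it to the matching Listener based on the request's characteristics. The Listener then handles routing, load balancing and so on. | |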

Init Container

Enables traffic hijacking and installs the hijacking rules.

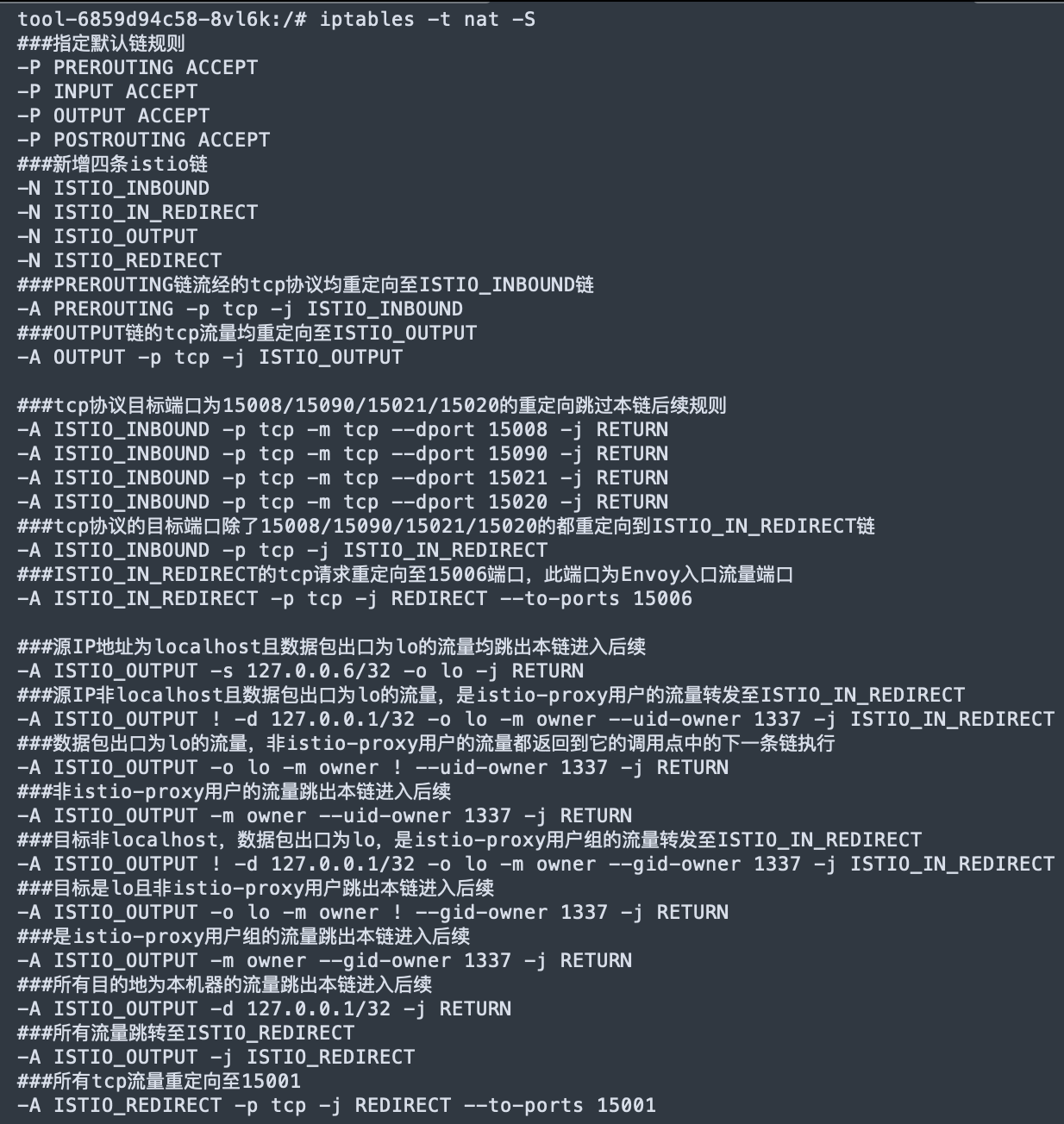

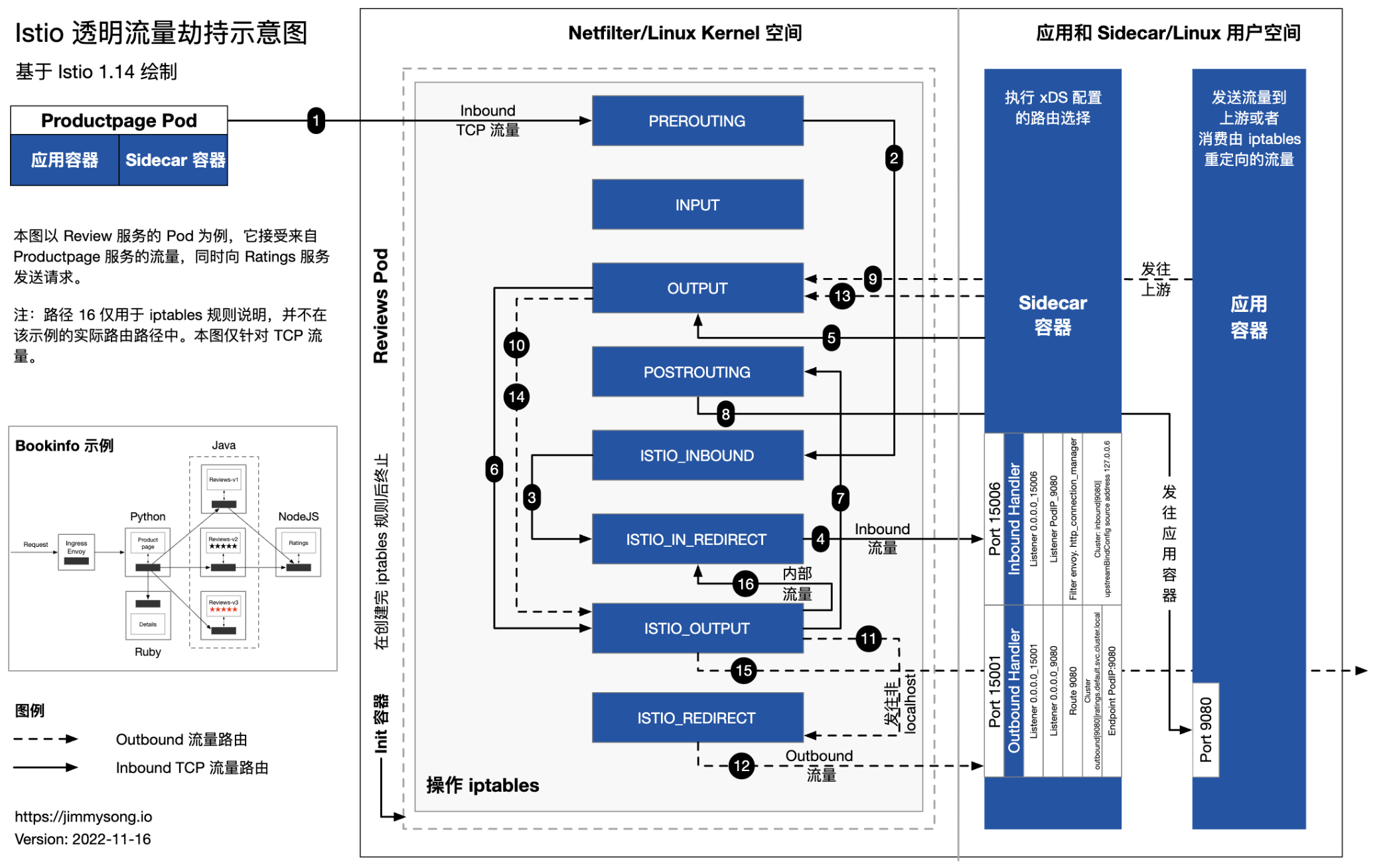

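Interception path

Istio intercepts traffic transparently with iptables.

Inbound: TCP requests whose destination port is 15008, 15090, 15021 or 15020 are not intercepted. Packets for every other destination port are redirected to 15006.

Outbound: application requests are redirected to 15001. When a sidecar talks to the service it proxies, the traffic leaves through lo, because components inside the pod reach each other over the lo interface via localhost. Some of this traffic is sent to ISTIO_IN_REDIRECT, i.e. redirected to the inbound port 15006. This applies to traffic that meets all of the following conditions:

- it leaves through lo;
- its destination is not 127.0.0.1/32;
- it belongs to the Envoy process (UID/GID 1337).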
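The interception rules above can be summarized as two decision functions. This is a simplified Python model. It is based on the ports and UID described here and on Istio's default istio-iptables rules as I understand them. It has these limitations:

- It covers TCP only.
- It checks the UID rules but not the equivalent GID rules.
- It leaves out other special cases.

Exact rules vary between Istio versions.

```python
# Simplified model of Istio's default iptables interception (not the real istio-iptables code).
from typing import Optional

ENVOY_UID = 1337
INBOUND_PORT = 15006                       # target of ISTIO_IN_REDIRECT
OUTBOUND_PORT = 15001                      # target of ISTIO_REDIRECT
INBOUND_EXCLUDED = {15008, 15090, 15021, 15020}


def inbound_redirect(proto: str, dport: int) -> Optional[int]:
    """PREROUTING -> ISTIO_INBOUND: the port the packet is redirected to, or None."""
    if proto != "tcp" or dport in INBOUND_EXCLUDED:
        return None
    return INBOUND_PORT


def outbound_redirect(dst: str, out_iface: str, uid: int) -> Optional[int]:
    """OUTPUT -> ISTIO_OUTPUT for TCP packets: the redirect port, or None."""
    if out_iface == "lo" and uid == ENVOY_UID and dst != "127.0.0.1":
        return INBOUND_PORT                # Envoy calling its own pod over lo is treated as inbound
    if out_iface == "lo":
        return None                        # any other traffic over lo is left alone
    if uid == ENVOY_UID:
        return None                        # Envoy's own upstream traffic: avoids a redirect loop
    if dst == "127.0.0.1":
        return None
    return OUTBOUND_PORT                   # ordinary application egress goes to Envoy
```

The ordering matters: the Envoy-over-lo check must come before the general "Envoy traffic is not redirected" check. Otherwise a sidecar could never route a request back into its own pod.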

Source: https://jimmysong.io/blog/envoy-sidecar-injection-in-istio-service-mesh-deep-dive/

A detailed breakdown of each transparent interception case:

https://jimmysong.io/blog/istio-sidecar-traffic-types/

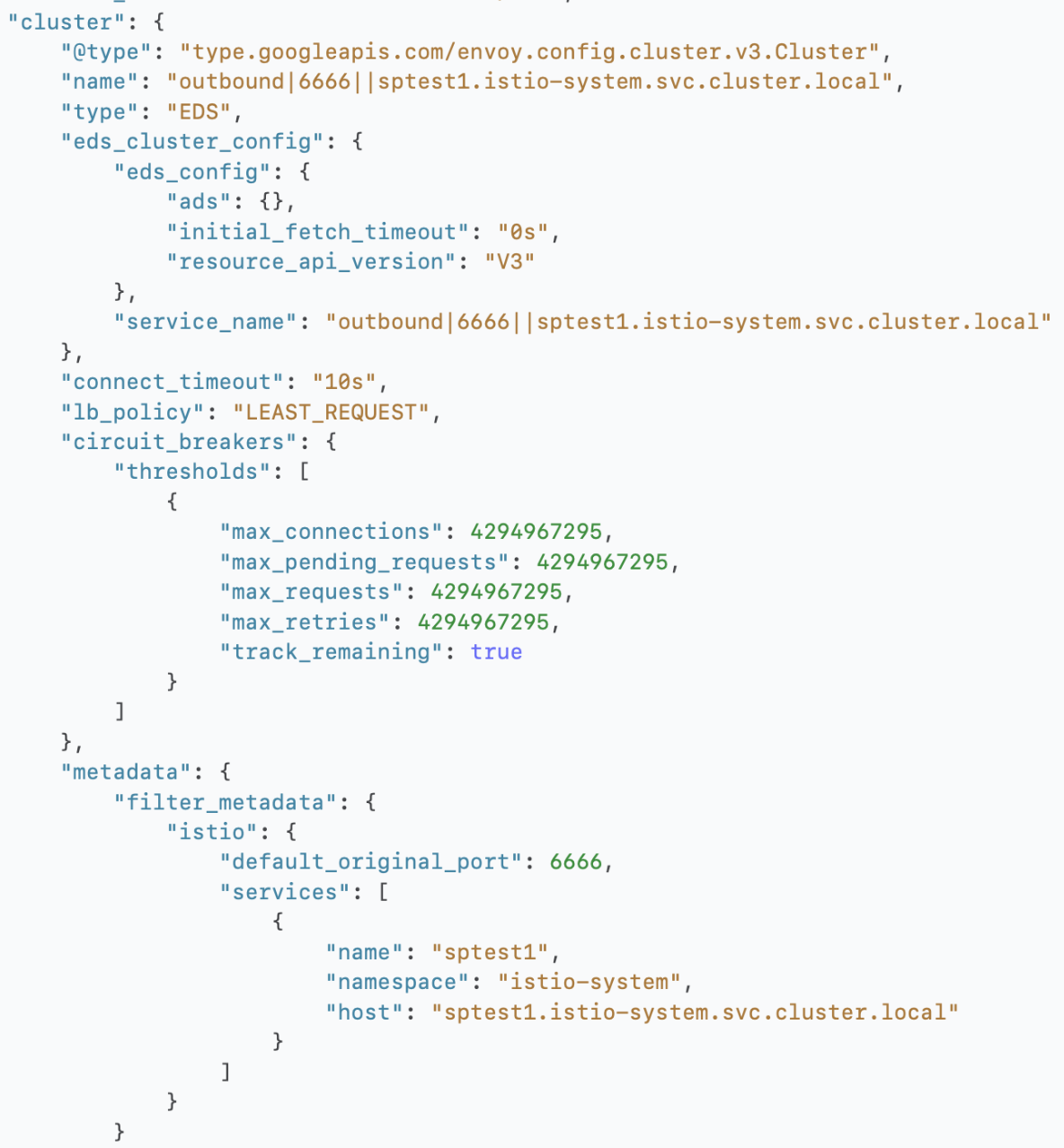

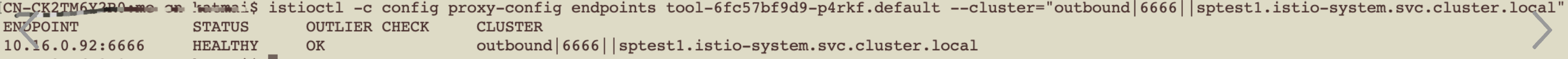

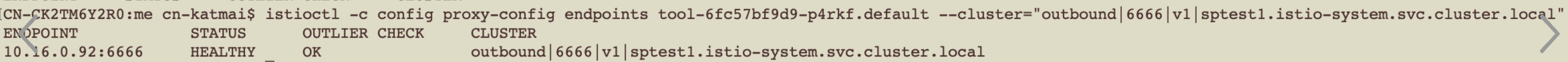

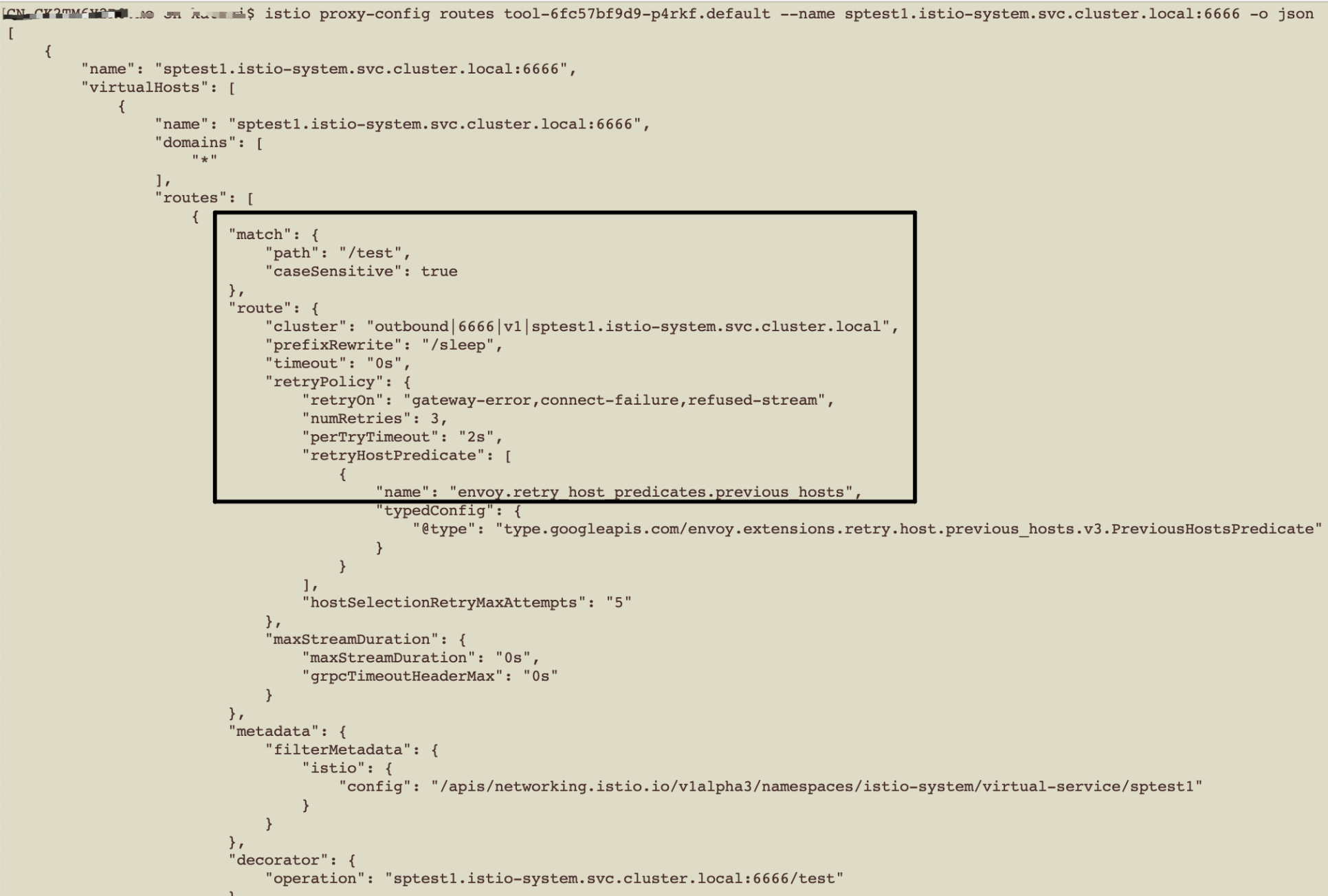

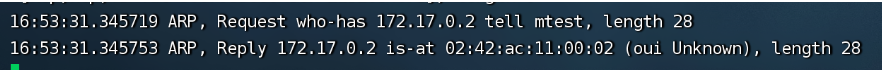

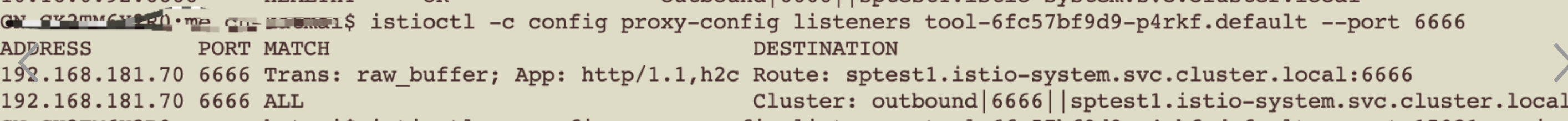

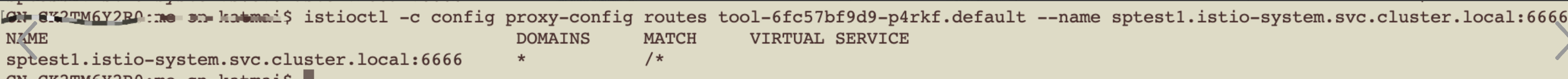

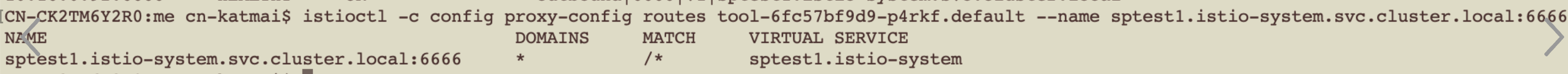

Sidecar

Walking through the flow via Istio's xDS configuration

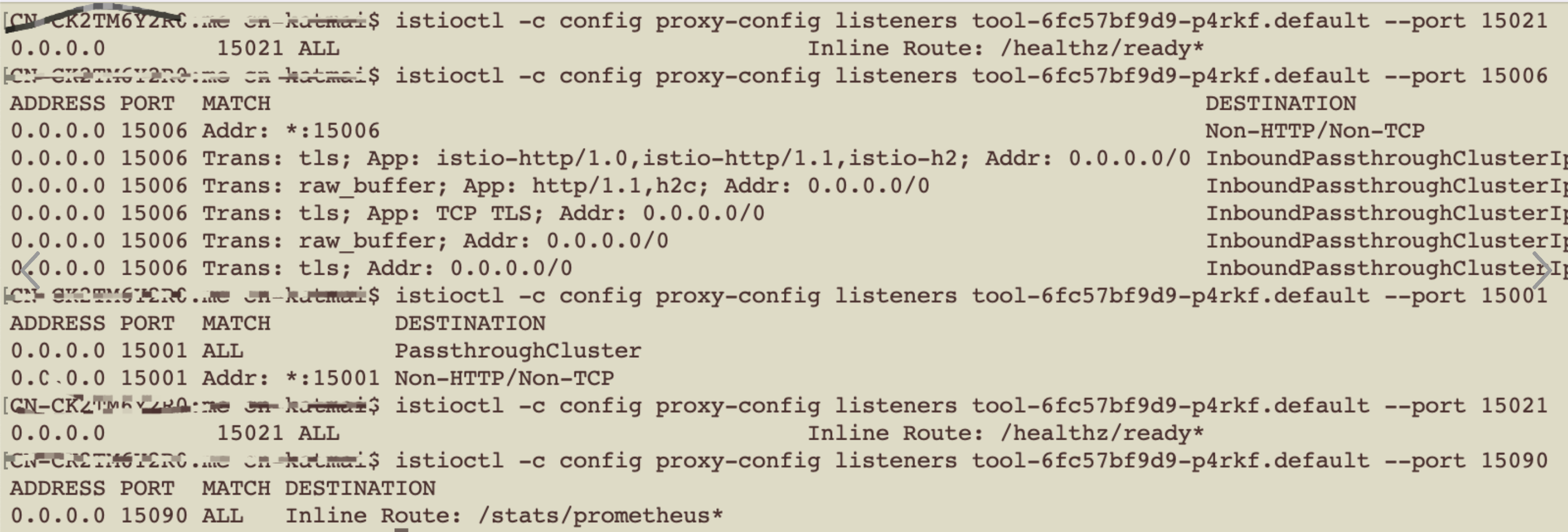

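Listeners on a few special ports

PassthroughCluster and InboundPassthroughClusterIpv4: requests sent to these clusters are passed straight through to the original destination address in the request, and Envoy does not re-route them.

Inside the mesh, workloads communicate via endpoint IP/port. All rules are delivered over xDS to each sidecar instance as Listener, Route, Cluster and Endpoint configuration, and the sidecar enforces them.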

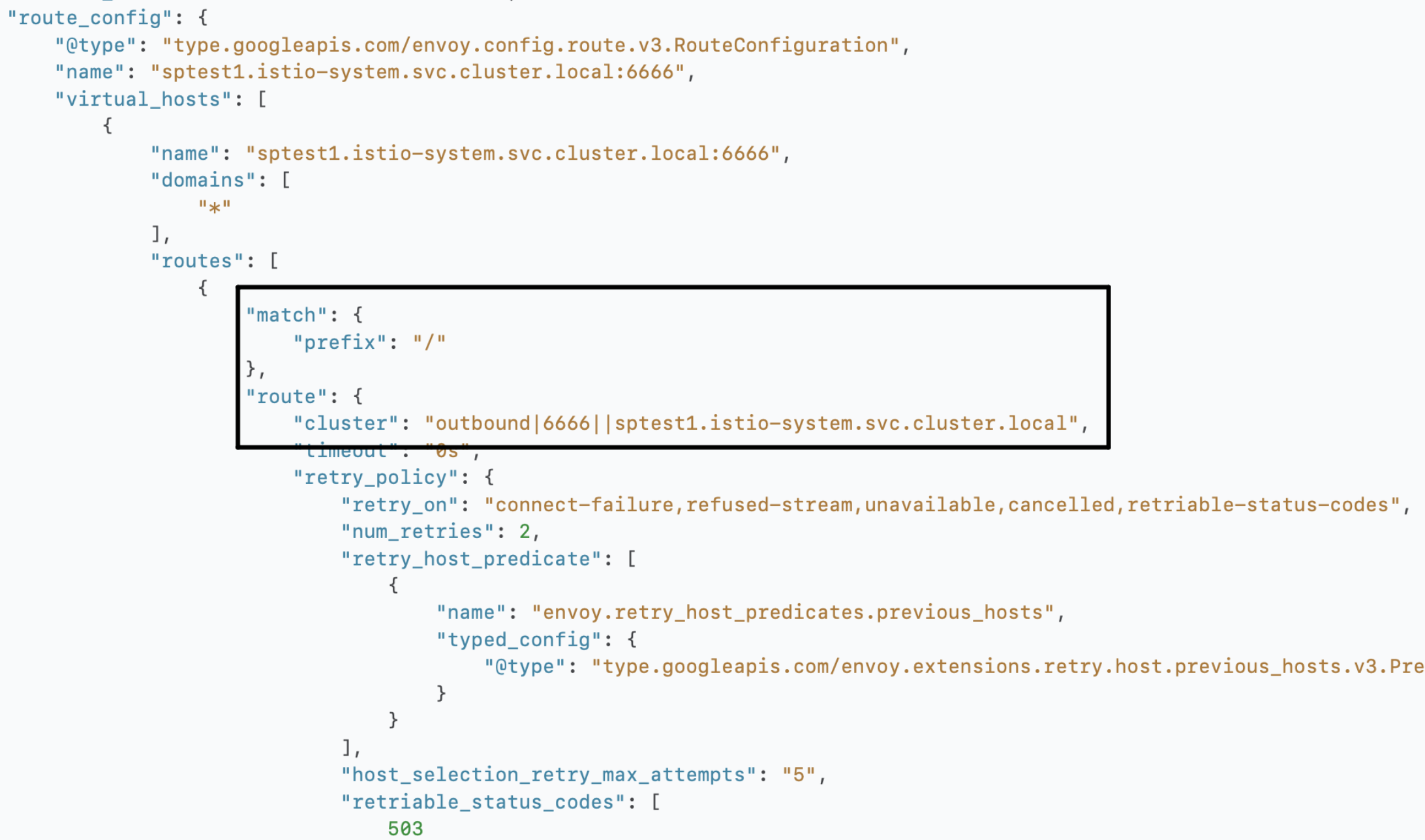

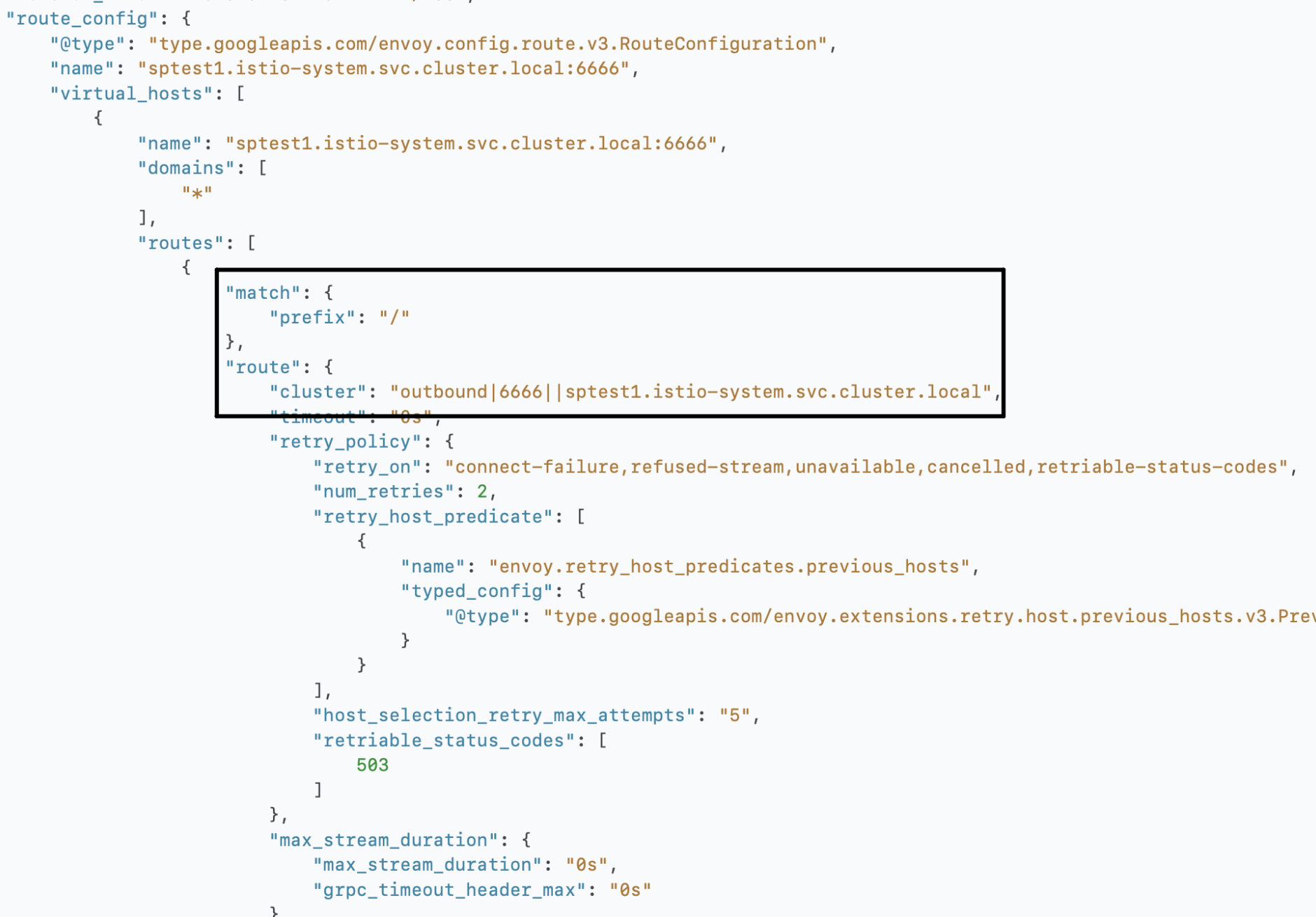

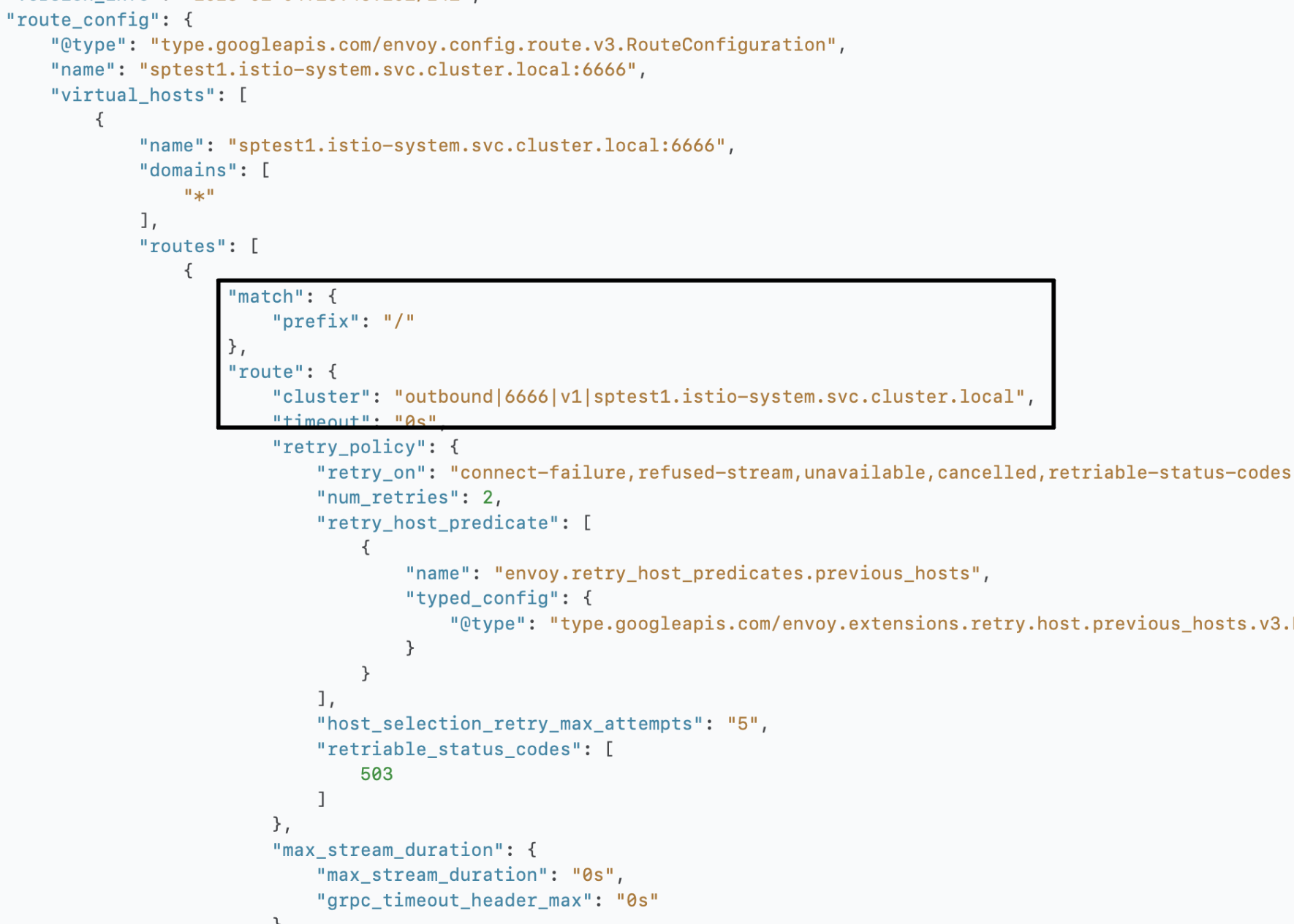

| | without VirtualService/DestinationRule | with VirtualService/DestinationRule |
| --- | --- | --- |
| Listener | | |
| Route | | |
| Cluster | | |
| Endpoints | | |

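Going one level deeper, follow the chain listener -> route -> cluster -> endpoint. Every object created through Istio CRDs is reflected in these few configuration sections, and the sidecar performs the corresponding network-layer processing according to them.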
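Here is a toy model of the listener -> route -> cluster -> endpoint chain, including the PassthroughCluster fallback for traffic that matches nothing. The dict layout is hypothetical and much simpler than Envoy's real xDS resources. The names only imitate Istio's naming style, and the endpoints are made up. Round-robin endpoint selection is used purely for simplicity.

```python
# Toy resolution of a request through xDS-like objects (hypothetical layout, made-up data).
import itertools
from typing import Dict, List, Tuple

Addr = Tuple[str, int]

LISTENERS: Dict[int, str] = {9080: "9080"}            # listener port -> route config name
ROUTES: Dict[str, Dict[str, str]] = {                 # route config -> {host: cluster}
    "9080": {"reviews.default.svc.cluster.local":
             "outbound|9080||reviews.default.svc.cluster.local"},
}
ENDPOINTS: Dict[str, List[Addr]] = {                  # cluster -> endpoint IP/port list
    "outbound|9080||reviews.default.svc.cluster.local": [("10.16.0.21", 9080),
                                                         ("10.16.0.22", 9080)],
}
_rr = {name: itertools.cycle(eps) for name, eps in ENDPOINTS.items()}  # per-cluster round-robin


def resolve(host: str, port: int, orig_dst: Addr) -> Tuple[str, Addr]:
    """Return (cluster, endpoint); unmatched traffic goes to PassthroughCluster."""
    route_name = LISTENERS.get(port)
    cluster = ROUTES.get(route_name, {}).get(host) if route_name else None
    if cluster is None:
        return "PassthroughCluster", orig_dst         # sent to the original destination unchanged
    return cluster, next(_rr[cluster])
```

Two calls for the reviews host alternate between 10.16.0.21 and 10.16.0.22. A request to an unknown port or host comes back as ("PassthroughCluster", original destination).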

Together with the figures above, the picture is now largely clear. There are many rules, so I won't list them one by one.

https://betheme.net/yidongkaifa/13233.html?action=onClick

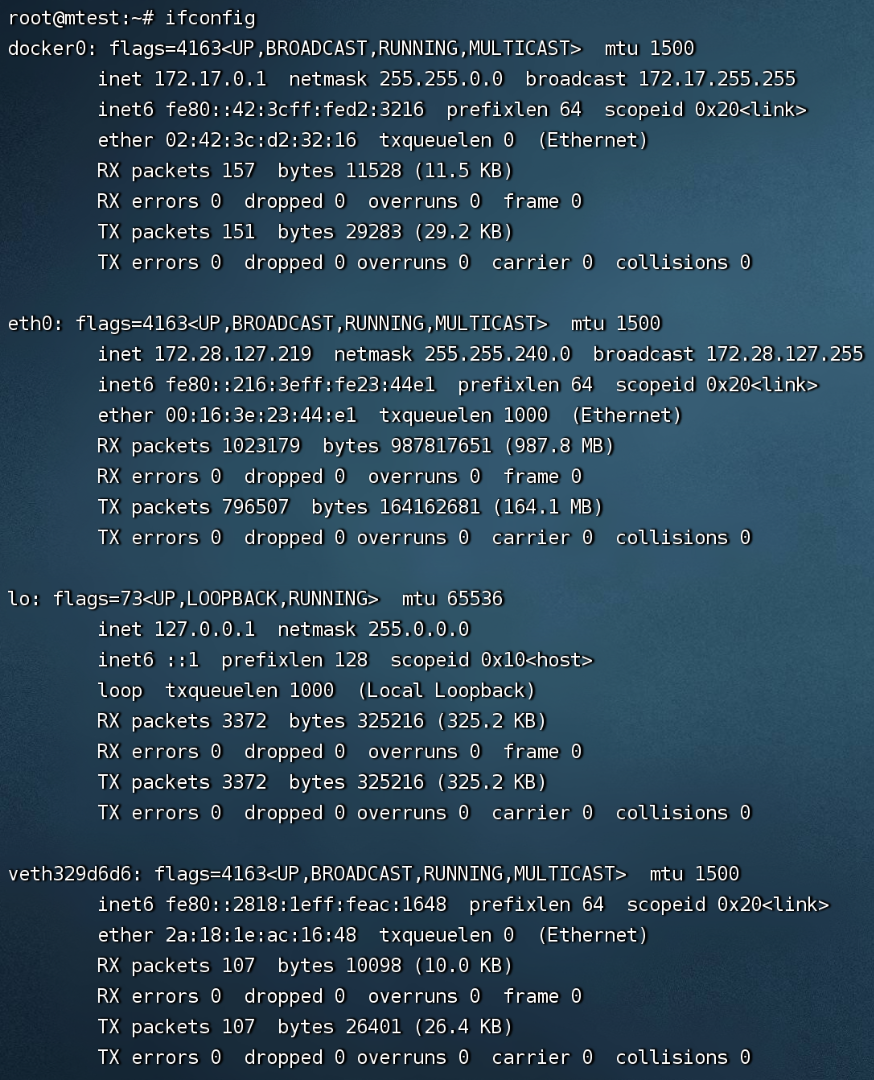

Docker

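Containers are connected through veth pairs (virtual NIC pairs) and communicate via the docker0 bridge. Two containers on the bridge find each other with ARP over docker0. For traffic that crosses networks, iptables rules steer requests to docker0, and they are routed onward from there.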
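The same-bridge path can be illustrated with a toy learning bridge. An ARP request is flooded on docker0, the owner of the IP answers, and the bridge remembers which veth port each MAC lives on. This Python sketch is illustrative only. The MAC addresses and the second veth name (vethB) are made up, while 172.17.0.2 and veth329d6d6 come from the example later in this section.

```python
# Toy model of docker0 as a learning L2 bridge plus ARP resolution (illustrative only).
from typing import Dict, Optional


class Bridge:
    """Learning bridge: floods ARP broadcasts and remembers MAC -> port."""

    def __init__(self) -> None:
        self.fdb: Dict[str, str] = {}        # forwarding database: MAC -> veth port
        self.hosts: Dict[str, "Host"] = {}   # veth port -> attached container

    def attach(self, port: str, host: "Host") -> None:
        self.hosts[port] = host
        host.bridge, host.port = self, port

    def arp_request(self, in_port: str, src_mac: str, who_has: str) -> Optional[str]:
        """Flood 'who has <ip>?' to every other port; return the MAC from the reply, if any."""
        self.fdb[src_mac] = in_port           # learn the sender's port
        for port, host in self.hosts.items():
            if port == in_port:
                continue                      # never send a frame back out its ingress port
            if host.ip == who_has:
                self.fdb[host.mac] = port     # learn the replier's port from its ARP reply
                return host.mac
        return None

    def out_port(self, dst_mac: str) -> Optional[str]:
        """After learning, unicast frames go out a single port instead of being flooded."""
        return self.fdb.get(dst_mac)


class Host:
    def __init__(self, ip: str, mac: str) -> None:
        self.ip, self.mac = ip, mac
        self.arp_cache: Dict[str, str] = {}
        self.bridge: Optional[Bridge] = None
        self.port = ""

    def resolve(self, ip: str) -> Optional[str]:
        """Return the MAC for ip, sending an ARP broadcast on a cache miss."""
        if ip not in self.arp_cache and self.bridge is not None:
            mac = self.bridge.arp_request(self.port, self.mac, ip)
            if mac is not None:
                self.arp_cache[ip] = mac
        return self.arp_cache.get(ip)


docker0 = Bridge()
a = Host("172.17.0.2", "02:42:ac:11:00:02")
b = Host("172.17.0.3", "02:42:ac:11:00:03")
docker0.attach("veth329d6d6", a)
docker0.attach("vethB", b)                   # hypothetical second veth name
```

After b.resolve("172.17.0.2"), the bridge knows both MACs. Later frames between the two containers are forwarded out a single veth port.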

Set up a Docker environment and start a container that listens on a port.


The iptables rules show that Docker mainly modifies the filter and nat tables. The filter table:

*filter

:INPUT ACCEPT [0:0]

:FORWARD DROP [0:0]

:OUTPUT ACCEPT [0:0]

:DOCKER - [0:0]

:DOCKER-ISOLATION-STAGE-1 - [0:0]

:DOCKER-ISOLATION-STAGE-2 - [0:0]

:DOCKER-USER - [0:0]

-A FORWARD -j DOCKER-USER

-A FORWARD -j DOCKER-ISOLATION-STAGE-1

-A FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A FORWARD -o docker0 -j DOCKER

-A FORWARD -i docker0 ! -o docker0 -j ACCEPT

-A FORWARD -i docker0 -o docker0 -j ACCEPT

### Accept external TCP requests to 172.17.0.2, port 3000

-A DOCKER -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 3000 -j ACCEPT

-A DOCKER-ISOLATION-STAGE-1 -i docker0 ! -o docker0 -j DOCKER-ISOLATION-STAGE-2

-A DOCKER-ISOLATION-STAGE-1 -j RETURN

-A DOCKER-ISOLATION-STAGE-2 -o docker0 -j DROP

-A DOCKER-ISOLATION-STAGE-2 -j RETURN

-A DOCKER-USER -j RETURN

COMMIT
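Here is a simplified Python walk of the filter FORWARD chain above, assuming docker0 is the only bridge. Two chains are skipped:

- DOCKER-ISOLATION-STAGE-1/2: with a single bridge they never drop anything. STAGE-2 drops -o docker0, but STAGE-1 only jumps there for ! -o docker0.
- DOCKER-USER: it only RETURNs.

The packet is assumed to have already been DNATed in PREROUTING.

```python
# Simplified walk of the filter FORWARD chain above (docker0 as the only bridge).
from dataclasses import dataclass


@dataclass
class Fwd:
    in_if: str
    out_if: str
    dst: str
    proto: str
    dport: int
    ctstate: str = "NEW"   # conntrack state of the packet


def docker_chain(p: Fwd) -> str:
    # -A DOCKER -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 3000 -j ACCEPT
    if (p.dst == "172.17.0.2" and p.in_if != "docker0" and p.out_if == "docker0"
            and p.proto == "tcp" and p.dport == 3000):
        return "ACCEPT"
    return "RETURN"


def forward(p: Fwd) -> str:
    # DOCKER-USER only RETURNs, and the isolation chains never drop with a single bridge.
    if p.out_if == "docker0" and p.ctstate in ("RELATED", "ESTABLISHED"):
        return "ACCEPT"                  # packets of established or related connections
    if p.out_if == "docker0" and docker_chain(p) == "ACCEPT":
        return "ACCEPT"                  # new connections to the published port
    if p.in_if == "docker0":
        return "ACCEPT"                  # container -> outside and container -> container
    return "DROP"                        # :FORWARD DROP policy
```

New inbound connections reach the container only on the published port. Anything else coming from outside falls through to the DROP policy.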

*nat

:PREROUTING ACCEPT [0:0]

:INPUT ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:POSTROUTING ACCEPT [0:0]

:DOCKER - [0:0]

### PREROUTING: send requests destined for a local address to the DOCKER chain

-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER

### OUTPUT: send locally generated requests whose destination is a local address (excluding 127.0.0.0/8) to the DOCKER chain

-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER

-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE

-A POSTROUTING -s 172.17.0.2/32 -d 172.17.0.2/32 -p tcp -m tcp --dport 3000 -j MASQUERADE

-A DOCKER -i docker0 -j RETURN

### DNAT external requests to TCP port 3000 to the container IP and port

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 3000 -j DNAT --to-destination 172.17.0.2:3000

COMMIT
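The nat rules can be sketched the same way in Python. LOCAL_ADDRS stands in for -m addrtype --dst-type LOCAL, and its second address (192.168.1.10) is a hypothetical host IP. The published-port mapping 3000 -> 172.17.0.2:3000 and the MASQUERADE conditions come from the dump.

```python
# Sketch of the Docker nat rules above; LOCAL_ADDRS is an assumption, the rest is from the dump.
from ipaddress import ip_address, ip_network
from typing import Optional, Tuple

BRIDGE_NET = ip_network("172.17.0.0/16")
LOCAL_ADDRS = {"172.17.0.1", "192.168.1.10"}          # hypothetical: docker0 IP + a host NIC IP
PUBLISHED = {("tcp", 3000): ("172.17.0.2", 3000)}     # -j DNAT --to-destination 172.17.0.2:3000


def prerouting(in_if: str, dst: str, proto: str, dport: int) -> Optional[Tuple[str, int]]:
    """-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER; returns the DNAT target or None."""
    if dst not in LOCAL_ADDRS:
        return None                                    # not addressed to this host
    if in_if == "docker0":
        return None                                    # -A DOCKER -i docker0 -j RETURN
    return PUBLISHED.get((proto, dport))               # -A DOCKER ! -i docker0 ... -j DNAT


def postrouting(src: str, dst: str, out_if: str, proto: str, dport: int) -> str:
    """The two MASQUERADE rules of the POSTROUTING chain."""
    if ip_address(src) in BRIDGE_NET and out_if != "docker0":
        return "MASQUERADE"                            # container -> outside: source becomes host IP
    if src == dst == "172.17.0.2" and proto == "tcp" and dport == 3000:
        return "MASQUERADE"                            # container reaching its own published port
    return "ACCEPT"                                    # chain policy: no translation
```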

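Container interaction logic

A veth pair is a pair of connected virtual NICs, and each container gets one pair. The end inside the container appears as eth0. The other end stays on the host and is attached to the docker0 bridge, so all containers hang off the same bridge.

Internal details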

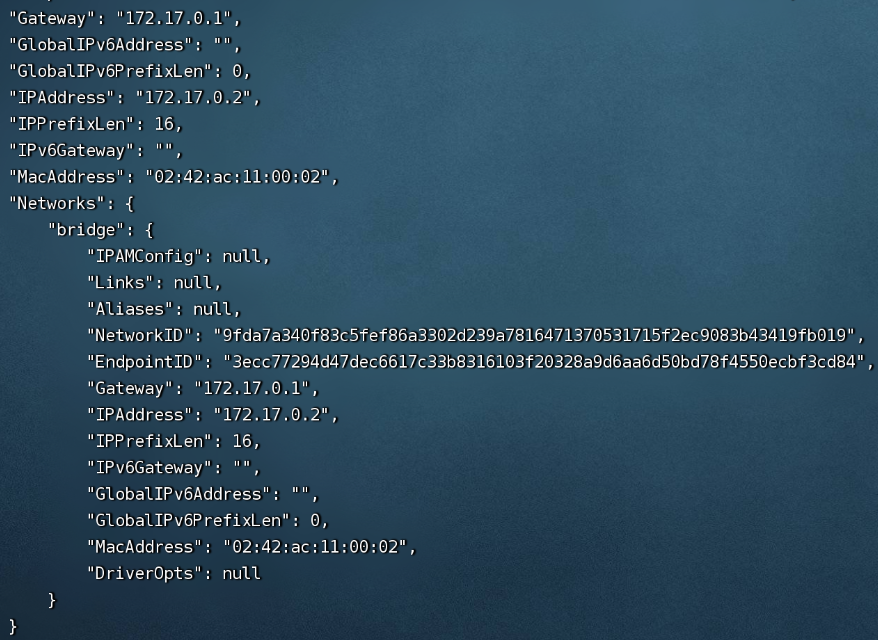

|

容器网络环境 docker inspect 获取网络属性,容器ip为172.17.0.2 |

|

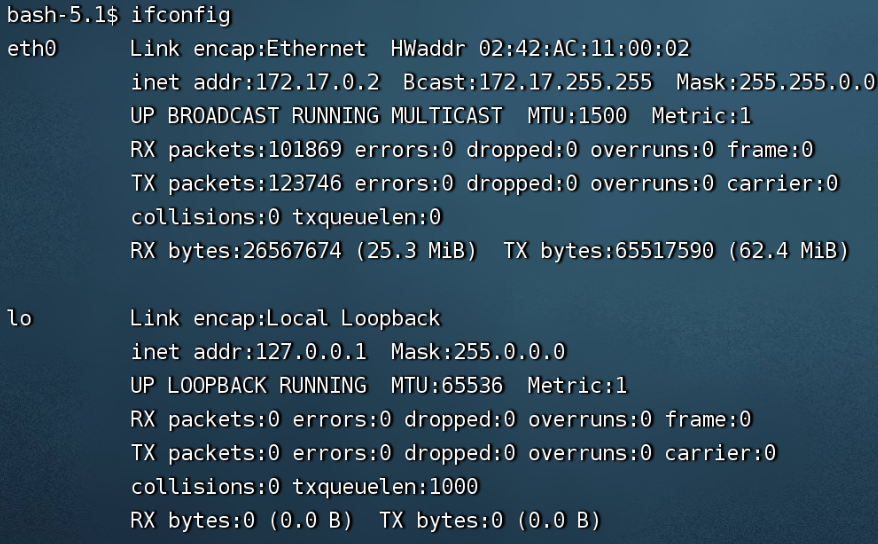

|

容器内 虚拟网卡信息eth0 veth pair在容器内的端点 |

|

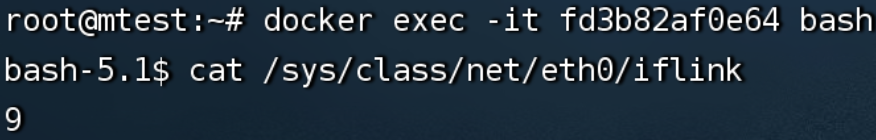

|

进入容器内部查看 /sys/class/net/eth0/iflink 网卡序号为9 |

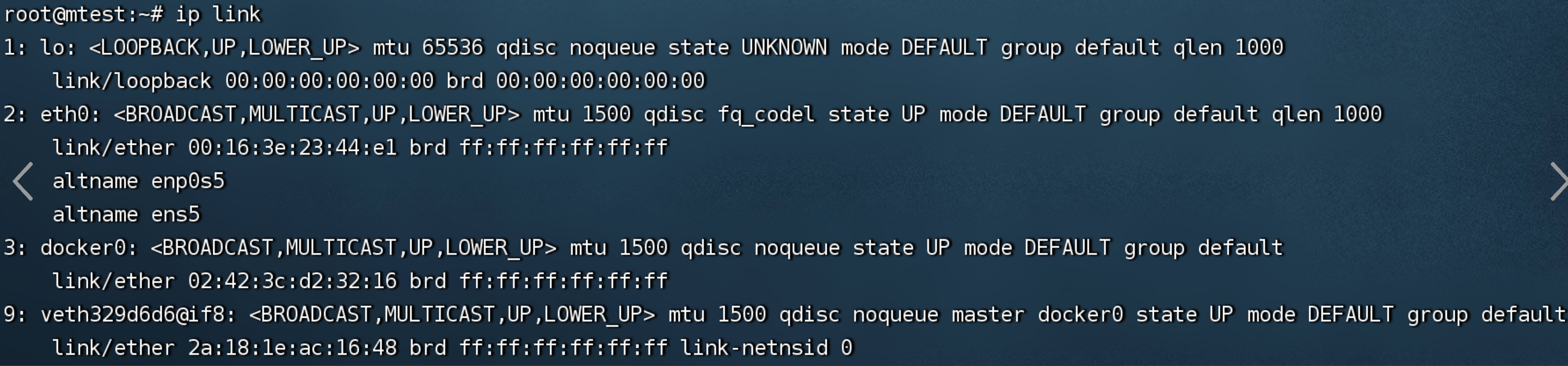

|

|

对应iplink veth329d6d6 |

|

|

查看系统网卡

docker0为容器虚拟网桥

veth pair在容器外的端点veth329d6d6 |

|

|

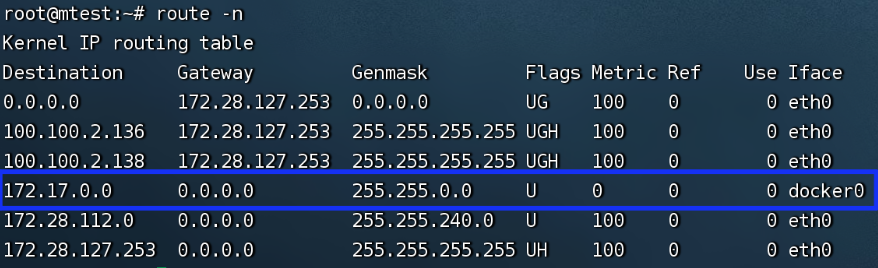

路由信息,目标为172.17.0.x的请求均通过0.0.0.0直连网络,目标到docker0 路由规则 (U 表示UP 有效规则,G表示网关,H表示主机) 当容器内请求的目的地址为172.17.0.x时,数据包经过eth0网卡,发目标主机,目标主机的Mac通过ARP(IP找到MAC)广播传播,再用MAC地址进行交互;数据进入docker0网桥,docker0处理转发,到其他宿主机网络 |

|

| tcpdump -i veth329d6d6 跟踪一小段会看到ARP请求,广播问谁认识172.17.0.2,然后应到找到网卡,通过网桥路由 |  |

|
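The route lookup described above is a longest-prefix match. In this Python sketch only the 172.17.0.0/16 dev docker0 entry comes from the text. The default route and the 192.168.1.0/24 LAN are hypothetical. A route without the G flag means the next hop is the destination itself, so ARP asks for the destination's MAC directly.

```python
# Longest-prefix route lookup in the style of "route -n"; only the docker0 entry is from the text.
from ipaddress import ip_address, ip_network
from typing import List, Tuple

# (destination, gateway, flags, iface); gateway "0.0.0.0" = directly connected
ROUTES: List[Tuple[str, str, str, str]] = [
    ("0.0.0.0/0", "192.168.1.1", "UG", "eth0"),       # hypothetical default route
    ("172.17.0.0/16", "0.0.0.0", "U", "docker0"),
    ("192.168.1.0/24", "0.0.0.0", "U", "eth0"),       # hypothetical host LAN
]


def lookup(dst: str) -> Tuple[str, str]:
    """Return (iface, next_hop): next_hop is the IP whose MAC must be resolved via ARP."""
    addr = ip_address(dst)
    candidates = [r for r in ROUTES if addr in ip_network(r[0])]
    _net, gw, flags, iface = max(candidates, key=lambda r: ip_network(r[0]).prefixlen)
    next_hop = gw if "G" in flags else dst             # no G flag: ARP for the destination itself
    return iface, next_hop
```

For example, lookup("172.17.0.3") returns ("docker0", "172.17.0.3"). lookup("8.8.8.8") falls back to the default route and ARPs for the gateway instead.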

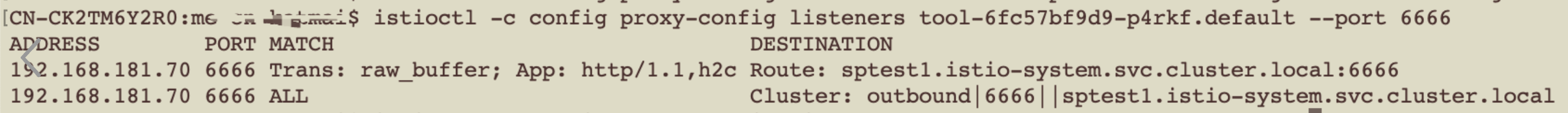

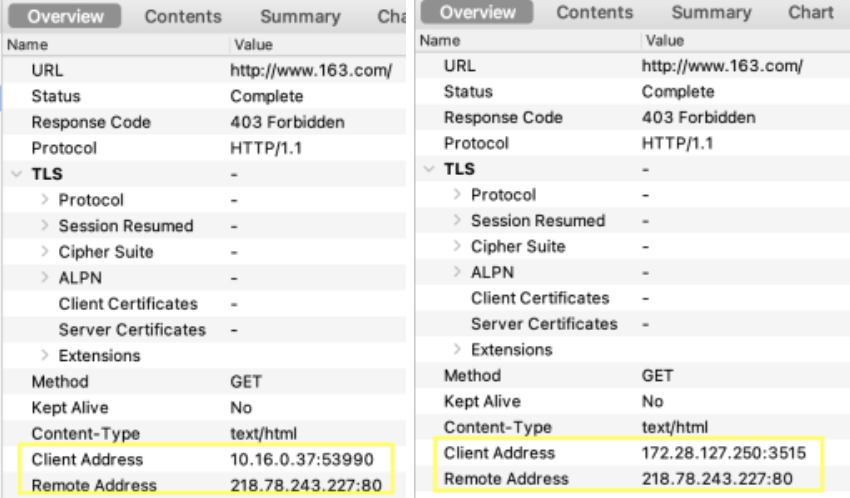

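Next, try SNAT for traffic from inside the container to the outside: curl www.163.com

Capture on both sides with tcpdump -i <host-side veth interface> -w filebefore.pcap and tcpdump -i eth0 -w fileafter.pcap.

For the same request, packets leaving the container carry the container IP as their source. By the time they leave through the host NIC, SNAT (-j MASQUERADE) has rewritten the source to the host IP.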
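MASQUERADE works together with connection tracking. The first packet creates a mapping, and replies addressed to the host IP and port are translated back to the container. This toy Python table makes two simplifications:

- It uses a made-up host IP (192.168.1.10).
- It always allocates a fresh port starting at 40000. The kernel, by contrast, normally tries to keep the original source port when it is free.

```python
# Toy conntrack table for MASQUERADE; the host IP and port allocation are made up.
from typing import Dict, Tuple

Addr = Tuple[str, int]
HOST_IP = "192.168.1.10"     # hypothetical IP of the host's eth0


class Masq:
    def __init__(self) -> None:
        self.out: Dict[Tuple[Addr, Addr], Addr] = {}    # (orig src, dst) -> translated src
        self.back: Dict[Tuple[Addr, Addr], Addr] = {}   # (remote, translated src) -> orig src
        self.next_port = 40000

    def outbound(self, src: Addr, dst: Addr) -> Addr:
        """First packet of a flow creates the mapping; later packets reuse it."""
        key = (src, dst)
        if key not in self.out:
            nat_src = (HOST_IP, self.next_port)          # simplification: always a fresh port
            self.next_port += 1
            self.out[key] = nat_src
            self.back[(dst, nat_src)] = src
        return self.out[key]

    def inbound(self, src: Addr, dst: Addr) -> Addr:
        """Reply from the remote side: rewrite the destination back to the container."""
        return self.back[(src, dst)]
```

For example, outbound(("172.17.0.2", 51000), ("1.2.3.4", 80)) returns ("192.168.1.10", 40000). Then inbound(("1.2.3.4", 80), ("192.168.1.10", 40000)) maps the reply back to ("172.17.0.2", 51000).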


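I won't analyze this further, since other documents cover it. In short, iptables intercepts and processes the traffic at each stage.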

浙公网安备 33010602011771号
