DNS

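A Service provides a single, stable entry point to a group of Pods. Clients in the cluster reach a service by its Service name, and DNS takes care of resolving that name to an IP address.

CoreDNS uses list-watch against the API server to track changes to Pods and Services and keeps its A records (name → IP mappings) in sync, so that servicename.namespace.svc.cluster.local resolves. Custom name-to-IP mappings can also be configured in CoreDNS independently.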

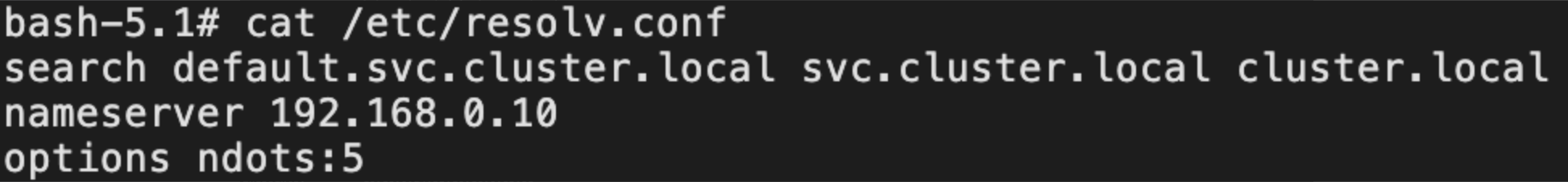

The kubelet writes each Pod's /etc/resolv.conf so that CoreDNS is its nameserver.

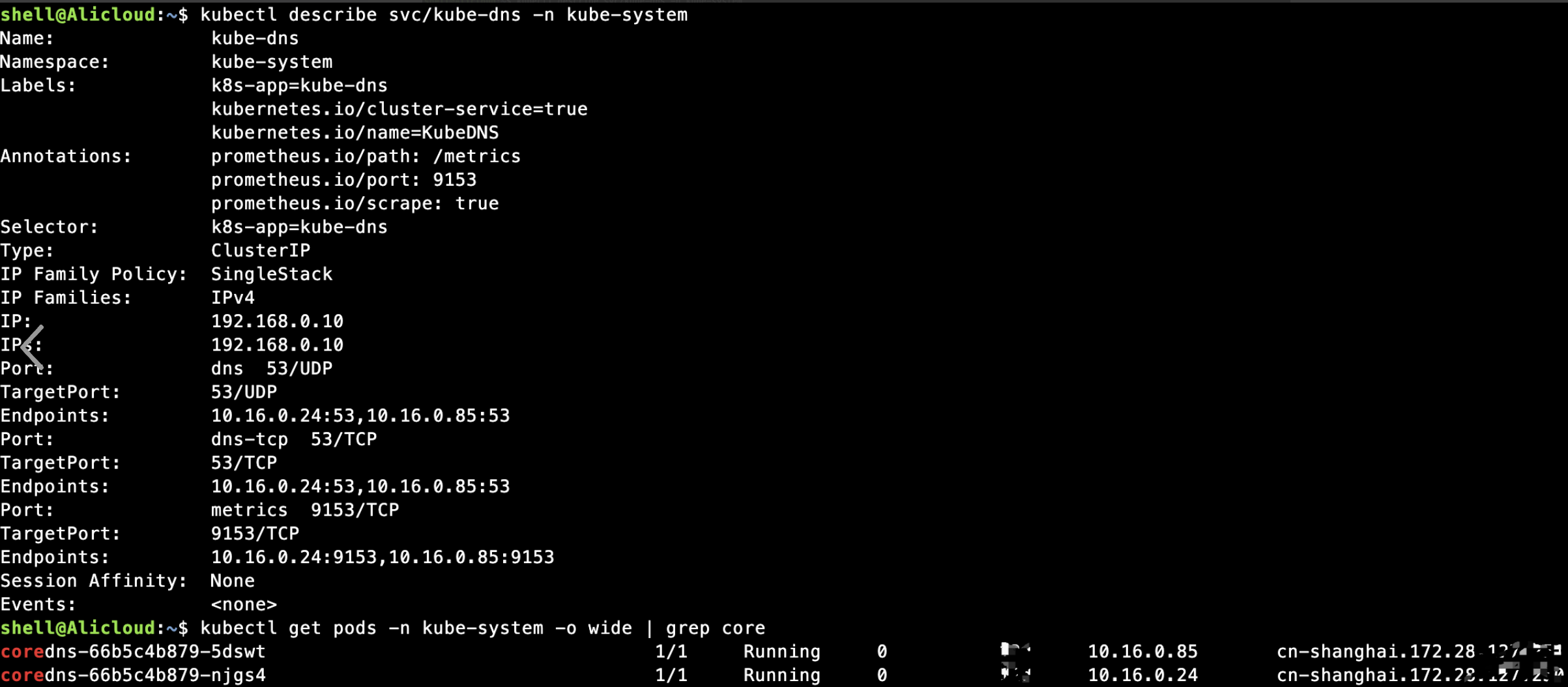

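FQDN (fully qualified domain name): a complete domain name, conventionally written with a trailing ".". An FQDN is sent to the DNS server exactly as written. For any other name, the search list comes into play, governed by ndots.

nameserver: the DNS server that queries are sent to, here the CoreDNS service.

search: the search path, which is a list of domains appended to names that are not fully qualified.

ndots: the dot threshold (5 here). A name with fewer than 5 dots is tried against each search domain first and only then queried as an absolute name. A name with 5 or more dots is queried as an absolute name first, and the search domains are tried only if that lookup fails.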
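The search-list and ndots rules above can be sketched in Python. This is a simplified model of a glibc-style stub resolver, not resolver source code. It assumes the search list a Pod in the default namespace typically gets. Other resolvers (musl, for example) differ in details, and candidate_names is a hypothetical helper.

```python
# Assumed search list for a Pod in the "default" namespace.
SEARCH = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]


def candidate_names(name: str, search: list, ndots: int = 5) -> list:
    """Return the names a glibc-style resolver would try, in order (simplified)."""
    if name.endswith("."):
        # FQDN: sent to the nameserver as written; the search list is never used.
        return [name]
    absolute = name + "."
    expanded = [f"{name}.{domain}." for domain in search]
    if name.count(".") >= ndots:
        # Enough dots: try the absolute name first, then the search domains.
        return [absolute] + expanded
    # Too few dots: walk the search domains first, the absolute name last.
    return expanded + [absolute]


if __name__ == "__main__":
    for n in ("sptest", "www.baidu.com", "www.baidu.com."):
        print(n, "->", candidate_names(n, SEARCH))
```

Under ndots:5, a name such as www.baidu.com has only two dots, so it is first tried against all three search domains before the absolute query. This is the motivation for lowering ndots in the Deployment example that follows.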

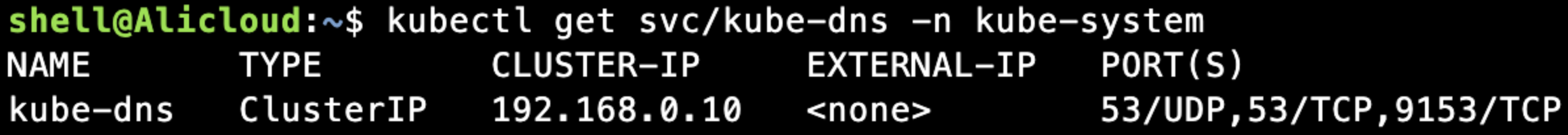

The nameserver address 192.168.0.10 is the ClusterIP of the kube-dns Service, whose backends are the CoreDNS Pods.

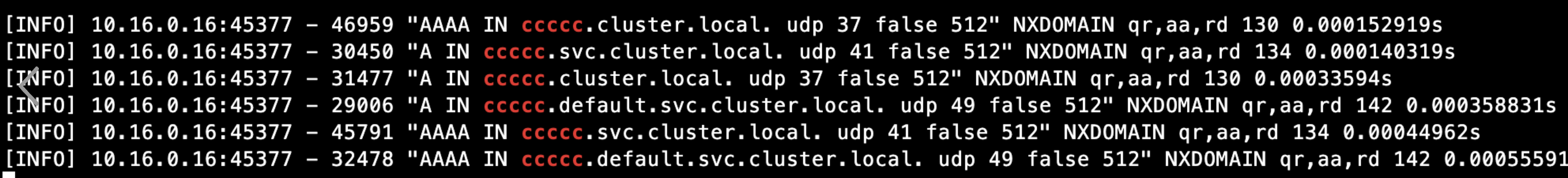

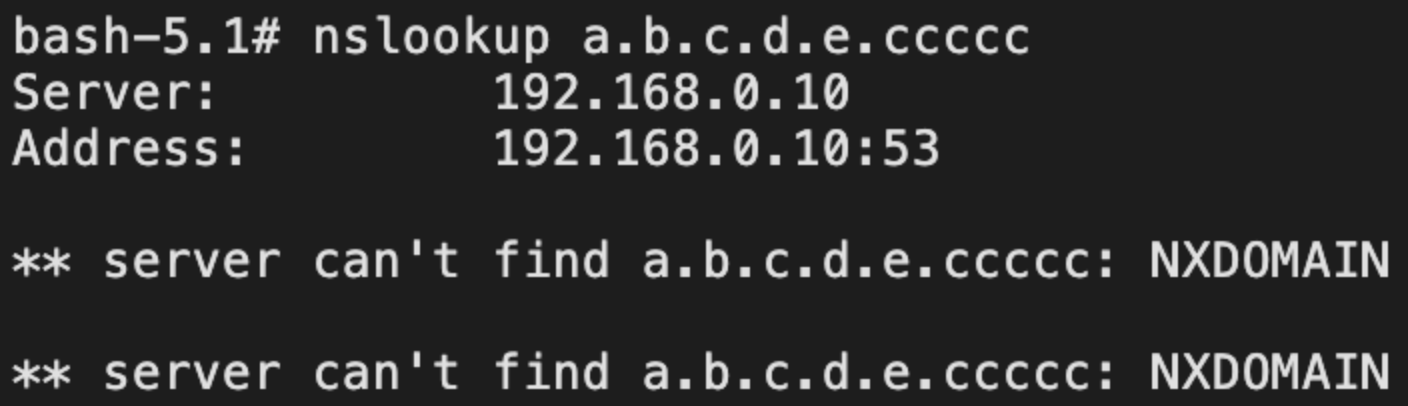

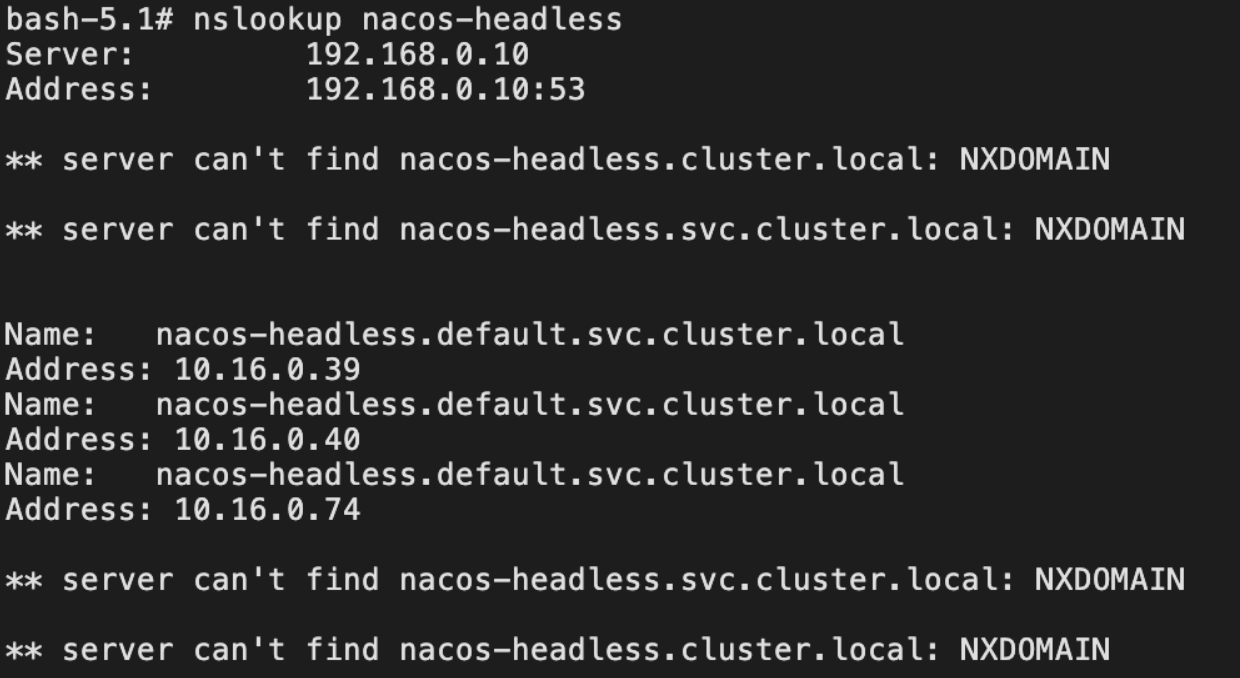

Putting the lookup results side by side with the CoreDNS logs shows the resolution process.

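The Pod's DNS policy is set in pod.spec.dnsPolicy. Two values are commonly used: the default, which applies the setup described above, and one where you specify the DNS configuration yourself in the Pod definition.

- ClusterFirst: the default policy. Names are resolved by the cluster's CoreDNS, and the nameserver in the Pod's /etc/resolv.conf is the cluster DNS server.

- None: ignores the cluster's DNS settings. The Pod uses the DNS configuration supplied in its dnsConfig field, which must be filled in when the Pod is created.

dnsConfig can also be combined with ClusterFirst, in which case its entries are merged into the generated configuration. The Deployment below uses this to lower ndots to 3: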

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tool
  labels:
    app: tool
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tool
  template:
    metadata:
      labels:
        app: tool
    spec:
      containers:
      - name: tool1
        image: itworker365/tools:latest
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: "500m"
        securityContext:
          privileged: true
      dnsConfig:
        options:
        - name: ndots
          value: '3'
      dnsPolicy: ClusterFirst
```

Inside the Pod, /etc/resolv.conf now shows ndots:3.
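A rough model of how the ClusterFirst settings and dnsConfig combine into /etc/resolv.conf is sketched below. This is not the kubelet's code. It assumes the cluster domain is cluster.local and the cluster DNS IP is the 192.168.0.10 seen above. It also ignores the node's own search domains, and render_resolv_conf is a hypothetical helper.

```python
from typing import Optional


def render_resolv_conf(namespace: str, cluster_dns: str,
                       options: Optional[list] = None,
                       cluster_domain: str = "cluster.local") -> str:
    """Approximate the resolv.conf of a ClusterFirst Pod (simplified model)."""
    # Option generated for ClusterFirst; dnsConfig entries override same-named ones.
    opts = {"ndots": "5"}
    for item in options or []:
        opts[item["name"]] = item.get("value")
    search = [f"{namespace}.svc.{cluster_domain}", f"svc.{cluster_domain}", cluster_domain]
    # Options without a value (e.g. "rotate") are written as a bare keyword.
    rendered = [name if value is None else f"{name}:{value}" for name, value in opts.items()]
    return "\n".join([
        f"nameserver {cluster_dns}",
        "search " + " ".join(search),
        "options " + " ".join(rendered),
    ]) + "\n"


if __name__ == "__main__":
    # dnsConfig from the "tool" Deployment above.
    print(render_resolv_conf("default", "192.168.0.10", [{"name": "ndots", "value": "3"}]))
```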

Service

ExternalName

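An ExternalName Service points at an external domain. The cluster DNS answers queries for the service name with a CNAME record for that domain. Clients get a stable service name for an external endpoint, and the extra layer of indirection makes the target easy to manage and switch.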

```yaml
apiVersion: v1
kind: Service
metadata:
  name: cnblogs
  namespace: default
spec:
  type: ExternalName
  externalName: www.cnblogs.com
```

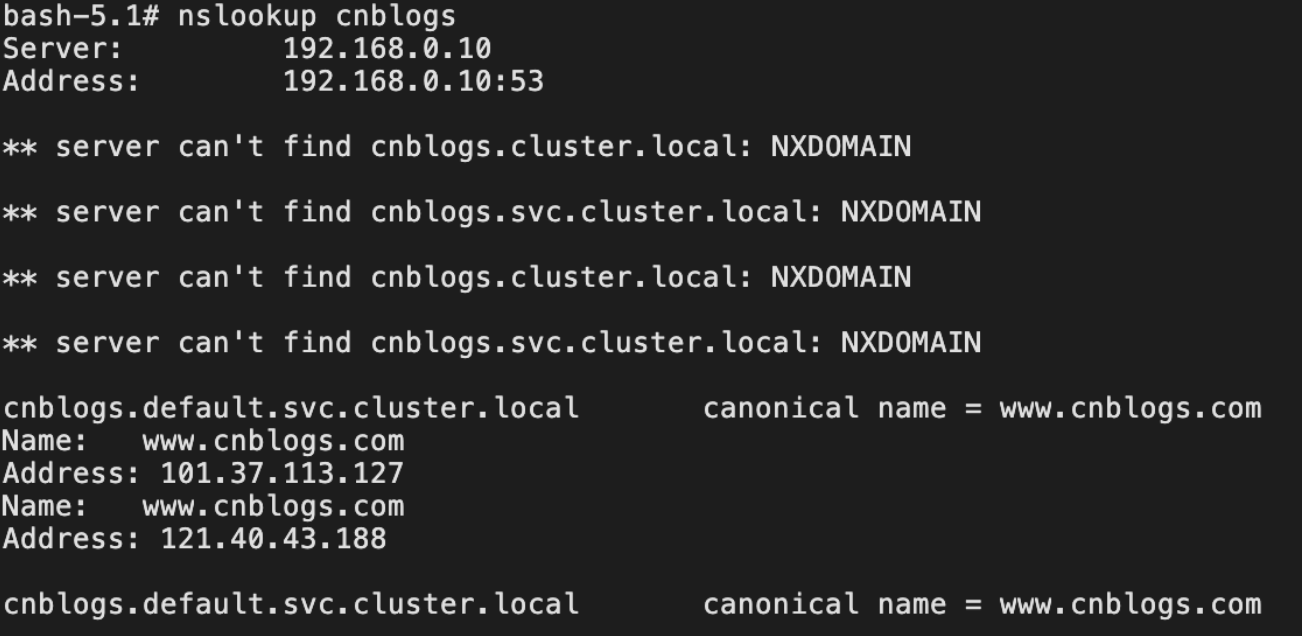

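Running nslookup from any Pod in the cluster shows that the internal service name is redirected to the external domain through a DNS CNAME; 192.168.0.10 is the DNS service address.

Running nslookup against the original domain directly returns no CNAME, and the name resolves normally.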
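The CNAME step can be made concrete with a toy in-memory resolver that follows CNAME chains the way the nslookup output above does. The record table is hypothetical, including the address 203.0.113.10, which is a documentation-only IP. It only models what an ExternalName Service contributes to cluster DNS.

```python
# Hypothetical record table: name -> (record type, value).
RECORDS = {
    # What an ExternalName Service contributes: a CNAME to the external domain.
    "cnblogs.default.svc.cluster.local.": ("CNAME", "www.cnblogs.com."),
    # Stand-in for the public DNS answer for the external domain.
    "www.cnblogs.com.": ("A", "203.0.113.10"),
}


def resolve(name: str, max_hops: int = 8) -> tuple:
    """Follow CNAMEs until an A record is found; return (chain, address)."""
    chain = [name]
    for _ in range(max_hops):
        rtype, value = RECORDS[name]  # KeyError plays the role of NXDOMAIN here
        if rtype == "A":
            return chain, value
        name = value  # CNAME: restart the lookup at the target name
        chain.append(name)
    raise RuntimeError("CNAME chain too long")
```

Resolving the service name yields a two-step chain, service name then www.cnblogs.com. Resolving www.cnblogs.com. directly yields a one-element chain, matching the second nslookup.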

Headless service

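Headless Services are typically used together with StatefulSets.

Take Nacos as an example. A Nacos cluster is a stateful set of Pods that must reach one another directly, so it uses a headless Service. The members talk to each other, including for leader election, through addresses of the form nacos-{num}.nacos-headless.default.svc.cluster.local:8848.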

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nacos-headless
  name: nacos-headless
  namespace: default
spec:
  clusterIP: None
  clusterIPs:
  - None
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - name: server
    port: 8848
    protocol: TCP
    targetPort: 8848
  - name: client-rpc
    port: 9848
    protocol: TCP
    targetPort: 9848
  - name: raft-rpc
    port: 9849
    protocol: TCP
    targetPort: 9849
  - name: old-raft-rpc
    port: 7848
    protocol: TCP
    targetPort: 7848
  selector:
    app: nacos
  sessionAffinity: None
  type: ClusterIP
```

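A headless Service does no load balancing. A normal Service name resolves to the Service's ClusterIP, whereas a headless Service name resolves to the addresses of all the Pods behind it.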

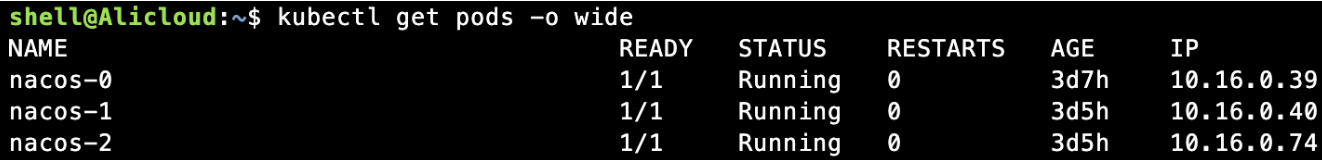

These are the three Pods. Each has its own DNS name, nacos-x.nacos-headless.default.svc.cluster.local, through which they can reach one another.
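The sketch below models two things: the difference between the two kinds of DNS answers, and the per-Pod names a StatefulSet gets through its headless Service. The Pod IPs and the ClusterIP are made-up examples, and both helpers are hypothetical. The per-Pod names assume the StatefulSet's serviceName is nacos-headless.

```python
def pod_fqdns(statefulset: str, service: str, namespace: str, replicas: int,
              domain: str = "cluster.local") -> list:
    """Stable per-Pod DNS names: <sts>-<ordinal>.<service>.<ns>.svc.<domain>."""
    return [f"{statefulset}-{i}.{service}.{namespace}.svc.{domain}" for i in range(replicas)]


def service_answer(cluster_ip, pod_ips: list) -> list:
    """A records returned for the Service name itself (simplified model)."""
    if cluster_ip is None:  # headless: clusterIP: None
        # One A record per ready Pod; sorted only to keep the output deterministic.
        return sorted(pod_ips)
    # Normal Service: a single virtual IP, load-balanced by kube-proxy.
    return [cluster_ip]


if __name__ == "__main__":
    pods = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]  # hypothetical Pod IPs
    print(pod_fqdns("nacos", "nacos-headless", "default", 3))
    print(service_answer(None, pods))
    print(service_answer("192.168.10.20", pods))  # hypothetical ClusterIP
```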

Service + Endpoint

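Endpoints objects are created automatically within the cluster to associate a Service with its Pods. An Endpoints object lists the addresses of all Pod replicas behind a Service. The Endpoints controller creates and maintains all Endpoints objects, watching Services and their Pod replicas for changes.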

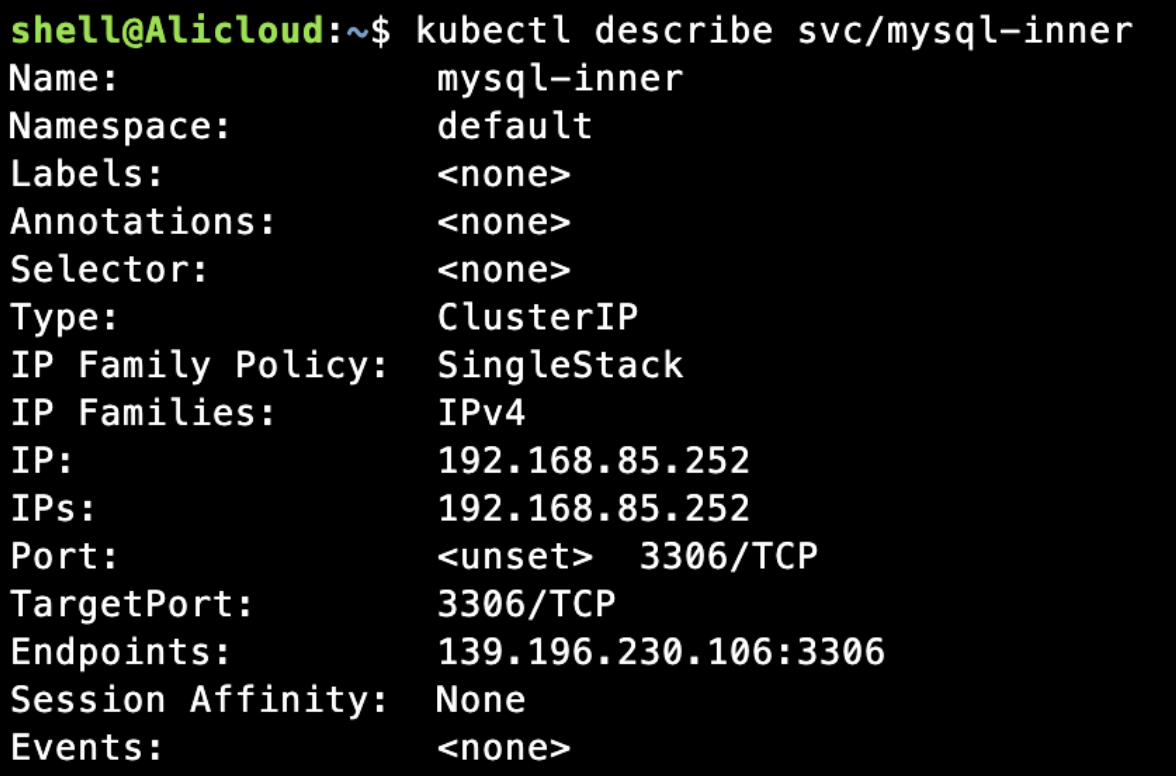
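The core of what the Endpoints controller described above computes can be sketched as a pure function. It selects the Pods whose labels contain the Service's selector and collects the IPs of the ready ones. This is a simplified model with no ports, no not-ready address list, and no EndpointSlices. The Pod dicts and helper names are hypothetical.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Equality-based label selector: every selector key must match."""
    return all(labels.get(k) == v for k, v in selector.items())


def endpoint_ips(selector: dict, pods: list):
    """IPs of ready Pods selected by the Service, or None if not controller-managed."""
    if not selector:
        # Selector-less Service: the controller does not create its Endpoints,
        # which is what allows them to be written by hand.
        return None
    return [p["ip"] for p in pods if p["ready"] and matches(selector, p["labels"])]
```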

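To give Pods a standard way to reach a service outside the cluster, you can create the Service and Endpoints by hand. This means a Service without a selector, plus an Endpoints object with the same name that lists the external address. Without Istio, this is a common way to unify calls to external services.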

```yaml
kind: Endpoints
apiVersion: v1
metadata:
  name: mysql-inner # must match the Service name
subsets:
- addresses:
  - ip: 139.196.230.106
  ports:
  - port: 3306
---
kind: Service
apiVersion: v1
metadata:
  name: mysql-inner
spec:
  # no selector: the Endpoints above are maintained by hand
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306
```

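Describing the Service shows that it is now served through the manually created Endpoints.

From inside the cluster, mysql -P 3306 -h mysql-inner -uroot -p reaches the MySQL data at 139.196.xxx:3306 outside the cluster.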
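If no mysql client is available in the Pod, a plain TCP connection is enough to check that the Service and the hand-written Endpoints forward traffic. This generic sketch uses Python's socket module. The host mysql-inner and port 3306 come from the example above, and can_connect is a hypothetical helper.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the name (via the Pod's resolv.conf) and connects.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # DNS failure, refused connection and timeout all end up here.
        return False


if __name__ == "__main__":
    print(can_connect("mysql-inner", 3306))
```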

Nginx Ingress

Creating the manifests

An IngressClass tells the cluster which controller handles an Ingress when several ingress controllers are installed. One class can be marked as the default.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
  name: nginx
  annotations:
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: k8s.io/ingress-nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: ingress-nginx
  name: nginx-ingress-controller
  namespace: kube-system
  annotations:
    component.version: "1.2.1"
    component.revision: "1"
spec:
  replicas: 2
  selector:
    matchLabels:
      app: ingress-nginx
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      hostNetwork: false
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - ingress-nginx
              topologyKey: "kubernetes.io/hostname"
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              # virtual nodes have this label
              - key: type
                operator: NotIn
                values:
                - virtual-kubelet
          preferredDuringSchedulingIgnoredDuringExecution:
          - preference:
              matchExpressions:
              # autoscaled nodes have this label
              - key: k8s.aliyun.com
                operator: NotIn
                values:
                - "true"
            weight: 100
      dnsPolicy: ClusterFirst
      nodeSelector:
        kubernetes.io/os: linux
      tolerations:
      serviceAccountName: ingress-nginx
      priorityClassName: system-node-critical
      terminationGracePeriodSeconds: 300
      initContainers:
      - name: init-sysctl
        image: registry-vpc.cn-shanghai.aliyuncs.com/acs/busybox:v1.29.2
        command:
        - /bin/sh
        - -c
        - |
          if [ "$POD_IP" != "$HOST_IP" ]; then
            mount -o remount rw /proc/sys
            sysctl -w net.core.somaxconn=65535
            sysctl -w net.ipv4.ip_local_port_range="1024 65535"
            sysctl -w kernel.core_uses_pid=0
          fi
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - SYS_ADMIN
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        - name: HOST_IP
          valueFrom:
            fieldRef:
              fieldPath: status.hostIP
      containers:
      - name: nginx-ingress-controller
        image: registry-vpc.cn-shanghai.aliyuncs.com/acs/aliyun-ingress-controller:v1.2.1-aliyun.1
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        args:
        - /nginx-ingress-controller
        - --election-id=ingress-controller-leader-nginx
        - --ingress-class=nginx
        - --watch-ingress-without-class
        - --controller-class=k8s.io/ingress-nginx
        - --configmap=$(POD_NAMESPACE)/nginx-configuration
        - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
        - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
        - --annotations-prefix=nginx.ingress.kubernetes.io
        - --publish-service=$(POD_NAMESPACE)/nginx-ingress-lb
        - --v=2
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
          runAsUser: 101
          allowPrivilegeEscalation: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: LD_PRELOAD
          value: /usr/local/lib/libmimalloc.so
        livenessProbe:
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 1
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 1
          successThreshold: 1
          failureThreshold: 3
        ports:
        - name: http
          containerPort: 80
          protocol: TCP
        - name: https
          containerPort: 443
          protocol: TCP
        volumeMounts:
        - name: localtime
          mountPath: /etc/localtime
          readOnly: true
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
      volumes:
      - name: localtime
        hostPath:
          path: /etc/localtime
          type: File
```

External access

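External access goes through the cloud provider's LoadBalancer product. Taking Alibaba Cloud as the example, its nginx-ingress-controller is a customized build, and the component template carries the LB specification and load-balancer parameters as annotations: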

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-lb
  namespace: kube-system
  labels:
    app: nginx-ingress-lb
  annotations:
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-connection-drain: "on"
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-connection-drain-timeout: "240"
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-instance-charge-type: "PayBySpec" # billing method
    service.beta.kubernetes.io/alibaba-cloud-loadbalancer-resource-group-id: "rg-acfmzdiehkmwgxx" # resource group
spec:
  type: LoadBalancer
  externalTrafficPolicy: "Local"
  ipFamilyPolicy: SingleStack
  ports:
  - port: 80
    name: http
    targetPort: 80
  - port: 443
    name: https
    targetPort: 443
  selector:
    app: ingress-nginx
```

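Once the component is created, an LB is provisioned that listens on ports 80/443 and forwards to the nginx-ingress-lb Service, whose backends are the nginx-ingress-controller Pods. The cluster's nginx-ingress-controller is then reachable at the public IP 47.117.65.242.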

Binding and applying routing rules

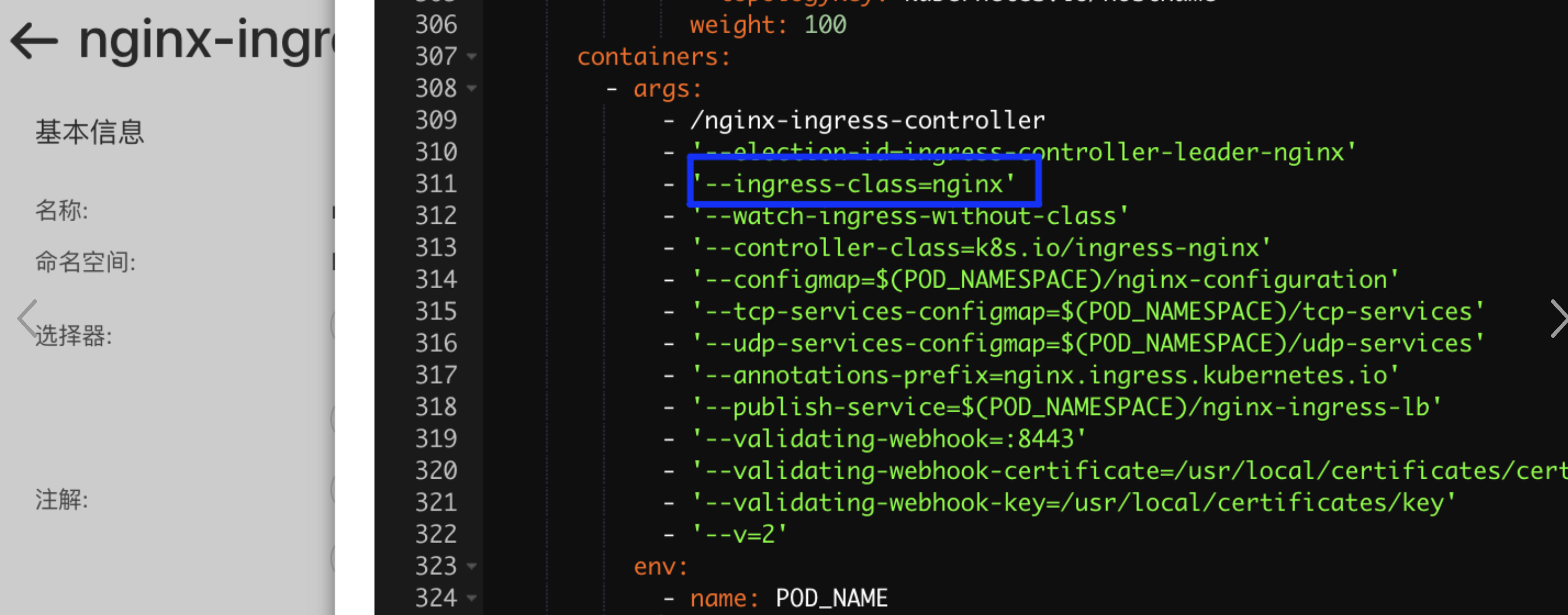

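Deploy nginx-ingress-controller with the startup argument --ingress-class=nginx.

Then add routing rules. The annotation kubernetes.io/ingress.class: nginx binds the Ingress rules to this nginx-ingress-controller. In networking.k8s.io/v1 this annotation is deprecated in favor of spec.ingressClassName: nginx.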

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: sptest
  namespace: default
spec:
  rules:
  - http:
      paths:
      - backend:
          service:
            name: sptest
            port:
              number: 6666
        path: /sleep
        pathType: Exact
```
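The sketch below approximates how a controller started with --ingress-class=nginx and --watch-ingress-without-class decides whether an Ingress such as sptest belongs to it. It is a simplified model, not the ingress-nginx source. It assumes the class name nginx and skips the lookup from IngressClass to controller value, and is_handled is a hypothetical helper.

```python
CLASS_ANNOTATION = "kubernetes.io/ingress.class"


def is_handled(ingress: dict, class_name: str = "nginx",
               watch_without_class: bool = True) -> bool:
    """Simplified model of an ingress controller's class filter."""
    spec_class = ingress.get("spec", {}).get("ingressClassName")
    annotations = ingress.get("metadata", {}).get("annotations") or {}
    ann_class = annotations.get(CLASS_ANNOTATION)
    if spec_class is not None:
        return spec_class == class_name
    if ann_class is not None:
        # Legacy annotation, as used by the sptest Ingress above.
        return ann_class == class_name
    # No class at all: only picked up with --watch-ingress-without-class.
    return watch_without_class
```

When an IngressClass is marked is-default-class, the API server's default-class admission fills in spec.ingressClassName on new Ingresses that set neither the field nor the annotation. The last branch therefore mostly matters for objects created without a default class.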

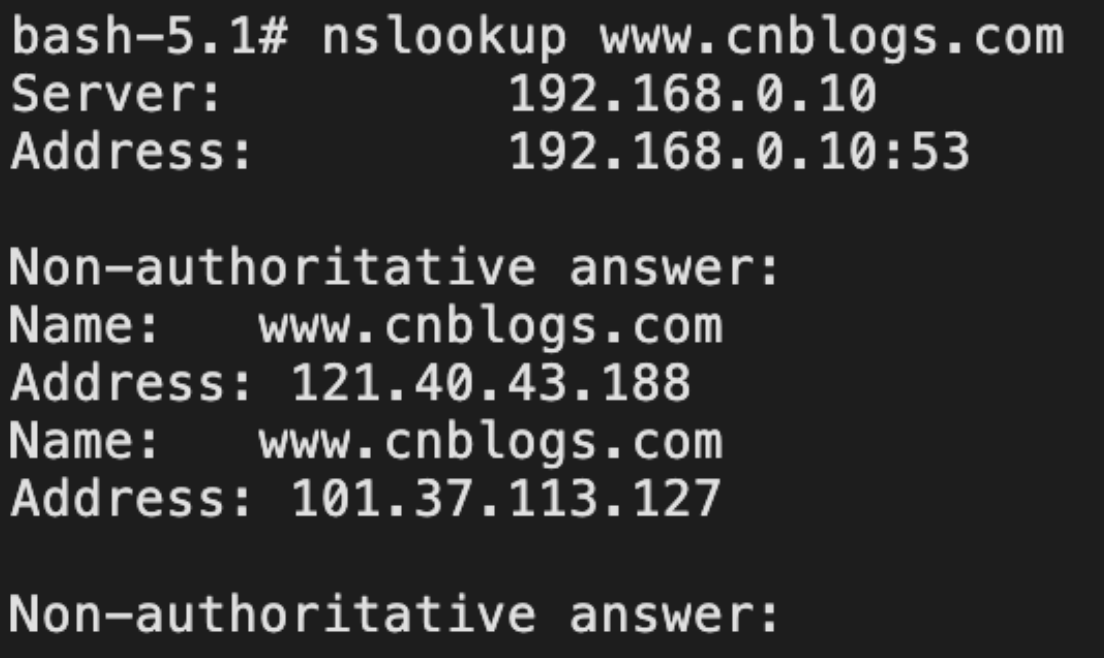

The service itself serves /sleep?sleep=1. Following the routing rule, a request through the LB to http://47.117.65.xxx/sleep?sleep=1 gets the sptest service's response.
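A request for /sleep?sleep=1 matches a rule with path /sleep and pathType: Exact because the query string is not part of the path. The sketch below covers the two portable Ingress path types, Exact and Prefix, following the matching rules of the Ingress API. The ImplementationSpecific type is left out, and path_matches is a hypothetical helper.

```python
from urllib.parse import urlsplit


def path_matches(path_type: str, rule_path: str, url: str) -> bool:
    """Match a request URL against one Ingress path rule (Exact or Prefix)."""
    path = urlsplit(url).path  # the query string (?sleep=1) is dropped here
    if path_type == "Exact":
        return path == rule_path  # case-sensitive, whole path
    if path_type == "Prefix":
        # Compare '/'-separated elements; trailing slashes are ignored.
        rule = [p for p in rule_path.split("/") if p]
        req = [p for p in path.split("/") if p]
        return req[:len(rule)] == rule
    raise ValueError(f"unsupported pathType: {path_type}")
```

Element-wise comparison is what makes Prefix /foo/bar match /foo/bar/baz but not /foo/barbaz.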

The nginx-ingress-controller logs show the corresponding request entry, confirming that the request was routed to the backend service.

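Because nginx-ingress-controller builds its configuration from templates plus dynamic Lua, the routes cannot be read from the configuration file alone. With the non-customized controller, they can be inspected through the controller's dbg command, or with tools installed via krew such as the ingress-nginx kubectl plugin: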

kubectl ingress-nginx backends...

Domain name resolution

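So far everything has been reached through the LB's public IP. To use a domain name instead, you register a domain and configure DNS resolution for it, for example with an A record that maps the domain to the LB's IP address. Once the record takes effect, a request for the domain is resolved by DNS to that IP (or to another target).

Other record types can be used as well. The most common are A records, which point to an IP address, and CNAME records, which point to another domain name.

It is worth spelling out the underlying difference between nginx-ingress and the Istio gateway. nginx-ingress is nginx plus Lua, and traffic is ultimately forwarded by nginx. The Istio gateway is not built on nginx at all and manages traffic purely with Envoy.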

Istio Gateway

Ingress gateway

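The LB setup for istio-ingressgateway is almost the same as in the previous section. The association with the LB shows up in the label service.k8s.alibaba/loadbalancer-id: lb-uf6vik4l73wtfp588gxxx.

In short: a VirtualService defines routing rules, a Gateway defines access rules, and istio-ingressgateway enforces both.

Put another way, Gateway and VirtualService resources are configured onto the IngressGateway Pods:

- A Gateway configures ports, protocols, and related TLS certificates.
- A VirtualService configures the routing used to find the right service.
- The Istio IngressGateway Pod routes each request to the corresponding application service according to that configuration.

Create a Gateway, which defines how the service is reached from outside and provides a single place to govern traffic at the entry point. Its selector binds the Gateway to the Pods labeled app: istio-ingressgateway: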

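```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: sptest-gateway
  # same namespace as the VirtualService that refers to this Gateway by its short name
  namespace: istio-system
spec:
  selector:
    app: istio-ingressgateway # istio: ingressgateway also works
  servers:
  - hosts:
    - '*'
    port:
      name: http
      number: 80
      protocol: HTTP
```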

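Create a VirtualService, which defines how matched requests flow to internal services. The gateways field binds the VirtualService to the Gateway, so standard Istio rules control the HTTP and TCP traffic entering the Gateway: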

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: sptestvs
  namespace: istio-system
spec:
  hosts:
  - "*"
  gateways:
  - sptest-gateway
  http:
  - match:
    - uri:
        exact: /sleep
    route:
    - destination:
        host: sptest.default.svc.cluster.local
        port:
          number: 6666
```

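Once the rules are applied, a request to http://106.15.5.xxx/sleep?sleep=1 is routed by them immediately. That address is the public IP of the LB registered for istio-ingressgateway. The same pattern extends to many other combinations and policies.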
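Istio evaluates a VirtualService's http routes in order, and the first match wins. The sketch below models that evaluation for exact and prefix URI matches, using the route from sptestvs. It is a model for reasoning about rule order, not Envoy's implementation. It only returns the first destination of a route (weights are ignored), and first_matching_route is a hypothetical helper.

```python
from urllib.parse import urlsplit

# http routes from the sptestvs VirtualService, as plain dicts.
HTTP_ROUTES = [
    {"match": [{"uri": {"exact": "/sleep"}}],
     "route": [{"destination": {"host": "sptest.default.svc.cluster.local",
                                "port": {"number": 6666}}}]},
]


def uri_matches(cond: dict, path: str) -> bool:
    if not cond:
        return True  # no uri condition in this match entry
    if "exact" in cond:
        return path == cond["exact"]
    if "prefix" in cond:
        return path.startswith(cond["prefix"])  # plain string prefix
    raise ValueError(f"unsupported uri match: {cond}")


def first_matching_route(routes: list, url: str):
    """Destination of the first route whose match list hits, else None."""
    path = urlsplit(url).path  # the query string is not part of the uri match
    for rule in routes:
        conditions = rule.get("match")
        # No match block matches everything; several entries are ORed together.
        if not conditions or any(uri_matches(c.get("uri", {}), path) for c in conditions):
            return rule["route"][0]["destination"]
    return None  # nothing matched; the gateway would typically answer 404
```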

Egress gateway

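- All traffic leaving the service mesh must pass through a dedicated set of nodes that are subject to special monitoring and auditing.

- Applications in the cluster are allowed to reach external services in a controlled way.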

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: istio-egressgateway
spec:
  selector:
    istio: egressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - '*'
```

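Here the rules are bound through gateways to both the egress gateway and mesh.

The gateways field defines which source traffic the routing rules apply to: one or more gateways, the sidecars inside the mesh, or both. The reserved name mesh stands for all sidecars in the mesh. Listing it makes the rules apply to every sidecar and steer outbound traffic to the given host/subset/port. When the field is omitted it defaults to mesh, so the rules apply to traffic from all sidecars in the mesh.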
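The gateways semantics above fit in a few lines. The sketch assumes two things. First, an unqualified gateway name is qualified with the VirtualService's own namespace, which is how Istio treats such names. Second, a VirtualService without metadata.namespace lives in default. applies_to is a hypothetical helper.

```python
def applies_to(vs: dict, source: str) -> bool:
    """Does this VirtualService apply to traffic from `source`?

    source is "mesh" for sidecars, or "<namespace>/<gateway>" for a gateway.
    """
    namespace = vs["metadata"].get("namespace", "default")  # assumption: default
    gateways = vs["spec"].get("gateways") or ["mesh"]  # omitted -> mesh only
    qualified = {g if g == "mesh" or "/" in g else f"{namespace}/{g}" for g in gateways}
    return source in qualified
```

Once gateways is set explicitly, sidecars are no longer included unless mesh is listed. That is why test-egress-gateway lists both.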

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: test-egress-gateway
spec:
  gateways:
  - istio-egressgateway
  - mesh
  hosts:
  - www.baidu.com
  http:
  - match:
    - port: 80
      uri:
        exact: /baidu
    rewrite:
      uri: /
    route:
    - destination:
        host: www.baidu.com
        port:
          number: 80
      weight: 100
  - match:
    - port: 80
      uri:
        exact: /sleep
    route:
    - destination:
        host: sptest.default.svc.cluster.local
        port:
          number: 6666
      weight: 100
```

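Running curl -i -H 'Host: www.baidu.com' istio-egressgateway.istio-system.svc.cluster.local/baidu reaches Baidu through the egress gateway.

Running curl -i -H 'Host: www.baidu.com' 'istio-egressgateway.istio-system.svc.cluster.local/sleep?sleep=1' reaches the sptest service through the egress gateway. The URL is quoted so that the shell does not treat ? as a glob.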
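These curl commands work because the TCP connection goes to the egress gateway's Service, while the Host header decides which VirtualService host the request matches. The sketch below sends the same request with Python's standard library. It only reaches the gateway from inside a meshed Pod, and send_via_gateway is a hypothetical helper.

```python
import http.client


def send_via_gateway(gateway: str, path: str, host_header: str, port: int = 80) -> tuple:
    """Connect to `gateway` but present `host_header`, like curl -H 'Host: ...'."""
    conn = http.client.HTTPConnection(gateway, port, timeout=5)
    try:
        # An explicit Host header replaces the one http.client would derive from `gateway`.
        conn.request("GET", path, headers={"Host": host_header})
        resp = conn.getresponse()
        return resp.status, resp.read()
    finally:
        conn.close()


if __name__ == "__main__":
    gw = "istio-egressgateway.istio-system.svc.cluster.local"
    print(send_via_gateway(gw, "/baidu", "www.baidu.com")[0])
    print(send_via_gateway(gw, "/sleep?sleep=1", "www.baidu.com")[0])
```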

ServiceEntry

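ServiceEntry handles the mesh's communication with the outside world by bringing external services into the Istio mesh; here it wraps access to an external MySQL. A ServiceEntry can be combined with VirtualService and DestinationRule for finer-grained access and traffic control. It can also make use of other Istio features such as fault injection and retries.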

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: mysqldb
  namespace: default
spec:
  endpoints:
  - address: 139.196.230.106
    labels:
      version: v1
    ports:
      tcp: 3306
  - address: 139.196.230.106
    labels:
      version: v2
    ports:
      tcp: 3306
  hosts:
  - mysqldb.svc.remote
  location: MESH_EXTERNAL
  ports:
  - name: tcp
    number: 3306
    protocol: TCP
  resolution: STATIC
```

DestinationRule

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: mysqldb
  namespace: default
spec:
  host: mysqldb.svc.remote
  subsets:
  - labels:
      version: v1
    name: v1
  - labels:
      version: v2
    name: v2
```

VirtualService

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: mysqldb
  namespace: default
spec:
  hosts:
  - mysqldb.svc.remote
  tcp:
  - route:
    - destination:
        host: mysqldb.svc.remote
        subset: v1
```

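You can then reach the external database with mysql -P 3306 -h mysqldb.svc.remote mysql -uroot -p, where the trailing mysql is the database name. With the VirtualService above, all of this TCP traffic goes to the v1 subset. The benefit is that Istio's additional capabilities become available.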
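The ServiceEntry, DestinationRule, and VirtualService above fit together as follows:

- The VirtualService names a subset.
- The DestinationRule maps that subset name to labels.
- Only ServiceEntry endpoints carrying those labels are eligible.

The sketch below performs that lookup with the mysqldb objects as plain dicts. It is a model, not Istio code, and endpoints_for_subset is a hypothetical helper.

```python
# Endpoints and subsets copied from the mysqldb ServiceEntry and DestinationRule.
SERVICE_ENTRY_ENDPOINTS = [
    {"address": "139.196.230.106", "labels": {"version": "v1"}},
    {"address": "139.196.230.106", "labels": {"version": "v2"}},
]
DESTINATION_RULE_SUBSETS = {"v1": {"version": "v1"}, "v2": {"version": "v2"}}


def endpoints_for_subset(subset: str) -> list:
    """Endpoints whose labels contain every label of the named subset."""
    wanted = DESTINATION_RULE_SUBSETS[subset]
    return [ep for ep in SERVICE_ENTRY_ENDPOINTS
            if all(ep["labels"].get(k) == v for k, v in wanted.items())]
```

In the original example both endpoints share one address, so switching subset: v1 to v2 changes only which labeled endpoint is used. With two different MySQL instances, the same one-line change in the VirtualService would move the traffic between them.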

A VM can also be brought into the mesh as a backend. Define a ServiceEntry whose workloadSelector selects WorkloadEntry objects, each of which points to a VM.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: vmentry
  namespace: default
spec:
  hosts:
  - xxx.example.com
  location: MESH_INTERNAL
  ports:
  - name: http
    number: 7777
    protocol: HTTP
  resolution: STATIC
  workloadSelector:
    labels:
      app: vm-deploy
```

The WorkloadEntry declares the VM workload; here 139.196.230.xxx is a cloud host.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: WorkloadEntry
metadata:
  name: vm204
  namespace: default
spec:
  address: 139.196.230.xxx
  labels:
    app: vm-deploy
    class: vm
    version: v1
```

Once this is in place, enter any Pod and add an arbitrary entry of the form "ip xxx.example.com" to /etc/hosts. Running curl xxx.example.com:7777/{path} then reaches the corresponding service on the VM.
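A ServiceEntry's workloadSelector works like a Service selector, but it also covers WorkloadEntry objects, which must live in the same namespace as the ServiceEntry. The sketch below shows, in simplified form, how vmentry picks up vm204. The helper name and dict shapes are hypothetical, and the address stays elided as in the example.

```python
# The vm204 WorkloadEntry above, as a plain dict.
WORKLOAD_ENTRIES = [
    {"name": "vm204", "namespace": "default", "address": "139.196.230.xxx",
     "labels": {"app": "vm-deploy", "class": "vm", "version": "v1"}},
]


def selected_workloads(namespace: str, selector: dict, entries: list) -> list:
    """Addresses of WorkloadEntries in `namespace` whose labels include `selector`."""
    return [e["address"] for e in entries
            if e["namespace"] == namespace
            and all(e["labels"].get(k) == v for k, v in selector.items())]
```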

浙公网安备 33010602011771号