Challenges in making Nginx dynamic

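1. Nginx route matching is built on static structures: a prefix trie, hash tables, and an array of regular expressions. Once a server_name or location changes, the new configuration cannot take effect without a reload.

2. With one master and N worker processes, how can every process obtain the configuration in sync?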
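A minimal Lua sketch of the alternative to Nginx's static matching: keep the route table in Lua memory and rebuild it whenever the configuration version changes, so a new route takes effect without a reload. `Router`, `sync` and `match` are hypothetical names. APISIX itself matches with lua-resty-radixtree (see `router.http: radixtree_uri` in config-default.yaml); this sketch swaps in a plain longest-prefix scan for brevity.

```lua
-- Hypothetical in-memory router: rebuilt on config version change, no reload needed.
local Router = {}
Router.__index = Router

function Router.new()
  -- version = nil means "nothing loaded yet"
  return setmetatable({ version = nil, sorted = {} }, Router)
end

-- Rebuild the matcher only when the route set's version has changed.
-- Returns true if a rebuild happened.
function Router:sync(routes, version)
  if version == self.version then
    return false
  end
  local sorted = {}
  for i, r in ipairs(routes) do
    sorted[i] = r
  end
  -- Longest prefix first, so "/api/v1" is tried before "/api".
  table.sort(sorted, function(a, b) return #a.prefix > #b.prefix end)
  self.sorted, self.version = sorted, version
  return true
end

-- Return the upstream of the first route whose host (if set) and URI prefix match.
function Router:match(host, uri)
  for _, r in ipairs(self.sorted) do
    if (r.host == nil or r.host == host)
       and uri:sub(1, #r.prefix) == r.prefix then
      return r.upstream
    end
  end
  return nil
end
```

The swap is a single table assignment, so in-flight lookups in the same worker see either the old or the new table, never a half-built one.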

The following covers only the APISIX family and the Kubernetes ingress family.

apisix:

From: https://cloud.tencent.com/developer/article/1861296

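```text
.
├── apisix              # APISIX Lua source
│   ├── admin           # APISIX Admin API
│   ├── balancer        # upstream load balancing
│   ├── cli             # command line: config initialization, starting/stopping APISIX, etc.
│   ├── control         # Control API
│   ├── core            # configuration, etcd interaction, request parsing, etc.
│   ├── discovery       # service discovery
│   ├── http            # route matching, etc.
│   ├── plugins         # built-in plugins
│   ├── ssl             # SSL/SNI related
│   ├── stream          # stream (TCP/UDP proxy) processing, including some stream plugins
│   └── utils
...
```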

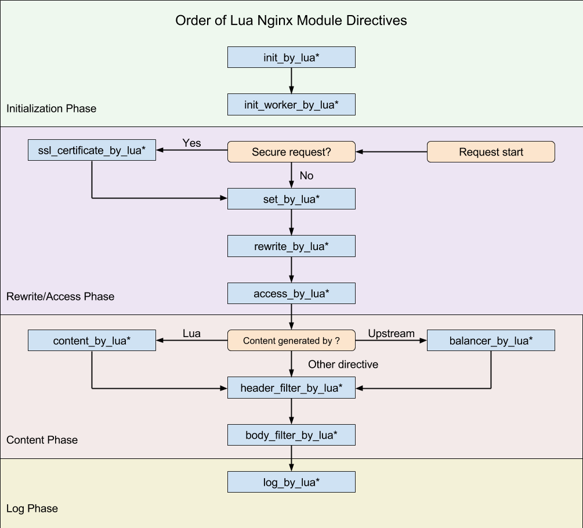

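Execution hooks

APISIX uses only 8 of these hooks (note that APISIX does not use set_by_lua or rewrite_by_lua; the "rewrite" phase of its plugins is defined by APISIX itself and has nothing to do with Nginx's rewrite phase):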

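init_by_lua: initialization when the master process starts;

init_worker_by_lua: initialization when each worker process starts (including the privileged agent process, which is key to calling plugins written in Java and other languages over remote RPC);

ssl_certificate_by_lua: OpenSSL provides a hook during the TLS handshake, which OpenResty exposes to Lua by patching the Nginx source;

access_by_lua: runs after the downstream HTTP request headers have been received; this is where routing rules such as Host, URI and Method are matched, and where the plugins attached to the Service and Upstream, as well as the upstream server, are selected;

balancer_by_lua: every reverse-proxy module that runs in the content phase calls back into the upstream's load-balancing hook (installed through the init_upstream callback) when it selects an upstream server; OpenResty exposes this hook to Lua as balancer_by_lua;

header_filter_by_lua: runs before the HTTP response headers are sent downstream;

body_filter_by_lua: runs before the HTTP response body is sent downstream;

log_by_lua: runs when the access log is written.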
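Since plugin "rewrite" is an APISIX-level phase rather than Nginx's, one way to picture it is a hedged sketch like the following: inside the single access_by_lua handler, run every plugin's `rewrite` function, then every plugin's `access` function, in descending priority order. The priorities in the usage below come from the plugin list in config-default.yaml; the plugin table layout and `run_access_phase` are assumptions, not APISIX's actual API.

```lua
-- Hypothetical plugin shape: { name = ..., priority = n, rewrite = fn?, access = fn? }.
-- A handler returning a status code short-circuits the request (e.g. 401, 429).
local function run_access_phase(plugins, ctx)
  local sorted = {}
  for i, p in ipairs(plugins) do
    sorted[i] = p
  end
  -- Higher priority runs first, as in the config-default.yaml plugin list.
  table.sort(sorted, function(a, b) return a.priority > b.priority end)

  -- Both "phases" run inside the one Nginx access phase.
  for _, phase in ipairs({ "rewrite", "access" }) do
    for _, p in ipairs(sorted) do
      local handler = p[phase]
      if handler then
        local code = handler(ctx)
        if code then
          return code
        end
      end
    end
  end
  return nil -- request continues to balancer_by_lua / proxy_pass
end
```

Example: with cors (4000), key-auth (2500) and limit-count (1002), all `rewrite` handlers run before any `access` handler, regardless of priority.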

Lua timers and lua-resty-etcd
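A sketch of the idea behind this heading, under simplified etcd watch semantics and with a hypothetical in-memory `Store` standing in for etcd: each worker keeps its own copy of the config plus the last revision it applied, and a timer periodically asks for events newer than that revision. No worker depends on the master, which is one answer to the master/worker sync difficulty; the cost is that workers converge independently and may briefly disagree. The key `/apisix/routes/1` is illustrative, built from the `etcd.prefix: /apisix` default.

```lua
-- Hypothetical stand-in for etcd: a global revision counter plus an event log.
local Store = { revision = 0, events = {} }

function Store.put(key, value)
  Store.revision = Store.revision + 1
  Store.events[#Store.events + 1] = { rev = Store.revision, key = key, value = value }
end

-- Return every event newer than `since` (a simplified etcd watch).
function Store.watch(since)
  local out = {}
  for _, ev in ipairs(Store.events) do
    if ev.rev > since then
      out[#out + 1] = ev
    end
  end
  return out
end

-- Each worker owns its config copy and the last revision it has applied.
local function new_worker(id)
  local w = { id = id, revision = 0, conf = {} }
  -- In OpenResty this would be scheduled from init_worker_by_lua with
  -- ngx.timer.at / ngx.timer.every; here it is called by hand.
  function w.tick()
    for _, ev in ipairs(Store.watch(w.revision)) do
      w.conf[ev.key] = ev.value
      w.revision = ev.rev
    end
  end
  return w
end
```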

Where does the Nginx configuration come from?

apisix/cli/ngx_tpl.lua: the configuration template
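The template uses lua-resty-template syntax, which, as far as these notes can tell, APISIX's CLI renders: `{% ... %}` runs Lua code and `{* expr *}` writes the value of `expr` unescaped. The sketch below (hypothetical `render` and `lookup` helpers) handles only the `{* a.b.c *}` form with dotted paths. That is enough to see how values such as `worker_processes` and `event.worker_connections` from the YAML config end up in nginx.conf.

```lua
-- Resolve a dotted path such as "event.worker_connections" in a nested table.
local function lookup(env, path)
  local v = env
  for part in path:gmatch("[^%.]+") do
    if type(v) ~= "table" then
      return nil
    end
    v = v[part]
  end
  return v
end

-- Replace every {* dotted.path *} with its value; fail loudly on unset variables.
-- Unlike lua-resty-template, no {% %} code blocks or general expressions.
local function render(tpl, env)
  return (tpl:gsub("{%*%s*([%w_%.]+)%s*%*}", function(path)
    local v = lookup(env, path)
    if v == nil then
      error("template variable not set: " .. path)
    end
    return tostring(v)
  end))
end
```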

```lua
--
-- Licensed to the Apache Software Foundation (ASF) under one or more
-- contributor license agreements. See the NOTICE file distributed with
-- this work for additional information regarding copyright ownership.
-- The ASF licenses this file to You under the Apache License, Version 2.0
-- (the "License"); you may not use this file except in compliance with
-- the License. You may obtain a copy of the License at
--
--     http://www.apache.org/licenses/LICENSE-2.0
--
-- Unless required by applicable law or agreed to in writing, software
-- distributed under the License is distributed on an "AS IS" BASIS,
-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
-- See the License for the specific language governing permissions and
-- limitations under the License.
--

return [=[
# Configuration File - Nginx Server Configs
# This is a read-only file, do not try to modify it.
{% if user and user ~= '' then %}
user {* user *};
{% end %}
master_process on;

worker_processes {* worker_processes *};
{% if os_name == "Linux" and enable_cpu_affinity == true then %}
worker_cpu_affinity auto;
{% end %}

# main configuration snippet starts
{% if main_configuration_snippet then %}
{* main_configuration_snippet *}
{% end %}
# main configuration snippet ends

error_log {* error_log *} {* error_log_level or "warn" *};
pid logs/nginx.pid;

worker_rlimit_nofile {* worker_rlimit_nofile *};

events {
    accept_mutex off;
    worker_connections {* event.worker_connections *};
}

worker_rlimit_core {* worker_rlimit_core *};

worker_shutdown_timeout {* worker_shutdown_timeout *};

env APISIX_PROFILE;
env PATH; # for searching external plugin runner's binary

{% if envs then %}
{% for _, name in ipairs(envs) do %}
env {*name*};
{% end %}
{% end %}

{% if stream_proxy then %}
stream {
    lua_package_path "{*extra_lua_path*}$prefix/deps/share/lua/5.1/?.lua;$prefix/deps/share/lua/5.1/?/init.lua;]=] ..
                      [=[{*apisix_lua_home*}/?.lua;{*apisix_lua_home*}/?/init.lua;;{*lua_path*};";
    lua_package_cpath "{*extra_lua_cpath*}$prefix/deps/lib64/lua/5.1/?.so;]=] ..
                      [=[$prefix/deps/lib/lua/5.1/?.so;;]=] ..
                      [=[{*lua_cpath*};";
    lua_socket_log_errors off;

    lua_shared_dict lrucache-lock-stream {* stream.lua_shared_dict["lrucache-lock-stream"] *};
    lua_shared_dict plugin-limit-conn-stream {* stream.lua_shared_dict["plugin-limit-conn-stream"] *};
    lua_shared_dict etcd-cluster-health-check-stream {* stream.lua_shared_dict["etcd-cluster-health-check-stream"] *};

    resolver {% for _, dns_addr in ipairs(dns_resolver or {}) do %} {*dns_addr*} {% end %} {% if dns_resolver_valid then %} valid={*dns_resolver_valid*}{% end %};
    resolver_timeout {*resolver_timeout*};

    {% if ssl.ssl_trusted_certificate ~= nil then %}
    lua_ssl_trusted_certificate {* ssl.ssl_trusted_certificate *};
    {% end %}

    # stream configuration snippet starts
    {% if stream_configuration_snippet then %}
    {* stream_configuration_snippet *}
    {% end %}
    # stream configuration snippet ends

    upstream apisix_backend {
        server 127.0.0.1:80;
        balancer_by_lua_block {
            apisix.stream_balancer_phase()
        }
    }

    init_by_lua_block {
        require "resty.core"
        apisix = require("apisix")
        local dns_resolver = { {% for _, dns_addr in ipairs(dns_resolver or {}) do %} "{*dns_addr*}", {% end %} }
        local args = {
            dns_resolver = dns_resolver,
        }
        apisix.stream_init(args)
    }

    init_worker_by_lua_block {
        apisix.stream_init_worker()
    }

    server {
        {% for _, item in ipairs(stream_proxy.tcp or {}) do %}
        listen {*item.addr*} {% if item.tls then %} ssl {% end %} {% if enable_reuseport then %} reuseport {% end %} {% if proxy_protocol and proxy_protocol.enable_tcp_pp then %} proxy_protocol {% end %};
        {% end %}
        {% for _, addr in ipairs(stream_proxy.udp or {}) do %}
        listen {*addr*} udp {% if enable_reuseport then %} reuseport {% end %};
        {% end %}

        {% if tcp_enable_ssl then %}
        ssl_certificate {* ssl.ssl_cert *};
        ssl_certificate_key {* ssl.ssl_cert_key *};

        ssl_certificate_by_lua_block {
            apisix.stream_ssl_phase()
        }
        {% end %}

        {% if proxy_protocol and proxy_protocol.enable_tcp_pp_to_upstream then %}
        proxy_protocol on;
        {% end %}

        preread_by_lua_block {
            apisix.stream_preread_phase()
        }

        proxy_pass apisix_backend;

        log_by_lua_block {
            apisix.stream_log_phase()
        }
    }
}
{% end %}

{% if not (stream_proxy and stream_proxy.only ~= false) then %}
http {
    # put extra_lua_path in front of the builtin path
    # so user can override the source code
    lua_package_path "{*extra_lua_path*}$prefix/deps/share/lua/5.1/?.lua;$prefix/deps/share/lua/5.1/?/init.lua;]=] ..
                      [=[{*apisix_lua_home*}/?.lua;{*apisix_lua_home*}/?/init.lua;;{*lua_path*};";
    lua_package_cpath "{*extra_lua_cpath*}$prefix/deps/lib64/lua/5.1/?.so;]=] ..
                      [=[$prefix/deps/lib/lua/5.1/?.so;;]=] ..
                      [=[{*lua_cpath*};";

    lua_shared_dict internal-status {* http.lua_shared_dict["internal-status"] *};
    lua_shared_dict plugin-limit-req {* http.lua_shared_dict["plugin-limit-req"] *};
    lua_shared_dict plugin-limit-count {* http.lua_shared_dict["plugin-limit-count"] *};
    lua_shared_dict prometheus-metrics {* http.lua_shared_dict["prometheus-metrics"] *};
    lua_shared_dict plugin-limit-conn {* http.lua_shared_dict["plugin-limit-conn"] *};
    lua_shared_dict upstream-healthcheck {* http.lua_shared_dict["upstream-healthcheck"] *};
    lua_shared_dict worker-events {* http.lua_shared_dict["worker-events"] *};
    lua_shared_dict lrucache-lock {* http.lua_shared_dict["lrucache-lock"] *};
    lua_shared_dict balancer-ewma {* http.lua_shared_dict["balancer-ewma"] *};
    lua_shared_dict balancer-ewma-locks {* http.lua_shared_dict["balancer-ewma-locks"] *};
    lua_shared_dict balancer-ewma-last-touched-at {* http.lua_shared_dict["balancer-ewma-last-touched-at"] *};
    lua_shared_dict plugin-limit-count-redis-cluster-slot-lock {* http.lua_shared_dict["plugin-limit-count-redis-cluster-slot-lock"] *};
    lua_shared_dict tracing_buffer {* http.lua_shared_dict.tracing_buffer *}; # plugin: skywalking
    lua_shared_dict plugin-api-breaker {* http.lua_shared_dict["plugin-api-breaker"] *};
    lua_shared_dict etcd-cluster-health-check {* http.lua_shared_dict["etcd-cluster-health-check"] *}; # etcd health check

    # for openid-connect and authz-keycloak plugin
    lua_shared_dict discovery {* http.lua_shared_dict["discovery"] *}; # cache for discovery metadata documents

    # for openid-connect plugin
    lua_shared_dict jwks {* http.lua_shared_dict["jwks"] *}; # cache for JWKs
    lua_shared_dict introspection {* http.lua_shared_dict["introspection"] *}; # cache for JWT verification results

    # for authz-keycloak
    lua_shared_dict access-tokens {* http.lua_shared_dict["access-tokens"] *}; # cache for service account access tokens

    # for custom shared dict
    {% if http.lua_shared_dicts then %}
    {% for cache_key, cache_size in pairs(http.lua_shared_dicts) do %}
    lua_shared_dict {*cache_key*} {*cache_size*};
    {% end %}
    {% end %}

    {% if enabled_plugins["proxy-cache"] then %}
    # for proxy cache
    {% for _, cache in ipairs(proxy_cache.zones) do %}
    proxy_cache_path {* cache.disk_path *} levels={* cache.cache_levels *} keys_zone={* cache.name *}:{* cache.memory_size *} inactive=1d max_size={* cache.disk_size *} use_temp_path=off;
    {% end %}
    {% end %}

    {% if enabled_plugins["proxy-cache"] then %}
    # for proxy cache
    map $upstream_cache_zone $upstream_cache_zone_info {
    {% for _, cache in ipairs(proxy_cache.zones) do %}
        {* cache.name *} {* cache.disk_path *},{* cache.cache_levels *};
    {% end %}
    }
    {% end %}

    {% if enabled_plugins["error-log-logger"] then %}
    lua_capture_error_log 10m;
    {% end %}

    lua_ssl_verify_depth 5;
    ssl_session_timeout 86400;

    {% if http.underscores_in_headers then %}
    underscores_in_headers {* http.underscores_in_headers *};
    {%end%}

    lua_socket_log_errors off;

    resolver {% for _, dns_addr in ipairs(dns_resolver or {}) do %} {*dns_addr*} {% end %} {% if dns_resolver_valid then %} valid={*dns_resolver_valid*}{% end %};
    resolver_timeout {*resolver_timeout*};

    lua_http10_buffering off;

    lua_regex_match_limit 100000;
    lua_regex_cache_max_entries 8192;

    {% if http.enable_access_log == false then %}
    access_log off;
    {% else %}
    log_format main escape={* http.access_log_format_escape *} '{* http.access_log_format *}';
    uninitialized_variable_warn off;

    {% if use_apisix_openresty then %}
    apisix_delay_client_max_body_check on;
    {% end %}

    access_log {* http.access_log *} main buffer=16384 flush=3;
    {% end %}
    open_file_cache max=1000 inactive=60;
    client_max_body_size {* http.client_max_body_size *};
    keepalive_timeout {* http.keepalive_timeout *};
    client_header_timeout {* http.client_header_timeout *};
    client_body_timeout {* http.client_body_timeout *};
    send_timeout {* http.send_timeout *};
    variables_hash_max_size {* http.variables_hash_max_size *};

    server_tokens off;

    include mime.types;
    charset {* http.charset *};

    # error_page
    error_page 500 @50x.html;

    {% if real_ip_header then %}
    real_ip_header {* real_ip_header *};
    {% print("\nDeprecated: apisix.real_ip_header has been moved to nginx_config.http.real_ip_header. apisix.real_ip_header will be removed in the future version. Please use nginx_config.http.real_ip_header first.\n\n") %}
    {% elseif http.real_ip_header then %}
    real_ip_header {* http.real_ip_header *};
    {% end %}

    {% if http.real_ip_recursive then %}
    real_ip_recursive {* http.real_ip_recursive *};
    {% end %}

    {% if real_ip_from then %}
    {% print("\nDeprecated: apisix.real_ip_from has been moved to nginx_config.http.real_ip_from. apisix.real_ip_from will be removed in the future version. Please use nginx_config.http.real_ip_from first.\n\n") %}
    {% for _, real_ip in ipairs(real_ip_from) do %}
    set_real_ip_from {*real_ip*};
    {% end %}
    {% elseif http.real_ip_from then %}
    {% for _, real_ip in ipairs(http.real_ip_from) do %}
    set_real_ip_from {*real_ip*};
    {% end %}
    {% end %}

    {% if ssl.ssl_trusted_certificate ~= nil then %}
    lua_ssl_trusted_certificate {* ssl.ssl_trusted_certificate *};
    {% end %}

    # http configuration snippet starts
    {% if http_configuration_snippet then %}
    {* http_configuration_snippet *}
    {% end %}
    # http configuration snippet ends

    upstream apisix_backend {
        server 0.0.0.1;
        {% if use_apisix_openresty then %}
        keepalive {* http.upstream.keepalive *};
        keepalive_requests {* http.upstream.keepalive_requests *};
        keepalive_timeout {* http.upstream.keepalive_timeout *};
        # we put the static configuration above so that we can override it in the Lua code
        balancer_by_lua_block {
            apisix.http_balancer_phase()
        }
        {% else %}
        balancer_by_lua_block {
            apisix.http_balancer_phase()
        }
        keepalive {* http.upstream.keepalive *};
        keepalive_requests {* http.upstream.keepalive_requests *};
        keepalive_timeout {* http.upstream.keepalive_timeout *};
        {% end %}
    }

    {% if enabled_plugins["dubbo-proxy"] then %}
    upstream apisix_dubbo_backend {
        server 0.0.0.1;
        balancer_by_lua_block {
            apisix.http_balancer_phase()
        }

        # dynamical keepalive doesn't work with dubbo as the connection here
        # is managed by ngx_multi_upstream_module
        multi {* dubbo_upstream_multiplex_count *};
        keepalive {* http.upstream.keepalive *};
        keepalive_requests {* http.upstream.keepalive_requests *};
        keepalive_timeout {* http.upstream.keepalive_timeout *};
    }
    {% end %}

    init_by_lua_block {
        require "resty.core"
        apisix = require("apisix")

        local dns_resolver = { {% for _, dns_addr in ipairs(dns_resolver or {}) do %} "{*dns_addr*}", {% end %} }
        local args = {
            dns_resolver = dns_resolver,
        }
        apisix.http_init(args)
    }

    init_worker_by_lua_block {
        apisix.http_init_worker()
    }

    {% if not use_openresty_1_17 then %}
    exit_worker_by_lua_block {
        apisix.http_exit_worker()
    }
    {% end %}

    {% if enable_control then %}
    server {
        listen {* control_server_addr *};

        access_log off;

        location / {
            content_by_lua_block {
                apisix.http_control()
            }
        }

        location @50x.html {
            set $from_error_page 'true';
            try_files /50x.html $uri;
        }
    }
    {% end %}

    {% if enabled_plugins["prometheus"] and prometheus_server_addr then %}
    server {
        listen {* prometheus_server_addr *};

        access_log off;

        location / {
            content_by_lua_block {
                local prometheus = require("apisix.plugins.prometheus")
                prometheus.export_metrics()
            }
        }

        {% if with_module_status then %}
        location = /apisix/nginx_status {
            allow 127.0.0.0/24;
            deny all;
            stub_status;
        }
        {% end %}
    }
    {% end %}

    {% if enable_admin and port_admin then %}
    server {
        {%if https_admin then%}
        listen {* port_admin *} ssl;

        ssl_certificate {* admin_api_mtls.admin_ssl_cert *};
        ssl_certificate_key {* admin_api_mtls.admin_ssl_cert_key *};
        {%if admin_api_mtls.admin_ssl_ca_cert and admin_api_mtls.admin_ssl_ca_cert ~= "" then%}
        ssl_verify_client on;
        ssl_client_certificate {* admin_api_mtls.admin_ssl_ca_cert *};
        {% end %}

        ssl_session_cache shared:SSL:20m;
        ssl_protocols {* ssl.ssl_protocols *};
        ssl_ciphers {* ssl.ssl_ciphers *};
        ssl_prefer_server_ciphers on;
        {% if ssl.ssl_session_tickets then %}
        ssl_session_tickets on;
        {% else %}
        ssl_session_tickets off;
        {% end %}

        {% else %}
        listen {* port_admin *};
        {%end%}
        log_not_found off;

        # admin configuration snippet starts
        {% if http_admin_configuration_snippet then %}
        {* http_admin_configuration_snippet *}
        {% end %}
        # admin configuration snippet ends

        set $upstream_scheme 'http';
        set $upstream_host $http_host;
        set $upstream_uri '';

        location /apisix/admin {
            {%if allow_admin then%}
                {% for _, allow_ip in ipairs(allow_admin) do %}
                allow {*allow_ip*};
                {% end %}
                deny all;
            {%else%}
                allow all;
            {%end%}

            content_by_lua_block {
                apisix.http_admin()
            }
        }

        location @50x.html {
            set $from_error_page 'true';
            try_files /50x.html $uri;
        }
    }
    {% end %}

    server {
        {% for _, item in ipairs(node_listen) do %}
        listen {* item.port *} default_server {% if enable_reuseport then %} reuseport {% end %} {% if item.enable_http2 then %} http2 {% end %};
        {% end %}
        {% if ssl.enable then %}
        {% for _, port in ipairs(ssl.listen_port) do %}
        listen {* port *} ssl default_server {% if ssl.enable_http2 then %} http2 {% end %} {% if enable_reuseport then %} reuseport {% end %};
        {% end %}
        {% end %}
        {% if proxy_protocol and proxy_protocol.listen_http_port then %}
        listen {* proxy_protocol.listen_http_port *} default_server proxy_protocol;
        {% end %}
        {% if proxy_protocol and proxy_protocol.listen_https_port then %}
        listen {* proxy_protocol.listen_https_port *} ssl default_server {% if ssl.enable_http2 then %} http2 {% end %} proxy_protocol;
        {% end %}

        {% if enable_ipv6 then %}
        {% for _, item in ipairs(node_listen) do %}
        listen [::]:{* item.port *} default_server {% if enable_reuseport then %} reuseport {% end %} {% if item.enable_http2 then %} http2 {% end %};
        {% end %}
        {% if ssl.enable then %}
        {% for _, port in ipairs(ssl.listen_port) do %}
        listen [::]:{* port *} ssl default_server {% if ssl.enable_http2 then %} http2 {% end %} {% if enable_reuseport then %} reuseport {% end %};
        {% end %}
        {% end %}
        {% end %} {% -- if enable_ipv6 %}

        server_name _;

        {% if ssl.enable then %}
        ssl_certificate {* ssl.ssl_cert *};
        ssl_certificate_key {* ssl.ssl_cert_key *};
        ssl_session_cache shared:SSL:20m;
        ssl_session_timeout 10m;

        ssl_protocols {* ssl.ssl_protocols *};
        ssl_ciphers {* ssl.ssl_ciphers *};
        ssl_prefer_server_ciphers on;
        {% if ssl.ssl_session_tickets then %}
        ssl_session_tickets on;
        {% else %}
        ssl_session_tickets off;
        {% end %}
        {% end %}

        # http server configuration snippet starts
        {% if http_server_configuration_snippet then %}
        {* http_server_configuration_snippet *}
        {% end %}
        # http server configuration snippet ends

        {% if with_module_status then %}
        location = /apisix/nginx_status {
            allow 127.0.0.0/24;
            deny all;
            access_log off;
            stub_status;
        }
        {% end %}

        {% if enable_admin and not port_admin then %}
        location /apisix/admin {
            set $upstream_scheme 'http';
            set $upstream_host $http_host;
            set $upstream_uri '';

            {%if allow_admin then%}
                {% for _, allow_ip in ipairs(allow_admin) do %}
                allow {*allow_ip*};
                {% end %}
                deny all;
            {%else%}
                allow all;
            {%end%}

            content_by_lua_block {
                apisix.http_admin()
            }
        }
        {% end %}

        {% if ssl.enable then %}
        ssl_certificate_by_lua_block {
            apisix.http_ssl_phase()
        }
        {% end %}

        {% if http.proxy_ssl_server_name then %}
        proxy_ssl_name $upstream_host;
        proxy_ssl_server_name on;
        {% end %}

        location / {
            set $upstream_mirror_host '';
            set $upstream_upgrade '';
            set $upstream_connection '';

            set $upstream_scheme 'http';
            set $upstream_host $http_host;
            set $upstream_uri '';
            set $ctx_ref '';
            set $from_error_page '';

            {% if enabled_plugins["dubbo-proxy"] then %}
            set $dubbo_service_name '';
            set $dubbo_service_version '';
            set $dubbo_method '';
            {% end %}

            access_by_lua_block {
                apisix.http_access_phase()
            }

            proxy_http_version 1.1;
            proxy_set_header Host $upstream_host;
            proxy_set_header Upgrade $upstream_upgrade;
            proxy_set_header Connection $upstream_connection;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_pass_header Date;

            ### the following x-forwarded-* headers is to send to upstream server

            set $var_x_forwarded_for $remote_addr;
            set $var_x_forwarded_proto $scheme;
            set $var_x_forwarded_host $host;
            set $var_x_forwarded_port $server_port;

            if ($http_x_forwarded_for != "") {
                set $var_x_forwarded_for "${http_x_forwarded_for}, ${realip_remote_addr}";
            }
            if ($http_x_forwarded_host != "") {
                set $var_x_forwarded_host $http_x_forwarded_host;
            }
            if ($http_x_forwarded_port != "") {
                set $var_x_forwarded_port $http_x_forwarded_port;
            }

            proxy_set_header X-Forwarded-For $var_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $var_x_forwarded_proto;
            proxy_set_header X-Forwarded-Host $var_x_forwarded_host;
            proxy_set_header X-Forwarded-Port $var_x_forwarded_port;

            {% if enabled_plugins["proxy-cache"] then %}
            ### the following configuration is to cache response content from upstream server

            set $upstream_cache_zone off;
            set $upstream_cache_key '';
            set $upstream_cache_bypass '';
            set $upstream_no_cache '';

            proxy_cache $upstream_cache_zone;
            proxy_cache_valid any {% if proxy_cache.cache_ttl then %} {* proxy_cache.cache_ttl *} {% else %} 10s {% end %};
            proxy_cache_min_uses 1;
            proxy_cache_methods GET HEAD;
            proxy_cache_lock_timeout 5s;
            proxy_cache_use_stale off;
            proxy_cache_key $upstream_cache_key;
            proxy_no_cache $upstream_no_cache;
            proxy_cache_bypass $upstream_cache_bypass;
            {% end %}

            proxy_pass $upstream_scheme://apisix_backend$upstream_uri;

            {% if enabled_plugins["proxy-mirror"] then %}
            mirror /proxy_mirror;
            {% end %}

            header_filter_by_lua_block {
                apisix.http_header_filter_phase()
            }

            body_filter_by_lua_block {
                apisix.http_body_filter_phase()
            }

            log_by_lua_block {
                apisix.http_log_phase()
            }
        }

        location @grpc_pass {
            access_by_lua_block {
                apisix.grpc_access_phase()
            }

            grpc_set_header Content-Type application/grpc;
            grpc_socket_keepalive on;
            grpc_pass $upstream_scheme://apisix_backend;

            header_filter_by_lua_block {
                apisix.http_header_filter_phase()
            }

            body_filter_by_lua_block {
                apisix.http_body_filter_phase()
            }

            log_by_lua_block {
                apisix.http_log_phase()
            }
        }

        {% if enabled_plugins["dubbo-proxy"] then %}
        location @dubbo_pass {
            access_by_lua_block {
                apisix.dubbo_access_phase()
            }

            dubbo_pass_all_headers on;
            dubbo_pass_body on;
            dubbo_pass $dubbo_service_name $dubbo_service_version $dubbo_method apisix_dubbo_backend;

            header_filter_by_lua_block {
                apisix.http_header_filter_phase()
            }

            body_filter_by_lua_block {
                apisix.http_body_filter_phase()
            }

            log_by_lua_block {
                apisix.http_log_phase()
            }
        }
        {% end %}

        {% if enabled_plugins["proxy-mirror"] then %}
        location = /proxy_mirror {
            internal;

            if ($upstream_mirror_host = "") {
                return 200;
            }

            proxy_http_version 1.1;
            proxy_set_header Host $upstream_host;
            proxy_pass $upstream_mirror_host$request_uri;
        }
        {% end %}

        location @50x.html {
            set $from_error_page 'true';
            try_files /50x.html $uri;
            header_filter_by_lua_block {
                apisix.http_header_filter_phase()
            }

            log_by_lua_block {
                apisix.http_log_phase()
            }
        }
    }
    # http end configuration snippet starts
    {% if http_end_configuration_snippet then %}
    {* http_end_configuration_snippet *}
    {% end %}
    # http end configuration snippet ends
}
{% end %}
]=]
```
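In the template, `upstream apisix_backend` contains only the placeholder `server 0.0.0.1;`; the real peer is chosen per request in `balancer_by_lua_block { apisix.http_balancer_phase() }`, which is why upstream changes need no reload. Below is a sketch of one possible picker, Nginx's smooth weighted round-robin. `new_picker` and the node format are hypothetical, and APISIX's own balancer module may use a different algorithm. Inside balancer_by_lua the chosen address would be handed to `ngx.balancer.set_current_peer(host, port)`.

```lua
-- Smooth weighted round-robin: each pick adds every peer's weight to its
-- running score, takes the highest score, then subtracts the total weight from it.
local function new_picker(nodes)
  local peers, total = {}, 0
  for i, n in ipairs(nodes) do
    peers[i] = { addr = n.addr, weight = n.weight, current = 0 }
    total = total + n.weight
  end

  return function()
    local best
    for _, p in ipairs(peers) do
      p.current = p.current + p.weight
      -- strict ">" means ties go to the earlier node in the list
      if best == nil or p.current > best.current then
        best = p
      end
    end
    best.current = best.current - total
    return best.addr
  end
end
```

With weights 5/1/1 the picks interleave as A A B A C A A instead of five A's in a row, so a heavy node does not receive a burst.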

apisix/conf/config-default.yaml: the default configuration, which supplies values for part of the template.
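The header of config-default.yaml asks users to put their own values in conf/config.yaml rather than edit the defaults. A minimal deep-merge sketch of that layering: nested maps merge key by key, while scalars and lists from the user file replace the default outright. The list-replacement rule and the `merge` helper are assumptions, not APISIX's documented behaviour.

```lua
-- Treat a table as a list if it is empty or has element [1] (assumption).
local function is_array(t)
  return type(t) == "table" and (next(t) == nil or t[1] ~= nil)
end

-- Overlay `user` onto `defaults` without mutating either input.
-- Merged branches get fresh tables; untouched sub-tables are shared with `defaults`.
local function merge(defaults, user)
  local out = {}
  for k, v in pairs(defaults) do
    out[k] = v
  end
  for k, v in pairs(user) do
    if type(v) == "table" and type(out[k]) == "table"
       and not is_array(v) and not is_array(out[k]) then
      out[k] = merge(out[k], v)
    else
      out[k] = v
    end
  end
  return out
end
```

Example: overriding only `apisix.node_listen` keeps `apisix.enable_admin` from the defaults, while a user-supplied `etcd.host` list replaces the default list entirely.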

```yaml
#
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
#
# PLEASE DO NOT UPDATE THIS FILE!
# If you want to set the specified configuration value, you can set the new
# value in the conf/config.yaml file.
#

apisix:
  node_listen: 9080              # APISIX listening port
  enable_admin: true
  enable_admin_cors: true        # Admin API support CORS response headers.
  enable_debug: false
  enable_dev_mode: false         # Sets nginx worker_processes to 1 if set to true
  enable_reuseport: true         # Enable nginx SO_REUSEPORT switch if set to true.
  enable_ipv6: true
  config_center: etcd            # etcd: use etcd to store the config value
                                 # yaml: fetch the config value from local yaml file `/your_path/conf/apisix.yaml`

  #proxy_protocol:               # Proxy Protocol configuration
    #listen_http_port: 9181      # The port with proxy protocol for http, it differs from node_listen and port_admin.
                                 # This port can only receive http request with proxy protocol, but node_listen & port_admin
                                 # can only receive http request. If you enable proxy protocol, you must use this port to
                                 # receive http request with proxy protocol
    #listen_https_port: 9182     # The port with proxy protocol for https
    #enable_tcp_pp: true         # Enable the proxy protocol for tcp proxy, it works for stream_proxy.tcp option
    #enable_tcp_pp_to_upstream: true # Enables the proxy protocol to the upstream server

  enable_server_tokens: true     # Whether the APISIX version number should be shown in Server header.
                                 # It's enabled by default.

  # configurations to load third party code and/or override the builtin one.
  extra_lua_path: ""             # extend lua_package_path to load third party code
  extra_lua_cpath: ""            # extend lua_package_cpath to load third party code

  proxy_cache:                   # Proxy Caching configuration
    cache_ttl: 10s               # The default caching time if the upstream does not specify the cache time
    zones:                       # The parameters of a cache
      - name: disk_cache_one     # The name of the cache, administrator can be specify
                                 # which cache to use by name in the admin api
        memory_size: 50m         # The size of shared memory, it's used to store the cache index
        disk_size: 1G            # The size of disk, it's used to store the cache data
        disk_path: /tmp/disk_cache_one # The path to store the cache data
        cache_levels: 1:2        # The hierarchy levels of a cache
    #- name: disk_cache_two
    #  memory_size: 50m
    #  disk_size: 1G
    #  disk_path: "/tmp/disk_cache_two"
    #  cache_levels: "1:2"

  allow_admin:                   # http://nginx.org/en/docs/http/ngx_http_access_module.html#allow
    - 127.0.0.0/24               # If we don't set any IP list, then any IP access is allowed by default.
    #- "::/64"
  #port_admin: 9180              # use a separate port
  #https_admin: true             # enable HTTPS when use a separate port for Admin API.
                                 # Admin API will use conf/apisix_admin_api.crt and conf/apisix_admin_api.key as certificate.
  admin_api_mtls:                # Depends on `port_admin` and `https_admin`.
    admin_ssl_cert: ""           # Path of your self-signed server side cert.
    admin_ssl_cert_key: ""       # Path of your self-signed server side key.
    admin_ssl_ca_cert: ""        # Path of your self-signed ca cert.The CA is used to sign all admin api callers' certificates.

  # Default token when use API to call for Admin API.
  # *NOTE*: Highly recommended to modify this value to protect APISIX's Admin API.
  # Disabling this configuration item means that the Admin API does not
  # require any authentication.
  admin_key:
    - name: admin
      key: edd1c9f034335f136f87ad84b625c8f1
      role: admin                # admin: manage all configuration data
                                 # viewer: only can view configuration data
    - name: viewer
      key: 4054f7cf07e344346cd3f287985e76a2
      role: viewer

  delete_uri_tail_slash: false   # delete the '/' at the end of the URI
  global_rule_skip_internal_api: true # does not run global rule in internal apis
                                 # api that path starts with "/apisix" is considered to be internal api
  router:
    http: radixtree_uri          # radixtree_uri: match route by uri(base on radixtree)
                                 # radixtree_host_uri: match route by host + uri(base on radixtree)
                                 # radixtree_uri_with_parameter: like radixtree_uri but match uri with parameters,
                                 #   see https://github.com/api7/lua-resty-radixtree/#parameters-in-path for
                                 #   more details.
    ssl: radixtree_sni           # radixtree_sni: match route by SNI(base on radixtree)
  #stream_proxy:                 # TCP/UDP proxy
  #  only: true                  # use stream proxy only, don't enable HTTP stuff
  #  tcp:                        # TCP proxy port list
  #    - addr: 9100
  #      tls: true
  #    - addr: "127.0.0.1:9101"
  #  udp:                        # UDP proxy port list
  #    - 9200
  #    - "127.0.0.1:9201"
  #dns_resolver:                 # If not set, read from `/etc/resolv.conf`
  #  - 1.1.1.1
  #  - 8.8.8.8
  #dns_resolver_valid: 30        # if given, override the TTL of the valid records. The unit is second.
  resolver_timeout: 5            # resolver timeout
  enable_resolv_search_opt: true # enable search option in resolv.conf
  ssl:
    enable: true
    enable_http2: true
    listen_port: 9443
    #ssl_trusted_certificate: /path/to/ca-cert # Specifies a file path with trusted CA certificates in the PEM format
                                 # used to verify the certificate when APISIX needs to do SSL/TLS handshaking
                                 # with external services (e.g. etcd)
    ssl_protocols: TLSv1.2 TLSv1.3
    ssl_ciphers: ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
    ssl_session_tickets: false   # disable ssl_session_tickets by default for 'ssl_session_tickets' would make Perfect Forward Secrecy useless.
                                 # ref: https://github.com/mozilla/server-side-tls/issues/135
    key_encrypt_salt: edd1c9f0985e76a2 # If not set, will save origin ssl key into etcd.
                                 # If set this, must be a string of length 16. And it will encrypt ssl key with AES-128-CBC
                                 # !!! So do not change it after saving your ssl, it can't decrypt the ssl keys have be saved if you change !!
  enable_control: true
  #control:
  #  ip: 127.0.0.1
  #  port: 9090
  disable_sync_configuration_during_start: false # safe exit. Remove this once the feature is stable

nginx_config:                    # config for render the template to generate nginx.conf
  #user: root                    # specifies the execution user of the worker process.
                                 # the "user" directive makes sense only if the master process runs with super-user privileges.
                                 # if you're not root user,the default is current user.
  error_log: logs/error.log
  error_log_level: warn          # warn,error
  worker_processes: auto         # if you want use multiple cores in container, you can inject the number of cpu as environment variable "APISIX_WORKER_PROCESSES"
  enable_cpu_affinity: true      # enable cpu affinity, this is just work well only on physical machine
  worker_rlimit_nofile: 20480    # the number of files a worker process can open, should be larger than worker_connections
  worker_shutdown_timeout: 240s  # timeout for a graceful shutdown of worker processes
  event:
    worker_connections: 10620
  #envs:                         # allow to get a list of environment variables
  #  - TEST_ENV

  stream:
    lua_shared_dict:
      etcd-cluster-health-check-stream: 10m
      lrucache-lock-stream: 10m
      plugin-limit-conn-stream: 10m

  # As user can add arbitrary configurations in the snippet,
  # it is user's responsibility to check the configurations
  # don't conflict with APISIX.
  main_configuration_snippet: |
    # Add custom Nginx main configuration to nginx.conf.
    # The configuration should be well indented!
  http_configuration_snippet: |
    # Add custom Nginx http configuration to nginx.conf.
    # The configuration should be well indented!
  http_server_configuration_snippet: |
    # Add custom Nginx http server configuration to nginx.conf.
    # The configuration should be well indented!
  http_admin_configuration_snippet: |
    # Add custom Nginx admin server configuration to nginx.conf.
    # The configuration should be well indented!
  http_end_configuration_snippet: |
    # Add custom Nginx http end configuration to nginx.conf.
    # The configuration should be well indented!
  stream_configuration_snippet: |
    # Add custom Nginx stream configuration to nginx.conf.
    # The configuration should be well indented!

  http:
    enable_access_log: true      # enable access log or not, default true
    access_log: logs/access.log
    access_log_format: "$remote_addr - $remote_user [$time_local] $http_host \"$request\" $status $body_bytes_sent $request_time \"$http_referer\" \"$http_user_agent\" $upstream_addr $upstream_status $upstream_response_time \"$upstream_scheme://$upstream_host$upstream_uri\""
    access_log_format_escape: default # allows setting json or default characters escaping in variables
    keepalive_timeout: 60s       # timeout during which a keep-alive client connection will stay open on the server side.
    client_header_timeout: 60s   # timeout for reading client request header, then 408 (Request Time-out) error is returned to the client
    client_body_timeout: 60s     # timeout for reading client request body, then 408 (Request Time-out) error is returned to the client
    client_max_body_size: 0      # The maximum allowed size of the client request body.
                                 # If exceeded, the 413 (Request Entity Too Large) error is returned to the client.
                                 # Note that unlike Nginx, we don't limit the body size by default.

    send_timeout: 10s            # timeout for transmitting a response to the client.then the connection is closed
    underscores_in_headers: "on" # default enables the use of underscores in client request header fields
    real_ip_header: X-Real-IP    # http://nginx.org/en/docs/http/ngx_http_realip_module.html#real_ip_header
    real_ip_recursive: "off"     # http://nginx.org/en/docs/http/ngx_http_realip_module.html#real_ip_recursive
    real_ip_from:                # http://nginx.org/en/docs/http/ngx_http_realip_module.html#set_real_ip_from
      - 127.0.0.1
      - "unix:"
    #lua_shared_dicts:           # add custom shared cache to nginx.conf
    #  ipc_shared_dict: 100m     # custom shared cache, format: `cache-key: cache-size`

    # Enables or disables passing of the server name through TLS Server Name Indication extension (SNI, RFC 6066)
    # when establishing a connection with the proxied HTTPS server.
    proxy_ssl_server_name: true
    upstream:
      keepalive: 320             # Sets the maximum number of idle keepalive connections to upstream servers that are preserved in the cache of each worker process.
                                 # When this number is exceeded, the least recently used connections are closed.
      keepalive_requests: 1000   # Sets the maximum number of requests that can be served through one keepalive connection.
                                 # After the maximum number of requests is made, the connection is closed.
      keepalive_timeout: 60s     # Sets a timeout during which an idle keepalive connection to an upstream server will stay open.
    charset: utf-8               # Adds the specified charset to the "Content-Type" response header field, see
                                 # http://nginx.org/en/docs/http/ngx_http_charset_module.html#charset
    variables_hash_max_size: 2048 # Sets the maximum size of the variables hash table.

    lua_shared_dict:
      internal-status: 10m
      plugin-limit-req: 10m
      plugin-limit-count: 10m
      prometheus-metrics: 10m
      plugin-limit-conn: 10m
      upstream-healthcheck: 10m
      worker-events: 10m
      lrucache-lock: 10m
      balancer-ewma: 10m
      balancer-ewma-locks: 10m
      balancer-ewma-last-touched-at: 10m
      plugin-limit-count-redis-cluster-slot-lock: 1m
      tracing_buffer: 10m
      plugin-api-breaker: 10m
      etcd-cluster-health-check: 10m
      discovery: 1m
      jwks: 1m
      introspection: 10m
      access-tokens: 1m

etcd:
  host:                          # it's possible to define multiple etcd hosts addresses of the same etcd cluster.
    - "http://127.0.0.1:2379"    # multiple etcd address, if your etcd cluster enables TLS, please use https scheme,
                                 # e.g. https://127.0.0.1:2379.
  prefix: /apisix                # apisix configurations prefix
  timeout: 30                    # 30 seconds
  #resync_delay: 5               # when sync failed and a rest is needed, resync after the configured seconds plus 50% random jitter
  #health_check_timeout: 10      # etcd retry the unhealthy nodes after the configured seconds
  #user: root                    # root username for etcd
  #password: 5tHkHhYkjr6cQY      # root password for etcd
  tls:
    # To enable etcd client certificate you need to build APISIX-Openresty, see
    # http://apisix.apache.org/docs/apisix/how-to-build#6-build-openresty-for-apisix
    #cert: /path/to/cert         # path of certificate used by the etcd client
    #key: /path/to/key           # path of key used by the etcd client

    verify: true                 # whether to verify the etcd endpoint certificate when setup a TLS connection to etcd,
                                 # the default value is true, e.g. the certificate will be verified strictly.

#discovery:                      # service discovery center
#  dns:
#    servers:
#      - "127.0.0.1:8600"        # use the real address of your dns server
#  eureka:
#    host:                       # it's possible to define multiple eureka hosts addresses of the same eureka cluster.
#      - "http://127.0.0.1:8761"
#    prefix: /eureka/
#    fetch_interval: 30          # default 30s
#    weight: 100                 # default weight for node
#    timeout:
#      connect: 2000             # default 2000ms
#      send: 2000                # default 2000ms
#      read: 5000                # default 5000ms

graphql:
  max_size: 1048576              # the maximum size limitation of graphql in bytes, default 1MiB

#ext-plugin:
  #cmd: ["ls", "-l"]

plugins:                         # plugin list (sorted by priority)
  - client-control               # priority: 22000
  - ext-plugin-pre-req           # priority: 12000
  - zipkin                       # priority: 11011
  - request-id                   # priority: 11010
  - fault-injection              # priority: 11000
  - serverless-pre-function      # priority: 10000
  - batch-requests               # priority: 4010
  - cors                         # priority: 4000
  - ip-restriction               # priority: 3000
  - ua-restriction               # priority: 2999
  - referer-restriction          # priority: 2990
  - uri-blocker                  # priority: 2900
  - request-validation           # priority: 2800
  - openid-connect               # priority: 2599
  - wolf-rbac                    # priority: 2555
  - hmac-auth                    # priority: 2530
  - basic-auth                   # priority: 2520
  - jwt-auth                     # priority: 2510
  - key-auth                     # priority: 2500
  - consumer-restriction         # priority: 2400
  - authz-keycloak               # priority: 2000
  #- error-log-logger            # priority: 1091
  - proxy-mirror                 # priority: 1010
  - proxy-cache                  # priority: 1009
  - proxy-rewrite                # priority: 1008
  - api-breaker                  # priority: 1005
  - limit-conn                   # priority: 1003
  - limit-count                  # priority: 1002
  - limit-req                    # priority: 1001
  #- node-status                 # priority: 1000
  - gzip                         # priority: 995
  - server-info                  # priority: 990
  - traffic-split                # priority: 966
  - redirect                     # priority: 900
  - response-rewrite             # priority: 899
  #- dubbo-proxy                 # priority: 507
  - grpc-transcode               # priority: 506
  - prometheus                   # priority: 500
  - echo                         # priority: 412
  - http-logger                  # priority: 410
  - sls-logger                   # priority: 406
  - tcp-logger                   # priority: 405
  - kafka-logger                 # priority: 403
  - syslog                       # priority: 401
  - udp-logger                   # priority: 400
  #- log-rotate                  # priority: 100
                                 # <- recommend to use priority (0, 100) for your custom plugins
  - example-plugin               # priority: 0
  #- skywalking                  # priority: -1100
  - serverless-post-function     # 
```
priority: -2000 - ext-plugin-post-req # priority: -3000 stream_plugins: # sorted by priority - ip-restriction # priority: 3000 - limit-conn # priority: 1003 - mqtt-proxy # priority: 1000 # <- recommend to use priority (0, 100) for your custom plugins plugin_attr: log-rotate: interval: 3600 # rotate interval (unit: second) max_kept: 168 # max number of log files will be kept skywalking: service_name: APISIX service_instance_name: APISIX Instance Name endpoint_addr: http://127.0.0.1:12800 prometheus: export_uri: /apisix/prometheus/metrics enable_export_server: true export_addr: ip: 127.0.0.1 port: 9091 server-info: report_interval: 60 # server info report interval (unit: second) report_ttl: 3600 # live time for server info in etcd (unit: second) dubbo-proxy: upstream_multiplex_count: 32
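The comments in the `plugins` list record each plugin's priority, and within a phase a higher-priority plugin runs earlier. A minimal sketch of that ordering, using a few priorities copied from the list above; the table layout and the `sort_by_priority` helper are hypothetical and are not APISIX's actual plugin loader:

```lua
-- A few (name, priority) pairs copied from the default plugins list.
local plugins = {
    { name = "limit-req",                priority = 1001 },
    { name = "cors",                     priority = 4000 },
    { name = "prometheus",               priority = 500 },
    { name = "key-auth",                 priority = 2500 },
    { name = "serverless-post-function", priority = -2000 },
}

-- Hypothetical helper: return a copy ordered so that higher priority runs first.
local function sort_by_priority(list)
    local sorted = {}
    for i, p in ipairs(list) do
        sorted[i] = p
    end
    table.sort(sorted, function(a, b)
        return a.priority > b.priority
    end)
    return sorted
end

local ordered = sort_by_priority(plugins)
for _, p in ipairs(ordered) do
    print(p.priority, p.name)
end
```

`table.sort` is not stable, which is harmless here because the sample priorities are all distinct; with equal priorities the relative order would be unspecified.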

apisix/cli/ops.lua renders the template above with resty.template and writes the result to conf/nginx.conf:

```lua
--
-- Licensed to the Apache Software Foundation (ASF) under one or more
-- contributor license agreements.  See the NOTICE file distributed with
-- this work for additional information regarding copyright ownership.
-- The ASF licenses this file to You under the Apache License, Version 2.0
-- (the "License"); you may not use this file except in compliance with
-- the License.  You may obtain a copy of the License at
--
--     http://www.apache.org/licenses/LICENSE-2.0
--
-- Unless required by applicable law or agreed to in writing, software
-- distributed under the License is distributed on an "AS IS" BASIS,
-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
-- See the License for the specific language governing permissions and
-- limitations under the License.
--

local ver = require("apisix.core.version")
local etcd = require("apisix.cli.etcd")
local util = require("apisix.cli.util")
local file = require("apisix.cli.file")
local ngx_tpl = require("apisix.cli.ngx_tpl")
local html_page = require("apisix.cli.html_page")
local profile = require("apisix.core.profile")
local template = require("resty.template")
local argparse = require("argparse")
local pl_path = require("pl.path")
local jsonschema = require("jsonschema")

local stderr = io.stderr
local ipairs = ipairs
local pairs = pairs
local print = print
local type = type
local tostring = tostring
local tonumber = tonumber
local io_open = io.open
local execute = os.execute
local table_insert = table.insert
local getenv = os.getenv
local max = math.max
local floor = math.floor
local str_find = string.find
local str_byte = string.byte
local str_sub = string.sub

local _M = {}


local function help()
    print([[
Usage: apisix [action] <argument>

help:       show this message, then exit
init:       initialize the local nginx.conf
init_etcd:  initialize the data of etcd
start:      start the apisix server
stop:       stop the apisix server
quit:       stop the apisix server gracefully
restart:    restart the apisix server
reload:     reload the apisix server
version:    print the version of apisix
]])
end


local function version_greater_equal(cur_ver_s, need_ver_s)
    local cur_vers = util.split(cur_ver_s, [[.]])
    local need_vers = util.split(need_ver_s, [[.]])
    local len = max(#cur_vers, #need_vers)

    for i = 1, len do
        local cur_ver = tonumber(cur_vers[i]) or 0
        local need_ver = tonumber(need_vers[i]) or 0
        if cur_ver > need_ver then
            return true
        end

        if cur_ver < need_ver then
            return false
        end
    end

    return true
end


local function get_openresty_version()
    local str = "nginx version: openresty/"
    local ret = util.execute_cmd("openresty -v 2>&1")
    local pos = str_find(ret, str, 1, true)

    if pos then
        return str_sub(ret, pos + #str)
    end

    str = "nginx version: nginx/"
    pos = str_find(ret, str, 1, true)
    if pos then
        return str_sub(ret, pos + #str)
    end
end


local function local_dns_resolver(file_path)
    local file, err = io_open(file_path, "rb")
    if not file then
        return false, "failed to open file: " .. file_path .. ", error info:" .. err
    end

    local dns_addrs = {}
    for line in file:lines() do
        local addr, n = line:gsub("^nameserver%s+([^%s]+)%s*$", "%1")
        if n == 1 then
            table_insert(dns_addrs, addr)
        end
    end

    file:close()
    return dns_addrs
end
-- exported for test
_M.local_dns_resolver = local_dns_resolver


local function version()
    print(ver['VERSION'])
end


local function get_lua_path(conf)
    -- we use "" as the placeholder to enforce the type to be string
    if conf and conf ~= "" then
        if #conf < 2 then
            -- the shortest valid path is ';;'
            util.die("invalid extra_lua_path/extra_lua_cpath: \"", conf, "\"\n")
        end

        local path = conf
        if path:byte(-1) ~= str_byte(';') then
            path = path .. ';'
        end
        return path
    end

    return ""
end


local config_schema = {
    type = "object",
    properties = {
        apisix = {
            properties = {
                config_center = {
                    enum = {"etcd", "yaml"},
                },
                proxy_protocol = {
                    type = "object",
                    properties = {
                        listen_http_port = {
                            type = "integer",
                        },
                        listen_https_port = {
                            type = "integer",
                        },
                        enable_tcp_pp = {
                            type = "boolean",
                        },
                        enable_tcp_pp_to_upstream = {
                            type = "boolean",
                        },
                    }
                },
                port_admin = {
                    type = "integer",
                },
                https_admin = {
                    type = "boolean",
                },
                stream_proxy = {
                    type = "object",
                    properties = {
                        tcp = {
                            type = "array",
                            minItems = 1,
                            items = {
                                anyOf = {
                                    {
                                        type = "integer",
                                    },
                                    {
                                        type = "string",
                                    },
                                    {
                                        type = "object",
                                        properties = {
                                            addr = {
                                                anyOf = {
                                                    {
                                                        type = "integer",
                                                    },
                                                    {
                                                        type = "string",
                                                    },
                                                }
                                            },
                                            tls = {
                                                type = "boolean",
                                            }
                                        },
                                        required = {"addr"}
                                    },
                                },
                            },
                            uniqueItems = true,
                        },
                        udp = {
                            type = "array",
                            minItems = 1,
                            items = {
                                anyOf = {
                                    {
                                        type = "integer",
                                    },
                                    {
                                        type = "string",
                                    },
                                },
                            },
                            uniqueItems = true,
                        },
                    }
                },
                dns_resolver = {
                    type = "array",
                    minItems = 1,
                    items = {
                        type = "string",
                    }
                },
                dns_resolver_valid = {
                    type = "integer",
                },
                ssl = {
                    type = "object",
                    properties = {
                        ssl_trusted_certificate = {
                            type = "string",
                        }
                    }
                },
            }
        },
        nginx_config = {
            type = "object",
            properties = {
                envs = {
                    type = "array",
                    minItems = 1,
                    items = {
                        type = "string",
                    }
                }
            },
        },
        http = {
            type = "object",
            properties = {
                lua_shared_dicts = {
                    type = "object",
                }
            }
        },
        etcd = {
            type = "object",
            properties = {
                resync_delay = {
                    type = "integer",
                },
                user = {
                    type = "string",
                },
                password = {
                    type = "string",
                },
                tls = {
                    type = "object",
                    properties = {
                        cert = {
                            type = "string",
                        },
                        key = {
                            type = "string",
                        },
                    }
                }
            }
        }
    }
}


local function init(env)
    if env.is_root_path then
        print('Warning! Running apisix under /root is only suitable for '
              .. 'development environments and it is dangerous to do so. '
              .. 'It is recommended to run APISIX in a directory '
              .. 'other than /root.')
    end

    -- read_yaml_conf
    local yaml_conf, err = file.read_yaml_conf(env.apisix_home)
    if not yaml_conf then
        util.die("failed to read local yaml config of apisix: ", err, "\n")
    end

    local validator = jsonschema.generate_validator(config_schema)
    local ok, err = validator(yaml_conf)
    if not ok then
        util.die("failed to validate config: ", err, "\n")
    end

    -- check the Admin API token
    local checked_admin_key = false
    if yaml_conf.apisix.enable_admin and yaml_conf.apisix.allow_admin then
        for _, allow_ip in ipairs(yaml_conf.apisix.allow_admin) do
            if allow_ip == "127.0.0.0/24" then
                checked_admin_key = true
            end
        end
    end

    if yaml_conf.apisix.enable_admin and not checked_admin_key then
        local help = [[

%s
Please modify "admin_key" in conf/config.yaml .

]]
        if type(yaml_conf.apisix.admin_key) ~= "table" or
           #yaml_conf.apisix.admin_key == 0
        then
            util.die(help:format("ERROR: missing valid Admin API token."))
        end

        for _, admin in ipairs(yaml_conf.apisix.admin_key) do
            if type(admin.key) == "table" then
                admin.key = ""
            else
                admin.key = tostring(admin.key)
            end

            if admin.key == "" then
                util.die(help:format("ERROR: missing valid Admin API token."), "\n")
            end

            if admin.key == "edd1c9f034335f136f87ad84b625c8f1" then
                stderr:write(
                    help:format([[WARNING: using fixed Admin API token has security risk.]]),
                    "\n"
                )
            end
        end
    end

    if yaml_conf.apisix.enable_admin and
       yaml_conf.apisix.config_center == "yaml"
    then
        util.die("ERROR: Admin API can only be used with etcd config_center.\n")
    end

    local or_ver = get_openresty_version()
    if or_ver == nil then
        util.die("can not find openresty\n")
    end

    local need_ver = "1.17.3"
    if not version_greater_equal(or_ver, need_ver) then
        util.die("openresty version must >=", need_ver, " current ", or_ver, "\n")
    end

    local use_openresty_1_17 = false
    if not version_greater_equal(or_ver, "1.19.3") then
        use_openresty_1_17 = true
    end

    local or_info = util.execute_cmd("openresty -V 2>&1")
    local with_module_status = true
    if or_info and not or_info:find("http_stub_status_module", 1, true) then
        stderr:write("'http_stub_status_module' module is missing in ",
                     "your openresty, please check it out. Without this ",
                     "module, there will be fewer monitoring indicators.\n")
        with_module_status = false
    end

    local use_apisix_openresty = true
    if or_info and not or_info:find("apisix-nginx-module", 1, true) then
        use_apisix_openresty = false
    end

    local enabled_plugins = {}
    for i, name in ipairs(yaml_conf.plugins) do
        enabled_plugins[name] = true
    end

    if enabled_plugins["proxy-cache"] and not yaml_conf.apisix.proxy_cache then
        util.die("missing apisix.proxy_cache for plugin proxy-cache\n")
    end

    local ports_to_check = {}

    local control_server_addr
    if yaml_conf.apisix.enable_control then
        if not yaml_conf.apisix.control then
            if ports_to_check[9090] ~= nil then
                util.die("control port 9090 conflicts with ", ports_to_check[9090], "\n")
            end
            control_server_addr = "127.0.0.1:9090"
            ports_to_check[9090] = "control"
        else
            local ip = yaml_conf.apisix.control.ip
            local port = tonumber(yaml_conf.apisix.control.port)

            if ip == nil then
                ip = "127.0.0.1"
            end

            if not port then
                port = 9090
            end

            if ports_to_check[port] ~= nil then
                util.die("control port ", port, " conflicts with ", ports_to_check[port], "\n")
            end

            control_server_addr = ip .. ":" .. port
            ports_to_check[port] = "control"
        end
    end

    local prometheus_server_addr
    if yaml_conf.plugin_attr.prometheus then
        local prometheus = yaml_conf.plugin_attr.prometheus
        if prometheus.enable_export_server then
            local ip = prometheus.export_addr.ip
            local port = tonumber(prometheus.export_addr.port)

            if ip == nil then
                ip = "127.0.0.1"
            end

            if not port then
                port = 9091
            end

            if ports_to_check[port] ~= nil then
                util.die("prometheus port ", port, " conflicts with ", ports_to_check[port], "\n")
            end

            prometheus_server_addr = ip .. ":" .. port
            ports_to_check[port] = "prometheus"
        end
    end

    -- support multiple ports listen, compatible with the original style
    if type(yaml_conf.apisix.node_listen) == "number" then
        if ports_to_check[yaml_conf.apisix.node_listen] ~= nil then
            util.die("node_listen port ", yaml_conf.apisix.node_listen,
                     " conflicts with ", ports_to_check[yaml_conf.apisix.node_listen], "\n")
        end

        local node_listen = {{port = yaml_conf.apisix.node_listen}}
        yaml_conf.apisix.node_listen = node_listen
    elseif type(yaml_conf.apisix.node_listen) == "table" then
        local node_listen = {}
        for index, value in ipairs(yaml_conf.apisix.node_listen) do
            if type(value) == "number" then
                if ports_to_check[value] ~= nil then
                    util.die("node_listen port ", value, " conflicts with ",
                             ports_to_check[value], "\n")
                end

                table_insert(node_listen, index, {port = value})
            elseif type(value) == "table" then
                if type(value.port) == "number" and ports_to_check[value.port] ~= nil then
                    util.die("node_listen port ", value.port, " conflicts with ",
                             ports_to_check[value.port], "\n")
                end

                table_insert(node_listen, index, value)
            end
        end
        yaml_conf.apisix.node_listen = node_listen
    end

    if type(yaml_conf.apisix.ssl.listen_port) == "number" then
        local listen_port = {yaml_conf.apisix.ssl.listen_port}
        yaml_conf.apisix.ssl.listen_port = listen_port
    end

    if yaml_conf.apisix.ssl.ssl_trusted_certificate ~= nil then
        local cert_path = yaml_conf.apisix.ssl.ssl_trusted_certificate
        -- During validation, the path is relative to PWD
        -- When Nginx starts, the path is relative to conf
        -- Therefore we need to check the absolute version instead
        cert_path = pl_path.abspath(cert_path)

        local ok, err = util.is_file_exist(cert_path)
        if not ok then
            util.die(err, "\n")
        end

        yaml_conf.apisix.ssl.ssl_trusted_certificate = cert_path
    end

    local admin_api_mtls = yaml_conf.apisix.admin_api_mtls
    if yaml_conf.apisix.https_admin and
       not (admin_api_mtls and
            admin_api_mtls.admin_ssl_cert and
            admin_api_mtls.admin_ssl_cert ~= "" and
            admin_api_mtls.admin_ssl_cert_key and
            admin_api_mtls.admin_ssl_cert_key ~= "")
    then
        util.die("missing ssl cert for https admin")
    end

    -- enable ssl with place holder crt&key
    yaml_conf.apisix.ssl.ssl_cert = "cert/ssl_PLACE_HOLDER.crt"
    yaml_conf.apisix.ssl.ssl_cert_key = "cert/ssl_PLACE_HOLDER.key"

    local tcp_enable_ssl
    -- compatible with the original style which only has the addr
    if yaml_conf.apisix.stream_proxy and yaml_conf.apisix.stream_proxy.tcp then
        local tcp = yaml_conf.apisix.stream_proxy.tcp
        for i, item in ipairs(tcp) do
            if type(item) ~= "table" then
                tcp[i] = {addr = item}
            else
                if item.tls then
                    tcp_enable_ssl = true
                end
            end
        end
    end

    local dubbo_upstream_multiplex_count = 32
    if yaml_conf.plugin_attr and yaml_conf.plugin_attr["dubbo-proxy"] then
        local dubbo_conf = yaml_conf.plugin_attr["dubbo-proxy"]
        if tonumber(dubbo_conf.upstream_multiplex_count) >= 1 then
            dubbo_upstream_multiplex_count = dubbo_conf.upstream_multiplex_count
        end
    end

    if yaml_conf.apisix.dns_resolver_valid then
        if tonumber(yaml_conf.apisix.dns_resolver_valid) == nil then
            util.die("apisix->dns_resolver_valid should be a number")
        end
    end

    -- Using template.render
    local sys_conf = {
        use_openresty_1_17 = use_openresty_1_17,
        lua_path = env.pkg_path_org,
        lua_cpath = env.pkg_cpath_org,
        os_name = util.trim(util.execute_cmd("uname")),
        apisix_lua_home = env.apisix_home,
        with_module_status = with_module_status,
        use_apisix_openresty = use_apisix_openresty,
        error_log = {level = "warn"},
        enabled_plugins = enabled_plugins,
        dubbo_upstream_multiplex_count = dubbo_upstream_multiplex_count,
        tcp_enable_ssl = tcp_enable_ssl,
        control_server_addr = control_server_addr,
        prometheus_server_addr = prometheus_server_addr,
    }

    if not yaml_conf.apisix then
        util.die("failed to read `apisix` field from yaml file")
    end

    if not yaml_conf.nginx_config then
        util.die("failed to read `nginx_config` field from yaml file")
    end

    if util.is_32bit_arch() then
        sys_conf["worker_rlimit_core"] = "4G"
    else
        sys_conf["worker_rlimit_core"] = "16G"
    end

    for k,v in pairs(yaml_conf.apisix) do
        sys_conf[k] = v
    end
    for k,v in pairs(yaml_conf.nginx_config) do
        sys_conf[k] = v
    end

    local wrn = sys_conf["worker_rlimit_nofile"]
    local wc = sys_conf["event"]["worker_connections"]
    if not wrn or wrn <= wc then
        -- ensure the number of fds is slightly larger than the number of conn
        sys_conf["worker_rlimit_nofile"] = wc + 128
    end

    if sys_conf["enable_dev_mode"] == true then
        sys_conf["worker_processes"] = 1
        sys_conf["enable_reuseport"] = false
    elseif tonumber(sys_conf["worker_processes"]) == nil then
        sys_conf["worker_processes"] = "auto"
    end

    if sys_conf.allow_admin and #sys_conf.allow_admin == 0 then
        sys_conf.allow_admin = nil
    end

    local dns_resolver = sys_conf["dns_resolver"]
    if not dns_resolver or #dns_resolver == 0 then
        local dns_addrs, err = local_dns_resolver("/etc/resolv.conf")
        if not dns_addrs then
            util.die("failed to import local DNS: ", err, "\n")
        end

        if #dns_addrs == 0 then
            util.die("local DNS is empty\n")
        end

        sys_conf["dns_resolver"] = dns_addrs
    end

    for i, r in ipairs(sys_conf["dns_resolver"]) do
        if r:match(":[^:]*:") then
            -- more than one colon, is IPv6
            if r:byte(1) ~= str_byte('[') then
                -- ensure IPv6 address is always wrapped in []
                sys_conf["dns_resolver"][i] = "[" .. r .. "]"
            end
        end
    end

    local env_worker_processes = getenv("APISIX_WORKER_PROCESSES")
    if env_worker_processes then
        sys_conf["worker_processes"] = floor(tonumber(env_worker_processes))
    end

    local exported_vars = file.get_exported_vars()
    if exported_vars then
        if not sys_conf["envs"] then
            sys_conf["envs"]= {}
        end
        for _, cfg_env in ipairs(sys_conf["envs"]) do
            local cfg_name
            local from = str_find(cfg_env, "=", 1, true)
            if from then
                cfg_name = str_sub(cfg_env, 1, from - 1)
            else
                cfg_name = cfg_env
            end

            exported_vars[cfg_name] = false
        end

        for name, value in pairs(exported_vars) do
            if value then
                table_insert(sys_conf["envs"], name .. "=" .. value)
            end
        end
    end

    -- fix up lua path
    sys_conf["extra_lua_path"] = get_lua_path(yaml_conf.apisix.extra_lua_path)
    sys_conf["extra_lua_cpath"] = get_lua_path(yaml_conf.apisix.extra_lua_cpath)

    local conf_render = template.compile(ngx_tpl)
    local ngxconf = conf_render(sys_conf)

    local ok, err = util.write_file(env.apisix_home .. "/conf/nginx.conf",
                                    ngxconf)
    if not ok then
        util.die("failed to update nginx.conf: ", err, "\n")
    end

    local cmd_html = "mkdir -p " .. env.apisix_home .. "/html"
    util.execute_cmd(cmd_html)

    local ok, err = util.write_file(env.apisix_home .. "/html/50x.html",
                                    html_page)
    if not ok then
        util.die("failed to write 50x.html: ", err, "\n")
    end
end


local function init_etcd(env, args)
    etcd.init(env, args)
end


local function start(env, ...)
    -- Because the worker process started by apisix has "nobody" permission,
    -- it cannot access the `/root` directory. Therefore, it is necessary to
    -- prohibit APISIX from running in the /root directory.
    if env.is_root_path then
        util.die("Error: It is forbidden to run APISIX in the /root directory.\n")
    end

    local cmd_logs = "mkdir -p " .. env.apisix_home .. "/logs"
    util.execute_cmd(cmd_logs)

    -- check running
    local pid_path = env.apisix_home .. "/logs/nginx.pid"
    local pid = util.read_file(pid_path)
    pid = tonumber(pid)
    if pid then
        local lsof_cmd = "lsof -p " .. pid
        local res, err = util.execute_cmd(lsof_cmd)
        if not (res and res == "") then
            if not res then
                print(err)
            else
                print("APISIX is running...")
            end

            return
        end

        print("nginx.pid exists but there's no corresponding process with pid ", pid,
              ", the file will be overwritten")
    end

    local parser = argparse()
    parser:argument("_", "Placeholder")
    parser:option("-c --config", "location of customized config.yaml")
    -- TODO: more logs for APISIX cli could be added using this feature
    parser:flag("--verbose", "show init_etcd debug information")
    local args = parser:parse()

    local customized_yaml = args["config"]
    if customized_yaml then
        profile.apisix_home = env.apisix_home .. "/"
        local local_conf_path = profile:yaml_path("config")

        local err = util.execute_cmd_with_error("mv " .. local_conf_path .. " "
                                                .. local_conf_path .. ".bak")
        if #err > 0 then
            util.die("failed to mv config to backup, error: ", err)
        end
        err = util.execute_cmd_with_error("ln " .. customized_yaml .. " " .. local_conf_path)
        if #err > 0 then
            util.execute_cmd("mv " .. local_conf_path .. ".bak " .. local_conf_path)
            util.die("failed to link customized config, error: ", err)
        end

        print("Use customized yaml: ", customized_yaml)
    end

    init(env)
    init_etcd(env, args)

    util.execute_cmd(env.openresty_args)
end


local function cleanup()
    local local_conf_path = profile:yaml_path("config")
    local bak_exist = io_open(local_conf_path .. ".bak")
    if bak_exist then
        local err = util.execute_cmd_with_error("rm " .. local_conf_path)
        if #err > 0 then
            print("failed to remove customized config, error: ", err)
        end
        err = util.execute_cmd_with_error("mv " .. local_conf_path .. ".bak " .. local_conf_path)
        if #err > 0 then
            util.die("failed to mv original config file, error: ", err)
        end
    end
end


local function quit(env)
    cleanup()

    local cmd = env.openresty_args .. [[ -s quit]]
    util.execute_cmd(cmd)
end


local function stop(env)
    cleanup()

    local cmd = env.openresty_args .. [[ -s stop]]
    util.execute_cmd(cmd)
end


local function restart(env)
    stop(env)
    start(env)
end


local function reload(env)
    -- reinit nginx.conf
    init(env)

    local test_cmd = env.openresty_args .. [[ -t -q ]]
    -- When success,
    -- On linux, os.execute returns 0,
    -- On macos, os.execute returns 3 values: true, exit, 0, and we need the first.
    local test_ret = execute((test_cmd))
    if (test_ret == 0 or test_ret == true) then
        local cmd = env.openresty_args .. [[ -s reload]]
        execute(cmd)
        return
    end

    print("test openresty failed")
end


local action = {
    help = help,
    version = version,
    init = init,
    init_etcd = etcd.init,
    start = start,
    stop = stop,
    quit = quit,
    restart = restart,
    reload = reload,
}


function _M.execute(env, arg)
    local cmd_action = arg[1]
    if not cmd_action then
        return help()
    end

    if not action[cmd_action] then
        stderr:write("invalid argument: ", cmd_action, "\n")
        return help()
    end

    action[cmd_action](env, arg[2])
end


return _M
```
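The core of `init()` is: merge `yaml_conf.apisix` and `yaml_conf.nginx_config` into one flat `sys_conf` table, apply a few derived defaults, then render the template. A minimal sketch of that flow in plain Lua, assuming a hypothetical `render` function that only understands `{* key *}` and dotted lookups such as `{* event.worker_connections *}`; it is a stand-in for illustration, not resty.template, which also supports `{% code %}` blocks and more:

```lua
-- Hypothetical stand-in for resty.template: replaces "{* a.b.c *}" with the
-- value found by walking ctx; missing values render as an empty string.
local function render(tpl, ctx)
    return (tpl:gsub("{%*%s*([%w_%.]+)%s*%*}", function(path)
        local v = ctx
        for part in path:gmatch("[^%.]+") do
            if type(v) ~= "table" then
                return ""
            end
            v = v[part]
        end
        if v == nil then
            return ""
        end
        return tostring(v)
    end))
end

-- Mirrors the merge order in ops.lua: apisix first, then nginx_config.
local function build_sys_conf(yaml_conf)
    local sys_conf = { worker_rlimit_core = "16G" }
    for k, v in pairs(yaml_conf.apisix) do
        sys_conf[k] = v
    end
    for k, v in pairs(yaml_conf.nginx_config) do
        sys_conf[k] = v
    end
    -- same rule as ops.lua: keep the fd limit above worker_connections
    local wrn = sys_conf.worker_rlimit_nofile
    local wc = sys_conf.event.worker_connections
    if not wrn or wrn <= wc then
        sys_conf.worker_rlimit_nofile = wc + 128
    end
    if tonumber(sys_conf.worker_processes) == nil then
        sys_conf.worker_processes = "auto"
    end
    return sys_conf
end

local tpl = [[
worker_processes {* worker_processes *};
worker_rlimit_nofile {* worker_rlimit_nofile *};
events {
    worker_connections {* event.worker_connections *};
}
]]

-- Sample input standing in for the parsed config.yaml.
local yaml_conf = {
    apisix = { node_listen = 9080 },
    nginx_config = {
        worker_processes = "auto",
        event = { worker_connections = 10620 },
    },
}

local ngxconf = render(tpl, build_sys_conf(yaml_conf))
print(ngxconf)
```

Because the whole file is regenerated from scratch, any change that touches nginx.conf itself still needs `apisix reload`; that is why routes, upstreams and plugins are kept in etcd instead.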

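Each type of configuration is handled by different logic, so APISIX factors the etcd handling out into apisix/core/config_etcd.lua, which is dedicated to keeping every type of configuration stored in etcd up to date. During http_init_worker, each configuration type creates its own config_etcd object; for /plugin_configs, for example: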

```lua
function _M.init_worker()
    local err
    plugin_configs, err = core.config.new("/plugin_configs", {
        automatic = true,
        item_schema = core.schema.plugin_config,
        checker = plugin_checker,
    })
    if not plugin_configs then
        error("failed to sync /plugin_configs: " .. err)
    end
end
```
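The idea of "one config object per configuration type" can be sketched as a small registry keyed by the etcd prefix, where each object holds its own items and its own checker. Everything here (`config_new`, `apply_item`, `conf_version`) is a hypothetical simplification that borrows only the option names `automatic` and `checker` from the call above; it is not the real config_etcd implementation:

```lua
-- Hypothetical registry: one config object per etcd key prefix.
local registry = {}

local function config_new(key, opts)
    if registry[key] then
        return nil, "duplicated config key: " .. key
    end
    local obj = {
        key = key,
        values = {},            -- id -> item
        conf_version = 0,       -- bumped on every accepted change
        checker = opts.checker, -- optional per-type validation
        -- opts.automatic is accepted but ignored in this sketch
    }
    registry[key] = obj
    return obj
end

-- Apply one item; the type-specific checker decides whether it is accepted.
local function apply_item(obj, id, item)
    if obj.checker then
        local ok, err = obj.checker(item)
        if not ok then
            return false, err
        end
    end
    obj.values[id] = item
    obj.conf_version = obj.conf_version + 1
    return true
end

local plugin_configs = assert(config_new("/plugin_configs", {
    automatic = true,
    checker = function(item)
        if type(item.plugins) ~= "table" then
            return false, "plugins must be a table"
        end
        return true
    end,
}))
local routes = assert(config_new("/routes", { automatic = true }))

local ok1 = apply_item(plugin_configs, "1", { plugins = { ["limit-count"] = {} } })
local ok2, err2 = apply_item(plugin_configs, "2", { plugins = "oops" })
print(ok1, ok2, err2)   -- true  false  plugins must be a table
```

Keeping objects separate per type lets each one carry its own validation and version counter, so a change under /routes never forces /plugin_configs to be reprocessed.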

In apisix/core/config_etcd.lua, when `automatic = true`, `new()` schedules the first fetch with a zero-delay timer:

```lua
ngx_timer_at(0, _automatic_fetch, obj)
```
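A timer created with `ngx.timer.at` fires only once, so a long-running fetch loop has to re-arm itself. A sketch of that self-rearming pattern, assuming `_automatic_fetch` schedules itself again while the object is still running; `timer_at`, `run_timers` and the `running` flag are hypothetical, and only the callback signature `callback(premature, ...)` follows ngx.timer.at:

```lua
local unpack = table.unpack or unpack

-- Hypothetical stand-in for ngx.timer.at: queue the callback, run it later.
local queue = {}
local function timer_at(delay, fn, ...)
    -- delay is ignored in this sketch; callbacks run in FIFO order
    queue[#queue + 1] = { fn = fn, args = { ... } }
    return true
end

-- Run at most max_runs queued timers; returns how many actually ran.
local function run_timers(max_runs)
    local runs = 0
    while #queue > 0 and runs < max_runs do
        local t = table.remove(queue, 1)
        t.fn(false, unpack(t.args))   -- first argument is `premature`
        runs = runs + 1
    end
    return runs
end

local function automatic_fetch(premature, obj)
    if premature then
        return                        -- worker is exiting, stop quietly
    end
    obj.fetches = obj.fetches + 1     -- stands in for one round of sync_data
    if obj.running then
        timer_at(0, automatic_fetch, obj)  -- re-arm so watching continues
    end
end

local obj = { running = true, fetches = 0 }
timer_at(0, automatic_fetch, obj)
local runs = run_timers(5)
print(runs, obj.fetches)
```

Running each fetch in a fresh timer also keeps the work inside a light thread that does not block request handling in the same worker.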

From there, loading proceeds step by step down the call chain.

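While each Nginx worker process starts, APISIX registers _automatic_fetch as a timer through ngx.timer.at. When _automatic_fetch runs, it calls sync_data, which uses etcd's watch mechanism to receive configuration-change notifications, so every Nginx node and every worker process stays on the latest configuration. This design has another clear advantage: configuration from etcd is written directly into each worker process, so requests are served with the new configuration immediately and no configuration has to be synchronized between processes. That is simpler than running a separate agent process.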

```lua
local function sync_data(self)
    ...
    local dir_res, err = waitdir(self.etcd_cli, self.key, self.prev_index + 1, self.timeout)
```
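The `self.prev_index + 1` argument is what makes the watch incremental: each round resumes right after the last revision already applied, so no change is replayed or skipped. A sketch with an in-memory event list standing in for etcd; `event_log`, the simplified one-argument `waitdir` and the event fields are hypothetical and do not reflect lua-resty-etcd's API:

```lua
-- Hypothetical in-memory event log standing in for etcd's watch stream.
local event_log = {
    { rev = 5, key = "/routes/1", value = { uri = "/a" } },
    { rev = 6, key = "/routes/2", value = { uri = "/b" } },
    { rev = 7, key = "/routes/1", value = nil },   -- delete of /routes/1
}

-- Return every event whose revision is >= start_rev, like a watch from start_rev.
local function waitdir(start_rev)
    local res = {}
    for _, ev in ipairs(event_log) do
        if ev.rev >= start_rev then
            res[#res + 1] = ev
        end
    end
    return res
end

local function sync_data(self)
    local events = waitdir(self.prev_index + 1)
    for _, ev in ipairs(events) do
        self.values[ev.key] = ev.value   -- a nil value removes the key
        if ev.rev > self.prev_index then
            self.prev_index = ev.rev     -- remember the newest applied revision
        end
    end
    return #events
end

local obj = { prev_index = 4, values = {} }
local n1 = sync_data(obj)   -- applies revisions 5, 6 and 7
local n2 = sync_data(obj)   -- nothing newer than 7
```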

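Configuration can also be changed by writing to etcd directly, but that is risky: the APISIX Admin API validates each request before writing it to etcd, whereas a direct write skips that step.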
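The difference between going through the Admin API and writing etcd directly is the validation step in front of the write. A minimal sketch of "validate, then store", where `check_route`, `admin_put`, the HTTP-like status codes and the rules themselves are hypothetical and far simpler than APISIX's real route schema; a plain table stands in for etcd:

```lua
-- Hypothetical validation rules, much simpler than APISIX's real route schema.
local function check_route(route)
    if type(route) ~= "table" then
        return false, "route must be an object"
    end
    if type(route.uri) ~= "string" or route.uri:sub(1, 1) ~= "/" then
        return false, "uri must be a string starting with '/'"
    end
    local up = route.upstream
    if type(up) ~= "table" or type(up.nodes) ~= "table" or next(up.nodes) == nil then
        return false, "upstream.nodes must be a non-empty table"
    end
    return true
end

-- Admin-API-style write: validate first, only then touch the store.
local function admin_put(store, id, route)
    local ok, err = check_route(route)
    if not ok then
        return 400, err
    end
    store["/routes/" .. id] = route
    return 200
end

local store = {}   -- stands in for etcd
local s1 = admin_put(store, "1", {
    uri = "/hello",
    upstream = { nodes = { ["127.0.0.1:1980"] = 1 } },
})
local s2, e2 = admin_put(store, "2", { uri = "hello", upstream = { nodes = {} } })
```

A direct write to the store would skip `check_route` entirely, which is exactly the risk described above.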

https://www.taohui.org.cn/2021/08/10/%E5%BC%80%E6%BA%90%E7%BD%91%E5%85%B3APISIX%E6%9E%B6%E6%9E%84%E5%88%86%E6%9E%90/

浙公网安备 33010602011771号
