Kubernetes Deployment

Setting up a cluster environment with kubeadm

Architecture

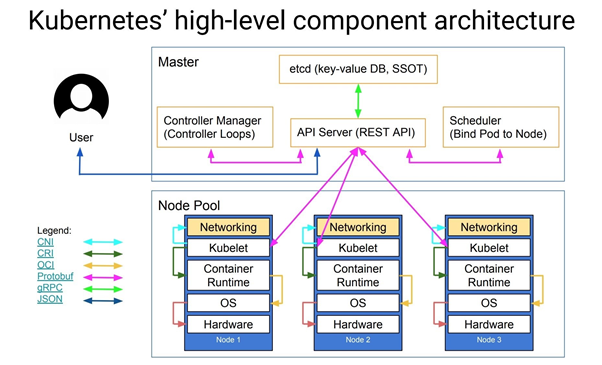

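The earlier lessons covered the basic concepts of k8s and several of its main components. Once those concepts are understood we can start using k8s for real. The earlier demos, however, all ran on katacoda, which gives us only about 15 minutes per session, so the better option is to build a k8s environment by hand. Before we do, let's look at a more detailed k8s architecture diagram.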

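- Core layer: the core functionality of Kubernetes. It exposes APIs outward for building higher-level applications and provides a plugin-based application execution environment inward

- Application layer: deployment (stateless applications, stateful applications, batch jobs, clustered applications, etc.) and routing (service discovery, DNS resolution, etc.)

- Management layer: system metrics (for infrastructure, containers, and the network), automation (such as autoscaling and dynamic provisioning), and policy management (RBAC, Quota, PSP, NetworkPolicy, etc.)

- Interface layer: the kubectl command-line tool, client SDKs, and cluster federation

- Ecosystem: the large ecosystem for container cluster management and scheduling that sits above the interface layer. It falls into two categories:

  - Outside Kubernetes: logging, monitoring, configuration management, CI, CD, Workflow, etc.

  - Inside Kubernetes: CRI, CNI, CSI, image registries, Cloud Provider, configuration and management of the cluster itself, etc.

With a deeper understanding of the k8s cluster architecture, we can now formally install our k8s cluster environment. Here we use the kubeadm tool to build the cluster.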

Installing Kubernetes with kubeadm

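Requirements:

- One or more servers running CentOS 7.6

- All machines in the cluster can reach each other over the network; the firewall must be turned off

- Internet access, which is needed to pull images

- Swap disabled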
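Two of these requirements can be checked from a shell before going any further. The sketch below is only an illustration: the function names swap_is_off and os_release_field are made up for this example, and it assumes the usual /proc/swaps and /etc/os-release layouts.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking the requirements above; the names are illustrative.

# swap_is_off [FILE]: succeeds when FILE (default /proc/swaps) lists no active swap area.
swap_is_off() {
    local swaps_file="${1:-/proc/swaps}"
    local active
    # The first line of /proc/swaps is a header; every further non-empty line
    # describes one active swap area. "|| true" keeps a zero count from
    # aborting scripts that run with "set -e".
    active="$(tail -n +2 "$swaps_file" | grep -c . || true)"
    [ "$active" -eq 0 ]
}

# os_release_field KEY [FILE]: prints the value of KEY from FILE (default /etc/os-release).
os_release_field() {
    local key="$1" file="${2:-/etc/os-release}"
    # Entries look like KEY="value"; drop the key and any double quotes.
    sed -n "s/^${key}=//p" "$file" | tr -d '"'
}
```

For example, swap_is_off || echo "swap is still enabled" reports a node that still needs swapoff -a, and os_release_field VERSION_ID prints the major release of the installed system.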

| Role | IP |
|---|---|
| k8s-master | 10.10.10.128 |
| k8s-node1 | 10.10.10.129 |
| k8s-node2 | 10.10.10.130 |
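Each row of this table becomes one line in /etc/hosts on every node. As a minimal sketch, assuming the simple "IP name" line format, the hypothetical helper add_host_entry below appends an entry only when it is not already present, so it can be re-run safely.

```bash
#!/usr/bin/env bash
# add_host_entry IP NAME [FILE]: append "IP NAME" to FILE (default /etc/hosts)
# unless a line with exactly that content already exists.
# The function name is made up for this example.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # -x matches the whole line, -F treats the pattern as a fixed string,
    # so the dots in the IP address are not interpreted as wildcards.
    if ! grep -qxF "${ip} ${name}" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

Calling add_host_entry 10.10.10.128 k8s-master (and likewise for k8s-node1 and k8s-node2) as root on each machine gives the same result as editing the file by hand.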

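Setting up Kubernetes (preparing the environment)

Every node needs the following preparation so that nothing unexpected comes up during setup.

Set the hostname (run the matching command on each machine):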

[root@localhost ~]# hostnamectl set-hostname k8s-master

[root@localhost ~]# hostnamectl set-hostname k8s-node1

[root@localhost ~]# hostnamectl set-hostname k8s-node2

Add hostname mappings:

$ vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.10.10.128 k8s-master

10.10.10.129 k8s-node1

10.10.10.130 k8s-node2

Disable the firewall:

$ systemctl stop firewalld

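$ systemctl disable firewalld

Disable SELinux:

$ sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

$ setenforce 0    # temporary, lasts until reboot

Disable swap:

$ swapoff -a

Configure route forwarding:

Pass bridged IPv4 traffic to the iptables chains: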

$ cat > /etc/sysctl.d/k8s.conf << EOF

> net.bridge.bridge-nf-call-ip6tables = 1

> net.ipv4.ip_forward = 1

> net.bridge.bridge-nf-call-iptables = 1

> EOF

$ modprobe br_netfilter    # the net.bridge.* keys only exist once this module is loaded

$ sysctl --system

Load the IPVS kernel modules for kube-proxy:

$ cat > /etc/sysconfig/modules/ipvs.modules <<EOF

> #!/bin/bash

> modprobe -- ip_vs

> modprobe -- ip_vs_rr

> modprobe -- ip_vs_wrr

> modprobe -- ip_vs_sh

> modprobe -- nf_conntrack

> EOF

$ chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
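The kernel settings and modules configured above can be read back to confirm they took effect. This is a rough sketch, not part of the original procedure: missing_modules and sysctl_value are hypothetical helper names, and the second one assumes sysctl keys map to files under /proc/sys by replacing dots with slashes.

```bash
#!/usr/bin/env bash
# missing_modules MODULE...: reads lsmod-style text on stdin and prints each
# named module that does not appear in the first column.
missing_modules() {
    local loaded mod
    # Keep only the module-name column, skipping the "Module Size Used by" header.
    loaded="$(awk 'NR > 1 { print $1 }')"
    for mod in "$@"; do
        printf '%s\n' "$loaded" | grep -qxF "$mod" || printf '%s\n' "$mod"
    done
}

# sysctl_value KEY [ROOT]: prints the current value of a sysctl key by reading
# the matching file under ROOT (default /proc/sys),
# e.g. net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward.
sysctl_value() {
    local key="$1" root="${2:-/proc/sys}"
    cat "${root}/$(printf '%s' "$key" | tr . /)"
}
```

With everything in place, lsmod | missing_modules br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh prints nothing, and sysctl_value net.bridge.bridge-nf-call-iptables prints 1.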

Configure time synchronization:

$ yum install -y ntpdate

$ ntpdate time.windows.com
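One of the preparation steps above, swapoff -a, only lasts until the next reboot. To keep swap off permanently, the swap entry in /etc/fstab has to be disabled as well. The following is a minimal sketch under the assumption of a conventional fstab in which the swap entry contains swap as a whitespace-separated field; comment_out_swap is a name invented for this example.

```bash
#!/usr/bin/env bash
# comment_out_swap [FILE]: comment out every active swap entry in FILE
# (default /etc/fstab). The original file is kept as FILE.bak.
# The function name is made up for this example.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    # Select lines that are not already comments and that contain "swap" as a
    # whitespace-delimited field, then prefix them with '#'.
    sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$fstab"
}
```

Because lines that already start with # are skipped, running comment_out_swap a second time leaves the file unchanged.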

Install Docker/kubeadm/kubelet (all nodes)

In the Kubernetes version used here (v1.20), the default container runtime is Docker, so install Docker first.

Install Docker:

$ yum install -y yum-utils device-mapper-persistent-data lvm2

$ yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

$ yum makecache fast

$ yum -y install docker-ce

Configure a registry mirror:

$ mkdir -p /etc/docker

$ cat > /etc/docker/daemon.json << EOF

> {

> "registry-mirrors": ["https://ably8t50.mirror.aliyuncs.com"],

> "exec-opts":["native.cgroupdriver=systemd"]

> }

> EOF
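The exec-opts entry sets Docker's cgroup driver to systemd, and the kubelet has to use the same driver as the container runtime. Before restarting Docker it can be worth reading the setting back from the file. This is only a sketch: docker_cgroup_driver is a hypothetical helper, and it assumes the driver is configured through a native.cgroupdriver=... option as in the file above.

```bash
#!/usr/bin/env bash
# docker_cgroup_driver [FILE]: prints the cgroup driver configured through
# "native.cgroupdriver=..." in FILE (default /etc/docker/daemon.json).
# Prints nothing when the option is absent. Hypothetical helper name.
docker_cgroup_driver() {
    local file="${1:-/etc/docker/daemon.json}"
    # Capture the lowercase word that follows "native.cgroupdriver=".
    sed -n 's/.*native\.cgroupdriver=\([a-z]*\).*/\1/p' "$file"
}
```

Once Docker has been restarted, docker info --format '{{.CgroupDriver}}' shows the driver the daemon is actually using, which should agree with the value printed here.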

$ systemctl daemon-reload

$ systemctl enable docker && systemctl restart docker

Configure the Aliyun YUM repository:

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

> enabled=1

> gpgcheck=1

> repo_gpgcheck=1

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

Install kubelet, kubeadm and kubectl:

$ yum install -y kubelet-1.20.0 kubeadm-1.20.0 kubectl-1.20.0

$ systemctl enable kubelet && systemctl start kubelet

Deploy the master node with kubeadm:

$ kubeadm init \

> --apiserver-advertise-address=10.10.10.128 \

> --image-repository registry.aliyuncs.com/google_containers \

> --kubernetes-version v1.20.0 \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16 \

> --ignore-preflight-errors=all

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

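- --apiserver-advertise-address: the address the API server advertises to the rest of the cluster

- --image-repository: the default image registry k8s.gcr.io is not reachable from mainland China, so the Aliyun registry is used here instead

- --kubernetes-version: the k8s version to install

- --service-cidr: the cluster's internal virtual network, from which Services, the unified access entry point for Pods, get their IPs

- --pod-network-cidr: the Pod network; it must match the setting in the CNI network plugin's yaml deployed below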
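The same settings can also be kept in a configuration file and passed to kubeadm init with --config. The sketch below writes such a file. It assumes the kubeadm.k8s.io/v1beta2 configuration API that kubeadm v1.20 accepts; the function name and the output file name are hypothetical.

```bash
#!/usr/bin/env bash
# write_kubeadm_config [FILE]: writes a kubeadm configuration that mirrors the
# flags above to FILE (default ./kubeadm-config.yaml).
# Function name and default path are made up for this example.
write_kubeadm_config() {
    local out="${1:-kubeadm-config.yaml}"
    cat > "$out" << 'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.10.10.128      # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.0            # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  serviceSubnet: 10.96.0.0/12         # --service-cidr
  podSubnet: 10.244.0.0/16            # --pod-network-cidr
EOF
}
```

After write_kubeadm_config, the cluster would be initialized with kubeadm init --config kubeadm-config.yaml. The file deliberately has no counterpart to --ignore-preflight-errors=all, so preflight failures stay visible.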

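Deploy the container network (CNI):

Install the Pod network plugin

Reason: coredns stays stuck in Pending until a Pod network plugin is installed, so one has to be installed manually. The plugin chosen here is calico.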

[root@k8s-node2 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-b7nml 0/1 Pending 0 16m

coredns-7f89b7bc75-mrn6x 0/1 Pending 0 16m

etcd-k8s-node2 1/1 Running 0 16m

kube-apiserver-k8s-node2 1/1 Running 0 16m

kube-controller-manager-k8s-node2 1/1 Running 0 16m

kube-proxy-78zrr 1/1 Running 0 16m

kube-scheduler-k8s-node2 1/1 Running 0 16m
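In a listing like the one above, the two coredns pods are the ones to watch. A small filter can pick out the pods that are not ready yet. This is a simplified sketch with a made-up function name; it treats any pod that is not Running with all containers ready as not ready, so a pod in Completed state would be listed as well.

```bash
#!/usr/bin/env bash
# not_ready_pods: reads "kubectl get pods" output on stdin and prints the name
# of every pod whose READY count is incomplete or whose STATUS is not Running.
# Hypothetical helper name.
not_ready_pods() {
    awk 'NR > 1 {
        split($2, ready, "/")      # READY column looks like "0/1"
        if (ready[1] != ready[2] || $3 != "Running") print $1
    }'
}
```

Typical use is kubectl get pods -n kube-system | not_ready_pods, which prints nothing once every pod is up.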

$ curl -O https://docs.projectcalico.org/manifests/calico.yaml

$ kubectl apply -f calico.yaml
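The value given to --pod-network-cidr has to agree with the address pool Calico uses. This sketch assumes the downloaded calico.yaml sets the pool through a CALICO_IPV4POOL_CIDR environment entry that is commented out by default; check your own copy, since that layout is an assumption. The hypothetical helper calico_pool_cidr prints the value when the entry is active and nothing when it is commented out.

```bash
#!/usr/bin/env bash
# calico_pool_cidr [FILE]: prints the value of an uncommented
# CALICO_IPV4POOL_CIDR entry in FILE (default ./calico.yaml).
# Prints nothing when the entry is missing or commented out.
# Hypothetical helper name.
calico_pool_cidr() {
    local manifest="${1:-calico.yaml}"
    awk '
        # An active (uncommented) entry naming the variable: remember it.
        /^[[:space:]]*- name: CALICO_IPV4POOL_CIDR/ { found = 1; next }
        # The value is expected on the line directly after the name.
        found && /^[[:space:]]*value:/ {
            gsub(/"/, "", $2)      # strip the quotes around the CIDR
            print $2
        }
        { found = 0 }
    ' "$manifest"
}
```

If the function prints a CIDR, it should equal the 10.244.0.0/16 passed to kubeadm init. If it prints nothing, the manifest is relying on its default behaviour, which is worth checking against the Calico documentation for the version in use.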

[root@k8s-node2 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5f6cfd688c-g9mlv 0/1 Pending 0 7s

calico-node-4vbtr 0/1 Init:0/3 0 7s

coredns-7f89b7bc75-b7nml 0/1 Pending 0 12h

coredns-7f89b7bc75-mrn6x 0/1 Pending 0 12h

etcd-k8s-node2 1/1 Running 0 12h

kube-apiserver-k8s-node2 1/1 Running 0 12h

kube-controller-manager-k8s-node2 1/1 Running 0 12h

kube-proxy-78zrr 1/1 Running 0 12h

kube-scheduler-k8s-node2 1/1 Running 0 12h

[root@k8s-node2 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-5f6cfd688c-g9mlv 1/1 Running 0 2m7s

calico-node-4vbtr 1/1 Running 0 2m7s

coredns-7f89b7bc75-b7nml 1/1 Running 0 12h

coredns-7f89b7bc75-mrn6x 1/1 Running 0 12h

etcd-k8s-node2 1/1 Running 0 12h

kube-apiserver-k8s-node2 1/1 Running 0 12h

kube-controller-manager-k8s-node2 1/1 Running 0 12h

kube-proxy-78zrr 1/1 Running 0 12h

kube-scheduler-k8s-node2 1/1 Running 0 12h

Join the worker nodes with kubeadm:

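On each worker node, run the join command that kubeadm init printed on the master. The address, token and hash below come from the author's run and will differ in yours; kubeadm token create --print-join-command on the master prints a fresh command if the token has expired:

$ kubeadm join 10.10.10.130:6443 --token 9rkpbe.648h6r6rkp24f4xg \

--discovery-token-ca-cert-hash sha256:add1c33a33d53a261fb58516d207a9f13bc41ec5085960e36e4431bd70479e65

Deploy the Dashboard: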

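Download the manifest

$ wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

Configure NodePort:

In recommended.yaml, edit the kubernetes-dashboard Service so that it can be reached from outside the cluster: set type to NodePort and the external nodePort to 30001.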

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort

[root@k8s-node2 ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard unchanged

serviceaccount/kubernetes-dashboard unchanged

service/kubernetes-dashboard unchanged

secret/kubernetes-dashboard-certs unchanged

secret/kubernetes-dashboard-csrf unchanged

secret/kubernetes-dashboard-key-holder unchanged

configmap/kubernetes-dashboard-settings unchanged

role.rbac.authorization.k8s.io/kubernetes-dashboard unchanged

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard unchanged

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard unchanged

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard unchanged

deployment.apps/kubernetes-dashboard unchanged

service/dashboard-metrics-scraper unchanged

deployment.apps/dashboard-metrics-scraper unchanged

Bind the default cluster-admin role:

# Create the service account

$ kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

# Grant it the cluster-admin role

$ kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

# Get the service account's token

$ kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-52gsz

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 18c45018-7eaa-4381-a0c8-5266cc46b8c9

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkREZGU2aExFYU83aDdxVm8zM09rdGNVVTVydkVIUmxIdDhMejZBY2F0NkUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tNTJnc3oiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMThjNDUwMTgtN2VhYS00MzgxLWEwYzgtNTI2NmNjNDZiOGM5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.JAb0BvGP268aBDAPx3LaipuK5s9e4-RgYW3y35t29xY-4DUaUKrkbOM2h4unU1QPrXPMS3M4uODvQi3jv5ckg-KAzCebCGCzHrPCKOuZT_HwZidvdBf5QD3oWYAzaF8CoV5Yvwu2D2e0bl4S5cC6TOmW5BwO0CgPZor_iMXzkI0UUR8AADFbHa0V4xMHMF2MWihclkGV5UsfK8mt9uHpRxcQD4RSy_uFY1SYkGklNeMHrysoFBNAv8lTGq-8LuZnsiRrjjwE9YucMgVe2bSGqlg6gtnz_arCwW7fQwx1UkvAl_qdp452GAD3EGkPVqzvuH10R-Fpk84hgJJpk_AMrg

ca.crt: 1066 bytes
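The token shown above is a JWT, so its middle part can be decoded to confirm which service account it belongs to before pasting it into the Dashboard login page. A minimal sketch, assuming GNU coreutils base64 as shipped with CentOS; jwt_payload is a name invented for this example.

```bash
#!/usr/bin/env bash
# jwt_payload TOKEN: prints the decoded JSON payload (the second dot-separated
# part) of a JWT. Assumes GNU coreutils base64. Hypothetical helper name.
jwt_payload() {
    local payload
    # JWTs use the URL-safe base64 alphabet: map '_' back to '/' and '-' back to '+'.
    payload="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
    # The URL-safe form also drops '=' padding; restore it so base64 accepts the input.
    while [ $(( ${#payload} % 4 )) -ne 0 ]; do
        payload="${payload}="
    done
    printf '%s' "$payload" | base64 -d
}
```

Passing the token from the output above to jwt_payload prints a JSON object; for a correctly created account it should name the dashboard-admin service account in the kube-system namespace.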

# Log in at https://10.10.10.128:30001 with this token to access the cluster

