Recording and counting daily per-IP access counts with nginx

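This article explains how, with nginx acting as a proxy, to count how many times each IP address appears in a day's log and write the results to a table (CSV file).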

1. Modifying the nginx configuration

In /etc/nginx/nginx.conf, set the access and error log paths:

```nginx
http {
    access_log xxxxxxx/access.log;
    error_log xxxxxxx/error.log;
}
```
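Because the `access_log` directive above names no format, nginx writes its predefined `combined` format, in which each line begins with `$remote_addr - $remote_user [$time_local]`. The Python script in step 3 depends on that prefix. A minimal sketch of pulling the IP and timestamp out of such a line, assuming the default `combined` format; the sample line and the `parse_line` helper are hypothetical illustrations, not part of the original setup:

```python
import datetime
import re

# Prefix of nginx's predefined "combined" format:
# $remote_addr - $remote_user [$time_local] "$request" ...
LINE_RE = re.compile(r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\]')


def parse_line(line):
    """Return (ip, timezone-aware datetime) for a combined-format line, or None if it does not match."""
    m = LINE_RE.match(line)
    if m is None:
        return None
    # $time_local looks like 10/Oct/2023:13:55:36 +0800; %z keeps the UTC offset
    t = datetime.datetime.strptime(m.group("time"), "%d/%b/%Y:%H:%M:%S %z")
    return m.group("ip"), t


# Hypothetical sample line in combined format
sample = ('192.0.2.10 - - [10/Oct/2023:13:55:36 +0800] '
          '"GET / HTTP/1.1" 200 612 "-" "curl/8.0"')
print(parse_line(sample))
```

Matching `\S+` for the IP (rather than "everything before `- -`") keeps trailing spaces out of the address and still works when `$remote_user` is filled in.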

2. Shell script for scheduled execution

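At 00:00 every day this script runs IPStatistics.py to record the previous day's per-IP access counts, then backs up the previous day's log and empties the live log. Old backups are removed once they reach the retention limit set by maximum_retention_date (7 days in the script below).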
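cut_nginx_log.sh names each backup after the previous day (`date -d yesterday +%Y-%m-%d`) and, on every run, deletes the single backup dated seven days earlier (`date -d "7 day ago"`). With one run per day, the backups dated one to six days back remain after each run. A minimal Python sketch of that date arithmetic, for illustration only; `backup_names` is a hypothetical helper, and `log_name="access"` mirrors the script's variable:

```python
import datetime


def backup_names(run_date, log_name="access", keep_days=7):
    """Return (file to create, file to delete) for a run on run_date,
    mirroring backup_end_time and maximum_retention_date in cut_nginx_log.sh."""
    yesterday = run_date - datetime.timedelta(days=1)
    expired = run_date - datetime.timedelta(days=keep_days)
    create = "%s_%s.log" % (log_name, yesterday.strftime("%Y-%m-%d"))
    delete = "%s_%s.log" % (log_name, expired.strftime("%Y-%m-%d"))
    return create, delete


print(backup_names(datetime.date(2024, 3, 1)))
# → ('access_2024-02-29.log', 'access_2024-02-23.log')
```

Because only the file dated exactly seven days back is removed, a day on which the job does not run leaves one old backup behind permanently.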

The contents of cut_nginx_log.sh are shown below; the variables at the top of the script can be adjusted as needed:

```shell
#!/bin/sh
# function: cut nginx log files

# Original log file path configured in nginx.conf
log_files_from="xxxxxxx/nginxLog/"
# Path where the backed-up log files are saved
log_files_to="xxxxxxx/nginxLog/historyLog/"
# Path to the IPStatistics.py script
python_file="xxxxxxxxx/nginxLog/script/IPStatistics.py"
# File name of the log
log_name="access"
# Retention: on each run, the backup dated 7 days ago is deleted
maximum_retention_date=`date -d "7 day ago" "+%Y-%m-%d"`
backup_end_time=`date -d yesterday +%Y-%m-%d`

file=${log_files_from}${log_name}.log
if [ -f "$file" ]; then
    # Run the Python script to generate the IP statistics CSV and back up the log;
    # empty the live log only if both steps succeeded, so no data is lost on failure
    if python3 "${python_file}" && cp "${file}" "${log_files_to}${log_name}_${backup_end_time}.log"; then
        :>"${file}"
    fi
    # Delete the backup from n days ago
    rm -f "${log_files_to}${log_name}_${maximum_retention_date}.log"
    # Restart nginx
    systemctl restart nginx
fi
```
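The script rotates the log by copying `access.log` and then emptying it in place with `:>`, rather than moving it, so the file nginx has open stays at the same path. A minimal Python sketch of that copy-then-truncate step, assuming plain local files; `rotate_copy_truncate` is a hypothetical helper and does not interact with nginx:

```python
import os
import shutil
import tempfile


def rotate_copy_truncate(log_file, backup_dir, backup_name):
    """Copy log_file to backup_dir/backup_name, then empty log_file in place.

    Mirrors `cp access.log historyLog/access_<date>.log` followed by `:>access.log`.
    Returns the path of the backup copy.
    """
    backup_path = os.path.join(backup_dir, backup_name)
    shutil.copyfile(log_file, backup_path)
    # truncate(0) empties the existing file instead of replacing it,
    # so the same file stays in place at the same path
    with open(log_file, "r+") as f:
        f.truncate(0)
    return backup_path


if __name__ == "__main__":
    # Demo in a throwaway directory; the file names here are illustrative only
    with tempfile.TemporaryDirectory() as d:
        log = os.path.join(d, "access.log")
        with open(log, "w") as f:
            f.write('192.0.2.10 - - [28/Feb/2024:09:00:00 +0000] "GET / HTTP/1.1" 200 612\n')
        backup = rotate_copy_truncate(log, d, "access_2024-02-28.log")
        print(os.path.getsize(log), os.path.getsize(backup) > 0)  # → 0 True
```

As with the shell version, any line written between the copy and the truncation is lost; for a job that runs once at midnight that window is small.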

To run the script on a schedule:

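```shell
# 1. Make cut_nginx_log.sh executable
chmod +x xxxxxx/cut_nginx_log.sh
# 2. Add a scheduled task with crontab
# (1) Open the crontab editor
crontab -e
# (2) Add the task; here it runs once a day at 00:00
00 00 * * * /bin/sh xxxxxx/cut_nginx_log.sh
# (3) List the scheduled tasks
crontab -l
```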
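In the crontab line above, the five fields are minute, hour, day of month, month and day of week, so `00 00 * * *` fires once a day at 00:00 in the server's local time. A minimal Python sketch of when that particular schedule fires next and which date the run records; `next_run` and `stats_date` are hypothetical helpers that handle only this one schedule, not general cron syntax:

```python
import datetime


def next_run(now):
    """First firing of the cron schedule "00 00 * * *" strictly after `now` (naive local time)."""
    tomorrow = now.date() + datetime.timedelta(days=1)
    return datetime.datetime.combine(tomorrow, datetime.time(0, 0))


def stats_date(run_time):
    """Date IPStatistics.py writes to the CSV for a run at run_time: the previous day."""
    return run_time.date() - datetime.timedelta(days=1)


run = next_run(datetime.datetime(2024, 2, 28, 15, 30))
print(run, stats_date(run))  # → 2024-02-29 00:00:00 2024-02-28
```

Running at midnight is what makes "yesterday" in the Python script line up with the day the log actually covers.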

3. Python script that counts per-IP accesses for the day

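The script (IPStatistics.py, called by cut_nginx_log.sh) processes the records in access.log and writes the per-IP statistics to a CSV file: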

```python
#!/usr/bin/env python3
# -*- coding:utf-8 -*-
import datetime
import os.path
import re

# Location of the nginx access.log file
log_Path = r"xxxxxxx/access.log"
# CSV file that stores the per-day IP access counts obtained from access.log
statistical_ip_result_file = r"xxxxxxx/statistical_ip_result.csv"
# Accesses from one IP within this many hours count as one visit (e.g. starting from an IP's
# first access of the day, any further access within one hour still counts as one visit)
time_interval = 1
# Maximum number of days a record is kept in the CSV file
maximum_retention_time = 180
# Regular expression that parses an access.log line into (ip, time); it matches the prefix of
# nginx's default "combined" format: $remote_addr - $remote_user [$time_local]
obj = re.compile(r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\]')
# Parsed log entries: {ip: [time1, time2, time3, ...]}
ip_dict = {}
# Number of visits per IP for the day
ip_count = {}


class Access(object):
    def __init__(self, log_path):
        self.log_path = log_path

    def analyze_log(self):
        """Record the IP address and time of every request in the log."""
        with open(self.log_path, encoding='utf-8', errors='replace') as f:
            for log_data in f:
                result = obj.match(log_data)
                if result is None:
                    # Skip an unparsable line instead of aborting the whole file
                    print("skip:", log_data.strip())
                    continue
                ip = result.group("ip")
                # $time_local, e.g. 28/Feb/2024:09:00:00 +0000; %z accepts any UTC offset
                t = datetime.datetime.strptime(result.group("time"), "%d/%b/%Y:%H:%M:%S %z")
                ip_dict.setdefault(ip, []).append(t)

    def statistical_ip(self):
        """Count visits per IP: accesses within time_interval hours of a visit's first access count once."""
        for ip, time_list in ip_dict.items():
            start_time = time_list[0]
            ip_count[ip] = 1
            for time in time_list:
                # total_seconds() measures the whole gap, including any days
                if (time - start_time).total_seconds() > 60 * 60 * time_interval:
                    ip_count[ip] += 1
                    start_time = time

    def delete_expired_data(self):
        """Delete records older than maximum_retention_time days."""
        if not os.path.exists(statistical_ip_result_file):
            return
        current_time = datetime.datetime.now()
        with open(statistical_ip_result_file, 'r', encoding='utf-8') as f:
            new_data = [f.readline()]  # keep the header row
            for line in f:
                if not line.strip():
                    continue
                record_time = datetime.datetime.strptime(line.split(',')[3].strip(), "%Y-%m-%d")
                if (current_time - record_time).days <= maximum_retention_time:
                    new_data.append(line)
        with open(statistical_ip_result_file, 'w', encoding='utf-8') as f:
            f.writelines(new_data)

    def write_file(self):
        """Append yesterday's per-IP visit counts to the CSV file."""
        if len(ip_count) == 0:
            print("ip count=0 return")
            return
        file_exists = os.path.exists(statistical_ip_result_file)
        number = 1
        if file_exists:
            with open(statistical_ip_result_file, 'r', encoding='utf-8') as f:
                lines = f.readlines()
            # Continue numbering after the last data row; the file may hold only the header
            if len(lines) > 1:
                number = int(lines[-1].split(',')[0]) + 1
        with open(statistical_ip_result_file, 'a', encoding='utf-8') as f:
            if not file_exists:
                f.write("number,ip,count,date\n")
            yesterday = (datetime.date.today() - datetime.timedelta(days=1)).strftime("%Y-%m-%d")
            for ip, count in ip_count.items():
                f.write(str(number) + "," + ip + "," + str(count) + "," + yesterday + "\n")
                number += 1

    def ip(self):
        self.analyze_log()
        self.statistical_ip()
        self.delete_expired_data()
        self.write_file()


if __name__ == '__main__':
    IP = Access(log_Path)
    IP.ip()
```
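The counting rule in `statistical_ip` treats an IP's accesses as one visit until more than `time_interval` hours have passed since the first access of the current visit; the next access after that starts a new visit. A standalone sketch of that rule; `count_visits` is a hypothetical helper, not part of the original script, and unlike the script it sorts the timestamps first as a precaution:

```python
import datetime


def count_visits(times, interval_hours=1):
    """Count visits for one IP: an access more than interval_hours after the
    start of the current visit begins a new visit (same rule as statistical_ip)."""
    if not times:
        return 0
    times = sorted(times)
    visits = 1
    start = times[0]
    for t in times[1:]:
        if (t - start).total_seconds() > 3600 * interval_hours:
            visits += 1
            start = t
    return visits


base = datetime.datetime(2024, 2, 28, 9, 0)
# Accesses at 09:00, 09:30, 09:59 | 10:01, 10:30 | 14:00
ts = [base + datetime.timedelta(minutes=m) for m in (0, 30, 59, 61, 90, 300)]
print(count_visits(ts))  # → 3
```

Note that the window is anchored at the first access of each visit, not sliding: 10:30 belongs to the visit that started at 10:01, while 10:01 itself opened a new visit because it came 61 minutes after 09:00.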

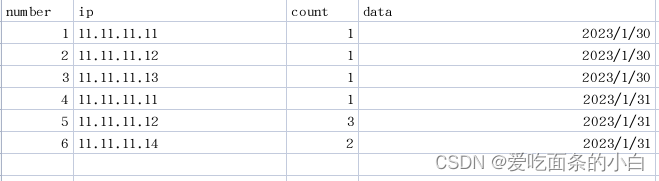

最后生成的csv表格:
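Each CSV row holds a running number, the IP, its visit count and the date the count belongs to. A minimal sketch of reading that file back with Python's standard `csv` module and totalling visits per IP over all retained days; `total_visits` is a hypothetical helper, the sample rows are made up, and columns are read by position so it does not depend on the header text:

```python
import csv
import io


def total_visits(csv_file):
    """Sum the count column per IP over every row of a statistical_ip_result.csv-style file."""
    totals = {}
    reader = csv.reader(csv_file)
    next(reader, None)  # skip the header row
    for row in reader:
        if len(row) < 4:
            continue  # ignore blank or malformed rows
        ip, count = row[1], int(row[2])
        totals[ip] = totals.get(ip, 0) + count
    return totals


# In-memory sample; for the real file use
# open(statistical_ip_result_file, newline="", encoding="utf-8")
sample = io.StringIO(
    "number,ip,count,date\n"
    "1,192.0.2.10,3,2024-02-27\n"
    "2,192.0.2.11,1,2024-02-27\n"
    "3,192.0.2.10,2,2024-02-28\n"
)
print(total_visits(sample))  # → {'192.0.2.10': 5, '192.0.2.11': 1}
```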

————————————————

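As a person: humble, passionate, widely learned; inquire closely, think carefully, discern clearly, practice earnestly

Learning: knowledge gained from books always feels shallow; to truly understand something, you must do it yourself

Work: a craftsman who wants to do his work well must first sharpen his tools.

Attitude: the road is hard and long, but keep walking and you will arrive; keep going without stopping, and the future holds promise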

.....................................................................

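------- The peach tree is young and fair; brilliant are its blossoms. This bride goes to her new home; she will order well her chamber and house. ---------------

------- The peach tree is young and fair; abundant is its fruit. This bride goes to her new home; she will order well her house and chamber. ---------------

------- The peach tree is young and fair; luxuriant are its leaves. This bride goes to her new home; she will order well her household. ---------------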

=====================================================================

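* Some screenshots and content in this blog post come from books and training courses I studied and from other blogs online; they are for learning and discussion only, not for commercial use.

* If anything here infringes your rights, contact me and I will remove the corresponding link immediately. * @author Alan -liu * @Email no008@foxmail.com

Please credit the source when reposting! ✧*꧁一品堂.技术学习笔记꧂*✧. ---> https://www.cnblogs.com/ios9/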

