资源池‘ default’ 没有足够的系统内存来运行此查询

There is insufficient system memory in resource pool 'default' to run this query. on sql

I have a running service that gets 50-100 queries per minute. And these are not high cost queries. This service has been running for around 3-4 months without any errors.

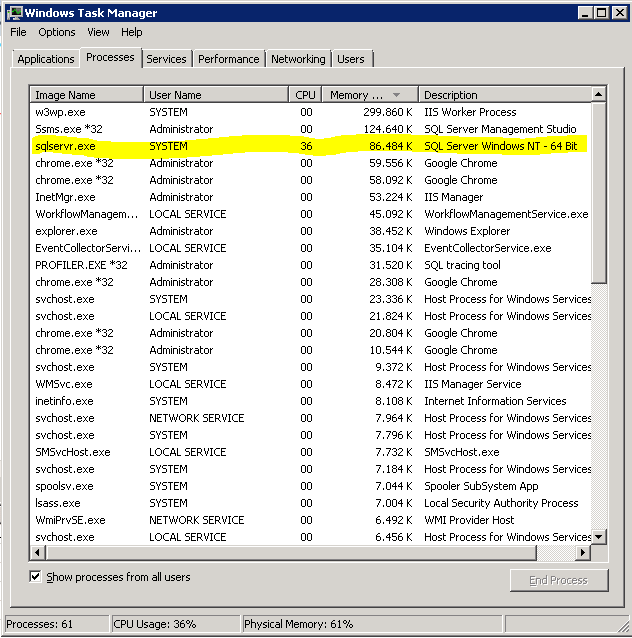

Suddenly few days ago it started giving "There is insufficient system memory in resource pool 'default' to run this query." error occasionally. When I investigate the problem I saw that sqlservr.exe was using ~1.5 gb ram and %25 of CPU(all of 1/4CPU). And when I restarted the sqlservr.exe the ram starts from ~50mb and slowly increase till it becomes ~1.5gb then leads to crashes in the apps using it.

After I have made little bit of research I figured that it is caused by the edition of sql server I use. It was express edition limiting the numbers to those. So I have upgraded my sql server from '2008r2 express' to '2012 enterprise'. When I started the service I thought my problems are finally over, since the service uses only ~60mb of memory, but in an hour unfortunately same problem started occurring, but this time the used memory I see on windows task manager is still ~60mb, not excessing any limits.

I use EntityFramework as ORM in a wcf service. And along with it I have SqlQueryNotification(broker and stuff) system for some caching operations.

Am I missing some crucial configuration points? Or 6gbs of memory and my 4 CPU is really not enough for this? But it can't be that because same load was like that for 3 months and there wasn't any error back then, and there is not any change of codes either.

4 Answers

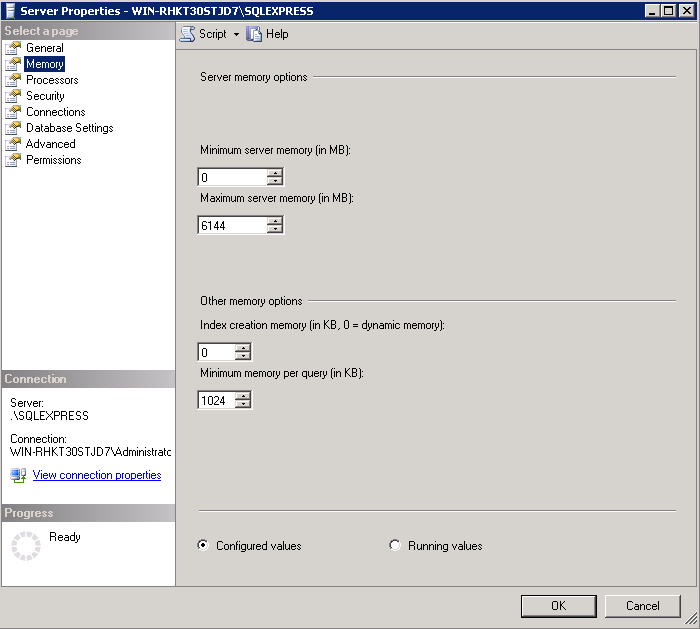

SQL Server will start with as much memory as needed, then slowly ramp up until it uses all allocated to it in the Server Properties:

It will not release any of this memory until the service is restarted; this is by design.

It is generally recommended to leave 2ish GB for the OS, and you need to be mindful of any other processing running on the same server as SQL. It is usually recommended to have SQL Server on it's own server without anything else running there.

That said, 1.5 GB of RAM for SQL Server isn't that much. If you don't have more available, it may be time to add some or upgrade the server.

See also: Technet, Brent Ozar

-

2No no it's not the case. On this machine I have 12gb ram right now, and I have a lot of memory available. I can increase the memory easily anytime. And the funny thing is now sqlervr.exe doesn't seem to be using more than 70MB ever. Aug 12, 2014 at 14:32

-

1But it still crashes with that memory exception in around 1 hour. Aug 12, 2014 at 14:33

-

@TolgaEvcimen are there other programs running on this server? How much physical memory does resmon.exe say you are using? Aug 12, 2014 at 14:34

-

@TolgaEvcimen how much memory do the sys.dm_os_sys_info and sys._dm_os_performance_counters indicate sql is using and how much is available? Aug 12, 2014 at 14:38

-

2

I am posting this answer because someone may find it useful.

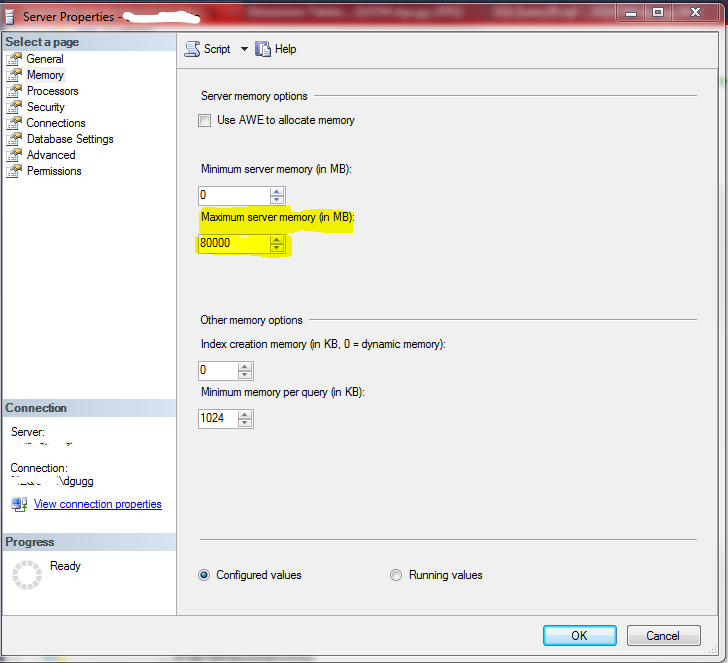

You can set max server memory even with this query:

sp_configure 'show advanced options', 1;

GO

RECONFIGURE;

GO

sp_configure 'max server memory', 32768; -- for 32 GB

GO

RECONFIGURE;

GO

Also you can use this query to release memory without restarting the service simply by setting to 2 GB (2048) and changing again back to 32 GB (32768) or to the value you want.

Please, do not set it below 1 GB because it will cause errors executing queries and therefore you will need to restart the service to be able to execute queries, even this query to increase memory again.

-

Be careful with this command, you still might need to restart the service despite what the author claims, it just happend to me on a productive system. Feb 18, 2021 at 10:59

-

Any ideas how to test beforehand whether a given instance will not faint after decreasing

max server memory? Jul 4, 2022 at 7:38

In our case it was because of Memory Optimized table types, with huge amount of data. There was multiple calls to different stored procedures at the same time and each using the same table type and loading huge amount of records in it (>100,000). For our application, there was way to reduce the number of records inserted in to memory optimized table type i.e. instead if storing all selected items in a memory optimized table type we conditionally stored only the non-selected records.

CREATE TYPE [TestType] AS TABLE (

[TestOrder] VARCHAR (1500) NOT NULL,

[TestDepartment] UNIQUEIDENTIFIER NULL,

[TestCourse] UNIQUEIDENTIFIER NULL,

[TestStudent] UNIQUEIDENTIFIER NULL,

INDEX IX_Test NONCLUSTERED (TestOrder))

WITH (MEMORY_OPTIMIZED = ON);

-

I inserted lots of data (around 1 million) to the table type from SP, and then caused this problem. Could you tell me how you skipped inserting data?– Jason LiSep 17, 2020 at 15:32

I'm getting this error:

There is insufficient system memory in resource pool 'default' to run this query.

I'm just running 100,000 simple insert statements as shown below. I got the error approx on the 85,000th insert.

This is a demo for a class I'm taking...

use sampleautogrow

INSERT INTO SampleData VALUES ('fazgypvlhl2svnh1t5di','8l8hzn95y5v20nlmoyzpq17v68chfjh9tbj496t4',1)

INSERT INTO SampleData VALUES ('31t7phmjs7rcwi7d3ctg','852wm0l8zvd7k5vuemo16e67ydk9cq6rzp0f0sbs',2)

INSERT INTO SampleData VALUES ('w3dtv4wsm3ho9l3073o1','udn28w25dogxb9ttwyqeieuz6almxg53a1ki72dq',1)

INSERT INTO SampleData VALUES ('23u5uod07zilskyuhd7d','dopw0c76z7h1mu4p1hrfe8d7ei1z2rpwsffvk3pi',3)

Thanks In Advance, Jim M

Update: Just noticed something very interesting. I created another database, forgot to create the SampleData table. I ran the query to add the 100,000 rows, and it got the out of memory error before it even complained that the table didn't exist. Thus, I'm guessing it is running out of memory just trying to "read in" my 100,000 lines?

2 Answers

You have 100.000 insert statements in one single batch request? Your server needs more RAM just to parse the request. Buy more RAM, upgrade to x64 or reduce the size of single batches sent to the server. Ie. sprinkle a GO every now and there in the .sql file.

-

If 1000 inserts cause no problem, why do 100,000 cause a problem? Does it have something to do with transactions? Why would adding "GO" statements help?– user242764Jan 3, 2010 at 18:36

-

10when you send 100k INSERT statements in one batch the server will initially see about 1 mil. characters of 'stuff'. It doesn't know what's inside, it could be all dots and spaces. It has to be parsed first, and T-SQL is parsed in batches, not in lines (unlike, say Basic is). That parsing consumes memory and this is the error you see. When you've tried with an unexisting table, the server did not even reach the point when it would check if the table exists, since that occurs during compile time not during parse time. It has to parse the entire batch first, and that cannot do. Jan 3, 2010 at 20:04

-

In my case it is a 1 batch with thousand of insert in a transaction so cannot use GO statement and adding more RAM is not a solution. Oct 5, 2015 at 16:45

-

One GO every 10000 inserts fixed the problem for me (my SQL Server process has 1GB of RAM assigned) Jun 18, 2018 at 15:59

You can try SQLServer Connection Tools application. It has a feature called Massive Sql Runner which executes every command one by one. With this feature very few memory will be used to execute script commands and you will no longer have the problem.

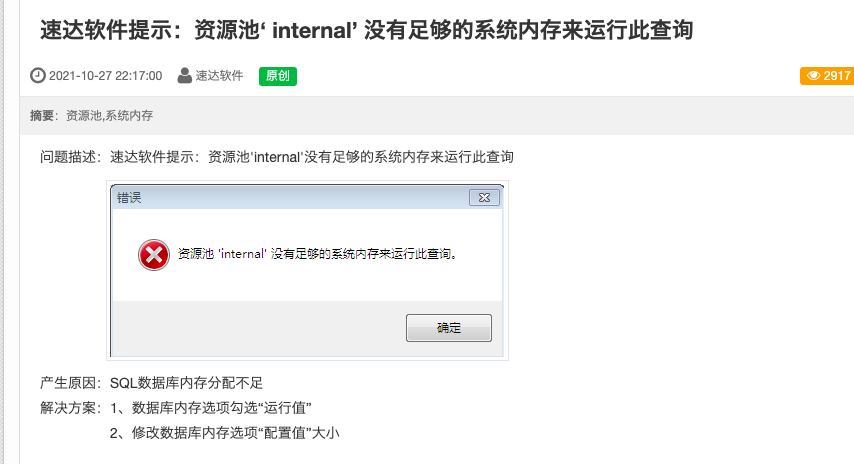

We are running a local SQL Server 2017 to support a datawarehouse database. The database is loaded on a schedule through SSIS largely through the use of staging tables and the MERGE function. Recently, we have begun seeing the error "There is insufficient system memory in resource pool 'internal' to run this query.". It has become more and more rampant over the last couple weeks.

We have tried:

- Turning off query store

- Updating SQL Server thru CU27

- Tracing the query (no good results)

- Running as many reports as we can find to pinpoint the issue

Recent changes that correlate to the timing of the error:

- Turning on Query Store

- Addition of a large number of indexes

Other information:

- We have 32gb of memory on the server and allocate 26gb to sql server

- The TARGET table of the MERGE is a CLUSTERED COLUMNSTORE INDEX

- The SOURCE table of the MERGE is a HEAP

- Over time/multiple failures, the SOURCE table that has staged the changed records has grown to over 200,000 records. The TARGET table is ~10 million rows.

Any help would be appreciated. I have scoured the internet for the last couple days looking for any guidance. All I've seen so far is:

- Update SQL version

- Modify your query

- Add memory to the server

MERGE STATEMENT:

DROP TABLE IF EXISTS #Changes;

DROP TABLE IF EXISTS #TransformedChanges;

CREATE TABLE #Changes

(

[Change Type] VARCHAR(100)

);

MERGE [dbo].[FactOrderLine] AS TARGET

USING ( SELECT

[FactOrderLine].[OrderLine_Key],

[FactOrderLine].[BookedDate_Date_Key],

[FactOrderLine].[BookedDate_Time_Key],

[FactOrderLine].[Account_Key],

[FactOrderLine].[CCN_Key],

[FactOrderLine].[BillTo_SalesOffice_Key],

[FactOrderLine].[BillTo_Territory_Key],

[FactOrderLine].[ShipTo_SalesOffice_Key],

[FactOrderLine].[ShipTo_Territory_Key],

[FactOrderLine].[AssemblyLocation_Key],

[FactOrderLine].[ProductDivision_Key],

[FactOrderLine].[Product_Key],

[FactOrderLine].[Booked Date],

[FactOrderLine].[Ordered Quantity],

[FactOrderLine].[Unit Price - CCN],

[FactOrderLine].[Unit Price - Transaction],

[FactOrderLine].[Discount Factor],

[FactOrderLine].[Split Factor],

[FactOrderLine].[Is Split?],

[DW_Checksum] = CHECKSUM([FactOrderLine].[BookedDate_Date_Key],

[FactOrderLine].[BookedDate_Time_Key],

[FactOrderLine].[Account_Key],

[FactOrderLine].[CCN_Key],

[FactOrderLine].[BillTo_SalesOffice_Key],

[FactOrderLine].[BillTo_Territory_Key],

[FactOrderLine].[ShipTo_SalesOffice_Key],

[FactOrderLine].[ShipTo_Territory_Key],

[FactOrderLine].[AssemblyLocation_Key],

[FactOrderLine].[ProductDivision_Key],

[FactOrderLine].[Product_Key],

[FactOrderLine].[Booked Date],

[FactOrderLine].[Ordered Quantity],

[FactOrderLine].[Unit Price - CCN],

[FactOrderLine].[Unit Price - Transaction],

[FactOrderLine].[Discount Factor],

[FactOrderLine].[Split Factor],

[FactOrderLine].[Is Split?],

0)

FROM [changeLog].[FactOrderLine] ) AS SOURCE

ON [Source].[OrderLine_Key] = [Target].[OrderLine_Key]

WHEN MATCHED AND ISNULL([Source].[DW_Checksum], 0) <> ISNULL([Target].[DW_Checksum], 0) THEN UPDATE SET

[Target].[BookedDate_Date_Key] = [Source].[BookedDate_Date_Key],

[Target].[BookedDate_Time_Key] = [Source].[BookedDate_Time_Key],

[Target].[Account_Key] = [Source].[Account_Key],

[Target].[CCN_Key] = [Source].[CCN_Key],

[Target].[BillTo_SalesOffice_Key] = [Source].[BillTo_SalesOffice_Key],

[Target].[BillTo_Territory_Key] = [Source].[BillTo_Territory_Key],

[Target].[ShipTo_SalesOffice_Key] = [Source].[ShipTo_SalesOffice_Key],

[Target].[ShipTo_Territory_Key] = [Source].[ShipTo_Territory_Key],

[Target].[AssemblyLocation_Key] = [Source].[AssemblyLocation_Key],

[Target].[ProductDivision_Key] = [Source].[ProductDivision_Key],

[Target].[Product_Key] = [Source].[Product_Key],

[Target].[Booked Date] = [Source].[Booked Date],

[Target].[Ordered Quantity] = [Source].[Ordered Quantity],

[Target].[Unit Price - CCN] = [Source].[Unit Price - CCN],

[Target].[Unit Price - Transaction] = [Source].[Unit Price - Transaction],

[Target].[Discount Factor] = [Source].[Discount Factor],

[Target].[Split Factor] = [Source].[Split Factor],

[Target].[Is Split?] = [Source].[Is Split?],

[Target].[DW_Checksum] = [Source].[DW_Checksum],

[Target].[DW_ModifiedOn] = GETUTCDATE(),

[Target].[DW_IsDeleted?] = 0

WHEN NOT MATCHED BY TARGET THEN INSERT

(

[OrderLine_Key],

[BookedDate_Date_Key],

[BookedDate_Time_Key],

[Account_Key],

[CCN_Key],

[BillTo_SalesOffice_Key],

[BillTo_Territory_Key],

[ShipTo_SalesOffice_Key],

[ShipTo_Territory_Key],

[AssemblyLocation_Key],

[ProductDivision_Key],

[Product_Key],

[Booked Date],

[Ordered Quantity],

[Unit Price - CCN],

[Unit Price - Transaction],

[Discount Factor],

[Split Factor],

[Is Split?],

[DW_IsDeleted?], [DW_Checksum], [Source_ModifiedOn], [DW_ModifiedOn], [DW_CreatedOn] ) VALUES (

[Source].[OrderLine_Key],

[Source].[BookedDate_Date_Key],

[Source].[BookedDate_Time_Key],

[Source].[Account_Key],

[Source].[CCN_Key],

[Source].[BillTo_SalesOffice_Key],

[Source].[BillTo_Territory_Key],

[Source].[ShipTo_SalesOffice_Key],

[Source].[ShipTo_Territory_Key],

[Source].[AssemblyLocation_Key],

[Source].[ProductDivision_Key],

[Source].[Product_Key],

[Source].[Booked Date],

[Source].[Ordered Quantity],

[Source].[Unit Price - CCN],

[Source].[Unit Price - Transaction],

[Source].[Discount Factor],

[Source].[Split Factor],

[Source].[Is Split?],

0,

[Source].[DW_Checksum],NULL,GETUTCDATE(),GETUTCDATE()

)

OUTPUT $action INTO #Changes;

CREATE TABLE #TransformedChanges

(

[Update Record Count] INT,

[Insert Record Count] INT

);

INSERT INTO #TransformedChanges

SELECT *

FROM

(

SELECT

TRIM(#Changes.[Change Type])+' Record Count' AS [Change Type],

COUNT(*) AS [Record Count]

FROM #Changes

GROUP BY TRIM(#Changes.[Change Type])+' Record Count'

) A

PIVOT

(

SUM([Record Count])

FOR A.[Change Type] IN ([Update Record Count], [Insert Record Count])

) [B];

DECLARE @TransformationChecker INT;

SET @TransformationChecker = (SELECT COUNT(*) FROM #TransformedChanges);

IF @TransformationChecker = 0 INSERT INTO #TransformedChanges VALUES(0,0);

SELECT ISNULL([Update Record Count], 0) [Update Record Count], ISNULL([Insert Record Count], 0) [Insert Record Count] FROM #TransformedChanges;

DROP TABLE IF EXISTS #Changes;

DROP TABLE IF EXISTS #TransformedChanges;

-

2It (probably) doesn't solve the problem, but I do suggest reading So, you want to use MERGE, eh?: TL;DR: "Please don't, but if you insist: Please ALWAYS use

HOLDLOCKon the target."– LarnuDec 15, 2021 at 18:33 -

No memory optimized anything or resource governor or SQLCLR. I will look into the "Lock pages in memory" right on the system for the service account. @Charlieface– AntyanDec 16, 2021 at 14:20

2 Answers

Thanks to the help of multiple commenters I found that my issue was resolved by removing the use of the MERGE statements and instead running independent INSERT/UPDATE/DELETE statements. This was recommended by multiple blogs, a DBA I work with and the commenters here but because of no available documentation that would suggest MERGE and memory issues may be linked (and my own stubborn-ness) I did not attempt switching them until trying multiple other routes.

I am still curious what exactly is going on under the hood that is causing the memory issues when on the surface you would expect the same number of updates, inserts and deletes to require the same (or a similar) amount of resources. Obviously, the MERGE statement does not operate this way and sometimes you have to be practical with solutions.

-

There are a significant number of problems already documented with MERGE listed here.– ZaneDec 20, 2021 at 16:33

"There is insufficient system memory in resource pool 'internal' to run this query." Check the spid associated with the error message in the error log. Because the target of the merge is a clustered columnstore index, I strongly suspect the error is not directly associated with the the MERGE, rather a consequence of the MERGE. A message from the MERGE itself shouldn't be in the internal resource pool.

The background tuple mover does run in the internal resource pool. Unless any remaining delta stores are compressed with a reorg with (COMPRESS_ALL_ROW_GROUPS = ON), at some point the tuple mover will close and compress delta stores. It needs a memory grant to do so. If the tuple mover times out for its memory grant or the minimal grant for tuple mover isn't available, that error is the outcome. I believe the tuple mover always uses a 1 GB memory grant.

-

1Thank you for your reply. I looked into the error log and found the associated SPID. I am not sure what to do with it now that I have it, I am still learning a lot of this stuff. I read up on the tuple mover. I would be surprised if the tuple mover which uses 1GB whenever delta stores hit a certain size threshold would be the culprit here unless multiple delta stores hit the threshold simultaneously and the tuple mover required a new memory grant for each? But my understanding is it would only need the 1 gb grant once. I am not sure what to do next from your answer.– AntyanDec 16, 2021 at 14:27

-

Does the spid have an 's' at the end - like 42s - or is it under 50? if so, it is a system thread. You're right about the 1 gb grant making it seem unlikely. Even if multiple delta stores quallify for tuple mover at the same time, only 1 system thread at a time will run tuple mover. Dec 17, 2021 at 13:16

-

So other than tuple mover, another good candidate would be a SQL Server 'Task Manager' session running an async auto stats update, if auto stats update and auto stats update async are both enabled in the database. Dec 17, 2021 at 13:18

-

Thank you for your time looking into this. A comment on the original question has solved the issue for me. Unfortunately I still do not know what the root cause was but by switching from a MERGE to separate INSERT/UPDATE/DELETE statements the memory issue has gone away. I appreciate that you are attempting to determine the root cause though as it may be more helpful if someone runs into this problem in the future. The spid was 61, no s and over 50.– AntyanDec 20, 2021 at 15:17

-

glad you were able to resolve it!! i never did ask - were the errors returned to the client that requested the query, or discovered in the error log without being retrned to the client? Dec 20, 2021 at 22:19

南来地,北往的,上班的,下岗的,走过路过不要错过!

======================个性签名=====================

之前认为Apple 的iOS 设计的要比 Android 稳定,我错了吗?

下载的许多客户端程序/游戏程序,经常会Crash,是程序写的不好(内存泄漏?刚启动也会吗?)还是iOS本身的不稳定!!!

如果在Android手机中可以简单联接到ddms,就可以查看系统log,很容易看到程序为什么出错,在iPhone中如何得知呢?试试Organizer吧,分析一下Device logs,也许有用.

@ioriwellings

@ioriwellings

Usingstatements when I am going to connect to db everytime. So I am sure I am not letting any connection to stay open. And my system is 64 bit. And 6144mb is the Maximum server memory, which I set from server properties.