Troubleshooting a Native Memory Leak in an IIS 7.x Application Pool

by Apurva Joshi

Tools Used in this Troubleshooter:

- Debug Diagnostics 1.2

- Performance Monitor

- Debugging Tools for Windows

This material is provided for informational purposes only. Microsoft makes no warranties, express or implied.

Overview

This troubleshooter will help you identify the cause of a native memory leak in an IIS application pool. Keep in mind that it is normal for memory allocation to be high while a web application serves requests. However, if you consistently see that Process\Private Bytes and Process\Virtual Bytes are both increasing, or that Process\Private Bytes and Process\Working Set are both increasing while Memory\Available Bytes is decreasing, a memory leak is likely occurring, and it may eventually cause an out-of-memory exception.
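The rule of thumb above can be expressed as a small check over logged counter samples. The following C++ is a minimal sketch, not part of any Microsoft tool: it treats a positive least-squares slope as "consistently increasing". The names `Sample`, `slope`, `looksLikeNativeLeak` and the `minSlope` noise threshold are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// One Perfmon sample for a single w3wp.exe instance, values in bytes.
struct Sample {
    double privateBytes;    // Process(w3wp)\Private Bytes
    double virtualBytes;    // Process(w3wp)\Virtual Bytes
    double workingSet;      // Process(w3wp)\Working Set
    double availableBytes;  // Memory\Available Bytes (system-wide)
};

// Least-squares slope of y against the sample index, in bytes per sample.
double slope(const std::vector<double>& y) {
    const std::size_t n = y.size();
    assert(n >= 2);
    const double meanX = (n - 1) / 2.0;
    double meanY = 0.0;
    for (double v : y) meanY += v;
    meanY /= static_cast<double>(n);
    double num = 0.0, den = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        const double dx = static_cast<double>(i) - meanX;
        num += dx * (y[i] - meanY);
        den += dx * dx;
    }
    return num / den;
}

// Private Bytes and Virtual Bytes both rising, or Private Bytes and
// Working Set both rising while Available Bytes falls.
// minSlope (bytes per sample) is a hypothetical noise threshold.
bool looksLikeNativeLeak(const std::vector<Sample>& samples, double minSlope) {
    std::vector<double> priv, virt, ws, avail;
    for (const Sample& s : samples) {
        priv.push_back(s.privateBytes);
        virt.push_back(s.virtualBytes);
        ws.push_back(s.workingSet);
        avail.push_back(s.availableBytes);
    }
    const bool privUp = slope(priv) > minSlope;
    const bool virtUp = slope(virt) > minSlope;
    const bool wsUp = slope(ws) > minSlope;
    const bool availDown = slope(avail) < -minSlope;
    return privUp && (virtUp || (wsUp && availDown));
}
```

You would feed it one series per w3wp.exe instance, for example values exported from the Perfmon data collector set described in this troubleshooter. A slope is used instead of comparing only the first and last samples so that a single spike does not decide the result.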

When a memory leak occurs in an IIS application pool, adding physical memory (RAM) does not help, because the memory that runs out in this scenario is not physical memory but the process's virtual address space. The following table summarizes the virtual memory that the web application's worker process can address.

| Process | Windows | Addressable memory (with a large address-aware process) | Practical limit for virtual bytes | Practical limit for private bytes |
|---|---|---|---|---|
| 32-bit | 32-bit | 2 GB | 1400 MB | 800 MB |
| 32-bit | 32-bit with /3GB | 3 GB | 2400 MB | 1800 MB |
| 32-bit | 64-bit | 4 GB | 3400 MB | 2800 MB |
| 64-bit | 64-bit | 8 TB | Not applicable | Not applicable |

Scenario

You consistently see that Process\Private Bytes and Process\Virtual Bytes of w3wp.exe are both increasing, or that Process\Private Bytes and Process\Working Set of w3wp.exe are both increasing while Memory\Available Bytes is decreasing.

This may eventually cause an out-of-memory exception in an IIS application pool.

To recover, the application pool must be restarted, but after it restarts, memory usage climbs to a high level again. By the time you see the out-of-memory error, the application pool has already been restarted automatically.

Data Collection

The first thing to do when you encounter high memory usage is to determine whether the worker process of the IIS application pool is actually leaking memory. You can use Performance Monitor for this. For more information on using Performance Monitor, see Analyzing Performance Data later in this troubleshooter.

Tip

If you need to identify which application pool is associated with a particular w3wp.exe process, open an Administrative Command Prompt, switch into the %windir%\System32\inetsrv folder (cd %windir%\System32\inetsrv) and run appcmd list wp. This will show the process identifier (PID) of the w3wp.exe process in quotes. You can match that PID with the PID available in Task Manager.
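If you check this often, the PID-to-pool mapping can be extracted programmatically. The C++ sketch below assumes the usual output line format of `appcmd list wp`, that is `WP "<pid>" (applicationPool:<name>)`; the function name `parseAppcmdListWp` is hypothetical.

```cpp
#include <map>
#include <regex>
#include <sstream>
#include <string>

// Builds a PID -> application pool map from the text printed by `appcmd list wp`.
// Assumed line format:  WP "4242" (applicationPool:DefaultAppPool)
std::map<unsigned long, std::string> parseAppcmdListWp(const std::string& output) {
    static const std::regex line(R"re(^WP "(\d+)" \(applicationPool:([^)]+)\))re");
    std::map<unsigned long, std::string> pidToPool;
    std::istringstream in(output);
    std::string text;
    while (std::getline(in, text)) {  // a trailing '\r' from CRLF output is harmless here
        std::smatch m;
        if (std::regex_search(text, m, line)) {
            pidToPool[std::stoul(m[1].str())] = m[2].str();
        }
    }
    return pidToPool;
}
```

Lines that do not match the pattern are skipped, so extra output from appcmd does not break the parse.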

Once you have confirmed that a w3wp.exe process is experiencing high memory usage, you will need two pieces of information to determine what is causing the problem.

- A Performance Monitor data collector set.

- A user-mode memory dump of the w3wp.exe process.

Both of these should be collected over the whole period from low memory usage (for example, right after the process starts) until high memory usage (for example, when an out-of-memory exception occurs).

Collecting a Performance Monitor Data Collector Set

Performance Monitor (Perfmon) data can be viewed in real-time or it can be collected in a data collector set that can be reviewed later. For troubleshooting a high memory issue, we need to collect a data collector set. To create a data collector set for troubleshooting high memory, follow these steps.

- Open Administrative Tools from the Windows Control Panel.

- Double-click on Performance Monitor.

- Expand the Data Collector Sets node.

- Right-click on User Defined and select New, Data Collector Set.

- Enter High Memory as the name of the data collector set.

- Select the Create Manually (Advanced) radio button.

- Click Next.

- Select the Create Data Logs radio button.

- Check the Performance Counter checkbox.

- Click Next.

- Click the Add button.

- Expand Process from the list of counters.

- Select Private Bytes, Virtual Bytes and Working Set under the Process object.

- Select <ALL instances> from the list of instances.

- Click Add.

- Expand Memory from the list of counters.

- Select Available Bytes under the Memory object.

- Click Add.

- Click OK.

- Set Sample interval to 1 second, and then click Next and Finish.

Your dialog should now look like the one shown in Figure 1.

Figure 1 - Creating a data collector set.
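A similar data collector set can also be created from the command line with logman. The C++ sketch below only builds the counter list and the command text; it assumes logman's `-cf` option (a file listing one counter path per line) and `-si` option (sample interval), and the helper names are hypothetical.

```cpp
#include <string>
#include <vector>

// Counter paths for the counters chosen in the steps above.
// "(*)" is the path form of <All instances>.
const std::vector<std::string> kHighMemoryCounters = {
    "\\Process(*)\\Private Bytes",
    "\\Process(*)\\Virtual Bytes",
    "\\Process(*)\\Working Set",
    "\\Memory\\Available Bytes",
};

// Joins the paths one per line, the layout assumed for logman's -cf file.
std::string counterFileContents() {
    std::string text;
    for (const std::string& path : kHighMemoryCounters) text += path + "\r\n";
    return text;
}

// Builds: logman create counter "<name>" -cf "<file>" -si <seconds>
std::string logmanCreateCommand(const std::string& name, const std::string& counterFile, int sampleSeconds) {
    return "logman create counter \"" + name + "\" -cf \"" + counterFile +
           "\" -si " + std::to_string(sampleSeconds);
}
```

Save the output of `counterFileContents()` as counters.txt, run the generated command from an administrative command prompt, and start collection with `logman start "High Memory"`.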

Creating a Debug Diagnostics 1.2 Rule

The easiest way to collect user-mode process dumps when a high memory condition occurs is to use Debug Diagnostics 1.2 (DebugDiag). You can download DebugDiag from the following URL.

https://www.microsoft.com/download/en/details.aspx?id=26798

Install DebugDiag 1.2 on your server and run it. (You'll find it on the Start menu after installation.)

The most important information for finding the function that causes the memory leak is the stack trace of each heap allocation. By default, these stack traces are not collected. You can enable their collection per process image with gflags, which is included in Debugging Tools for Windows. Use the following command to enable stack tracing:

gflags -i w3wp.exe +ust

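The command does not enable stack tracing for a process that is already running; the setting takes effect the next time the process starts. For w3wp.exe, recycling the application pool starts a new worker process that picks up the setting. For processes that you cannot restart (for example, services, lsass, winlogon), you must restart your test computer.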

Use the following command to verify which settings have been set for a specific process:

gflags -i w3wp.exe
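To see why per-allocation call stacks matter, consider a toy allocator wrapper that remembers where every live block came from. This C++ sketch is only an illustration of the idea, not how the Windows heap implements +ust: the real feature records a full stack backtrace, whereas the sketch records just the caller's file and line. `AllocationTracker` and `TRACKED_MALLOC` are hypothetical names.

```cpp
#include <cstddef>
#include <cstdlib>
#include <map>
#include <string>

// Remembers the allocation site of every block that has not been freed yet.
class AllocationTracker {
public:
    void* allocate(std::size_t size, const char* file, int line) {
        void* p = std::malloc(size);
        if (p != nullptr) {
            live_[p] = Site{size, std::string(file) + ":" + std::to_string(line)};
        }
        return p;
    }

    void release(void* p) {
        live_.erase(p);
        std::free(p);
    }

    // Outstanding bytes grouped by allocation site: the core of a leak report.
    std::map<std::string, std::size_t> outstandingBySite() const {
        std::map<std::string, std::size_t> bySite;
        for (const auto& entry : live_) bySite[entry.second.where] += entry.second.size;
        return bySite;
    }

private:
    struct Site {
        std::size_t size;
        std::string where;
    };
    std::map<void*, Site> live_;
};

// Hypothetical convenience macro that captures the call site automatically.
#define TRACKED_MALLOC(tracker, size) (tracker).allocate((size), __FILE__, __LINE__)
```

Without the recorded site, an outstanding block is just an address and a size; with it, leaked bytes can be attributed to the code that allocated them, which is what the stack traces enabled by +ust make possible in the dumps.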

When you run DebugDiag, it will display the Select Rule Type dialog. Follow these steps to create a leak rule for your application pool.

-

Select Native (non-.NET) Memory and Handle Leak and click Next.

-

Select a process and click Next.

-

Click Configure.

-

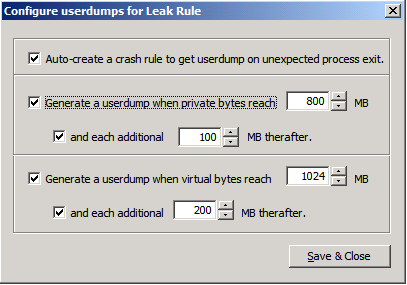

Set the following rule as shown in Figure 2. (You can adjust these values if needed, but be careful not to specify a small number of MB in order to generate the tons of dump files.)Generate a userdump when private bytes reach 800 MB and each additional 100 MB therafter.Generate a userdump when virtual bytes reach 1024 MB and each additional 200 MB therafter.

Figure 2 – Configuring userdumps for Leak Rule.

-

Click Save & Close.

-

Click Next.

-

Enter a name for your rule if you wish and make note of the location where the dumps will be saved. You can change this location if desired.

-

Click Next.

-

Select Activate the Rule Now and click Finish.
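The warning about small increments is easy to quantify. The C++ sketch below lists the sizes at which a "first threshold plus every N MB" rule would fire, stopping at a ceiling such as a practical limit from the table at the start of this troubleshooter. It assumes the process really grows to that ceiling and ignores any other limits DebugDiag may apply; `dumpTriggerPoints` is a hypothetical name.

```cpp
#include <cassert>
#include <vector>

// Sizes (MB) at which a rule of the form "dump when the counter reaches
// firstMB and each additional stepMB thereafter" fires, up to ceilingMB.
std::vector<int> dumpTriggerPoints(int firstMB, int stepMB, int ceilingMB) {
    assert(stepMB > 0);
    std::vector<int> points;
    for (int mb = firstMB; mb <= ceilingMB; mb += stepMB) points.push_back(mb);
    return points;
}
```

For a 32-bit w3wp.exe on 64-bit Windows (practical limits 2800 MB private, 3400 MB virtual), the Figure 2 settings give `dumpTriggerPoints(800, 100, 2800)` (21 points) plus `dumpTriggerPoints(1024, 200, 3400)` (12 points, the last at 3224 MB), so up to 33 full dumps. With a 10 MB step the private-bytes rule alone would fire 201 times.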

If the out-of-memory error occurs and the rule has not captured the memory dumps you need, you can create memory dumps manually:

- Select the Processes tab.

- Right-click the target process.

- Click Create Full Userdump.

Data Analysis

After you get the out-of-memory error or create the memory dumps, you will have two sets of data to review: the Perfmon data collector set and the memory dumps. Let's begin by reviewing the Perfmon data.

Analyzing Performance Data

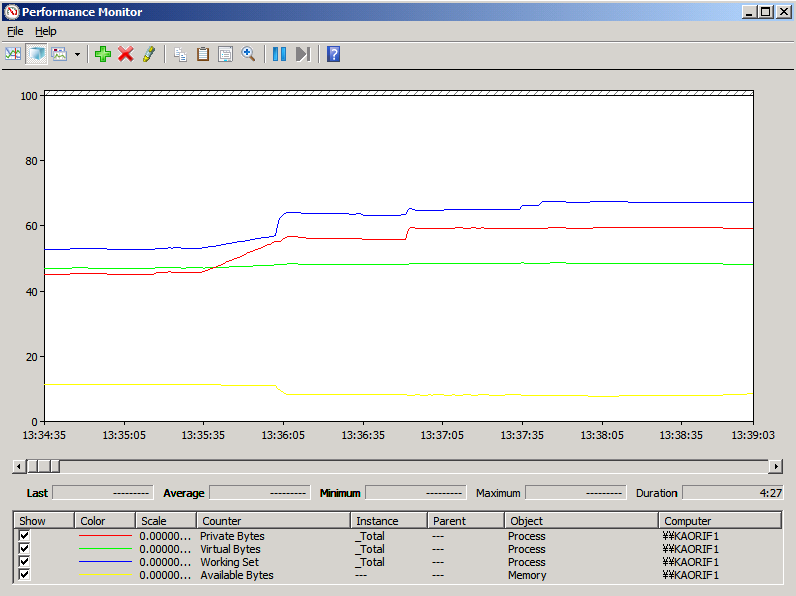

To review the Perfmon data for your issue, right-click the High Memory data collector set listed under the User Defined node and select Latest Report. You'll see something similar to the screen shown in Figure 4.

Figure 4 - Perfmon displaying the High Memory data.

To get to the root of what is causing the memory leak, let's review the dumps that were created using DebugDiag.

Dump Analysis with DebugDiag

DebugDiag can recognize many problems by performing an automated dump analysis. For this particular issue, DebugDiag's Memory Pressure Analyzers are well suited to identifying the root cause of the memory leak. To use the analyzers, follow these steps.

- Select the Advanced Analysis tab in DebugDiag.

- Select the Memory Pressure Analyzers. Make sure that you use MemoryAnalysis.asp instead of DotNetMemoryAnalysis-BETA.asp.

- Click Add Data Files.

- Browse to the location where the dumps were created. By default, this will be a subfolder of the C:\Program Files\DebugDiag\Logs folder.

- Select one of the dumps and then press Ctrl+A to select all of the dumps in that folder.

- Click Open.

- Click Start Analysis.

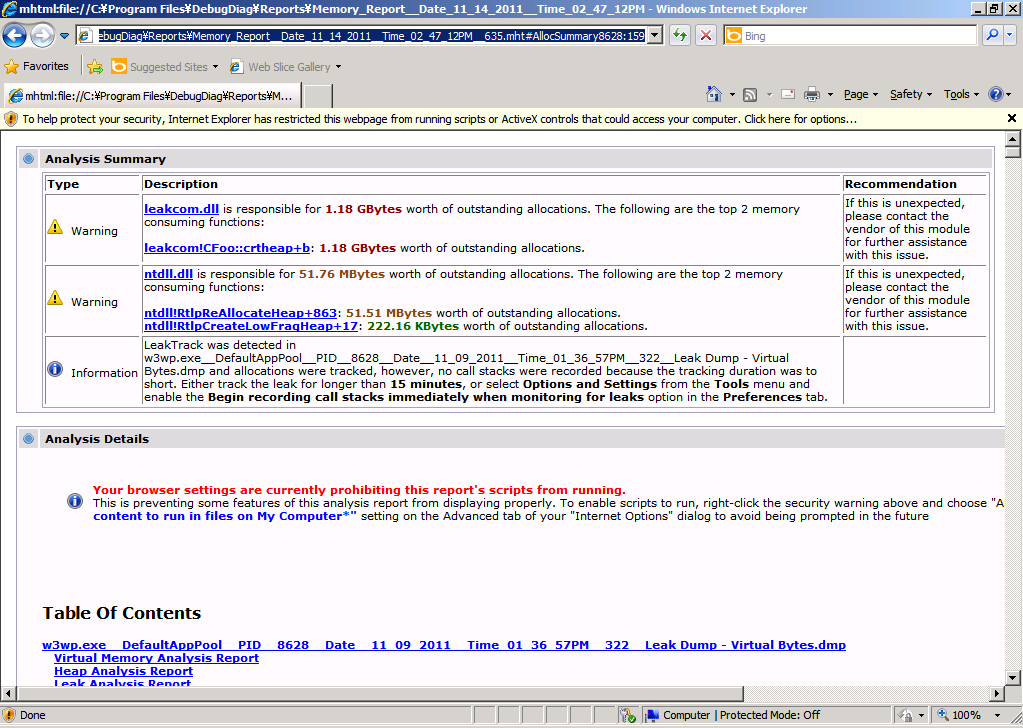

DebugDiag will take a few minutes to parse through the dumps and provide an analysis. When it has completed the analysis, you will see a page similar to that shown in Figure 5.

Figure 5 – A DebugDiag analysis report.

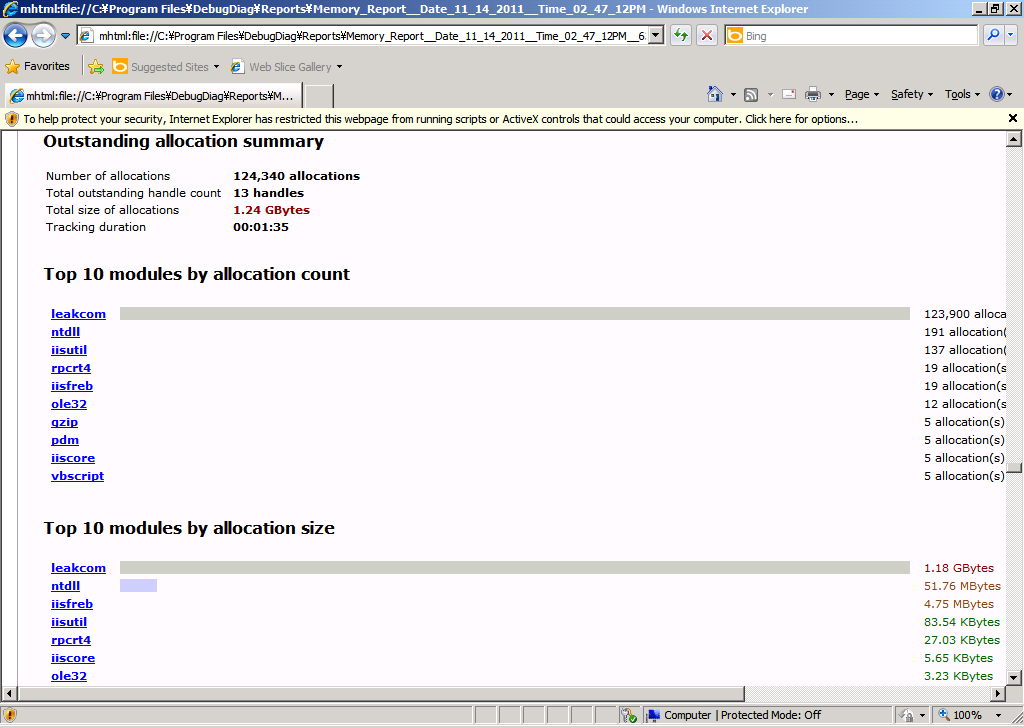

Notice that the top of the report tells you that a memory leak was detected. In the Table of Contents, you'll see a link to the Leak Analysis Report details. Click that link to see the top 10 modules by allocation count and by allocation size. Figure 6 shows an example.

Figure 6 – Details on high memory module.
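The report ranks modules by allocation count and by allocation size, and the two orderings can disagree: many small allocations dominate the count, while a few large ones dominate the size. The C++ sketch below shows that aggregation over a hypothetical list of outstanding allocations that have already been attributed to modules. It is not how DebugDiag is implemented, and `Allocation`, `ModuleTotals` and `topModules` are illustrative names.

```cpp
#include <algorithm>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

struct Allocation {
    std::string module;  // module the allocation is attributed to
    std::size_t size;    // bytes still outstanding
};

struct ModuleTotals {
    std::string module;
    std::size_t count = 0;
    std::size_t bytes = 0;
};

// Aggregates outstanding allocations per module and returns the top `n`,
// ordered by allocation size (bySize) or by allocation count.
std::vector<ModuleTotals> topModules(const std::vector<Allocation>& allocs, std::size_t n, bool bySize) {
    std::map<std::string, ModuleTotals> totals;
    for (const Allocation& a : allocs) {
        ModuleTotals& t = totals[a.module];
        t.module = a.module;
        ++t.count;
        t.bytes += a.size;
    }
    std::vector<ModuleTotals> ranked;
    for (const auto& entry : totals) ranked.push_back(entry.second);
    std::sort(ranked.begin(), ranked.end(), [bySize](const ModuleTotals& x, const ModuleTotals& y) {
        return bySize ? x.bytes > y.bytes : x.count > y.count;
    });
    if (ranked.size() > n) ranked.resize(n);
    return ranked;
}
```

With three outstanding 10 KB blocks from one module and five 16-byte blocks from another, the first module leads by size while the second leads by count, which is why it is worth checking both lists.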

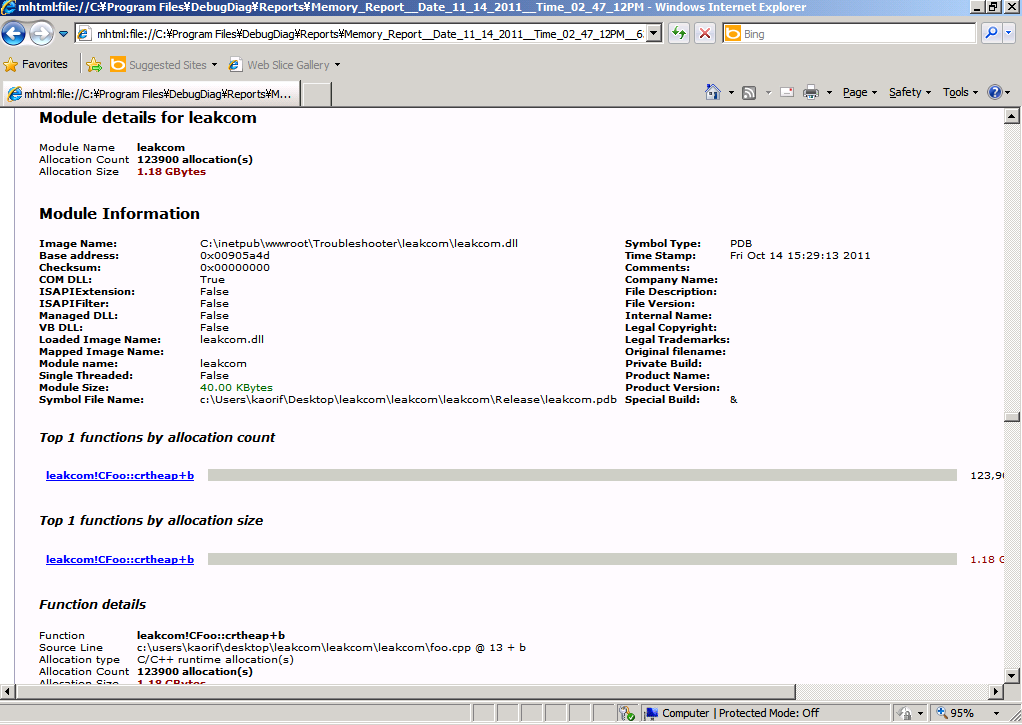

I can tell from this analysis that the leakcom COM component is the main suspect. Looking further down at the Module Information for leakcom (Figure 7), I can see that the CFoo::crtheap method allocates the outstanding memory.

Figure 7 – Details on the module.

The next step is to review the code of the CFoo::crtheap method. When I do that, I find the following.

    STDMETHODIMP CFoo::crtheap(void)
    {
        // Leak: the 10 KB block returned by malloc is never passed to free.
        malloc(1024 * 10);
        return S_OK;
    }

Code like this leaks memory on every call, because the memory allocated by malloc is never released with free.
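For comparison, here is a minimal sketch of a non-leaking version, written as portable C++ so it compiles anywhere. `HRESULT`, `S_OK` and `E_OUTOFMEMORY` are local stand-ins for the Windows SDK definitions, and `crtheap_fixed` is a hypothetical name; in the real component the body would go into CFoo::crtheap.

```cpp
#include <cstdlib>
#include <cstring>
#include <memory>

namespace sketch {

// Stand-ins so the sketch compiles without the Windows SDK.
using HRESULT = long;
constexpr HRESULT S_OK = 0;
constexpr HRESULT E_OUTOFMEMORY = static_cast<HRESULT>(0x8007000EL);

// Deleter that hands a malloc'd block back to free.
struct FreeDeleter {
    void operator()(void* p) const noexcept { std::free(p); }
};

// The buffer is owned by a unique_ptr, so it is released on every return path.
HRESULT crtheap_fixed() {
    std::unique_ptr<char, FreeDeleter> buffer(static_cast<char*>(std::malloc(1024 * 10)));
    if (!buffer) return E_OUTOFMEMORY;  // COM methods report failure instead of throwing
    std::memset(buffer.get(), 0, 1024 * 10);  // placeholder for real work with the buffer
    return S_OK;
}

}  // namespace sketch
```

Simply calling free on the pointer before returning works as well; the unique_ptr version keeps working if early returns are added to the method later.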

Tip

If you enable stack tracing (gflags -i w3wp.exe +ust), you can see the call stack of an allocation by analyzing the dumps with WinDbg, as in the following !heap -p -a output. Without stack tracing, which is disabled by default, this call stack is not available.

    0:000> !heap -p -a 42ae5b28
    address 42ae5b28 found in
    _HEAP @ 6690000
    HEAP_ENTRY Size Prev Flags UserPtr UserSize - state
    42ae5b28 0541 0000 [00] 42ae5b40 02800 - (busy)
    77e9df42 ntdll!RtlAllocateHeap+0x00000274
    75133db8 msvcr90!malloc+0x00000079
    513f3cc7 LeakTrack!CCRTMemoryLT::R90mallocDetour+0x00000067
    75933cef oleaut32!CTypeInfo2::Invoke+0x0000023f
    61f527b8 leakcom!ATL::IDispatchImpl::Invoke+0x00000058
    f05cb4d vbscript!IDispatchInvoke+0x00000059
    f053f40 vbscript!InvokeDispatch+0x000001a5
    f060795 vbscript!InvokeByName+0x00000043
    f06080d vbscript!CScriptRuntime::RunNoEH+0x000022cf
    f054122 vbscript!CScriptRuntime::Run+0x00000064
    f054099 vbscript!CScriptEntryPoint::Call+0x00000074
    f054279 vbscript!CSession::Execute+0x000000c8
    f0544c0 vbscript!COleScript::ExecutePendingScripts+0x00000146
    f052013 vbscript!COleScript::SetScriptState+0x0000014d
    513023c0 asp!CActiveScriptEngine::TryCall+0x00000019
    513027b3 asp!CActiveScriptEngine::Call+0x000000e7
    513022c7 asp!CallScriptFunctionOfEngine+0x0000003e
    513063d5 asp!ExecuteRequest+0x0000014a
    51302676 asp!Execute+0x000001c4
    513028f2 asp!CHitObj::ViperAsyncCallback+0x000003fc
    51302030 asp!CViperAsyncRequest::OnCall+0x0000006a
    563de19 comsvcs!CSTAActivityWork::STAActivityWorkHelper+0x00000032
    771304fb ole32!EnterForCallback+0x000000f4
    771284c7 ole32!SwitchForCallback+0x000001a8
    77126964 ole32!PerformCallback+0x000000a3
    7713df32 ole32!CObjectContext::InternalContextCallback+0x0000015b
    771f47ef ole32!CObjectContext::DoCallback+0x0000001c
    563dfbd comsvcs!CSTAActivityWork::DoWork+0x0000012f
    563e51b comsvcs!CSTAThread::DoWork+0x00000018
    563f24d comsvcs!CSTAThread::ProcessQueueWork+0x00000037
    563f4c0 comsvcs!CSTAThread::WorkerLoop+0x00000135
    773a1287 msvcrt!_endthreadex+0x00000044
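When a backtrace is long, it helps to skip the allocator and runtime frames (ntdll, msvcr90, LeakTrack) and jump to the first frame of the component you suspect. The C++ sketch below parses lines in the `<address> <module>!<function>+0x<offset>` form seen in the output above; `Frame`, `parseFrame` and `firstFrameInModule` are hypothetical names. Note that in this particular output the only leakcom frame shown is ATL::IDispatchImpl::Invoke, so the frame found is a starting point for reading the code rather than the exact leaking line.

```cpp
#include <cstdint>
#include <exception>
#include <optional>
#include <sstream>
#include <string>
#include <vector>

struct Frame {
    std::uint64_t address = 0;
    std::string module;
    std::string function;
    std::uint64_t offset = 0;
};

// Parses one backtrace line such as "77e9df42 ntdll!RtlAllocateHeap+0x00000274".
// Header lines (HEAP_ENTRY ..., address ... found in) yield std::nullopt.
std::optional<Frame> parseFrame(const std::string& line) {
    std::istringstream in(line);
    std::string addr, symbol;
    if (!(in >> addr >> symbol)) return std::nullopt;
    const auto bang = symbol.find('!');
    const auto plus = symbol.rfind("+0x");
    if (bang == std::string::npos || plus == std::string::npos || plus < bang) return std::nullopt;
    try {
        Frame f;
        f.address = std::stoull(addr, nullptr, 16);
        f.module = symbol.substr(0, bang);
        f.function = symbol.substr(bang + 1, plus - bang - 1);
        f.offset = std::stoull(symbol.substr(plus + 3), nullptr, 16);
        return f;
    } catch (const std::exception&) {
        return std::nullopt;  // address or offset was not valid hex
    }
}

// Returns the topmost frame that belongs to `module`, e.g. "leakcom".
std::optional<Frame> firstFrameInModule(const std::vector<std::string>& lines, const std::string& module) {
    for (const std::string& line : lines) {
        std::optional<Frame> f = parseFrame(line);
        if (f && f->module == module) return f;
    }
    return std::nullopt;
}
```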

Conclusion

By using Perfmon and DebugDiag, you can easily collect data that helps determine the cause of a memory leak in an application pool. If you are unable to find the root cause using these techniques, you can open a support ticket with Microsoft via https://support.microsoft.com/ and we can assist you with determining the cause of your issue. Please collect the dumps with stack tracing enabled beforehand (gflags -i w3wp.exe +ust), because the allocation stack traces help us analyze memory usage in detail. By having the Perfmon data and the dumps with stack traces ready when you open a case, you will dramatically reduce the amount of time necessary for us to assist.

Other Resources

- How to use the IIS Debug Diagnostics tool to troubleshoot a memory leak in an IIS process

- Why the low fragmentation heap (LFH) mechanism may be disabled on some computers that are running Windows Server 2003, Windows XP, or Windows 2000 (https://support.microsoft.com/kb/929136)

The scenario is pretty simple:

I have a memory leak in a Windows Store app, identified as native after using the dotMemory profiler. Since this tool is limited to analyzing managed memory, I did further research by performing a memory dump analysis using DebugDiag. This got me one step further: I found that the growing memory is committed to a heap used by mscoreei (does anyone have additional information about this DLL? A simple Google search does not return much).

DebugDiag also has a nice feature called Memory Leak analysis, which injects LeakTrack.dll into a running process to collect information about memory allocations; this in turn could help identify which parts of managed code use the leaking native resource. However, I get an error when I try to use this on my Windows Store app:

"Failed to monitor for leaks in process .... Please make sure that everyone has read and execute permissions for ...\LeakTrack.dll".

I modified the permissions on that file so that everyone has full access, but I still get the same error.

My questions are:

How can I use LeakTrack on Windows Store apps?

What is the best (or an alternative) approach to troubleshooting native memory leaks in Windows Store apps?

Answer

We solved this by giving the currently logged-on user (actually the group to which the user belonged) the 'Debug programs' user right.

Here are instructions on how to do this:

To access the local group policy:

- From the Start menu, choose Control Panel.

- In Control Panel, double-click Administrative Tools.

- In the Administrative Tools window, double-click Local Security Policy.

- In the Local Security Settings window, expand the Local Policies folder.

- Click User Rights Assignment.

- In the Policy column, double-click Debug programs to view current local group policy assignments in the Local Security Policy Setting dialog box.

- To add new users, click the Add User or Group button.
