Sample-Efficient Deep Reinforcement Learning via Episodic Backward Update

发表时间:2019 (NeurIPS 2019)

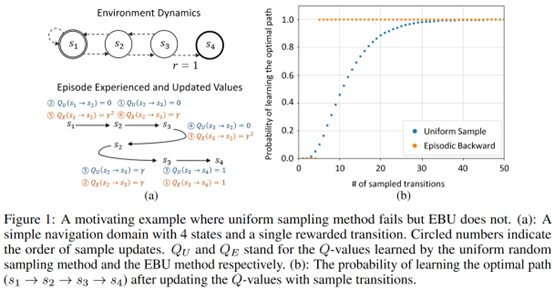

文章要点:这篇文章提出Episodic Backward Update (EBU)算法,采样一整条轨迹,然后从后往前依次更新做experience replay,这种方法对稀疏和延迟回报的环境有很好的效果(allows sparse and delayed rewards to propagate directly through all transitions of the sampled episode.)。

作者的观点是

(1) We have a low chance of sampling a transition with a reward for its sparsity.

(2) there is no point in updating values of one-step transitions with zero rewards if the values of future transitions with nonzero rewards have not been updated yet.

作者的解决方法是

(1) by sampling transitions in an episodic manner.

(2) by updating the values of transitions in a backward manner

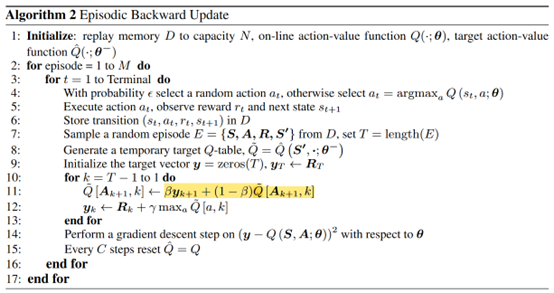

为了打破数据的相关性缓解overestimation,作者采用了一个diffusion factor \(\beta\)来做trade off。这个参数会在最新的估计和之前的估计之间做加权,take a weighted sum of the new backpropagated value and the pre-existing value estimate

算法伪代码如下

最后作者用多个learner设置不同的diffusion factor来学习,最终选一个来输出动作。We generate K learner networks with different diffusion factors, and a single actor to output a policy. For each episode, the single actor selects one of the learner networks in a regular sequence.这些learner的参数隔一段时间同步一次。

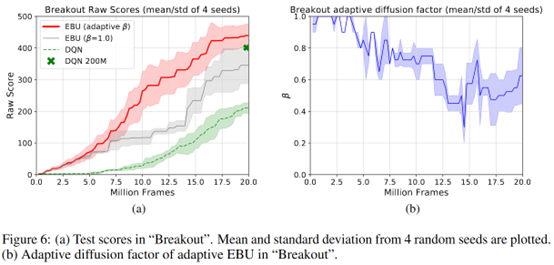

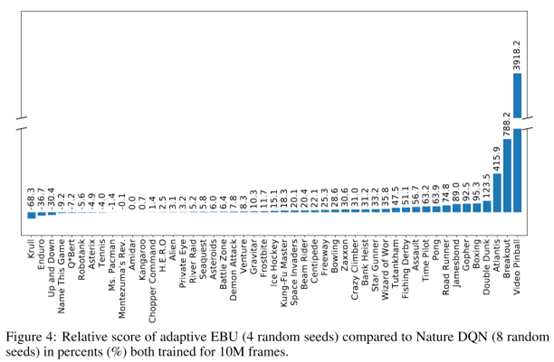

最终看起来有一定效果

总结:感觉依次更新问题应该不少啊,可能trick有点多。另外作者强调achieves the same mean and median human normalized performance of DQN by using only 5% and 10% of samples,有点牵强了。明显看出来训练一样多的step,很多游戏提升也不大

疑问:里面这个diffusion factor好像也不能打乱数据之间的相关性吧,不知道会不会有问题。

浙公网安备 33010602011771号

浙公网安备 33010602011771号