Installing and running PyTorch fails with "core dumped"

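1 Problem and solution summary

Host environment: Ubuntu 20.04, RTX 3090, GPU driver version 525.89.02

Problem: after creating a Python 3.10 virtual environment with Anaconda and installing PyTorch 2.2.2 (cu118) with the matching torchvision, training a model aborted with the error "core dumped" (shown as “核心已转储” on a Chinese-locale system).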

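Diagnosis and fix:

- Checked the documentation and confirmed that the driver supports CUDA 11.8. After installing CUDA 11.8 on the host, compiling and running a sample also worked.

- Used a network training sample to check whether PyTorch really works, because a plain import torch followed by a print succeeds and therefore proves little. The code is listed below. This confirmed that the problem lies in the installed PyTorch or torchvision.

- Tried Python 3.8 and Python 3.10 (neither helped), tried the cu118 builds of torch 2.2.2 and torch 2.2.1 (neither worked), and tried the cu117 build of torch 2.0.1 (worked).

[Open question] In theory any cu118 build of torch should run on this machine, yet none did, while stepping back one CUDA version to cu117 worked. The cause is unknown.

Below is the network training sample used to verify that the installed PyTorch and torchvision are really usable.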

import argparse
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms
from torch.optim.lr_scheduler import StepLR

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 32, 3, 1)
        self.conv2 = nn.Conv2d(32, 64, 3, 1)
        self.dropout1 = nn.Dropout(0.25)
        self.dropout2 = nn.Dropout(0.5)
        self.fc1 = nn.Linear(9216, 128)
        self.fc2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = F.max_pool2d(x, 2)
        x = self.dropout1(x)
        x = torch.flatten(x, 1)
        x = self.fc1(x)
        x = F.relu(x)
        x = self.dropout2(x)
        x = self.fc2(x)
        output = F.log_softmax(x, dim=1)
        return output

def train(args, model, device, train_loader, optimizer, epoch):
    model.train()
    for batch_idx, (data, target) in enumerate(train_loader):
        data, target = data.to(device), target.to(device)
        optimizer.zero_grad()
        output = model(data)
        loss = F.nll_loss(output, target)
        loss.backward()
        optimizer.step()
        if batch_idx % args.log_interval == 0:
            print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(
                epoch, batch_idx * len(data), len(train_loader.dataset),
                100. * batch_idx / len(train_loader), loss.item()))
            if args.dry_run:
                break

def test(model, device, test_loader):
    model.eval()
    test_loss = 0
    correct = 0
    with torch.no_grad():
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            output = model(data)
            test_loss += F.nll_loss(output, target, reduction='sum').item()  # sum up batch loss
            pred = output.argmax(dim=1, keepdim=True)  # get the index of the max log-probability
            correct += pred.eq(target.view_as(pred)).sum().item()

    test_loss /= len(test_loader.dataset)

    print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
        test_loss, correct, len(test_loader.dataset),
        100. * correct / len(test_loader.dataset)))

def main():
    # Training settings
    parser = argparse.ArgumentParser(description='PyTorch MNIST Example')
    parser.add_argument('--batch-size', type=int, default=64, metavar='N',
                        help='input batch size for training (default: 64)')
    parser.add_argument('--test-batch-size', type=int, default=1000, metavar='N',
                        help='input batch size for testing (default: 1000)')
    parser.add_argument('--epochs', type=int, default=14, metavar='N',
                        help='number of epochs to train (default: 14)')
    parser.add_argument('--lr', type=float, default=0.01, metavar='LR',
                        help='learning rate (default: 0.01)')
    parser.add_argument('--gamma', type=float, default=0.7, metavar='M',
                        help='Learning rate step gamma (default: 0.7)')
    parser.add_argument('--no-cuda', action='store_true', default=False,
                        help='disables CUDA training')
    parser.add_argument('--no-mps', action='store_true', default=False,
                        help='disables macOS GPU training')
    parser.add_argument('--dry-run', action='store_true', default=False,
                        help='quickly check a single pass')
    parser.add_argument('--seed', type=int, default=1, metavar='S',
                        help='random seed (default: 1)')
    parser.add_argument('--log-interval', type=int, default=10, metavar='N',
                        help='how many batches to wait before logging training status')
    # parser.add_argument('--save-model', action='store_true', default=False,
    #                     help='For Saving the current Model')
    args = parser.parse_args()

    # Pick the device: CUDA first, then Apple MPS, otherwise CPU
    use_cuda = not args.no_cuda and torch.cuda.is_available()
    use_mps = not args.no_mps and torch.backends.mps.is_available()

    torch.manual_seed(args.seed)

    if use_cuda:
        device = torch.device("cuda")
    elif use_mps:
        device = torch.device("mps")
    else:
        device = torch.device("cpu")

    train_kwargs = {'batch_size': args.batch_size}
    test_kwargs = {'batch_size': args.test_batch_size}
    if use_cuda:
        cuda_kwargs = {'num_workers': 1,
                       'pin_memory': True,
                       'shuffle': True}
        train_kwargs.update(cuda_kwargs)
        test_kwargs.update(cuda_kwargs)

    transform = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])
    dataset1 = datasets.MNIST('./data', train=True, download=True,
                              transform=transform)
    dataset2 = datasets.MNIST('./data', train=False,
                              transform=transform)
    train_loader = torch.utils.data.DataLoader(dataset1, **train_kwargs)
    test_loader = torch.utils.data.DataLoader(dataset2, **test_kwargs)

    model = Net().to(device)
    optimizer = optim.Adadelta(model.parameters(), lr=args.lr)

    scheduler = StepLR(optimizer, step_size=1, gamma=args.gamma)
    for epoch in range(1, args.epochs + 1):
        train(args, model, device, train_loader, optimizer, epoch)
        test(model, device, test_loader)
        scheduler.step()

    # if args.save_model:
    #     torch.save(model.state_dict(), "mnist_cnn.pt")


if __name__ == '__main__':
    main()
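A shorter check than the full MNIST sample can narrow things down before any dataset is downloaded. The sketch below is not part of the original troubleshooting; the function name torch_report is hypothetical. It prints the versions PyTorch reports about itself and then runs a single convolution forward and backward pass, which exercises cuDNN on the GPU in a way that printing a tensor does not. It assumes nothing beyond an installed torch, and falls back to the CPU when CUDA is unavailable.

```python
import importlib.util
import sys


def torch_report():
    """Collect version info and run one conv forward/backward pass."""
    report = {"python": sys.version.split()[0]}
    if importlib.util.find_spec("torch") is None:
        report["torch"] = None  # PyTorch is not installed in this environment
        return report

    import torch
    import torch.nn.functional as F

    report["torch"] = torch.__version__
    report["torch_cuda_runtime"] = torch.version.cuda  # None for CPU-only builds
    report["cudnn"] = torch.backends.cudnn.version()
    report["cuda_available"] = torch.cuda.is_available()

    device = torch.device("cuda" if report["cuda_available"] else "cpu")
    if report["cuda_available"]:
        report["gpu"] = torch.cuda.get_device_name(0)
        report["compute_capability"] = torch.cuda.get_device_capability(0)

    # A convolution goes through cuDNN on the GPU; tensor printing does not.
    x = torch.randn(8, 1, 28, 28, device=device, requires_grad=True)
    w = torch.randn(32, 1, 3, 3, device=device, requires_grad=True)
    loss = F.conv2d(x, w).relu().mean()
    loss.backward()
    if report["cuda_available"]:
        torch.cuda.synchronize()  # surface asynchronous CUDA errors here
    report["smoke_test_loss"] = loss.item()
    return report


if __name__ == "__main__":
    for key, value in torch_report().items():
        print(f"{key}: {value}")
```

If this script also dies with "core dumped" in the cu118 environment but completes in the cu117 one, the failure is reproduced without torchvision or the data loader being involved.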

2 Detailed process

2.1 Background

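The goal was to use the Lightning framework, whose environment.yaml recommends pytorch=2.*:

dependencies:
  - python=3.10
  - pytorch=2.*
  - torchvision=0.*
  - lightning=2.*

The PyTorch installations on the desktop machine were all version 1.12 or older, so a new PyTorch environment had to be installed.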

2.2 Confirm that the GPU driver and CUDA versions match

- nvidia-smi reports GPU Driver Version: 525.89.02

- Confirmed that the driver supports CUDA 11.8; see the cuda-compatibility guide and the CUDA Toolkit docs.
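The comparison in the two steps above can be scripted. The sketch below is an illustration with hypothetical helper names. The minimum driver value 520.61.05 is an assumption taken from the CUDA 11.8 GA row of NVIDIA's release-notes table as I recall it, so check it against the CUDA Toolkit docs before relying on it. The nvidia-smi query flags used are the standard --query-gpu and --format options.

```python
import subprocess

# Assumption: minimum Linux driver listed for CUDA 11.8 GA in NVIDIA's
# release notes. Verify against the CUDA Toolkit docs.
MIN_DRIVER_CUDA_11_8 = "520.61.05"


def version_tuple(version):
    """Turn '525.89.02' into (525, 89, 2) for numeric comparison."""
    return tuple(int(part) for part in version.strip().split("."))


def driver_at_least(installed, required):
    """Return True if the installed driver is at least the required version."""
    return version_tuple(installed) >= version_tuple(required)


def query_driver_version():
    """Ask nvidia-smi for the driver version of the first GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.splitlines()[0].strip()


if __name__ == "__main__":
    try:
        installed = query_driver_version()
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("nvidia-smi is not available on this machine")
    else:
        ok = driver_at_least(installed, MIN_DRIVER_CUDA_11_8)
        print(f"driver {installed} supports CUDA 11.8: {ok}")
```

For the driver in this post, driver_at_least("525.89.02", MIN_DRIVER_CUDA_11_8) returns True, which agrees with the manual check.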

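2.3 Install CUDA 11.8 and the matching cuDNN 8.9.7 on the host, and verify them

[In this experiment the PyTorch wheel ships its own CUDA runtime, so a separate installation on the host is not actually required.]

Install CUDA 11.8 and the matching cuDNN 8.9.7: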

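- Download and install cuda-11.8;

- Point the /usr/local/cuda symlink at cuda-11.8 and configure the environment in ~/.bashrc; see the guide on switching between multiple CUDA and cuDNN versions.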

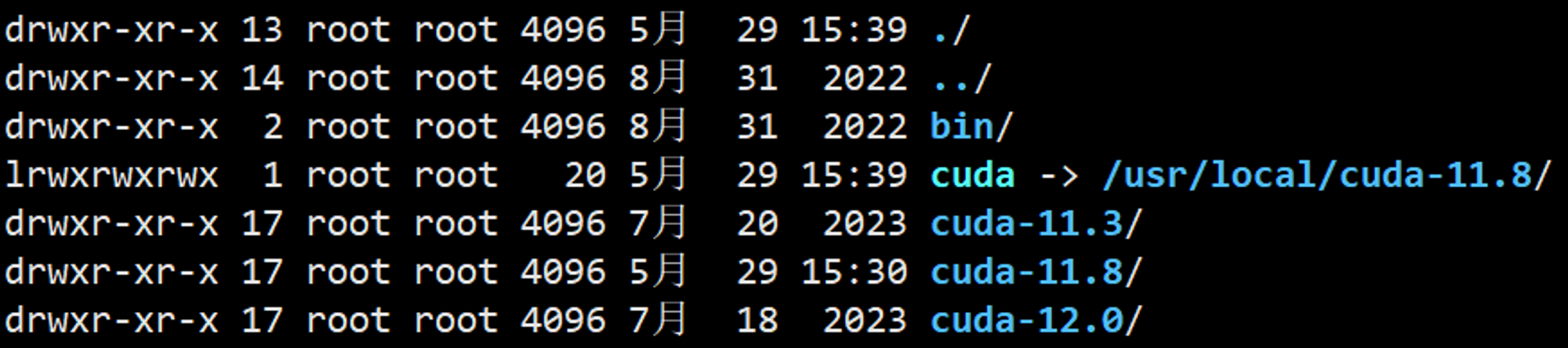

The host currently has three CUDA installations.

Verify that CUDA works:

Method 1: nvcc --version

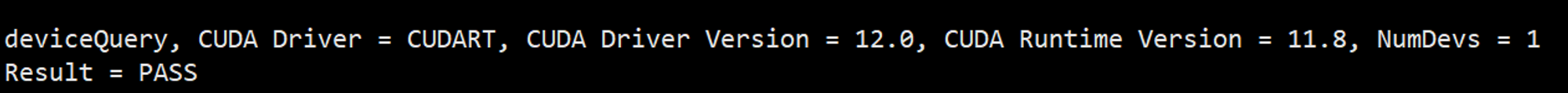

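Method 2: compile and run the samples that ship with CUDA (the /usr/local/cuda-11.8 directory contains no samples, so the samples from another installed CUDA version were used)

cd /usr/local/cuda-11.3/samples/1_Utilities/deviceQuery
sudo make
sudo ./deviceQuery

deviceQuery ran and printed its device report, which was taken as confirmation that CUDA 11.8 works.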

2.4 Install PyTorch and torchvision offline, and verify them

- Create a Python 3.10 environment with Anaconda: conda create -n py310 python=3.10

- Look up the matching PyTorch and torchvision versions on the official site:

# CUDA 11.8

pip install torch==2.2.2 torchvision==0.17.2 --index-url https://download.pytorch.org/whl/cu118

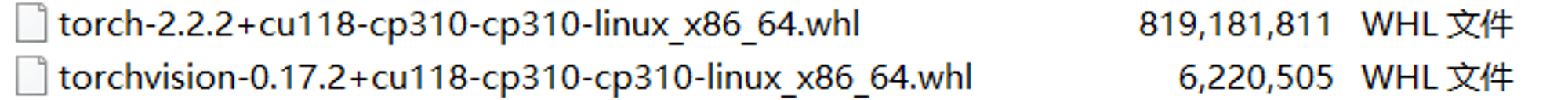

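- Download the matching wheels from the PyTorch version archive [here], choosing the builds for Python 3.10 and CUDA 11.8.

- After downloading, install them directly with pip: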

pip install torch-2.2.2+cu118-cp310-cp310-linux_x86_64.whl -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install torchvision-0.17.2+cu118-cp310-cp310-linux_x86_64.whl -i https://pypi.tuna.tsinghua.edu.cn/simple

- Verify that PyTorch and torchvision are usable, using the code in Section 1, "Problem and solution summary".
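With offline wheels it is easy to pick a file built for a different Python or CUDA version, so the filenames from the steps above are worth checking before the verification run. The sketch below uses hypothetical helper names and splits a wheel filename into its tags, then compares the Python tag with the running interpreter. It assumes the common five-field wheel name with no optional build tag, which holds for the two files in this post.

```python
import sys


def parse_wheel_name(filename):
    """Split 'name-version-python-abi-platform.whl' into its fields."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename}")
    stem = filename[: -len(".whl")]
    # Assumption: no optional build tag, so exactly five dash-separated fields.
    name, version, python_tag, abi_tag, platform_tag = stem.split("-")
    public_version, _, local_version = version.partition("+")
    return {
        "name": name,
        "version": public_version,
        "local": local_version,  # e.g. 'cu118' for the CUDA 11.8 build
        "python_tag": python_tag,
        "abi_tag": abi_tag,
        "platform_tag": platform_tag,
    }


def matches_interpreter(info):
    """Return True if the wheel's CPython tag matches the running interpreter."""
    current = f"cp{sys.version_info.major}{sys.version_info.minor}"
    return info["python_tag"] == current


if __name__ == "__main__":
    for wheel in (
        "torch-2.2.2+cu118-cp310-cp310-linux_x86_64.whl",
        "torchvision-0.17.2+cu118-cp310-cp310-linux_x86_64.whl",
    ):
        info = parse_wheel_name(wheel)
        print(info["name"], info["version"], info["local"],
              "matches this Python:", matches_interpreter(info))
```

Both wheels should report the same local tag (cu118 here). Different tags for torch and torchvision would point to a mismatched pair.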

Running the verification sample failed with "core dumped".
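The shell message alone does not say which signal killed the process or where in Python it happened. The sketch below is an illustration with a hypothetical helper name. It runs a command in a child process and reports the terminating signal, relying on the POSIX convention that subprocess returns a negative return code for a signal-killed child. Starting the child with Python's standard -X faulthandler option additionally makes it print a Python-level traceback on fatal signals such as SIGSEGV.

```python
import signal
import subprocess
import sys


def run_and_name_signal(cmd):
    """Run cmd; return the terminating signal's name, or None on a normal exit."""
    returncode = subprocess.run(cmd).returncode
    if returncode < 0:  # POSIX: -N means the child was killed by signal N
        return signal.Signals(-returncode).name
    return None


# Usage sketch (the script name is hypothetical):
# name = run_and_name_signal(
#     [sys.executable, "-X", "faulthandler", "mnist_check.py"])
# print("terminated by:", name)
```

A result of SIGSEGV or SIGABRT, together with the faulthandler traceback, shows which Python call was active when the native code crashed.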

2.5 Fix

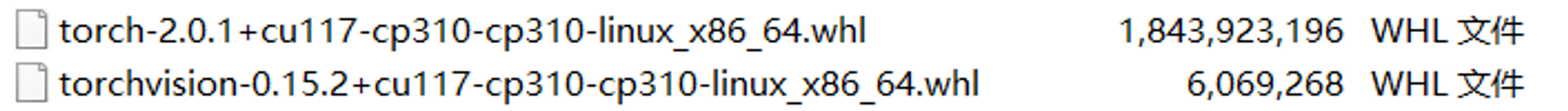

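Created a new Python 3.10 virtual environment, downloaded the PyTorch build for CUDA 11.7, and reinstalled. Verification passed.

[Open question] According to the documentation this GPU driver supports CUDA 11.8, and the CUDA 11.8 installed on the host was also verified to work, so in theory any cu118 build of torch should run. In practice none did, while stepping back one CUDA version to cu117 worked. The cause is unknown.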
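One thing that may be worth inspecting, although this post did not verify it, is which CUDA libraries the crashing process actually loads. Section 2.3 installed a host cuDNN and edited ~/.bashrc, and on Linux the list of mapped shared objects is readable from /proc/self/maps. The sketch below uses a hypothetical helper name and filters that listing for a few library name prefixes chosen for illustration. It is a diagnostic aid under those assumptions, not an explanation of the failure.

```python
def loaded_cuda_libraries(maps_text,
                          prefixes=("libcudnn", "libcudart", "libcublas")):
    """Return sorted unique paths of mapped libraries whose file name
    starts with one of the given prefixes."""
    paths = set()
    for line in maps_text.splitlines():
        # Fields: address, perms, offset, device, inode, optional pathname
        fields = line.split(None, 5)
        if len(fields) < 6:
            continue  # anonymous mapping with no pathname
        path = fields[5].strip()
        file_name = path.rsplit("/", 1)[-1]
        if file_name.startswith(prefixes):
            paths.add(path)
    return sorted(paths)


# Usage sketch on Linux, after `import torch` and one GPU convolution:
# with open("/proc/self/maps") as f:
#     for path in loaded_cuda_libraries(f.read()):
#         print(path)
```

Paths under the environment's site-packages mean the libraries bundled with the wheel are in use. Paths under /usr/local/cuda would mean host libraries were picked up instead, which could then be compared between the cu118 and cu117 environments.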