PyTorch练手项目二:MNIST手写数字识别

本文目的:展示如何利用PyTorch进行手写数字识别。

1 导入相关库,定义一些参数

import torch

import torch.nn as nn

import torch.nn.functional as F

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

#定义一些参数

BATCH_SIZE = 64

EPOCHS = 10

DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")

2 准备数据

使用Pytorch自带数据集。

#图像预处理

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,))

])

#训练集

train_set = datasets.MNIST('data', train=True, transform=transform, download=True)

train_loader = DataLoader(train_set,

batch_size=BATCH_SIZE,

shuffle=True)

#测试集

test_set = datasets.MNIST('data', train=False, transform=transform, download=True)

test_loader = DataLoader(test_set,

batch_size=BATCH_SIZE,

shuffle=True)

3 准备模型

#搭建模型

class ConvNet(nn.Module):

#图像输入是(batch,1,28,28)

def __init__(self):

super().__init__()

self.conv1 = nn.Conv2d(1, 10, (3,3)) #输入通道数为1,输出通道数为10,卷积核(3,3)

self.conv2 = nn.Conv2d(10, 32, (3,3))

self.fc1 = nn.Linear(12*12*32, 100)

self.fc2 = nn.Linear(100, 10)

def forward(self, x):

x = self.conv1(x) #(batch,10,26,26)

x = F.relu(x)

x = self.conv2(x) #(batch,32,24,24)

x = F.relu(x)

x = F.max_pool2d(x, (2,2)) #(batch,32,12,12)

x = x.view(x.size(0), -1) #flatten (batch,12*12*32)

x = self.fc1(x) #(batch,100)

x = F.relu(x)

x = self.fc2(x) #(batch,10)

out = F.log_softmax(x, dim=1) #softmax激活并取对数,数值上更稳定

return out

4 训练

#定义模型和优化器

model = ConvNet().to(DEVICE) #模型移至GPU

optimizer = torch.optim.Adam(model.parameters())

#定义训练函数

def train(model, device, train_loader, optimizer, epoch): #跑一个epoch

model.train() #开启训练模式,即启用BatchNormalization和Dropout等

for batch_idx, (data, target) in enumerate(train_loader): #每次产生一个batch

data, target = data.to(device), target.to(device) #产生的数据移至GPU

output = model(data)

loss = F.nll_loss(output, target) #CrossEntropyLoss = log_softmax + NLLLoss

optimizer.zero_grad() #所有梯度清零

loss.backward() #反向传播求所有参数梯度

optimizer.step() #沿负梯度方向走一步

if(batch_idx+1) % 234 == 0:

print('Train Epoch: {} [{}/{} ({:.1f}%)]\tLoss: {:.6f}'.format(

epoch, (batch_idx+1) * len(data), len(train_loader.dataset),

100. * (batch_idx+1) / len(train_loader), loss.item()))

#定义测试函数

def test(model, device, test_loader):

model.eval() #测试模式,不启用BatchNormalization和Dropout

test_loss = 0

correct = 0

with torch.no_grad(): #避免梯度跟踪

for data, target in test_loader:

data, target = data.to(device), target.to(device)

output = model(data)

test_loss += F.nll_loss(output, target, reduction='sum').item() #将一批损失相加

pred = output.max(1, keepdim=True)[1] #找到概率最大的下标

#上句效果等同于 pred = torch.argmax(output, dim=1, keepdim=True)

correct += pred.eq(target.view_as(pred)).sum().item()

test_loss /= len(test_loader.dataset)

#len(train_loader)为batch数,len(train_loader.dataset)为样本总数

print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.1f}%)\n'.format(

test_loss, correct, len(test_loader.dataset),

100. * correct / len(test_loader.dataset)))

#开始训练

for epoch in range(1, EPOCHS + 1):

train(model, DEVICE, train_loader, optimizer, epoch)

test(model, DEVICE, test_loader)

注意,torch.max()有两种用法:

- 直接传入一个tensor,则返回全局最大值;

- torch.max(a, dim, [keepdim])返回一个tuple,前者为最大值结果,后者为indices(效果同argmax);

- 详见 https://pytorch.org/docs/stable/torch.html?highlight=max#torch.max

- 此处 output.max() 与 torch.max()类似,只不过无需传入tensor

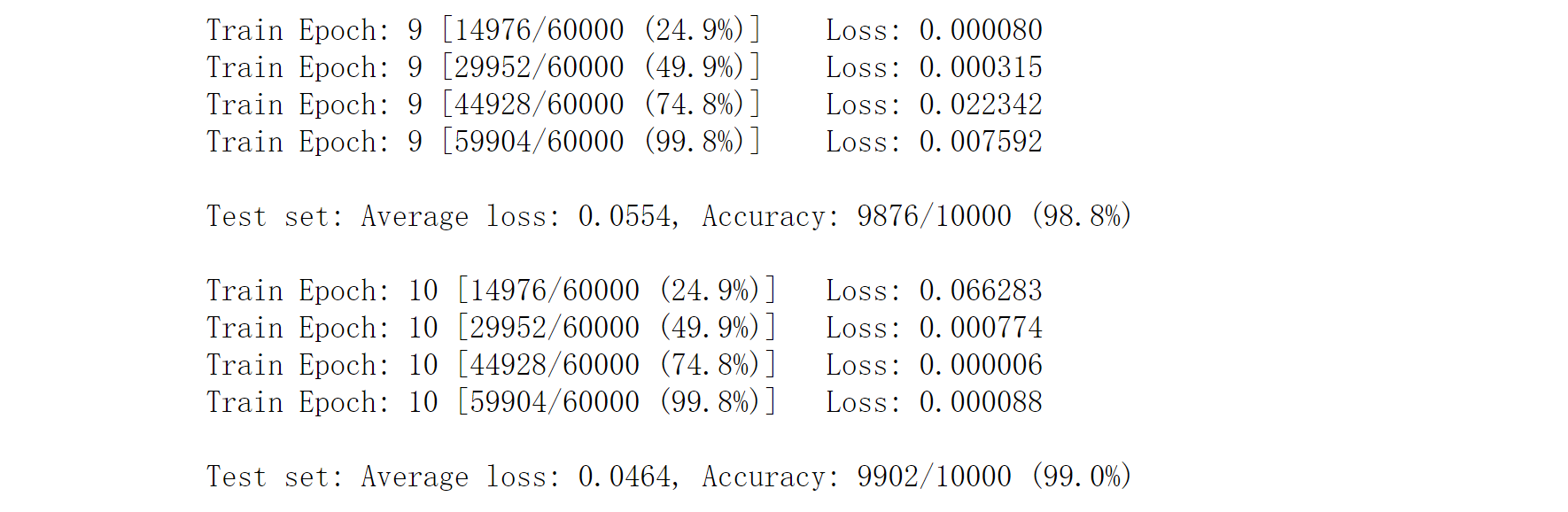

最终结果如下:

5 小结

- 任务流程:准备数据,准备模型,训练

- 如何使用PyTorch自带数据集进行训练

- 自定义模型需要实现forward函数

- model.train()和model.eval()作用

- 最后一层x的交叉熵两种方式等价:CrossEntropyLoss = log_softmax + nll_loss

- torch.max()有两种用法,返回值不一样

Reference