Configuring a Hadoop Cluster Environment on CentOS 7

Reference:

https://blog.csdn.net/pucao_cug/article/details/71698903

After setting up passwordless login, the ssh service must be restarted:

systemctl restart sshd.service

About the ssh service:

There are two ways to log in:

1. Password login

2. Key login
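
A minimal sketch of how key login is usually prepared on one node, assuming the stock OpenSSH tools (`ssh-keygen`, `ssh-copy-id`) are installed. The `SSH_DIR` and `KEY` variables and the `hadoop@slave1` target are illustrative names, not taken from the referenced article.

```shell
#!/usr/bin/env bash
# Directory holding the keys; ~/.ssh is the OpenSSH default.
SSH_DIR="${SSH_DIR:-$HOME/.ssh}"
KEY="$SSH_DIR/id_rsa"

mkdir -p "$SSH_DIR"
chmod 700 "$SSH_DIR"

# Generate an RSA key pair with an empty passphrase, unless one already exists.
if [ ! -f "$KEY" ]; then
  ssh-keygen -q -t rsa -b 4096 -N "" -f "$KEY"
fi

# Authorize the public key for logins to this same account (the local node).
touch "$SSH_DIR/authorized_keys"
if ! grep -qxF "$(cat "$KEY.pub")" "$SSH_DIR/authorized_keys"; then
  cat "$KEY.pub" >> "$SSH_DIR/authorized_keys"
fi
chmod 600 "$SSH_DIR/authorized_keys"

# For every other node, copy the public key over (asks for the password once).
# "hadoop@slave1" is a hypothetical user and host name:
#   ssh-copy-id -i "$KEY.pub" hadoop@slave1
```

Once the key is in place on every node, `ssh <host>` should log in without asking for a password.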

Startup:

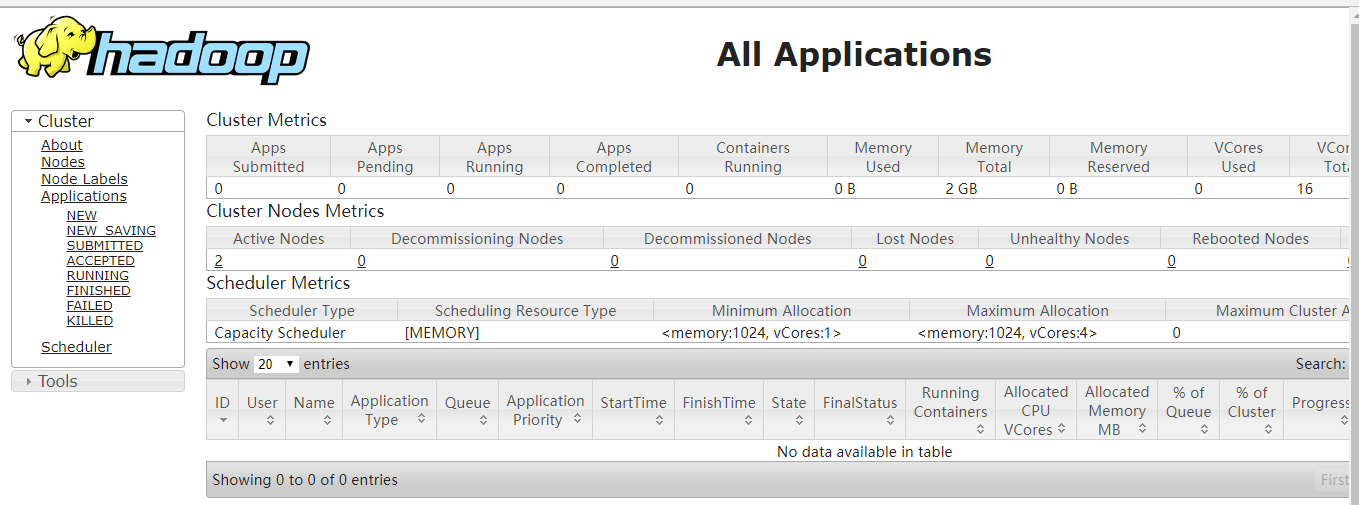

http://192.168.5.130:8088/cluster

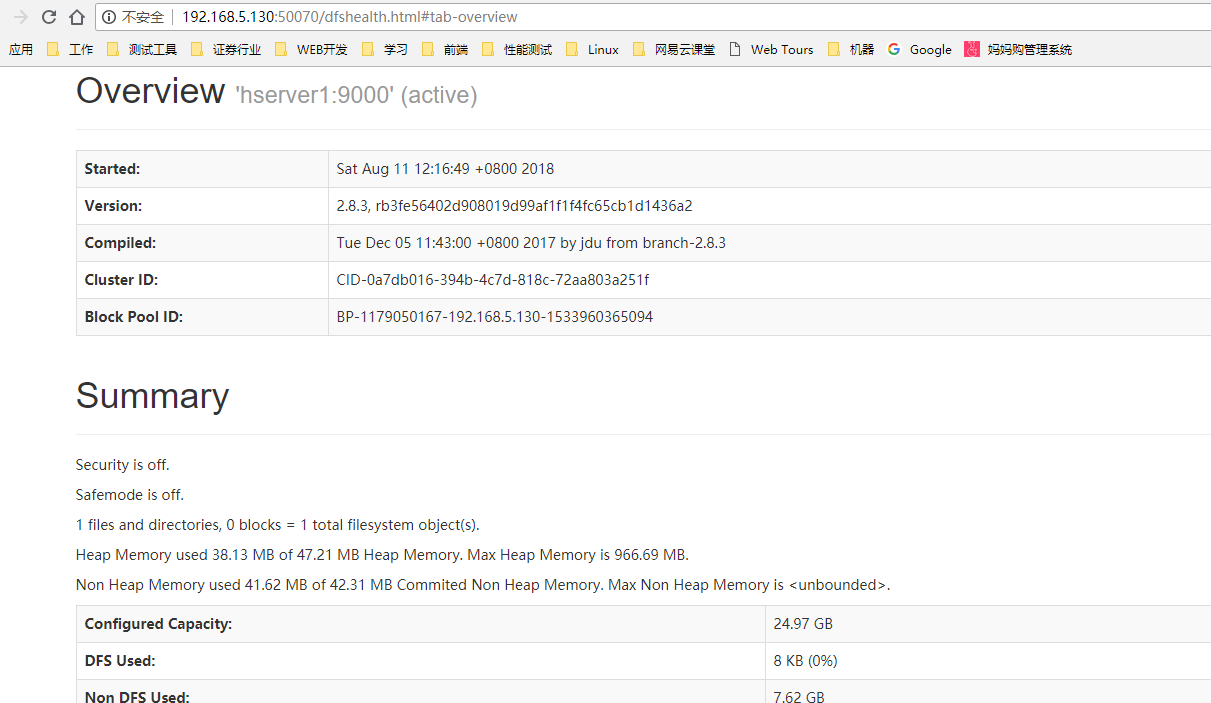

http://192.168.5.130:50070/dfshealth.html#tab-overview

Installing the Hadoop family tools

hive with the MySQL JDBC driver https://blog.csdn.net/pucao_cug/article/details/71773665

impala

sqoop https://blog.csdn.net/pucao_cug/article/details/72083172

hbase https://blog.csdn.net/pucao_cug/article/details/72229223

Startup reports an error:

https://blog.csdn.net/l1028386804/article/details/51538611

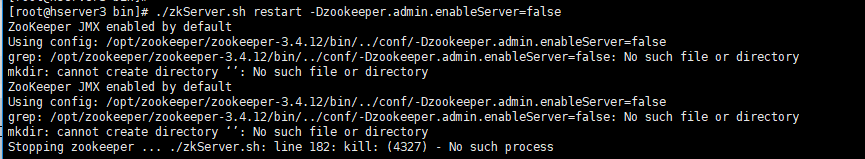

Installing ZooKeeper

Reference: https://blog.csdn.net/pucao_cug/article/details/72228973

zookeeper status

Cause: the myid file does not match the configuration in zoo.cfg
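
A quick way to spot this mismatch is to check that the number stored in `myid` has a corresponding `server.<id>=` line in `zoo.cfg`. The helper below is a sketch; `check_myid` is a hypothetical function name, and the two file paths are passed in because they depend on where ZooKeeper was installed and on its `dataDir` setting.

```shell
#!/usr/bin/env bash
# Check that the id stored in myid has a matching server.<id> entry in zoo.cfg.
# Usage: check_myid /path/to/zoo.cfg /path/to/myid
check_myid() {
  local cfg="$1" myid_file="$2" id
  # myid should contain a single number and nothing else.
  id="$(tr -d '[:space:]' < "$myid_file")"
  if ! [[ "$id" =~ ^[0-9]+$ ]]; then
    echo "INVALID: ${myid_file} does not contain a plain number" >&2
    return 1
  fi
  # Look for a line such as "server.2=host:2888:3888" for this id.
  if grep -Eq "^[[:space:]]*server\.${id}[[:space:]]*=" "$cfg"; then
    echo "OK: server.${id} found in ${cfg}"
  else
    echo "MISMATCH: no server.${id} entry in ${cfg}" >&2
    return 1
  fi
}
```

Run it on each node; a node whose `myid` has no matching `server.<id>` line is the one to fix.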

Importing data from a txt file into a Hive table:

create table student(id int,name string) row format delimited fields terminated by '\t';

load data local inpath '/opt/hadoop/hive/student.txt' into table db_hive_edu.student;
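
For the load to populate both columns, each line of the file has to hold an id and a name separated by a real TAB character. The sketch below writes such a file; the three rows are made-up sample data and `OUT` is an illustrative variable that defaults to the current directory.

```shell
#!/usr/bin/env bash
# Write a small tab-separated file matching student(id int, name string).
# The rows are made-up sample data; OUT defaults to the current directory.
OUT="${OUT:-./student.txt}"

# printf expands \t to a real TAB, the delimiter declared in the table DDL.
# The format string is reused for each pair of arguments.
printf '%s\t%s\n' \
  1 zhangsan \
  2 lisi \
  3 wangwu > "$OUT"

# Sanity check: every line must have exactly two TAB-separated fields.
awk -F'\t' 'NF != 2 { bad = 1 } END { exit bad + 0 }' "$OUT" && echo "ok: $OUT"
```

Copy the file to /opt/hadoop/hive/student.txt before running the load statement above.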

Importing a CSV file into Hive:

create table table_name( id string, name string, age string ) row format serde 'org.apache.hadoop.hive.serde2.OpenCSVSerde' with SERDEPROPERTIES ("separatorChar"=",","quoteChar"="\"") STORED AS TEXTFILE;

load data local inpath '/opt/hadoop/hive/table_name.csv' overwrite into table table_name;

Converting the table to an ORC table:

create table table_name_orc( id string, name string, age string ) row format delimited fields terminated by "\t" STORED AS ORC;

insert overwrite table table_name_orc select * from table_name;

