[k8s] Configuring NFS as backend storage for k8s, sharing storage across multiple nginx pods, and StatefulSet configuration

Install NFS on all nodes

yum install nfs-utils rpcbind -y

mkdir -p /ifs/kubernetes

echo "/ifs/kubernetes 192.168.x.0/24(rw,sync,no_root_squash)" >> /etc/exports

On the NFS server only: systemctl start rpcbind nfs

Test the mount from the nodes; if it works, NFS is ready.
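A quick way to check the export from a node, as a sketch. `MOUNT_POINT` is a scratch directory I made up for the test; the server address and export path are the ones used elsewhere in this post. The script only prints the commands, so you can read them first and then pipe the output to `sh` as root.

```shell
#!/bin/sh
# Assumed values: override them through the environment to match your setup.
NFS_SERVER="${NFS_SERVER:-192.168.x.135}"
NFS_PATH="${NFS_PATH:-/ifs/kubernetes}"
MOUNT_POINT="${MOUNT_POINT:-/mnt/nfs-test}"   # hypothetical scratch directory

# Print the commands a node would run to verify the export.
nfs_test_commands() {
    cat <<EOF
showmount -e $NFS_SERVER
mkdir -p $MOUNT_POINT
mount -t nfs $NFS_SERVER:$NFS_PATH $MOUNT_POINT
touch $MOUNT_POINT/hello && ls -l $MOUNT_POINT
umount $MOUNT_POINT
EOF
}

nfs_test_commands
```

If `showmount` lists the export and the `touch` succeeds, the node can use the share.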

For more detail, see my earlier post:

http://blog.csdn.net/iiiiher/article/details/77865530

Set up NFS as storage

Reference:

https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client/deploy

This Deployment runs an NFS client provisioner. It mounts /ifs/kubernetes, and every volume created afterwards gets its own subdirectory under that directory.

$ cat deployment.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.x.135
            - name: NFS_PATH
              value: /ifs/kubernetes
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.x.135
            path: /ifs/kubernetes

$ cat class.yaml

apiVersion: storage.k8s.io/v1beta1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name; must match the deployment's env PROVISIONER_NAME

$ cat test-claim.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi

$ cat test-pod.yaml

kind: Pod
apiVersion: v1
metadata:
  name: test-pod
spec:
  containers:
    - name: test-pod
      image: busybox
      command:
        - "/bin/sh"
      args:
        - "-c"
        - "touch /mnt/SUCCESS && exit 0 || exit 1"
      volumeMounts:
        - name: nfs-pvc
          mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-claim
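The four manifests can be applied in order and then checked, roughly like this. It is only a sketch: the `run` helper and the `DRY_RUN` switch are my own additions, and by default the script just prints the kubectl commands instead of running them.

```shell
#!/bin/sh
# With DRY_RUN=1 (the default here) commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: echo the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

deploy_nfs_test() {
    # Provisioner first, then the StorageClass, then the claim and the pod.
    for f in deployment.yaml class.yaml test-claim.yaml test-pod.yaml; do
        run kubectl create -f "$f"
    done
    run kubectl get pvc test-claim   # the claim should end up Bound
    run kubectl get pv               # a PV should have been created for it
    run kubectl get pod test-pod     # check whether the pod ran to completion
}

deploy_nfs_test
```

Set `DRY_RUN=0` to run the commands for real. If everything works, a file named SUCCESS should show up in the volume's subdirectory under /ifs/kubernetes on the NFS server.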

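By default, creating a PVC automatically creates the matching PV. After the PVC is deleted by hand, its directory on the NFS share is left behind in an archived state. I haven't figured out yet where this behavior is controlled.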
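As far as I can tell from the provisioner's source, the subdirectory is named after the namespace, the PVC and the PV, and on deletion it is renamed with an `archived-` prefix rather than removed. Treat that naming scheme as an assumption about the version used here. The sketch below just builds the two names so you know what to look for on the NFS server; `pvc-1234` is a made-up PV name.

```shell
#!/bin/sh
# Directory the provisioner is assumed to create for a volume:
#   <namespace>-<pvcName>-<pvName>
pv_dir_name() {
    printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Name the directory is assumed to get once the PVC has been deleted.
archived_dir_name() {
    printf 'archived-%s\n' "$(pv_dir_name "$1" "$2" "$3")"
}

# pvc-1234 is a hypothetical PV name used only for illustration.
pv_dir_name default test-claim pvc-1234
archived_dir_name default test-claim pvc-1234
```

Newer releases of the provisioner appear to offer an `archiveOnDelete` StorageClass parameter to turn the archiving off; I have not verified that against the image used in this post.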

todo:

Verify the following for the PVC:

capacity

read/write behavior

reclaim policy

Implementing shared storage (left half of the diagram)

$ cat nginx-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nginx-claim
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
spec:
  accessModes:
    - ReadOnlyMany
  resources:
    requests:
      storage: 1Mi

$ cat nginx-deployment.yaml

apiVersion: apps/v1beta1 # for versions before 1.8.0 use apps/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: nfs-pvc
              mountPath: "/usr/share/nginx/html"
      volumes:
        - name: nfs-pvc
          persistentVolumeClaim:
            claimName: nginx-claim

$ cat nginx-svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: svc-nginx
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
    - protocol: TCP
      port: 80 # required by the Service spec; missing from the original listing
      targetPort: 80
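To actually see several nginx pods share one volume, the Deployment can be scaled up after it is created. A sketch under a few assumptions: the replica count of 3 is arbitrary, and the `run` helper with its `DRY_RUN` switch is my own addition so that the script prints the commands by default.

```shell
#!/bin/sh
# With DRY_RUN=1 (the default here) commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical helper: echo the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

share_nginx_volume() {
    replicas="${1:-3}"
    run kubectl create -f nginx-pvc.yaml
    run kubectl create -f nginx-deployment.yaml
    run kubectl create -f nginx-svc.yaml
    # Every replica mounts the same claim, so they all serve the same files.
    run kubectl scale deployment nginx-deployment --replicas="$replicas"
    run kubectl get pods -l app=nginx -o wide
    run kubectl get svc svc-nginx    # shows the NodePort that was assigned
}

share_nginx_volume 3
```

Dropping an index.html into the claim's subdirectory on the NFS server should then be visible through every replica.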

For the right half, see:

https://feisky.gitbooks.io/kubernetes/concepts/statefulset.html

todo:

I want to verify how the left-hand setup behaves when the access mode is ReadWriteOnce.

---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1beta1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
        annotations:
          volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 1Mi
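Unlike the Deployment, where all pods share one claim, each StatefulSet pod gets its own PVC generated from volumeClaimTemplates. The claims are named after the template, the StatefulSet and the pod ordinal. The small function below (my own helper, not part of any tool) lists the names to expect in `kubectl get pvc`.

```shell
#!/bin/sh
# Print the PVC names produced by a StatefulSet's volumeClaimTemplates:
#   <template name>-<statefulset name>-<ordinal>
statefulset_pvc_names() {
    template="$1"
    sts="$2"
    replicas="$3"
    i=0
    while [ "$i" -lt "$replicas" ]; do
        echo "${template}-${sts}-${i}"
        i=$((i + 1))
    done
}

# Template "www", StatefulSet "web", 2 replicas, as in the manifest above.
statefulset_pvc_names www web 2
```

For the manifest above this gives www-web-0 and www-web-1, one claim and therefore one NFS subdirectory per pod.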

A PVC can also select the NFS storage (the StorageClass) through storageClassName

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: spring-pvc
  namespace: kube-public
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
    - ReadOnlyMany
  resources:
    requests:
      storage: 100Mi

GlusterFS reference:

https://github.com/kubernetes-incubator/external-storage/tree/master/gluster/glusterfs

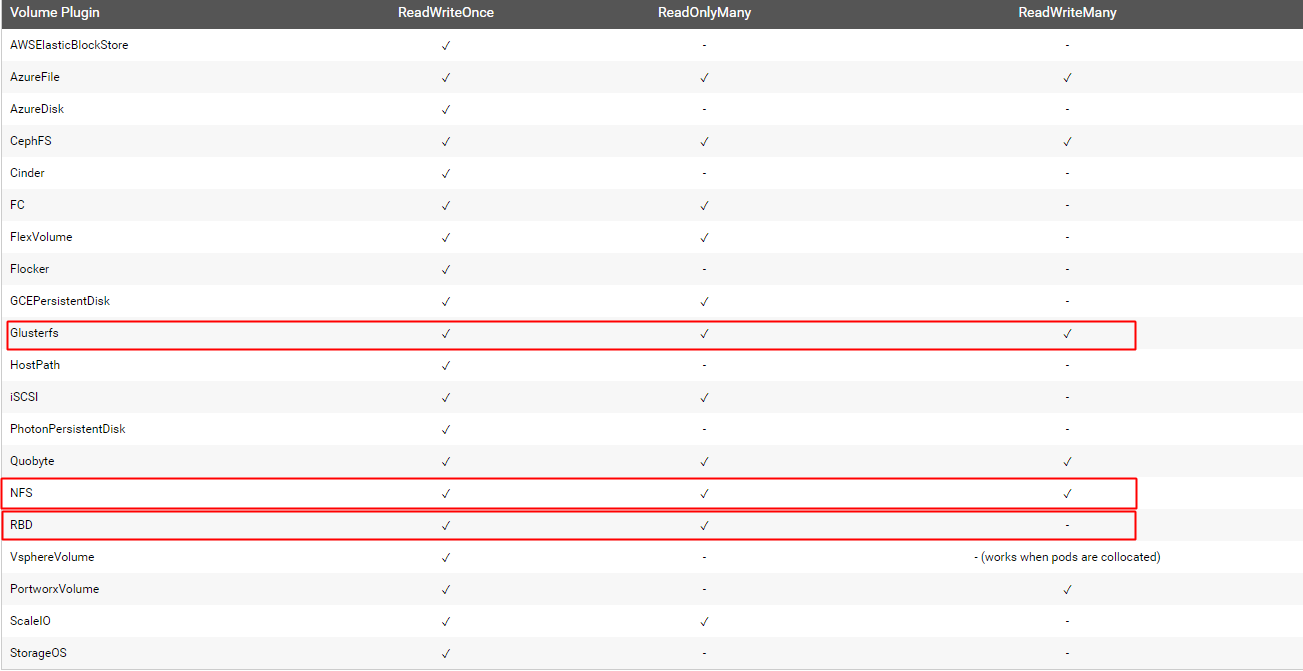

PV access modes (1.9)

Reference:

ReadWriteOnce / ReadOnlyMany / ReadWriteMany
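The three modes, together with the abbreviations that `kubectl get pv` prints, can be summed up in a small lookup. This is just a memory aid written as a shell function; the function name is my own.

```shell
#!/bin/sh
# Map an access mode (or its kubectl abbreviation) to what it means.
access_mode_meaning() {
    case "$1" in
        ReadWriteOnce|RWO) echo "read-write, mountable by a single node" ;;
        ReadOnlyMany|ROX)  echo "read-only, mountable by many nodes" ;;
        ReadWriteMany|RWX) echo "read-write, mountable by many nodes" ;;
        *) echo "unknown access mode: $1" >&2; return 1 ;;
    esac
}

for mode in ReadWriteOnce ReadOnlyMany ReadWriteMany; do
    printf '%s: %s\n' "$mode" "$(access_mode_meaning "$mode")"
done
```

Note that the modes are counted per node, not per pod.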
