Automatic Download and Archiving Software for Earthquake Precursor Data, Written in Python

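Seismic stations handle many kinds of precursor data, and every day staff have to download precursor data files, log files and so on, which is time-consuming and laborious. To solve this, I recently used Python 3.8 to write a crawler-based tool for the station system that automatically downloads and archives precursor data. It saves and archives the data files and observation logs of deformation (water-tube tiltmeter, extensometer, vertical pendulum), water temperature, gravity and the three meteorological elements.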
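The crawl-then-archive idea can be illustrated with a minimal sketch. It assumes only the water-tube tiltmeter and extensometer hosts, and reuses their file-name pattern from `data_download()` (as a raw string with the dot escaped) and their archive roots and year/month slices from `create_dir()` in the listing below. `list_files()`, `archive_dir()` and `plan()` are hypothetical helpers. Unlike the listing, the sketch removes duplicate names with a set rather than keeping every second regex match, and it does not drop the newest file.

```python
import re

# File-name pattern from data_download() for the tiltmeter/extensometer pages,
# written as a raw string with the dot escaped.
EPD_PATTERN = re.compile(r"[0-9]+X[0-9]+[A-Z]\.EPD")

# Archive roots from create_dir(); only two instruments are shown here.
ARCHIVE_ROOTS = {
    "http://10.22.114.31/": "d:/观测数据下载/形变数据/水管仪/",  # water-tube tiltmeter
    "http://10.22.114.32/": "d:/观测数据下载/形变数据/伸缩仪/",  # extensometer
}


def list_files(html):
    """Return the distinct EPD file names found on a listing page, sorted by name."""
    return sorted(set(EPD_PATTERN.findall(html)))


def archive_dir(base_url, file_name):
    """Return <root><year>/<month>/ using the same slices as create_dir()."""
    year = "20" + file_name[9:11]
    month = file_name[11:13]
    return ARCHIVE_ROOTS[base_url] + year + "/" + month + "/"


def plan(base_url, html):
    """Pair every file on the page with the directory it would be archived in."""
    return [(name, archive_dir(base_url, name)) for name in list_files(html)]
```

For a page on the extensometer host `http://10.22.114.32/` that links to `22004231X200131A.EPD` and `22004231X200201A.EPD`, `plan()` assigns them to `d:/观测数据下载/形变数据/伸缩仪/2020/01/` and `d:/观测数据下载/形变数据/伸缩仪/2020/02/`.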

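After packaging it with PyInstaller, I put it on the work computer. The staff member on duty only has to double-click it, and the program downloads and archives all the precursor data automatically, which lightens the staff's workload.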
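A minimal sketch of the packaging step, assuming PyInstaller is installed (`pip install pyinstaller`) and the script is saved as `precursor_download.py` (a hypothetical name). `PyInstaller.__main__.run()` accepts the same arguments as the `pyinstaller` command line; `pyinstaller_args()` and `build()` are illustrative helpers, not part of the original program.

```python
def pyinstaller_args(script, name):
    """Command-line arguments for a one-file console build of script."""
    # --onefile: bundle everything into a single .exe that can be double-clicked
    # --console: keep the console window so progress and the closing message stay visible
    # --name:    file name of the generated executable
    return ["--onefile", "--console", "--name", name, script]


def build(script, name):
    """Run PyInstaller in-process; requires `pip install pyinstaller`."""
    # Imported here so pyinstaller_args() can be used without PyInstaller installed
    import PyInstaller.__main__

    PyInstaller.__main__.run(pyinstaller_args(script, name))
```

`build("precursor_download.py", "precursor_download")` is equivalent to `pyinstaller --onefile --console --name precursor_download precursor_download.py` and writes the executable to `dist/`. chromedriver.exe is not bundled, so the path hard-coded in `getGravityCookie()` must exist on the duty computer.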

"""

# 本程序为长白山火山监测数据下载专用软件。可实现火山形变、重力、深层水温等监测数据自动下载到指定目录

# 重要提示:在前兆文件下载页要求输入用户名和密码页,用谷歌浏览器按F12,出现“开发者工具”,按Ctrl+R,

# 选择network标签,filter标签选择all,可以看见download..点一下,可以看见requestURL,这时候输入用户名和密码,提交

# 又出现一个requestURL,点下面的,这时候右侧的url中包含了用户输入的用户名和密码信息,这个url就是实际文件的下载页

# 复制这个url,通过编辑下载这个网页文件就行了。

"""

import os

import re

import requests

import time

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

from tqdm import tqdm

from urllib.request import urlopen

def getGravityCookie():
    """
    The key to this method is to download chromedriver.exe first. At run time the code opens Google Chrome,
    fills in the user name and password and performs the whole simulated login in code, which yields a valid
    cookie for the site. This approach works well for sites whose cookie changes frequently.

    Once obtained, the cookie is returned as a "name=value; name=value" string for the Cookie request header.
    """
    # The next three lines run Chrome in headless mode: no browser window opens and everything runs inside the program.
    # chrome_options only takes effect together with the commented-out webdriver.Chrome(...) call below.
    chrome_options = Options()
    chrome_options.add_argument("--headless")
    # browser = webdriver.Chrome(executable_path=r'D:\chrome_beta\ChromePortable\App\Chrome\chromedriver.exe', options=chrome_options)

    # Without headless mode, use the following call instead: Chrome opens and you can watch the login run automatically.
    # (Selenium 3 API: Selenium 4 removed executable_path and the find_element_by_* methods.)
    browser = webdriver.Chrome(
        # Set this to the actual path of chromedriver.exe on the machine running the program
        executable_path=r'D:\chrome_beta\ChromePortable\App\Chrome\chromedriver.exe')
    try:
        # Open the login page
        browser.get("http://10.22.114.37")
        # Enter the user name
        browser.find_element_by_id("userName").send_keys("debug")
        # Enter the password
        browser.find_element_by_id("pwd").send_keys("01234567")
        # Click the sign-in button
        browser.find_element_by_name("submit").click()
        # Read the cookies set after logging in
        cookie_items = browser.get_cookies()
    finally:
        # Close Chrome even if the login fails
        browser.quit()
    # Assemble the cookie string: "name1=value1; name2=value2"
    cookie = "; ".join(item["name"] + "=" + item["value"] for item in cookie_items)
    # This is the valid gravimeter cookie
    return cookie

def welcome(i):
    print('-' * 80)
    print('Welcome to: \033[1;31m[Volcano Precursor Monitoring Data Auto-Download and Archiving Software V2.0]\033[0m')
    print('Developer: \033[1;31mChangbaishan Tianchi Volcano Monitoring Station, Jilin Province\033[0m\n')
    # print('Release date: "\033[1;31m2020-02-06\033[0m"')
    print('\033[1;31mCopyright © 2020 All Rights Reserved\033[0m')
    print('Now downloading ' + i)
    print('-' * 80)

def create_dir(base_url, target_url, file_name, gravity_cookie, num_saved):
    """Parse each target URL, create the archive directory and save the file."""
    count = 0
    for i in target_url:
        # Work out the year and month from the file name to choose the archive directory
        if base_url == 'http://10.22.114.37/':
            year = file_name[count][0:4]
        elif base_url == 'http://10.22.104.44/':
            year = file_name[count][17:21]
        elif base_url == 'http://10.22.114.42/':
            year = file_name[count][-13:-9]
        else:
            year = '20' + file_name[count][9:11]
        if base_url == 'http://10.22.114.31/' or base_url == 'http://10.22.114.32/':
            month = file_name[count][11:13]
        elif base_url == 'http://10.22.114.34/' and i[-3:] in ('epd', 'sec'):
            # Minute (.epd) and second (.sec) files use the same position
            month = file_name[count][13:15]
        elif base_url == 'http://10.22.114.37/':
            month = file_name[count][4:6]
        elif base_url == 'http://10.22.104.44/':
            month = file_name[count][21:23]
        elif base_url == 'http://10.22.114.42/':
            month = file_name[count][-8:-6]

        # Archive root for each instrument
        if base_url == 'http://10.22.114.31/':
            save_dir = 'd:/观测数据下载/形变数据/水管仪/'  # water-tube tiltmeter
        elif base_url == 'http://10.22.114.32/':
            save_dir = 'd:/观测数据下载/形变数据/伸缩仪/'  # extensometer
        elif base_url == 'http://10.22.114.34/' and i[-3:] == 'epd':
            save_dir = 'd:/观测数据下载/形变数据/垂直摆/分数据/'  # vertical pendulum, minute data
        elif base_url == 'http://10.22.114.34/' and i[-3:] == 'sec':
            save_dir = 'd:/观测数据下载/形变数据/垂直摆/秒数据/'  # vertical pendulum, second data
        elif base_url == 'http://10.22.114.37/':
            save_dir = 'd:/观测数据下载/重力数据/'  # gravity
        elif base_url == 'http://10.22.104.44/':
            save_dir = 'd:/观测数据下载/气象三要素数据/'  # three meteorological elements
        elif base_url == 'http://10.22.114.42/':
            save_dir = 'd:/观测数据下载/深层水温数据/'  # deep-well water temperature

        if base_url == 'http://10.22.114.37/':
            # Gravity has two subdirectories: '数据' (data) for *.dat files and '日志' (logs) for *.log files
            newdir = save_dir + year + '/' + month + '/数据/'
            logdir = save_dir + year + '/' + month + '/日志/'
            os.makedirs(newdir, exist_ok=True)
            os.makedirs(logdir, exist_ok=True)
            # Gravity file names are special: the instrument serial number is prefixed to the matched name
            tempname = newdir + '22004X212MPET0046' + file_name[count]
            # Matching log file: same name in the '日志' directory with a .log extension
            logname = tempname.replace('/数据/', '/日志/')[:-4] + '.log'
        else:
            newdir = save_dir + year + '/' + month + '/'
            os.makedirs(newdir, exist_ok=True)
            tempname = newdir + file_name[count]

        # Gravity downloads both a data file and a log file, unlike the other instruments,
        # so the existence check is written separately for it
        if base_url == 'http://10.22.114.37/':
            if os.path.isfile(tempname) and os.path.isfile(logname):
                print('File %s and log %s already saved ..... skipped!' % (file_name[count], file_name[count][:-4] + '.log'))
                count += 1
                continue
        else:
            if os.path.isfile(tempname):
                print('File %s already saved ..... skipped!' % file_name[count])
                count += 1
                continue

        # The headers are essential: without them requests.get() only returns the text of the web page.
        # Water-tube and extensometer data need no headers; the vertical pendulum, gravity and weather
        # station require the corresponding headers.
        # TODO: the gravity server's cookie keeps changing; whenever it changes, the correct cookie must be
        # supplied again, which is why getGravityCookie() logs in at start-up.
        print(file_name[count], end='')
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36',
            'Connection': 'keep-alive',
            'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
        }
        if base_url == 'http://10.22.114.34/':
            headers['Cookie'] = 'htvpcookie=01234567'
            content = requests.get(i, headers=headers, stream=True)
        elif base_url == 'http://10.22.114.37/':
            headers['Cookie'] = gravity_cookie
            content = requests.get(i, headers=headers, stream=True)
        elif base_url == 'http://10.22.104.44/':
            headers['Cookie'] = 'UserName=administrator; Auth=111'
            content = requests.get(i, headers=headers, stream=True)
        else:
            content = requests.get(i, stream=True)

        # Write the downloaded content to its directory with a progress bar.
        # Reading content.content first downloads the whole file to measure its size,
        # so the bar tracks writing to disk rather than network progress.
        chunk_size = 1024
        size = 0
        content_size = len(content.content)
        with open(tempname, 'wb') as f:
            for data in content.iter_content(chunk_size=chunk_size):
                f.write(data)
                size += len(data)
                print('\r' + file_name[count] + ':%s%.2f%%' % ('>' * int(size * 50 / content_size),
                                                              size / content_size * 100), end='')
        num_saved += 1
        print('')

        if base_url == 'http://10.22.114.37/':
            # Download the matching gravity log with the same headers (current gravity cookie):
            # .../mnt/sd/dat/NAME.dat becomes .../mnt/sd/log/NAME.log
            i_log = i.replace('/mnt/sd/dat/', '/mnt/sd/log/')[:-4] + '.log'
            content_log = requests.get(i_log, headers=headers, stream=True)
            size = 0
            content_size = len(content_log.content)
            with open(logname, 'wb') as f:
                for data in content_log.iter_content(chunk_size=chunk_size):
                    f.write(data)
                    size += len(data)
                    print('\r' + file_name[count][:-4] + '.log' + ':%s%.2f%%' % ('>' * int(size * 50 / content_size),
                                                                              size / content_size * 100), end='')
            num_saved += 1
            print('')

        count += 1

    return num_saved

def getHTMLText(url, gravity_cookie):
    """Return the HTML of a listing page, or '' if the request fails."""
    try:
        if url in ('http://10.22.114.37/Download.php?page=1',
                   'http://10.22.114.37/Download.php?page=2',
                   'http://10.22.114.37/Download.php?page=3'):
            # The gravity listing pages require the login cookie
            headers = {
                'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36',
                'Connection': 'keep-alive',
                'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9',
                'Cookie': gravity_cookie
            }
            response = requests.get(url, headers=headers).content
            return response.decode('utf8', 'ignore')
        r = requests.get(url)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        return r.text
    except requests.RequestException:
        print("request failed: " + url)
        # An empty page yields no file names, so data_download() skips this source
        return ''

def data_download(url, web_data, gravity_cookie, num_saved):
    target_url = []
    gravity_pages = ('http://10.22.114.37/Download.php?page=1',
                     'http://10.22.114.37/Download.php?page=2',
                     'http://10.22.114.37/Download.php?page=3')
    # Regular expression matching the data file names on each listing page
    if url in ('http://10.22.114.31/download.htm', 'http://10.22.114.32/download.htm'):
        res = re.compile(r'([0-9]+X[0-9]+[A-Z]\.EPD)')
    elif url == 'http://10.22.114.34/cgi-bin/getdownload.cgi?id=0':
        res = re.compile(r'([0-9]+X[0-9]+\.epd)')
    elif url == 'http://10.22.114.34/cgi-bin/getdownload.cgi?id=2':
        res = re.compile(r'([0-9]+X[0-9]+\.sec)')
    elif url in gravity_pages:
        res = re.compile(r'(\d+\.dat)')
    elif url == 'http://10.22.104.44/Main/DownloadFiles.asp':
        res = re.compile(r'([0-9a-zA-Z]+\.EPMS)')
    elif url == 'http://10.22.114.42/download.asp':
        res = re.compile(r'(X431DQYQ1455_\d{4}-\d{2}-\d{2}\.sw)')
    else:
        # Unknown page: nothing to download
        return num_saved

    file_name = re.findall(res, web_data)
    # The code assumes each name appears twice in a row on the page, so every second match is kept
    file_name = file_name[0::2]
    file_name.sort()
    # Except for the pages listed here, drop the last (newest) file after sorting,
    # presumably because it is still being recorded
    if url not in ('http://10.22.114.42/download.asp',
                   'http://10.22.114.37/Download.php?page=2',
                   'http://10.22.114.37/Download.php?page=3',
                   'http://10.22.104.44/Main/DownloadFiles.asp'):
        file_name = file_name[0:-1]

    # Base URL of the page (scheme and host), e.g. 'http://10.22.114.31/'
    base_url = url[:20]
    # Example extensometer download URL: http://10.22.114.32/22004231X200131A.EPD?u=administrator&p=01234567&B1=%CC%E1+%BD%BB
    # Build each file's download URL according to the monitoring instrument the page belongs to
    if url in ('http://10.22.114.31/download.htm', 'http://10.22.114.32/download.htm'):
        for i in file_name:
            target_url.append(base_url + i + '?u=administrator&p=01234567&B1=%CC%E1+%BD%BB')
    elif url == 'http://10.22.114.34/cgi-bin/getdownload.cgi?id=0':
        for i in file_name:
            target_url.append(base_url + 'cgi-bin/download.cgi?id=0&filename=' + i)
    elif url == 'http://10.22.114.34/cgi-bin/getdownload.cgi?id=2':
        for i in file_name:
            target_url.append(base_url + 'cgi-bin/download.cgi?id=2&filename=' + i)
    elif url in gravity_pages:
        for i in file_name:
            target_url.append(base_url + 'infoDown.php?id=/mnt/sd/dat/' + i)
    elif url == 'http://10.22.104.44/Main/DownloadFiles.asp':
        for i in file_name:
            target_url.append(base_url + r'Main/download.asp?path=\SDMEM\DataFiles\&file=' + i)
    elif url == 'http://10.22.114.42/download.asp':
        for i in file_name:
            target_url.append(base_url + 'shiwu/' + i)

    num_saved = create_dir(base_url, target_url, file_name, gravity_cookie, num_saved)
    return num_saved

def main():
    num_saved = 0
    begin = time.time()
    gravity_cookie = getGravityCookie()
    url1 = 'http://10.22.114.31/download.htm'  # water-tube tiltmeter
    url2 = 'http://10.22.114.32/download.htm'  # extensometer
    url3 = 'http://10.22.114.34/cgi-bin/getdownload.cgi?id=0'  # vertical pendulum, minute data
    url4 = 'http://10.22.114.34/cgi-bin/getdownload.cgi?id=2'  # vertical pendulum, second data
    url5 = 'http://10.22.114.37/Download.php?page=1'  # gravity data, download page 1
    url6 = 'http://10.22.114.37/Download.php?page=2'  # gravity data, download page 2
    url7 = 'http://10.22.114.37/Download.php?page=3'  # gravity data, download page 3
    url8 = 'http://10.22.104.44/Main/DownloadFiles.asp'  # three meteorological elements
    url9 = 'http://10.22.114.42/download.asp'  # deep-well water temperature
    url = [url1, url2, url3, url4, url5, url6, url7, url8, url9]
    for i in url:
        welcome(i)
        web_data = getHTMLText(i, gravity_cookie)
        num_saved = data_download(i, web_data, gravity_cookie, num_saved)
    end = time.time()
    diff = (end - begin) / 60
    print('This run took %.2f minutes and downloaded %d data and log files' % (diff, num_saved))
    print("*************************\033[1;31mThe program will close in 1 minute\033[0m*************************")
    time.sleep(60)


if __name__ == '__main__':
    main()
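The listing authenticates in two ways: credentials in the query string (water-tube tiltmeter and extensometer) and a session cookie obtained through a Selenium login (gravity). The sketch below shows both under stated assumptions; `query_url()`, `cookie_header()` and `login_cookie()` are hypothetical names. Decoding `%CC%E1+%BD%BB` as GBK gives `提 交` ("Submit"), presumably the label of the form's `B1` submit button, so `urllib.parse.urlencode(..., encoding="gbk")` reproduces the hard-coded string. `login_cookie()` is an untested Selenium 4 rewrite of `getGravityCookie()`: Selenium 4 removed the `find_element_by_*` helpers and the `executable_path` argument, so it uses `By` locators and a `Service` object, with the element IDs and names taken from the listing.

```python
from urllib.parse import urlencode


def query_url(base_url, file_name, user, password):
    """Download URL with the credentials in the query string (tiltmeter/extensometer)."""
    # "提 交" encoded as GBK with quote_plus gives %CC%E1+%BD%BB
    fields = {"u": user, "p": password, "B1": "提 交"}
    return base_url + file_name + "?" + urlencode(fields, encoding="gbk")


def cookie_header(cookies):
    """Join Selenium get_cookies() dicts into a Cookie header value."""
    return "; ".join(c["name"] + "=" + c["value"] for c in cookies)


def login_cookie(driver_path, login_url, user, password, headless=True):
    """Untested Selenium 4 sketch of getGravityCookie()."""
    # Imported here so the two helpers above work without Selenium installed
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.chrome.service import Service
    from selenium.webdriver.common.by import By

    options = Options()
    if headless:
        options.add_argument("--headless")
    browser = webdriver.Chrome(service=Service(driver_path), options=options)
    try:
        browser.get(login_url)
        browser.find_element(By.ID, "userName").send_keys(user)
        browser.find_element(By.ID, "pwd").send_keys(password)
        browser.find_element(By.NAME, "submit").click()
        return cookie_header(browser.get_cookies())
    finally:
        # Always close Chrome, even if an element is not found
        browser.quit()
```

With the values from the listing, `login_cookie(r'D:\chrome_beta\ChromePortable\App\Chrome\chromedriver.exe', 'http://10.22.114.37', 'debug', '01234567')` would play the role of `getGravityCookie()`, and the returned string goes into the `Cookie` header exactly as before.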

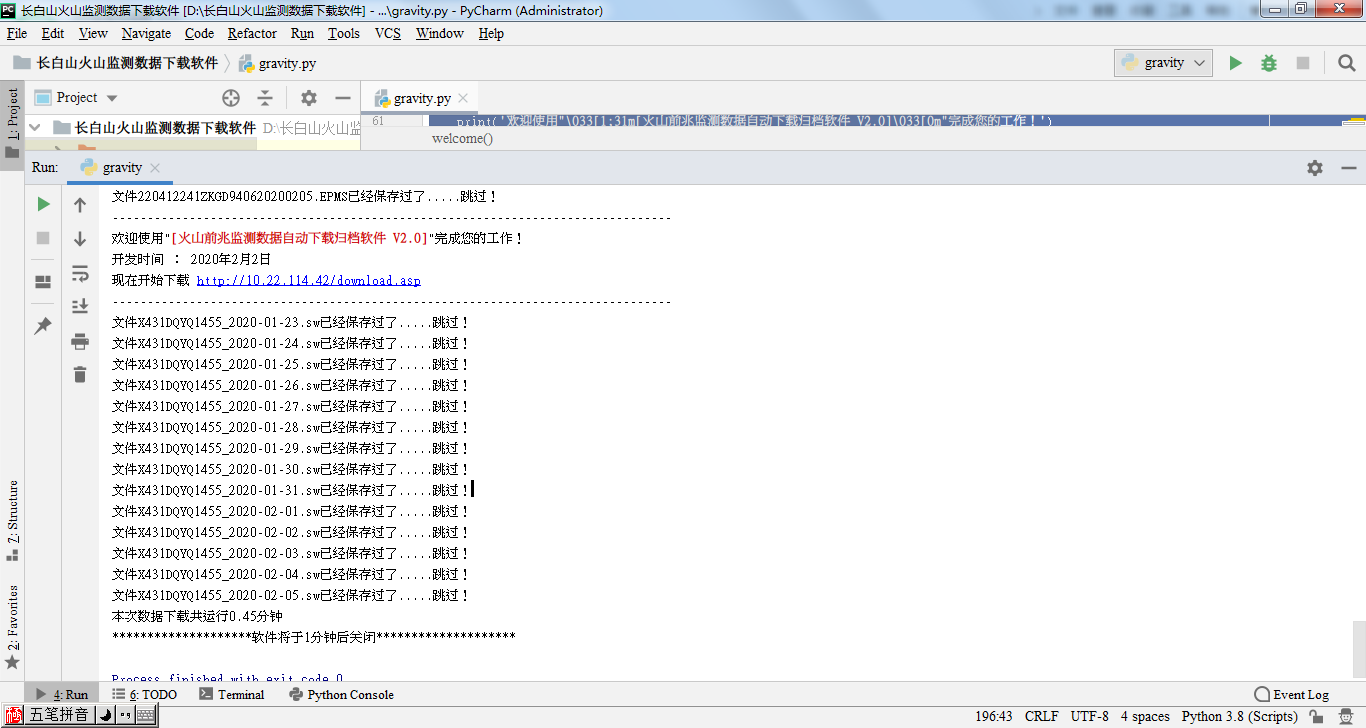

Screenshot of the program running
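The progress bar in `create_dir()` reads `content.content` before iterating, which pulls the whole file into memory first, so the bar only tracks writing to disk. A sketch of an alternative, assuming the server sends `Content-Length` (it falls back to a byte counter when it does not): write the chunks from `iter_content()` to a `.part` file and rename it only when the download finishes, so an interrupted download is never mistaken for a complete file by the `os.path.isfile()` skip check. `save_chunks()` and `download()` are hypothetical helpers, and the 60-second timeout is an assumed value.

```python
import os


def save_chunks(chunks, path, total=0, label=""):
    """Write byte chunks to path while printing a 50-character progress bar.

    total is the expected size in bytes (0 = unknown, show a byte count instead).
    Data goes to path + ".part" and is renamed only after the last chunk.
    """
    tmp = path + ".part"
    written = 0
    with open(tmp, "wb") as f:
        for chunk in chunks:
            f.write(chunk)
            written += len(chunk)
            if total:
                frac = min(written / total, 1.0)
                print("\r%s:%s%.2f%%" % (label, ">" * int(frac * 50), frac * 100), end="")
            else:
                print("\r%s: %d bytes" % (label, written), end="")
    print("")
    # os.replace overwrites an existing file on Windows as well as POSIX
    os.replace(tmp, path)
    return written


def download(url, path, headers=None, label=""):
    """Stream url into path via save_chunks(); needs the requests package."""
    import requests

    # Assumed timeout: without one, an unresponsive server can stall the whole run
    resp = requests.get(url, headers=headers, stream=True, timeout=60)
    resp.raise_for_status()
    # Content-Length counts bytes on the wire; if the body is gzip-encoded,
    # iter_content() yields decompressed bytes and the percentage is approximate
    total = int(resp.headers.get("Content-Length", 0))
    return save_chunks(resp.iter_content(chunk_size=1024), path, total, label)
```

In `create_dir()`, a call such as `download(i, tempname, headers, file_name[count])` could stand in for the `requests.get(...)` call and the write loop for each instrument.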