Installing a Hadoop pseudo-distributed cluster on CentOS 7

1. Get Hadoop

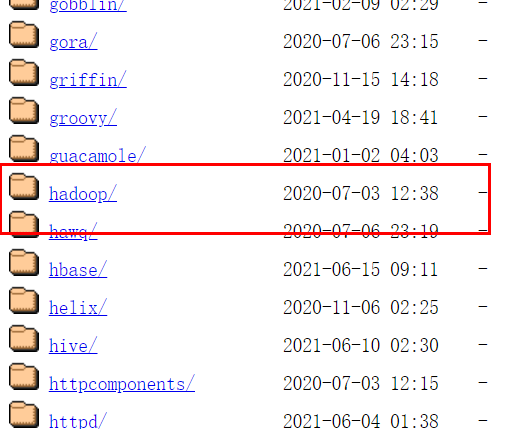

Downloading from a mirror inside China is fast. Tsinghua mirror: Index of /apache (tsinghua.edu.cn)

Find the hadoop directory.

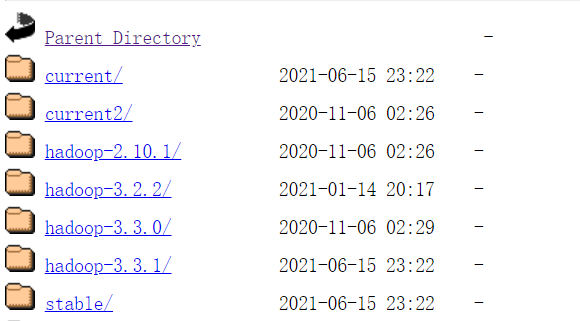

Click common.

Choose the version you need.

This example uses hadoop-3.2.2.
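For a scripted download, the directory path described above (hadoop, then common, then the version) can be assembled into a URL. This is only a minimal sketch: the mirror base URL is an assumption that the original does not spell out, hadoop_tarball_url is a hypothetical helper name, and a mirror may no longer carry an older release.

```shell
# Assumed mirror base URL (not given in the original article); replace it
# with the mirror you actually use.
MIRROR_BASE="${MIRROR_BASE:-https://mirrors.tuna.tsinghua.edu.cn/apache}"

# Build the tarball URL from the path hadoop/common/hadoop-<version>/.
hadoop_tarball_url() {
    local version="$1"
    printf '%s/hadoop/common/hadoop-%s/hadoop-%s.tar.gz\n' \
        "$MIRROR_BASE" "$version" "$version"
}

# Usage (downloads into the current directory):
#   wget "$(hadoop_tarball_url 3.2.2)"
```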

2. Because the environment is limited, use the pseudo-distributed installation mode

IP: set a static IP address

[root@bigdata01 ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33

Change the following parameter:

BOOTPROTO="static"

Add:

IPADDR=192.168.184.128

GATEWAY=192.168.184.2

DNS1=192.168.184.2

Save the file, then restart the network:

[root@192 ~]# service network restart

Restarting network (via systemctl): [ OK ]
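To double-check what was actually saved in the interface file, the values can be read back. A minimal sketch, assuming the file consists of plain KEY=value lines; ifcfg_get is a hypothetical helper, not a system tool.

```shell
# Print the value of KEY from an ifcfg-style file, with double quotes removed.
# If the key appears more than once, the last occurrence wins.
ifcfg_get() {
    local key="$1" file="$2"
    sed -n "s/^${key}=//p" "$file" | tail -n 1 | tr -d '"'
}

# Usage:
#   ifcfg_get BOOTPROTO /etc/sysconfig/network-scripts/ifcfg-ens33
#   ifcfg_get IPADDR    /etc/sysconfig/network-scripts/ifcfg-ens33
```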

3. Set a permanent hostname

[root@192 ~]# vi /etc/hostname

Replace the file's content with the hostname used in the rest of this guide:

bigdata01

4. Disable the firewall

Stop the firewall for the current session:

[root@192 ~]# systemctl stop firewalld

Check the firewall status:

[root@bigdata01 ~]# systemctl status firewalld

Disable the firewall permanently so that it stays off after a reboot:

[root@bigdata01 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

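5. Set up passwordless SSH login

First run the following command on the server. rsa is the key algorithm. Press Enter at each prompt to accept the defaults; you do not need to type anything.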

[root@bigdata01 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:J1ZsjlwvB9MZBUylhVPAjj6gQJgshpCs14zETqQmLhM root@bigdata01

The key's randomart image is:

+---[RSA 2048]----+

|==.o +=B= |

|++B . . .== |

|E* = Bo+. |

|=.+ + ..*.+. |

|oo . .So+ o |

|.. .. ooo |

| . |

| |

| |

+----[SHA256]-----+

This generates the private and public key files in the ~/.ssh directory:

[root@bigdata01 ~]# ll ~/.ssh

total 12

-rw-------. 1 root root 1679 Jul 3 17:42 id_rsa

-rw-r--r--. 1 root root 396 Jul 3 17:42 id_rsa.pub

-rw-r--r--. 1 root root 203 Jul 3 17:41 known_hosts

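The file with the .pub suffix is the public key.

The next step is to copy the public key to every machine you want to log in to without a password.

This example has only one server, so the key is appended to the authorized_keys file on the same server: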

[root@bigdata01 ~]# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

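>> appends the command's output to the end of the file instead of overwriting it.

You can now log in over SSH without a password: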

[root@bigdata01 ~]# ssh bigdata01

Last login: Sat Jul 3 17:52:45 2021 from fe80::3c67:9a76:5a25:c4d9%ens33
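If the login still asks for a password, overly open permissions on ~/.ssh or authorized_keys are a common cause, and re-running the cat command adds a duplicate line each time. The sketch below appends the key only once and tightens the permissions; authorize_key is a hypothetical helper, and the second argument exists only so it can be tried on a scratch directory.

```shell
# Append a public key to authorized_keys only if it is not already there,
# then restrict permissions on the directory and the file.
authorize_key() {
    local pubkey="$1" ssh_dir="${2:-$HOME/.ssh}"
    local auth="$ssh_dir/authorized_keys"
    mkdir -p "$ssh_dir"
    touch "$auth"
    # -x whole-line match, -F fixed string, -f read the pattern from the key file
    grep -qxFf "$pubkey" "$auth" || cat "$pubkey" >> "$auth"
    chmod 700 "$ssh_dir"
    chmod 600 "$auth"
}

# Usage:
#   authorize_key ~/.ssh/id_rsa.pub
```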

6. Install the JDK

Create the /data/soft directory to hold the installation files:

[root@bigdata01 /]# mkdir -p /data/soft

Upload the JDK archive to /data/soft:

[root@bigdata01 soft]# ll

total 190424

-rw-r--r--. 1 root root 194990602 Jul 3 18:04 jdk-8u211-linux-x64.tar.gz

Extract the JDK archive:

[root@bigdata01 soft]# tar -zxvf jdk-8u211-linux-x64.tar.gz

The extracted directory name is rather long, so rename it:

[root@bigdata01 soft]# mv jdk1.8.0_211/ jdk1.8

Set the JAVA_HOME environment variable:

[root@bigdata01 soft]# vi /etc/profile

.....

export JAVA_HOME=/data/soft/jdk1.8

export PATH=.:$JAVA_HOME/bin:$PATH

Apply the changes:

[root@bigdata01 soft]# source /etc/profile

Confirm that the installation succeeded:

[root@bigdata01 soft]# java -version

java version "1.8.0_211"

Java(TM) SE Runtime Environment (build 1.8.0_211-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.211-b12, mixed mode)

7. Install Hadoop

Upload the Hadoop archive to /data/soft.

List the files:

[root@bigdata01 soft]# ll

total 576608

-rw-r--r--. 1 root root 395448622 Jul 3 18:22 hadoop-3.2.2.tar.gz

drwxr-xr-x. 7 10 143 245 Apr 2 2019 jdk1.8

-rw-r--r--. 1 root root 194990602 Jul 3 18:04 jdk-8u211-linux-x64.tar.gz

Extract the archive:

[root@bigdata01 soft]# tar -zxvf hadoop-3.2.2.tar.gz

List the directory:

[root@bigdata01 soft]# ll

total 576608

drwxr-xr-x. 9 1000 1000 149 Jan 3 18:11 hadoop-3.2.2

-rw-r--r--. 1 root root 395448622 Jul 3 18:22 hadoop-3.2.2.tar.gz

drwxr-xr-x. 7 10 143 245 Apr 2 2019 jdk1.8

-rw-r--r--. 1 root root 194990602 Jul 3 18:04 jdk-8u211-linux-x64.tar.gz

[root@bigdata01 soft]# cd hadoop-3.2.2

[root@bigdata01 hadoop-3.2.2]# ll

total 184

drwxr-xr-x. 2 1000 1000 203 Jan 3 18:11 bin

drwxr-xr-x. 3 1000 1000 20 Jan 3 17:29 etc

drwxr-xr-x. 2 1000 1000 106 Jan 3 18:11 include

drwxr-xr-x. 3 1000 1000 20 Jan 3 18:11 lib

drwxr-xr-x. 4 1000 1000 4096 Jan 3 18:11 libexec

-rw-rw-r--. 1 1000 1000 150569 Dec 5 2020 LICENSE.txt

-rw-rw-r--. 1 1000 1000 21943 Dec 5 2020 NOTICE.txt

-rw-rw-r--. 1 1000 1000 1361 Dec 5 2020 README.txt

drwxr-xr-x. 3 1000 1000 4096 Jan 3 17:29 sbin

drwxr-xr-x. 4 1000 1000 31 Jan 3 18:46 share

The Hadoop directory contains two important subdirectories: bin and sbin.

Look at bin first:

[root@bigdata01 hadoop-3.2.2]# cd bin

[root@bigdata01 bin]# ll

total 1032

-rwxr-xr-x. 1 1000 1000 442728 Jan 3 17:54 container-executor

-rwxr-xr-x. 1 1000 1000 8707 Jan 3 17:28 hadoop

-rwxr-xr-x. 1 1000 1000 11265 Jan 3 17:28 hadoop.cmd

-rwxr-xr-x. 1 1000 1000 11274 Jan 3 17:32 hdfs

-rwxr-xr-x. 1 1000 1000 8081 Jan 3 17:32 hdfs.cmd

-rwxr-xr-x. 1 1000 1000 6237 Jan 3 17:57 mapred

-rwxr-xr-x. 1 1000 1000 6311 Jan 3 17:57 mapred.cmd

-rwxr-xr-x. 1 1000 1000 29184 Jan 3 17:54 oom-listener

-rwxr-xr-x. 1 1000 1000 485312 Jan 3 17:54 test-container-executor

-rwxr-xr-x. 1 1000 1000 12112 Jan 3 17:54 yarn

-rwxr-xr-x. 1 1000 1000 12840 Jan 3 17:54 yarn.cmd

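It contains scripts such as hdfs and yarn, which are used later to operate the HDFS and YARN components of the Hadoop cluster.

Now look at sbin. It contains many scripts whose names begin with start or stop; these start or stop the cluster's components.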

[root@bigdata01 hadoop-3.2.2]# cd sbin

[root@bigdata01 sbin]# ll

total 108

-rwxr-xr-x. 1 1000 1000 2756 Jan 3 17:32 distribute-exclude.sh

drwxr-xr-x. 4 1000 1000 36 Jan 3 17:54 FederationStateStore

-rwxr-xr-x. 1 1000 1000 1983 Jan 3 17:28 hadoop-daemon.sh

-rwxr-xr-x. 1 1000 1000 2522 Jan 3 17:28 hadoop-daemons.sh

-rwxr-xr-x. 1 1000 1000 1542 Jan 3 17:33 httpfs.sh

-rwxr-xr-x. 1 1000 1000 1500 Jan 3 17:29 kms.sh

-rwxr-xr-x. 1 1000 1000 1841 Jan 3 17:57 mr-jobhistory-daemon.sh

-rwxr-xr-x. 1 1000 1000 2086 Jan 3 17:32 refresh-namenodes.sh

-rwxr-xr-x. 1 1000 1000 1779 Jan 3 17:28 start-all.cmd

-rwxr-xr-x. 1 1000 1000 2221 Jan 3 17:28 start-all.sh

-rwxr-xr-x. 1 1000 1000 1880 Jan 3 17:32 start-balancer.sh

-rwxr-xr-x. 1 1000 1000 1401 Jan 3 17:32 start-dfs.cmd

-rwxr-xr-x. 1 1000 1000 5170 Jan 3 17:32 start-dfs.sh

-rwxr-xr-x. 1 1000 1000 1793 Jan 3 17:32 start-secure-dns.sh

-rwxr-xr-x. 1 1000 1000 1571 Jan 3 17:54 start-yarn.cmd

-rwxr-xr-x. 1 1000 1000 3342 Jan 3 17:54 start-yarn.sh

-rwxr-xr-x. 1 1000 1000 1770 Jan 3 17:28 stop-all.cmd

-rwxr-xr-x. 1 1000 1000 2166 Jan 3 17:28 stop-all.sh

-rwxr-xr-x. 1 1000 1000 1783 Jan 3 17:32 stop-balancer.sh

-rwxr-xr-x. 1 1000 1000 1455 Jan 3 17:32 stop-dfs.cmd

-rwxr-xr-x. 1 1000 1000 3898 Jan 3 17:32 stop-dfs.sh

-rwxr-xr-x. 1 1000 1000 1756 Jan 3 17:32 stop-secure-dns.sh

-rwxr-xr-x. 1 1000 1000 1642 Jan 3 17:54 stop-yarn.cmd

-rwxr-xr-x. 1 1000 1000 3083 Jan 3 17:54 stop-yarn.sh

-rwxr-xr-x. 1 1000 1000 1982 Jan 3 17:28 workers.sh

-rwxr-xr-x. 1 1000 1000 1814 Jan 3 17:54 yarn-daemon.sh

-rwxr-xr-x. 1 1000 1000 2328 Jan 3 17:54 yarn-daemons.sh

Because we will use scripts from both bin and sbin, add the two directories to the PATH environment variable for convenience:

[root@bigdata01 sbin]# vi /etc/profile

.....

export JAVA_HOME=/data/soft/jdk1.8

export HADOOP_HOME=/data/soft/hadoop-3.2.2

export PATH=.:$JAVA_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$PATH

[root@bigdata01 sbin]# source /etc/profile
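As an alternative to editing /etc/profile by hand, the same three lines can be written to a drop-in file. A minimal sketch, assuming the stock CentOS 7 /etc/profile, which sources every *.sh file under /etc/profile.d at login; the file name hadoop.sh and the helper write_hadoop_profile are hypothetical.

```shell
# Write the environment settings from this section to a profile drop-in file.
# The target path is a parameter so the function can be tried on a scratch file.
write_hadoop_profile() {
    local target="${1:-/etc/profile.d/hadoop.sh}"
    # The quoted 'EOF' keeps $JAVA_HOME etc. unexpanded in the written file.
    cat > "$target" <<'EOF'
export JAVA_HOME=/data/soft/jdk1.8
export HADOOP_HOME=/data/soft/hadoop-3.2.2
export PATH=.:$JAVA_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$PATH
EOF
}

# Usage (as root), then log in again or source the file:
#   write_hadoop_profile
#   source /etc/profile.d/hadoop.sh
```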

Edit the Hadoop configuration files

Go to the directory that holds the configuration files:

[root@bigdata01 hadoop-3.2.2]# cd etc/hadoop/

The files to edit are:

hadoop-env.sh

core-site.xml

hdfs-site.xml

mapred-site.xml

yarn-site.xml

workers

First edit hadoop-env.sh and append the following environment variables to the end of the file:

[root@bigdata01 hadoop]# vi hadoop-env.sh

.......

export JAVA_HOME=/data/soft/jdk1.8

export HADOOP_LOG_DIR=/data/hadoop_repo/logs/hadoop

Edit core-site.xml

Note that the hostname in the fs.defaultFS property must match the hostname you configured. Put the properties inside the <configuration> element at the end of the file.

[root@bigdata01 hadoop]# vi core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://bigdata01:9000</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/data/hadoop_repo</value>
    </property>
</configuration>

Edit hdfs-site.xml and set the HDFS replication factor to 1, because the pseudo-distributed cluster has only one node:

[root@bigdata01 hadoop]# vi hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
</configuration>

Edit mapred-site.xml to set the resource scheduling framework that MapReduce uses:

[root@bigdata01 hadoop]# vi mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>

Edit yarn-site.xml to set the auxiliary services that YARN runs and the environment-variable whitelist:

[root@bigdata01 hadoop]# vi yarn-site.xml

<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
    <property>
        <name>yarn.nodemanager.env-whitelist</name>
        <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
    </property>
</configuration>

Edit workers to list the hostnames of the cluster's worker nodes. There is only one machine here, so bigdata01 is the only entry:

[root@bigdata01 hadoop]# vi workers

bigdata01
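Because the hostname has to agree across core-site.xml, workers, and the machine itself, a quick consistency check can catch typos before the first start. This is a rough sketch that pulls the host out of fs.defaultFS with grep and sed rather than a real XML parser, so it assumes the <value> element sits on the same line as, or the line right after, the <name> element. Both function names are hypothetical.

```shell
# Print the host part of fs.defaultFS from a core-site.xml file.
defaultfs_host() {
    local core_site="$1"
    grep -A1 '<name>fs.defaultFS</name>' "$core_site" |
        sed -n 's|.*<value>hdfs://\([^:/<]*\).*|\1|p'
}

# Check that fs.defaultFS and the workers file both use the expected hostname.
check_hostname_consistency() {
    local conf_dir="$1" expected="$2" host
    host="$(defaultfs_host "$conf_dir/core-site.xml")"
    if [ "$host" != "$expected" ]; then
        echo "fs.defaultFS host is '$host', expected '$expected'" >&2
        return 1
    fi
    if ! grep -qxF "$expected" "$conf_dir/workers"; then
        echo "'$expected' is not listed in workers" >&2
        return 1
    fi
}

# Usage:
#   check_hostname_consistency /data/soft/hadoop-3.2.2/etc/hadoop "$(hostname)"
```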

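The configuration files are now complete, but the cluster cannot be started yet. HDFS is a distributed file system, and a file system must be formatted before first use, just as a new disk must be formatted before an operating system can be installed on it.

Format HDFS

[root@bigdata01 hadoop]# cd /data/soft/hadoop-3.2.2

[root@bigdata01 hadoop-3.2.2]# bin/hdfs namenode -format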

WARNING: /data/hadoop_repo/logs/hadoop does not exist. Creating.

2021-07-03 20:52:59,441 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = 192.168.184.128/192.168.184.128

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.2.2

STARTUP_MSG: classpath = /data/soft/hadoop-3.2.2/etc/hadoop:/data/soft/hadoop-3.2.2/share/hadoop/common/lib/jetty-security-9.4.20.v20190813.jar:

.......

2021-07-03 20:53:01,097 INFO common.Storage: Storage directory /data/hadoop_repo/dfs/name has been successfully formatted.

2021-07-03 20:53:01,149 INFO namenode.FSImageFormatProtobuf: Saving image file /data/hadoop_repo/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

2021-07-03 20:53:01,277 INFO namenode.FSImageFormatProtobuf: Image file /data/hadoop_repo/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 399 bytes saved in 0 seconds .

2021-07-03 20:53:01,291 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2021-07-03 20:53:01,301 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2021-07-03 20:53:01,302 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at 192.168.184.128/192.168.184.128

************************************************************/

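If the output contains successfully formatted, the format succeeded. If an error is reported, the cause is usually a mistake in the configuration files; analyze the specific error message to find it.

If a configuration error forces you to run the format again, first delete everything in the /data/hadoop_repo directory:

[root@bigdata01 data]# cd /data

[root@bigdata01 data]# ls

hadoop_repo soft

[root@bigdata01 data]# rm -r hadoop_repo/

Then repeat the format step above.

Once the format has succeeded, do not format again: a new format gives the NameNode a new cluster ID that no longer matches the data already written by the DataNode, and the cluster will run into problems.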
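One way to avoid an accidental second format is to check for existing NameNode metadata first. A minimal sketch, assuming the default layout in which the NameNode stores its metadata under dfs/name inside hadoop.tmp.dir (the path that appears in the format log above); format_once is a hypothetical wrapper, not part of Hadoop.

```shell
# Succeed if the NameNode storage directory already holds metadata.
namenode_is_formatted() {
    local repo="${1:-/data/hadoop_repo}"
    [ -f "$repo/dfs/name/current/VERSION" ]
}

# Run the format only when no existing metadata is found.
format_once() {
    local repo="${1:-/data/hadoop_repo}"
    if namenode_is_formatted "$repo"; then
        echo "NameNode under $repo is already formatted; skipping." >&2
        return 1
    fi
    hdfs namenode -format
}

# Usage:
#   format_once /data/hadoop_repo
```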

Start the pseudo-distributed cluster

Run the start-all.sh script in the sbin directory:

[root@bigdata01 hadoop-3.2.2]# sbin/start-all.sh

Starting namenodes on [bigdata01]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [bigdata01]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

Starting resourcemanager

ERROR: Attempting to operate on yarn resourcemanager as root

ERROR: but there is no YARN_RESOURCEMANAGER_USER defined. Aborting operation.

Starting nodemanagers

ERROR: Attempting to operate on yarn nodemanager as root

ERROR: but there is no YARN_NODEMANAGER_USER defined. Aborting operation.

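The output contains many ERROR messages saying that user settings for HDFS and YARN are missing.

To fix this, edit the start-dfs.sh and stop-dfs.sh scripts in the sbin directory and add the following lines near the top of each file.

In start-dfs.sh, add the following before the line # Start hadoop dfs daemons: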

[root@bigdata01 sbin]# vi start-dfs.sh

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

In stop-dfs.sh, add the following before the line # Stop hadoop dfs daemons:

[root@bigdata01 sbin]# vi stop-dfs.sh

HDFS_DATANODE_USER=root

HDFS_DATANODE_SECURE_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

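Likewise, edit the start-yarn.sh and stop-yarn.sh scripts in the sbin directory and add the following lines near the top of each file.

In start-yarn.sh, add the following before the line ## @description usage info: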

[root@bigdata01 sbin]# vi start-yarn.sh

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

In stop-yarn.sh, add the following before the line ## @description usage info:

[root@bigdata01 sbin]# vi stop-yarn.sh

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root
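A possible alternative to patching four scripts is to export the five variables named in the ERROR messages once, in etc/hadoop/hadoop-env.sh. This is a sketch under the assumption that the Hadoop 3.x start and stop scripts read that file before checking the variables, which the original article does not state; add_daemon_users is a hypothetical helper.

```shell
# Append the daemon-user variables to a hadoop-env.sh-style file, skipping
# any variable that the file already exports.
add_daemon_users() {
    local env_file="$1" user="${2:-root}" var
    for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER \
               HDFS_SECONDARYNAMENODE_USER \
               YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
        grep -q "^export ${var}=" "$env_file" 2>/dev/null ||
            echo "export ${var}=${user}" >> "$env_file"
    done
}

# Usage:
#   add_daemon_users /data/soft/hadoop-3.2.2/etc/hadoop/hadoop-env.sh root
```

Running a test cluster as root keeps this walkthrough simple; the second argument lets you substitute a dedicated user instead.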

Start the pseudo-distributed cluster again

[root@bigdata01 sbin]# cd /data/soft/hadoop-3.2.2

[root@bigdata01 hadoop-3.2.2]# sbin/start-all.sh

Starting namenodes on [bigdata01]

Last login: Sat Jul 3 17:53:46 CST 2021 from fe80::3c67:9a76:5a25:c4d9%ens33 on pts/3

Starting datanodes

Last login: Sat Jul 3 21:25:38 CST 2021 on pts/2

Starting secondary namenodes [bigdata01]

Last login: Sat Jul 3 21:25:40 CST 2021 on pts/2

Starting resourcemanager

Last login: Sat Jul 3 21:25:48 CST 2021 on pts/2

Starting nodemanagers

Last login: Sat Jul 3 21:25:58 CST 2021 on pts/2

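Verify the cluster processes

Run jps to list the cluster's processes. Apart from Jps itself, five processes must be present for the cluster to count as started correctly:

[root@bigdata01 hadoop-3.2.2]# jps

2561 DataNode

2403 NameNode

3448 Jps

3115 NodeManager

2751 SecondaryNameNode

2991 ResourceManager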
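The five-process check can also be scripted. A small sketch that reads jps output on standard input and reports which of the expected daemons are missing; check_daemons is a hypothetical helper name.

```shell
# Read jps output on stdin; fail and list the names if any of the five
# expected daemons is missing.
check_daemons() {
    local out missing="" d
    out="$(cat)"
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # Compare against the whole second field so that "NameNode"
        # is not satisfied by "SecondaryNameNode".
        printf '%s\n' "$out" |
            awk -v n="$d" '$2 == n { found = 1 } END { exit !found }' ||
            missing="$missing $d"
    done
    if [ -n "$missing" ]; then
        echo "missing:$missing" >&2
        return 1
    fi
}

# Usage:
#   jps | check_daemons && echo "all five daemons are running"
```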

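You can also check that the cluster services are healthy through the web UIs:

HDFS web UI: http://192.168.184.128:9870

YARN web UI: http://192.168.184.128:8088

To access them by hostname, edit the hosts file on the Windows machine.

The file is located at C:\Windows\System32\drivers\etc\HOSTS. Add the line below, which is simply the IP address and hostname of the Linux virtual machine. With this mapping in place, the Windows machine can reach the Linux virtual machine by hostname.

192.168.184.128 bigdata01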

Stop the cluster

If you have changed the cluster's configuration files, or need to stop the cluster for any other reason, use the following command:

[root@bigdata01 hadoop-3.2.2]# sbin/stop-all.sh

Stopping namenodes on [bigdata01]

Last login: Tue Apr 7 17:59:40 CST 2020 on pts/0

Stopping datanodes

Last login: Tue Apr 7 18:06:09 CST 2020 on pts/0

Stopping secondary namenodes [bigdata01]

Last login: Tue Apr 7 18:06:10 CST 2020 on pts/0

Stopping nodemanagers

Last login: Tue Apr 7 18:06:13 CST 2020 on pts/0

Stopping resourcemanager

Last login: Tue Apr 7 18:06:16 CST 2020 on pts/0
