[Artificial Intelligence in Practice 2019 - 何峥] Assignment 5

Training a Logic AND Gate and a Logic OR Gate

Assignment Requirements

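| Item | Content |
|---|---|
| Course | Artificial Intelligence in Practice 2019 |
| Assignment requirements | Train a logic AND gate and a logic OR gate, and write up the results and code as a blog post |
| My course goals | Master the relevant knowledge and skills, and gain project experience |
| How this assignment helps me | Understand the basic principles of neural networks and learn the basic methods of implementing them in code |
| Assignment body | [Artificial Intelligence in Practice 2019 - 何峥] Assignment 5 |
| Other references | B6: 神经网络基本原理简明教程 (A Concise Tutorial on the Basic Principles of Neural Networks) |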

Assignment Body

1. Train a logic AND gate and a logic OR gate, and write up the results and code as a blog post

Logic AND gate samples

| Sample | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| X1 | 0 | 0 | 1 | 1 |
| X2 | 0 | 1 | 0 | 1 |
| Y | 0 | 0 | 0 | 1 |

Logic OR gate samples

| Sample | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| X1 | 0 | 0 | 1 | 1 |
| X2 | 0 | 1 | 0 | 1 |
| Y | 0 | 1 | 1 | 1 |
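
The two sample tables are the truth tables of AND and OR. As a quick sanity check, they can be regenerated with NumPy's element-wise logical operators. This is only an illustrative sketch; the names `Y_and` and `Y_or` are introduced here and do not appear in the assignment code.

```python
import numpy as np

# The four input combinations, one per sample (column), as in the tables above.
X1 = np.array([0, 0, 1, 1])
X2 = np.array([0, 1, 0, 1])

# Labels derived from NumPy's element-wise logical operators.
Y_and = np.logical_and(X1, X2).astype(int)
Y_or = np.logical_or(X1, X2).astype(int)

print("AND:", Y_and)  # AND: [0 0 0 1]
print("OR: ", Y_or)   # OR:  [0 1 1 1]
```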

Code

    import numpy as np
    import matplotlib.pyplot as plt


    def And_Or(key):
        """Return inputs X (2 x 4, one sample per column) and labels Y for the chosen gate."""
        X1 = np.array([0, 0, 1, 1])
        X2 = np.array([0, 1, 0, 1])
        X = np.vstack((X1, X2))
        if key == 'And':
            Y = np.array([0, 0, 0, 1])
        elif key == 'Or':
            Y = np.array([0, 1, 1, 1])
        else:
            raise ValueError("key must be 'And' or 'Or'")
        return X, Y


    def Initialize(X, m, n):
        """Zero-initialize the weights and bias; set the learning rate and epoch limit."""
        W = np.zeros((1, n))
        B = np.zeros((1, 1))
        eta = 0.8
        max_epoch = 10000
        return W, B, eta, max_epoch


    def Sigmoid(x):
        return 1 / (1 + np.exp(-x))


    def ForwardCal(W, X, B):
        """Forward pass: linear combination followed by the sigmoid activation."""
        Z = np.dot(W, X) + B
        A = Sigmoid(Z)
        return Z, A


    def CheckLoss(Y, A, m):
        """Mean binary cross-entropy loss over the m samples."""
        p1 = 1 - Y
        p2 = np.log(A)
        p3 = np.log(1 - A)
        p4 = np.multiply(Y, p2)
        p5 = np.multiply(p1, p3)
        Loss = np.sum(-(p4 + p5))
        loss = Loss / m
        return loss


    def BackwardCal(X, Y, A, m):
        """Gradients of the loss with respect to W and B."""
        dZ = A - Y
        dB = dZ.sum(axis=1, keepdims=True) / m
        dW = np.dot(dZ, X.T) / m
        return dW, dB


    def UpdateWeights(eta, dW, dB, W, B):
        """One gradient descent step."""
        W = W - eta * dW
        B = B - eta * dB
        return W, B


    def ShowFigure(X, Y, W, B, m):
        """Plot the samples and the learned decision boundary W*X + B = 0."""
        for i in range(m):
            if Y[i] == 0:
                plt.plot(X[0, i], X[1, i], '.', c='r')
            elif Y[i] == 1:
                plt.plot(X[0, i], X[1, i], '^', c='g')
        # Solve w1*x + w2*y + b = 0 for y to get the slope and intercept.
        a = -(W[0, 0] / W[0, 1])
        b = -(B[0, 0] / W[0, 1])
        x = np.linspace(-0.1, 1.1, 100)
        y = a * x + b
        plt.plot(x, y)
        plt.axis([-0.1, 1.1, -0.1, 1.1])
        plt.show()


    def train(key):
        X, Y = And_Or(key)
        n = X.shape[0]  # number of features
        m = X.shape[1]  # number of samples
        W, B, eta, max_epoch = Initialize(X, m, n)
        for epoch in range(max_epoch):
            Z, A = ForwardCal(W, X, B)
            dW, dB = BackwardCal(X, Y, A, m)
            W, B = UpdateWeights(eta, dW, dB, W, B)
            # The loss is computed from the activations before this update.
            loss = CheckLoss(Y, A, m)
            if loss <= 1e-2:
                break
        print(W, B)
        print(loss)
        print(epoch)
        ShowFigure(X, Y, W, B, m)


    if __name__ == '__main__':
        key = 'Or'  # for the logic AND gate, change 'Or' to 'And'
        train(key)
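
The gradient used in `BackwardCal` (`dZ = A - Y`) follows from combining the sigmoid activation with the cross-entropy loss. The derivation below is a sketch in standard notation. The symbols $J$, $z_i$, $a_i$ and $x_i$ (the $i$-th column of $X$) are introduced here for illustration and are not taken from the original post.

```latex
\begin{aligned}
z_i &= W x_i + B, \qquad a_i = \sigma(z_i) = \frac{1}{1 + e^{-z_i}} \\
J &= -\frac{1}{m} \sum_{i=1}^{m} \left[ y_i \ln a_i + (1 - y_i) \ln (1 - a_i) \right] \\
\frac{\partial J}{\partial a_i} &= \frac{1}{m} \cdot \frac{a_i - y_i}{a_i (1 - a_i)}, \qquad \frac{\partial a_i}{\partial z_i} = a_i (1 - a_i) \\
\frac{\partial J}{\partial z_i} &= \frac{1}{m} (a_i - y_i) \\
\frac{\partial J}{\partial W} &= \frac{1}{m} (A - Y) X^{\top}, \qquad \frac{\partial J}{\partial B} = \frac{1}{m} \sum_{i=1}^{m} (a_i - y_i)
\end{aligned}
```

The code keeps `dZ = A - Y` without the $1/m$ factor and applies the division by `m` when forming `dW` and `dB`, which gives the same result.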

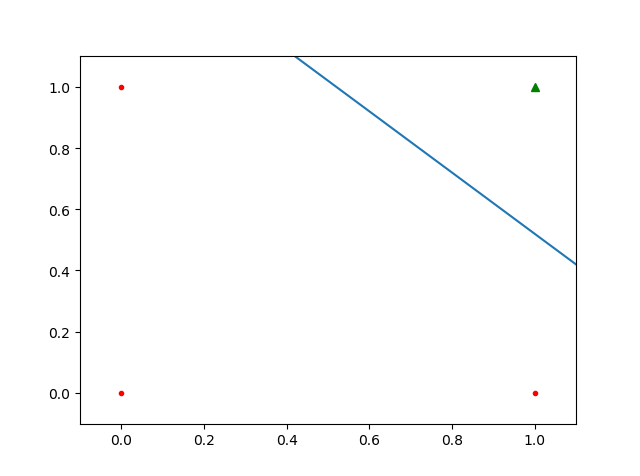

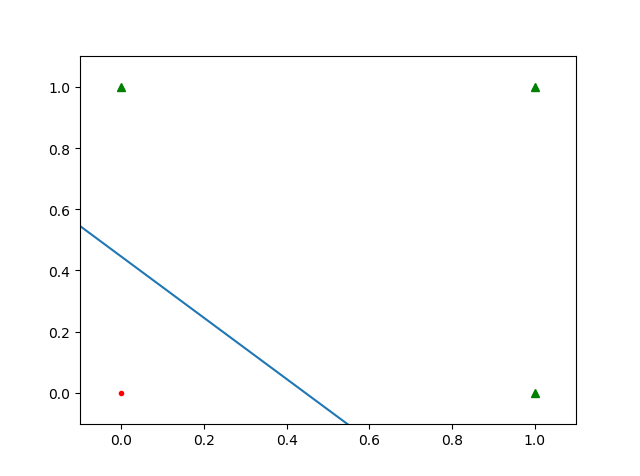
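
One way to gain confidence in the backward pass is a numerical gradient check: compare the analytic gradients with central finite differences of the loss. The sketch below re-implements the forward pass, loss and gradients in a self-contained form. The helper names (`loss_fn`, `analytic_grads`) and the test point `W`, `B` are hypothetical choices made for this check, not part of the assignment code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss_fn(W, B, X, Y):
    # Mean binary cross-entropy, same formula as CheckLoss.
    A = sigmoid(np.dot(W, X) + B)
    return float(np.sum(-(Y * np.log(A) + (1 - Y) * np.log(1 - A))) / X.shape[1])

def analytic_grads(W, B, X, Y):
    # Same formulas as BackwardCal.
    m = X.shape[1]
    A = sigmoid(np.dot(W, X) + B)
    dZ = A - Y
    dW = np.dot(dZ, X.T) / m
    dB = dZ.sum(axis=1, keepdims=True) / m
    return dW, dB

# OR-gate samples, one per column.
X = np.array([[0, 0, 1, 1], [0, 1, 0, 1]], dtype=float)
Y = np.array([0, 1, 1, 1], dtype=float)

# Arbitrary test point (hypothetical values chosen for the check).
W = np.array([[0.3, -0.2]])
B = np.array([[0.1]])

dW, dB = analytic_grads(W, B, X, Y)

# Central finite differences, one parameter at a time.
eps = 1e-6
num_dW = np.zeros_like(W)
for j in range(W.shape[1]):
    step = np.zeros_like(W)
    step[0, j] = eps
    num_dW[0, j] = (loss_fn(W + step, B, X, Y) - loss_fn(W - step, B, X, Y)) / (2 * eps)
num_dB = (loss_fn(W, B + eps, X, Y) - loss_fn(W, B - eps, X, Y)) / (2 * eps)

max_diff = max(np.max(np.abs(dW - num_dW)), abs(dB[0, 0] - num_dB))
print("max difference:", max_diff)
```

If the analytic gradients are correct, the reported difference should be tiny compared with the gradient values themselves.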

Training Results

- Logic OR gate

w = [8.51697047, 8.51697047]

b = -3.79279452

loss = 0.009999219577877546

epoch = 1163

- Logic AND gate

w = [8.53544939, 8.53544939]

b = -12.97388027

loss = 0.009999210510001869

epoch = 2171
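
The reported parameters can be checked independently of the training loop by plugging them back into the model. The sketch below copies `w` and `b` from the results above, thresholds the sigmoid output at 0.5 (an assumption, since the original code does not define a prediction rule), and recomputes the loss and the point where the decision boundary crosses the X2 axis. The dictionary names `reported` and `checks` are introduced here for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# The four samples, one per column.
X = np.array([[0, 0, 1, 1], [0, 1, 0, 1]], dtype=float)

# (w, b, labels) copied from the training results listed above.
reported = {
    "Or": (np.array([[8.51697047, 8.51697047]]), -3.79279452, np.array([0, 1, 1, 1])),
    "And": (np.array([[8.53544939, 8.53544939]]), -12.97388027, np.array([0, 0, 0, 1])),
}

checks = {}
for name, (W, b, Y) in reported.items():
    A = sigmoid(np.dot(W, X) + b)              # activations, shape (1, 4)
    pred = (A >= 0.5).astype(int).ravel()      # assumed 0.5 decision threshold
    loss = float(np.sum(-(Y * np.log(A) + (1 - Y) * np.log(1 - A))) / X.shape[1])
    intercept = -b / W[0, 1]                   # boundary value of X2 at X1 = 0
    checks[name] = (pred, loss, intercept)
    print(name, pred, round(loss, 4), round(intercept, 3))
```

Because both weights are equal in each result, the boundary has slope -1 and can be read as X1 + X2 = c. For the OR gate c is about 0.445, which separates (0,0) from the other three samples. For the AND gate c is about 1.52, which separates (1,1) from the rest.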