Springboot 主从库切换配置

应用场景:

在主从库读写分离时,让程序自动根据业务来区分对主库还是从库进行读写操作,在所有的写操作时,自动对主库进行操作,所有的读操作时,则访问从库。

应用前提:

在两台机器上配置好两个数据库,建立主从关系,接下来在springboot的框架中配置

首先在.yml或者.porperties文件中配置主从数据库

#自定义druid主从连接

druid:

datasource:

type: com.alibaba.druid.pool.DruidDataSource

#主节点

master:

url: jdbc:mysql://192.168.1.1:3306/datebase1?characterEncoding=UTF-8&autoReconnect=true&zeroDateTimeBehavior=convertToNull&useUnicode=true&serverTimezone=GMT

username: root

password: root

driverClassName: com.mysql.cj.jdbc.Driver

#下面为连接池的补充设置,应用到上面所有数据源中

filters: stat,wall,log4j

initialSize: 5

maxActive: 20

maxPoolPreparedStatementPerConnectionSize: 20

maxWait: 60000

minEvictableIdleTimeMillis: 300000

minIdle: 5

poolPreparedStatements: true

testOnBorrow: false

testOnReturn: false

testWhileIdle: true

timeBetweenEvictionRunsMillis: 60000

useGlobalDataSourceStat: true

validationQuery: SELECT 1 FROM DUAL

#从节点

slave:

url: jdbc:mysql://192.168.1.2:3306/datebase2?characterEncoding=UTF-8&autoReconnect=true&zeroDateTimeBehavior=convertToNull&useUnicode=true&serverTimezone=GMT

username: root

password: root

driverClassName: com.mysql.cj.jdbc.Driver

#下面为连接池的补充设置,应用到上面所有数据源中

initialSize: 5

minIdle: 5

maxActive: 20

# 配置获取连接等待超时的时间

maxWait: 60000

# 配置间隔多久才进行一次检测,检测需要关闭的空闲连接,单位是毫秒

timeBetweenEvictionRunsMillis: 60000

# 配置一个连接在池中最小生存的时间,单位是毫秒

minEvictableIdleTimeMillis: 300000

validationQuery: SELECT 1

testWhileIdle: true

testOnBorrow: false

testOnReturn: false

# 打开PSCache,并且指定每个连接上PSCache的大小

poolPreparedStatements: true

# 配置监控统计拦截的filters,去掉后监控界面sql无法统计,'wall'用于防火墙

filters: stat,wall,log4j

logSlowSql: true

maxPoolPreparedStatementPerConnectionSize: 20

# 通过connectProperties属性来打开mergeSql功能;慢SQL记录

connectionProperties:

druid:

stat:

mergeSql: true

slowSqlMillis: 5000

# 合并多个DruidDataSource的监控数据

useGlobalDataSourceStat: true

紧接着配置mybatis相关

#mybatis

mybatis:

#扫描xml

mapper-locations: classpath:com/email/support/mapping/*.xml

#配置

# configuration:

#控制台sql日志输出

# log-impl: org.apache.ibatis.logging.log4j2.Log4j2Impl

#mybatis返回类型省略全路径包名

type-aliases-package: com.email.support.mapper

然后添加datesource配置类

/**

* 数据库连接池配置

*/

@Configuration

@EnableTransactionManagement

public class DatasourceConfig {

private static Logger LOG = LoggerFactory.getLogger(DatasourceConfig.class);

/**

* 引入druid数据库连接池类型

*/

@Value("${druid.datasource.type}")

private Class<? extends DataSource> datasourceType;

/**

* 主数据源

* @return DataSource

*/

@Primary

@Bean("masterDatasource")

@ConfigurationProperties("druid.datasource.master")

public DataSource masterDatasource() {

LOG.info("=========== master run =========");

return DataSourceBuilder.create().type(datasourceType).build();

}

/**

* 从数据源

* @return DataSource

*/

@Bean("slaveDatasource")

@ConfigurationProperties("druid.datasource.slave")

public DataSource slaveDatasource() {

LOG.info("============ slave run ==========");

return DataSourceBuilder.create().type(datasourceType).build();

}

/**

* druid的servlet

* @return ServletRegistrationBean

*/

@Bean

public ServletRegistrationBean druidServlet() {

ServletRegistrationBean<StatViewServlet> servletRegistrationBean = new ServletRegistrationBean<>();

servletRegistrationBean.setServlet(new StatViewServlet());

servletRegistrationBean.addUrlMappings("/druid/*");

servletRegistrationBean.addInitParameter("allow", "127.0.0.1");

servletRegistrationBean.addInitParameter("deny", "/deny");

return servletRegistrationBean;

}

/**

* druid的filter

* @return FilterRegistrationBean

*/

@Bean

public FilterRegistrationBean druidFilter() {

FilterRegistrationBean<WebStatFilter> webStatFilterRegistrationBean = new FilterRegistrationBean<>();

webStatFilterRegistrationBean.setFilter(new WebStatFilter());

webStatFilterRegistrationBean.addUrlPatterns("/*");

webStatFilterRegistrationBean.addInitParameter("exclusions", "*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*");

return webStatFilterRegistrationBean;

}

}

然后配置数据库的读写操作

新建contextholder类

**

* 数据源本地线程操作

*/

public class DynamicDataSourceContextHolder {

private static final ThreadLocal<DatasourseType> CONTEXT_HOLDER = new ThreadLocal<>();

public enum DatasourseType{

/**

* 数据源类型

*/

MASTER,

SLAVE;

}

/**

* 设置本地线程变量

* @param datasourseType 数据类型

*/

public static void setContextHolder(DatasourseType datasourseType){

CONTEXT_HOLDER.set(datasourseType);

}

/**

* 获取本地线程变量的值 默认返回主数据源

* @return DatasourseType

*/

public static DatasourseType getContextHolder(){

DatasourseType datasourseType = CONTEXT_HOLDER.get();

return Objects.isNull(datasourseType) ? DatasourseType.MASTER :datasourseType;

}

/**

* 清空本地线程变量

*/

public static void removeDataSourceType(){

CONTEXT_HOLDER.remove();

}

}

添加mybatis配置类继承MybatisAutoConfiguration接口 注意:mybatis和datesource有强依赖关系 所以必须先添加datesource的相关配置再进行mybatis的配置

然后将datesource通过@Resource注解 进行注入 放入mybatis的sqlsessionfactory进行管理

/**

* mybatis配置

*/

@Configuration

public class MybatisConfig extends MybatisAutoConfiguration {

/**

* 主数据源 根据名称注入

*/

@Resource(name = "slaveDatasource")

private DataSource slaveDatasource;

@Resource(name = "masterDatasource")

private DataSource masterDatasource;

@Bean

public SqlSessionFactory sqlSessionFactory() throws Exception {

return super.sqlSessionFactory(datasourceStrategy());

}

/**

* 数据源策略 ----此方法是在添加DynamicDataSource后 将数据源注入进sqlsessionfactroy进行数据源管理

* @return 数据源

*/

@SuppressWarnings("unchecked")

private AbstractRoutingDataSource datasourceStrategy(){

DynamicDataSource dynamicDataSource = new DynamicDataSource();

//软引用map

ClassLoaderRepository.SoftHashMap softHashMap = new ClassLoaderRepository.SoftHashMap();

//设置主从

softHashMap.put(DynamicDataSourceContextHolder.DatasourseType.MASTER, masterDatasource);

softHashMap.put(DynamicDataSourceContextHolder.DatasourseType.SLAVE, slaveDatasource);

//默认使用主数据源

dynamicDataSource.setTargetDataSources(softHashMap);

//将两个数据源放入map

dynamicDataSource.setDefaultTargetDataSource(masterDatasource);

return dynamicDataSource;

}

}

接下来就是要把主从库动态切换的配置

新建RoutingDataSource 继承 AbstractRoutingDataSource

/**

* 配置数据源容器对象

*/

public class DynamicDataSource extends AbstractRoutingDataSource {

@Override

protected Object determineCurrentLookupKey() {

return DynamicDataSourceContextHolder.getContextHolder();

}

}

接下来使用自定义注解告诉方法访问哪个数据库

建立SlaveDatasource注解

/**

* slave数据源标识

*/

@Target({ElementType.METHOD, ElementType.TYPE})

@Retention(RetentionPolicy.RUNTIME)

public @interface SlaveDatasource {

}

新建自定义注解的aop切面

/**

* slave数据源aop

*/

@Aspect

@Component

public class SlaveDatasourceAop implements Ordered {

private static final Logger LOGGER = LoggerFactory.getLogger(SlaveDatasourceAop.class);

@Pointcut("@annotation(com.email.support.annotation.SlaveDatasource)")

public void slaveDatasource(){}

@Around("slaveDatasource()")

public Object doAround(ProceedingJoinPoint joinPoint) throws Throwable {

try {

DynamicDataSourceContextHolder.setContextHolder(DynamicDataSourceContextHolder.DatasourseType.SLAVE);

LOGGER.info("========== change to slave ==========");

return joinPoint.proceed();

}finally {

DynamicDataSourceContextHolder.removeDataSourceType();

}

}

@Override

public int getOrder() {

return 0;

}

}

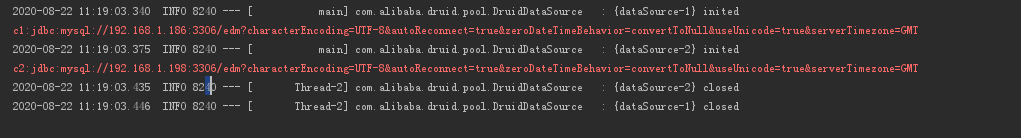

至此配置完成 下面测试查看效果

添加测试方法

//测试主从库切换

@Resource(name = "slaveDatasource")

private DataSource slaveDatasource;

@Resource(name = "masterDatasource")

private DataSource masterDatasource;

@Test

public void contextLoads() throws Exception{

Connection c1=masterDatasource.getConnection("主数据库账号","主数据库密码");

System.err.println("c1:" + c1.getMetaData().getURL());

Connection c2=slaveDatasource.getConnection("从数据库账号","从数据库密码");

System.err.println("c2:" + c2.getMetaData().getURL());

}

输出结果

c1:为我的主库地址

c2:为我的从库地址

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 从 HTTP 原因短语缺失研究 HTTP/2 和 HTTP/3 的设计差异

· AI与.NET技术实操系列:向量存储与相似性搜索在 .NET 中的实现

· 基于Microsoft.Extensions.AI核心库实现RAG应用

· Linux系列:如何用heaptrack跟踪.NET程序的非托管内存泄露

· 开发者必知的日志记录最佳实践

· TypeScript + Deepseek 打造卜卦网站:技术与玄学的结合

· Manus的开源复刻OpenManus初探

· AI 智能体引爆开源社区「GitHub 热点速览」

· 从HTTP原因短语缺失研究HTTP/2和HTTP/3的设计差异

· 三行代码完成国际化适配,妙~啊~