Ubuntu搭建全分布式Hadoop

@

采用三台节点搭建全分布式

| 主机名 | IP地址 |

|---|---|

| master | 192.168.200.100 |

| slave1 | 192.168.200.101 |

| slave2 | 192.168.200.102 |

软件准备:Ubuntu22.04服务器版,VMware15版,Hadoop3.3.0,Java-jdk1.8,Xshell工具

环境准备:可以ping外网,已经安装ssh工具、vim工具,已经做好主机名映射

配置ssh免密登录

使用ssh-keygen命令,连续回车四下,三个节点同样操作

root@master:~# ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa

Your public key has been saved in /root/.ssh/id_rsa.pub #生成ssh目录公钥位置

The key fingerprint is:

SHA256:8lXRWJH4Zoo83WxR3bc4VZq/gEDiUIaTbe50ixb1SOk root@master

The key's randomart image is:

+---[RSA 3072]----+

| .=+ .. .=oo=|

| ++oo+ o.o+=|

| +.+.o ..=.o|

| + E.o.o=o |

| + S.o+ B...|

| * o+ o = .|

| . . . . . |

| |

| |

+----[SHA256]-----+

root@master:~#

# 其他节点同样操作

使用ssh-copy-id -i 命令把密钥传输到所有节点

root@master:~# ssh-copy-id -i /root/.ssh/id_rsa.pub root@master

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@master's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@master'"

and check to make sure that only the key(s) you wanted were added.

root@master:~#

root@master:~# ssh-copy-id -i /root/.ssh/id_rsa.pub root@slave1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@slave2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@slave1'"

and check to make sure that only the key(s) you wanted were added.

root@master:~#

root@master:~# ssh-copy-id -i /root/.ssh/id_rsa.pub root@slave2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@slave2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@slave2'"

and check to make sure that only the key(s) you wanted were added.

root@master:~#

ssh测试是否可以免密登录三台节点

root@master:~# ssh master

Welcome to Ubuntu 22.04 LTS (GNU/Linux 5.15.0-25-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Sat Sep 3 06:16:05 AM UTC 2022

System load: 0.15380859375 Processes: 226

Usage of /: 35.5% of 19.51GB Users logged in: 1

Memory usage: 13% IPv4 address for ens33: 192.168.200.100

Swap usage: 0%

* Super-optimized for small spaces - read how we shrank the memory

footprint of MicroK8s to make it the smallest full K8s around.

https://ubuntu.com/blog/microk8s-memory-optimisation

110 updates can be applied immediately.

67 of these updates are standard security updates.

To see these additional updates run: apt list --upgradable

Last login: Sat Sep 3 06:11:27 2022 from 192.168.200.100

root@master:~#

root@master:~# ssh slave1

Welcome to Ubuntu 22.04 LTS (GNU/Linux 5.15.0-25-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Sat Sep 3 06:16:42 AM UTC 2022

System load: 0.0166015625 Processes: 217

Usage of /: 35.4% of 19.51GB Users logged in: 1

Memory usage: 10% IPv4 address for ens33: 192.168.200.101

Swap usage: 0%

110 updates can be applied immediately.

67 of these updates are standard security updates.

To see these additional updates run: apt list --upgradable

Last login: Fri Sep 2 02:13:48 2022 from 192.168.200.100

root@slave1:~#

root@master:~# ssh slave2

Welcome to Ubuntu 22.04 LTS (GNU/Linux 5.15.0-25-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Sat Sep 3 06:17:13 AM UTC 2022

System load: 0.01171875 Processes: 224

Usage of /: 35.3% of 19.51GB Users logged in: 1

Memory usage: 12% IPv4 address for ens33: 192.168.200.102

Swap usage: 0%

* Super-optimized for small spaces - read how we shrank the memory

footprint of MicroK8s to make it the smallest full K8s around.

https://ubuntu.com/blog/microk8s-memory-optimisation

111 updates can be applied immediately.

67 of these updates are standard security updates.

To see these additional updates run: apt list --upgradable

Last login: Fri Sep 2 02:13:48 2022 from 192.168.200.100

root@slave2:~#

# 测试完毕,master节点可以实现对master、slave1,slave2的ssh免密登录,其余二个节点同样操作

配置Java、hadoop环境

只需上传到master节点即可,配置完毕后远程传输其它节点

root@master:/home/huhy# ls

hadoop-3.3.0.tar jdk-8u241-linux-x64.tar.gz

root@master:/home/huhy#

#上传包会默认放在用户目录下

解压jdk到/usr/local/目录下

root@master:/home/huhy# tar -zxvf jdk-8u241-linux-x64.tar.gz -C /usr/local/

root@master:/home/huhy# cd /usr/local/

root@master:/usr/local# ls

bin etc games include jdk1.8.0_241 lib man sbin share src

root@master:/usr/local# mv jdk1.8.0_241/ jdk1.8.0

# 使用mv改下名,待会儿方便写环境变量

root@master:/usr/local#

root@master:/usr/local# ll

total 44

drwxr-xr-x 11 root root 4096 Sep 3 06:47 ./

drwxr-xr-x 14 root root 4096 Apr 21 00:57 ../

drwxr-xr-x 2 root root 4096 Apr 21 00:57 bin/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 etc/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 games/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 include/

drwxr-xr-x 7 10143 10143 4096 Dec 11 2019 jdk1.8.0/

drwxr-xr-x 3 root root 4096 Apr 21 01:00 lib/

lrwxrwxrwx 1 root root 9 Apr 21 00:57 man -> share/man/

drwxr-xr-x 2 root root 4096 Apr 21 01:00 sbin/

drwxr-xr-x 4 root root 4096 Apr 21 01:01 share/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 src/

root@master:/usr/local# ll

total 44

drwxr-xr-x 11 root root 4096 Sep 3 06:47 ./

drwxr-xr-x 14 root root 4096 Apr 21 00:57 ../

drwxr-xr-x 2 root root 4096 Apr 21 00:57 bin/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 etc/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 games/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 include/

drwxr-xr-x 7 huhy huhy 4096 Dec 11 2019 jdk1.8.0/

drwxr-xr-x 3 root root 4096 Apr 21 01:00 lib/

lrwxrwxrwx 1 root root 9 Apr 21 00:57 man -> share/man/

drwxr-xr-x 2 root root 4096 Apr 21 01:00 sbin/

drwxr-xr-x 4 root root 4096 Apr 21 01:01 share/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 src/

root@master:/usr/local#

root@master:/home/huhy# ls

hadoop-3.3.0.tar jdk-8u241-linux-x64.tar.gz

root@master:/home/huhy# tar -xvf hadoop-3.3.0.tar -C /usr/local/

#注意解压的参数少一个z

root@master:/home/huhy# cd /usr/local/

root@master:/usr/local# ll

total 48

drwxr-xr-x 12 root root 4096 Sep 3 07:09 ./

drwxr-xr-x 14 root root 4096 Apr 21 00:57 ../

drwxr-xr-x 2 root root 4096 Apr 21 00:57 bin/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 etc/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 games/

drwxr-xr-x 10 root root 4096 Jul 15 2021 hadoop-3.3.0/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 include/

drwxr-xr-x 7 huhy huhy 4096 Dec 11 2019 jdk1.8.0/

drwxr-xr-x 3 root root 4096 Apr 21 01:00 lib/

lrwxrwxrwx 1 root root 9 Apr 21 00:57 man -> share/man/

drwxr-xr-x 2 root root 4096 Apr 21 01:00 sbin/

drwxr-xr-x 4 root root 4096 Apr 21 01:01 share/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 src/

root@master:/usr/local# ll

total 48

drwxr-xr-x 12 root root 4096 Sep 3 07:09 ./

drwxr-xr-x 14 root root 4096 Apr 21 00:57 ../

drwxr-xr-x 2 root root 4096 Apr 21 00:57 bin/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 etc/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 games/

drwxr-xr-x 10 huhy huhy 4096 Jul 15 2021 hadoop-3.3.0/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 include/

drwxr-xr-x 7 huhy huhy 4096 Dec 11 2019 jdk1.8.0/

drwxr-xr-x 3 root root 4096 Apr 21 01:00 lib/

lrwxrwxrwx 1 root root 9 Apr 21 00:57 man -> share/man/

drwxr-xr-x 2 root root 4096 Apr 21 01:00 sbin/

drwxr-xr-x 4 root root 4096 Apr 21 01:01 share/

drwxr-xr-x 2 root root 4096 Apr 21 00:57 src/

root@master:/usr/local#

设置/etc/profile用户环境变量

在/etc/profile.d/这个下面的创建一个my_env.sh来放我们的环境变量,每次sources时都会加载这里面的环境变量,就建议不要往/etc/profile下面直接添加

root@master:~# vim /etc/profile.d/my_env.sh

#添加如下内容

#JAVA_HOME

export JAVA_HOME=/usr/local/jdk1.8.0

export PATH=$PATH:$JAVA_HOME/bin

#HADOOP_HOME

export HADOOP_HOME=/usr/local/hadoop-3.3.0

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

#解决后面启动集群的权限问题

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

root@master:~# source /etc/profile

root@master:~# java -version

java version "1.8.0_241"

Java(TM) SE Runtime Environment (build 1.8.0_241-b07)

Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)

root@master:~# hadoop version

Hadoop 3.3.0

Source code repository Unknown -r Unknown

Compiled by root on 2021-07-15T07:35Z

Compiled with protoc 3.7.1

From source with checksum 5dc29b802d6ccd77b262ef9d04d19c4

This command was run using /usr/local/hadoop-3.3.0/share/hadoop/common/hadoop-common-3.3.0.jar

root@master:~#

#环境安装成功

配置hadoop文件

本次搭建完成集群的定制的四个配置文件,包括core-site.xml、hdfs.site.xml、yarn-site.xml、mapred-site.xml。以及hadoop-env.sh、yarm-env.sh两个环境变量指定文件

集群配置规划如下

1,NameNode和DataNode连个进程消耗内存较大,分别放于两个节点上

2,SecoundNameNode放于slave2节点上

3,每个节点上都有三个进程

| 参数名称 | master | slave1 | slave2 |

|---|---|---|---|

| hdfs | NameNode / DataNode | DataNode | SecondaryNameNode / DataNode |

| yarn | NodeManager | ResourceManager / NodeManager | NodeManager |

默认的配置文件,里面有关于文件的默认配置,需要jar -xvf解压查看,在share目录中

| 配置文件 | 配置文件位置 |

|---|---|

| core-default.xml | hadoop-common-3.1.3.jar/core-default.xml |

| hdf-default.xml | hadoop-hdfs-3.1.3.jar/hdfs-default.xml |

| yarn-default.xml | hadoop-yarn-common-3.1.3.jar/yarn-default.xml |

| mapred-default.xml | hadoop-mapreduce-client-core-3.1.3.jar/mapred-default.xml |

配置hadoop-env.sh

进入Hadoop配置文件位置,进行文件配置

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# pwd

/usr/local/hadoop-3.3.0/etc/hadoop

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# ls

capacity-scheduler.xml httpfs-env.sh mapred-site.xml

configuration.xsl httpfs-log4j.properties shellprofile.d

container-executor.cfg httpfs-site.xml ssl-client.xml.example

core-site.xml kms-acls.xml ssl-server.xml.example

hadoop-env.cmd kms-env.sh user_ec_policies.xml.template

hadoop-env.sh kms-log4j.properties workers

hadoop-metrics2.properties kms-site.xml yarn-env.cmd

hadoop-policy.xml log4j.properties yarn-env.sh

hadoop-user-functions.sh.example mapred-env.cmd yarnservice-log4j.properties

hdfs-rbf-site.xml mapred-env.sh yarn-site.xml

hdfs-site.xml mapred-queues.xml.template

root@master:/usr/local/hadoop-3.3.0/etc/hadoop#

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# vim hadoop-env.sh

#直接在首行写入即可

export JAVA_HOME=/usr/local/jdk1.8.0

#然后保存退出即可

配置yarm-env.sh

和hadoop-env.sh一样编辑java环境变量的位置

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# vim yarn-env.sh

#如果没有发现有改变量值,就在第一行添加即可

export JAVA_HOME=/usr/local/jdk1.8.0

配置core-site.xml

该文件定义NameNode进程的URL、临时文件的存储目录以及顺序文件I/O缓存的大小

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# vim core-site.xml

<configuration>

<!-- 设置默认使用的文件系统 Hadoop支持file、HDFS、GFS、ali|Amazon云等文件系统 -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:8020</value>

</property>

<!-- 设置Hadoop本地保存数据路径 -->

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop-3.3.0/data</value>

</property>

</configuration>

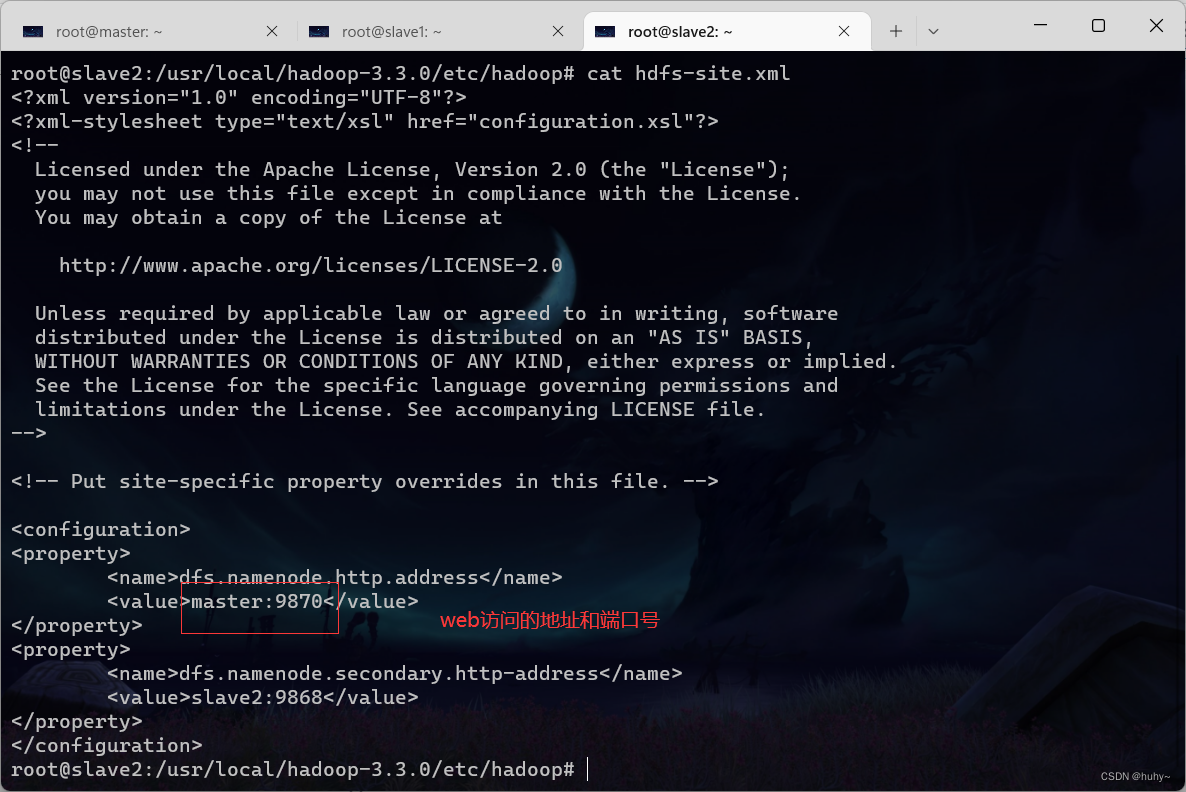

配置hdfs-site.xml

该文件用来设置HDFS的NameNode和DataNode两大进程的重要参数

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# vim hdfs-site.xml

<configuration>

<!-- 设置hdfs的访问地址 -->

<property>

<name>dfs.namenode.http.address</name>

<value>master:9870</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>slave2:9868</value>

</property>

</configuration>

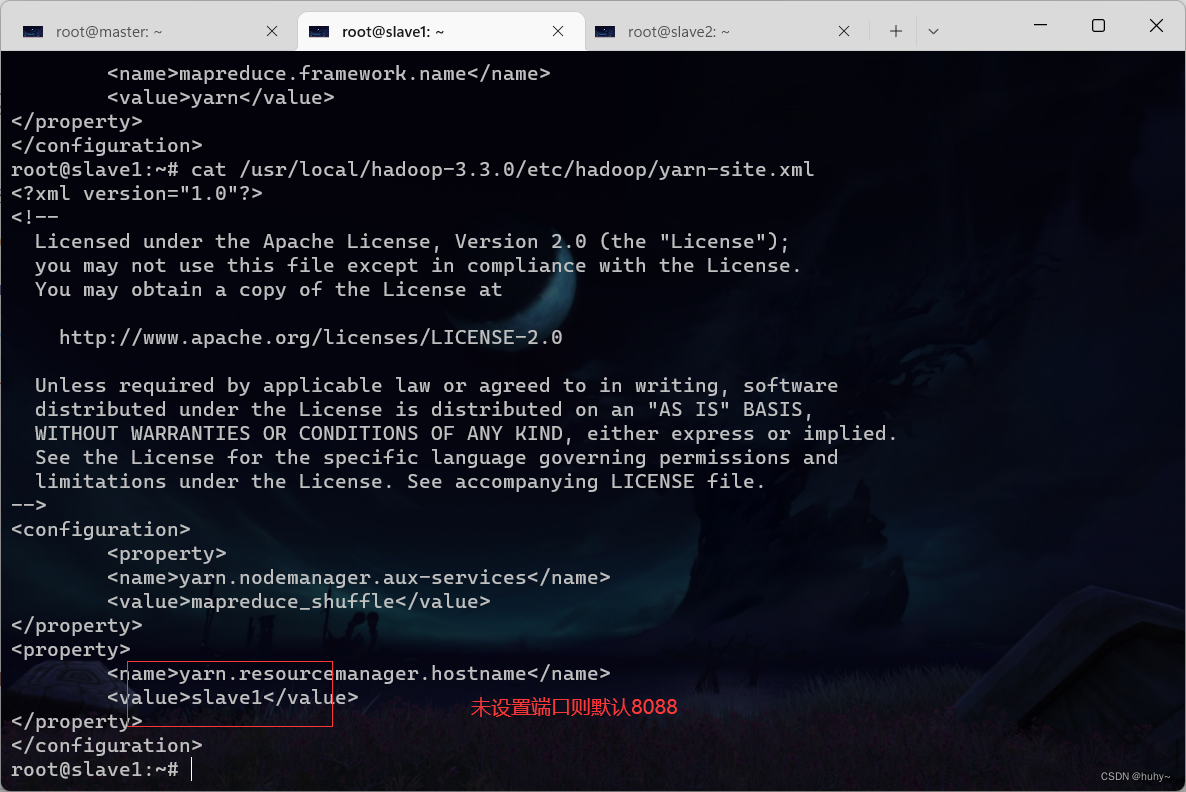

配置yarn-site.xml

该文件用来设置YARN的ResourceManager和NodeManager两大进程的重要配置参数

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# vim yarn-site.xml

<configuration>

<!-- 设置YARN集群主角色运行机器位置 -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>slave1</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- 是否将对容器实施物理内存限制 -->

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<!-- 是否将对容器实施虚拟内存限制。 -->

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<!-- 开启日志聚集 -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/usr/local/hadoop-3.3.0/history-logs</value>

<!--日志聚合到一个文件夹中 -->

</property>

<!-- 设置yarn历史服务器地址 -->

<property>

<name>yarn.log.server.url</name>

<value>http://master:19888/jobhistory/logs</value>

</property>

<!-- 历史日志保存的时间 7天 -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

</configuration>

配置mapred-site.xml

该文件用来配置MapReduce应用程序以及JobHistory服务器的重要配置参数

#如果是旧版本就要使用cp命令复制mapred-site.xml.template文件为mapred-site.xml,3版本不需要

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# vim mapred-site.xml

<configuration>

<!-- 设置MR程序默认运行模式: yarn集群模式 local本地模式 -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- MR程序历史服务地址 -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<!-- MR程序历史服务器web端地址 -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

配置workers文件

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# vim workers

master

slave1

slave2

#三台节点各占一行,不要有空格

最后!!!在hadoop-3.3.0下创建logs和history-logs日志聚合文件~

root@master:/usr/local/hadoop-3.3.0# mkdir history-logs

root@master:/usr/local/hadoop-3.3.0# mkdir logs

将jdk、Hadoop传输到其他节点

使用scp命令传输两个文件后记得环境变量也要修改

root@master:~# scp -r /usr/local/hadoop-3.3.0/ slave1:/usr/local/

root@master:~# scp -r /usr/local/hadoop-3.3.0/ slave2:/usr/local/

root@master:~# scp -r /usr/local/jdk1.8.0/ slave1:/usr/local/

root@master:~# scp -r /usr/local/jdk1.8.0/ slave2:/usr/local/

#其他两个节点.配置如下环境变量

#JAVA_HOME

export JAVA_HOME=/usr/local/jdk1.8.0

export PATH=$PATH:$JAVA_HOME/bin

#HADOOP_HOME

export HADOOP_HOME=/usr/local/hadoop-3.3.0

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

测试从节点的环境变量

root@slave1:~# source /etc/profile

root@slave1:~# java -version

java version "1.8.0_241"

Java(TM) SE Runtime Environment (build 1.8.0_241-b07)

Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)

root@slave1:~# hadoop version

Hadoop 3.3.0

Source code repository Unknown -r Unknown

Compiled by root on 2021-07-15T07:35Z

Compiled with protoc 3.7.1

From source with checksum 5dc29b802d6ccd77b262ef9d04d19c4

This command was run using /usr/local/hadoop-3.3.0/share/hadoop/common/hadoop-common-3.3.0.jar

root@slave1:~#

root@slave2:~# source /etc/profile

root@slave2:~# java -version

java version "1.8.0_241"

Java(TM) SE Runtime Environment (build 1.8.0_241-b07)

Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)

root@slave2:~# hadoop version

Hadoop 3.3.0

Source code repository Unknown -r Unknown

Compiled by root on 2021-07-15T07:35Z

Compiled with protoc 3.7.1

From source with checksum 5dc29b802d6ccd77b262ef9d04d19c4

This command was run using /usr/local/hadoop-3.3.0/share/hadoop/common/hadoop-common-3.3.0.jar

root@slave2:~#

#节点配置完毕,可以开启集群了

启动集群!!!

第一次使用HDFS时,必须首先在master节点执行格式化命令创建一个新分布式文件系统,然后才能正常启动NameNode服务,过程中应该没有任何的报错!!

root@master:/usr/local/hadoop-3.3.0/etc/hadoop# hdfs namenode -format

2022-09-09 12:23:07,392 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master/192.168.200.100

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.3.0

......................

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.200.100

************************************************************/

root@master:~#

#最后输出SHUTDOWN_MSG: Shutting down NameNode at master/192.168.200.100则表明成功

#如果报waning没有创建data文件的话,就把hadoop-3.3.0目录的权限修改为777(三个节点都要修改),重新格式化一下!!!

如果集群启动失败了!!!一定要停止namenode和datanode进程,然后删除所有节点的data和logs目录,然后再进行格式化!!!

可直接启动集群所有服务,2版本的Hadoop是需要在sbin目录下启动

start-dfs.sh启动dfs

root@master:~# start-dfs.sh

Starting namenodes on [master]

Starting datanodes

Starting secondary namenodes [slave2]

root@master:~# jps

6386 NameNode

6775 Jps

6535 DataNode

root@master:~#

root@slave2:~# jps

4627 DataNode

4806 Jps

4766 SecondaryNameNode

root@slave2:~#

#启动后namenode运行在master,secoundnamenode运行在slave2

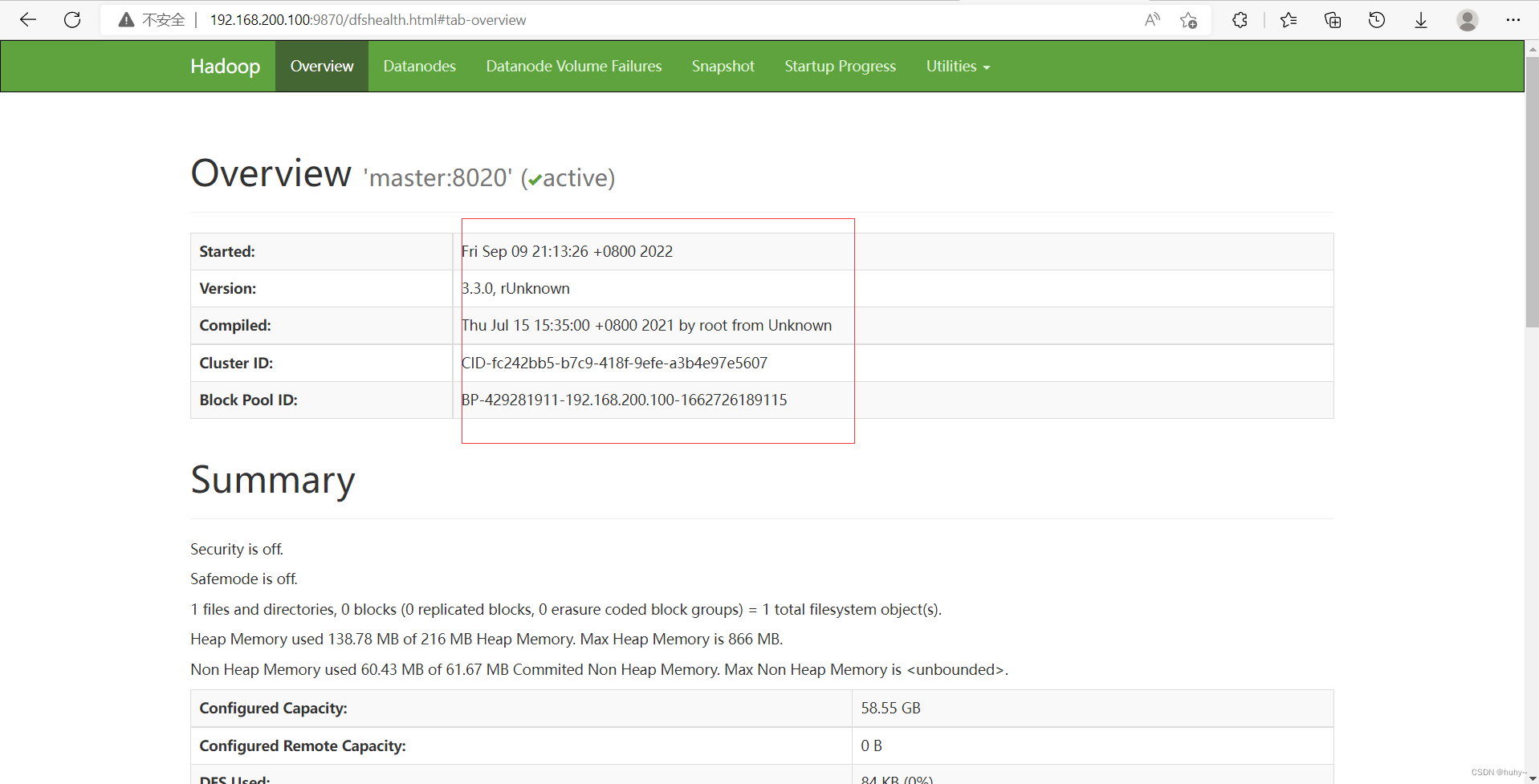

web访问hdfs;192.168.200.100:9870

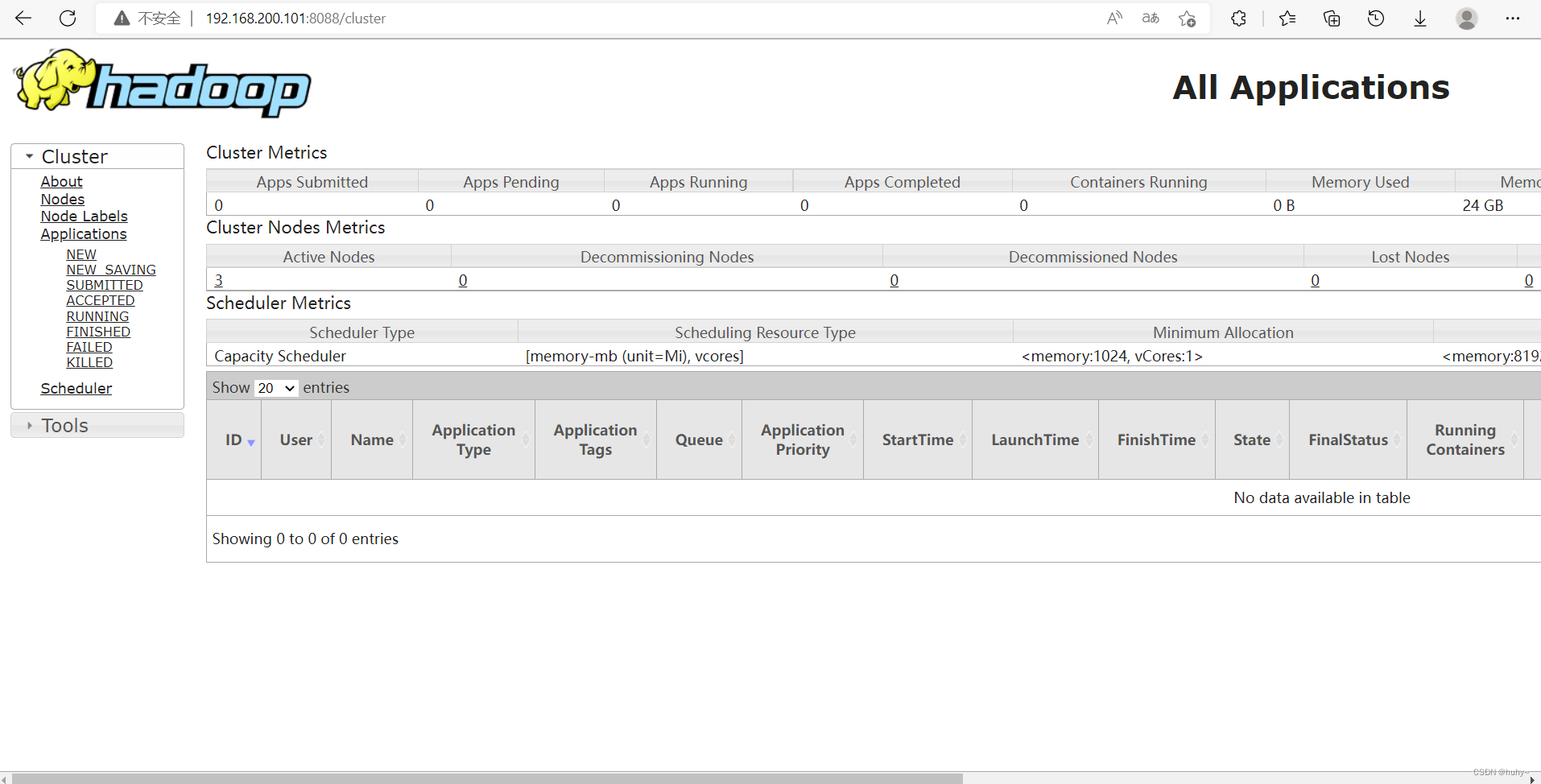

start-yarn.sh启动yarn

在slave1上启动

root@slave1:~# start-yarn.sh

Starting resourcemanager

Starting nodemanagers

root@slave1:~# jps

6567 DataNode

7259 Jps

6893 NodeManager

6734 ResourceManager

root@slave1:~#

web访问yarn;192.168.200.101:8088

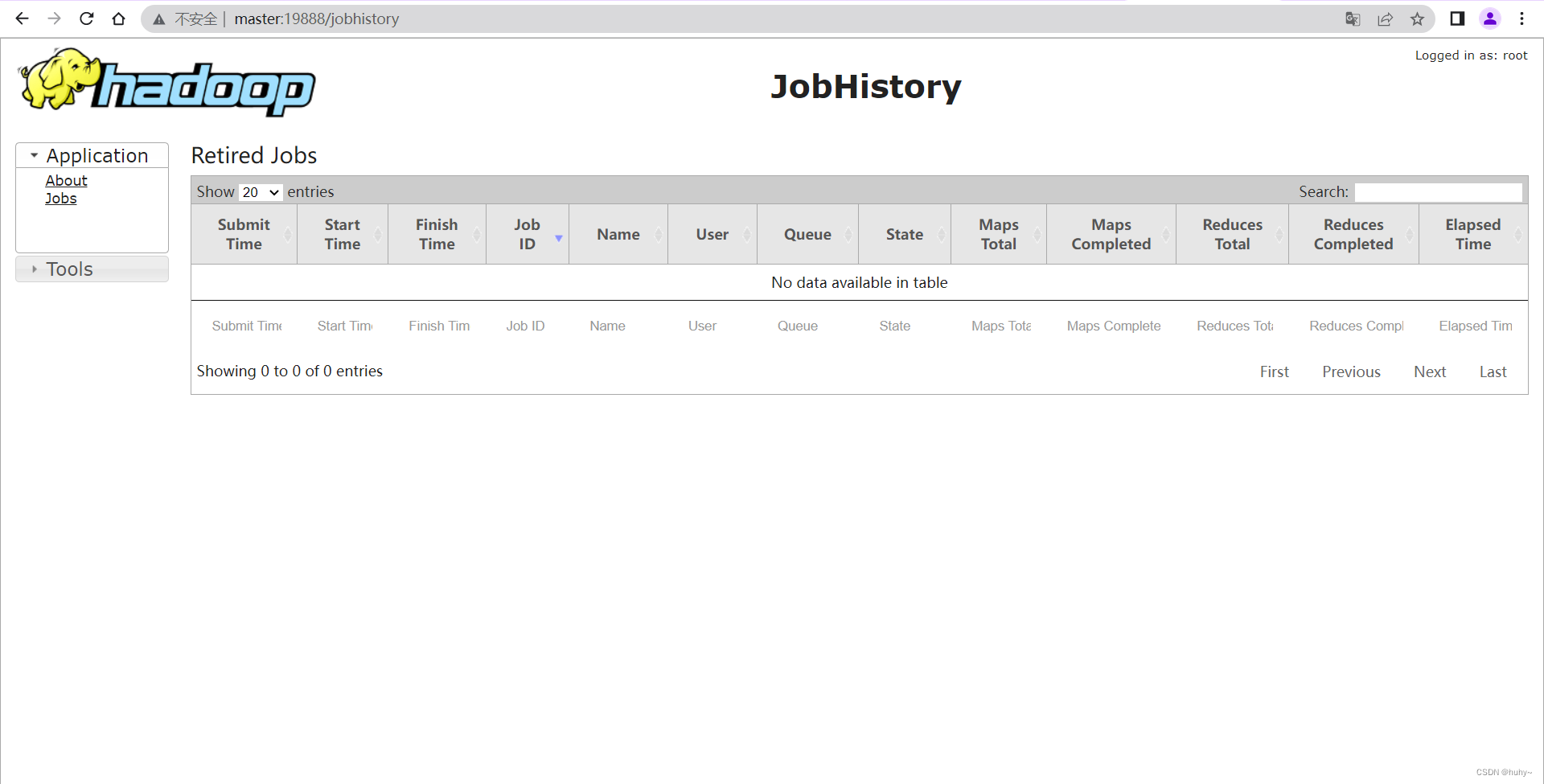

访问历史服务器

root@master:~# mapred --daemon start historyserver

root@master:~# jps

3473 DataNode

4614 Jps

4026 JobHistoryServer

3819 NodeManager

3325 NameNode

root@master:~#

也可以一键使用start-all.sh和stop-all.sh,来启动所有的进程和关闭所有的进程

顺便提一嘴,如果说window访问不了maste、slave1,则可以windows上做个域名解析,或者配置文件xml中使用地址

到此集群搭建完毕!!