Flume advanced components and assorted errors

1. One source, two channels

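Create a conf file with the following content. No channel selector is configured, so Flume uses its default replicating selector and copies every event from r1 into both c1 and c2.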

# Define the agent name and the names of its source, channels, and sinks
access.sources = r1
access.channels = c1 c2
access.sinks = k1 k2

# Source definition
access.sources.r1.type = netcat
access.sources.r1.bind = 0.0.0.0
access.sources.r1.port = 41414

# Channel c1 definition
access.channels.c1.type = memory
access.channels.c1.capacity = 1000
access.channels.c1.transactionCapacity = 100

# Channel c2 definition
access.channels.c2.type = memory
access.channels.c2.capacity = 1000
access.channels.c2.transactionCapacity = 100

# Interceptor that adds a timestamp header to each event (needed by %Y%m%d in hdfs.path)
access.sources.r1.interceptors = i1
access.sources.r1.interceptors.i1.type = org.apache.flume.interceptor.TimestampInterceptor$Builder

# Sink k1: HDFS
access.sinks.k1.type = hdfs
access.sinks.k1.hdfs.path = hdfs://192.168.22.131:9000/source/%Y%m%d
access.sinks.k1.hdfs.filePrefix = events-
access.sinks.k1.hdfs.fileType = DataStream
#access.sinks.k1.hdfs.fileType = CompressedStream
#access.sinks.k1.hdfs.codeC = gzip
# Do not roll files by event count
access.sinks.k1.hdfs.rollCount = 0
# Roll to a new file once the current one reaches 64 MB (67108864 bytes)
access.sinks.k1.hdfs.rollSize = 67108864
# Do not roll files by time
access.sinks.k1.hdfs.rollInterval = 0

# Sink k2: logger
access.sinks.k2.type = logger

# Wire the source, channels, and sinks together
access.sources.r1.channels = c1 c2
access.sinks.k1.channel = c1
access.sinks.k2.channel = c2
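A wiring mistake in a file like this (a sink bound to a channel that was never declared) is easy to miss. The following is a minimal sketch of a sanity check, not part of Flume itself: `get_prop` and `check_sink_channels` are hypothetical helper names, and the script assumes one `key = value` pair per line.

```shell
# Hypothetical helper: print the value of one key from a properties file.
get_prop() {
  awk -v k="$2" '
    /^[ \t]*#/ { next }                      # skip comment lines
    index($0, "=") == 0 { next }             # skip lines without "="
    {
      name = substr($0, 1, index($0, "=") - 1)
      gsub(/^[ \t]+|[ \t]+$/, "", name)      # trim the key
      if (name == k) {
        val = substr($0, index($0, "=") + 1)
        gsub(/^[ \t]+|[ \t]+$/, "", val)     # trim the value
        print val
      }
    }' "$1"
}

# Hypothetical helper: verify that each sink of the given agent is bound
# to a channel listed in <agent>.channels. Returns 1 on any mismatch.
check_sink_channels() {
  conf_file="$1"; agent="$2"; status=0
  declared=" $(get_prop "$conf_file" "${agent}.channels") "
  for sink in $(get_prop "$conf_file" "${agent}.sinks"); do
    ch=$(get_prop "$conf_file" "${agent}.sinks.${sink}.channel")
    case "$ch:$declared" in
      :*)          echo "error: $sink has no channel"; status=1 ;;
      *" $ch "*)   echo "ok: $sink -> $ch" ;;
      *)           echo "error: $sink -> $ch is not declared"; status=1 ;;
    esac
  done
  return "$status"
}

# Example call, using the file name and agent name from this post:
# check_sink_channels conf/one_source_two_channel.conf access
```

The check only covers sinks; the source's `channels` list could be validated the same way.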

2. Start HDFS

sbin/start-dfs.sh

3. Start Flume

root@Ubuntu-1:/usr/local/apache-flume# bin/flume-ng agent --conf conf/ --conf-file conf/one_source_two_channel.conf --name access -Dflume.root.logger=INFO,console &

4. Errors and fixes

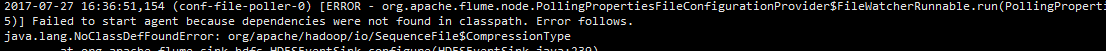

--- Error 1:

Cause: a dependency is missing, namely the jar file copied below.

Fix:

cp /share/hadoop/common/commons-configuration-1.6.jar /usr/local/apache-flume/lib

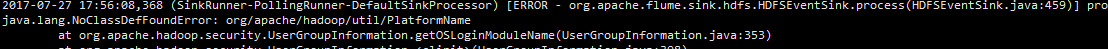

--- Error 2:

Cause: a dependency is missing.

Fix:

root@Ubuntu-1:/usr/local/hadoop-2.6.0/share/hadoop/common/lib# cp hadoop-auth-2.6.0.jar /usr/local/apache-flume/lib/

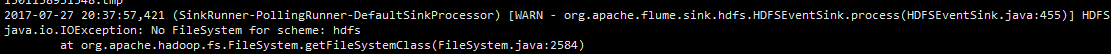

--- Error 3:

Cause: a dependency is missing.

Fix:

root@Ubuntu-1:/usr/local/hadoop-2.6.0/share/hadoop/hdfs# cp hadoop-hdfs-2.6.0.jar /usr/local/apache-flume/lib/

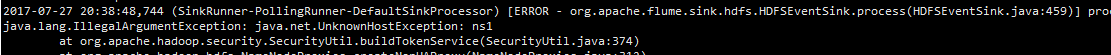

--- Error 4:

Cause: the host name and port are wrong.

Fix:

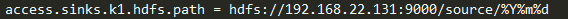

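In the Flume conf file above, change this setting (the host and port in access.sinks.k1.hdfs.path) as shown:

The value must have the form hostname:port, where the port is the HDFS port.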
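One way to find the right host and port is to ask Hadoop for its `fs.defaultFS` value and build the sink path from it. This is a minimal sketch that assumes the sink should write to the cluster's default filesystem; `hdfs_path_line` is a hypothetical helper name.

```shell
# Hypothetical helper: build the k1 sink path setting from a NameNode URI
# such as hdfs://host:port. A trailing slash on the URI is removed first.
hdfs_path_line() {
  printf 'access.sinks.k1.hdfs.path = %s/source/%%Y%%m%%d\n' "${1%/}"
}

# Query the configured default filesystem only when the hdfs client exists.
if command -v hdfs >/dev/null 2>&1; then
  hdfs_path_line "$(hdfs getconf -confKey fs.defaultFS)"
else
  echo "hdfs client not found on PATH; check fs.defaultFS in core-site.xml"
fi
```

The printed line can then be compared against the one in the conf file.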

--- Error 5:

Cause: a dependency is missing.

Fix:

root@Ubuntu-1:/usr/local/hadoop-2.6.0/share/hadoop/common/lib# cp htrace-core-3.0.4.jar /usr/local/apache-flume/lib/
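The jar copies from errors 1, 2, 3 and 5 can also be done in one pass. This is a sketch under assumptions: `copy_flume_jars` is a hypothetical helper, and it searches under `share/hadoop` in the Hadoop directory rather than relying on a fixed subdirectory for each jar.

```shell
# Hypothetical helper: copy the named jars from a Hadoop install into
# Flume's lib directory. Returns 1 if any jar could not be found.
copy_flume_jars() {
  hadoop_home="$1"; flume_lib="$2"; shift 2
  missing=0
  for jar in "$@"; do
    # Take the first match anywhere below share/hadoop.
    src=$(find "$hadoop_home/share/hadoop" -type f -name "$jar" 2>/dev/null | head -n 1)
    if [ -n "$src" ]; then
      cp "$src" "$flume_lib/" && echo "copied $jar"
    else
      echo "not found: $jar" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example call with the paths and jar versions used in this post:
# copy_flume_jars /usr/local/hadoop-2.6.0 /usr/local/apache-flume/lib \
#   commons-configuration-1.6.jar hadoop-auth-2.6.0.jar \
#   hadoop-hdfs-2.6.0.jar htrace-core-3.0.4.jar
```

Restart the Flume agent afterwards so that the new jars are on its classpath.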

All problems are now solved.

telnet 0.0.0.0 41414

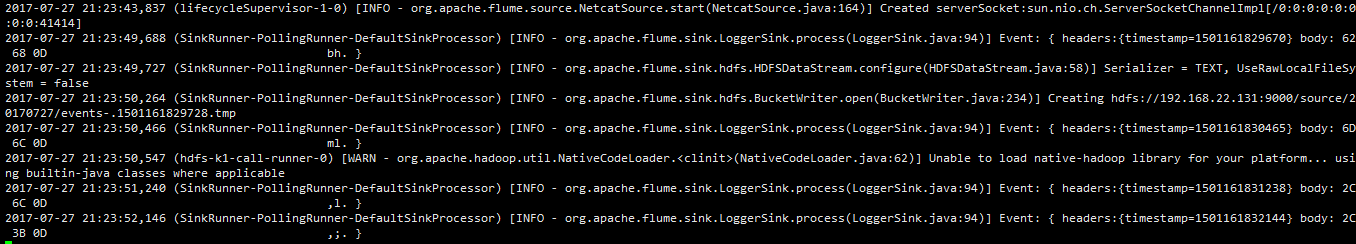

Send data into HDFS

Success

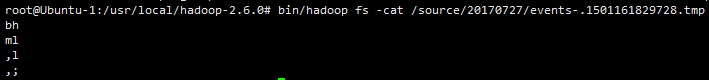

Check the files in HDFS

Done.
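The same check can be done from the command line. This is a minimal sketch that assumes the Flume host and this shell share a time zone, so that today's date matches the `%Y%m%d` directory; `todays_source_dir` is a hypothetical helper name.

```shell
# Hypothetical helper: the directory the k1 sink writes to today,
# mirroring hdfs.path = .../source/%Y%m%d from the conf file.
todays_source_dir() {
  printf '/source/%s\n' "$(date +%Y%m%d)"
}

# List the directory only when the hdfs client is available.
if command -v hdfs >/dev/null 2>&1; then
  hdfs dfs -ls "$(todays_source_dir)"
else
  echo "hdfs client not found; would list $(todays_source_dir)"
fi
```

With rollCount and rollInterval set to 0 and rollSize at 64 MB, a small test message will most likely sit in a file that still carries the in-use `.tmp` suffix until the agent is stopped.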

Reference: http://blog.csdn.net/strongyoung88/article/details/52937835