[Repost] Transparency (or Translucency) Rendering

From: https://developer.nvidia.com/content/transparency-or-translucency-rendering

As with many other visual effects, games attempt to mimic transparent (or translucent, as it's often synonymously referred to in the games industry) objects as closely as possible. Real-world transparent objects are often modelled in games using a simple set of equations and rules: simplifications are made and laws of physics are bent in an attempt to reduce the cost of simulating such a complex phenomenon. For the most part, we can get plausible results when rendering semi-transparent objects by ignoring any refraction or light scattering in participating media. In this article we're going to focus on a few key methods for transparency rendering, discuss the basics, and propose some alternatives and optimizations that should be useful to anyone who hasn't heard of them before.

Back-to-Front Rendering with OVER Blending

The OVER Operator

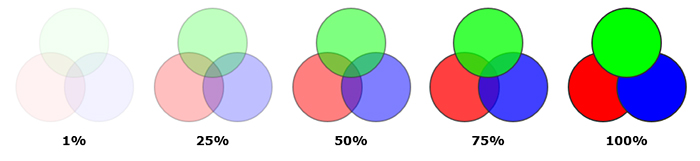

This is the most common way to simulate transparency: fragments are composited in back-to-front order using the traditional alpha blending equation, also known as the OVER operator [Porter and Duff 84]:

Cdst = Asrc Csrc + (1 - Asrc) Cdst

In the above diagram, you can see the OVER operator in action, blending three identically sized circles rendered in the order red, blue, green.
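To make the equation concrete, here is a minimal single-pixel sketch of back-to-front OVER blending, written in Python purely for illustration. The helper names are hypothetical, and the 50% opacity per circle is an assumption; the diagram does not state the actual opacity.

```python
# Minimal single-pixel sketch of back-to-front OVER blending (illustrative only).
# Colors are (r, g, b) tuples of floats in [0, 1]; alpha is a float in [0, 1].

def over(dst, src_rgb, src_a):
    """One blend operation: Cdst = Asrc * Csrc + (1 - Asrc) * Cdst."""
    return tuple(src_a * s + (1.0 - src_a) * d for s, d in zip(src_rgb, dst))


def composite_back_to_front(background, fragments):
    """Blend (rgb, alpha) fragments that are already sorted farthest-first."""
    dst = background
    for src_rgb, src_a in fragments:
        dst = over(dst, src_rgb, src_a)
    return dst


RED, BLUE, GREEN = (1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 1.0, 0.0)
BLACK = (0.0, 0.0, 0.0)

# A pixel covered by all three circles, drawn red, then blue, then green.
result = composite_back_to_front(BLACK, [(RED, 0.5), (BLUE, 0.5), (GREEN, 0.5)])
print(result)  # (0.125, 0.5, 0.25)
```

The last circle drawn (green) keeps the largest share of its color, and each earlier one is halved again by every blend on top of it, which is why the draw order matters.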

Pre-Multiplied Alpha

Pre-multiplied alpha is a useful trick that makes it possible to mix two blend modes (in this case ADDITIVE and OVER blending) in the same draw call. A typical use case is rendering an explosion with additive (e.g. fire) and non-additive (e.g. smoke) materials intermixed in the same particle system.

This is achieved by setting the blend state to the following:

Cdst = Csrc + (1 - Asrc) Cdst

And pre-multiplying the blending source color by the source alpha in the pixel shader:

(output.rgb * output.a, output.a * t)

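Note the ‘t’ parameter, a user-controlled scalar in the 0 to 1 range used to interpolate smoothly between ADDITIVE and OVER blend modes: with t = 0 the written alpha is 0, so the blend state reduces to adding the pre-multiplied color (ADDITIVE); with t = 1 it reduces to the OVER operator.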
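A quick numerical check of the two endpoints, as a Python sketch. The function names and sample colors are illustrative assumptions; the shader output is modelled as the tuple (output.rgb * output.a, output.a * t) described above.

```python
# Sketch: one pre-multiplied blend, Cdst = Csrc' + (1 - Asrc') * Cdst, where the
# pixel shader writes Csrc' = rgb * a and Asrc' = a * t.

def premultiplied_blend(dst, rgb, a, t):
    src_rgb = [c * a for c in rgb]  # color pre-multiplied by alpha
    src_a = a * t                   # 't' only scales the alpha that is written
    return tuple(s + (1.0 - src_a) * d for s, d in zip(src_rgb, dst))


def additive(dst, rgb, a):
    """Reference ADDITIVE blend: Cdst = a * rgb + Cdst."""
    return tuple(a * s + d for s, d in zip(rgb, dst))


def over(dst, rgb, a):
    """Reference OVER blend: Cdst = a * rgb + (1 - a) * Cdst."""
    return tuple(a * s + (1.0 - a) * d for s, d in zip(rgb, dst))


dst = (0.2, 0.4, 0.6)    # what is already in the render target
fire = (1.0, 0.5, 0.0)   # hypothetical particle color
alpha = 0.5

as_additive = premultiplied_blend(dst, fire, alpha, t=0.0)  # matches additive()
as_over = premultiplied_blend(dst, fire, alpha, t=1.0)      # matches over()
halfway = premultiplied_blend(dst, fire, alpha, t=0.5)      # lies in between
```

Because both modes share one blend state, particles with different ‘t’ values can be mixed freely within a single draw call.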

Tip: You can perform this pre-multiplication by alpha as an off-line operation on your textures, which helps improve the quality of RGBA mip-maps (see [McDonald 2013] for more details).

Typical Blending Optimizations

DISCARD

This is a D3D11 assembly instruction that maps to two high-level HLSL constructs with similar functionality: the ‘clip(…)’ intrinsic and the ‘discard’ statement. Using a DISCARD instruction inside your pixel shaders lets the GPU skip any work at the output-merger stage for that pixel once the shader has completed. This can be extremely handy in ROP-limited regimes such as particle systems with heavy overdraw: discarding when alpha is close to 0 (e.g. less than 5% opaque) makes the GPU skip a lot of blending work while still producing a plausible approximation. Even today, blending can be a rather expensive operation: it requires read-modify-write operations that are costly in memory bandwidth and must be processed in order, which can become a substantial bottleneck under certain conditions despite the extensive blending hardware found in modern GPUs.
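The trade-off can be sketched on the CPU in Python: the loop below mimics a pixel shader that discards fragments under a 5% alpha cutoff before OVER blending. The function names and sample alphas are hypothetical. Because each later OVER blend scales any earlier error by (1 - Asrc) ≤ 1, the per-channel error from skipping fragments (for colors in [0, 1]) is at most the sum of the skipped alphas.

```python
# Sketch: back-to-front OVER blending where nearly transparent fragments are
# skipped, mimicking clip()/discard in the pixel shader (no read-modify-write).

def over(dst, rgb, a):
    return tuple(a * s + (1.0 - a) * d for s, d in zip(rgb, dst))


def blend(background, fragments, alpha_cutoff=0.0):
    """Returns (color, number_of_blends, sum_of_discarded_alphas)."""
    dst, blends, discarded_alpha = background, 0, 0.0
    for rgb, a in fragments:
        if a < alpha_cutoff:
            discarded_alpha += a  # this fragment never reaches the blender
            continue
        dst = over(dst, rgb, a)
        blends += 1
    return dst, blends, discarded_alpha


SMOKE = (1.0, 0.5, 0.2)
fragments = [(SMOKE, a) for a in (0.01, 0.02, 0.3, 0.04, 0.5, 0.01)]

exact, exact_blends, _ = blend((0.0, 0.0, 0.0), fragments)
approx, approx_blends, skipped = blend((0.0, 0.0, 0.0), fragments, alpha_cutoff=0.05)
error = max(abs(e - p) for e, p in zip(exact, approx))
print(exact_blends, approx_blends, round(error, 4))  # 6 2 0.0273
```

Here two thirds of the blend operations disappear while the worst channel changes by under 3%.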

Tip: Using a DISCARD instruction in most circumstances will break EarlyZ (more on this later). However, if you’re not writing to depth (which is usually the case when alpha blending is enabled) it’s okay to use it and still have EarlyZ depth testing!

Reduced Rate Blending

This is another very common blending optimization: rendering is performed into a lower-resolution render target (typically half resolution, although this isn't a strict limitation). There are many caveats associated with this technique, and describing them is beyond the scope of this article; for a good reference on how to implement this approach, see [Cantlay 08] and [Jansen and Bavoil 2011].

Front-to-Back Rendering with UNDER Blending

The UNDER Operator

This is similar to the OVER blending technique explained above, but here color is pre-multiplied by alpha before blending and, as the name suggests, this blend mode is used to composite fragments UNDER one another. Rendering a collection of transparent objects with this blend mode therefore requires front-to-back draw order.

Also, note the separate alpha equation below (see [Bavoil and Myers 2008] for a proof of how this equation can be derived from the OVER operator):

Cdst = Adst (Asrc Csrc) + Cdst

Adst = 0 + (1 - Asrc) Adst

In this case, Adst stores the current transmittance of the fragments that have been blended so far, and is initialized to 1.0.

Finally, the blended fragments are composited with the background color Cbg using a pixel shader implementing:

Cdst = Adst Cbg + Cdst
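As a sanity check, here is a minimal single-pixel Python sketch (helper names are illustrative) showing that front-to-back UNDER blending, followed by the background composite, produces the same color as back-to-front OVER blending of the same fragments.

```python
# Sketch: front-to-back UNDER blending vs. back-to-front OVER blending, one pixel.

def under(c_dst, a_dst, src_rgb, src_a):
    """Cdst = Adst * (Asrc * Csrc) + Cdst ;  Adst = 0 + (1 - Asrc) * Adst.
    a_dst holds the transmittance of everything blended so far."""
    c_dst = tuple(a_dst * (src_a * s) + d for s, d in zip(src_rgb, c_dst))
    return c_dst, (1.0 - src_a) * a_dst


def composite_front_to_back(background, fragments):
    """fragments: (rgb, alpha) pairs sorted nearest-first."""
    c_dst, a_dst = (0.0, 0.0, 0.0), 1.0  # color cleared to 0, transmittance to 1
    for src_rgb, src_a in fragments:
        c_dst, a_dst = under(c_dst, a_dst, src_rgb, src_a)
    # Final full-screen pass: Cdst = Adst * Cbg + Cdst
    return tuple(a_dst * b + c for b, c in zip(background, c_dst))


def composite_back_to_front(background, fragments):
    """Reference: OVER blending of (rgb, alpha) pairs sorted farthest-first."""
    dst = background
    for src_rgb, src_a in fragments:
        dst = tuple(src_a * s + (1.0 - src_a) * d for s, d in zip(src_rgb, dst))
    return dst


GREY = (0.2, 0.2, 0.2)
near_to_far = [((0.0, 1.0, 0.0), 0.5), ((0.0, 0.0, 1.0), 0.5), ((1.0, 0.0, 0.0), 0.5)]

under_result = composite_front_to_back(GREY, near_to_far)
over_result = composite_back_to_front(GREY, list(reversed(near_to_far)))
```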

Transmittance Thresholding

Transmittance thresholding (referred to as 'opacity thresholding' in [Wexler et al. 2005]) exploits an important property of front-to-back UNDER blending: transmittance (stored in the alpha channel) tends towards 0 as more semi-transparent triangles are rendered for each screen pixel. Once transmittance reaches 0, no light from fragments further back can be observed. The basic idea is borrowed from ray marching (volume rendering), where it's typical to exit the marching loop early once opacity has reached some threshold.

To put it in real life context, imagine a very dense cloud of smoke. The smoke is so dense that you cannot see through to the other side and beyond. Now imagine rendering this smoke as a particle system: what would be the point of rendering fragments which don’t contribute to the final image because they are completely hidden behind dense smoke?

Dense smoke occludes the fragments behind it, much like a smoke stack's plume occluding the sky.

The Algorithm:

- Group blended geometry into buckets of view-space Z.

- Enable UNDER alpha blending.

- Enable depth testing.

- For each bucket, front to back:

  - Render the blended geometry in the bucket, with depth writes disabled.

  - Render a full-screen quad at the near plane, with depth writes enabled and colour writes disabled.

  - In the quad's pixel shader, read the frame buffer and discard pixels whose transmittance is still above some threshold, so that near-plane depth is written only where transmittance has dropped below it.
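The per-bucket loop can be emulated for a single pixel on the CPU. The Python sketch below is illustrative only: the names, the 1% threshold and the smoke values are assumptions, fragments inside each bucket are assumed to be front-to-back as well, and the `break` stands in for the hardware rejecting later fragments at EarlyZ once near-plane depth has been written.

```python
# Sketch: transmittance thresholding for one pixel, emulated on the CPU.

def render_pixel(buckets, threshold):
    """buckets: lists of (rgb, alpha) fragments, nearest bucket first.
    Returns (premultiplied color, transmittance, fragments shaded)."""
    color, transmittance = (0.0, 0.0, 0.0), 1.0
    near_depth_written = False
    shaded = 0
    for bucket in buckets:
        if near_depth_written:
            break  # remaining fragments fail the depth test before shading
        for rgb, a in bucket:  # UNDER blending, depth writes disabled
            color = tuple(transmittance * a * s + d for s, d in zip(rgb, color))
            transmittance *= 1.0 - a
            shaded += 1
        # Full-screen quad at the near plane: discarded where transmittance is
        # still above the threshold, so depth is written only where it is below.
        if transmittance < threshold:
            near_depth_written = True
    return color, transmittance, shaded


SMOKE = (0.5, 0.5, 0.5)
buckets = [[(SMOKE, 0.75), (SMOKE, 0.75)] for _ in range(5)]  # 10 dense layers

full_color, _, full_shaded = render_pixel(buckets, threshold=0.0)
fast_color, fast_t, fast_shaded = render_pixel(buckets, threshold=0.01)
print(full_shaded, fast_shaded)  # 10 4
```

The skipped layers could have contributed at most the remaining transmittance (below 1% here) times the smoke color, so the image barely changes while most of the shading work disappears.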

With this technique, we use the depth-testing hardware to cull pixels that don't contribute to the final image. This can offer huge savings in pixel-shader- or ROP-bound regimes, as the shaders won't be invoked for pixels that have reached minimum transmittance. To hit this fast path, however, the depth test must happen via EarlyZ. Since the GeForce 8 series, the fine-grained depth-stencil test is performed either before (EarlyZ) or after (LateZ) pixel shader invocation, and only EarlyZ rejects pixels based on depth before their pixel shaders run. EarlyZ can be enforced with the ‘earlydepthstencil’ HLSL attribute on your blended-geometry pixel shaders, or obtained by following these rules:

- Don’t write depth using the ‘SV_Depth’ semantic; instead try conservative depth (SV_DepthGreaterEqual or SV_DepthLessEqual) if possible.

- Don’t use ‘discard’ or ‘clip’ with depth writes enabled, but you may use them with depth writes disabled.

- Don’t use UAVs in the pixel shader (unless using the ‘earlydepthstencil’ attribute).

Tip: Although it may make more sense to use stencil testing to perform the transmittance thresholding, in our own tests we've found depth testing is more consistently performant.

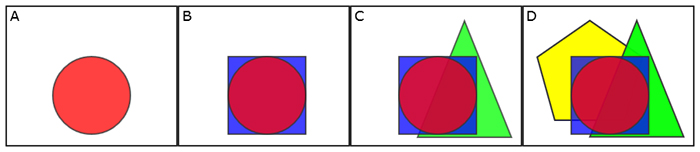

In the above diagram, you can see a sequence of blended draw calls, each with an opacity of 75%. Note that on the last draw call (frame D), the yellow pentagon is no longer visible behind the stack of other primitives. Transmittance thresholding lets us skip such pixels, since they don't contribute to the final image.
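For a sense of scale, assuming each primitive fully covers the pixel: at 75% opacity, every layer passes on only 25% of the light from behind it, so transmittance falls geometrically. A tiny Python check:

```python
# Transmittance left after n stacked layers, each 75% opaque: (1 - 0.75) ** n
opacity = 0.75
transmittance = [(1.0 - opacity) ** n for n in range(1, 5)]
print(transmittance)  # [0.25, 0.0625, 0.015625, 0.00390625]
```

A fragment behind three such layers can contribute at most about 1.6% of its color, so a threshold of a couple of percent would already cull it.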

Weighted, Blended Order-Independent Transparency (OIT)

The major issue with the two compositing operators above is that they require the fragments of each pixel to be rasterized in depth-sorted order. If the scene only contains semi-transparent camera-facing particles, the ordering problem can be solved by sorting the particles by their center depth (which can be done efficiently on the GPU using compute-shader bitonic or radix sorts). However, handling scenes containing arbitrary transparent objects (such as glass windows and self-intersecting tree leaves) with bounded GPU memory and time is expensive, because for the best results the sorting must be performed at the granularity of rasterized fragments (see [Kubisch 2014] for a recent survey of A-buffer and k-buffer GPU implementations).

As noted above, when doing alpha blending with the OVER operator, the accumulated color Cacc (intermediate destination color after all fragments have been blended) must be composited with the opaque background color Cbg (the color behind the farthest composited fragment). This compositing equation is:

Cfinal = Aacc Cacc + (1 - Aacc) Cbg

And by defining the visibility of the opaque background as Vbg = (1 - Aacc), this equation becomes:

Cfinal = (1 - Vbg) Cacc + Vbg Cbg

Background Visibility

By matching the above equation with the UNDER blending equations, we see that Vbg is the transmittance through all the blended fragments, that is, the product of their (1 - Asrc) values. Since multiplication is commutative, this Vbg term can be rendered correctly without any depth sorting and in a single geometry pass, by clearing Adst to 1.0 and configuring the blend state to perform:

Adst = (1 - Asrc) Adst
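A short Python check, with illustrative alpha values, that this product-based background visibility does not depend on the order in which fragments arrive:

```python
import itertools

def background_visibility(alphas):
    """Emulates the blend state: clear Adst to 1.0, then Adst = (1 - Asrc) * Adst."""
    a_dst = 1.0
    for a in alphas:
        a_dst *= 1.0 - a
    return a_dst


alphas = [0.3, 0.75, 0.1, 0.5]
visibilities = [background_visibility(order) for order in itertools.permutations(alphas)]
print(len(visibilities), round(visibilities[0], 6))  # 24 0.07875
```

All 24 arrival orders agree up to floating-point rounding.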

Per-Fragment Visibility

The Cacc term is the result of applying this UNDER blending operator on each fragment in front-to-back order:

Cdst = Adst (Asrc Csrc) + Cdst

Where Adst depends on all the opacity values of the semi-transparent fragments that are in front of the current source fragment. This Adst term can be seen as a per-fragment visibility Adst = V(zsrc), that is the fraction of the current source color contribution that is visible at the current source depth:

Cdst = V(zsrc) (Asrc Csrc) + Cdst

If we had an oracle available that would give us the value of V(zsrc) in the pixel shader for each source fragment being rasterized in arbitrary depth order, we would then be able to implement this blend equation in an order-independent way using additive blending.
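To make the oracle idea concrete, the Python sketch below cheats: it computes the exact V(zsrc) for each fragment by inspecting every other fragment of the pixel (which a real pixel shader cannot do), then accumulates Sum[ V(zsrc) Asrc Csrc ] additively in a shuffled order. The result matches sorted UNDER blending. Names and sample data are illustrative, and smaller z is assumed to be nearer the camera.

```python
import random

def exact_visibility(fragments, z_src):
    """Oracle V(zsrc): product of (1 - A) over fragments in front of z_src
    (assumes all depths are distinct; smaller z = nearer the camera)."""
    v = 1.0
    for _rgb, a, z in fragments:
        if z < z_src:
            v *= 1.0 - a
    return v


def additive_with_oracle(fragments):
    """Sum[ V(zsrc) * Asrc * Csrc ] in arrival order (additive blending)."""
    acc = [0.0, 0.0, 0.0]
    for rgb, a, z in fragments:
        v = exact_visibility(fragments, z)
        for i in range(3):
            acc[i] += v * a * rgb[i]
    return tuple(acc)


def under_sorted(fragments):
    """Reference: UNDER blending after sorting front-to-back."""
    acc, a_dst = [0.0, 0.0, 0.0], 1.0
    for rgb, a, _z in sorted(fragments, key=lambda f: f[2]):
        for i in range(3):
            acc[i] += a_dst * a * rgb[i]
        a_dst *= 1.0 - a
    return tuple(acc)


frags = [((1.0, 0.0, 0.0), 0.5, 3.0), ((0.0, 0.0, 1.0), 0.5, 2.0), ((0.0, 1.0, 0.0), 0.5, 1.0)]
shuffled = frags[:]
random.Random(0).shuffle(shuffled)

oracle_result = additive_with_oracle(shuffled)
reference = under_sorted(frags)
```

The oracle needs every fragment of the pixel, which is exactly the sorting problem again; that is why cheap closed-form approximations of V(z) are attractive.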

It turns out that plausible transparency rendering results can be obtained by aggressively approximating the visibility function V(z) with simple closed-form functions. One can start with: V = 1 / Sum[ Asrc ], where Sum[ Asrc ] is the sum of all the opacity values. This effectively results in this weighted average:

Cacc = Sum[ Asrc Csrc ] / Sum[ Asrc ]

Although this visibility function does not decay with depth, it generates color values that are in the range of the source color values (since it is a weighted average) and is fully order independent.
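A minimal Python sketch of this depth-independent weighted average (names and sample data are illustrative). Since it only uses sums, every arrival order gives the same result, and each channel stays within the range of the source colors.

```python
import itertools

def weighted_average(fragments):
    """Cacc = Sum[ Asrc * Csrc ] / Sum[ Asrc ] for (rgb, alpha) fragments."""
    num = [0.0, 0.0, 0.0]
    den = 0.0
    for rgb, a in fragments:
        for i in range(3):
            num[i] += a * rgb[i]
        den += a
    if den == 0.0:
        return (0.0, 0.0, 0.0)  # no coverage: nothing to average
    return tuple(n / den for n in num)


frags = [((1.0, 0.0, 0.0), 0.2), ((0.0, 0.0, 1.0), 0.6), ((0.0, 1.0, 0.0), 0.4)]
results = [weighted_average(list(p)) for p in itertools.permutations(frags)]
print(tuple(round(c, 4) for c in results[0]))  # (0.1667, 0.3333, 0.5)
```

Note that the weights depend only on opacity: a fragment at the back of the pixel counts as much as one at the front.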

For higher-quality visibility functions V(z) that fall off with depth, see [McGuire and Bavoil 2013]. Using a custom visibility function, the weighted-average blended color becomes:

Cacc = Sum[ V(zsrc) Asrc Csrc ] / Sum[ V(zsrc) Asrc ]

Summary

The blended geometry is rendered in a single pass with 2 render targets and:

- 1 render-target channel storing Product[ 1 - Asrc ]

- 3 render-target channels storing Sum[ V(zsrc) Asrc Csrc ]

- 1 last render-target channel storing Sum[ V(zsrc) Asrc ].

And finally, a full-screen pass composites it all with the background color.
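Putting the pieces together, here is a single-pixel Python sketch of the two-target accumulation and the final composite Cfinal = (1 - Vbg) Cacc + Vbg Cbg. The depth falloff function is a hypothetical placeholder chosen only for illustration, not one of the functions from [McGuire and Bavoil 2013]. On a GPU, the loop body would be the additive and multiplicative blend states rather than shader code.

```python
import random

def hypothetical_visibility(z):
    """HYPOTHETICAL V(z) that falls off with view-space depth z (illustration only)."""
    return 1.0 / (1.0 + z * z)


def weighted_blended_oit(background, fragments, visibility=hypothetical_visibility):
    """fragments: (rgb, alpha, z) triples in any order."""
    accum_rgb = [0.0, 0.0, 0.0]  # RT0.rgb: Sum[ V(zsrc) Asrc Csrc ], cleared to 0
    accum_a = 0.0                # RT0.a:   Sum[ V(zsrc) Asrc ],      cleared to 0
    v_bg = 1.0                   # RT1:     Product[ 1 - Asrc ],      cleared to 1
    for rgb, a, z in fragments:
        w = visibility(z) * a
        for i in range(3):
            accum_rgb[i] += w * rgb[i]
        accum_a += w
        v_bg *= 1.0 - a
    if accum_a == 0.0:
        return tuple(background)  # nothing covered this pixel
    # Full-screen composite pass.
    c_acc = [c / accum_a for c in accum_rgb]
    return tuple((1.0 - v_bg) * c + v_bg * b for c, b in zip(c_acc, background))


GREY = (0.2, 0.2, 0.2)
frags = [((1.0, 0.0, 0.0), 0.5, 3.0), ((0.0, 0.0, 1.0), 0.5, 2.0), ((0.0, 1.0, 0.0), 0.5, 1.0)]
shuffled = frags[:]
random.Random(1).shuffle(shuffled)

in_order = weighted_blended_oit(GREY, frags)
any_order = weighted_blended_oit(GREY, shuffled)
single = weighted_blended_oit(GREY, [((1.0, 0.0, 0.0), 0.5, 2.0)])  # equals OVER
```

With a single fragment the result is exactly the OVER operator; with several, it is an approximation in which the depth-dependent weight lets the nearest (green) fragment dominate, as it would with correct sorting.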

An example implementation is available in NVIDIA’s GameWorks OpenGL SDK.

Results

Here are screenshots from a prototype implementation made in UE3. In this case, the WEIGHTED blending pass may be slower than the UNDER blending because the WEIGHTED blending result uses multiple render targets. Still, the WEIGHTED approach has the advantage of requiring no sorting. See [McGuire 2014] for more results.

Reference image with UNDER blending and the particles rendered in back-to-front order.

Weighted blended OIT approximation with a depth-independent visibility function.

Weighted blended OIT approximation with a depth-dependent visibility function.

References

[Kubisch 2014] Christoph Kubisch. “Order Independent Transparency in OpenGL 4.x”. GTC 2014.

[McGuire 2014] Morgan McGuire. “Weighted Blended Order-Independent Transparency”. Blog Post. 2014.

[McGuire and Bavoil 2013] Morgan McGuire and Louis Bavoil. “Weighted Blended Order-Independent Transparency”. JCGT. 2013.

[McDonald 2013] John McDonald. “Alpha Blending: To Pre or Not To Pre”. NVIDIA Blog Post. 2013.

[Jansen and Bavoil 2011] Jon Jansen and Louis Bavoil. “Fast rendering of opacity-mapped particles using DirectX 11 tessellation and mixed resolutions”. NVIDIA Whitepaper. 2011.

[Cantlay 08] Iain Cantlay. “High-Speed, Off-Screen Particles”. GPU Gems 3, Chapter 23. NVIDIA. 2008.

[Bavoil and Myers 2008] Louis Bavoil and Kevin Myers. “Order Independent Transparency with Dual Depth Peeling”. NVIDIA Whitepaper. 2008.

[Wexler et al. 2005] Daniel Wexler, Larry Gritz, Eric Enderton, Jonathan Rice. “GPU-accelerated high-quality hidden surface removal”. HWWS '05 Proceedings of the ACM SIGGRAPH/EUROGRAPHICS conference on graphics hardware. 2005.

[Porter and Duff 84] Thomas Porter and Tom Duff. “Compositing digital images”. SIGGRAPH '84 Proceedings of the 11th annual conference on Computer graphics and interactive techniques. 1984.