10 Kubernetes log collection: workflow overview and several ways to collect pod logs

1 Preparing the ELK and Kafka cluster environments

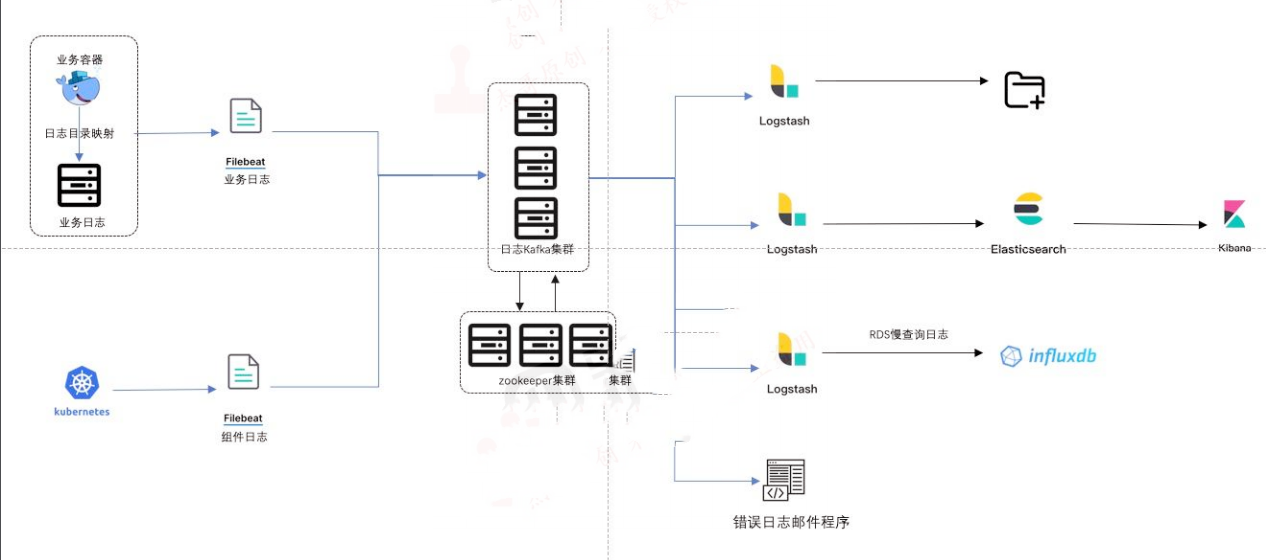

1.1 Log collection workflow

1.2 Preparing the ELK cluster

1.2.1 Preparing the Elasticsearch cluster

Elasticsearch version: 7.12 (the package used below is 7.12.1).

Download: https://www.elastic.co/cn/downloads/

Installation guide for 7.12: http://www.pingtaimeng.com/article/detail/id/2151994

1.2.1.1 Prepare the Java environment

yum install -y java-1.8.0-*

1.2.1.2 Install Elasticsearch

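rpm -ivh elasticsearch-7.12.1-x86_64.rpm

In this environment the RPM installation would not start. Switching to the binary (tar.gz) package solved the problem. The steps below use the binary installation under /home/es/elasticsearch-7.12.1.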

1.2.1.3 Edit the configuration file

Copy this configuration file to the other two nodes. On each node, change only network.host and node.name.

```
[es@localhost elasticsearch-7.12.1]$ grep -v "^#" config/elasticsearch.yml
cluster.name: elk
node.name: node-103
network.host: 172.31.7.103
http.port: 9200
discovery.seed_hosts: ["172.31.7.103", "172.31.7.104","172.31.7.105"]
cluster.initial_master_nodes: ["172.31.7.103", "172.31.7.104","172.31.7.105"] # master-eligible nodes used for the first master election
action.destructive_requires_name: true # require explicit index names for deletes (no wildcard deletion)
```
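The three nodes differ only in node.name and network.host, so the per-node files can also be generated instead of edited by hand. The Python sketch below assumes the node-<last octet> naming shown above. render_es_config is a hypothetical helper, not part of Elasticsearch.

```python
# Sketch: render one elasticsearch.yml per node from a shared template.
# Assumes node names follow the "node-<last IP octet>" pattern used above.
from typing import List

NODES = ["172.31.7.103", "172.31.7.104", "172.31.7.105"]

TEMPLATE = """cluster.name: elk
node.name: {name}
network.host: {ip}
http.port: 9200
discovery.seed_hosts: [{seeds}]
cluster.initial_master_nodes: [{seeds}]
action.destructive_requires_name: true
"""


def render_es_config(ip: str, nodes: List[str]) -> str:
    """Return the elasticsearch.yml text for the node listening on `ip`."""
    seeds = ", ".join(f'"{n}"' for n in nodes)
    name = "node-" + ip.rsplit(".", 1)[1]
    return TEMPLATE.format(name=name, ip=ip, seeds=seeds)


if __name__ == "__main__":
    for ip in NODES:
        print(f"# ---- {ip} ----")
        print(render_es_config(ip, NODES))
```

Each rendered block would then be written to config/elasticsearch.yml on the matching node.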

1.2.1.4 Start Elasticsearch

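With the default system settings, the first start fails with the following two errors. Each error is followed by its fix.

Error 1:

[1]: max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]

This means the Elasticsearch process's open file descriptor limit is too low.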

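Fix: as root, edit /etc/security/limits.conf:

vim /etc/security/limits.conf

Append the following lines at the end of the file. The new limits only apply to new login sessions, so log in again as the user that runs Elasticsearch.

```
* soft nofile 65536
* hard nofile 131072
* soft nproc 4096
* hard nproc 4096
```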

Error 2:

[2]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

The kernel parameter vm.max_map_count must be raised.

Fix: as root, edit /etc/sysctl.conf:

sudo vim /etc/sysctl.conf

Add the setting:

vm.max_map_count=655360

Then reload the kernel parameters:

sudo sysctl -p
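Both failures above come from Elasticsearch's bootstrap checks. The Python sketch below reproduces just these two checks, using the thresholds from the error messages. You can run it as the es user on a node to confirm the fixes took effect. The function names are hypothetical, and current_values() reads Linux-specific sources.

```python
# Sketch: re-create the two Elasticsearch bootstrap checks that failed above.
# Thresholds come from the error messages; current_values() is Linux-only.
import resource
from pathlib import Path
from typing import List, Tuple

MIN_NOFILE = 65536          # "increase to at least [65536]"
MIN_MAX_MAP_COUNT = 262144  # "increase to at least [262144]"


def failed_checks(nofile: int, max_map_count: int) -> List[str]:
    """Describe every check that would fail for the given values."""
    failures = []
    if nofile < MIN_NOFILE:
        failures.append(f"max file descriptors [{nofile}] < {MIN_NOFILE}")
    if max_map_count < MIN_MAX_MAP_COUNT:
        failures.append(f"vm.max_map_count [{max_map_count}] < {MIN_MAX_MAP_COUNT}")
    return failures


def current_values() -> Tuple[int, int]:
    """Soft open-file limit of this process and the kernel's vm.max_map_count."""
    soft_nofile, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    max_map = int(Path("/proc/sys/vm/max_map_count").read_text().strip())
    return soft_nofile, max_map
```

On a node, `failed_checks(*current_values())` should return an empty list once both settings are in place.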

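Elasticsearch refuses to start as root, so create a regular user and start it as that user:

```
useradd es
chown -R es /home/es/elasticsearch-7.12.1
su - es                                   # Elasticsearch must be started by a non-root user
cd elasticsearch-7.12.1
vim config/jvm.options                    # optional: adjust JVM settings such as the heap size (-Xms/-Xmx)
./bin/elasticsearch -d                    # start as a background daemon
# or, alternatively:
nohup ./bin/elasticsearch > nohup.log &   # start in the background, output goes to nohup.log
```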

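Once it is running, Elasticsearch listens on two ports: 9200 for the HTTP REST API and 9300 for transport between cluster nodes.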

1.2.1.5 Verify in the browser

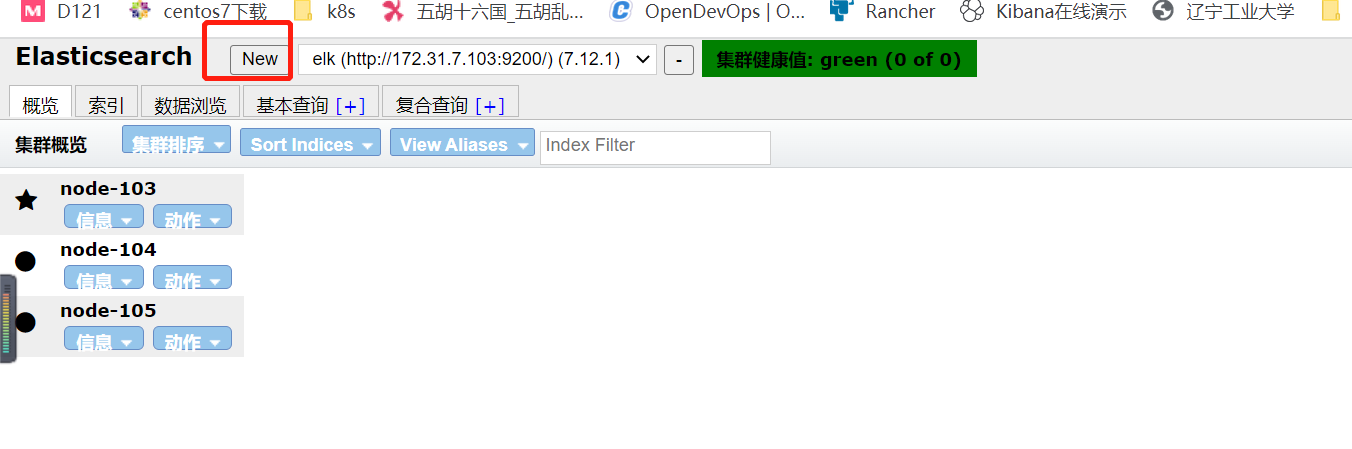

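In Google Chrome, install the Elasticsearch Head extension from the Chrome Web Store. It displays the cluster information in the browser.

The node marked with * is the elected master.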
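The same information is available without the browser extension through the _cat/nodes API. In its master column, * marks the elected master and - marks the other nodes. The Python sketch below parses that table. fetch_cat_nodes assumes the example node address from this article and needs a reachable cluster.

```python
# Sketch: find the elected master from `_cat/nodes?v&h=ip,name,master` output,
# the same "*" marker that elasticsearch-head shows.
import urllib.request
from typing import Optional


def elected_master(cat_nodes_text: str) -> Optional[str]:
    """Return the name of the node whose master column is "*", if any."""
    lines = cat_nodes_text.strip().splitlines()
    header = lines[0].split()
    name_col, master_col = header.index("name"), header.index("master")
    for line in lines[1:]:
        cols = line.split()
        if cols[master_col] == "*":
            return cols[name_col]
    return None


def fetch_cat_nodes(base_url: str = "http://172.31.7.103:9200") -> str:
    """Fetch the table from a live node (URL is this article's example node)."""
    url = base_url + "/_cat/nodes?v&h=ip,name,master"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return resp.read().decode()
```

With a running cluster, `elected_master(fetch_cat_nodes())` returns the same node that Head marks with *.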

1.2.2 Install Kibana

Kibana is installed from the RPM package.

1.2.2.1 Kibana configuration file

```
[root@localhost ~]# grep -v "^#" /etc/kibana/kibana.yml |grep -v "^$"
server.port: 5601
server.host: "172.31.7.103"
elasticsearch.hosts: ["http://172.31.7.103:9200","http://172.31.7.104:9200","http://172.31.7.105:9200"]
```

1.2.2.2 Start the service

systemctl start kibana

1.2.2.3 Access Kibana in the browser

http://172.31.7.103:5601/app/home#/

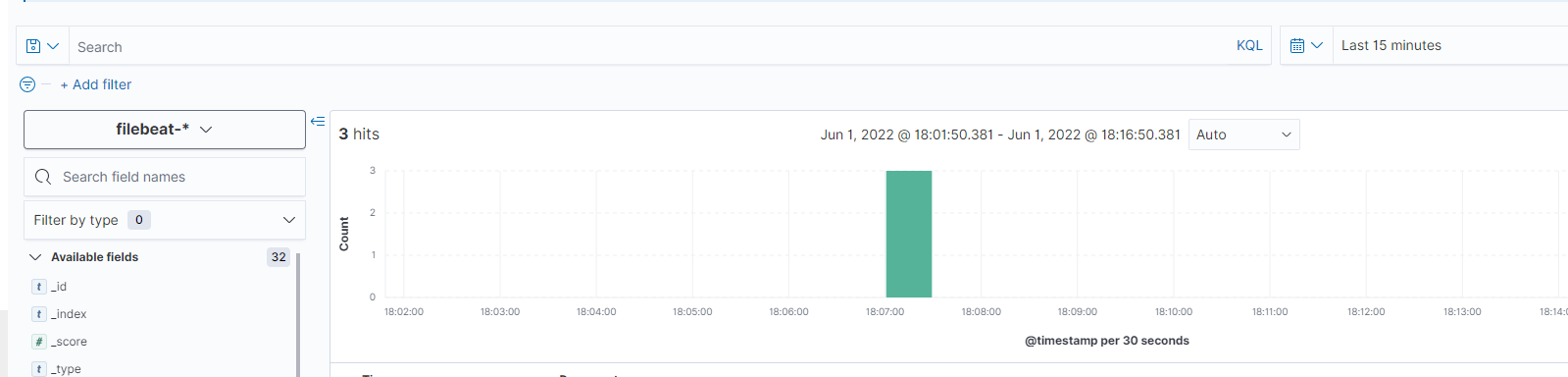

1.2.3 Install a Filebeat client to verify the setup

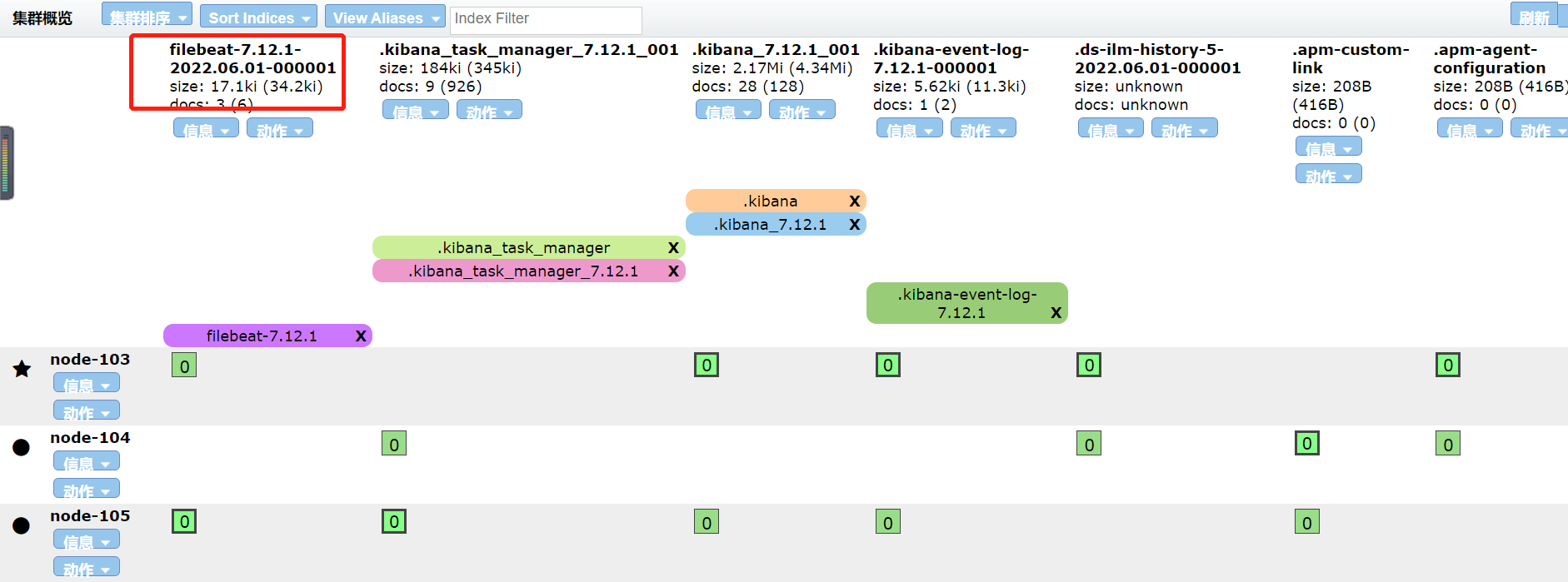

Install a Filebeat client that collects /var/log/*.log and ships the collected logs to the Elasticsearch cluster, as shown below:

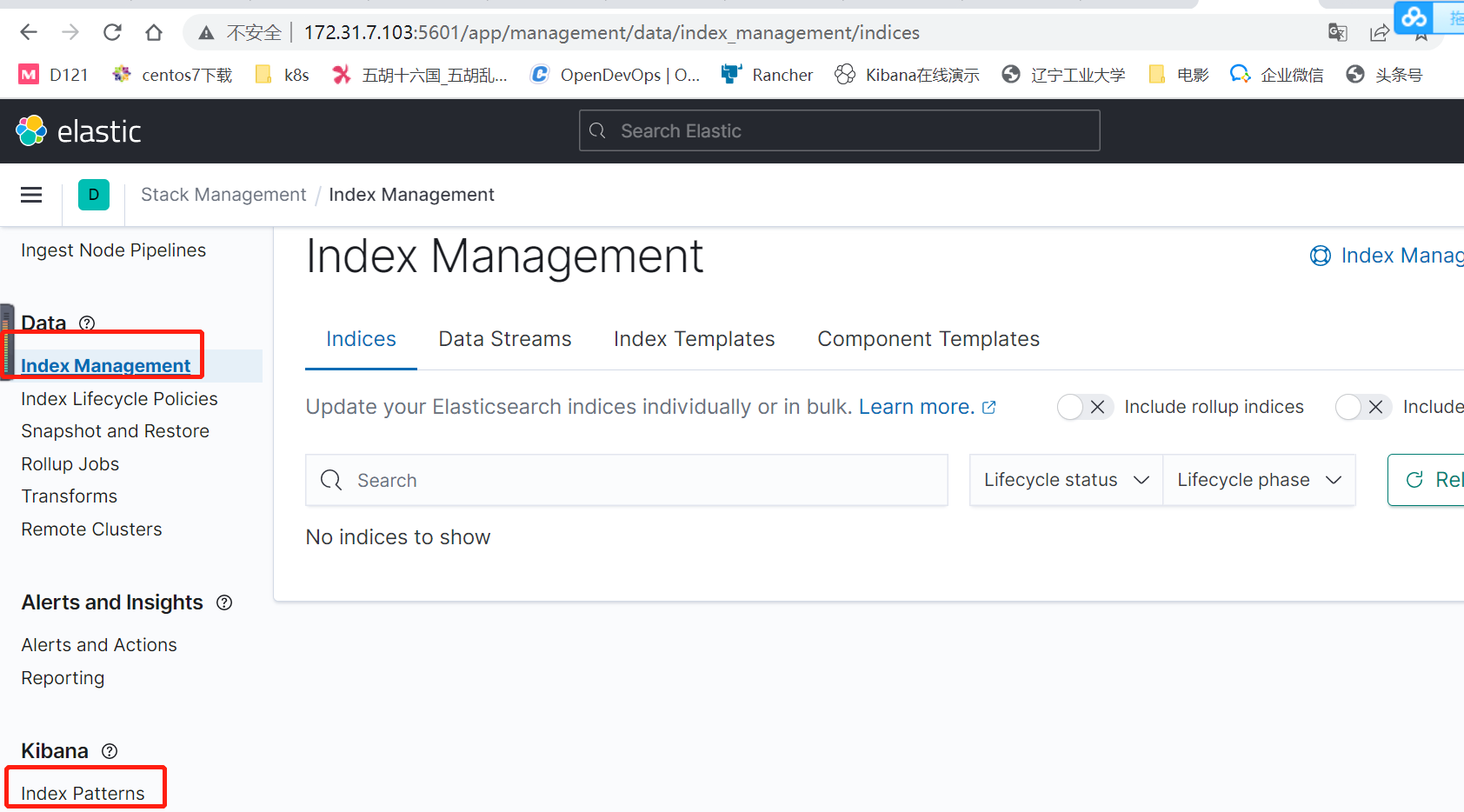

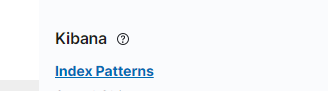

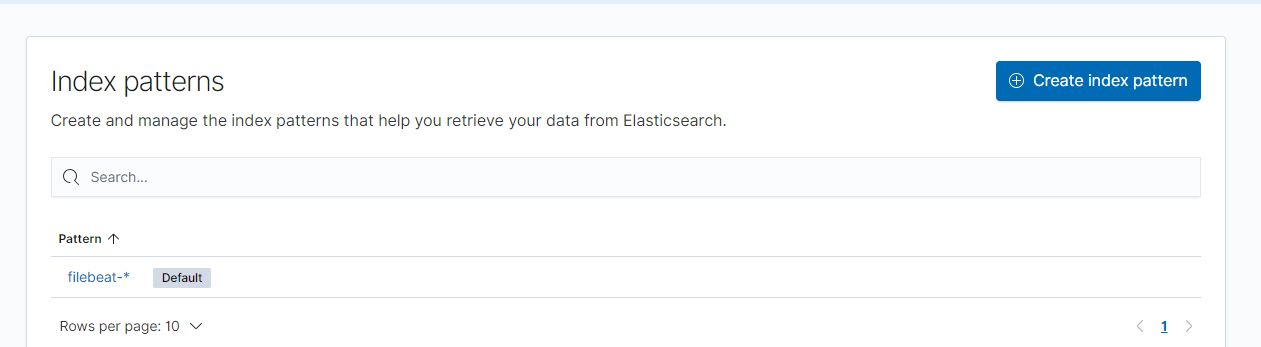

Then create an index pattern in Kibana.

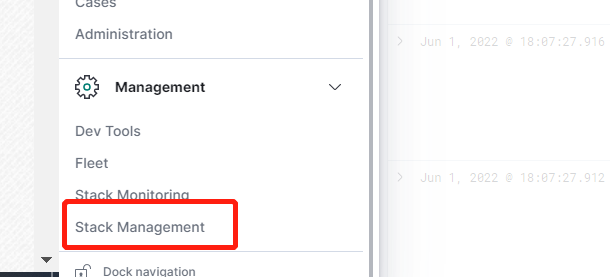

Select the option shown below.

An index pattern can only be created once matching indices exist.

Finally, view the logs in Kibana.
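You can also create the index pattern without the UI, through Kibana's saved objects API (POST /api/saved_objects/index-pattern with a kbn-xsrf header). The Python sketch below only builds the request. It assumes Kibana 7.x at the address configured above. The filebeat-* title is just an example and must match indices that actually exist.

```python
# Sketch: build a request that creates a Kibana index pattern via the saved objects API.
# Assumes Kibana 7.x at the address configured above; "filebeat-*" is only an example title.
import json
import urllib.request

KIBANA = "http://172.31.7.103:5601"


def index_pattern_request(title: str, time_field: str = "@timestamp") -> urllib.request.Request:
    """POST request creating an index pattern with the given title and time field."""
    body = {"attributes": {"title": title, "timeFieldName": time_field}}
    return urllib.request.Request(
        KIBANA + "/api/saved_objects/index-pattern",
        data=json.dumps(body).encode(),
        headers={"kbn-xsrf": "true", "Content-Type": "application/json"},
        method="POST",
    )


# Sending it requires a reachable Kibana:
# urllib.request.urlopen(index_pattern_request("filebeat-*"), timeout=5)
```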

1.3 Preparing the Kafka cluster

See https://www.cnblogs.com/huningfei/p/17123138.html

2 Ways to collect pod logs

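1 Node-level collection: deploy the log collector as a DaemonSet to collect json-file logs. Logstash or Filebeat runs directly on every node and collects the logs that the pods on that node produce. With the Docker runtime, these logs are under /var/lib/docker/containers/ by default.

2 Sidecar collection: a sidecar container (multiple containers in one pod) collects the logs of one or more application containers in the same pod. The application containers and the sidecar usually share the logs through an emptyDir volume.

3 Built-in collection: run a log collection process inside the application container itself.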

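2.1 Method 1: node-level collection, a DaemonSet collecting json-file logs

Logstash or Filebeat is first installed on every node as a DaemonSet. It collects the logs and forwards them to the Kafka cluster. The Logstash instance in the ELK stack consumes them from Kafka and writes them to the Elasticsearch cluster, and Kibana finally displays them.

Collecting Kubernetes pod logs this way therefore requires a ZooKeeper cluster, a Kafka cluster, and the ELK stack, with Elasticsearch also running as a cluster.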
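It helps to know what the DaemonSet actually collects. With Docker's json-file logging driver, every line of a *-json.log file is a JSON object holding the log text, the stream, and a timestamp. The Python sketch below decodes one such line. The sample line is made up for illustration. The pipeline in this article forwards these lines unchanged in the event's message field and does not decode them.

```python
# Sketch: what one line of a Docker json-file log looks like and how it decodes.
# The sample line is illustrative, not taken from a real node.
import json
from typing import NamedTuple


class DockerLogLine(NamedTuple):
    stream: str  # "stdout" or "stderr"
    time: str    # RFC 3339 timestamp written by the Docker daemon
    text: str    # the application's output, without the trailing newline


def parse_json_file_line(raw: str) -> DockerLogLine:
    record = json.loads(raw)
    return DockerLogLine(record["stream"], record["time"], record["log"].rstrip("\n"))


sample = '{"log":"GET /myapp/index.html 200\\n","stream":"stdout","time":"2022-05-29T07:22:20.123456789Z"}'
print(parse_json_file_line(sample))
```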

2.1.1 Prepare the Logstash image and configuration files

2.1.2 logstash.conf configuration file

```
cat app1.conf
input {
  file {
    path => "/var/lib/docker/containers/*/*-json.log"  # container log files to collect
    start_position => "beginning"
    type => "jsonfile-daemonset-applog"
  }
  file {
    path => "/var/log/*.log"
    start_position => "beginning"
    type => "jsonfile-daemonset-syslog"
  }
}

output {
  if [type] == "jsonfile-daemonset-applog" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384  # Kafka producer batch size in bytes (data sent to Kafka, not to ES)
      codec => "${CODEC}"
    }
  }
  if [type] == "jsonfile-daemonset-syslog" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"  # value comes from the env section of the DaemonSet YAML
      topic_id => "${TOPIC_ID}"
      batch_size => 16384
      codec => "${CODEC}"  # the syslog lines themselves are plain text, not JSON
    }
  }
}
```
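The ${KAFKA_SERVER}, ${TOPIC_ID} and ${CODEC} placeholders are filled from the container environment, which the DaemonSet sets in 2.1.6. Logstash also accepts a ${VAR:default} form. The Python sketch below mimics that documented substitution so you can check what a setting resolves to. It is not Logstash's own code, and resolve is a hypothetical helper.

```python
# Sketch of the documented "${VAR}" / "${VAR:default}" substitution that Logstash
# applies to pipeline settings. This mimics the behaviour; it is not Logstash code.
import os
import re

PLACEHOLDER = re.compile(r"\$\{(\w+)(?::([^}]*))?\}")


def resolve(setting, env=os.environ):
    """Replace each placeholder in `setting` with its value from `env`."""
    def substitute(match):
        name, default = match.group(1), match.group(2)
        if name in env:
            return env[name]
        if default is not None:
            return default
        # Logstash likewise fails to start when a variable without default is undefined
        raise KeyError(f"environment variable {name} is not set")

    return PLACEHOLDER.sub(substitute, setting)


# The env entries of the DaemonSet in 2.1.6
pod_env = {
    "KAFKA_SERVER": "172.31.4.101:9092,172.31.4.102:9092,172.31.4.103:9092",
    "TOPIC_ID": "jsonfile-log-topic",
    "CODEC": "json",
}
print(resolve('topic_id => "${TOPIC_ID}"', pod_env))  # topic_id => "jsonfile-log-topic"
```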

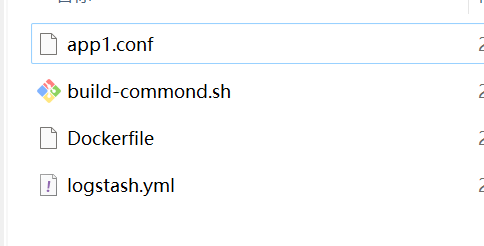

2.1.3 Dockerfile for building the image

```
[root@k8s-master1 1.logstash-image-Dockerfile]# cat Dockerfile
FROM logstash:7.12.1
USER root
WORKDIR /usr/share/logstash
#RUN rm -rf config/logstash-sample.conf
ADD logstash.yml /usr/share/logstash/config/logstash.yml
ADD app1.conf /usr/share/logstash/pipeline/logstash.conf
```

2.1.4 logstash.yml configuration file

```
[root@k8s-master1 1.logstash-image-Dockerfile]# cat logstash.yml
http.host: "0.0.0.0"
#xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]
```

2.1.5 Build script

```
[root@k8s-master1 1.logstash-image-Dockerfile]# cat build-commond.sh
#!/bin/bash
docker build -t registry.cn-hangzhou.aliyuncs.com/huningfei/logstash:v7.12.1-json-file-log-v4 .
docker push registry.cn-hangzhou.aliyuncs.com/huningfei/logstash:v7.12.1-json-file-log-v4
```

2.1.6 Apply the YAML file to deploy Logstash

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: logstash-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: logstash-logging
spec:
  selector:
    matchLabels:
      name: logstash-elasticsearch
  template:
    metadata:
      labels:
        name: logstash-elasticsearch
    spec:
      tolerations:
      # this toleration is to have the daemonset runnable on master nodes
      # remove it if your masters can't run pods
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: logstash-elasticsearch
        image: registry.cn-hangzhou.aliyuncs.com/huningfei/logstash:v7.12.1-json-file-log-v4
        env:
        - name: "KAFKA_SERVER"
          value: "172.31.4.101:9092,172.31.4.102:9092,172.31.4.103:9092"
        - name: "TOPIC_ID"
          value: "jsonfile-log-topic"
        - name: "CODEC"
          value: "json"
        # resources:
        #   limits:
        #     cpu: 1000m
        #     memory: 1024Mi
        #   requests:
        #     cpu: 500m
        #     memory: 1024Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: false
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
```

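2.1.7 Logstash configuration on the ELK side (consumes from Kafka)

The type values tested here are the ones set in app1.conf above. They survive the trip through Kafka because CODEC is set to json in the DaemonSet, so the whole event is serialized on the producer side, and this input decodes it again with the json codec.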

```
cat 3.logsatsh-daemonset-jsonfile-kafka-to-es.conf
input {
  kafka {
    bootstrap_servers => "172.31.4.101:9092,172.31.4.102:9092,172.31.4.103:9092"
    topics => ["jsonfile-log-topic"]
    codec => "json"
  }
}

output {
  #if [fields][type] == "app1-access-log" {
  if [type] == "jsonfile-daemonset-applog" {
    elasticsearch {
      hosts => ["172.31.2.101:9200","172.31.2.102:9200"]
      index => "jsonfile-daemonset-applog-%{+YYYY.MM.dd}"
    }
  }
  if [type] == "jsonfile-daemonset-syslog" {
    elasticsearch {
      hosts => ["172.31.2.101:9200","172.31.2.102:9200"]
      index => "jsonfile-daemonset-syslog-%{+YYYY.MM.dd}"
    }
  }
}
```
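The %{+YYYY.MM.dd} part of the index name is formatted from the event's @timestamp, which Logstash keeps in UTC. In a UTC+8 environment, logs written before 08:00 local time therefore land in the previous day's index. The Python sketch below reproduces the naming rule. daily_index is a hypothetical helper.

```python
# Sketch: the daily index name that index => "<prefix>-%{+YYYY.MM.dd}" produces.
# Logstash formats the event's @timestamp, which it stores in UTC, so the daily
# index rolls over at 00:00 UTC (08:00 in UTC+8), not at local midnight.
from datetime import datetime, timedelta, timezone


def daily_index(prefix: str, event_time: datetime) -> str:
    """Index name for an event whose @timestamp is `event_time` (timezone-aware)."""
    return f"{prefix}-{event_time.astimezone(timezone.utc):%Y.%m.%d}"


utc8 = timezone(timedelta(hours=8))
print(daily_index("jsonfile-daemonset-applog", datetime(2022, 5, 30, 7, 30, tzinfo=utc8)))
# jsonfile-daemonset-applog-2022.05.29
```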

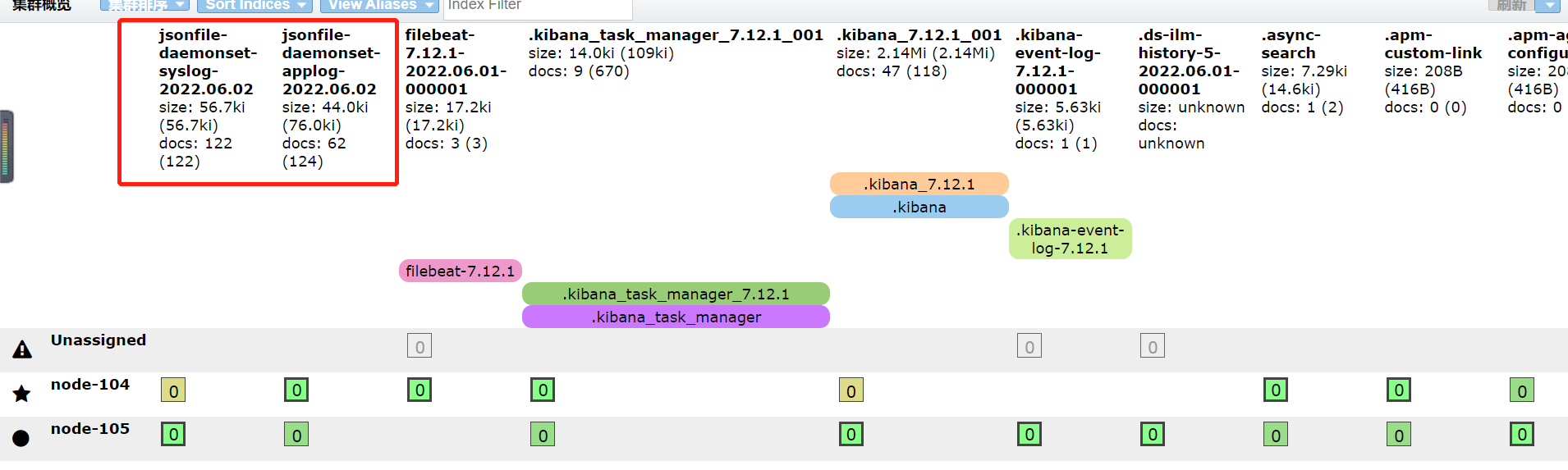

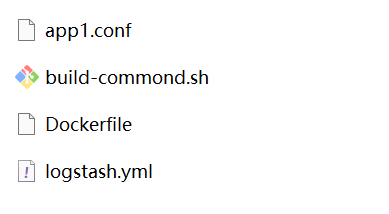

2.1.8 Verification

After everything is deployed, check Elasticsearch and Kibana to confirm that logs are arriving.

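2.2 Method 2: sidecar log collection

A sidecar container (multiple containers in one pod) collects the logs of one or more application containers in the current pod. The logs are usually shared between the application containers and the sidecar through an emptyDir volume. In practice, the pod runs two containers: one for the application and one for log collection.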

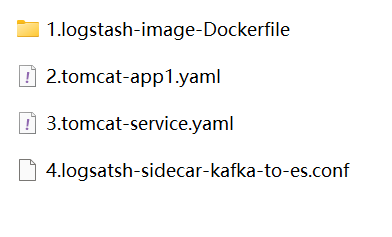

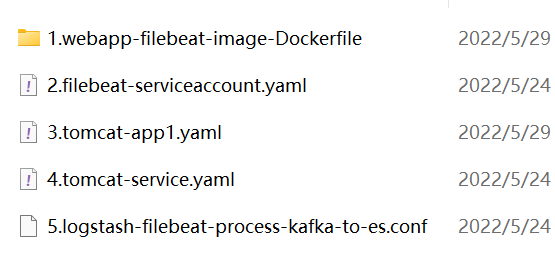

2.2.1 Prepare the required files

2.2.2 Build the image and push it to Harbor

```
cat Dockerfile
FROM logstash:7.12.1
USER root
WORKDIR /usr/share/logstash
#RUN rm -rf config/logstash-sample.conf
ADD logstash.yml /usr/share/logstash/config/logstash.yml
ADD app1.conf /usr/share/logstash/pipeline/logstash.conf
```

2.2.3 logstash.yml

```
http.host: "0.0.0.0"
#xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]
```

2.2.4 logstash.conf

```
input {
  file {
    path => "/var/log/applog/catalina.out"
    start_position => "beginning"
    type => "app1-sidecar-catalina-log"
  }
  file {
    path => "/var/log/applog/localhost_access_log.*.txt"
    start_position => "beginning"
    type => "app1-sidecar-access-log"
  }
}

output {
  if [type] == "app1-sidecar-catalina-log" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384  # Kafka producer batch size in bytes (data sent to Kafka, not to ES)
      codec => "${CODEC}"
    }
  }
  if [type] == "app1-sidecar-access-log" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384
      codec => "${CODEC}"
    }
  }
}
```
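The sidecar tags each event according to the path pattern its file matched. The Python sketch below approximates the file input's glob matching with pathlib. Logstash itself uses Ruby globbing, so edge cases may differ. The Tomcat file names are typical examples, assumed for illustration.

```python
# Sketch: which files in the shared emptyDir each path pattern picks up, and the
# `type` the sidecar would attach. pathlib matching approximates Logstash's globbing.
from pathlib import PurePosixPath
from typing import Optional

PATTERNS = {
    "/var/log/applog/catalina.out": "app1-sidecar-catalina-log",
    "/var/log/applog/localhost_access_log.*.txt": "app1-sidecar-access-log",
}


def event_type(path: str) -> Optional[str]:
    """Return the `type` for this file, or None if no input collects it."""
    for pattern, type_name in PATTERNS.items():
        if PurePosixPath(path).match(pattern):
            return type_name
    return None


for f in ["/var/log/applog/catalina.out",
          "/var/log/applog/localhost_access_log.2022-05-29.txt",
          "/var/log/applog/localhost.2022-05-29.log"]:
    print(f, "->", event_type(f))
```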

2.2.5 Run the Tomcat application image and the Logstash log collection image (two containers in one pod)

```yaml
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-tomcat-app1-deployment-label
  name: magedu-tomcat-app1-deployment  # name of this Deployment
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: magedu-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: magedu-tomcat-app1-selector
    spec:
      containers:
      - name: sidecar-container
        image: harbor.magedu.local/baseimages/logstash:v7.12.1-sidecar
        imagePullPolicy: Always
        env:
        - name: "KAFKA_SERVER"
          value: "172.31.4.101:9092,172.31.4.102:9092,172.31.4.103:9092"
        - name: "TOPIC_ID"
          value: "tomcat-app1-topic"
        - name: "CODEC"
          value: "json"
        volumeMounts:
        - name: applogs
          mountPath: /var/log/applog
      - name: magedu-tomcat-app1-container
        image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/tomcat-app1:v1
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
        volumeMounts:
        - name: applogs
          mountPath: /apps/tomcat/logs
        startupProbe:
          httpGet:
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5  # wait 5s before the first probe
          failureThreshold: 3     # consecutive failures before the probe is considered failed
          periodSeconds: 3        # interval between probes
        readinessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
        livenessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
      volumes:
      - name: applogs
        emptyDir: {}
```
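The emptyDir volume applogs is mounted at /apps/tomcat/logs in the Tomcat container and at /var/log/applog in the sidecar. That is why the sidecar's logstash.conf reads paths under /var/log/applog even though Tomcat writes under /apps/tomcat/logs. The Python sketch below does the path mapping. translate is a hypothetical helper, and the mount paths are taken from the Deployment above.

```python
# Sketch: the emptyDir volume "applogs" is mounted at a different path in each
# container, so the same file has two names. Mount paths are from the Deployment above.
from pathlib import PurePosixPath

MOUNTS = {
    "magedu-tomcat-app1-container": "/apps/tomcat/logs",
    "sidecar-container": "/var/log/applog",
}


def translate(path: str, src: str, dst: str) -> str:
    """Map `path` as seen in container `src` to the path seen in container `dst`."""
    relative = PurePosixPath(path).relative_to(MOUNTS[src])  # ValueError if outside the volume
    return str(PurePosixPath(MOUNTS[dst]) / relative)


print(translate("/apps/tomcat/logs/catalina.out",
                "magedu-tomcat-app1-container", "sidecar-container"))
# /var/log/applog/catalina.out
```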

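Service configuration. nodePort 40080 is outside Kubernetes' default NodePort range of 30000-32767, so this assumes the API server's --service-node-port-range has been widened.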

```
cat 3.tomcat-service.yaml
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: magedu-tomcat-app1-service-label
  name: magedu-tomcat-app1-service
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 40080
  selector:
    app: magedu-tomcat-app1-selector
```

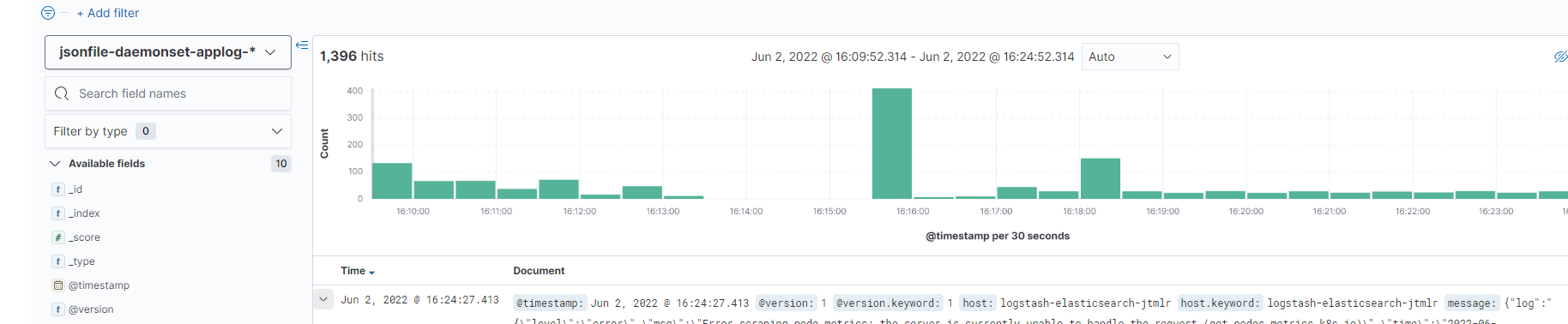

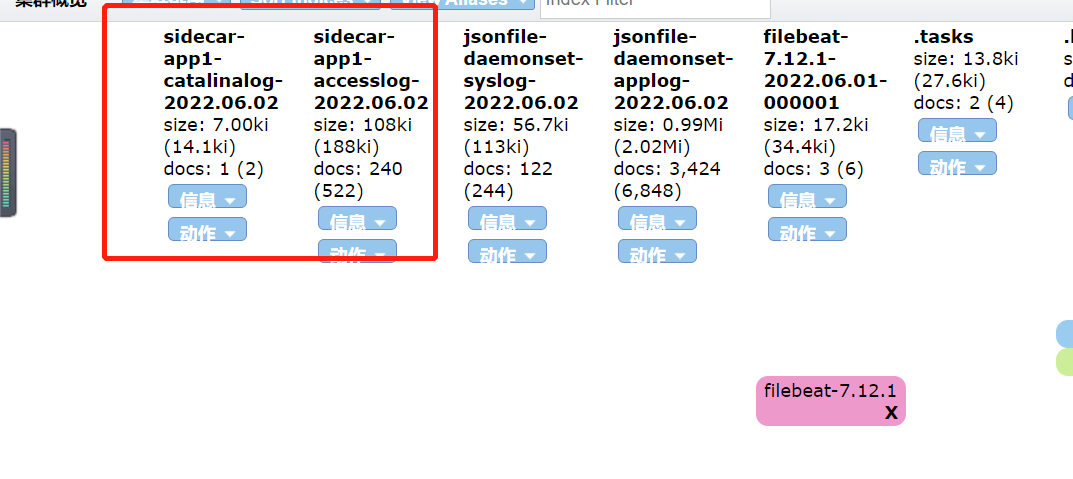

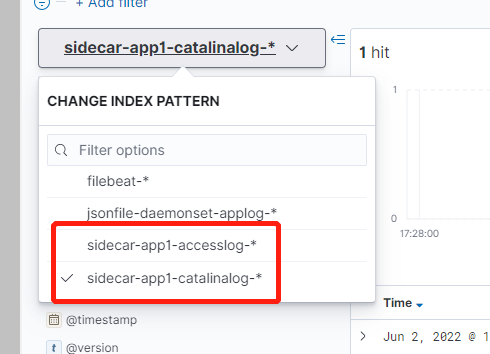

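2.2.6 Verify the logs

2.3 Method 3: a log collection process built into the container

Besides its own service, the container also runs a log collection program such as Filebeat.

2.3.1 Prepare the required files

2.3.2 Build the application image (Tomcat here) with Filebeat embedded at build time

Prerequisite: the following files must be prepared. The base image tomcat-base presumably already has Filebeat installed, because run_tomcat.sh starts /usr/share/filebeat/bin/filebeat and the Dockerfile below does not install it.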

```
cat Dockerfile
#tomcat web1
FROM harbor.magedu.com/pub-images/tomcat-base:v8.5.43
ADD catalina.sh /apps/tomcat/bin/catalina.sh
ADD server.xml /apps/tomcat/conf/server.xml
ADD app1.tar.gz /data/tomcat/webapps/myapp/
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
ADD filebeat.yml /etc/filebeat/filebeat.yml
RUN chown -R tomcat.tomcat /data/ /apps/
EXPOSE 8080 8443
CMD ["/apps/tomcat/bin/run_tomcat.sh"]
```

2.3.3 Build the image

```
#!/bin/bash
TAG=$1
docker build -t harbor.magedu.local/magedu/tomcat-app1:${TAG} .
sleep 3
docker push harbor.magedu.local/magedu/tomcat-app1:${TAG}
```

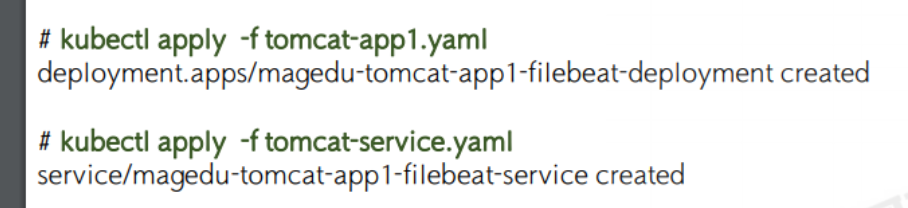

2.3.4 Filebeat configuration file filebeat.yml

[root@k8s-master1 1.webapp-filebeat-image-Dockerfile]# cat filebeat.yml

filebeat.inputs:

- type: log

enabled: true

paths:

- /apps/tomcat/logs/catalina.out

fields:

type: filebeat-tomcat-catalina

- type: log

enabled: true

paths:

- /apps/tomcat/logs/localhost_access_log.*.txt

fields:

type: filebeat-tomcat-accesslog

filebeat.config.modules:

path: ${path.config}/modules.d/*.yml

reload.enabled: false

setup.template.settings:

index.number_of_shards: 1

setup.kibana:

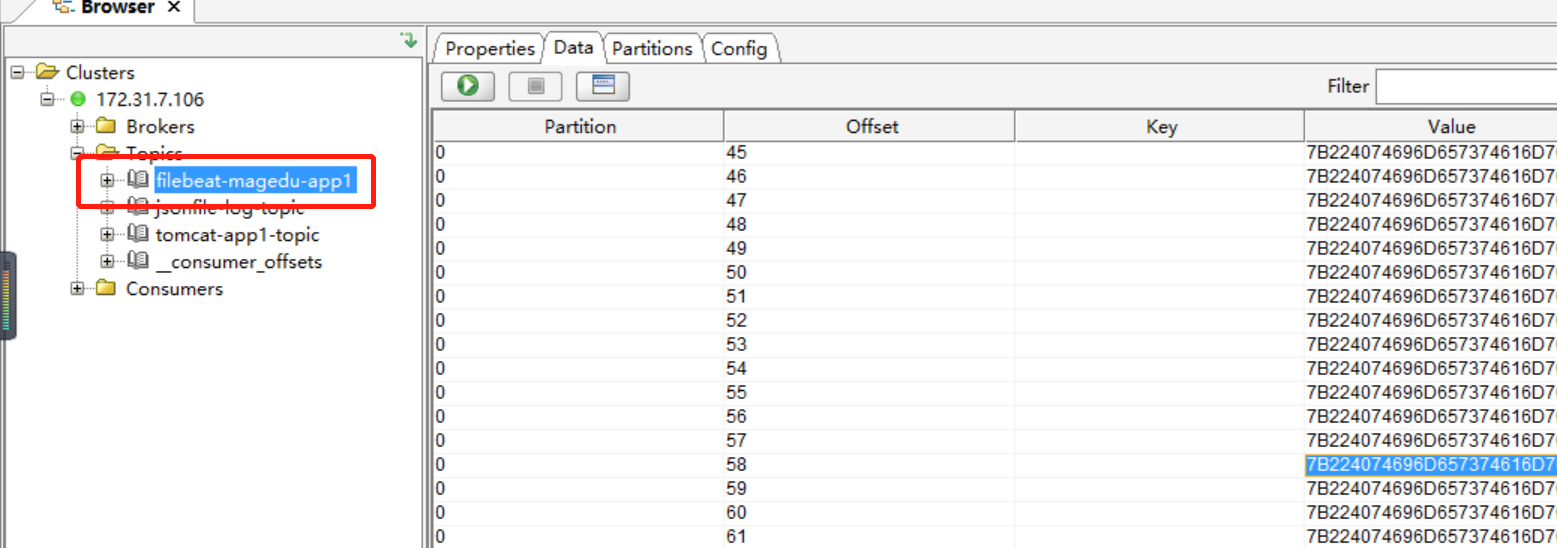

output.kafka:

hosts: ["172.31.7.106:9092"]

required_acks: 1

topic: "filebeat-magedu-app1"

compression: gzip

max_message_bytes: 1000000

2.3.5 run_tomcat.sh

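```
#!/bin/bash
# start Filebeat in the background with the configuration baked into the image
/usr/share/filebeat/bin/filebeat -e -c /etc/filebeat/filebeat.yml -path.home /usr/share/filebeat -path.config /etc/filebeat -path.data /var/lib/filebeat -path.logs /var/log/filebeat &
# start Tomcat as the tomcat user (catalina.sh start returns after launching it)
su - tomcat -c "/apps/tomcat/bin/catalina.sh start"
# keep a foreground process running so the container does not exit
tail -f /etc/hosts
```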

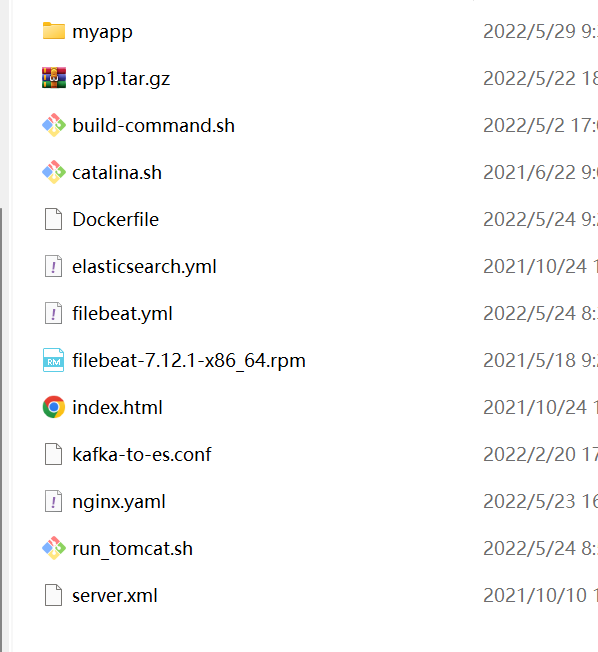

2.3.6 Run the application Deployment and Service

```
cat tomcat-app1.yaml
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-tomcat-app1-filebeat-deployment-label
  name: magedu-tomcat-app1-filebeat-deployment
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: magedu-tomcat-app1-filebeat-selector
  template:
    metadata:
      labels:
        app: magedu-tomcat-app1-filebeat-selector
    spec:
      containers:
      - name: magedu-tomcat-app1-filebeat-container
        image: harbor.magedu.local/magedu/tomcat-app1:20220529_152220
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
```

```
cat 3.tomcat-service.yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    app: magedu-tomcat-app1-service-label
  name: magedu-tomcat-app1-service
  namespace: magedu
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 40080
  selector:
    app: magedu-tomcat-app1-filebeat-selector  # must match the pod labels of the filebeat Deployment above
```
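A Service only reaches the pods whose labels match its selector. The Python sketch below shows Kubernetes' equality-based matching, applied to the pod template labels of the two Deployments in this article. It shows that app: magedu-tomcat-app1-selector matches only the sidecar example's pods. selects is a hypothetical helper.

```python
# Sketch of equality-based label selection as used by Service and Deployment
# selectors: every selector key must be present in the pod labels with the same
# value. (An empty Service selector is a special case and is not modelled here.)
def selects(selector: dict, pod_labels: dict) -> bool:
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Pod template labels of the two Deployments in this article
sidecar_pod = {"app": "magedu-tomcat-app1-selector"}
filebeat_pod = {"app": "magedu-tomcat-app1-filebeat-selector"}

for name, labels in [("sidecar", sidecar_pod), ("filebeat", filebeat_pod)]:
    print(name, selects({"app": "magedu-tomcat-app1-selector"}, labels))
# sidecar True
# filebeat False
```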

2.3.7 Contents of the ELK-side Logstash configuration file:

```
cat 4.logsatsh-sidecar-kafka-to-es.conf
input {
  kafka {
    bootstrap_servers => "172.31.4.101:9092,172.31.4.102:9092,172.31.4.103:9092"
    topics => ["filebeat-magedu-app1"]
    codec => "json"
  }
}

output {
  if [fields][type] == "filebeat-tomcat-catalina" {
    elasticsearch {
      hosts => ["172.31.2.101:9200","172.31.2.102:9200"]
      index => "filebeat-tomcat-catalina-%{+YYYY.MM.dd}"
    }
  }
  if [fields][type] == "filebeat-tomcat-accesslog" {
    elasticsearch {
      hosts => ["172.31.2.101:9200","172.31.2.102:9200"]
      index => "filebeat-tomcat-accesslog-%{+YYYY.MM.dd}"
    }
  }
}
```
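Here the conditionals test [fields][type] rather than [type]. By default Filebeat places the custom fields from filebeat.yml under a fields key (fields_under_root is false). The Python sketch below mirrors the routing. The sample event is trimmed and illustrative, and target_index is a hypothetical helper.

```python
# Sketch of the routing above. Filebeat puts custom `fields` from filebeat.yml under
# a "fields" key (fields_under_root defaults to false), hence [fields][type].
from datetime import datetime, timezone
from typing import Optional

ROUTED_TYPES = {"filebeat-tomcat-catalina", "filebeat-tomcat-accesslog"}


def target_index(event: dict) -> Optional[str]:
    """Daily index the event is written to, or None when no branch matches."""
    event_type = event.get("fields", {}).get("type")
    if event_type not in ROUTED_TYPES:
        return None  # no output branch matches, so the event is not indexed
    ts = datetime.fromisoformat(event["@timestamp"].replace("Z", "+00:00"))
    return f"{event_type}-{ts.astimezone(timezone.utc):%Y.%m.%d}"


event = {  # trimmed, illustrative Filebeat event
    "@timestamp": "2022-05-29T07:22:20.123Z",
    "message": "GET /myapp/index.html HTTP/1.1 200",
    "fields": {"type": "filebeat-tomcat-accesslog"},
}
print(target_index(event))  # filebeat-tomcat-accesslog-2022.05.29
```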

2.3.8 Verification
