8 Running ZooKeeper, Redis, and Other Instances on k8s

I Kubernetes in Practice: Running a ZooKeeper Cluster with a Custom Image and PV/PVC

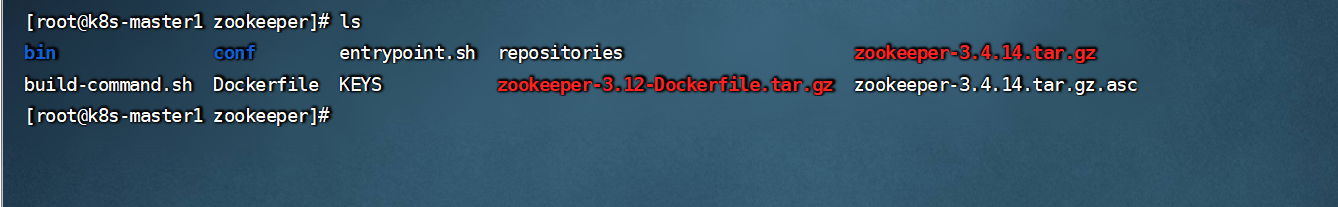

1 Build the ZooKeeper image

Dockerfile contents:

FROM harbor.magedu.com/magedu/slim_java:8

ENV ZK_VERSION 3.4.14

ADD repositories /etc/apk/repositories

# Download Zookeeper

COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz

COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc

COPY KEYS /tmp/KEYS

RUN apk add --no-cache --virtual .build-deps \

ca-certificates \

gnupg \

tar \

wget && \

#

# Install dependencies

apk add --no-cache \

bash && \

#

#

# Verify the signature

export GNUPGHOME="$(mktemp -d)" && \

gpg -q --batch --import /tmp/KEYS && \

gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \

#

# Set up directories

#

mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \

#

# Install

tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \

#

# Slim down

cd /zookeeper && \

cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \

rm -rf \

*.txt \

*.xml \

bin/README.txt \

bin/*.cmd \

conf/* \

contrib \

dist-maven \

docs \

lib/*.txt \

lib/cobertura \

lib/jdiff \

recipes \

src \

zookeeper-*.asc \

zookeeper-*.md5 \

zookeeper-*.sha1 && \

#

# Clean up

apk del .build-deps && \

rm -rf /tmp/* "$GNUPGHOME"

COPY conf /zookeeper/conf/

COPY bin/zkReady.sh /zookeeper/bin/

COPY entrypoint.sh /

ENV PATH=/zookeeper/bin:${PATH} \

ZOO_LOG_DIR=/zookeeper/log \

ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \

JMXPORT=9010

ENTRYPOINT [ "/entrypoint.sh" ]

CMD [ "zkServer.sh", "start-foreground" ]

EXPOSE 2181 2888 3888 9010

build-command.sh builds and pushes the image:

#!/bin/bash

TAG=$1

docker build -t harbor.magedu.com/magedu/zookeeper:${TAG} .

sleep 1

docker push harbor.magedu.com/magedu/zookeeper:${TAG}

sh build-command.sh v3
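The build script above takes the tag from `$1` and does not check that it was supplied. As a minimal sketch (the helper name `image_ref` is hypothetical and not part of the original script), the tag could be validated before the image reference is assembled, since a reference that ends in a bare colon would most likely be rejected by `docker build`:

```shell
#!/bin/sh
# Hypothetical helper: assemble the image reference and refuse an empty tag.
image_ref() {
  tag="$1"
  if [ -z "$tag" ]; then
    echo "usage: build-command.sh <tag>" >&2
    return 1
  fi
  echo "harbor.magedu.com/magedu/zookeeper:${tag}"
}

# Example: print the reference that build-command.sh would build and push.
image_ref v3
```

In the real script the result could be stored in a variable and passed to both `docker build -t` and `docker push`, so the two commands cannot drift apart.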

2 Create the PVs and PVCs

Prerequisite: create three directories on the NFS server first.
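The three directories correspond to the `path` values used in pv.yaml. A minimal sketch of creating them, assuming a hypothetical helper `make_zk_dirs` that takes the export root as its argument (the demo below runs against a temporary directory so it is safe to try anywhere):

```shell
#!/bin/sh
# Hypothetical helper: create the three ZooKeeper data directories under a root.
make_zk_dirs() {
  base="$1"
  for n in 1 2 3; do
    mkdir -p "${base}/zookeeper-datadir-${n}" || return 1
  done
}

# Demo against a throwaway directory instead of the real NFS export.
demo_root="$(mktemp -d)"
make_zk_dirs "$demo_root"
ls "$demo_root"
```

On the NFS server itself the call would be `make_zk_dirs /data/k8sdata/magedu`, matching the paths in the PV definitions.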

Contents of pv.yaml:

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-datadir-pv-1

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 172.31.7.122

path: /data/k8sdata/magedu/zookeeper-datadir-1

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-datadir-pv-2

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 172.31.7.122

path: /data/k8sdata/magedu/zookeeper-datadir-2

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-datadir-pv-3

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 172.31.7.122

path: /data/k8sdata/magedu/zookeeper-datadir-3

Contents of pvc.yaml:

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-datadir-pvc-1

namespace: magedu

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-datadir-pv-1

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-datadir-pvc-2

namespace: magedu

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-datadir-pv-2

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-datadir-pvc-3

namespace: magedu

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-datadir-pv-3

resources:

requests:

storage: 10Gi

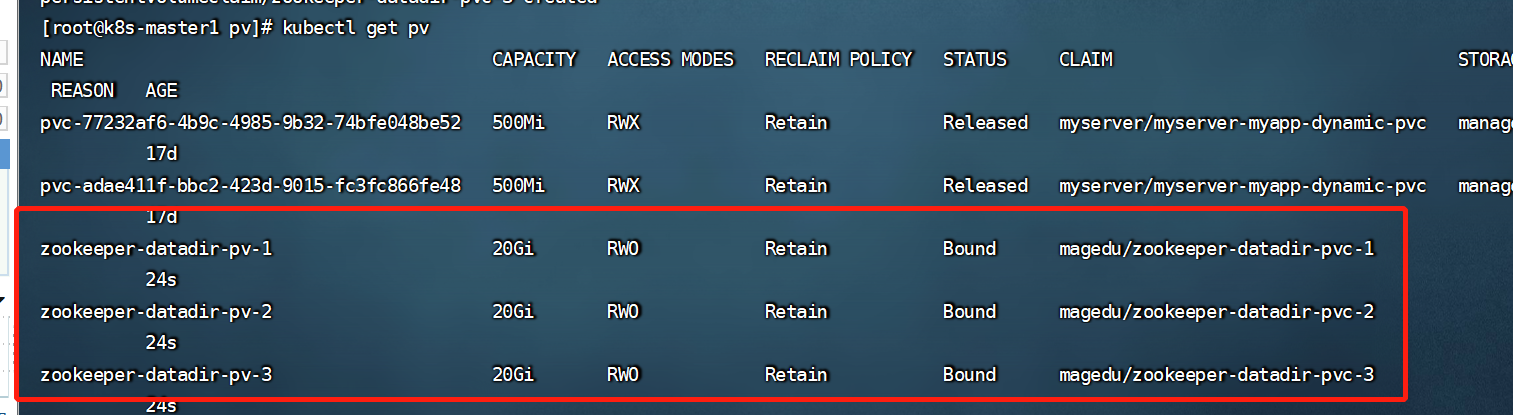

View the PVs

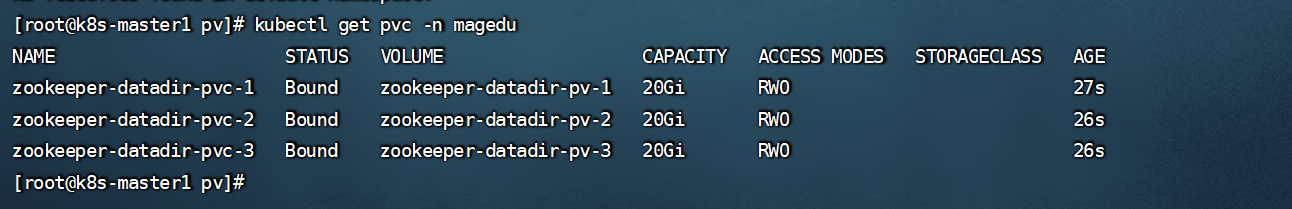

View the PVCs

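3 Deploy ZooKeeper on k8s

YAML file contents:

3.1 cat zookeeper.yaml

This manifest mainly defines the server-id label of each ZooKeeper node, one shared client Service, three per-server NodePort Services, and the three Deployments.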

apiVersion: v1

kind: Service

metadata:

name: zookeeper

namespace: magedu

spec:

ports:

- name: client

port: 2181

selector:

app: zookeeper

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper1

namespace: magedu

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32181

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "1"

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper2

namespace: magedu

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32182

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "2"

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper3

namespace: magedu

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32183

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "3"

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper1

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "1"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: harbor.magedu.com/magedu/zookeeper:v3

imagePullPolicy: Always

env:

- name: MYID

value: "1"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-datadir-pvc-1

volumes:

- name: zookeeper-datadir-pvc-1

persistentVolumeClaim:

claimName: zookeeper-datadir-pvc-1

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper2

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "2"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: harbor.magedu.com/magedu/zookeeper:v3

imagePullPolicy: Always

env:

- name: MYID

value: "2"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-datadir-pvc-2

volumes:

- name: zookeeper-datadir-pvc-2

persistentVolumeClaim:

claimName: zookeeper-datadir-pvc-2

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper3

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "3"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: harbor.magedu.com/magedu/zookeeper:v3

imagePullPolicy: Always

env:

- name: MYID

value: "3"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-datadir-pvc-3

volumes:

- name: zookeeper-datadir-pvc-3

persistentVolumeClaim:

claimName: zookeeper-datadir-pvc-3

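3.2 cat entrypoint.sh

Together with the YAML file above, this script writes each node's server id to its myid file and appends every server entry, including the port numbers, to zoo.cfg.

$MYID and $SERVERS are both defined in the YAML file.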

#!/bin/bash

echo ${MYID:-1} > /zookeeper/data/myid

if [ -n "$SERVERS" ]; then

IFS=\, read -a servers <<<"$SERVERS"

for i in "${!servers[@]}"; do

printf "\nserver.%i=%s:2888:3888" "$((1 + $i))" "${servers[$i]}" >> /zookeeper/conf/zoo.cfg

done

fi

cd /zookeeper

exec "$@"
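To make the effect of the loop in entrypoint.sh concrete, here is a minimal sketch that generates the same `server.N` lines for the `SERVERS` value used in the Deployments. It is written in plain POSIX shell rather than with bash arrays, and it writes to a temporary file that stands in for /zookeeper/conf/zoo.cfg (an assumption made so the sketch can run outside the container):

```shell
#!/bin/sh
# Sketch: reproduce the server.N lines that entrypoint.sh appends to zoo.cfg.
SERVERS="zookeeper1,zookeeper2,zookeeper3"   # same value as in the Deployments
cfg="$(mktemp)"                              # stand-in for zoo.cfg (assumption)

i=1
old_ifs=$IFS
IFS=,                                        # split SERVERS on commas
for host in $SERVERS; do
  printf 'server.%d=%s:2888:3888\n' "$i" "$host" >> "$cfg"
  i=$((i + 1))
done
IFS=$old_ifs

cat "$cfg"
```

The file ends up with server.1=zookeeper1:2888:3888, server.2=zookeeper2:2888:3888, and server.3=zookeeper3:2888:3888. The host names are the per-server Service names, which is why each ZooKeeper node can reach its peers through cluster DNS.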

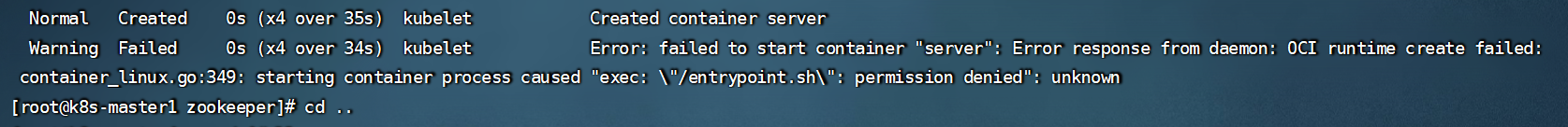

Error encountered

The cause is that entrypoint.sh has no execute permission. Add the execute permission and rebuild the image.
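Because COPY keeps the file mode from the build context, the permission has to be correct before `docker build` runs. A minimal sketch of a pre-build check, assuming a hypothetical helper named `ensure_executable` (the demo uses a temporary file in place of entrypoint.sh):

```shell
#!/bin/sh
# Hypothetical helper: add the execute bit to any listed file that lacks it.
ensure_executable() {
  for f in "$@"; do
    [ -x "$f" ] || chmod +x "$f" || return 1
  done
}

# Demo on a temporary file; mktemp creates it without the execute bit.
demo="$(mktemp)"
ensure_executable "$demo"
ls -l "$demo"
```

In the build directory this would be called as `ensure_executable entrypoint.sh bin/zkReady.sh` before running build-command.sh.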

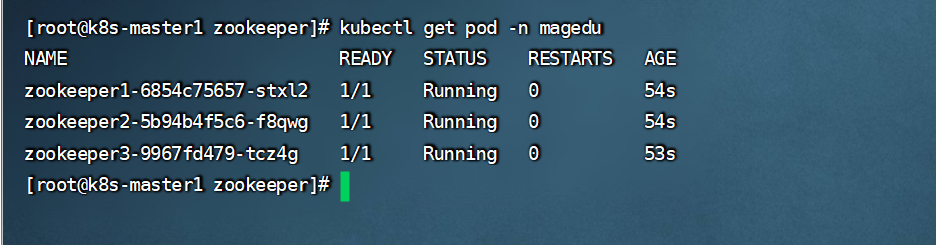

3.3 View the ZooKeeper pods

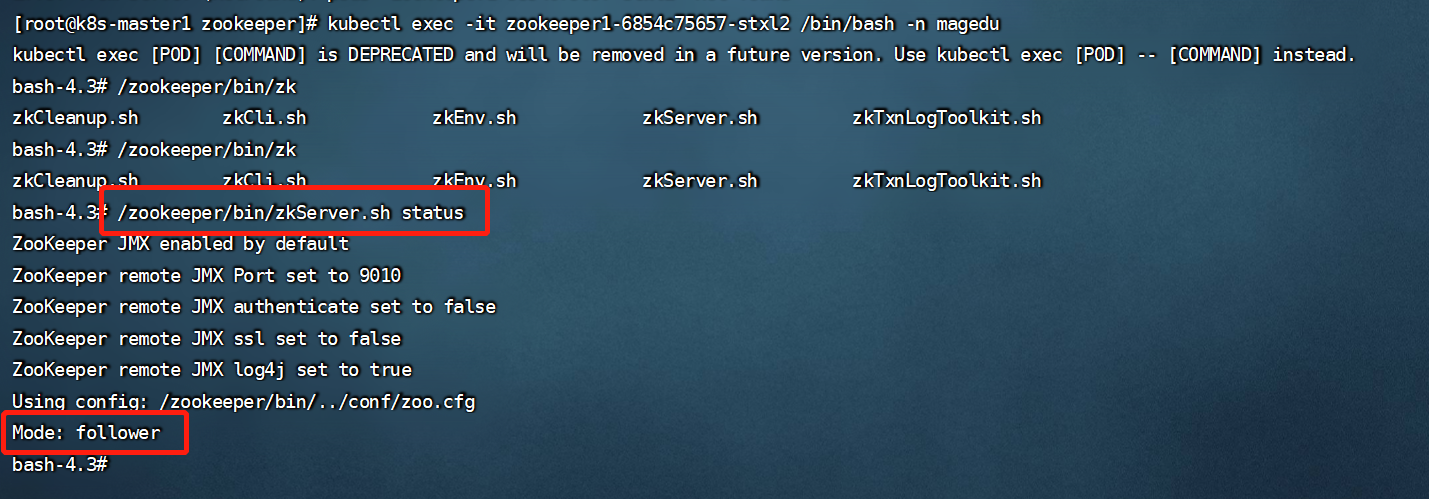

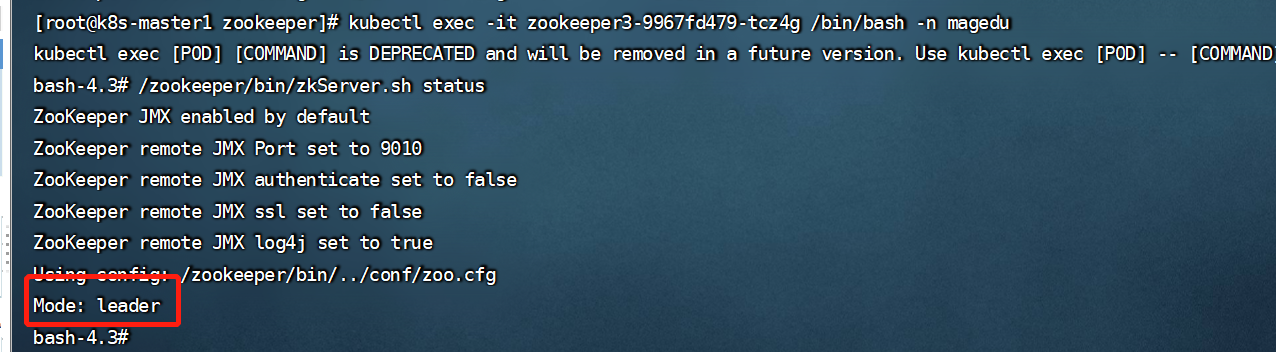

3.4 Check the leader/follower status inside ZooKeeper

/zookeeper/bin/zkServer.sh status
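Checking three pods by hand is tedious, so the role can be pulled out of the status output with a small filter. The sketch below assumes the usual `Mode: leader` / `Mode: follower` line printed by zkServer.sh status; the function name `zk_mode` is hypothetical:

```shell
#!/bin/sh
# Hypothetical filter: print only the role from `zkServer.sh status` output.
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Demo with a status line of the expected shape.
printf 'Mode: follower\n' | zk_mode

# Against the cluster it could be used like this (not run here):
#   for pod in $(kubectl get pods -n magedu -l app=zookeeper -o name); do
#     kubectl exec -n magedu "$pod" -- /zookeeper/bin/zkServer.sh status 2>/dev/null | zk_mode
#   done
```

A healthy three-node ensemble should report exactly one leader and two followers.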

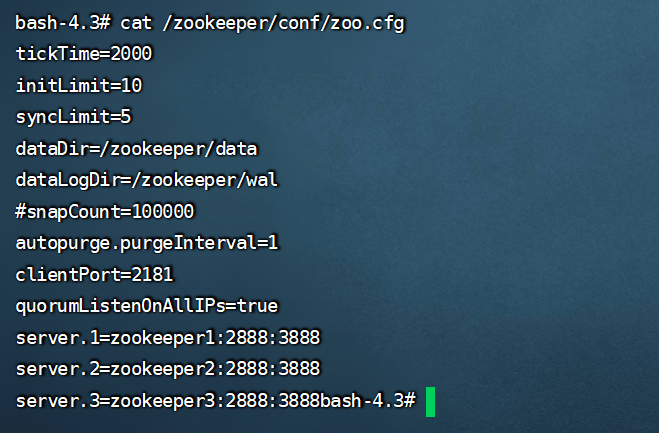

3.5 View their configuration files

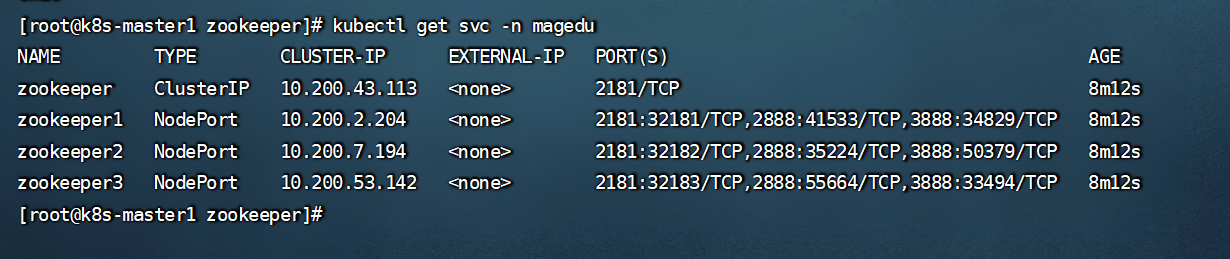

3.6 View the Services

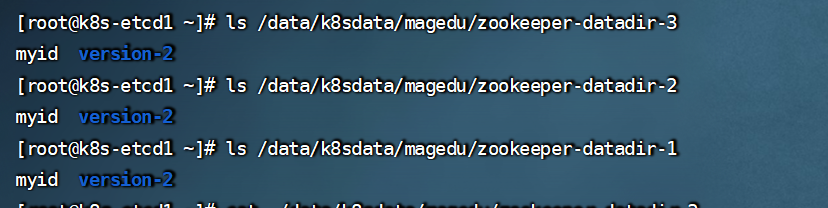

3.7 View the storage directories

II Running Standalone Redis and Redis Cluster on Kubernetes

2.1 Deploy standalone Redis

2.1.1 Dockerfile

#Redis Image

FROM harbor.magedu.com/baseimages/magedu-centos-base:7.9.2009

MAINTAINER zhangshijie "zhangshijie@magedu.net"

ADD redis-4.0.14.tar.gz /usr/local/src

RUN ln -sv /usr/local/src/redis-4.0.14 /usr/local/redis && cd /usr/local/redis && make && cp src/redis-cli /usr/sbin/ && cp src/redis-server /usr/sbin/ && mkdir -pv /data/redis-data

ADD redis.conf /usr/local/redis/redis.conf

ADD run_redis.sh /usr/local/redis/run_redis.sh

EXPOSE 6379

CMD ["/usr/local/redis/run_redis.sh"]

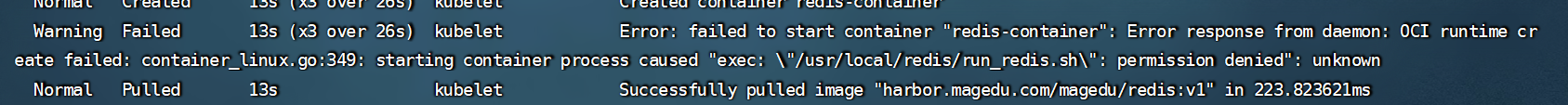

Note: before building the image, add the execute permission to run_redis.sh; otherwise the pod reports an error.

Build and push the image

[root@k8s-master1 redis]# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.magedu.com/magedu/redis:${TAG} .

sleep 3

docker push harbor.magedu.com/magedu/redis:${TAG}

Contents of redis.conf:

[root@k8s-master1 redis]# grep -v ^# redis.conf |grep -v "^$"

bind 0.0.0.0

protected-mode yes

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize yes

supervised no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile ""

databases 16

always-show-logo yes

save 900 1

save 5 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error no

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir /data/redis-data

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

requirepass 123456

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

slave-lazy-flush no

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble no

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

aof-rewrite-incremental-fsync yes

2.1.2 Create the PV and PVC

Note: change the NFS address and directory to match your own environment.

pv.yaml

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-datadir-pv-1

namespace: magedu

spec:

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

nfs:

path: /data/k8sdata/magedu/redis-datadir-1

server: 172.31.7.121

pvc.yaml

[root@k8s-master1 pv]# cat redis-persistentvolumeclaim.yaml

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: redis-datadir-pvc-1

namespace: magedu

spec:

volumeName: redis-datadir-pv-1

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

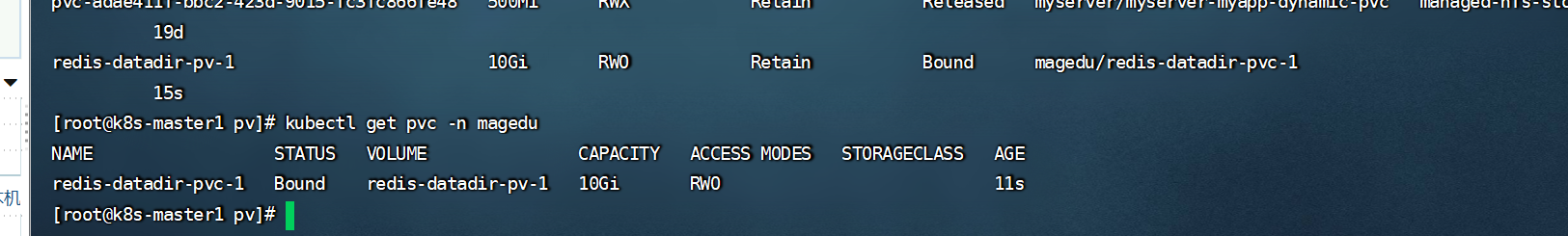

View the PV and PVC

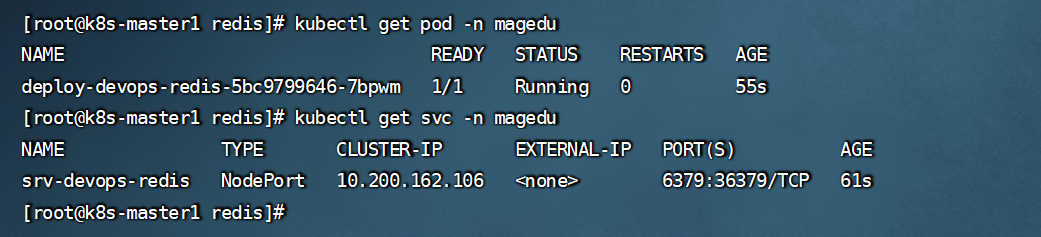

2.1.3 Create the Redis pod

redis.yaml

[root@k8s-master1 redis]# cat redis.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

labels:

app: devops-redis

name: deploy-devops-redis

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: devops-redis

template:

metadata:

labels:

app: devops-redis

spec:

containers:

- name: redis-container

image: harbor.magedu.com/magedu/redis:v1

imagePullPolicy: Always

volumeMounts:

- mountPath: "/data/redis-data/"

name: redis-datadir

volumes:

- name: redis-datadir

persistentVolumeClaim:

claimName: redis-datadir-pvc-1

---

kind: Service

apiVersion: v1

metadata:

labels:

app: devops-redis

name: srv-devops-redis

namespace: magedu

spec:

type: NodePort

ports:

- name: http

port: 6379

targetPort: 6379

nodePort: 36379

selector:

app: devops-redis

sessionAffinity: ClientIP

sessionAffinityConfig:

clientIP:

timeoutSeconds: 10800
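One detail worth checking in the Service above: it requests nodePort 36379, while the default kube-apiserver `--service-node-port-range` is 30000-32767. The manifest is therefore only accepted on a cluster whose range has been widened. A minimal sketch of the range check (the function name is hypothetical):

```shell
#!/bin/sh
# Hypothetical check: is a port inside the default NodePort range 30000-32767?
nodeport_in_default_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

for p in 32181 36379; do
  if nodeport_in_default_range "$p"; then
    echo "$p: inside the default range"
  else
    echo "$p: outside the default range"
  fi
done
```

If the API server rejects the Service, either pick a port inside the configured range or extend the range on the API server.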

2.2 Create the cluster environment

2.2.1 Create the storage directories on the NFS server

mkdir -pv /data/k8sdata/magedu/{redis0,redis1,redis2,redis3,redis4,redis5}

2.2.2 Create the PVs

[root@k8s-master1 redis-cluster]# cat pv/redis-cluster-pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv0

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 172.31.7.121

path: /data/k8sdata/magedu/redis0

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv1

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 172.31.7.121

path: /data/k8sdata/magedu/redis1

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv2

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 172.31.7.121

path: /data/k8sdata/magedu/redis2

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv3

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 172.31.7.121

path: /data/k8sdata/magedu/redis3

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv4

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 172.31.7.121

path: /data/k8sdata/magedu/redis4

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv5

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 172.31.7.121

path: /data/k8sdata/magedu/redis5

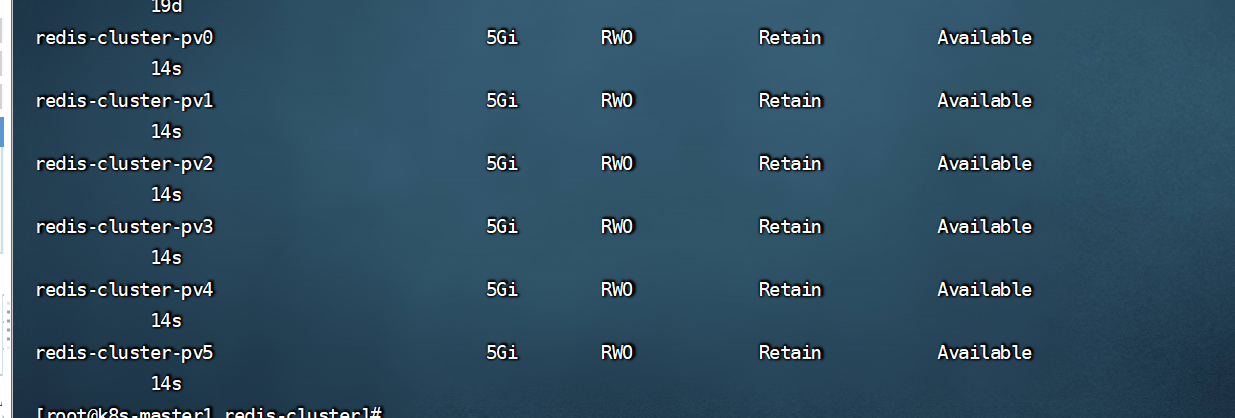

View the PVs

2.2.3 Create the ConfigMap

kubectl create configmap redis-conf --from-file=redis.conf -n magedu

View it:

[root@k8s-master1 redis-cluster]# kubectl get configmaps -n magedu

NAME DATA AGE

kube-root-ca.crt 1 15d

redis-conf 1 23s

View the ConfigMap contents:

[root@k8s-master1 redis-cluster]# kubectl describe configmaps redis-conf -n magedu

Name: redis-conf

Namespace: magedu

Labels: <none>

Annotations: <none>

Data

====

redis.conf:

----

appendonly yes

cluster-enabled yes

cluster-config-file /var/lib/redis/nodes.conf

cluster-node-timeout 5000

dir /var/lib/redis

port 6379

BinaryData

====

Events: <none>
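The `kubectl create configmap` command expects a redis.conf file in the current directory. Based on the Data section shown by `kubectl describe`, a minimal sketch of producing that file follows; the `CONF_FILE` override is an assumption added only so the output path can be changed:

```shell
#!/bin/sh
# Sketch: write the cluster-mode redis.conf shown in the ConfigMap above.
conf_file="${CONF_FILE:-redis.conf}"

cat > "$conf_file" <<'EOF'
appendonly yes
cluster-enabled yes
cluster-config-file /var/lib/redis/nodes.conf
cluster-node-timeout 5000
dir /var/lib/redis
port 6379
EOF

cat "$conf_file"

# Then create the ConfigMap from it (not run here):
#   kubectl create configmap redis-conf --from-file=redis.conf -n magedu
```

Note that this configuration sets no `requirepass`, so a password option on the client side only matters if one is added here.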

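2.2.4 Deploy redis-cluster

Right after deployment the six pods are still independent standalone instances. The PVCs are created automatically from the volumeClaimTemplates in the YAML file.

Contents of redis.yaml: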

apiVersion: v1

kind: Service

metadata:

name: redis

namespace: magedu

labels:

app: redis

spec:

selector:

app: redis

appCluster: redis-cluster

ports:

- name: redis

port: 6379

clusterIP: None

---

apiVersion: v1

kind: Service

metadata:

name: redis-access

namespace: magedu

labels:

app: redis

spec:

selector:

app: redis

appCluster: redis-cluster

ports:

- name: redis-access

protocol: TCP

port: 6379

targetPort: 6379

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: redis

namespace: magedu

spec:

serviceName: redis

replicas: 6

selector:

matchLabels:

app: redis

appCluster: redis-cluster

template:

metadata:

labels:

app: redis

appCluster: redis-cluster

spec:

terminationGracePeriodSeconds: 20

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: app

operator: In

values:

- redis

topologyKey: kubernetes.io/hostname

containers:

- name: redis

image: redis:4.0.14

command:

- "redis-server"

args:

- "/etc/redis/redis.conf"

- "--protected-mode"

- "no"

resources:

requests:

cpu: "100m"

memory: "100Mi"

ports:

- containerPort: 6379

name: redis

protocol: TCP

- containerPort: 16379

name: cluster

protocol: TCP

volumeMounts:

- name: conf

mountPath: /etc/redis

- name: data

mountPath: /var/lib/redis

volumes:

- name: conf

configMap:

name: redis-conf

items:

- key: redis.conf

path: redis.conf

volumeClaimTemplates:

- metadata:

name: data

namespace: magedu

spec:

accessModes: [ "ReadWriteOnce" ]

resources:

requests:

storage: 5Gi

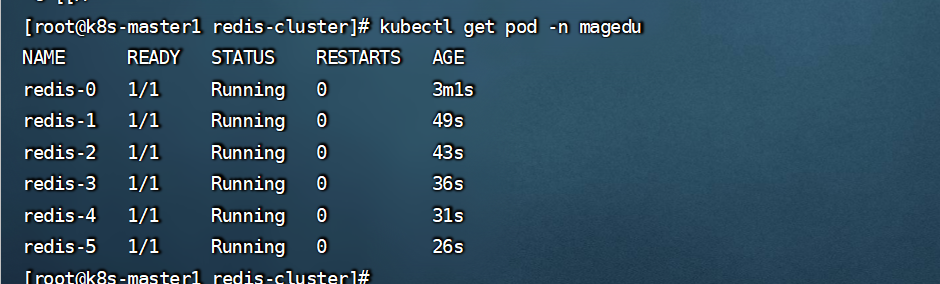

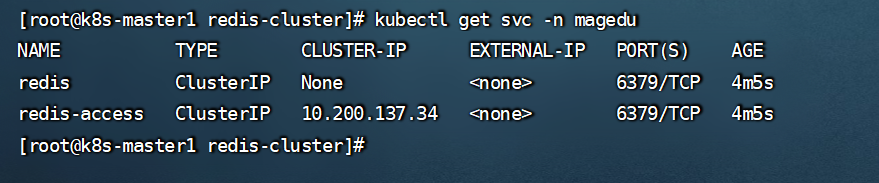

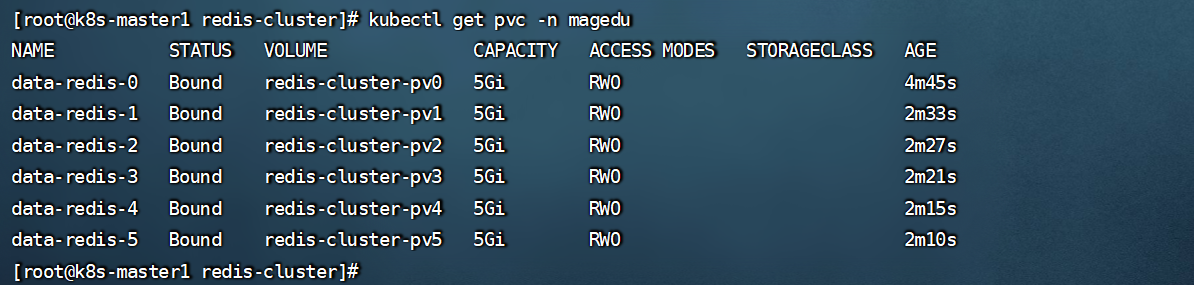

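View the PVCs

2.2.5 Initialize the cluster

Initialization only needs to be done once. Redis 4 and earlier use the redis-trib tool for initialization; starting with Redis 5, redis-cli is used.

Here we temporarily create an Ubuntu container to initialize the Redis cluster.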

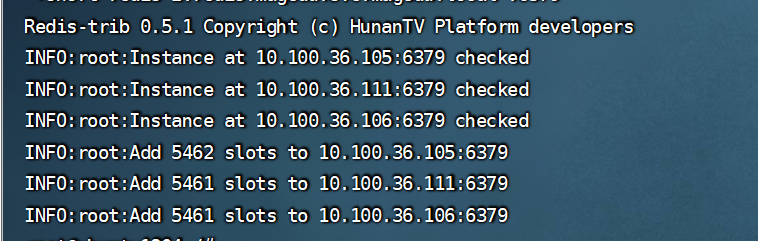

2.2.5.1 Initialization for Redis 4

kubectl run -it ubuntu1804 --image=ubuntu:18.04 --restart=Never -n magedu bash

root@ubuntu1804:/# apt update

root@ubuntu1804:/# apt install python2.7 python-pip redis-tools dnsutils iputils-ping net-tools -y

root@ubuntu1804:/# pip install --upgrade pip

root@ubuntu1804:/# pip install redis-trib==0.5.1

创建集群

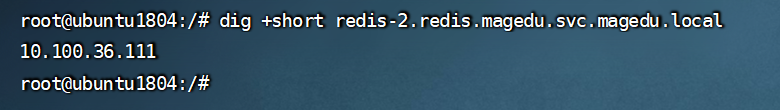

这里dig 解析域名对应的Ip,,redis0,redis1,redis2为主节点

root@ubuntu1804:/# redis-trib.py create \

`dig +short redis-0.redis.magedu.svc.magedu.local`:6379 \

`dig +short redis-1.redis.magedu.svc.magedu.local`:6379 \

`dig +short redis-2.redis.magedu.svc.magedu.local`:6379

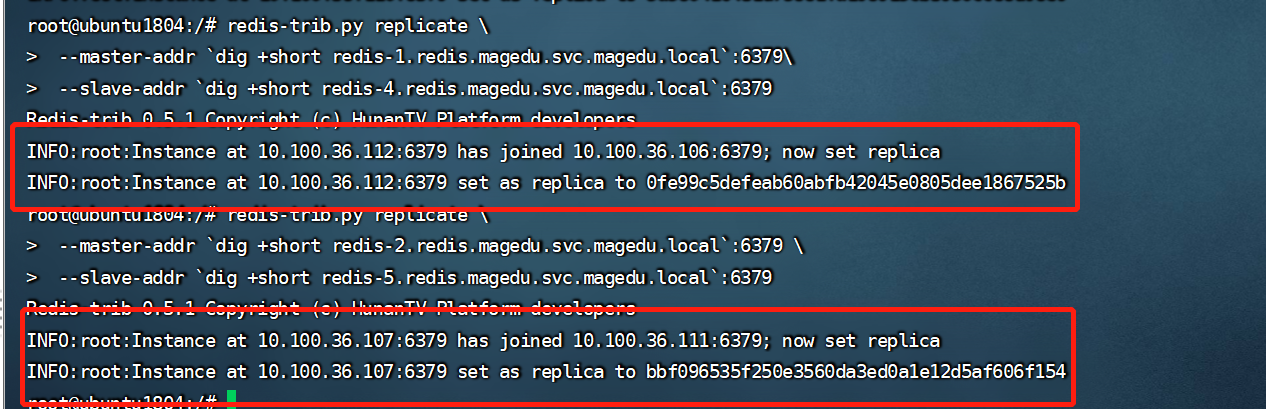

Attach redis-3 to redis-0 as its replica:

root@ubuntu1804:/# redis-trib.py replicate \

--master-addr `dig +short redis-0.redis.magedu.svc.magedu.local`:6379 \

--slave-addr `dig +short redis-3.redis.magedu.svc.magedu.local`:6379

Attach redis-4 to redis-1 as its replica:

root@ubuntu1804:/# redis-trib.py replicate \

--master-addr `dig +short redis-1.redis.magedu.svc.magedu.local`:6379 \

--slave-addr `dig +short redis-4.redis.magedu.svc.magedu.local`:6379

Attach redis-5 to redis-2 as its replica:

root@ubuntu1804:/# redis-trib.py replicate \

--master-addr `dig +short redis-2.redis.magedu.svc.magedu.local`:6379 \

--slave-addr `dig +short redis-5.redis.magedu.svc.magedu.local`:6379

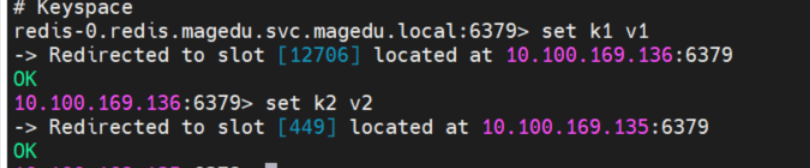

Result after the replicas have joined their masters
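The three replicate commands follow one pattern: with three masters, pod N is paired with pod N+3. A minimal sketch that prints those master/replica pairs as StatefulSet DNS names (the helper names `pod_fqdn` and `replica_pairs` are hypothetical; the domain suffix is the one used in the commands above):

```shell
#!/bin/sh
# Hypothetical helpers: list master/replica pairs for the redis StatefulSet.
pod_fqdn() {
  echo "redis-$1.redis.magedu.svc.magedu.local"
}

replica_pairs() {
  masters="$1"
  i=0
  while [ "$i" -lt "$masters" ]; do
    # master index i is paired with replica index i + masters
    echo "$(pod_fqdn "$i") $(pod_fqdn $((i + masters)))"
    i=$((i + 1))
  done
}

replica_pairs 3
```

Each output line could then feed one `redis-trib.py replicate` call, with `dig +short` resolving the two names to pod IPs as in the commands above.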

2.2.5.2 Initialization for Redis 5:

kubectl exec -it -n magedu redis-0 -- bash

# yum -y install bind-utils

# redis-cli --cluster create \

`dig +short redis-0.redis.magedu.svc.magedu.local`:6379 \

`dig +short redis-1.redis.magedu.svc.magedu.local`:6379 \

`dig +short redis-2.redis.magedu.svc.magedu.local`:6379 \

`dig +short redis-3.redis.magedu.svc.magedu.local`:6379 \

`dig +short redis-4.redis.magedu.svc.magedu.local`:6379 \

`dig +short redis-5.redis.magedu.svc.magedu.local`:6379 \

--cluster-replicas 1 \

-a 123456

#######################

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 10.200.166.242:6379 to 10.200.150.66:6379

Adding replica 10.200.150.69:6379 to 10.200.166.232:6379

Adding replica 10.200.150.68:6379 to 10.200.37.152:6379

M: 25a0eaf7b0bc64c78649e24c2e008e64bc979bf7 10.200.150.66:6379

slots:[0-5460] (5461 slots) master

M: 8a71876eb50e553cb79480f9fefcb505fcf251f5 10.200.166.232:6379

slots:[5461-10922] (5462 slots) master

M: cab5f390c61b2faf613c5eff533772dfb7ea6088 10.200.37.152:6379

slots:[10923-16383] (5461 slots) master

S: 78a248c46fb503bd93664374dbf3e4943043a2cf 10.200.150.68:6379

replicates cab5f390c61b2faf613c5eff533772dfb7ea6088

S: 561f0063c5d72b81d9ac1c0e7f2af82aaa746efe 10.200.166.242:6379

replicates 25a0eaf7b0bc64c78649e24c2e008e64bc979bf7

S: 0b67723f3657467d7db4f525d9124b377ede8906 10.200.150.69:6379

replicates 8a71876eb50e553cb79480f9fefcb505fcf251f5

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 10.200.150.66:6379)

M: 25a0eaf7b0bc64c78649e24c2e008e64bc979bf7 10.200.150.66:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 8a71876eb50e553cb79480f9fefcb505fcf251f5 10.200.166.232:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 0b67723f3657467d7db4f525d9124b377ede8906 10.200.150.69:6379

slots: (0 slots) slave

replicates 8a71876eb50e553cb79480f9fefcb505fcf251f5

S: 561f0063c5d72b81d9ac1c0e7f2af82aaa746efe 10.200.166.242:6379

slots: (0 slots) slave

replicates 25a0eaf7b0bc64c78649e24c2e008e64bc979bf7

M: cab5f390c61b2faf613c5eff533772dfb7ea6088 10.200.37.152:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 78a248c46fb503bd93664374dbf3e4943043a2cf 10.200.150.68:6379

slots: (0 slots) slave

replicates cab5f390c61b2faf613c5eff533772dfb7ea6088

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

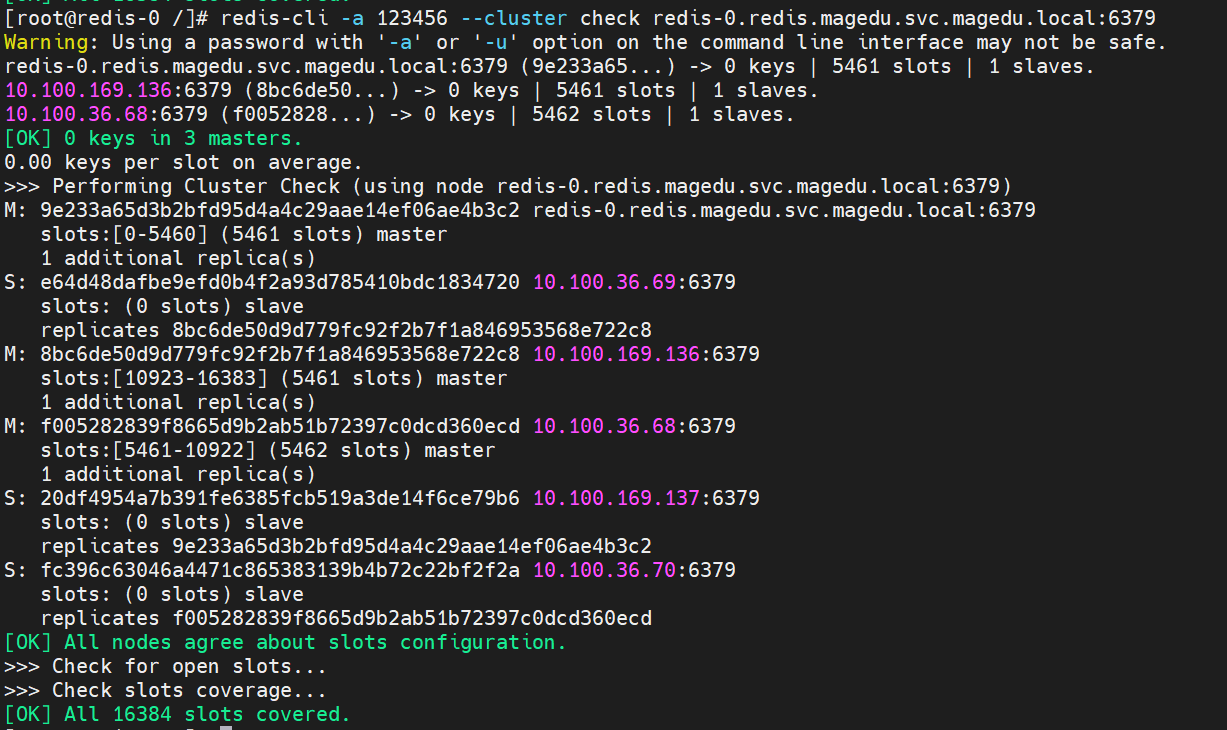

######### Check the cluster

redis-cli -a 123456 --cluster check redis-0.redis.magedu.svc.magedu.local:6379

Check the cluster

#### Log in

redis-cli -c -h redis-0.redis.magedu.svc.magedu.local -p 6379 -a 123456

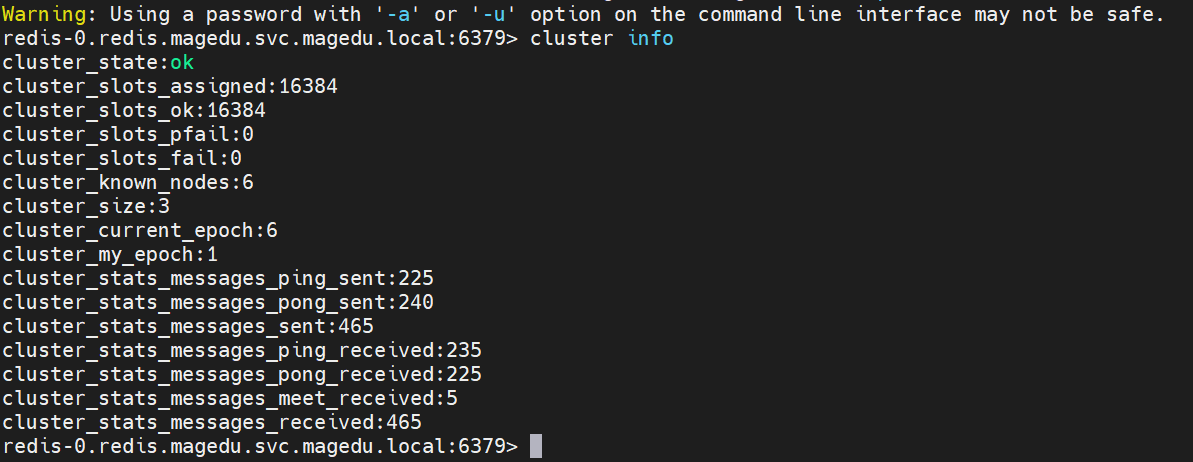

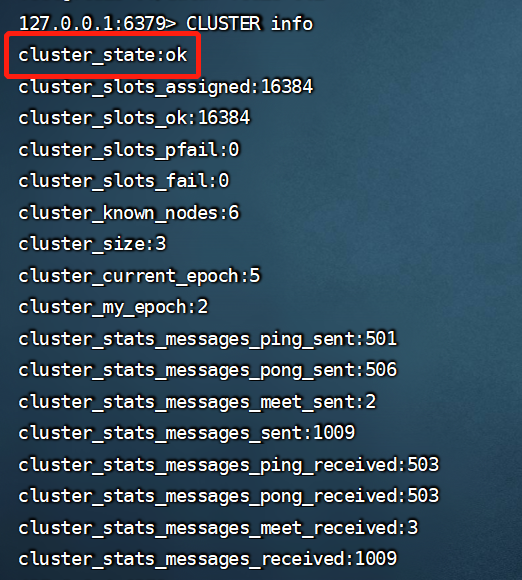

2.2.6 Verify the cluster

[root@k8s-master1 redis]# kubectl exec -it redis-0 -n magedu -- bash

root@redis-0:/data# redis-cli

127.0.0.1:6379> CLUSTER info

The cluster state is shown below; ok means the cluster is healthy.

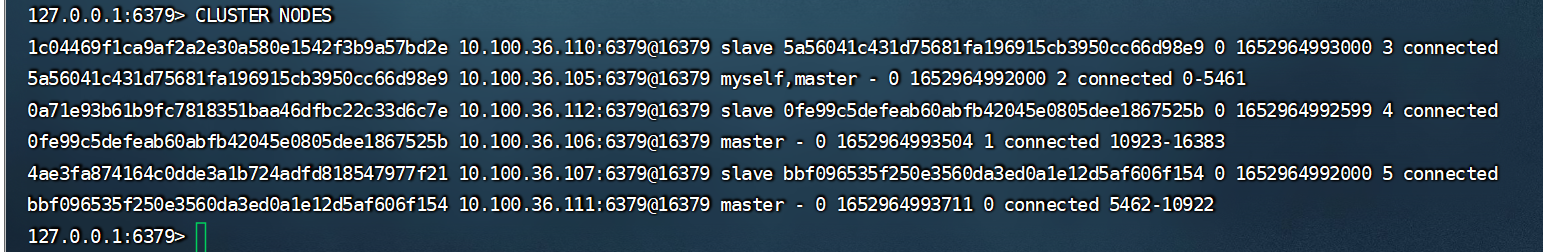

View the cluster nodes

CLUSTER NODES
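For scripted checks, the `cluster_state` field can be extracted from the CLUSTER INFO reply instead of reading it by eye. A minimal sketch, assuming the reply is piped in on stdin; the function name `cluster_state` is hypothetical, and the carriage returns are stripped because the reply lines may end in CRLF:

```shell
#!/bin/sh
# Hypothetical filter: print the value of cluster_state from CLUSTER INFO output.
cluster_state() {
  tr -d '\r' | sed -n 's/^cluster_state://p'
}

# Demo with a reply fragment of the expected shape.
printf 'cluster_state:ok\r\ncluster_slots_assigned:16384\r\n' | cluster_state

# Against the cluster it could be used like this (not run here):
#   kubectl exec -n magedu redis-0 -- redis-cli cluster info | cluster_state
```

Anything other than `ok` indicates that some slots are unassigned or that nodes have not finished joining.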

2.2.7 How to access the Redis cluster from outside the k8s cluster

https://mp.weixin.qq.com/s/WqzaNfXBXhs20LLqTtGY8Q

This requires the proxy provided by the Redis project, redis-cluster-proxy.

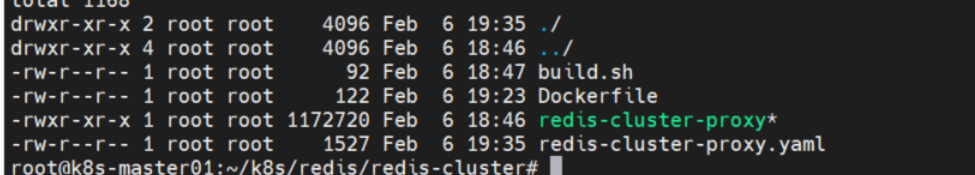

2.2.7.1 Compile redis-proxy

# Note: the image must be built on the same OS that was used for compiling

git clone https://github.com/RedisLabs/redis-cluster-proxy

# cd redis-cluster-proxy/

# make PREFIX=/usr/local/redis_cluster_proxy install

This produces a binary:

/usr/local/redis_cluster_proxy/bin/redis-cluster-proxy

Copy this binary into the directory that contains the Dockerfile.

#############

cat build.sh

#!/bin/bash

tag="huningfei/redis-cluster-proxy:v1"

docker build . -t $tag

docker push $tag

########### Dockerfile

cat Dockerfile

FROM ubuntu:20.04

WORKDIR /data

ADD redis-cluster-proxy /usr/local/bin/

EXPOSE 7777

############################### Create the pod

cat redis-cluster-proxy.yaml

apiVersion: v1

kind: ConfigMap

metadata:

name: redis-proxy

namespace: magedu

data:

proxy.conf: |

cluster redis:6379 # address of the Redis Cluster; this must be correct

bind 0.0.0.0

port 7777 # port exposed by redis-cluster-proxy

threads 8 # number of threads

daemonize no

enable-cross-slot yes

auth 123456 # Redis Cluster authentication password

log-level error

---

apiVersion: v1

kind: Service

metadata:

name: redis-proxy

namespace: magedu

spec:

type: NodePort # expose the service outside K8S

ports:

- name: redis-proxy

nodePort: 31777 # externally exposed port

port: 7777

protocol: TCP

targetPort: 7777

selector:

app: redis-proxy

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: redis-proxy

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: redis-proxy

template:

metadata:

labels:

app: redis-proxy

spec:

containers:

- name: redis-proxy

image: huningfei/redis-cluster-proxy:v1

imagePullPolicy: Always

command: ["redis-cluster-proxy"]

args:

- -c

- /data/proxy.conf # startup configuration file

ports:

- name: redis-7777

containerPort: 7777

protocol: TCP

volumeMounts:

- name: redis-proxy-conf

mountPath: /data/

volumes: # mount the proxy configuration file

- name: redis-proxy-conf

configMap:

name: redis-proxy

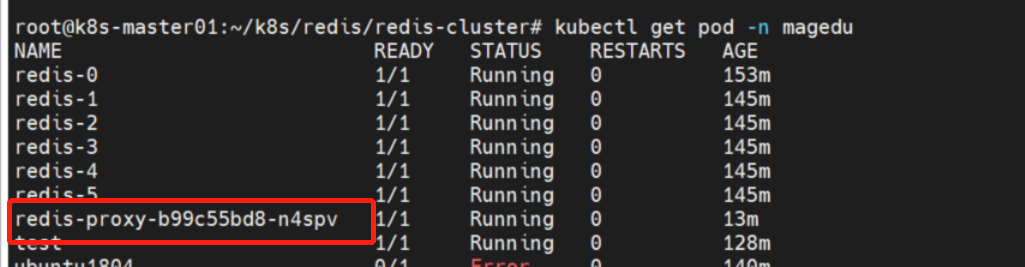

2.2.7.2 View the redis-proxy pod

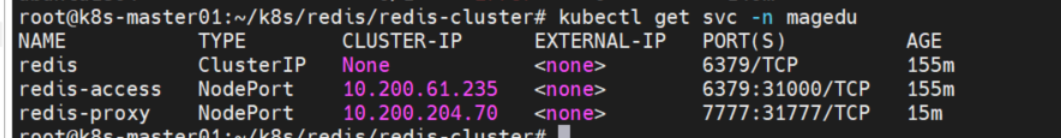

2.2.7.3 Access the redis-proxy Service from outside

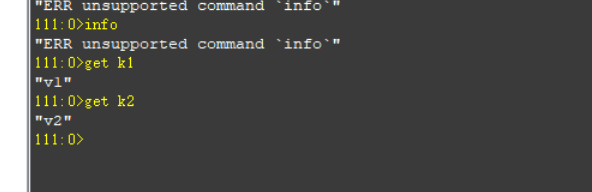

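Log in to Redis and set a key

2.2.7.4 External connection test

View the key that was just set

III Kubernetes Case Study: Dynamic Microservice Registration and Discovery with ZooKeeper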

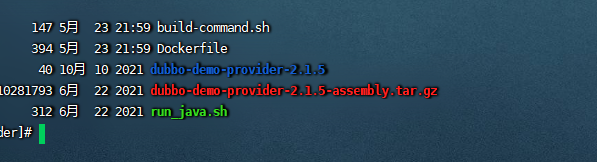

3.1 Provider image (producer)

Dockerfile contents:

[root@k8s-master1 provider]# cat Dockerfile

#Dubbo provider

FROM harbor.magedu.com/pub-images/jdk-base:v8.212

MAINTAINER zhangshijie "zhangshijie@magedu.net"

RUN yum install file nc -y

RUN useradd nginx -u 2055

RUN mkdir -p /apps/dubbo/provider

ADD dubbo-demo-provider-2.1.5/ /apps/dubbo/provider

ADD run_java.sh /apps/dubbo/provider/bin

RUN chown nginx.nginx /apps -R

RUN chmod a+x /apps/dubbo/provider/bin/*.sh

CMD ["/apps/dubbo/provider/bin/run_java.sh"]

# Before building the image, also change the ZooKeeper address in the configuration

vim dubbo-demo-provider-2.1.5/conf/dubbo.properties

dubbo.registry.address=zookeeper://zookeeper1.magedu.svc.magedu.local:2181 | zookeeper://zookeeper2.magedu.svc.magedu.local:2181 | zookeeper://zookeeper3.magedu.svc.magedu.local:2181

Build and push

[root@k8s-master1 provider]# cat build-command.sh

#!/bin/bash

docker build -t harbor.magedu.com/magedu/dubbo-demo-provider:v1 .

sleep 3

docker push harbor.magedu.com/magedu/dubbo-demo-provider:v1

Startup script contents:

[root@k8s-master1 provider]# cat run_java.sh

#!/bin/bash

su - nginx -c "/apps/dubbo/provider/bin/start.sh"

tail -f /etc/hosts

3.2 Run the provider service

[root@k8s-master1 provider]# cat provider.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

labels:

app: magedu-provider

name: magedu-provider-deployment

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: magedu-provider

template:

metadata:

labels:

app: magedu-provider

spec:

containers:

- name: magedu-provider-container

image: harbor.magedu.com/magedu/dubbo-demo-provider:v1

#command: ["/apps/tomcat/bin/run_tomcat.sh"]

#imagePullPolicy: IfNotPresent

imagePullPolicy: Always

ports:

- containerPort: 20880

protocol: TCP

name: http

---

kind: Service

apiVersion: v1

metadata:

labels:

app: magedu-provider

name: magedu-provider-spec

namespace: magedu

spec:

type: NodePort

ports:

- name: http

port: 80

protocol: TCP

targetPort: 20880

#nodePort: 30001

selector:

app: magedu-provider

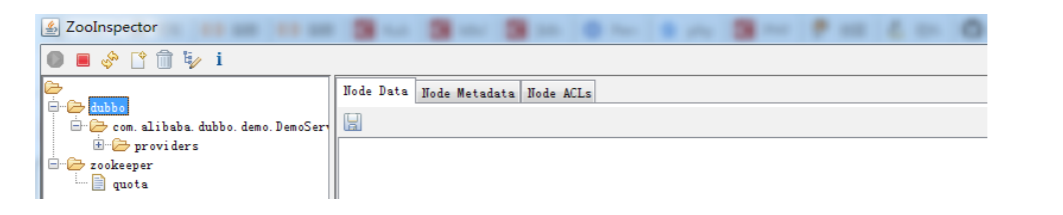

Verify with ZooInspector, a ZooKeeper client

https://issues.apache.org/jira/secure/attachment/12436620/ZooInspector.zip # download link

https://blog.csdn.net/succing/article/details/121802687 # usage guide

You can also enter the provider container and check the log:

/apps/dubbo/provider/logs/stdout.log

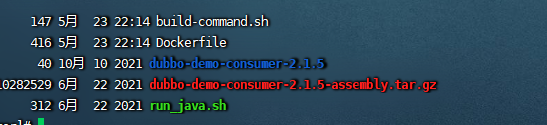

3.3 Prepare the consumer image (consumer)

Dockerfile contents:

[root@k8s-master1 consumer]# cat Dockerfile

#Dubbo consumer

FROM harbor.magedu.com/pub-images/jdk-base:v8.212

MAINTAINER zhangshijie "zhangshijie@magedu.net"

RUN useradd nginx -u 2055

RUN yum install file -y

RUN mkdir -p /apps/dubbo/consumer

ADD dubbo-demo-consumer-2.1.5 /apps/dubbo/consumer

ADD run_java.sh /apps/dubbo/consumer/bin

RUN chown nginx.nginx /apps -R

RUN chmod a+x /apps/dubbo/consumer/bin/*.sh

CMD ["/apps/dubbo/consumer/bin/run_java.sh"]

################## Change the ZooKeeper address

vim dubbo-demo-consumer-2.1.5/conf/dubbo.properties

########### Build and push

[root@k8s-master1 consumer]# cat build-command.sh

#!/bin/bash

docker build -t harbor.magedu.com/magedu/dubbo-demo-consumer:v1 .

sleep 3

docker push harbor.magedu.com/magedu/dubbo-demo-consumer:v1

####################### Startup script

[root@k8s-master1 consumer]# cat run_java.sh

#!/bin/bash

su - nginx -c "/apps/dubbo/consumer/bin/start.sh"

tail -f /etc/hosts

3.4 Run the consumer service

[root@k8s-master1 consumer]# cat consumer.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

labels:

app: magedu-consumer

name: magedu-consumer-deployment

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: magedu-consumer

template:

metadata:

labels:

app: magedu-consumer

spec:

containers:

- name: magedu-consumer-container

image: harbor.magedu.com/magedu/dubbo-demo-consumer:v1

#command: ["/apps/tomcat/bin/run_tomcat.sh"]

#imagePullPolicy: IfNotPresent

imagePullPolicy: Always

ports:

- containerPort: 80

protocol: TCP

name: http

---

kind: Service

apiVersion: v1

metadata:

labels:

app: magedu-consumer

name: magedu-consumer-server

namespace: magedu

spec:

type: NodePort

ports:

- name: http

port: 80

protocol: TCP

targetPort: 80

#nodePort: 30001

selector:

app: magedu-consumer

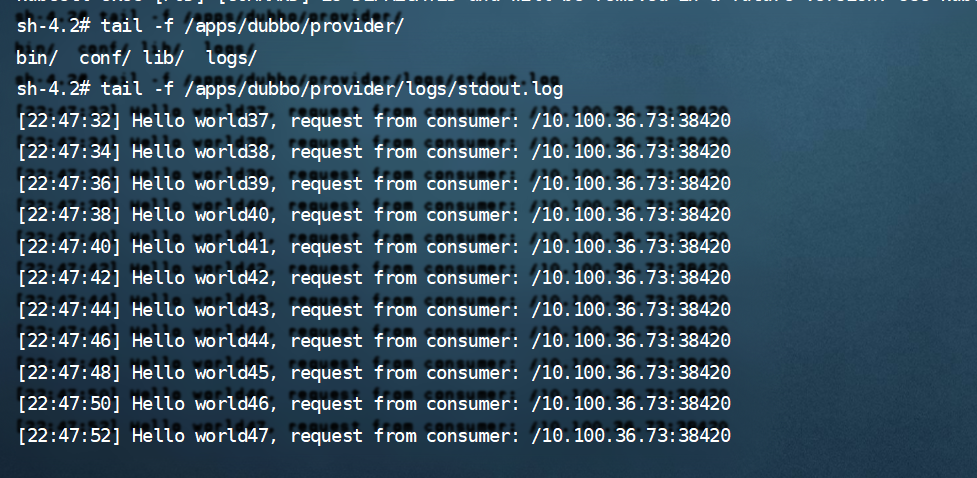

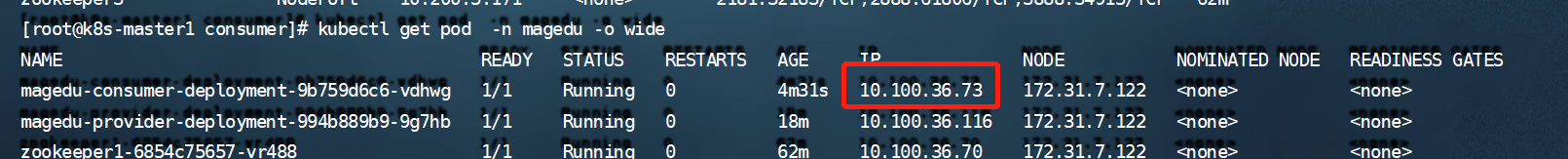

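3.5 Verify the result

With both the producer and the consumer created, the next step is to verify that they work together.

Enter the producer pod and check the log; it shows the consumer calling the service continuously.

The address ending in 36.73 is the consumer pod's IP.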

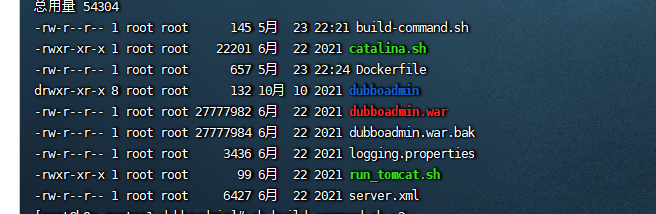

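3.6 Prepare the dubboadmin image

Note: both .sh scripts must have the execute permission; otherwise the service cannot start.

Also change the registry address in dubboadmin/WEB-INF/dubbo.properties.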

[root@k8s-master1 dubboadmin]# cat Dockerfile

#Dubbo dubboadmin

#FROM harbor.magedu.local/pub-images/tomcat-base:v8.5.43

FROM harbor.magedu.com/pub-images/tomcat-base:v8.5.43

MAINTAINER zhangshijie "zhangshijie@magedu.net"

RUN useradd nginx -u 2055

RUN yum install unzip -y

ADD server.xml /apps/tomcat/conf/server.xml

ADD logging.properties /apps/tomcat/conf/logging.properties

ADD catalina.sh /apps/tomcat/bin/catalina.sh

ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh

ADD dubboadmin.war /data/tomcat/webapps/dubboadmin.war

RUN cd /data/tomcat/webapps && unzip dubboadmin.war && rm -rf dubboadmin.war && chown -R nginx.nginx /data /apps

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

######################### Build and push the image

[root@k8s-master1 dubboadmin]# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.magedu.com/magedu/dubboadmin:${TAG} .

sleep 3

docker push harbor.magedu.com/magedu/dubboadmin:${TAG}

############### Startup script

[root@k8s-master1 dubboadmin]# cat run_tomcat.sh

#!/bin/bash

su - nginx -c "/apps/tomcat/bin/catalina.sh start"

su - nginx -c "tail -f /etc/hosts"

3.7 Run the dubboadmin image

[root@k8s-master1 dubboadmin]# cat dubboadmin.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

labels:

app: magedu-dubboadmin

name: magedu-dubboadmin-deployment

namespace: magedu

spec:

replicas: 1

selector:

matchLabels:

app: magedu-dubboadmin

template:

metadata:

labels:

app: magedu-dubboadmin

spec:

containers:

- name: magedu-dubboadmin-container

image: harbor.magedu.com/magedu/dubboadmin:v1

#command: ["/apps/tomcat/bin/run_tomcat.sh"]

#imagePullPolicy: IfNotPresent

imagePullPolicy: Always

ports:

- containerPort: 8080

protocol: TCP

name: http

---

kind: Service

apiVersion: v1

metadata:

labels:

app: magedu-dubboadmin

name: magedu-dubboadmin-service

namespace: magedu

spec:

type: NodePort

ports:

- name: http

port: 80

protocol: TCP

targetPort: 8080

nodePort: 30080

selector:

app: magedu-dubboadmin

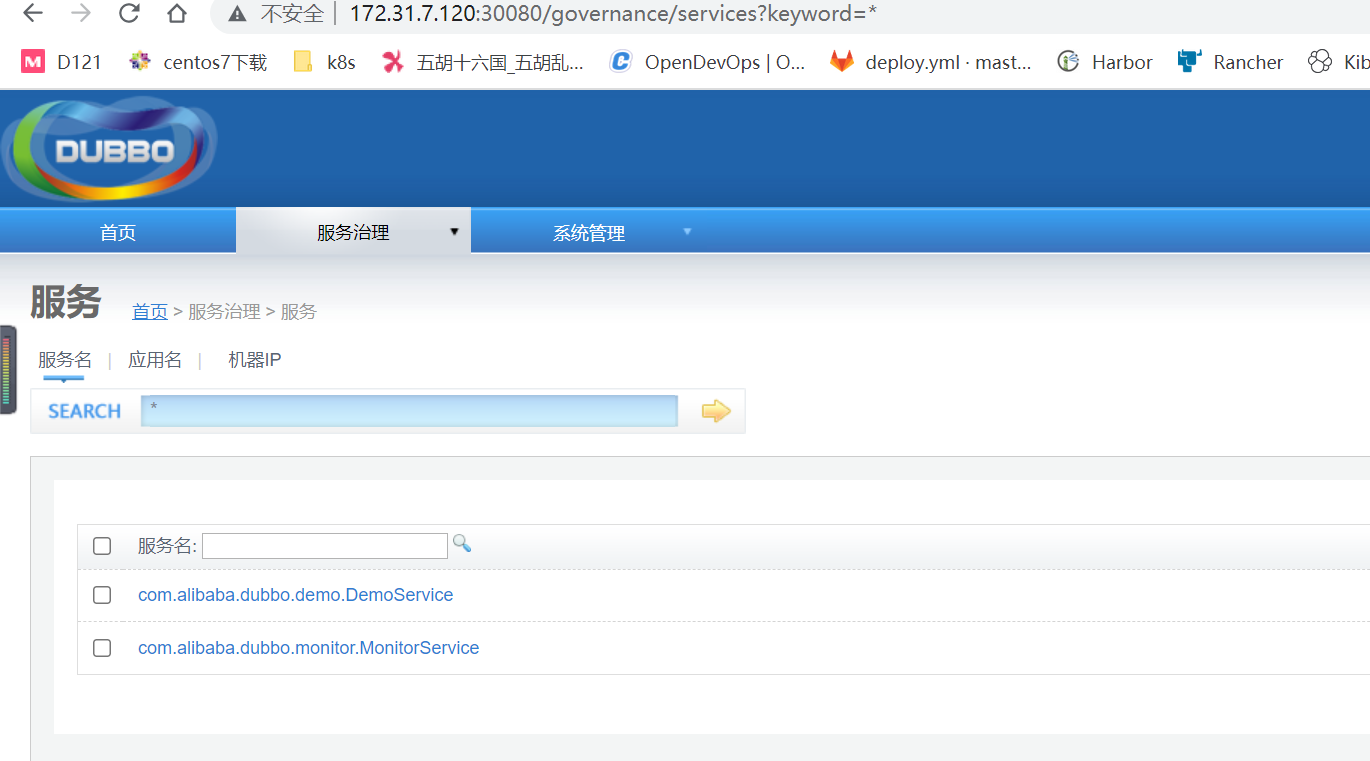

3.8 View in the browser

Open ip:30080 in a browser.

The username and password are both root.