Collecting nginx logs with ELK, with map display support

1. Download GeoIP

Official site: https://dev.maxmind.com/geoip/geoip2/geolite2/

Download the database, then unpack it into the /usr/share/logstash/ directory.

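The database can also be downloaded and kept up to date with geoipupdate. Install it and open its configuration file:

    yum install geoipupdate
    vim /etc/GeoIP.conf

In /etc/GeoIP.conf, select the city database (newer geoipupdate releases name this setting EditionIDs, and current MaxMind downloads also need the AccountID and LicenseKey of a MaxMind account):

    ProductIds GeoLite2-City

Create the target directory, run the update, and check that the database file is there:

    mkdir /usr/share/GeoIP
    geoipupdate
    ll /usr/share/GeoIP

The Logstash configuration in section 3 reads /usr/share/logstash/GeoLite2-City.mmdb, so either copy the file there or point the database setting at /usr/share/GeoIP.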

2. Change the nginx configuration file to set the nginx log format

    log_format main '{"@timestamp":"$time_iso8601",'
                    '"@source":"$server_addr",'
                    '"hostname":"$hostname",'
                    '"ip":"$remote_addr",'
                    '"client":"$remote_addr",'
                    '"request_method":"$request_method",'
                    '"scheme":"$scheme",'
                    '"domain":"$server_name",'
                    '"referer":"$http_referer",'
                    '"request":"$request_uri",'
                    '"args":"$args",'
                    '"size":$body_bytes_sent,'
                    '"status": $status,'
                    '"responsetime":$request_time,'
                    '"upstreamtime":"$upstream_response_time",'
                    '"upstreamaddr":"$upstream_addr",'
                    '"http_user_agent":"$http_user_agent",'
                    '"https":"$https"'
                    '}';
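
Since Filebeat is later told to decode every line as JSON, it can help to check that a line written with this log_format really parses. The following is a minimal sketch in Python, not part of the original setup. The sample line is made up: only the keys come from the log_format above, and every value is hypothetical.

```python
import json

# Hypothetical sample line: keys follow the log_format above, values are invented.
SAMPLE_LINE = (
    '{"@timestamp":"2020-01-01T12:00:00+08:00",'
    '"@source":"10.0.0.1",'
    '"hostname":"web01",'
    '"ip":"203.0.113.7",'
    '"client":"203.0.113.7",'
    '"request_method":"GET",'
    '"scheme":"http",'
    '"domain":"example.com",'
    '"referer":"-",'
    '"request":"/index.html",'
    '"args":"-",'
    '"size":612,'
    '"status": 200,'
    '"responsetime":0.005,'
    '"upstreamtime":"-",'
    '"upstreamaddr":"-",'
    '"http_user_agent":"curl/7.29.0",'
    '"https":""'
    '}'
)


def check_log_line(line):
    """Parse one access-log line and return the decoded event.

    Raises ValueError if the line is not valid JSON or if a field that the
    log_format writes without quotes did not decode as a number.
    """
    event = json.loads(line)  # json.JSONDecodeError is a subclass of ValueError
    for key in ("size", "status", "responsetime"):
        value = event[key]
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError(f"{key} should be numeric, got {value!r}")
    return event


event = check_log_line(SAMPLE_LINE)
print(event["client"], event["status"], event["responsetime"])
# prints: 203.0.113.7 200 0.005
```

If a value such as the user agent contains a double quote, the line stops being valid JSON. nginx 1.11.8 and later accept an escape=json parameter in log_format for this case.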

3. Logstash configuration file

    input {
      beats {
        port => 5045
      }
    }

    filter {
      # if [type] == "nginx_log" {
      mutate {
        # Field names must match the keys in the nginx log_format
        convert => {
          "status" => "integer"
          "size" => "integer"
          "upstreamtime" => "float"
        }
        remove_field => "message"
      }
      date {
        # Only takes effect if the event has a "timestamp" field
        match => [ "timestamp", "dd/MMM/YYYY:HH:mm:ss Z" ]
      }
      geoip {
        # Field that holds the client IP; here it is "client" ("client":"$remote_addr" in the log format)
        source => "client"
        target => "geoip"
        # Path to the downloaded GeoIP database
        database => "/usr/share/logstash/GeoLite2-City.mmdb"
        # Build [geoip][coordinates] as [longitude, latitude]
        add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]
        add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]
      }
      mutate {
        # Convert only after geoip has created the field
        convert => { "[geoip][coordinates]" => "float" }
        remove_field => "timestamp"
      }
      # }
    }

    output {
      if [app] == "sanwenqian" {
        if [type] == "nginx_logs" {
          elasticsearch {
            hosts => ["http://172.17.199.231:9200"]
            index => "logstash-sanwenqian-nginx-%{+YYYY-MM}"
          }
        }
      }
      stdout { codec => rubydebug }
    }
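
The geoip block in the filter builds [geoip][coordinates] by appending the longitude first and the latitude second, then converting both to float. A rough Python sketch of that data flow is shown here to make the ordering explicit. The function name and the sample lookup result are hypothetical; Logstash itself does this work.

```python
def build_coordinates(geoip):
    """Return [longitude, latitude] as floats.

    Mirrors the two add_field calls (which produce strings) followed by
    the float conversion in the Logstash filter.
    """
    # add_field with "%{...}" yields strings; longitude is appended first.
    as_strings = [str(geoip["longitude"]), str(geoip["latitude"])]
    # Counterpart of the mutate convert to float.
    return [float(value) for value in as_strings]


# Hypothetical geoip lookup result; the values are invented.
sample_geoip = {"latitude": 39.9, "longitude": 116.4, "city_name": "Beijing"}
print(build_coordinates(sample_geoip))
# prints: [116.4, 39.9]
```

The order matters because Elasticsearch reads a geo point given as an array as [longitude, latitude], the reverse of the usual "lat, lon" way of speaking.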

Start Logstash.
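
The index setting in the output block is expanded per event, producing one index per month. The sketch below imitates that expansion in Python to show which names result and that they keep the logstash- prefix, which the Kibana step later depends on. The helper name and the sample timestamp are hypothetical.

```python
from datetime import datetime, timezone
from fnmatch import fnmatch


def index_name(event_time):
    """Expand logstash-sanwenqian-nginx-%{+YYYY-MM} for an event timestamp."""
    # Logstash formats the date part from @timestamp in UTC.
    utc = event_time.astimezone(timezone.utc)
    return f"logstash-sanwenqian-nginx-{utc:%Y-%m}"


name = index_name(datetime(2020, 1, 15, 8, 30, tzinfo=timezone.utc))
print(name)
# prints: logstash-sanwenqian-nginx-2020-01
print(fnmatch(name, "logstash-*"))
# prints: True
```

Because the date is taken in UTC, an event logged shortly after midnight local time on the first of a month can still land in the previous month's index.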

4. Configure Filebeat

    filebeat.inputs:
    # Collect nginx logs
    - type: log
      enabled: true
      paths:
        - /var/log/nginx/*.log
      tags: ["nginx_logs"]
      # Enable these when the log lines are JSON
      json.keys_under_root: true
      json.overwrite_keys: true
      json.add_error_key: true
      # If true, the custom fields are stored at the top level of the output document
      fields_under_root: true
      fields:
        app: sanwenqian
        type: nginx_logs

    filebeat.config.modules:
      path: ${path.config}/modules.d/*.yml
      reload.enabled: false

    setup.template.settings:
      index.number_of_shards: 1

    setup.kibana:

    output.logstash:
      hosts: ["172.17.199.231:5045"]

    #output:
    #  redis:
    #    hosts: ["172.17.199.231:5044"]
    #    save_topology: true
    #    key: "bole-nginx_logs"
    #    db: 0
    #    db_topology: 1
    #    timeout: 5
    #    reconnect_interval: 1

    processors:
      - add_host_metadata: ~
      - add_cloud_metadata: ~
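
The fields_under_root setting is what lets the Logstash output conditionals test [app] and [type] directly. As an illustration only, the Python sketch below models where the custom fields end up with and without that setting and whether the conditionals would then match. Both function names are hypothetical; Filebeat and Logstash do the real work.

```python
def apply_custom_fields(event, fields, fields_under_root):
    """Return a copy of the event with the custom fields attached.

    With fields_under_root the keys are merged at the top level;
    otherwise they are grouped under a "fields" key.
    """
    merged = dict(event)
    if fields_under_root:
        merged.update(fields)
    else:
        merged["fields"] = dict(fields)
    return merged


def routed_to_elasticsearch(event):
    """Mimic the conditionals [app] == "sanwenqian" and [type] == "nginx_logs"."""
    return event.get("app") == "sanwenqian" and event.get("type") == "nginx_logs"


custom = {"app": "sanwenqian", "type": "nginx_logs"}
base = {"client": "203.0.113.7", "status": 200}  # invented sample event

print(routed_to_elasticsearch(apply_custom_fields(base, custom, True)))
# prints: True
print(routed_to_elasticsearch(apply_custom_fields(base, custom, False)))
# prints: False
```

With fields_under_root left off, the Logstash conditionals would have to reference [fields][app] and [fields][type] instead.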

Start Filebeat.

5. Kibana setup

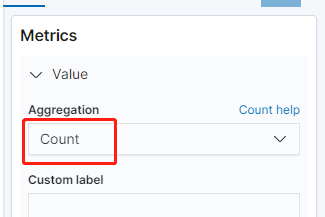

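Problem encountered along the way: the field was greyed out and could not be selected. The fix has two parts. First, the index name must start with logstash. Second, go to Management > Index Patterns, open the corresponding index pattern, and refresh its field list, as shown below: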