Flink-MysqCDC 大事务OOM问题分析与修复

Flink-MysqCDC 大事务OOM问题分析与修复

一、背景:

系统刚上线,有些流程还未完全打通,需要通过人工修数的方式来确保业务运行;订单域和财务域都存在大量的人工修数,大伙干得热火朝天,夜深人静的时候,往往忽略了一些基本的准则,在生产环境发现有数据硬删+几十万的大事务更新操作;

这导致了Flink流大面积OOM,严重影响了同步到Kudu,深圳电商msyql和ES数据的准确性,时效性。

二、系统相关的配置

Flink:Flink 1.13 + MysqlCDC 2.1,Job Manage 2G,TaskManage 6G

Mysql版本:10.4.18-MariaDB

三、问题定位

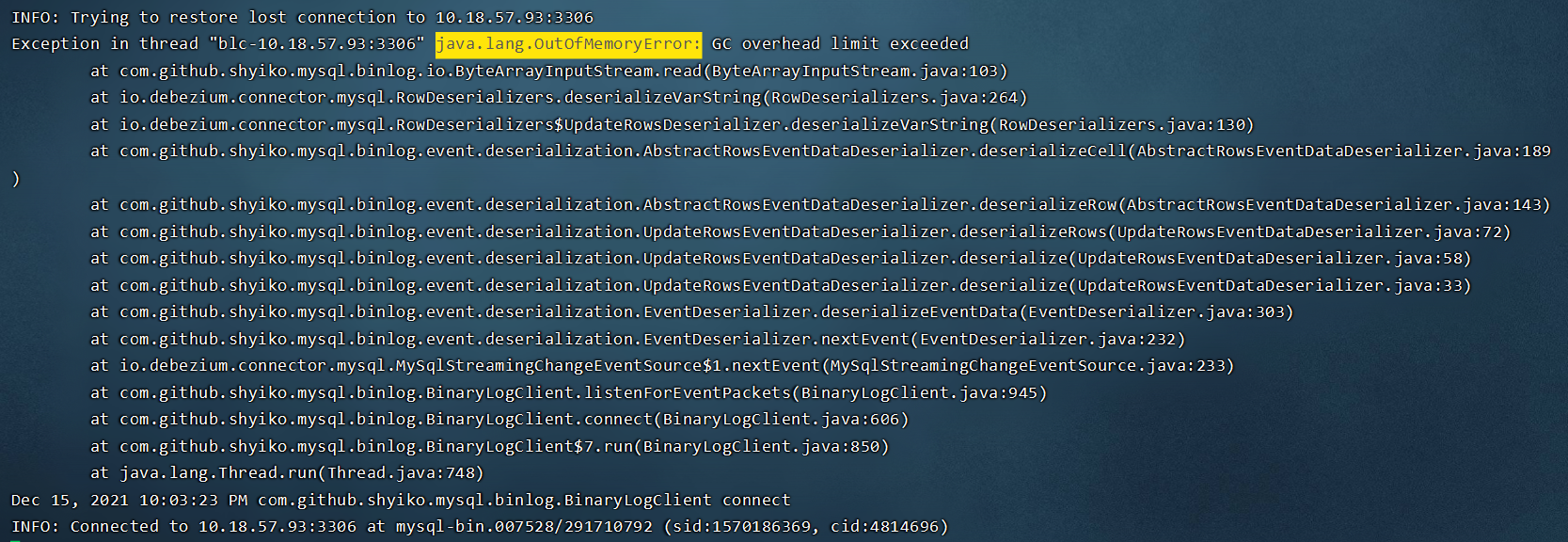

如上图,通过查看TaskManage日志发现,Flink在反序列化event的时候,发生了OOM,并且触发了多次垃圾回收后也没什么效果,爆 GC overhead limit exceeded。

四、问题分析

我们跟着错误日志去翻阅代码,看看能不能有什么收获。

错误日志

INFO: Trying to restore lost connection to 10.18.xx.xx:3306

Exception in thread "blc-10.18.57.93:3306" java.lang.OutOfMemoryError: GC overhead limit exceeded

at com.github.shyiko.mysql.binlog.io.ByteArrayInputStream.read(ByteArrayInputStream.java:103)

at io.debezium.connector.mysql.RowDeserializers.deserializeVarString(RowDeserializers.java:264)

at io.debezium.connector.mysql.RowDeserializers$UpdateRowsDeserializer.deserializeVarString(RowDeserializers.java:130)

at com.github.shyiko.mysql.binlog.event.deserialization.AbstractRowsEventDataDeserializer.deserializeCell(AbstractRowsEventDataDeserializer.java:189)

at com.github.shyiko.mysql.binlog.event.deserialization.AbstractRowsEventDataDeserializer.deserializeRow(AbstractRowsEventDataDeserializer.java:143)

at com.github.shyiko.mysql.binlog.event.deserialization.UpdateRowsEventDataDeserializer.deserializeRows(UpdateRowsEventDataDeserializer.java:72)

at com.github.shyiko.mysql.binlog.event.deserialization.UpdateRowsEventDataDeserializer.deserialize(UpdateRowsEventDataDeserializer.java:58)

at com.github.shyiko.mysql.binlog.event.deserialization.UpdateRowsEventDataDeserializer.deserialize(UpdateRowsEventDataDeserializer.java:33)

at com.github.shyiko.mysql.binlog.event.deserialization.EventDeserializer.deserializeEventData(EventDeserializer.java:303)

at com.github.shyiko.mysql.binlog.event.deserialization.EventDeserializer.nextEvent(EventDeserializer.java:232)

at io.debezium.connector.mysql.MySqlStreamingChangeEventSource$1.nextEvent(MySqlStreamingChangeEventSource.java:233)

at com.github.shyiko.mysql.binlog.BinaryLogClient.listenForEventPackets(BinaryLogClient.java:945)

at com.github.shyiko.mysql.binlog.BinaryLogClient.connect(BinaryLogClient.java:606)

at com.github.shyiko.mysql.binlog.BinaryLogClient$7.run(BinaryLogClient.java:850)

at java.lang.Thread.run(Thread.java:748)

Dec 15, 2021 10:03:23 PM com.github.shyiko.mysql.binlog.BinaryLogClient connect

INFO: Connected to 10.18.xx.xx:3306 at mysql-bin.007528/291710792 (sid:1570186369, cid:4814696)

BinaryLogClient.listenForEventPackets(BinaryLogClient.java:945)

// 监听binlog event数据包的入口

private void listenForEventPackets() throws IOException {

............. 省略

try {

event = eventDeserializer.nextEvent(packetLength == MAX_PACKET_LENGTH ?

new ByteArrayInputStream(readPacketSplitInChunks(inputStream, packetLength - 1)) :

inputStream);

if (event == null) {

throw new EOFException();

}

} catch (Exception e) {

........... 省略

}

................ 省略

}

BinaryLogClient.listenForEventPackets(BinaryLogClient.java:945)

> /**

> Mysql协议规定了packet最大16M,当一个event大于16M的时候,就会分成多个packet来传输,

> readPacketSplitInChunks的作用就是接受多个packet,拼成一个完成的event给后续解析

> */

> // https://dev.mysql.com/doc/internals/en/sending-more-than-16mbyte.html

> private byte[] readPacketSplitInChunks(ByteArrayInputStream inputStream, int packetLength) throws IOException {

> byte[] result = inputStream.read(packetLength);

> int chunkLength;

> do {

> chunkLength = inputStream.readInteger(3);

> inputStream.skip(1); // 1 byte for sequence

> result = Arrays.copyOf(result, result.length + chunkLength);

> inputStream.fill(result, result.length - chunkLength, chunkLength);

> } while (chunkLength == Packet.MAX_LENGTH);

> return result;

> }

MySqlStreamingChangeEventSource$1.nextEvent(MySqlStreamingChangeEventSource.java:233)

/**

根据eventHeader来进行反序列化操作;

具体的binlog的协议可以参考:https://blog.51cto.com/u_3522866/2717255

*/

public Event nextEvent(ByteArrayInputStream inputStream) throws IOException {

if (inputStream.peek() == -1) {

return null;

}

EventHeader eventHeader = eventHeaderDeserializer.deserialize(inputStream);

EventData eventData;

switch (eventHeader.getEventType()) {

case FORMAT_DESCRIPTION:

eventData = deserializeFormatDescriptionEventData(inputStream, eventHeader);

break;

case TABLE_MAP:

eventData = deserializeTableMapEventData(inputStream, eventHeader);

break;

default:

EventDataDeserializer eventDataDeserializer = getEventDataDeserializer(eventHeader.getEventType());

eventData = deserializeEventData(inputStream, eventHeader, eventDataDeserializer);

}

return new Event(eventHeader, eventData);

}

后续的一些方法细节,都是讲怎么去反序列化这个大事务update,最后导致Flink流OOM;

结论:

看完代码,这块的代码,我们可以得出以下的结论:

1、Mysql主从之间的复制(binlog),是通过TCP来传输的,在网络传输过程,在server端会按照16M的大小来拆包;

2、Debizium在接受到packet,会有一个合并packet的过程,最终组装成一个完成的event,给到后续的方法去反序列化。

3、通常意义上,我们理解binlog就是数据一行行的变化,这个其实并不准确,底层是按照event来处理的,并不是一行行来;这里在处理的过程中,其实是没有保证事务的,一个事务,是可以拆分成多个event的。

有点悲哀,这个故事到这里可能就要结束了,在msyql-cdc源码层面,我们没法做什么优化来解决这个大事务OOM的问题。

五、Mysql参数调优

既然我们不能解决问题,是不是可以换个角度,去把产生问题的源头解决了。

上面也讲到,底层既然处理的不是一个事务,处理的是事务分解后的event,那是不是可以通过调整event的大小,来解决呢? (https://www.cnblogs.com/stevenczp/p/6739042.html)

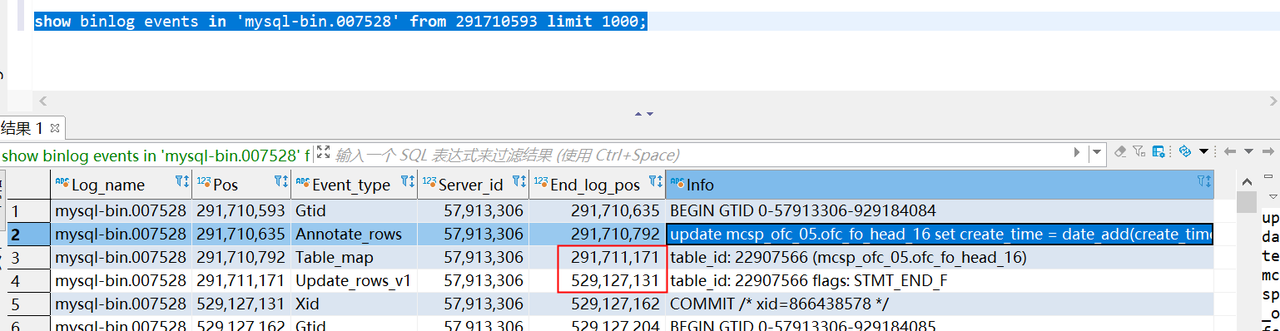

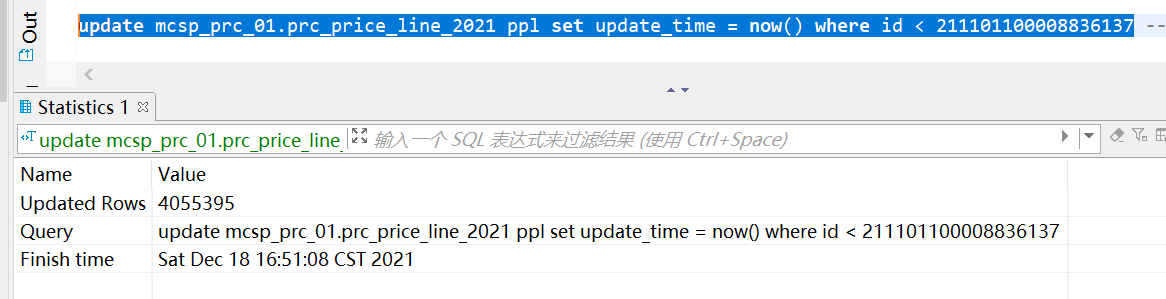

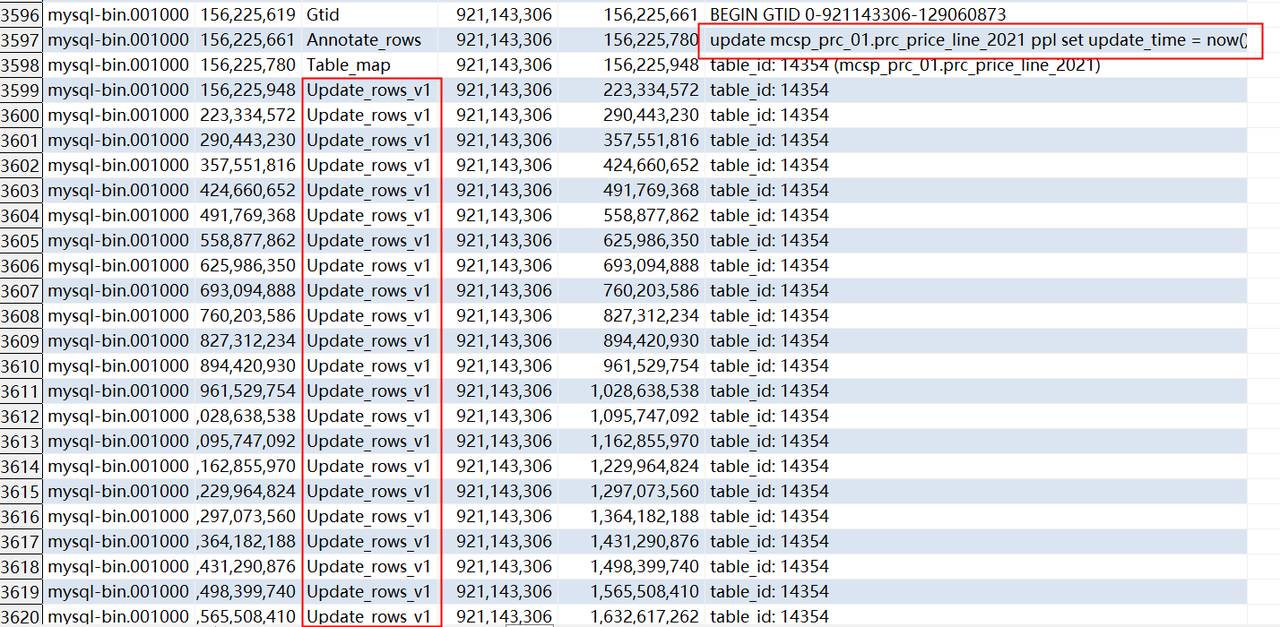

我们找到了其中一个发生OOM的binlog,这里可以看到,一个event几百M,从sql里面可以看到,一个事务更新15万的数据

binlog-row-event-max-size参数调整

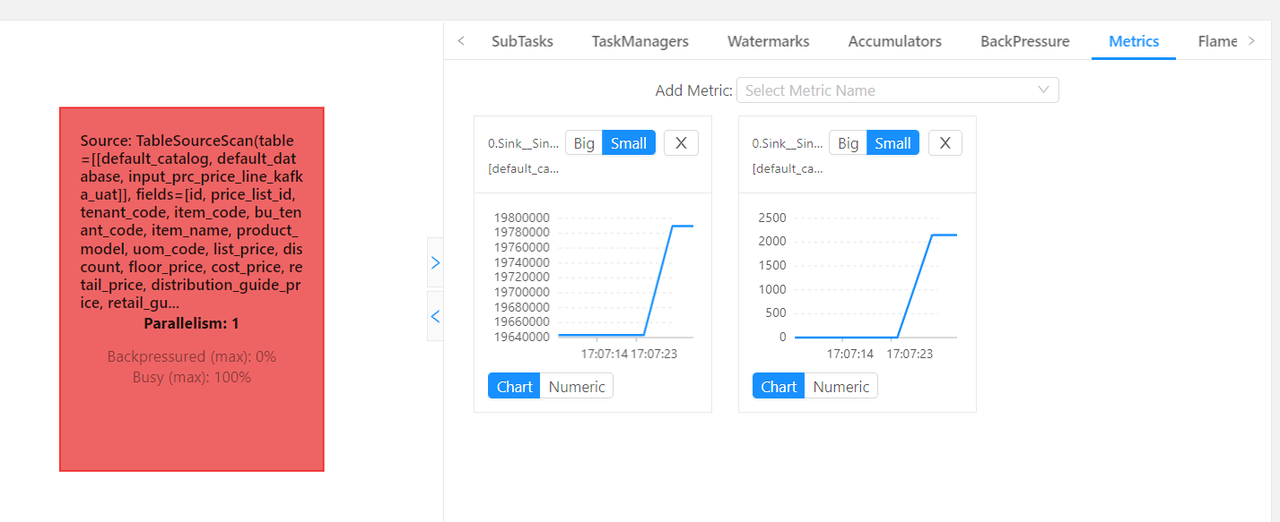

我们在uat环境通过设置mariaDB参数binlog-row-event-max-size=67108864 (64M),再来做一个大事务的更新,一下子更新了400万的数据,这个表40个字段

我们再看切割后的event,确实按照我们的预期,把大的event切成小的,胜利的曙光已经看到了。

timeout参数调整

幸福往往来之不易,解决问题的路上也是坎坎坷坷;当event切细后,flink处理大事务爆了另外一个错误:connection reset

com.ververica.cdc.connectors.shaded.org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.

at io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:42)

at io.debezium.connector.mysql.MySqlStreamingChangeEventSource$ReaderThreadLifecycleListener.onCommunicationFailure(MySqlStreamingChangeEventSource.java:1185)

at com.github.shyiko.mysql.binlog.BinaryLogClient.listenForEventPackets(BinaryLogClient.java:973)

at com.github.shyiko.mysql.binlog.BinaryLogClient.connect(BinaryLogClient.java:606)

at com.github.shyiko.mysql.binlog.BinaryLogClient$7.run(BinaryLogClient.java:850)

at java.lang.Thread.run(Thread.java:748)

Caused by: io.debezium.DebeziumException: Connection reset

at io.debezium.connector.mysql.MySqlStreamingChangeEventSource.wrap(MySqlStreamingChangeEventSource.java:1146)

... 5 more

Caused by: java.net.SocketException: Connection reset

at java.net.SocketInputStream.read(SocketInputStream.java:210)

at java.net.SocketInputStream.read(SocketInputStream.java:141)

at com.github.shyiko.mysql.binlog.io.BufferedSocketInputStream.read(BufferedSocketInputStream.java:59)

at com.github.shyiko.mysql.binlog.io.ByteArrayInputStream.readWithinBlockBoundaries(ByteArrayInputStream.java:257)

at com.github.shyiko.mysql.binlog.io.ByteArrayInputStream.read(ByteArrayInputStream.java:241)

at com.github.shyiko.mysql.binlog.io.ByteArrayInputStream.fill(ByteArrayInputStream.java:111)

at com.github.shyiko.mysql.binlog.io.ByteArrayInputStream.read(ByteArrayInputStream.java:104)

at com.github.shyiko.mysql.binlog.BinaryLogClient.readPacketSplitInChunks(BinaryLogClient.java:988)

at com.github.shyiko.mysql.binlog.BinaryLogClient.listenForEventPackets(BinaryLogClient.java:946)

... 3 more

遇到这个问题,很自然想到,debizium模拟的是msyql的从库,有一方的主动的关闭了链接;经过查看debizium的官方问题,没找到相关的参数,最后还是得从数据库参数调优下手。

我们先理解下mysql几个超时参数的含义,具体看blog:https://blog.51cto.com/lookingdream/2513405;

最后我们调整了以下几个参数,其中最关键的是:net_read_timeout和net_write_timeout,给与flink更多时间来处理大事务相关的event

show variables like '%slave_net_timeout%' -- 120

show variables like '%thread_pool_idle_timeout%' -- 120

show variables like '%net_read_timeout%'; -- 120

show variables like '%net_write_timeout%'; -- 120

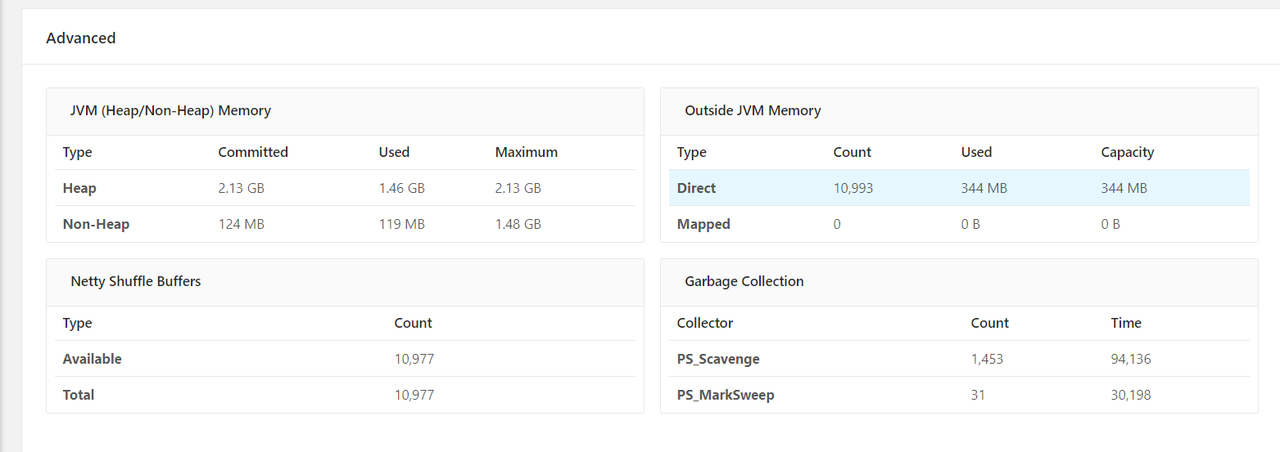

六、效果

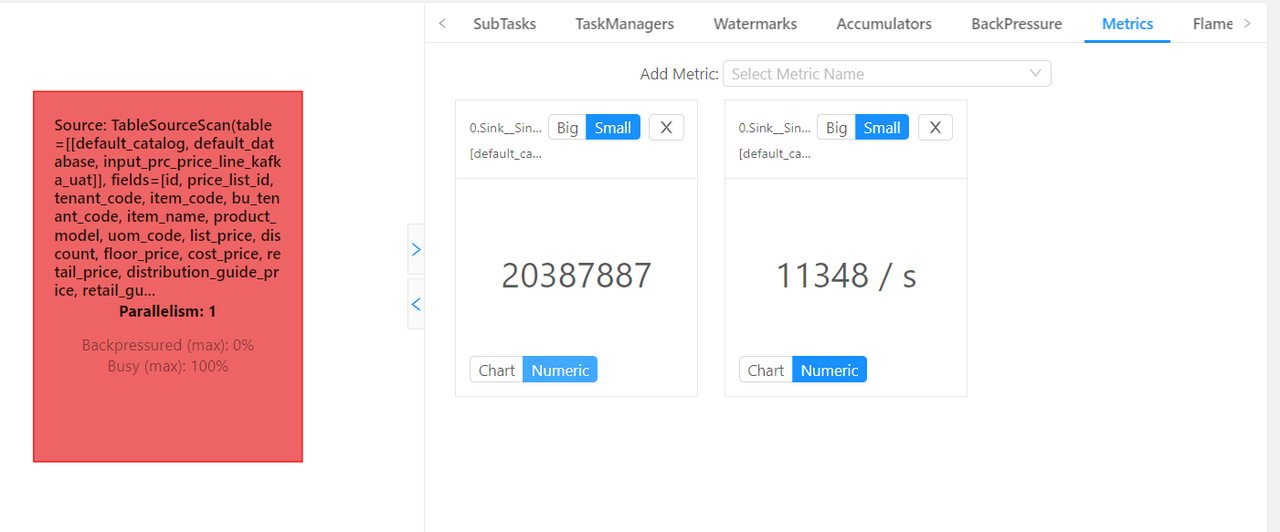

如下图:一个百万级的大事务更新,flink流产生了背压,但是很稳定,处理增量的能力1.2W/s

整体的jvm监控还算健康