Kubernetes (3): Resource Controllers

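1. Controller Overview

1.1 What is a controller

Kubernetes ships with many built-in controllers. Each one behaves like a state machine: it watches the cluster and drives Pods toward their declared state and behavior.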

1.2 Controller types

1) ReplicationController and ReplicaSet

2) Deployment

3) DaemonSet

4) StatefulSet

5) Job/CronJob

6) Horizontal Pod Autoscaling

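2. Controllers in Detail

2.1 ReplicationController and ReplicaSet

1) A ReplicationController (RC) keeps the number of replicas of a containerized application at the user-defined count. If a container exits abnormally, a new Pod is created to replace it; if there are more Pods than desired, the extras are removed.

2) Newer Kubernetes versions recommend ReplicaSet in place of ReplicationController. The two do not differ in substance; apart from the name, the main difference is that ReplicaSet supports set-based label selectors.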
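As a minimal sketch of the set-based selector mentioned above, the manifest below selects Pods with `matchExpressions` rather than a single equality match. The name `frontend-set-based`, the second label value `web`, and the `apps/v1` API version are assumptions for illustration; the image is the one used elsewhere in this article.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: frontend-set-based        # hypothetical name
spec:
  replicas: 3
  selector:
    matchExpressions:
    # set-based: matches Pods whose tier label is any of the listed values
    - key: tier
      operator: In
      values:
      - frontend
      - web                       # hypothetical second value
  template:
    metadata:
      labels:
        tier: frontend            # must satisfy the selector above
    spec:
      containers:
      - name: myapp
        image: hub.dianchou.com/library/myapp:v1
        ports:
        - containerPort: 80
```

Besides `In`, the operators `NotIn`, `Exists`, and `DoesNotExist` are available. A ReplicationController accepts only equality-based selectors.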

# Create a ReplicaSet

[root@k8s-master01 k8s]# cat rs.yaml

apiVersion: extensions/v1beta1 # removed in Kubernetes 1.16; use apps/v1 on current clusters

kind: ReplicaSet

metadata:

  name: frontend

spec:

  replicas: 3

  selector:

    matchLabels:

      tier: frontend

  template:

    metadata:

      labels:

        tier: frontend

    spec:

      containers:

      - name: myapp

        image: hub.dianchou.com/library/myapp:v1

        env:

        - name: GET_HOSTS_FROM

          value: dns

        ports:

        - containerPort: 80

[root@k8s-master01 k8s]# kubectl create -f rs.yaml

replicaset.extensions/frontend created

# Inspect the result

[root@k8s-master01 k8s]# kubectl get rs

NAME DESIRED CURRENT READY AGE

frontend 3 3 3 3m40s

[root@k8s-master01 k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

frontend-6tdfn 1/1 Running 0 6s

frontend-sr7m9 1/1 Running 0 6s

frontend-xdwjk 1/1 Running 0 6s

# After the Pods are deleted, the ReplicaSet recreates the same number of Pods

[root@k8s-master01 k8s]# kubectl delete pod --all

pod "frontend-6tdfn" deleted

pod "frontend-sr7m9" deleted

pod "frontend-xdwjk" deleted

[root@k8s-master01 k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

frontend-9xsw9 1/1 Running 0 16s

frontend-g8spw 1/1 Running 0 16s

frontend-gjtsl 1/1 Running 0 16s

# Show labels

[root@k8s-master01 k8s]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

frontend-9xsw9 1/1 Running 0 50s tier=frontend

frontend-g8spw 1/1 Running 0 50s tier=frontend

frontend-gjtsl 1/1 Running 0 50s tier=frontend

# Change the label on one Pod: the number of Pods the ReplicaSet controls stays the same, because it creates a replacement

[root@k8s-master01 k8s]# kubectl label pod frontend-9xsw9 tier=frontend1

error: 'tier' already has a value (frontend), and --overwrite is false

[root@k8s-master01 k8s]# kubectl label pod frontend-9xsw9 tier=frontend1 --overwrite=True

pod/frontend-9xsw9 labeled

[root@k8s-master01 k8s]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

frontend-9xsw9 1/1 Running 0 2m49s tier=frontend1

frontend-g8spw 1/1 Running 0 2m49s tier=frontend

frontend-gjtsl 1/1 Running 0 2m49s tier=frontend

frontend-pfjqz 1/1 Running 0 5s tier=frontend

[root@k8s-master01 k8s]# kubectl delete rs --all

replicaset.extensions "frontend" deleted

[root@k8s-master01 k8s]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

frontend-9xsw9 1/1 Running 0 4m8s tier=frontend1

2.2 Deployment

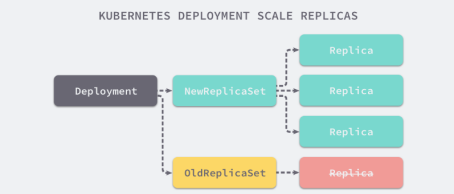

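A Deployment provides a declarative definition for Pods and ReplicaSets, replacing the older ReplicationController as a more convenient way to manage applications. Typical use cases include:

- Defining a Deployment to create Pods and a ReplicaSet

- Rolling upgrades and rollbacks

- Scaling up and down

- Pausing and resuming a Deployment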

[root@k8s-master01 k8s]# cat deployment.yaml

apiVersion: extensions/v1beta1 # removed in Kubernetes 1.16; apps/v1 additionally requires spec.selector

kind: Deployment

metadata:

  name: nginx-deployment

spec:

  replicas: 3

  template:

    metadata:

      labels:

        app: nginx

    spec:

      containers:

      - name: nginx

        image: hub.dianchou.com/library/myapp:v1

        ports:

        - containerPort: 80

[root@k8s-master01 k8s]# kubectl apply -f deployment.yaml --record # --record stores the command with each revision, which makes version changes easier to trace

deployment.extensions/nginx-deployment created

[root@k8s-master01 k8s]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deployment 3/3 3 3 6s

[root@k8s-master01 k8s]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-59d7d9d5b4 3 3 3 9s

[root@k8s-master01 k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-59d7d9d5b4-csxvt 1/1 Running 0 12s

nginx-deployment-59d7d9d5b4-ldk6v 1/1 Running 0 12s

nginx-deployment-59d7d9d5b4-q7ffl 1/1 Running 0 12s

Scaling up:

[root@k8s-master01 k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-59d7d9d5b4-csxvt 1/1 Running 0 52m

nginx-deployment-59d7d9d5b4-ldk6v 1/1 Running 0 52m

nginx-deployment-59d7d9d5b4-q7ffl 1/1 Running 0 52m

[root@k8s-master01 k8s]# kubectl scale deployment nginx-deployment --replicas 5

deployment.extensions/nginx-deployment scaled

[root@k8s-master01 k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-59d7d9d5b4-csxvt 1/1 Running 0 52m

nginx-deployment-59d7d9d5b4-ldk6v 1/1 Running 0 52m

nginx-deployment-59d7d9d5b4-pc2zg 1/1 Running 0 2s

nginx-deployment-59d7d9d5b4-q7ffl 1/1 Running 0 52m

nginx-deployment-59d7d9d5b4-qsx4v 1/1 Running 0 2s

[root@k8s-master01 k8s]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-59d7d9d5b4 5 5 5 52m

[root@k8s-master01 k8s]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deployment 5/5 5 5 53m

Autoscaling:

# If the cluster supports horizontal pod autoscaling, you can also configure autoscaling for the Deployment

kubectl autoscale deployment nginx-deployment --min=10 --max=15 --cpu-percent=80

Rolling update:

[root@k8s-master01 k8s]# kubectl set image deployment/nginx-deployment nginx=hub.dianchou.com/library/myapp:v2

deployment.extensions/nginx-deployment image updated

[root@k8s-master01 k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deployment-7b47d7d5c7-2tndh 1/1 Running 0 47s

nginx-deployment-7b47d7d5c7-4jxmq 1/1 Running 0 45s

nginx-deployment-7b47d7d5c7-h7k2n 1/1 Running 0 43s

nginx-deployment-7b47d7d5c7-msflp 1/1 Running 0 47s

nginx-deployment-7b47d7d5c7-nbflp 1/1 Running 0 45s

[root@k8s-master01 k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-7b47d7d5c7-2tndh 1/1 Running 0 61s 10.244.2.16 k8s-node02 <none> <none>

nginx-deployment-7b47d7d5c7-4jxmq 1/1 Running 0 59s 10.244.1.17 k8s-node01 <none> <none>

nginx-deployment-7b47d7d5c7-h7k2n 1/1 Running 0 57s 10.244.1.18 k8s-node01 <none> <none>

nginx-deployment-7b47d7d5c7-msflp 1/1 Running 0 61s 10.244.1.16 k8s-node01 <none> <none>

nginx-deployment-7b47d7d5c7-nbflp 1/1 Running 0 59s 10.244.2.17 k8s-node02 <none> <none>

[root@k8s-master01 k8s]# curl 10.244.2.16

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

[root@k8s-master01 k8s]# kubectl get rs # two ReplicaSets now exist

NAME DESIRED CURRENT READY AGE

nginx-deployment-59d7d9d5b4 0 0 0 63m

nginx-deployment-7b47d7d5c7 5 5 5 87s

Rollback:

[root@k8s-master01 k8s]# kubectl rollout undo deployment/nginx-deployment

deployment.extensions/nginx-deployment rolled back

[root@k8s-master01 k8s]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-59d7d9d5b4 4 4 2 66m

nginx-deployment-7b47d7d5c7 2 2 2 5m20s

[root@k8s-master01 k8s]# kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deployment-59d7d9d5b4 5 5 5 67m

nginx-deployment-7b47d7d5c7 0 0 0 5m23s

[root@k8s-master01 k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-59d7d9d5b4-5slgt 1/1 Running 0 15s 10.244.1.20 k8s-node01 <none> <none>

nginx-deployment-59d7d9d5b4-856q5 1/1 Running 0 16s 10.244.1.19 k8s-node01 <none> <none>

nginx-deployment-59d7d9d5b4-bq6nz 1/1 Running 0 14s 10.244.2.20 k8s-node02 <none> <none>

nginx-deployment-59d7d9d5b4-jnkbk 1/1 Running 0 15s 10.244.2.19 k8s-node02 <none> <none>

nginx-deployment-59d7d9d5b4-z899b 1/1 Running 0 17s 10.244.2.18 k8s-node02 <none> <none>

[root@k8s-master01 k8s]# curl 10.244.1.20

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

Updating a Deployment:

# Option 1

[root@k8s-master01 k8s]# kubectl set image deployment/nginx-deployment nginx=hub.dianchou.com/library/myapp:v2

deployment.extensions/nginx-deployment image updated

# Option 2

# Edit the image version with kubectl edit; the change takes effect immediately

[root@k8s-master01 k8s]# kubectl edit deployment/nginx-deployment

deployment.extensions/nginx-deployment edited

Checking rollout status:

[root@k8s-master01 k8s]# kubectl rollout status deployment/nginx-deployment

deployment "nginx-deployment" successfully rolled out

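Deployment update strategy:

1) A Deployment ensures that only a limited number of Pods are down during an update. The limit is set by .spec.strategy.rollingUpdate.maxUnavailable.

2) A Deployment also ensures that only a limited number of Pods are created above the desired count. The limit is set by .spec.strategy.rollingUpdate.maxSurge.

3) In early Kubernetes releases both limits defaulted to 1 (at most one Pod unavailable, at most one surge Pod). In current releases both default to 25% of the desired Pod count.

4) Suppose you create a Deployment with 5 replicas of nginx:1.7.9, and while only 3 of those replicas have been created you update it to 5 replicas of nginx:1.9.1. The Deployment immediately kills the 3 nginx:1.7.9 Pods it has already created and starts creating nginx:1.9.1 Pods. It does not wait for all 5 nginx:1.7.9 Pods to exist before changing course.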
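The two limits described above can be set explicitly. The manifest below is a sketch that assumes the `apps/v1` API and reuses the name, labels, and image from this article's example; the values 1 and 1 are chosen for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 5
  selector:                 # required in apps/v1
    matchLabels:
      app: nginx
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1     # at most 1 Pod below the desired count during the update
      maxSurge: 1           # at most 1 Pod above the desired count during the update
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: hub.dianchou.com/library/myapp:v1
        ports:
        - containerPort: 80
```

With 5 replicas these values mean at least 4 Pods stay available and at most 6 Pods exist in total during a rollout. Both fields also accept percentages such as 25%.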

Summary of Deployment rollback commands:

kubectl set image deployment/nginx-deployment nginx=nginx:1.91

kubectl rollout status deployments nginx-deployment

kubectl rollout history deployment/nginx-deployment # show the rollout history

[root@k8s-master01 k8s]# kubectl rollout history deployment/nginx-deployment

deployment.extensions/nginx-deployment

REVISION CHANGE-CAUSE

1 kubectl apply --filename=deployment.yaml --record=true

3 kubectl apply --filename=deployment.yaml --record=true

4 kubectl apply --filename=deployment.yaml --record=true

kubectl rollout undo deployment/nginx-deployment

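kubectl rollout undo deployment/nginx-deployment --to-revision=2 # use --to-revision to roll back to a specific revision

kubectl rollout pause deployment/nginx-deployment # pause the Deployment's rollout

Cleanup policy:

Set .spec.revisionHistoryLimit to control how many old revisions a Deployment keeps. With the extensions/v1beta1 API used above, all revisions are kept by default, while apps/v1 defaults to 10. Setting the field to 0 discards the history, so the Deployment can no longer be rolled back.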
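The fragment below shows where the field sits. The value 5 is an arbitrary illustration, and the fragment is meant to be merged into an existing Deployment manifest rather than applied on its own.

```yaml
# Fragment of a Deployment manifest (not a complete object)
spec:
  revisionHistoryLimit: 5   # keep the 5 most recent old ReplicaSets available for rollback
```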

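2.3 DaemonSet

A DaemonSet ensures that all (or some) Nodes run one copy of a Pod. When a Node joins the cluster, a Pod is added for it; when a Node is removed from the cluster, its Pod is reclaimed. Deleting a DaemonSet deletes all the Pods it created.

Typical uses of a DaemonSet:

1) Running a cluster storage daemon on every Node, such as glusterd or ceph

2) Running a log collection daemon on every Node, such as fluentd or logstash

3) Running a monitoring daemon on every Node, such as Prometheus Node Exporter, collectd, the Datadog agent, the New Relic agent, or Ganglia gmond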
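To cover only some Nodes rather than all of them, a DaemonSet's Pod template can carry a `nodeSelector`. The sketch below assumes a node label `disktype: ssd` and the name `daemonset-ssd-only`, both hypothetical; the image is the one used in this article.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-ssd-only          # hypothetical name
spec:
  selector:
    matchLabels:
      name: daemonset-ssd-only
  template:
    metadata:
      labels:
        name: daemonset-ssd-only
    spec:
      nodeSelector:
        disktype: ssd               # hypothetical node label; only matching Nodes get a Pod
      containers:
      - name: daemonset-ssd-only
        image: hub.dianchou.com/library/myapp:v3
```

A Node would opt in with a command such as `kubectl label node k8s-node01 disktype=ssd`; Nodes without the label run no Pod from this DaemonSet.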

[root@k8s-master01 k8s]# cat DaemonSet.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:

  name: daemonset-example

  labels:

    app: daemonset

spec:

  selector:

    matchLabels:

      name: daemonset-example

  template:

    metadata:

      labels:

        name: daemonset-example

    spec:

      containers:

      - name: daemonset-example

        image: hub.dianchou.com/library/myapp:v3

[root@k8s-master01 k8s]# kubectl create -f DaemonSet.yaml

daemonset.apps/daemonset-example created

[root@k8s-master01 k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-example-dk494 1/1 Running 0 2s 10.244.1.31 k8s-node01 <none> <none>

daemonset-example-wmwhg 1/1 Running 0 2s 10.244.2.32 k8s-node02 <none> <none>

[root@k8s-master01 k8s]# kubectl delete pod daemonset-example-dk494

pod "daemonset-example-dk494" deleted

[root@k8s-master01 k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-example-q4p9n 1/1 Running 0 2s 10.244.1.32 k8s-node01 <none> <none>

daemonset-example-wmwhg 1/1 Running 0 35s 10.244.2.32 k8s-node02 <none> <none>

2.4 Job

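A Job handles batch tasks, that is, tasks that run only once. It guarantees that one or more Pods of the batch task finish successfully.

# Compute pi to 100 digits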

[root@k8s-master01 k8s]# cat job.yaml

apiVersion: batch/v1

kind: Job

metadata:

  name: pi

spec:

  template:

    metadata:

      name: pi

    spec:

      containers:

      - name: pi

        image: hub.dianchou.com/library/perl:v1

        command: ["perl","-Mbignum=bpi","-wle","print bpi(100)"]

      restartPolicy: Never

[root@k8s-master01 k8s]# kubectl create -f job.yaml

job.batch/pi created

[root@k8s-master01 k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pi-6ffqs 0/1 Completed 0 7s 10.244.2.34 k8s-node02 <none> <none>

[root@k8s-master01 k8s]# kubectl get job

NAME COMPLETIONS DURATION AGE

pi 1/1 1s 19s

[root@k8s-master01 k8s]# kubectl log pi-6ffqs

log is DEPRECATED and will be removed in a future version. Use logs instead.

3.141592653589793238462643383279502884197169399375105820974944592307816406286208998628034825342117068

[root@k8s-master01 k8s]# kubectl logs pi-6ffqs

3.141592653589793238462643383279502884197169399375105820974944592307816406286208998628034825342117068

2.5 CronJob

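A CronJob manages time-based Jobs, that is:

- Run once at a given point in time

- Run periodically at given points in time

Prerequisite: the Kubernetes cluster must be at version >= 1.8 (for CronJob). On clusters older than 1.8, pass --runtime-config=batch/v2alpha1=true when starting the API server to enable the batch/v2alpha1 API.

Typical uses:

1) Scheduling a Job to run at a given point in time

2) Creating Jobs that run periodically, for example database backups or sending email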

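Field reference:

.spec.template: same format as a Pod

RestartPolicy: only Never or OnFailure is supported

With a single Pod, the Job ends by default once that Pod has run successfully

.spec.completions: the number of Pods that must run successfully for the Job to finish; default 1

.spec.parallelism: the number of Pods that run in parallel; default 1

.spec.activeDeadlineSeconds: the maximum time the Job may run, including retries of failed Pods; once it is exceeded the Job is terminated and nothing is retried

.spec.schedule: the schedule, a required field that sets how often the task runs; same format as Cron

.spec.jobTemplate: the Job template, a required field that specifies the task to run; same format as a Job

.spec.startingDeadlineSeconds: the deadline, in seconds, for starting a Job; optional. If a scheduled run is missed for any reason, the Job that missed its start time is counted as failed. If the field is not set, there is no deadline

.spec.concurrencyPolicy: the concurrency policy; also optional. It determines how concurrent executions of Jobs created by the CronJob are handled. Exactly one of the following policies can be set:

- Allow (default): Jobs may run concurrently

- Forbid: concurrent runs are not allowed; if the previous run has not finished, the next one is skipped

- Replace: cancel the currently running Job and replace it with a new one

Note that the policy applies only to Jobs created by the same CronJob. Jobs created by different CronJobs are always allowed to run concurrently.

.spec.suspend: suspend; also optional. If set to true, all subsequent executions are suspended. It has no effect on Jobs that have already started. The default is false.

.spec.successfulJobsHistoryLimit and .spec.failedJobsHistoryLimit: history limits; optional. They set how many completed and failed Jobs are kept, and default to 3 and 1 respectively. With a limit of 0, Jobs of that type are not kept after they finish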
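The optional fields listed above can be combined in one manifest. The sketch below assumes the `batch/v1` API (CronJob is stable there since Kubernetes 1.21); the name `hello-tuned` and all numeric values are hypothetical and chosen only to show where each field goes.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello-tuned                    # hypothetical name
spec:
  schedule: "*/5 * * * *"              # every 5 minutes
  startingDeadlineSeconds: 60          # a run that cannot start within 60s of its slot counts as missed
  concurrencyPolicy: Forbid            # skip a run while the previous one is still active
  suspend: false
  successfulJobsHistoryLimit: 3
  failedJobsHistoryLimit: 1
  jobTemplate:
    spec:
      completions: 2                   # the Job is done after 2 Pods succeed
      parallelism: 1                   # run one Pod at a time
      activeDeadlineSeconds: 120       # terminate the Job if it runs longer than 120s
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: hello
            image: hub.dianchou.com/library/myapp:v1
            command: ["/bin/sh", "-c", "date; echo Hello from the kubernetes cluster"]
```

Job-level fields (`completions`, `parallelism`, `activeDeadlineSeconds`) sit under `jobTemplate.spec`, while the scheduling fields sit directly under the CronJob's `spec`.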

[root@k8s-master01 k8s]# cat cronjob.yaml

apiVersion: batch/v1beta1 # removed in Kubernetes 1.25; use batch/v1 on 1.21 and later

kind: CronJob

metadata:

  name: hello

spec:

  schedule: "*/1 * * * *"

  jobTemplate:

    spec:

      template:

        spec:

          containers:

          - name: hello

            image: hub.dianchou.com/library/myapp:v1

            args:

            - /bin/sh

            - -c

            - date; echo Hello from the kubernetes cluster

          restartPolicy: OnFailure

[root@k8s-master01 k8s]# kubectl create -f cronjob.yaml

cronjob.batch/hello created

[root@k8s-master01 k8s]# kubectl get cronjob

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

hello */1 * * * * False 0 <none> 8s

[root@k8s-master01 k8s]# kubectl get job

NAME COMPLETIONS DURATION AGE

hello-1580729040 1/1 1s 2m51s

hello-1580729100 1/1 1s 111s

hello-1580729160 1/1 2s 51s

[root@k8s-master01 k8s]# kubectl get pod # a new Pod is created every minute to run the scheduled task

NAME READY STATUS RESTARTS AGE

hello-1580729040-5j8wd 0/1 Completed 0 2m57s

hello-1580729100-6v89q 0/1 Completed 0 117s

hello-1580729160-8pft8 0/1 Completed 0 57s

[root@k8s-master01 k8s]# kubectl logs hello-1580729100-6v89q

Mon Feb 3 11:25:03 UTC 2020

Hello from the kubernetes cluster

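# Note: on the version used here, deleting a CronJob does not automatically delete the Jobs it created; remove them with kubectl delete job (newer versions clean up owned Jobs through cascading deletion)

$ kubectl delete cronjob hello

2.6 StatefulSet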

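As a controller, a StatefulSet gives each Pod a unique identity and guarantees the order of deployment and scaling.

StatefulSet was designed for stateful services (Deployments and ReplicaSets, by contrast, are designed for stateless services). Its use cases include:

1) Stable persistent storage: after a Pod is rescheduled it can still reach the same persistent data; implemented with PVCs

2) Stable network identity: after a Pod is rescheduled its PodName and HostName stay the same; implemented with a Headless Service (a Service without a Cluster IP)

3) Ordered deployment and ordered scaling: Pods are ordered, and deployment or scale-up proceeds in that order (from 0 to N-1; all preceding Pods must be Running and Ready before the next Pod starts). The StatefulSet controller enforces this through its default OrderedReady pod management policy

4) Ordered scale-down and ordered deletion (from N-1 to 0)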
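The points above map onto a manifest roughly as follows. This is a sketch under assumptions: the names `web` and `web-headless`, the mount path `/data`, and the 1Gi request are hypothetical, and the volume claims presume the cluster can provision storage (for example through a default StorageClass).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web-headless            # hypothetical name
spec:
  clusterIP: None               # headless: no cluster IP, DNS resolves to the Pods
  selector:
    app: web
  ports:
  - port: 80
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: web-headless     # ties Pod DNS names to the headless Service
  replicas: 3                   # Pods are created in order: web-0, web-1, web-2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: myapp
        image: hub.dianchou.com/library/myapp:v1
        ports:
        - containerPort: 80
        volumeMounts:
        - name: data
          mountPath: /data      # hypothetical mount path
  volumeClaimTemplates:         # one PVC per Pod, reattached after rescheduling
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
```

Each Pod keeps its ordinal name and its own claim (`data-web-0`, `data-web-1`, `data-web-2`), which is what gives it a stable identity and stable storage across rescheduling.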

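2.7 Horizontal Pod Autoscaling (HPA)

An application's resource usage usually has peaks and troughs. How can the peaks be shaved and the troughs filled, raising overall cluster utilization by letting the number of Pods behind a Service adjust automatically? That is the job of Horizontal Pod Autoscaling: as the name suggests, it scales Pods horizontally and automatically.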
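A declarative counterpart to the `kubectl autoscale` command from section 2.2 might look like the sketch below. It assumes the `autoscaling/v2` API (stable since Kubernetes 1.23), a metrics source such as metrics-server running in the cluster, and CPU requests set on the target's containers; without those, utilization cannot be computed.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-deployment
spec:
  scaleTargetRef:               # the workload whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment
  minReplicas: 10
  maxReplicas: 15
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 80  # target average CPU usage, as a percentage of requests
```

The bounds and the 80% target mirror the flags used with `kubectl autoscale` earlier in this article.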
