Installing Kafka (kafka_2.13-3.2.1) on openEuler

I. Installing Kafka (kafka_2.13-3.2.1) on openEuler


II. Prerequisites

1 Install the JDK

Kafka needs both the JDK and ZooKeeper installed first. For installing the JDK, see: Installing jdk-8u333 on CentOS 7.9

2 Download and install ZooKeeper

For installing ZooKeeper on openEuler, see: Installing zookeeper-3.7.1 on openEuler

III. Kafka

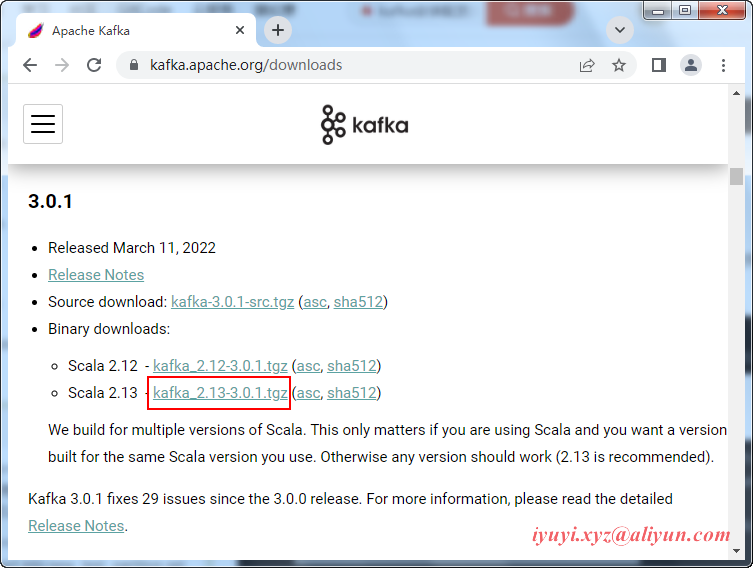

1 Go to the Apache Kafka downloads page http://kafka.apache.org/downloads.html, pick a release under Binary downloads, and download it

2 Download with wget

mkdir -p /opt/software && cd /opt/software

wget https://archive.apache.org/dist/kafka/3.2.1/kafka_2.13-3.2.1.tgz

3 Extract

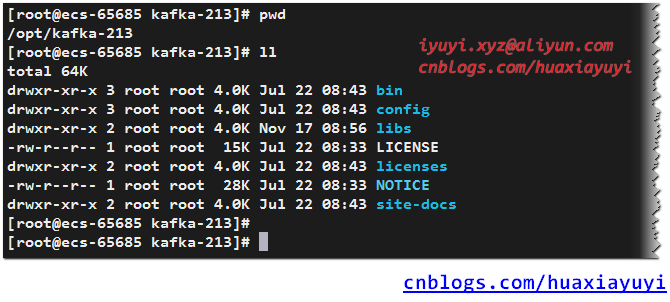

tar -zxvf /opt/software/kafka_2.13-3.2.1.tgz -C /opt && cd /opt/kafka_2.13-3.2.1 && ll

mv /opt/kafka_2.13-3.2.1 /opt/kafka-213 && cd /opt/kafka-213

4 Enter the Kafka directory

5 Before starting Kafka, make sure ZooKeeper is running. If it is not, start it:

zkServer.sh start

6 Edit the Kafka configuration file (broker ID, listener, log directory and ZooKeeper address). Open it:

vim /opt/kafka-213/config/server.properties

24 broker.id=0

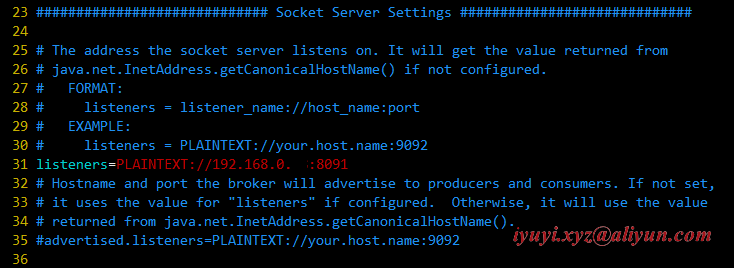

34 listeners=PLAINTEXT://192.168.0...:8091

62 log.dirs=/opt/kafka-213/logs

125 zookeeper.connect=192.168.0...:2181

128 zookeeper.connection.timeout.ms=180007 新建日志储存路径

mkdir -p /opt/kafka-213/logs

8 Start Kafka

/opt/kafka-213/bin/kafka-server-start.sh /opt/kafka-213/config/server.properties

## Start in the background

/opt/kafka-213/bin/kafka-server-start.sh -daemon /opt/kafka-213/config/server.properties
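With -daemon the script returns immediately, so it is not obvious when the broker is actually up. A minimal sketch for scripts: poll the broker log for the "[KafkaServer id=0] started" line that appears in the startup log at the end of this article. The helper name wait_for_kafka is hypothetical, and the log path assumes the default log4j setup, which writes server.log under /opt/kafka-213/logs (the same directory used for log.dirs in this guide). Because server.log is appended across restarts, a "started" line from an earlier run also satisfies the check.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait_for_kafka LOG_FILE [TIMEOUT_SECONDS]
# Polls LOG_FILE once per second and returns 0 as soon as it contains the
# broker's "started" line, or 1 if TIMEOUT_SECONDS (default 60) pass first.
wait_for_kafka() {
  local log_file=$1 timeout=${2:-60} waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # Fixed-string match for e.g. "INFO [KafkaServer id=0] started (kafka.server.KafkaServer)"
    if grep -qF 'started (kafka.server.KafkaServer)' "$log_file" 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}
```

Usage, assuming the default log location: run the daemon start command above, then wait_for_kafka /opt/kafka-213/logs/server.log 60 && echo "Kafka is up".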

IV. Start Kafka automatically at boot

1 Create a systemd unit file in /lib/systemd/system/

cat > /lib/systemd/system/kafka.service << EOF

[Unit]

Description=kafkaservice

After=network.target

[Service]

WorkingDirectory=/opt/kafka-213

ExecStart=/opt/kafka-213/bin/kafka-server-start.sh /opt/kafka-213/config/server.properties

ExecStop=/opt/kafka-213/bin/kafka-server-stop.sh

User=root

Group=root

Restart=always

RestartSec=10

[Install]

WantedBy=multi-user.target

EOF

## Check the file

cat /lib/systemd/system/kafka.service

2 Start the service and enable it at boot

systemctl daemon-reload

systemctl start kafka

systemctl enable kafka

systemctl status kafka

## systemctl stop kafka
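Unlike a login shell, systemd does not read /etc/profile. kafka-run-class.sh falls back to plain java when JAVA_HOME is unset, so if java is only on the PATH through /etc/profile, the service may fail even though the manual start in step 8 works. One way around this is a drop-in that sets JAVA_HOME for the unit. A sketch, assuming the JDK lives in /opt/jdk1.8.0_333 as the java.home entry in the startup log suggests; the helper name write_kafka_java_dropin and the drop-in file name java.conf are hypothetical choices:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write_kafka_java_dropin UNIT_DIR JAVA_HOME
# Writes UNIT_DIR/kafka.service.d/java.conf so that kafka.service runs with JAVA_HOME set.
# UNIT_DIR is normally /etc/systemd/system; it is a parameter so the output
# can be checked in a scratch directory first.
write_kafka_java_dropin() {
  local unit_dir=$1 java_home=$2
  local dropin_dir="$unit_dir/kafka.service.d"
  mkdir -p "$dropin_dir" || return 1
  # Unquoted EOF: $java_home is expanded into the file
  cat > "$dropin_dir/java.conf" << EOF
[Service]
Environment=JAVA_HOME=$java_home
EOF
}
```

Usage: write_kafka_java_dropin /etc/systemd/system /opt/jdk1.8.0_333, then systemctl daemon-reload and systemctl restart kafka. Drop-ins in /etc/systemd/system/kafka.service.d/ also apply to a unit file that lives in /lib/systemd/system.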

V. Testing with Kafka commands

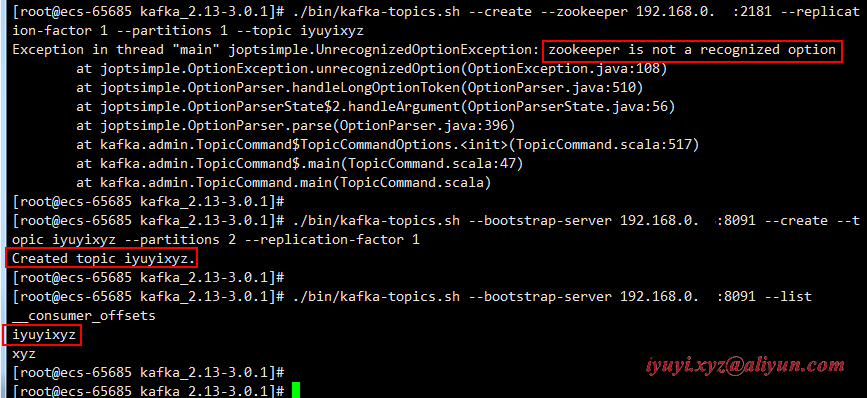

1 Create a topic

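- --create: create a topic

- --partitions: the number of partitions. If it is not given at creation time, the topic gets the num.partitions value from server.properties; you can also set it explicitly

- --replication-factor: the number of replicas of the topic. A topic can have several replicas, each on a different broker in the cluster, so the replication factor cannot exceed the number of brokers; otherwise topic creation fails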

/opt/kafka-213/bin/kafka-topics.sh --bootstrap-server 192.168.0...:8091 --create --topic iyuyixyz --partitions 2 --replication-factor 1

2 List all topics

/opt/kafka-213/bin/kafka-topics.sh --bootstrap-server 192.168.0...:8091 --list

3 Describe all topics

/opt/kafka-213/bin/kafka-topics.sh --bootstrap-server 192.168.0...:8091 --describe

4 Describe a specific topic

[root@ecs-65685 ~]# /opt/kafka-213/bin/kafka-topics.sh --bootstrap-server 192.168.0...:8091 --describe --topic iyuyixyz

Topic: iyuyixyz TopicId: l_5Jn-ShRsuI4_69nRycRQ PartitionCount: 2 ReplicationFactor: 1 Configs: segment.bytes=1073741824

Topic: iyuyixyz Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: iyuyixyz Partition: 1 Leader: 0 Replicas: 0 Isr: 0

5 Producer (sends messages)

/opt/kafka-213/bin/kafka-console-producer.sh --bootstrap-server 192.168.0...:8091 --topic iyuyixyz

6 Consumer (receives messages)

/opt/kafka-213/bin/kafka-console-consumer.sh --bootstrap-server 192.168.0...:8091 --topic iyuyixyz
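When these checks are scripted, fields of the --describe output from step 4 can be pulled out with awk. A minimal sketch, assuming the one-line-per-partition layout shown above (Kafka 3.2.1); describe_field and under_replicated_partitions are hypothetical helper names. The second helper lists partitions whose ISR is shorter than the replica list, which ties back to the replication-factor note in step 1.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for parsing "kafka-topics.sh --describe" output.

# describe_field FIELD: read describe output on stdin and print the value that
# follows the first "FIELD:" token, e.g. PartitionCount -> 2.
describe_field() {
  awk -v key="$1:" '
    {
      for (i = 1; i < NF; i++) {
        if ($i == key) { print $(i + 1); exit }
      }
    }'
}

# under_replicated_partitions: print the number of every partition whose
# Isr list has fewer entries than its Replicas list.
under_replicated_partitions() {
  awk '
    /Partition:/ {
      p = ""; r = ""; s = ""
      for (i = 1; i < NF; i++) {
        if ($i == "Partition:") p = $(i + 1)
        else if ($i == "Replicas:") r = $(i + 1)
        else if ($i == "Isr:") s = $(i + 1)
      }
      if (split(s, isr, ",") < split(r, replicas, ",")) print p
    }'
}
```

Usage: pipe the step 4 command into describe_field PartitionCount to get 2 for the topic above; under_replicated_partitions prints nothing for it because every partition has Replicas: 0 and Isr: 0.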

VI. Configure system environment variables

vim /etc/profile

export PATH=$PATH:/opt/kafka-213/bin

## Apply the change to the current shell

source /etc/profile
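Editing /etc/profile by hand is easy to repeat by accident when the setup is rerun, leaving duplicate PATH entries. A sketch of an idempotent alternative that appends the export line only if it is not already there; append_once is a hypothetical helper, and keeping the line in a separate file such as /etc/profile.d/kafka.sh (which /etc/profile normally sources on openEuler and other RHEL-style systems) is an assumption, not the author's method.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append_once FILE LINE
# Appends LINE to FILE (creating FILE if needed) unless an identical line is already present.
append_once() {
  local file=$1 line=$2
  # -x: match the whole line; -F: treat LINE as a fixed string, so "$PATH" is not a pattern
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

Usage: append_once /etc/profile.d/kafka.sh 'export PATH=$PATH:/opt/kafka-213/bin'. The single quotes keep $PATH literal in the file, so it is expanded at login rather than when the line is written.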

X. Success in One Go

1 Startup log


[root@ecs-65685 ~]# /opt/kafka-213/bin/kafka-server-start.sh config/server.properties

[2022-11-17 09:19:41,363] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)

[2022-11-17 09:19:41,644] INFO Setting -D jdk.tls.rejectClientInitiatedRenegotiation=true to disable client-initiated TLS renegotiation (org.apache.zookeeper.common.X509Util)

[2022-11-17 09:19:41,730] INFO Registered signal handlers for TERM, INT, HUP (org.apache.kafka.common.utils.LoggingSignalHandler)

[2022-11-17 09:19:41,733] INFO starting (kafka.server.KafkaServer)

[2022-11-17 09:19:41,733] INFO Connecting to zookeeper on 192.168.0...:2181 (kafka.server.KafkaServer)

[2022-11-17 09:19:41,745] INFO [ZooKeeperClient Kafka server] Initializing a new session to 192.168.0...:2181. (kafka.zookeeper.ZooKeeperClient)

[2022-11-17 09:19:41,750] INFO Client environment:zookeeper.version=3.6.3--6401e4ad2087061bc6b9f80dec2d69f2e3c8660a, built on 04/08/2021 16:35 GMT (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,750] INFO Client environment:host.name=localhost (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,750] INFO Client environment:java.version=1.8.0_333 (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:java.vendor=Oracle Corporation (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:java.home=/opt/jdk1.8.0_333/jre (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:java.class.path=/opt/jdk1.8.0_333/lib/tools.jar:/opt/jdk1.8.0_333/lib/dt.jar:/opt/jdk1.8.0_333/lib:/opt/kafka-213/bin/../libs/activation-1.1.1.jar:/opt/kafka-213/bin/../libs/aopalliance-repackaged-2.6.1.jar:/opt/kafka-213/bin/../libs/argparse4j-0.7.0.jar:/opt/kafka-213/bin/../libs/audience-annotations-0.5.0.jar:/opt/kafka-213/bin/../libs/commons-cli-1.4.jar:/opt/kafka-213/bin/../libs/commons-lang3-3.8.1.jar:/opt/kafka-213/bin/../libs/connect-api-3.2.1.jar:/opt/kafka-213/bin/../libs/connect-basic-auth-extension-3.2.1.jar:/opt/kafka-213/bin/../libs/connect-json-3.2.1.jar:/opt/kafka-213/bin/../libs/connect-mirror-3.2.1.jar:/opt/kafka-213/bin/../libs/connect-mirror-client-3.2.1.jar:/opt/kafka-213/bin/../libs/connect-runtime-3.2.1.jar:/opt/kafka-213/bin/../libs/connect-transforms-3.2.1.jar:/opt/kafka-213/bin/../libs/hk2-api-2.6.1.jar:/opt/kafka-213/bin/../libs/hk2-locator-2.6.1.jar:/opt/kafka-213/bin/../libs/hk2-utils-2.6.1.jar:/opt/kafka-213/bin/../libs/jackson-annotations-2.12.6.jar:/opt/kafka-213/bin/../libs/jackson-core-2.12.6.jar:/opt/kafka-213/bin/../libs/jackson-databind-2.12.6.1.jar:/opt/kafka-213/bin/../libs/jackson-dataformat-csv-2.12.6.jar:/opt/kafka-213/bin/../libs/jackson-datatype-jdk8-2.12.6.jar:/opt/kafka-213/bin/../libs/jackson-jaxrs-base-2.12.6.jar:/opt/kafka-213/bin/../libs/jackson-jaxrs-json-provider-2.12.6.jar:/opt/kafka-213/bin/../libs/jackson-module-jaxb-annotations-2.12.6.jar:/opt/kafka-213/bin/../libs/jackson-module-scala_2.13-2.12.6.jar:/opt/kafka-213/bin/../libs/jakarta.activation-api-1.2.1.jar:/opt/kafka-213/bin/../libs/jakarta.annotation-api-1.3.5.jar:/opt/kafka-213/bin/../libs/jakarta.inject-2.6.1.jar:/opt/kafka-213/bin/../libs/jakarta.validation-api-2.0.2.jar:/opt/kafka-213/bin/../libs/jakarta.ws.rs-api-2.1.6.jar:/opt/kafka-213/bin/../libs/jakarta.xml.bind-api-2.3.2.jar:/opt/kafka-213/bin/../libs/javassist-3.27.0-GA.jar:/opt/kafka-213/bin/../libs/javax.servlet-api-3.1.0.jar:/opt/kafka-213/bin
/../libs/javax.ws.rs-api-2.1.1.jar:/opt/kafka-213/bin/../libs/jaxb-api-2.3.0.jar:/opt/kafka-213/bin/../libs/jersey-client-2.34.jar:/opt/kafka-213/bin/../libs/jersey-common-2.34.jar:/opt/kafka-213/bin/../libs/jersey-container-servlet-2.34.jar:/opt/kafka-213/bin/../libs/jersey-container-servlet-core-2.34.jar:/opt/kafka-213/bin/../libs/jersey-hk2-2.34.jar:/opt/kafka-213/bin/../libs/jersey-server-2.34.jar:/opt/kafka-213/bin/../libs/jetty-client-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jetty-continuation-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jetty-http-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jetty-io-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jetty-security-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jetty-server-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jetty-servlet-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jetty-servlets-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jetty-util-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jetty-util-ajax-9.4.44.v20210927.jar:/opt/kafka-213/bin/../libs/jline-3.21.0.jar:/opt/kafka-213/bin/../libs/jopt-simple-5.0.4.jar:/opt/kafka-213/bin/../libs/jose4j-0.7.9.jar:/opt/kafka-213/bin/../libs/kafka_2.13-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-clients-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-log4j-appender-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-metadata-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-raft-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-server-common-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-shell-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-storage-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-storage-api-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-streams-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-streams-examples-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-streams-scala_2.13-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-streams-test-utils-3.2.1.jar:/opt/kafka-213/bin/../libs/kafka-tools-3.2.1.jar:/opt/kafka-213/bin/../libs/lz4-java-1.8.0.jar:/opt/kafka-213/bin/../libs/maven-artifact-3
.8.4.jar:/opt/kafka-213/bin/../libs/metrics-core-2.2.0.jar:/opt/kafka-213/bin/../libs/metrics-core-4.1.12.1.jar:/opt/kafka-213/bin/../libs/netty-buffer-4.1.73.Final.jar:/opt/kafka-213/bin/../libs/netty-codec-4.1.73.Final.jar:/opt/kafka-213/bin/../libs/netty-common-4.1.73.Final.jar:/opt/kafka-213/bin/../libs/netty-handler-4.1.73.Final.jar:/opt/kafka-213/bin/../libs/netty-resolver-4.1.73.Final.jar:/opt/kafka-213/bin/../libs/netty-tcnative-classes-2.0.46.Final.jar:/opt/kafka-213/bin/../libs/netty-transport-4.1.73.Final.jar:/opt/kafka-213/bin/../libs/netty-transport-classes-epoll-4.1.73.Final.jar:/opt/kafka-213/bin/../libs/netty-transport-native-epoll-4.1.73.Final.jar:/opt/kafka-213/bin/../libs/netty-transport-native-unix-common-4.1.73.Final.jar:/opt/kafka-213/bin/../libs/osgi-resource-locator-1.0.3.jar:/opt/kafka-213/bin/../libs/paranamer-2.8.jar:/opt/kafka-213/bin/../libs/plexus-utils-3.3.0.jar:/opt/kafka-213/bin/../libs/reflections-0.9.12.jar:/opt/kafka-213/bin/../libs/reload4j-1.2.19.jar:/opt/kafka-213/bin/../libs/rocksdbjni-6.29.4.1.jar:/opt/kafka-213/bin/../libs/scala-collection-compat_2.13-2.6.0.jar:/opt/kafka-213/bin/../libs/scala-java8-compat_2.13-1.0.2.jar:/opt/kafka-213/bin/../libs/scala-library-2.13.8.jar:/opt/kafka-213/bin/../libs/scala-logging_2.13-3.9.4.jar:/opt/kafka-213/bin/../libs/scala-reflect-2.13.8.jar:/opt/kafka-213/bin/../libs/slf4j-api-1.7.36.jar:/opt/kafka-213/bin/../libs/slf4j-reload4j-1.7.36.jar:/opt/kafka-213/bin/../libs/snappy-java-1.1.8.4.jar:/opt/kafka-213/bin/../libs/trogdor-3.2.1.jar:/opt/kafka-213/bin/../libs/zookeeper-3.6.3.jar:/opt/kafka-213/bin/../libs/zookeeper-jute-3.6.3.jar:/opt/kafka-213/bin/../libs/zstd-jni-1.5.2-1.jar (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:java.io.tmpdir=/tmp (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:java.compiler=<NA> (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:os.name=Linux (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:os.arch=amd64 (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:os.version=4.19.90-2003.4.0.0036.oe1.x86_64 (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:user.name=root (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:user.home=/root (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:user.dir=/opt/kafka-213 (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:os.memory.free=1009MB (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:os.memory.max=1024MB (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,751] INFO Client environment:os.memory.total=1024MB (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,754] INFO Initiating client connection, connectString=192.168.0...:2181 sessionTimeout=18000 watcher=kafka.zookeeper.ZooKeeperClient$ZooKeeperClientWatcher$@5e0e82ae (org.apache.zookeeper.ZooKeeper)

[2022-11-17 09:19:41,758] INFO jute.maxbuffer value is 4194304 Bytes (org.apache.zookeeper.ClientCnxnSocket)

[2022-11-17 09:19:41,763] INFO zookeeper.request.timeout value is 0. feature enabled=false (org.apache.zookeeper.ClientCnxn)

[2022-11-17 09:19:41,765] INFO Opening socket connection to server /192.168.0...:2181. (org.apache.zookeeper.ClientCnxn)

[2022-11-17 09:19:41,766] INFO [ZooKeeperClient Kafka server] Waiting until connected. (kafka.zookeeper.ZooKeeperClient)

[2022-11-17 09:19:41,767] INFO Socket connection established, initiating session, client: /192.168.0...:34722, server: /192.168.0...:2181 (org.apache.zookeeper.ClientCnxn)

[2022-11-17 09:19:41,790] INFO Session establishment complete on server /192.168.0...:2181, session id = 0x1000010cc870000, negotiated timeout = 18000 (org.apache.zookeeper.ClientCnxn)

[2022-11-17 09:19:41,793] INFO [ZooKeeperClient Kafka server] Connected. (kafka.zookeeper.ZooKeeperClient)

[2022-11-17 09:19:41,886] INFO [feature-zk-node-event-process-thread]: Starting (kafka.server.FinalizedFeatureChangeListener$ChangeNotificationProcessorThread)

[2022-11-17 09:19:41,896] INFO Feature ZK node at path: /feature does not exist (kafka.server.FinalizedFeatureChangeListener)

[2022-11-17 09:19:41,896] INFO Cleared cache (kafka.server.FinalizedFeatureCache)

[2022-11-17 09:19:42,040] INFO Cluster ID = 2tJIdaFHSWu7j2WCIjfWpg (kafka.server.KafkaServer)

[2022-11-17 09:19:42,043] WARN No meta.properties file under dir /opt/kafka-213/logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)

[2022-11-17 09:19:42,085] INFO KafkaConfig values:

advertised.listeners = null

alter.config.policy.class.name = null

alter.log.dirs.replication.quota.window.num = 11

alter.log.dirs.replication.quota.window.size.seconds = 1

authorizer.class.name =

auto.create.topics.enable = true

auto.leader.rebalance.enable = true

background.threads = 10

broker.heartbeat.interval.ms = 2000

broker.id = 0

broker.id.generation.enable = true

broker.rack = null

broker.session.timeout.ms = 9000

client.quota.callback.class = null

compression.type = producer

connection.failed.authentication.delay.ms = 100

connections.max.idle.ms = 600000

connections.max.reauth.ms = 0

control.plane.listener.name = null

controlled.shutdown.enable = true

controlled.shutdown.max.retries = 3

controlled.shutdown.retry.backoff.ms = 5000

controller.listener.names = null

controller.quorum.append.linger.ms = 25

controller.quorum.election.backoff.max.ms = 1000

controller.quorum.election.timeout.ms = 1000

controller.quorum.fetch.timeout.ms = 2000

controller.quorum.request.timeout.ms = 2000

controller.quorum.retry.backoff.ms = 20

controller.quorum.voters = []

controller.quota.window.num = 11

controller.quota.window.size.seconds = 1

controller.socket.timeout.ms = 30000

create.topic.policy.class.name = null

default.replication.factor = 1

delegation.token.expiry.check.interval.ms = 3600000

delegation.token.expiry.time.ms = 86400000

delegation.token.master.key = null

delegation.token.max.lifetime.ms = 604800000

delegation.token.secret.key = null

delete.records.purgatory.purge.interval.requests = 1

delete.topic.enable = true

fetch.max.bytes = 57671680

fetch.purgatory.purge.interval.requests = 1000

group.initial.rebalance.delay.ms = 0

group.max.session.timeout.ms = 1800000

group.max.size = 2147483647

group.min.session.timeout.ms = 6000

initial.broker.registration.timeout.ms = 60000

inter.broker.listener.name = null

inter.broker.protocol.version = 3.2-IV0

kafka.metrics.polling.interval.secs = 10

kafka.metrics.reporters = []

leader.imbalance.check.interval.seconds = 300

leader.imbalance.per.broker.percentage = 10

listener.security.protocol.map = PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

listeners = PLAINTEXT://192.168.0...:8091

log.cleaner.backoff.ms = 15000

log.cleaner.dedupe.buffer.size = 134217728

log.cleaner.delete.retention.ms = 86400000

log.cleaner.enable = true

log.cleaner.io.buffer.load.factor = 0.9

log.cleaner.io.buffer.size = 524288

log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308

log.cleaner.max.compaction.lag.ms = 9223372036854775807

log.cleaner.min.cleanable.ratio = 0.5

log.cleaner.min.compaction.lag.ms = 0

log.cleaner.threads = 1

log.cleanup.policy = [delete]

log.dir = /tmp/kafka-logs

log.dirs = /opt/kafka-213/logs

log.flush.interval.messages = 9223372036854775807

log.flush.interval.ms = null

log.flush.offset.checkpoint.interval.ms = 60000

log.flush.scheduler.interval.ms = 9223372036854775807

log.flush.start.offset.checkpoint.interval.ms = 60000

log.index.interval.bytes = 4096

log.index.size.max.bytes = 10485760

log.message.downconversion.enable = true

log.message.format.version = 3.0-IV1

log.message.timestamp.difference.max.ms = 9223372036854775807

log.message.timestamp.type = CreateTime

log.preallocate = false

log.retention.bytes = -1

log.retention.check.interval.ms = 300000

log.retention.hours = 168

log.retention.minutes = null

log.retention.ms = null

log.roll.hours = 168

log.roll.jitter.hours = 0

log.roll.jitter.ms = null

log.roll.ms = null

log.segment.bytes = 1073741824

log.segment.delete.delay.ms = 60000

max.connection.creation.rate = 2147483647

max.connections = 2147483647

max.connections.per.ip = 2147483647

max.connections.per.ip.overrides =

max.incremental.fetch.session.cache.slots = 1000

message.max.bytes = 1048588

metadata.log.dir = null

metadata.log.max.record.bytes.between.snapshots = 20971520

metadata.log.segment.bytes = 1073741824

metadata.log.segment.min.bytes = 8388608

metadata.log.segment.ms = 604800000

metadata.max.retention.bytes = -1

metadata.max.retention.ms = 604800000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

min.insync.replicas = 1

node.id = 0

num.io.threads = 8

num.network.threads = 3

num.partitions = 1

num.recovery.threads.per.data.dir = 1

num.replica.alter.log.dirs.threads = null

num.replica.fetchers = 1

offset.metadata.max.bytes = 4096

offsets.commit.required.acks = -1

offsets.commit.timeout.ms = 5000

offsets.load.buffer.size = 5242880

offsets.retention.check.interval.ms = 600000

offsets.retention.minutes = 10080

offsets.topic.compression.codec = 0

offsets.topic.num.partitions = 50

offsets.topic.replication.factor = 1

offsets.topic.segment.bytes = 104857600

password.encoder.cipher.algorithm = AES/CBC/PKCS5Padding

password.encoder.iterations = 4096

password.encoder.key.length = 128

password.encoder.keyfactory.algorithm = null

password.encoder.old.secret = null

password.encoder.secret = null

principal.builder.class = class org.apache.kafka.common.security.authenticator.DefaultKafkaPrincipalBuilder

process.roles = []

producer.purgatory.purge.interval.requests = 1000

queued.max.request.bytes = -1

queued.max.requests = 500

quota.window.num = 11

quota.window.size.seconds = 1

remote.log.index.file.cache.total.size.bytes = 1073741824

remote.log.manager.task.interval.ms = 30000

remote.log.manager.task.retry.backoff.max.ms = 30000

remote.log.manager.task.retry.backoff.ms = 500

remote.log.manager.task.retry.jitter = 0.2

remote.log.manager.thread.pool.size = 10

remote.log.metadata.manager.class.name = null

remote.log.metadata.manager.class.path = null

remote.log.metadata.manager.impl.prefix = null

remote.log.metadata.manager.listener.name = null

remote.log.reader.max.pending.tasks = 100

remote.log.reader.threads = 10

remote.log.storage.manager.class.name = null

remote.log.storage.manager.class.path = null

remote.log.storage.manager.impl.prefix = null

remote.log.storage.system.enable = false

replica.fetch.backoff.ms = 1000

replica.fetch.max.bytes = 1048576

replica.fetch.min.bytes = 1

replica.fetch.response.max.bytes = 10485760

replica.fetch.wait.max.ms = 500

replica.high.watermark.checkpoint.interval.ms = 5000

replica.lag.time.max.ms = 30000

replica.selector.class = null

replica.socket.receive.buffer.bytes = 65536

replica.socket.timeout.ms = 30000

replication.quota.window.num = 11

replication.quota.window.size.seconds = 1

request.timeout.ms = 30000

reserved.broker.max.id = 1000

sasl.client.callback.handler.class = null

sasl.enabled.mechanisms = [GSSAPI]

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.principal.to.local.rules = [DEFAULT]

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.connect.timeout.ms = null

sasl.login.read.timeout.ms = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.login.retry.backoff.max.ms = 10000

sasl.login.retry.backoff.ms = 100

sasl.mechanism.controller.protocol = GSSAPI

sasl.mechanism.inter.broker.protocol = GSSAPI

sasl.oauthbearer.clock.skew.seconds = 30

sasl.oauthbearer.expected.audience = null

sasl.oauthbearer.expected.issuer = null

sasl.oauthbearer.jwks.endpoint.refresh.ms = 3600000

sasl.oauthbearer.jwks.endpoint.retry.backoff.max.ms = 10000

sasl.oauthbearer.jwks.endpoint.retry.backoff.ms = 100

sasl.oauthbearer.jwks.endpoint.url = null

sasl.oauthbearer.scope.claim.name = scope

sasl.oauthbearer.sub.claim.name = sub

sasl.oauthbearer.token.endpoint.url = null

sasl.server.callback.handler.class = null

security.inter.broker.protocol = PLAINTEXT

security.providers = null

socket.connection.setup.timeout.max.ms = 30000

socket.connection.setup.timeout.ms = 10000

socket.listen.backlog.size = 50

socket.receive.buffer.bytes = 102400

socket.request.max.bytes = 104857600

socket.send.buffer.bytes = 102400

ssl.cipher.suites = []

ssl.client.auth = none

ssl.enabled.protocols = [TLSv1.2]

ssl.endpoint.identification.algorithm = https

ssl.engine.factory.class = null

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.certificate.chain = null

ssl.keystore.key = null

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.principal.mapping.rules = DEFAULT

ssl.protocol = TLSv1.2

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.certificates = null

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.abort.timed.out.transaction.cleanup.interval.ms = 10000

transaction.max.timeout.ms = 900000

transaction.remove.expired.transaction.cleanup.interval.ms = 3600000

transaction.state.log.load.buffer.size = 5242880

transaction.state.log.min.isr = 1

transaction.state.log.num.partitions = 50

transaction.state.log.replication.factor = 1

transaction.state.log.segment.bytes = 104857600

transactional.id.expiration.ms = 604800000

unclean.leader.election.enable = false

zookeeper.clientCnxnSocket = null

zookeeper.connect = 192.168.0...:2181

zookeeper.connection.timeout.ms = 18000

zookeeper.max.in.flight.requests = 10

zookeeper.session.timeout.ms = 18000

zookeeper.set.acl = false

zookeeper.ssl.cipher.suites = null

zookeeper.ssl.client.enable = false

zookeeper.ssl.crl.enable = false

zookeeper.ssl.enabled.protocols = null

zookeeper.ssl.endpoint.identification.algorithm = HTTPS

zookeeper.ssl.keystore.location = null

zookeeper.ssl.keystore.password = null

zookeeper.ssl.keystore.type = null

zookeeper.ssl.ocsp.enable = false

zookeeper.ssl.protocol = TLSv1.2

zookeeper.ssl.truststore.location = null

zookeeper.ssl.truststore.password = null

zookeeper.ssl.truststore.type = null

(kafka.server.KafkaConfig)

[2022-11-17 09:19:42,118] INFO [ThrottledChannelReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2022-11-17 09:19:42,119] INFO [ThrottledChannelReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2022-11-17 09:19:42,119] INFO [ThrottledChannelReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2022-11-17 09:19:42,120] INFO [ThrottledChannelReaper-ControllerMutation]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2022-11-17 09:19:42,150] INFO Loading logs from log dirs ArraySeq(/opt/kafka-213/logs) (kafka.log.LogManager)

[2022-11-17 09:19:42,152] INFO Attempting recovery for all logs in /opt/kafka-213/logs since no clean shutdown file was found (kafka.log.LogManager)

[2022-11-17 09:19:42,156] INFO Loaded 0 logs in 6ms. (kafka.log.LogManager)

[2022-11-17 09:19:42,157] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2022-11-17 09:19:42,159] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2022-11-17 09:19:42,436] INFO [BrokerToControllerChannelManager broker=0 name=forwarding]: Starting (kafka.server.BrokerToControllerRequestThread)

[2022-11-17 09:19:42,564] INFO Updated connection-accept-rate max connection creation rate to 2147483647 (kafka.network.ConnectionQuotas)

[2022-11-17 09:19:42,566] INFO Awaiting socket connections on 192.168.0...:8091. (kafka.network.DataPlaneAcceptor)

[2022-11-17 09:19:42,588] INFO [SocketServer listenerType=ZK_BROKER, nodeId=0] Created data-plane acceptor and processors for endpoint : ListenerName(PLAINTEXT) (kafka.network.SocketServer)

[2022-11-17 09:19:42,595] INFO [BrokerToControllerChannelManager broker=0 name=alterPartition]: Starting (kafka.server.BrokerToControllerRequestThread)

[2022-11-17 09:19:42,614] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-11-17 09:19:42,615] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-11-17 09:19:42,616] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-11-17 09:19:42,617] INFO [ExpirationReaper-0-ElectLeader]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-11-17 09:19:42,628] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2022-11-17 09:19:42,651] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)

[2022-11-17 09:19:42,669] INFO Stat of the created znode at /brokers/ids/0 is: 27,27,1668647982662,1668647982662,1,0,0,72057666188804096,208,0,27

(kafka.zk.KafkaZkClient)

[2022-11-17 09:19:42,670] INFO Registered broker 0 at path /brokers/ids/0 with addresses: PLAINTEXT://192.168.0...:8091, czxid (broker epoch): 27 (kafka.zk.KafkaZkClient)

[2022-11-17 09:19:42,733] INFO [ExpirationReaper-0-topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-11-17 09:19:42,737] INFO [ExpirationReaper-0-Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-11-17 09:19:42,743] INFO [ExpirationReaper-0-Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-11-17 09:19:42,744] INFO Successfully created /controller_epoch with initial epoch 0 (kafka.zk.KafkaZkClient)

[2022-11-17 09:19:42,753] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.group.GroupCoordinator)

[2022-11-17 09:19:42,756] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.group.GroupCoordinator)

[2022-11-17 09:19:42,759] INFO Feature ZK node created at path: /feature (kafka.server.FinalizedFeatureChangeListener)

[2022-11-17 09:19:42,771] INFO [TransactionCoordinator id=0] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)

[2022-11-17 09:19:42,774] INFO [Transaction Marker Channel Manager 0]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2022-11-17 09:19:42,783] INFO [TransactionCoordinator id=0] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)

[2022-11-17 09:19:42,805] INFO Updated cache from existing <empty> to latest FinalizedFeaturesAndEpoch(features=Features{}, epoch=0). (kafka.server.FinalizedFeatureCache)

[2022-11-17 09:19:42,827] INFO [ExpirationReaper-0-AlterAcls]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-11-17 09:19:42,851] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2022-11-17 09:19:42,888] INFO [SocketServer listenerType=ZK_BROKER, nodeId=0] Starting socket server acceptors and processors (kafka.network.SocketServer)

[2022-11-17 09:19:42,893] INFO [SocketServer listenerType=ZK_BROKER, nodeId=0] Started data-plane acceptor and processor(s) for endpoint : ListenerName(PLAINTEXT) (kafka.network.SocketServer)

[2022-11-17 09:19:42,893] INFO [SocketServer listenerType=ZK_BROKER, nodeId=0] Started socket server acceptors and processors (kafka.network.SocketServer)

[2022-11-17 09:19:42,896] INFO Kafka version: 3.2.1 (org.apache.kafka.common.utils.AppInfoParser)

[2022-11-17 09:19:42,896] INFO Kafka commitId: b172a0a94f4ebb9f (org.apache.kafka.common.utils.AppInfoParser)

[2022-11-17 09:19:42,896] INFO Kafka startTimeMs: 1668647982894 (org.apache.kafka.common.utils.AppInfoParser)

[2022-11-17 09:19:42,897] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)

[2022-11-17 09:19:42,952] INFO [BrokerToControllerChannelManager broker=0 name=forwarding]: Recorded new controller, from now on will use broker 192.168.0...:8091 (id: 0 rack: null) (kafka.server.BrokerToControllerRequestThread)

[2022-11-17 09:19:43,001] INFO [BrokerToControllerChannelManager broker=0 name=alterPartition]: Recorded new controller, from now on will use broker 192.168.0...:8091 (id: 0 rack: null) (kafka.server.BrokerToControllerRequestThread)

Y. Error messages

1 zookeeper is not a recognized option

Newer versions no longer support the --zookeeper option (it was removed from kafka-topics.sh in Kafka 3.0); use --bootstrap-server instead

./bin/kafka-topics.sh --create --zookeeper 192.168.0...:2181 --replication-factor 1 --partitions 1 --topic iyuyixyz

Newer Kafka versions no longer go through ZooKeeper to create topics. The create command in the new versions is:

./bin/kafka-topics.sh --bootstrap-server 192.168.0...:8091 --create --topic iyuyixyz --partitions 2 --replication-factor 1
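If topic creation is part of a setup script that may run more than once, the --if-not-exists flag of kafka-topics.sh makes a rerun skip creation instead of failing because the topic already exists. A sketch wrapping the command above; create_topic and the KAFKA_TOPICS override variable are hypothetical (the variable only exists so the wrapper can be dry-run with echo).

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: create_topic BOOTSTRAP TOPIC PARTITIONS REPLICATION_FACTOR
# Set KAFKA_TOPICS to another command (e.g. echo) to print the call instead of running it.
create_topic() {
  local bootstrap=$1 topic=$2 partitions=$3 rf=$4
  # --if-not-exists: skip creation instead of failing when the topic already exists
  "${KAFKA_TOPICS:-/opt/kafka-213/bin/kafka-topics.sh}" \
    --bootstrap-server "$bootstrap" \
    --create --if-not-exists \
    --topic "$topic" \
    --partitions "$partitions" \
    --replication-factor "$rf"
}
```

Usage: create_topic 192.168.0...:8091 iyuyixyz 2 1 (with the elided address filled in for your broker). The replication factor still must not exceed the number of brokers.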

2 Node response timeout (request timeout)

[2022-10-13 01:11:34,670] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (/192.168.0...:8092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2022-10-13 01:11:37,676] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (/192.168.0...:8092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

[2022-10-13 01:11:40,682] WARN [AdminClient clientId=adminclient-1] Connection to node -1 (/192.168.0...:8092) could not be established. Broker may not be available. (org.apache.kafka.clients.NetworkClient)

Error while executing topic command : Timed out waiting for a node assignment. Call: createTopics

[2022-10-13 01:11:43,600] ERROR org.apache.kafka.common.errors.TimeoutException: Timed out waiting for a node assignment. Call: createTopics

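(kafka.admin.TopicCommand$)

This error means the client never reached a broker: either the command does not match the Kafka version in use, or it points at the wrong hostname (or IP) and port, so the request fails and times out. In the log above, for instance, the client is connecting to port 8092.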

createTopics, listTopics and other calls can all fail with this error. It also appears if listeners is not set in server.properties.

The default listeners value is PLAINTEXT://:9092 (commented out in the stock file); change it to PLAINTEXT://localhost:9092, PLAINTEXT://IP:9092, or similar.
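Since this timeout usually comes down to a host/port mismatch (8092 in the log above versus 8091 in server.properties), one option is to derive the --bootstrap-server value from the broker's own listeners setting instead of typing it. A sketch, assuming a single-broker setup where the first listener is the one clients use; bootstrap_from_listeners is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: bootstrap_from_listeners PROPERTIES_FILE
# Prints host:port of the first listener on the last uncommented "listeners=" line,
# e.g. "listeners=PLAINTEXT://10.0.0.5:8091" -> "10.0.0.5:8091".
# Returns 1 if listeners is not set (for example, still commented out).
bootstrap_from_listeners() {
  local props=$1 value
  # The leading anchor skips "#listeners=..." and "advertised.listeners=..."
  value=$(grep -E '^[[:space:]]*listeners[[:space:]]*=' "$props" | tail -n 1 | cut -d= -f2-)
  value=$(printf '%s' "$value" | tr -d '[:space:]')
  value=${value%%,*}      # keep only the first listener of a comma-separated list
  value=${value#*://}     # strip the listener name, e.g. "PLAINTEXT://"
  [ -n "$value" ] || return 1
  case $value in
    # Empty host (":9092"): assume localhost reaches it from the broker host itself
    :*) value="localhost$value" ;;
  esac
  printf '%s\n' "$value"
}
```

Usage: /opt/kafka-213/bin/kafka-topics.sh --bootstrap-server "$(bootstrap_from_listeners /opt/kafka-213/config/server.properties)" --list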
