One-click deployment of the SkyWalking distributed tracing framework with docker-compose

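Once your application is dockerized, you run into all sorts of problems. For example, writing logs to local files is no longer convenient: you can mount the log directory on the host, but as soon as you use --scale, logging starts to misbehave, so the better approach is centralized logging. Another problem: when a request failed inside a single process, you could find the call stack in the logs. After dockerization, what used to live in one process gets split into several microservices, and at that point you really want distributed call-chain tracing, similar to the SvcTraceViewer tool in WCF.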

I. Setting up SkyWalking

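The GitHub repository is https://github.com/apache/incubator-skywalking. From the documentation, the system has roughly three parts: storage, the collector, and the probes (agents). For storage I use the recommended Elasticsearch. The collector is deployed alongside ES, and the probes have a separate implementation for each language. Altogether there are three Docker containers here: es, kibana, and skywalking, which would be tedious to manage without a container orchestration tool.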

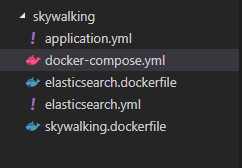

Below is the directory layout used for this setup:

1. elasticsearch.yml

The ES configuration file. There is one pitfall here: network.publish_host must be set to 0.0.0.0, otherwise SkyWalking cannot connect to port 9300.

    network.publish_host: 0.0.0.0
    transport.tcp.port: 9300
    network.host: 0.0.0.0
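
To confirm that the transport port is actually reachable before blaming SkyWalking, a plain TCP probe is usually enough. The following is a minimal Python sketch, not part of the original setup; the function name `port_open` and the default timeout are assumptions chosen for illustration.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False
```

Run from inside another container on the same compose network, `port_open("elasticsearch", 9300)` would be the relevant call; from the host the name `elasticsearch` does not resolve unless you map it yourself.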

2. elasticsearch.dockerfile

When the image is built during `up`, this ES configuration file is copied into the container's config directory.

    FROM elasticsearch:5.6.4
    EXPOSE 9200 9300
    COPY elasticsearch.yml /usr/share/elasticsearch/config/

3. application.yml

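The SkyWalking configuration file. There is a pitfall here too: in the ES connection settings, clusterName must be changed to match the cluster name of the ES instance, otherwise the connection fails. The containers are connected with links, so the ES address can simply be the host name elasticsearch; set the other host addresses to 0.0.0.0.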

    # Licensed to the Apache Software Foundation (ASF) under one
    # or more contributor license agreements.  See the NOTICE file
    # distributed with this work for additional information
    # regarding copyright ownership.  The ASF licenses this file
    # to you under the Apache License, Version 2.0 (the
    # "License"); you may not use this file except in compliance
    # with the License.  You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    #cluster:
    #  zookeeper:
    #    hostPort: localhost:2181
    #    sessionTimeout: 100000
    naming:
      jetty:
        host: 0.0.0.0
        port: 10800
        contextPath: /
    cache:
    #  guava:
      caffeine:
    remote:
      gRPC:
        host: 0.0.0.0
        port: 11800
    agent_gRPC:
      gRPC:
        host: 0.0.0.0
        port: 11800
        #Set these two setting to open ssl
        #sslCertChainFile: $path
        #sslPrivateKeyFile: $path

        #Set your own token to active auth
        #authentication: xxxxxx
    agent_jetty:
      jetty:
        host: 0.0.0.0
        port: 12800
        contextPath: /
    analysis_register:
      default:
    analysis_jvm:
      default:
    analysis_segment_parser:
      default:
        bufferFilePath: ../buffer/
        bufferOffsetMaxFileSize: 10M
        bufferSegmentMaxFileSize: 500M
        bufferFileCleanWhenRestart: true
    ui:
      jetty:
        host: 0.0.0.0
        port: 12800
        contextPath: /
    storage:
      elasticsearch:
        clusterName: elasticsearch
        clusterTransportSniffer: true
        clusterNodes: elasticsearch:9300
        indexShardsNumber: 2
        indexReplicasNumber: 0
        highPerformanceMode: true
        ttl: 7
    #storage:
    #  h2:
    #    url: jdbc:h2:~/memorydb
    #    userName: sa
    configuration:
      default:
    #     namespace: xxxxx
    # alarm threshold
        applicationApdexThreshold: 2000
        serviceErrorRateThreshold: 10.00
        serviceAverageResponseTimeThreshold: 2000
        instanceErrorRateThreshold: 10.00
        instanceAverageResponseTimeThreshold: 2000
        applicationErrorRateThreshold: 10.00
        applicationAverageResponseTimeThreshold: 2000
    # thermodynamic
        thermodynamicResponseTimeStep: 50
        thermodynamicCountOfResponseTimeSteps: 40
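
Since a clusterName mismatch only shows up as a failed connection at runtime, it can help to compare the two config files before building. The sketch below is a dependency-free Python illustration, not something from the SkyWalking project: the function names are hypothetical, a line-based regex stands in for a real YAML parser, and it assumes that Elasticsearch falls back to the cluster name `elasticsearch` when `cluster.name` is not set (as is the case for the elasticsearch.yml above).

```python
import re
from typing import Optional


def storage_cluster_name(application_yml: str) -> Optional[str]:
    """Extract the first uncommented clusterName value from application.yml text."""
    match = re.search(r"^\s*clusterName:\s*(\S+)", application_yml, re.MULTILINE)
    return match.group(1) if match else None


def es_cluster_name(elasticsearch_yml: str, default: str = "elasticsearch") -> str:
    """Extract cluster.name from elasticsearch.yml text, or return the assumed default."""
    match = re.search(r"^\s*cluster\.name:\s*(\S+)", elasticsearch_yml, re.MULTILINE)
    return match.group(1) if match else default


def names_match(application_yml: str, elasticsearch_yml: str) -> bool:
    """True when SkyWalking's clusterName equals the Elasticsearch cluster name."""
    return storage_cluster_name(application_yml) == es_cluster_name(elasticsearch_yml)
```

In practice you would read both files with `open(...).read()` and fail the build script early when `names_match` returns False.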

4. skywalking.dockerfile

Next comes downloading and installing SkyWalking, scripted as a Dockerfile.

    FROM centos:7

    LABEL username="hxc@qq.com"

    WORKDIR /app

    RUN yum install -y wget && \
        yum install -y java-1.8.0-openjdk

    ADD http://mirrors.hust.edu.cn/apache/incubator/skywalking/5.0.0-RC2/apache-skywalking-apm-incubating-5.0.0-RC2.tar.gz /app

    RUN tar -xf apache-skywalking-apm-incubating-5.0.0-RC2.tar.gz && \
        mv apache-skywalking-apm-incubating skywalking

    RUN ls /app

    # copy the config file
    COPY application.yml /app/skywalking/config/application.yml

    WORKDIR /app/skywalking/bin

    USER root

    RUN echo "tail -f /dev/null" >> /app/skywalking/bin/startup.sh

    CMD ["/bin/sh","-c","/app/skywalking/bin/startup.sh" ]

5. docker-compose.yml

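The last step is orchestrating the three containers. Note that because the collector writes its data into ES, the ES data directory must be mounted on a large disk on the host, otherwise you will run out of space.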

    version: '3.1'

    services:

      # elasticsearch image
      elasticsearch:
        build:
          context: .
          dockerfile: elasticsearch.dockerfile
        # ports:
        #   - "9200:9200"
        #   - "9300:9300"
        volumes:
          - "/data/es2:/usr/share/elasticsearch/data"

      # kibana for visual queries, exposes 5601
      kibana:
        image: kibana
        links:
          - elasticsearch
        ports:
          - 5601:5601
        depends_on:
          - "elasticsearch"

      # skywalking
      skywalking:
        build:
          context: .
          dockerfile: skywalking.dockerfile
        ports:
          - "10800:10800"
          - "11800:11800"
          - "12800:12800"
          - "8080:8080"
        links:
          - elasticsearch
        depends_on:
          - "elasticsearch"
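
Because the ES data volume is the part that fills up, a quick free-space check on the host mount point before starting the stack can save a failed run. This is a small Python sketch using only the standard library; the helper names and any threshold you pass in are assumptions for illustration, not values from the original setup.

```python
import shutil


def free_gib(path: str) -> float:
    """Free space, in GiB, on the filesystem that contains `path`."""
    return shutil.disk_usage(path).free / 1024 ** 3


def has_room(path: str, min_free_gib: float) -> bool:
    """True if the filesystem containing `path` has at least `min_free_gib` GiB free."""
    return free_gib(path) >= min_free_gib
```

For the compose file above you might call `has_room("/data", 50)` (the 50 GiB figure is a made-up example); `/data` is used rather than `/data/es2` because the subdirectory may not exist before the first run.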

II. One-click deployment

To deploy with Docker you also need docker-ce and docker-compose installed; you can follow the official installation guides.

1. Installing docker-ce

    sudo yum remove docker \
                    docker-client \
                    docker-client-latest \
                    docker-common \
                    docker-latest \
                    docker-latest-logrotate \
                    docker-logrotate \
                    docker-selinux \
                    docker-engine-selinux \
                    docker-engine

    sudo yum install -y yum-utils \
      device-mapper-persistent-data \
      lvm2

    sudo yum-config-manager \
        --add-repo \
        https://download.docker.com/linux/centos/docker-ce.repo

    sudo yum install docker-ce

Then start the Docker service; the installed version is 18.06.1.

    [root@localhost ~]# service docker start
    Redirecting to /bin/systemctl start docker.service
    [root@localhost ~]# docker -v
    Docker version 18.06.1-ce, build e68fc7a
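
If the installation is scripted, the version line printed by `docker -v` can be checked automatically. A minimal Python sketch, assuming the output keeps the "Docker version X.Y.Z" shape shown above; the function name is illustrative and not part of any Docker tooling.

```python
import re
from typing import Optional, Tuple


def parse_docker_version(output: str) -> Optional[Tuple[int, int, int]]:
    """Parse the (major, minor, patch) numbers out of `docker -v` output."""
    match = re.search(r"Docker version (\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        return None
    # Suffixes such as "-ce" and the build hash are deliberately ignored.
    return tuple(int(part) for part in match.groups())
```

Tuples compare element by element, so a check like `parse_docker_version(line) >= (18, 6, 0)` works as a simple minimum-version gate (the minimum here is just an example).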

2. Installing docker-compose

    sudo curl -L "https://github.com/docker/compose/releases/download/1.22.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
    sudo chmod +x /usr/local/bin/docker-compose
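
The download URL is assembled from the release version plus the output of `uname -s` and `uname -m`. The Python sketch below shows the same construction, which can be handy for seeing exactly which file will be fetched. It assumes that `platform.system()` and `platform.machine()` report the same strings as the two `uname` calls, which holds on typical Linux hosts but is not guaranteed everywhere; the function name is made up for this example.

```python
import platform


def compose_download_url(version: str, system: str = "", machine: str = "") -> str:
    """Build the docker-compose release URL the way the curl command above does."""
    system = system or platform.system()      # counterpart of `uname -s`, e.g. "Linux"
    machine = machine or platform.machine()   # counterpart of `uname -m`, e.g. "x86_64"
    return (
        "https://github.com/docker/compose/releases/download/"
        f"{version}/docker-compose-{system}-{machine}"
    )
```

Passing `system` and `machine` explicitly lets you print the URL for a different target machine than the one you are on.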

3. Finally, run docker-compose up --build on the CentOS host. If you do not want it to occupy the terminal, add -d to run it in the background.

    [root@localhost docker]# docker-compose up --build
    Creating network "docker_default" with the default driver
    Building elasticsearch
    Step 1/3 : FROM elasticsearch:5.6.4
     ---> 7a047c21aa48
    Step 2/3 : EXPOSE 9200 9300
     ---> Using cache
     ---> 8d66bb57b09d
    Step 3/3 : COPY elasticsearch.yml /usr/share/elasticsearch/config/
     ---> Using cache
     ---> 02b516c03b95
    Successfully built 02b516c03b95
    Successfully tagged docker_elasticsearch:latest
    Building skywalking
    Step 1/12 : FROM centos:7
     ---> 5182e96772bf
    Step 2/12 : LABEL username="hxc@qq.com"
     ---> Using cache
     ---> b95b96a92042
    Step 3/12 : WORKDIR /app
     ---> Using cache
     ---> afdf4efe3426
    Step 4/12 : RUN yum install -y wget && yum install -y java-1.8.0-openjdk
     ---> Using cache
     ---> 46be0ca0f7b5
    Step 5/12 : ADD http://mirrors.hust.edu.cn/apache/incubator/skywalking/5.0.0-RC2/apache-skywalking-apm-incubating-5.0.0-RC2.tar.gz /app
     ---> Using cache
     ---> d5c30bcfd5ea
    Step 6/12 : RUN tar -xf apache-skywalking-apm-incubating-5.0.0-RC2.tar.gz && mv apache-skywalking-apm-incubating skywalking
     ---> Using cache
     ---> 1438d08d18fa
    Step 7/12 : RUN ls /app
     ---> Using cache
     ---> b594124672ea
    Step 8/12 : COPY application.yml /app/skywalking/config/application.yml
     ---> Using cache
     ---> 10eaf0805a65
    Step 9/12 : WORKDIR /app/skywalking/bin
     ---> Using cache
     ---> bc0f02291536
    Step 10/12 : USER root
     ---> Using cache
     ---> 4498afca5fe6
    Step 11/12 : RUN echo "tail -f /dev/null" >> /app/skywalking/bin/startup.sh
     ---> Using cache
     ---> 1c4be7c6b32a
    Step 12/12 : CMD ["/bin/sh","-c","/app/skywalking/bin/startup.sh" ]
     ---> Using cache
     ---> ecfc97e4c97d
    Successfully built ecfc97e4c97d
    Successfully tagged docker_skywalking:latest
    Creating docker_elasticsearch_1 ... done
    Creating docker_skywalking_1    ... done
    Creating docker_kibana_1        ... done
    Attaching to docker_elasticsearch_1, docker_kibana_1, docker_skywalking_1
    elasticsearch_1  | [2018-09-17T23:51:47,611][INFO ][o.e.n.Node ] [] initializing ...
elasticsearch_1 | [2018-09-17T23:51:47,729][INFO ][o.e.e.NodeEnvironment ] [FC_bOh1] using [1] data paths, mounts [[/usr/share/elasticsearch/data (/dev/sda3)]], net usable_space [5gb], net total_space [22.1gb], spins? [possibly], types [xfs] elasticsearch_1 | [2018-09-17T23:51:47,730][INFO ][o.e.e.NodeEnvironment ] [FC_bOh1] heap size [1.9gb], compressed ordinary object pointers [true] elasticsearch_1 | [2018-09-17T23:51:47,731][INFO ][o.e.n.Node ] node name [FC_bOh1] derived from node ID [FC_bOh1nS_uW6JKy_46iBg]; set [node.name] to override elasticsearch_1 | [2018-09-17T23:51:47,732][INFO ][o.e.n.Node ] version[5.6.4], pid[1], build[8bbedf5/2017-10-31T18:55:38.105Z], OS[Linux/3.10.0-327.el7.x86_64/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/1.8.0_151/25.151-b12] elasticsearch_1 | [2018-09-17T23:51:47,732][INFO ][o.e.n.Node ] JVM arguments [-Xms2g, -Xmx2g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -Djdk.io.permissionsUseCanonicalPath=true, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Dlog4j.skipJansi=true, -XX:+HeapDumpOnOutOfMemoryError, -Des.path.home=/usr/share/elasticsearch] skywalking_1 | SkyWalking Collector started successfully! 
elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [aggs-matrix-stats] elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [ingest-common] elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [lang-expression] elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [lang-groovy] elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [lang-mustache] elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [lang-painless] elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [parent-join] elasticsearch_1 | [2018-09-17T23:51:49,068][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [percolator] elasticsearch_1 | [2018-09-17T23:51:49,068][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [reindex] elasticsearch_1 | [2018-09-17T23:51:49,069][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [transport-netty3] elasticsearch_1 | [2018-09-17T23:51:49,069][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [transport-netty4] elasticsearch_1 | [2018-09-17T23:51:49,069][INFO ][o.e.p.PluginsService ] [FC_bOh1] no plugins loaded skywalking_1 | SkyWalking Web Application started successfully! elasticsearch_1 | [2018-09-17T23:51:51,950][INFO ][o.e.d.DiscoveryModule ] [FC_bOh1] using discovery type [zen] kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:kibana@5.6.11","info"],"pid":12,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"} elasticsearch_1 | [2018-09-17T23:51:53,456][INFO ][o.e.n.Node ] initialized elasticsearch_1 | [2018-09-17T23:51:53,457][INFO ][o.e.n.Node ] [FC_bOh1] starting ... 
kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:elasticsearch@5.6.11","info"],"pid":12,"state":"yellow","message":"Status changed from uninitialized to yellow - Waiting for Elasticsearch","prevState":"uninitialized","prevMsg":"uninitialized"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:console@5.6.11","info"],"pid":12,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["error","elasticsearch","admin"],"pid":12,"message":"Request error, retrying\nHEAD http://elasticsearch:9200/ => connect ECONNREFUSED 172.21.0.2:9200"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["warning","elasticsearch","admin"],"pid":12,"message":"Unable to revive connection: http://elasticsearch:9200/"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["warning","elasticsearch","admin"],"pid":12,"message":"No living connections"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:metrics@5.6.11","info"],"pid":12,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:elasticsearch@5.6.11","error"],"pid":12,"state":"red","message":"Status changed from yellow to red - Unable to connect to Elasticsearch at http://elasticsearch:9200.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"} elasticsearch_1 | [2018-09-17T23:51:53,829][INFO ][o.e.t.TransportService ] [FC_bOh1] publish_address {172.21.0.2:9300}, bound_addresses {0.0.0.0:9300} elasticsearch_1 | [2018-09-17T23:51:53,870][INFO ][o.e.b.BootstrapChecks ] [FC_bOh1] bound or publishing to a non-loopback or non-link-local address, enforcing bootstrap checks kibana_1 | 
{"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:timelion@5.6.11","info"],"pid":12,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["listening","info"],"pid":12,"message":"Server running at http://0.0.0.0:5601"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","ui settings","error"],"pid":12,"state":"red","message":"Status changed from uninitialized to red - Elasticsearch plugin is red","prevState":"uninitialized","prevMsg":"uninitialized"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:56Z","tags":["warning","elasticsearch","admin"],"pid":12,"message":"Unable to revive connection: http://elasticsearch:9200/"} kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:56Z","tags":["warning","elasticsearch","admin"],"pid":12,"message":"No living connections"} elasticsearch_1 | [2018-09-17T23:51:57,094][INFO ][o.e.c.s.ClusterService ] [FC_bOh1] new_master {FC_bOh1}{FC_bOh1nS_uW6JKy_46iBg}{tNMEW5HYQm6O4aiqpU0uWA}{172.21.0.2}{172.21.0.2:9300}, reason: zen-disco-elected-as-master ([0] nodes joined) elasticsearch_1 | [2018-09-17T23:51:57,129][INFO ][o.e.h.n.Netty4HttpServerTransport] [FC_bOh1] publish_address {172.21.0.2:9200}, bound_addresses {0.0.0.0:9200} elasticsearch_1 | [2018-09-17T23:51:57,129][INFO ][o.e.n.Node ] [FC_bOh1] started elasticsearch_1 | [2018-09-17T23:51:57,157][INFO ][o.e.g.GatewayService ] [FC_bOh1] recovered [0] indices into cluster_state elasticsearch_1 | [2018-09-17T23:51:57,368][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_alarm_list_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:57,557][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started 
[[application_alarm_list_month][0]] ...]). elasticsearch_1 | [2018-09-17T23:51:57,685][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [memory_pool_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:57,742][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[memory_pool_metric_month][0]] ...]). elasticsearch_1 | [2018-09-17T23:51:57,886][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:57,962][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_metric_day][0]] ...]). elasticsearch_1 | [2018-09-17T23:51:58,115][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:58,176][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_metric_hour][1]] ...]). elasticsearch_1 | [2018-09-17T23:51:58,356][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:58,437][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_metric_month][0]] ...]). 
elasticsearch_1 | [2018-09-17T23:51:58,550][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_mapping_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:58,601][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_mapping_month][1]] ...]). elasticsearch_1 | [2018-09-17T23:51:58,725][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_reference_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type] kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:58Z","tags":["warning"],"pid":12,"kibanaVersion":"5.6.11","nodes":[{"version":"5.6.4","http":{"publish_address":"172.21.0.2:9200"},"ip":"172.21.0.2"}],"message":"You're running Kibana 5.6.11 with some different versions of Elasticsearch. Update Kibana or Elasticsearch to the same version to prevent compatibility issues: v5.6.4 @ 172.21.0.2:9200 (172.21.0.2)"} elasticsearch_1 | [2018-09-17T23:51:58,886][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_reference_metric_day][0]] ...]). elasticsearch_1 | [2018-09-17T23:51:59,023][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_reference_alarm] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:59,090][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_reference_alarm][0]] ...]). 
elasticsearch_1 | [2018-09-17T23:51:59,137][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_alarm_list] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:59,258][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_alarm_list][0]] ...]). elasticsearch_1 | [2018-09-17T23:51:59,355][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_mapping_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:59,410][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_mapping_minute][1], [application_mapping_minute][0]] ...]). elasticsearch_1 | [2018-09-17T23:51:59,484][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [response_time_distribution_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:59,562][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[response_time_distribution_minute][0]] ...]). elasticsearch_1 | [2018-09-17T23:51:59,633][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:59,709][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application][0]] ...]). 
elasticsearch_1 | [2018-09-17T23:51:59,761][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_reference_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:51:59,839][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_reference_metric_hour][1]] ...]). elasticsearch_1 | [2018-09-17T23:51:59,980][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [memory_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:00,073][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[memory_metric_minute][1], [memory_metric_minute][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:00,159][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_alarm_list_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:00,258][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_alarm_list_day][1]] ...]). elasticsearch_1 | [2018-09-17T23:52:00,350][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_reference_alarm] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:00,463][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_reference_alarm][0]] ...]). 
elasticsearch_1 | [2018-09-17T23:52:00,535][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:00,599][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_metric_day][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:00,714][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [segment] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:00,766][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[segment][1], [segment][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:00,801][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [global_trace] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:00,865][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[global_trace][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:00,917][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_alarm_list] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:00,985][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_alarm_list][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:01,034][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:01,116][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_metric_month][0]] ...]). 
elasticsearch_1 | [2018-09-17T23:52:01,207][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_reference_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:01,289][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_reference_metric_day][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:01,388][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_component_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:01,491][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_component_month][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:01,527][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:01,613][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_metric_minute][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:01,723][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_reference_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:01,761][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_reference_metric_day][0]] ...]). 
elasticsearch_1 | [2018-09-17T23:52:01,844][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_reference_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:01,914][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_reference_metric_month][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:01,986][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [cpu_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:02,067][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[cpu_metric_minute][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:02,096][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [gc_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:02,174][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[gc_metric_hour][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:02,221][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [response_time_distribution_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:02,281][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[response_time_distribution_month][1]] ...]). 
elasticsearch_1 | [2018-09-17T23:52:02,337][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_reference_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:02,421][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_reference_metric_minute][1]] ...]). elasticsearch_1 | [2018-09-17T23:52:02,558][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_mapping_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:02,590][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_mapping_minute][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:02,637][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [cpu_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:02,694][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[cpu_metric_month][0]] ...]). elasticsearch_1 | [2018-09-17T23:52:02,732][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type] elasticsearch_1 | [2018-09-17T23:52:02,787][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_metric_month][1]] ...]). 
elasticsearch_1 | [2018-09-17T23:52:02,849][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_mapping_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:02,916][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_mapping_hour][1]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,030][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_alarm] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,062][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_alarm][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,096][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [memory_pool_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,123][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[memory_pool_metric_minute][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,154][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_component_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,180][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_component_day][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,199][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_name] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,221][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_name][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,240][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [cpu_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,268][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[cpu_metric_day][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,286][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_reference_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,333][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_reference_metric_hour][1]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,376][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [network_address] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,422][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[network_address][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,440][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [cpu_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,466][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[cpu_metric_hour][1]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,487][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_mapping_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,510][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_mapping_day][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,527][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [gc_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,553][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[gc_metric_day][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,572][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [memory_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,590][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[memory_metric_day][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,613][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_reference_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,633][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_reference_metric_minute][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,683][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_reference_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,731][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_reference_metric_minute][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,767][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_component_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,796][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_component_minute][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,809][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_alarm] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,829][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_alarm][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,847][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:03,867][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[instance_metric_hour][0]] ...]).
elasticsearch_1 | [2018-09-17T23:52:03,908][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_reference_alarm] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:52:03Z","tags":["status","plugin:elasticsearch@5.6.11","info"],"pid":12,"state":"yellow","message":"Status changed from red to yellow - No existing Kibana index found","prevState":"red","prevMsg":"Unable to connect to Elasticsearch at http://elasticsearch:9200."}
kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:52:03Z","tags":["status","ui settings","info"],"pid":12,"state":"yellow","message":"Status changed from red to yellow - Elasticsearch plugin is yellow","prevState":"red","prevMsg":"Elasticsearch plugin is red"}
elasticsearch_1 | [2018-09-17T23:52:03,953][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [.kibana] creating index, cause [api], templates [], shards [1]/[1], mappings [_default_, index-pattern, server, visualization, search, timelion-sheet, config, dashboard, url]
elasticsearch_1 | [2018-09-17T23:52:04,003][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_reference_alarm_list] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,111][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_reference_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,228][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [memory_pool_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,285][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [segment_duration] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,342][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,395][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [response_time_distribution_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,433][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_component_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,475][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [memory_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,512][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_alarm_list_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,575][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_alarm] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,617][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,675][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_reference_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,742][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,841][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [memory_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,920][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_reference_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:04,987][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_reference_alarm_list] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,045][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [gc_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:52:05Z","tags":["status","plugin:elasticsearch@5.6.11","info"],"pid":12,"state":"green","message":"Status changed from yellow to green - Kibana index ready","prevState":"yellow","prevMsg":"No existing Kibana index found"}
kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:52:05Z","tags":["status","ui settings","info"],"pid":12,"state":"green","message":"Status changed from yellow to green - Ready","prevState":"yellow","prevMsg":"Elasticsearch plugin is yellow"}
elasticsearch_1 | [2018-09-17T23:52:05,106][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_reference_alarm_list] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,143][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [memory_pool_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,180][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_alarm_list_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,225][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_mapping_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,268][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [instance_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,350][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_mapping_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,392][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_mapping_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,434][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [response_time_distribution_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,470][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
elasticsearch_1 | [2018-09-17T23:52:05,544][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [gc_metric_minute] creating index, cause [api], templates [], shards [2]/[0], mappings [type]

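The output above shows that es, kibana, and skywalking all started successfully. You can also run docker-compose ps to confirm that every container is up, and netstat to see which ports the host is listening on.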

    [root@localhost docker]# docker ps
    CONTAINER ID   IMAGE                  COMMAND                  CREATED         STATUS         PORTS                                                                                                      NAMES
    9aa90401ca16   kibana                 "/docker-entrypoint.…"   2 minutes ago   Up 2 minutes   0.0.0.0:5601->5601/tcp                                                                                     docker_kibana_1
    c551248e32af   docker_skywalking      "/bin/sh -c /app/sky…"   2 minutes ago   Up 2 minutes   0.0.0.0:8080->8080/tcp, 0.0.0.0:10800->10800/tcp, 0.0.0.0:11800->11800/tcp, 0.0.0.0:12800->12800/tcp   docker_skywalking_1
    765d38469ff1   docker_elasticsearch   "/docker-entrypoint.…"   2 minutes ago   Up 2 minutes   9200/tcp, 9300/tcp                                                                                         docker_elasticsearch_1
    [root@localhost docker]# netstat -tlnp
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
    tcp        0      0 192.168.122.1:53        0.0.0.0:*               LISTEN      2013/dnsmasq
    tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      1141/sshd
    tcp        0      0 127.0.0.1:631           0.0.0.0:*               LISTEN      1139/cupsd
    tcp        0      0 127.0.0.1:25            0.0.0.0:*               LISTEN      1622/master
    tcp6       0      0 :::8080                 :::*                    LISTEN      38262/docker-proxy
    tcp6       0      0 :::10800                :::*                    LISTEN      38248/docker-proxy
    tcp6       0      0 :::22                   :::*                    LISTEN      1141/sshd
    tcp6       0      0 ::1:631                 :::*                    LISTEN      1139/cupsd
    tcp6       0      0 :::11800                :::*                    LISTEN      38234/docker-proxy
    tcp6       0      0 ::1:25                  :::*                    LISTEN      1622/master
    tcp6       0      0 :::12800                :::*                    LISTEN      38222/docker-proxy
    tcp6       0      0 :::5601                 :::*                    LISTEN      38274/docker-proxy
    [root@localhost docker]#
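If you would rather not read the netstat listing by eye, the check can be scripted. The following is only a minimal sketch and not part of the original setup: `missing_ports` is a hypothetical helper name, and the port list (8080, 10800, 11800, 12800 for skywalking, 5601 for kibana) is taken from the `docker ps` output in this post.

```shell
# Print every expected port that has no LISTEN socket in
# `netstat -tln` style output read from stdin.
# NOTE: missing_ports is a hypothetical helper written for this post.
missing_ports() {
    mp_listing="$(cat)"
    for mp_port in "$@"; do
        # Look for ":<port>" followed by whitespace on a LISTEN row,
        # so that port 80 does not match a line for port 8080.
        if ! printf '%s\n' "$mp_listing" | grep -Eq "[:.]${mp_port}[[:space:]].*LISTEN"; then
            printf '%s\n' "$mp_port"
        fi
    done
}

# Usage on the Docker host (prints nothing when every port is open):
#   netstat -tln | missing_ports 8080 10800 11800 12800 5601
```

An empty result means all published ports are listening. Any port that is printed points at a container that did not start or a port mapping that is missing from the compose file.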

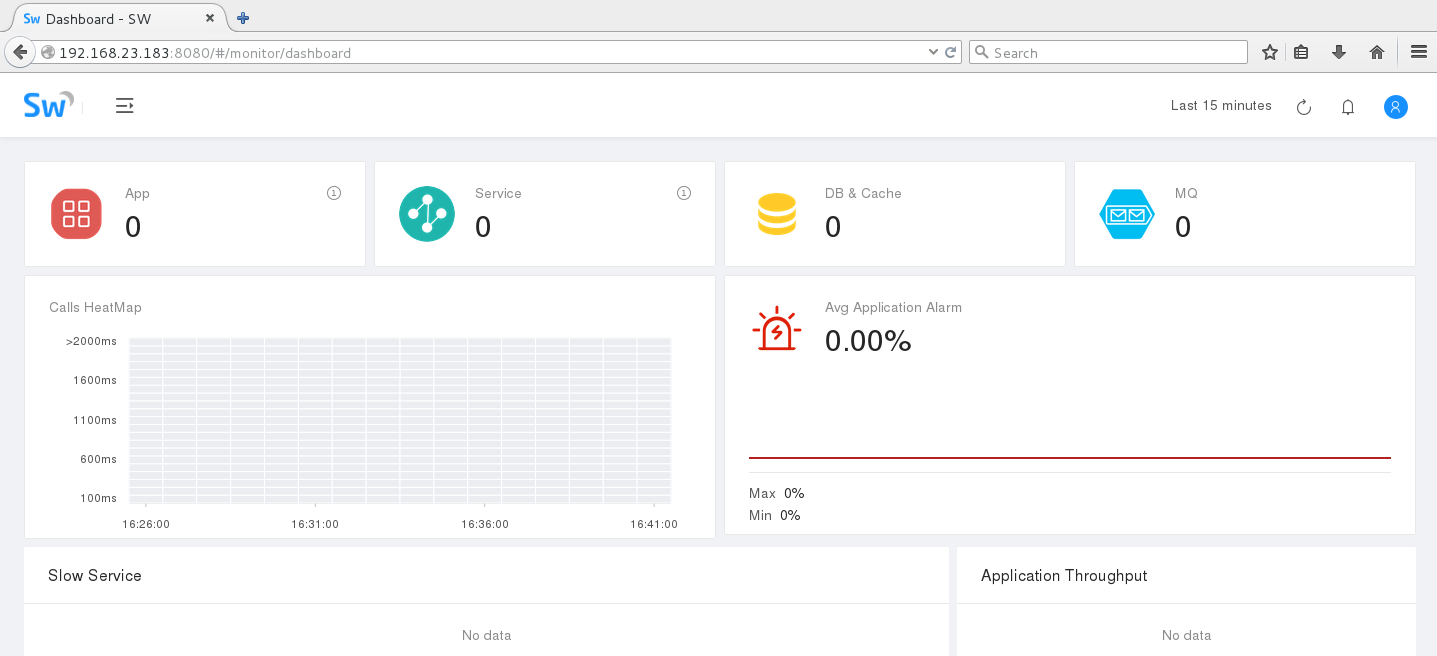

Next, open the web UI on port 8080. The default username and password are admin / admin, and you get a fairly good-looking UI.

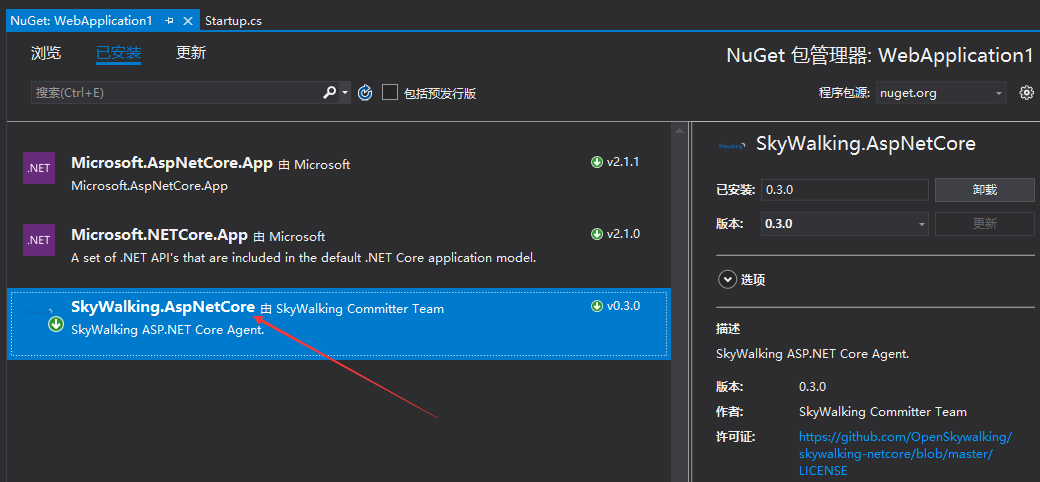

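3: The .NET agent

Pull the SkyWalking.AspNetCore agent package from NuGet to instrument the code. GitHub: https://github.com/OpenSkywalking/skywalking-netcore

Register it in the Startup class, then make a request to cnblogs.com while handling each page request, and take a close look at what the resulting call-chain trace looks like.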

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using Microsoft.AspNetCore.Builder;
    using Microsoft.AspNetCore.Hosting;
    using Microsoft.AspNetCore.Http;
    using Microsoft.Extensions.DependencyInjection;
    using SkyWalking.Extensions;
    using SkyWalking.AspNetCore;
    using System.Net;

    namespace WebApplication1
    {
        public class Startup
        {
            // This method gets called by the runtime. Use this method to add services to the container.
            // For more information on how to configure your application, visit https://go.microsoft.com/fwlink/?LinkID=398940
            public void ConfigureServices(IServiceCollection services)
            {
                services.AddSkyWalking(option =>
                {
                    // Application code is showed in sky-walking-ui
                    option.ApplicationCode = "10001 测试站点";

                    //Collector agent_gRPC/grpc service addresses.
                    option.DirectServers = "192.168.23.183:11800";
                });
            }

            // This method gets called by the runtime. Use this method to configure the HTTP request pipeline.
            public void Configure(IApplicationBuilder app, IHostingEnvironment env)
            {
                if (env.IsDevelopment())
                {
                    app.UseDeveloperExceptionPage();
                }

                app.Run(async (context) =>
                {
                    WebClient client = new WebClient();
                    var str = client.DownloadString("http://cnblogs.com");
                    await context.Response.WriteAsync(str);
                });
            }
        }
    }
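Traces only appear in the UI once the site has handled some requests. Below is a minimal sketch for generating a few, assuming the site listens on http://localhost:5000 (an assumption; use whatever URL your application is actually bound to). `send_requests` is a hypothetical helper written for this post and is not part of SkyWalking.

```shell
# Send COUNT GET requests to URL and print one HTTP status code per request.
# NOTE: send_requests is a hypothetical helper; COUNT defaults to 5.
send_requests() {
    sr_url="$1"
    sr_count="${2:-5}"
    sr_i=0
    while [ "$sr_i" -lt "$sr_count" ]; do
        # -s: no progress output, -o /dev/null: discard the body,
        # -w: print only the status code followed by a newline
        curl -s -o /dev/null -w '%{http_code}\n' "$sr_url"
        sr_i=$((sr_i + 1))
    done
}

# Usage (the URL is an assumption, adjust it to your site):
#   send_requests http://localhost:5000/ 10
```

Each request makes the site call cnblogs.com once, so every line of output should correspond to one trace segment reported to the collector.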

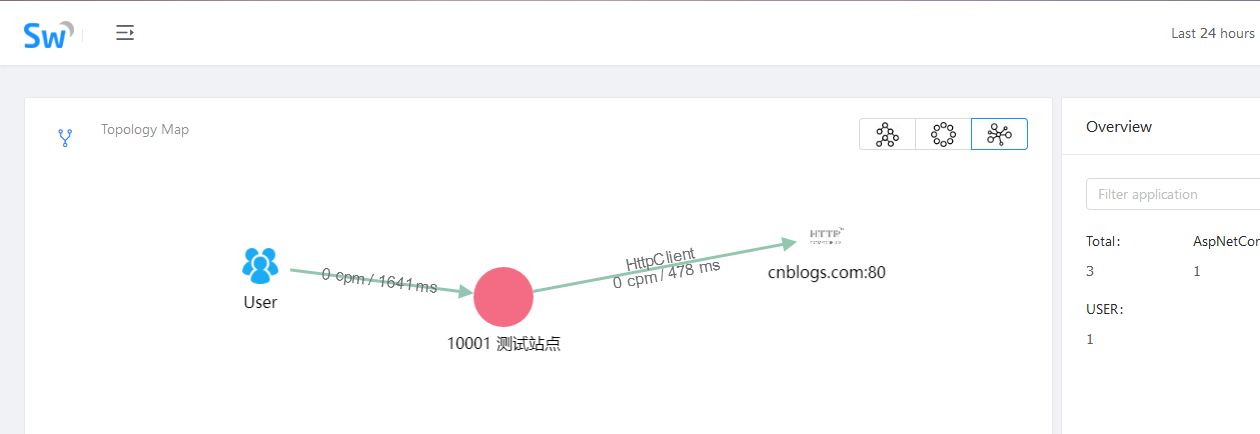

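As you can see, the trace view looks quite nice, and it makes it easy to follow the code path quickly and to spot and pin down problems. There are many more features left for you to explore. That's all for this post; I hope it helps.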