Scrapy Framework: Formatting & Persistence

Formatting

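Processing data directly inside the `parse` method is the simplest approach, but it is not recommended. If you need more elaborate processing of the scraped data, use Scrapy Items to give the data a fixed structure, then hand every item over to the pipelines for uniform processing.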

As an example, we crawl the photos and related details from xiaohuar.com (校花网), a "campus belle" photo site:

```python
import hashlib

import scrapy
from scrapy.http import Request

from ..items import XiaohuarItem


class XiaoHuarSpider(scrapy.Spider):
    # Name of the spider; start it with `scrapy crawl xiaohuar`
    name = "xiaohuar"
    # Allowed domains
    allowed_domains = ["xiaohuar.com"]

    start_urls = [
        "http://www.xiaohuar.com/list-1-1.html",
    ]
    # Per-spider pipeline configuration (overrides ITEM_PIPELINES in settings.py):
    # custom_settings = {
    #     'ITEM_PIPELINES': {
    #         'spider1.pipelines.JsonPipeline': 100
    #     }
    # }
    # md5(url) -> url for every list page already requested
    has_request_set = {}

    def parse(self, response):
        # Parse the page:
        # find the content that matches the rules (the photos) and save it,
        # then find all <a> tags and follow them, level by level.
        items = response.xpath('//div[@class="item_list infinite_scroll"]/div')
        for item in items:
            src = item.xpath('.//div[@class="img"]/a/img/@src').extract_first()
            name = item.xpath('.//div[@class="img"]/span/text()').extract_first()
            school = item.xpath('.//div[@class="img"]/div[@class="btns"]/a/text()').extract_first()
            if not src:
                continue  # skip entries without an image
            url = response.urljoin(src)  # turn a relative src into an absolute URL
            yield XiaohuarItem(name=name, school=school, url=url)

        # extract() returns plain strings; the raw string keeps \d intact
        urls = response.xpath(
            r'//a[re:test(@href, "http://www.xiaohuar.com/list-1-\d+.html")]/@href'
        ).extract()
        for url in urls:
            key = self.md5(url)
            if key in self.has_request_set:
                continue
            self.has_request_set[key] = url
            yield Request(url=url, method='GET', callback=self.parse)

    @staticmethod
    def md5(val):
        ha = hashlib.md5()
        ha.update(bytes(val, encoding='utf-8'))
        return ha.hexdigest()
```
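The `has_request_set` bookkeeping in `parse` is a seen-set keyed by the MD5 of each URL. The stdlib-only sketch below isolates that idea; `dedupe_urls` is a hypothetical helper, not part of Scrapy. Note that Scrapy's scheduler already drops duplicate requests through its default duplicate filter unless a request is created with `dont_filter=True`, so the manual check is mainly illustrative.

```python
import hashlib


def md5(val):
    # Same logic as XiaoHuarSpider.md5: hex MD5 digest of a UTF-8 string
    ha = hashlib.md5()
    ha.update(bytes(val, encoding='utf-8'))
    return ha.hexdigest()


def dedupe_urls(urls, seen):
    """Yield each URL whose MD5 key is not yet in `seen` (hypothetical helper)."""
    for url in urls:
        key = md5(url)
        if key in seen:
            continue
        seen[key] = url
        yield url


if __name__ == "__main__":
    seen = {}
    page = [
        "http://www.xiaohuar.com/list-1-1.html",
        "http://www.xiaohuar.com/list-1-2.html",
        "http://www.xiaohuar.com/list-1-1.html",
    ]
    print(list(dedupe_urls(page, seen)))
    # A later page linking back to list-1-2 schedules nothing new
    print(list(dedupe_urls(["http://www.xiaohuar.com/list-1-2.html"], seen)))
```

Because `seen` is shared across calls, it plays the same role as the class-level `has_request_set`: it persists for the whole crawl.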

```python
# spider1/items.py
import scrapy


class XiaohuarItem(scrapy.Item):
    name = scrapy.Field()
    school = scrapy.Field()
    url = scrapy.Field()
```

```python
# spider1/pipelines.py
import json
import os

import requests


class Test002Pipeline(object):
    def process_item(self, item, spider):
        # print(item['title'], item['href'])
        return item


class JsonPipeline(object):

    def open_spider(self, spider):
        # Open the output file once, when the spider starts; UTF-8 because
        # ensure_ascii=False writes Chinese characters as-is
        self.file = open('xiaohua.txt', 'w', encoding='utf-8')

    def close_spider(self, spider):
        self.file.close()

    def process_item(self, item, spider):
        v = json.dumps(dict(item), ensure_ascii=False)
        self.file.write(v)
        self.file.write('\n')  # one JSON object per line
        self.file.flush()  # write Python's buffered data to the file immediately
        return item


class FilePipeline(object):

    def __init__(self):
        os.makedirs('girls_img', exist_ok=True)

    def process_item(self, item, spider):
        response = requests.get(item['url'], stream=True)
        response.raise_for_status()
        file_name = '%s_%s.jpg' % (item['name'], item['school'])
        with open(os.path.join('girls_img', file_name), 'wb') as f:
            # Stream the image to disk in chunks instead of loading it all at once
            for chunk in response.iter_content(chunk_size=8192):
                f.write(chunk)
        return item
```
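Because `JsonPipeline` writes one `json.dumps` result followed by a newline per item, the output file is in JSON Lines form and can be read back line by line. A stdlib-only sketch, assuming an `io.StringIO` buffer in place of `xiaohua.txt` and plain dicts in place of items (the helper names `write_items` and `read_items` are hypothetical):

```python
import io
import json


def write_items(items, fp):
    # Mirrors JsonPipeline.process_item: one JSON object per line
    for item in items:
        fp.write(json.dumps(dict(item), ensure_ascii=False))
        fp.write('\n')


def read_items(fp):
    # Each non-empty line is a complete JSON document
    return [json.loads(line) for line in fp if line.strip()]


if __name__ == "__main__":
    buf = io.StringIO()
    write_items([{"name": "示例", "school": "示例大学", "url": "http://example.com/1.jpg"}], buf)
    buf.seek(0)
    print(read_items(buf))
```

If `'/n'` were written instead of `'\n'`, all objects would end up on a single line and `json.loads` would reject it.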

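```python
# spider1/settings.py
ITEM_PIPELINES = {
    'spider1.pipelines.JsonPipeline': 100,
    'spider1.pipelines.FilePipeline': 300,
}
# The integer after each entry sets the run order: items pass through the
# pipelines from the lowest value to the highest. By convention the values
# lie in the 0-1000 range.
```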
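The ordering rule can be modelled without Scrapy: sort the `ITEM_PIPELINES` entries by their integer value and call the pipelines in that order. This is a simplified sketch of what Scrapy does internally; `run_order` is a hypothetical helper.

```python
ITEM_PIPELINES = {
    'spider1.pipelines.FilePipeline': 300,
    'spider1.pipelines.JsonPipeline': 100,
}


def run_order(pipelines):
    # Lower value runs first, as with Scrapy's ITEM_PIPELINES
    return [path for path, _ in sorted(pipelines.items(), key=lambda kv: kv[1])]


if __name__ == "__main__":
    print(run_order(ITEM_PIPELINES))
    # ['spider1.pipelines.JsonPipeline', 'spider1.pipelines.FilePipeline']
```

The dict's insertion order does not matter; only the numbers do, which is why `JsonPipeline` (100) runs before `FilePipeline` (300) here.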

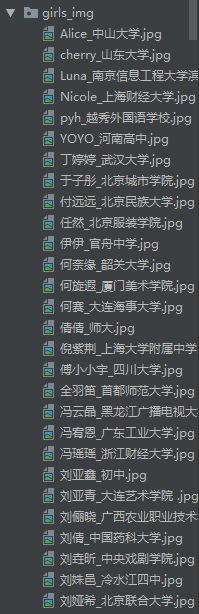

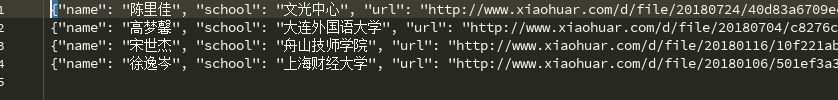

爬取结果如下:

Custom pipeline:

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
from scrapy.exceptions import DropItem


class Test001Pipeline(object):

    def __init__(self, conn_str):
        """
        Initialise the pipeline's data
        :param conn_str: value of the DB setting, used here as a file path
        """
        self.conn_str = conn_str
        self.conn = None

    @classmethod
    def from_crawler(cls, crawler):
        """
        Called at start-up to create the pipeline object; reads the settings
        :param crawler:
        :return: a pipeline instance
        """
        conn_str = crawler.settings.get('DB')  # settings.py is wrapped into crawler.settings
        return cls(conn_str)

    def open_spider(self, spider):
        """
        Called when the spider starts
        :param spider:
        """
        print('Spider Starts!!!')
        self.conn = open(self.conn_str, 'a+', encoding='utf-8')

    def close_spider(self, spider):
        """
        Called when the spider closes
        :param spider:
        """
        print('Spider close!!!')
        self.conn.close()

    def process_item(self, item, spider):
        """
        Called every time an item needs to be persisted
        :param item:
        :param spider:
        """
        # if spider.name == "chouti": ...  -> process data differently per spider
        # print(item, spider)
        tpl = "%s\n%s\n\n" % (item['title'], item['href'])
        self.conn.write(tpl)

        # return item: the item continues to the next pipeline, in priority order
        # return item

        # raise DropItem: the item is discarded and no later pipeline sees it
        raise DropItem()


class Test001Pipeline2(object):

    def __init__(self, conn_str):
        """
        Initialise the pipeline's data
        :param conn_str: value of the DB setting, used here as a file path
        """
        self.conn_str = conn_str
        self.conn = None

    @classmethod
    def from_crawler(cls, crawler):
        """
        Called at start-up to create the pipeline object; reads the settings
        :param crawler:
        :return: a pipeline instance
        """
        conn_str = crawler.settings.get('DB')  # settings.py is wrapped into crawler.settings
        return cls(conn_str)

    def open_spider(self, spider):
        """
        Called when the spider starts
        :param spider:
        """
        print('Spider Starts!!!')
        self.conn = open(self.conn_str, 'a+', encoding='utf-8')

    def close_spider(self, spider):
        """
        Called when the spider closes
        :param spider:
        """
        print('Spider close!!!')
        self.conn.close()

    def process_item(self, item, spider):
        """
        Called every time an item needs to be persisted
        :param item:
        :param spider:
        """
        # if spider.name == "chouti": ...  -> process data differently per spider
        # print(item, spider)
        tpl = "%s\n%s\n\n" % (item['title'], item['href'])
        self.conn.write(tpl)
        # Return the item so that any later pipeline receives it
        return item
```
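The order in which Scrapy calls the four methods can be mimicked with stand-in objects. Only the method names (`from_crawler`, `open_spider`, `process_item`, `close_spider`) come from the pipeline above; `FakeSettings`, `FakeCrawler`, `FakeSpider`, `RecordingPipeline` and `drive` are hypothetical stand-ins, and an in-memory log replaces the file. It is a simplified model, not Scrapy's engine.

```python
class FakeSettings:
    # Stand-in for crawler.settings; only get() is needed here
    def __init__(self, values):
        self._values = values

    def get(self, name, default=None):
        return self._values.get(name, default)


class FakeCrawler:
    def __init__(self, settings):
        self.settings = FakeSettings(settings)


class FakeSpider:
    name = "chouti"


class RecordingPipeline:
    def __init__(self, conn_str):
        self.conn_str = conn_str
        self.log = []

    @classmethod
    def from_crawler(cls, crawler):
        # 1. build the pipeline from the settings
        return cls(crawler.settings.get('DB'))

    def open_spider(self, spider):
        # 2. once, before the first item
        self.log.append('open %s' % self.conn_str)

    def process_item(self, item, spider):
        # 3. once per item
        self.log.append('item %s' % item['title'])
        return item

    def close_spider(self, spider):
        # 4. once, after the last item
        self.log.append('close')


def drive(pipeline_cls, settings, items):
    # Simplified model of the call order for one spider run
    crawler = FakeCrawler(settings)
    spider = FakeSpider()
    pipeline = pipeline_cls.from_crawler(crawler)
    pipeline.open_spider(spider)
    for item in items:
        pipeline.process_item(item, spider)
    pipeline.close_spider(spider)
    return pipeline.log


if __name__ == "__main__":
    print(drive(RecordingPipeline, {'DB': 'data.txt'}, [{'title': 'a'}, {'title': 'b'}]))
    # ['open data.txt', 'item a', 'item b', 'close']
```

Opening the file in `open_spider` and closing it in `close_spider` is what lets the real pipelines keep one handle for the whole crawl instead of reopening it per item.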

```python
ITEM_PIPELINES = {
    'test002.pipelines.Test001Pipeline': 300,
    'test002.pipelines.Test001Pipeline2': 400,
    # 'test002.pipelines.JsonPipeline': 100,
    # 'test002.pipelines.FilePipeline': 200,
}
```
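With these priorities, `Test001Pipeline` (300) runs first and raises `DropItem`, so `Test001Pipeline2` (400) never receives the item. The sketch below models that chain without Scrapy; the local `DropItem` class is a stand-in for `scrapy.exceptions.DropItem`, and `First`, `Second` and `run_chain` are hypothetical names.

```python
class DropItem(Exception):
    """Stand-in for scrapy.exceptions.DropItem so the sketch runs without Scrapy."""


class First:  # plays Test001Pipeline (priority 300)
    def __init__(self, seen):
        self.seen = seen

    def process_item(self, item, spider):
        self.seen.append(('First', item['title']))
        raise DropItem('stored, stop here')


class Second:  # plays Test001Pipeline2 (priority 400)
    def __init__(self, seen):
        self.seen = seen

    def process_item(self, item, spider):
        self.seen.append(('Second', item['title']))
        return item


def run_chain(pipelines, item, spider=None):
    """Pass item through (priority, pipeline) pairs, lowest priority first.

    Returns the final item, or None if a pipeline raised DropItem.
    """
    for _, pipeline in sorted(pipelines, key=lambda p: p[0]):
        try:
            item = pipeline.process_item(item, spider)
        except DropItem:
            return None
    return item


if __name__ == "__main__":
    seen = []
    print(run_chain([(400, Second(seen)), (300, First(seen))], {'title': 't'}), seen)
    # None [('First', 't')]
```

Switching `First.process_item` to `return item` would let the item reach `Second`, which is the alternative shown by the commented-out `return item` in `Test001Pipeline`.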

Summary:

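1. A pipeline can implement 4 methods: `from_crawler`, `open_spider`, `close_spider` and `process_item`.
2. `crawler.settings.get('NAME')` reads a value from the settings; the setting name must be upper case, because Scrapy only loads upper-case variables from `settings.py`.
3. In `process_item`, raising `DropItem` discards the item; otherwise (returning the item) it is passed on to the next pipeline.
4. Check `spider.name` on the `spider` argument to tell which spider produced the item.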
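Point 4 can be sketched as a single pipeline that branches on `spider.name`. `RoutingPipeline` is hypothetical, and `types.SimpleNamespace` stands in for real spider objects so the example runs without Scrapy.

```python
from types import SimpleNamespace


class RoutingPipeline:
    """Hypothetical pipeline that handles items differently per spider."""

    def __init__(self):
        self.chouti_rows = []
        self.other_rows = []

    def process_item(self, item, spider):
        if spider.name == "chouti":
            # Same text layout as Test001Pipeline
            self.chouti_rows.append("%s\n%s\n\n" % (item['title'], item['href']))
        else:
            self.other_rows.append(dict(item))
        return item  # keep passing the item down the chain


if __name__ == "__main__":
    p = RoutingPipeline()
    p.process_item({'title': 't', 'href': 'h'}, SimpleNamespace(name='chouti'))
    p.process_item({'name': 'n'}, SimpleNamespace(name='xiaohuar'))
    print(p.chouti_rows, p.other_rows)
```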