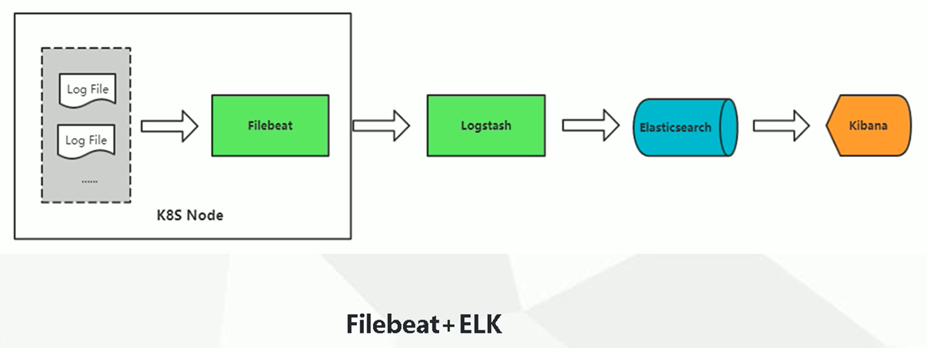

Collecting Kubernetes Logs with ELK + Filebeat

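Logs can be kept inside the container, on the host through a mounted data volume, or on remote storage. If they stay inside the container, the application runs unmodified and keeps writing logs to its original directory. Containers, however, are routinely deleted, destroyed, and recreated, so we need a persistent way to store logs.

Logs kept inside a container are deleted together with the container.

With a large number of containers, the traditional way of viewing logs becomes impractical.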

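Container characteristics

High container density, many collection targets: when a container logs to the console, Docker itself already provides a way to capture those logs. Logs written to local files inside the container have no equally convenient collection mechanism.

Elastic scaling: the attributes of newly scaled-out Pods (log file path, log source) may change.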

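Which logs to collect

Kubernetes component logs and application logs. Component logs are written to fixed files on the host and are collected the same way as traditional logs. Application logs are further divided into standard output and log files.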

Three approaches to log collection with ELK

The pipeline is roughly: collection → storage → analysis → visualization

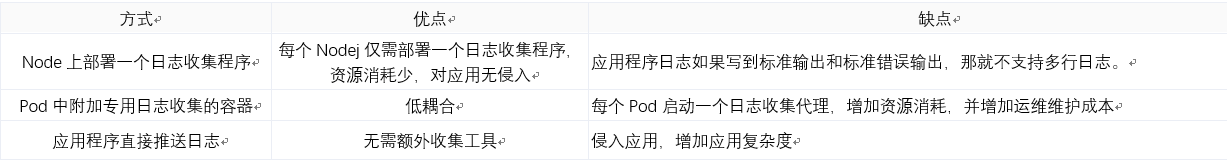

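Deploy a log collector on each Node

Deploy the log collector as a DaemonSet and collect the logs under /var/log/pods/ or /var/lib/docker/containers/ on the local node.

Attach a dedicated log-collection container to the Pod

Add a log-collection container to every Pod that runs an application, and share the log directory through an emptyDir volume so that the collector can read it.

Have the application push logs directly

The application pushes its logs straight to remote storage, bypassing both Docker and Kubernetes.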
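The second approach (a sidecar collector sharing an emptyDir volume) could look roughly like the Pod below. This is only a sketch: the Pod name, the application image, the /app/logs path, and the app-filebeat-config ConfigMap are hypothetical placeholders, not part of the setup described in this article.

```yaml
# Sketch only: app-with-log-sidecar, my-app:latest, /app/logs and
# app-filebeat-config are hypothetical names chosen for illustration.
apiVersion: v1
kind: Pod
metadata:
  name: app-with-log-sidecar
spec:
  containers:
  - name: app
    image: my-app:latest            # hypothetical application image
    volumeMounts:
    - name: app-logs
      mountPath: /app/logs          # assumed directory the application writes log files to
  - name: filebeat
    image: elastic/filebeat:7.5.0   # same Filebeat version used elsewhere in this article
    args: ["-c", "/etc/filebeat.yml", "-e"]
    volumeMounts:
    - name: app-logs
      mountPath: /app/logs          # same directory, read by the collector
      readOnly: true
    - name: filebeat-config
      mountPath: /etc/filebeat.yml
      subPath: filebeat.yml
  volumes:
  - name: app-logs
    emptyDir: {}                    # exists for the lifetime of the Pod; shared by both containers
  - name: filebeat-config
    configMap:
      name: app-filebeat-config     # hypothetical ConfigMap whose filebeat.yml reads /app/logs/*.log
```

The trade-off of this design is one extra container per Pod, in exchange for per-application control over which files are collected.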

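Cluster plan (installed with kubeadm)

| IP address | Node name | Installed services |
| --- | --- | --- |
| 192.168.10.171 | K8s-master | NFS, required Kubernetes components |
| 192.168.10.172 | node-1 | NFS, required Kubernetes components |
| 192.168.10.173 | node-2 | NFS-server, required Kubernetes components |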

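Deploying NFS

NFS is deployed to provide dynamically provisioned storage.

Deploy the NFS server. The firewall and SELinux must be disabled first.

    yum install -y nfs-utils   # install on every node

Configure the exported directory. no_root_squash lets root on the client keep root privileges on the export; without it (the default root_squash), root is mapped to an anonymous user, usually nobody.

    [root@node-2 ~]# echo "/ifs/kubernetes 192.168.10.0/24(rw,no_root_squash)" > /etc/exports   # ">" overwrites the file; use ">>" to append further exports
    [root@node-2 ~]# mkdir -p /ifs/kubernetes   # create the export directory if it does not exist

Start NFS and enable it at boot.

    [root@node-2 ~]# systemctl enable nfs && systemctl start nfs

List the exported directories (this cannot be queried on nodes where the NFS service is not running).

    [root@node-2 ~]# showmount -e
    Export list for node-2:
    /ifs/kubernetes 192.168.10.0/24
    [root@node-2 ~]#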

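Deploying the NFS plugin that creates PVs automatically

    yum install -y git
    git clone https://github.com/kubernetes-incubator/external-storage
    cd external-storage/nfs-client/deploy/
    # Apply in order
    kubectl apply -f rbac.yaml         # grant access to the apiserver
    kubectl apply -f deployment.yaml   # deploy the plugin; set the NFS server address and export path in it first
    kubectl apply -f class.yaml        # create the StorageClass; it also controls whether archiving is enabled
    kubectl get sc                     # list storage classes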
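To check that dynamic provisioning works before deploying anything that depends on it, a small test claim can be applied. This is a sketch: the claim name and size are hypothetical, and it assumes class.yaml created a StorageClass named managed-nfs-storage, which is the name the Elasticsearch manifest in this article refers to.

```yaml
# Sketch: test-claim and the 1Mi size are hypothetical values.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
spec:
  storageClassName: managed-nfs-storage   # assumed to match the class created by class.yaml
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```

If the provisioner is healthy, `kubectl get pvc test-claim` should eventually report the claim as Bound and a matching directory should appear under the NFS export.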

Deploying ELK on Kubernetes

Deploying Elasticsearch

    [root@k8s-maste ~]# cat elasticsearch.yaml
    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: elasticsearch
      namespace: kube-system
      labels:
        k8s-app: elasticsearch
    spec:
      serviceName: elasticsearch
      selector:
        matchLabels:
          k8s-app: elasticsearch
      template:
        metadata:
          labels:
            k8s-app: elasticsearch
        spec:
          containers:
          - image: elasticsearch:7.5.0
            name: elasticsearch
            resources:
              limits:
                cpu: 1
                memory: 2Gi
              requests:
                cpu: 0.5
                memory: 500Mi
            env:
              - name: "discovery.type"
                value: "single-node"
              - name: ES_JAVA_OPTS
                value: "-Xms512m -Xmx2g"
            ports:
            - containerPort: 9200
              name: db
              protocol: TCP
            volumeMounts:
            - name: elasticsearch-data
              mountPath: /usr/share/elasticsearch/data
      volumeClaimTemplates:
      - metadata:
          name: elasticsearch-data
        spec:
          storageClassName: "managed-nfs-storage"
          accessModes: [ "ReadWriteOnce" ]
          resources:
            requests:
              storage: 20Gi
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: elasticsearch
      namespace: kube-system
    spec:
      clusterIP: None
      ports:
      - port: 9200
        protocol: TCP
        targetPort: db
      selector:
        k8s-app: elasticsearch
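One thing worth reviewing in the manifest above: the maximum heap (-Xmx2g) equals the container's 2Gi memory limit, which leaves no room for off-heap memory and may get the container OOM-killed under load. Elastic's general guidance is to set -Xms and -Xmx to the same value and to no more than half of the available memory. A possible adjustment, as a sketch with an assumed 1g heap:

```yaml
# Fragment of the elasticsearch container spec; the 1g heap is an assumed value.
resources:
  limits:
    cpu: 1
    memory: 2Gi
env:
- name: "discovery.type"
  value: "single-node"
- name: ES_JAVA_OPTS
  value: "-Xms1g -Xmx1g"   # equal min/max heap, half of the 2Gi limit
```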

Apply the manifest

    [root@k8s-maste ~]# kubectl apply -f elasticsearch.yaml
    statefulset.apps/elasticsearch created
    service/elasticsearch created
    [root@k8s-maste ~]# kubectl get pods -n kube-system elasticsearch-0
    NAME              READY   STATUS    RESTARTS   AGE
    elasticsearch-0   1/1     Running   0          50s
    [root@k8s-maste ~]#

Deploying Kibana

    [root@k8s-maste ~]# cat kibana.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kibana
      namespace: kube-system
      labels:
        k8s-app: kibana
    spec:
      replicas: 1
      selector:
        matchLabels:
          k8s-app: kibana
      template:
        metadata:
          labels:
            k8s-app: kibana
        spec:
          containers:
          - name: kibana
            image: kibana:7.5.0
            resources:
              limits:
                cpu: 1
                memory: 500Mi
              requests:
                cpu: 0.5
                memory: 200Mi
            env:
              - name: ELASTICSEARCH_HOSTS
                value: http://elasticsearch-0.elasticsearch.kube-system:9200
              - name: I18N_LOCALE
                value: zh-CN
            ports:
            - containerPort: 5601
              name: ui
              protocol: TCP
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kibana
      namespace: kube-system
    spec:
      type: NodePort
      ports:
      - port: 5601
        protocol: TCP
        targetPort: ui
        nodePort: 30601
      selector:
        k8s-app: kibana

Apply the manifest

    [root@k8s-maste ~]# kubectl apply -f kibana.yaml
    deployment.apps/kibana created
    service/kibana created
    [root@k8s-maste ~]# kubectl get pods -n kube-system |grep kibana
    NAME                      READY   STATUS    RESTARTS   AGE
    kibana-6cd7b9d48b-jrx79   1/1     Running   0          3m3s
    [root@k8s-maste ~]# kubectl get svc -n kube-system kibana
    NAME     TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
    kibana   NodePort   10.98.15.252   <none>        5601:30601/TCP   105s
    [root@k8s-maste ~]#

Collecting standard-output logs with Filebeat

Filebeat can pick up container logs dynamically.

    [root@k8s-maste ~]# cat filebeat-kubernetes.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: filebeat-config
      namespace: kube-system
      labels:
        k8s-app: filebeat
    data:
      filebeat.yml: |-
        filebeat.config:
          inputs:
            # Mounted `filebeat-inputs` configmap:
            path: ${path.config}/inputs.d/*.yml
            # Reload inputs configs as they change:
            reload.enabled: false
          modules:
            path: ${path.config}/modules.d/*.yml
            # Reload module configs as they change:
            reload.enabled: false
        # To enable hints based autodiscover, remove `filebeat.config.inputs` configuration and uncomment this:
        #filebeat.autodiscover:
        #  providers:
        #    - type: kubernetes
        #      hints.enabled: true
        output.elasticsearch:
          hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: filebeat-inputs
      namespace: kube-system
      labels:
        k8s-app: filebeat
    data:
      kubernetes.yml: |-
        - type: docker
          containers.ids:
          - "*"
          processors:
            - add_kubernetes_metadata:
                in_cluster: true
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: filebeat
      namespace: kube-system
      labels:
        k8s-app: filebeat
    spec:
      selector:
        matchLabels:
          k8s-app: filebeat
      template:
        metadata:
          labels:
            k8s-app: filebeat
        spec:
          serviceAccountName: filebeat
          terminationGracePeriodSeconds: 30
          containers:
          - name: filebeat
            image: elastic/filebeat:7.5.0
            args: [
              "-c", "/etc/filebeat.yml",
              "-e",
            ]
            env:
            - name: ELASTICSEARCH_HOST
              value: elasticsearch-0.elasticsearch.kube-system
            - name: ELASTICSEARCH_PORT
              value: "9200"
            securityContext:
              runAsUser: 0
              # If using Red Hat OpenShift uncomment this:
              #privileged: true
            resources:
              limits:
                memory: 200Mi
              requests:
                cpu: 100m
                memory: 100Mi
            volumeMounts:
            - name: config
              mountPath: /etc/filebeat.yml
              readOnly: true
              subPath: filebeat.yml
            - name: inputs
              mountPath: /usr/share/filebeat/inputs.d
              readOnly: true
            - name: data
              mountPath: /usr/share/filebeat/data
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
          volumes:
          - name: config
            configMap:
              defaultMode: 0600
              name: filebeat-config
          - name: varlibdockercontainers
            hostPath:
              path: /var/lib/docker/containers
          - name: inputs
            configMap:
              defaultMode: 0600
              name: filebeat-inputs
          # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
          - name: data
            hostPath:
              path: /var/lib/filebeat-data
              type: DirectoryOrCreate
    ---
    # rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; v1 also works on older clusters
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: filebeat
    subjects:
    - kind: ServiceAccount
      name: filebeat
      namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: filebeat
      apiGroup: rbac.authorization.k8s.io
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: filebeat
      labels:
        k8s-app: filebeat
    rules:
    - apiGroups: [""] # "" indicates the core API group
      resources:
      - namespaces
      - pods
      verbs:
      - get
      - watch
      - list
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: filebeat
      namespace: kube-system
      labels:
        k8s-app: filebeat

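The Elasticsearch address is specified here:

    output.elasticsearch:
      hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']

The section below configures a processor. With a traditional log collector, the important settings are the log path, the log source, and the regular expressions used to parse and format the logs. The add_kubernetes_metadata processor instead attaches Kubernetes metadata (such as Pod, namespace, and container information) to every log event automatically.

    data:
      kubernetes.yml: |-
        - type: docker
          containers.ids:
          - "*"
          processors:
            - add_kubernetes_metadata:
                in_cluster: true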
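Once the metadata is attached, later processors can act on it. As a sketch, the input below adds a drop_event processor that discards events from one namespace; dropping kube-system logs is an assumed example policy and is not part of the original configuration.

```yaml
# Sketch of the filebeat-inputs entry with one extra processor.
# Dropping kube-system events is a hypothetical policy chosen for illustration.
- type: docker
  containers.ids:
  - "*"
  processors:
  - add_kubernetes_metadata:
      in_cluster: true
  - drop_event:                 # runs after the metadata has been added
      when:
        equals:
          kubernetes.namespace: "kube-system"
```

Processors run in the order they are listed, so the condition can only see kubernetes.namespace because add_kubernetes_metadata comes first.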

A hostPath volume mounts Docker's container directory from the host:

    - name: varlibdockercontainers
      hostPath:
        path: /var/lib/docker/containers

Apply the manifest

    [root@k8s-maste ~]# kubectl apply -f filebeat-kubernetes.yaml
    configmap/filebeat-config created
    configmap/filebeat-inputs created
    daemonset.apps/filebeat created
    clusterrolebinding.rbac.authorization.k8s.io/filebeat created
    clusterrole.rbac.authorization.k8s.io/filebeat created
    serviceaccount/filebeat created
    [root@k8s-maste ~]# kubectl get pods -n kube-system | egrep "kibana|elasticsearch|filebeat"
    NAME                      READY   STATUS    RESTARTS   AGE
    elasticsearch-0           1/1     Running   0          12m
    filebeat-bf5tt            1/1     Running   0          3m15s
    filebeat-vjbf5            1/1     Running   0          3m15s
    filebeat-sw1zt            1/1     Running   0          3m15s
    kibana-6cd7b9d48b-jrx79   1/1     Running   0          35m

Once the Pods are running, Filebeat starts collecting logs automatically.

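Collecting log files written to disk

To collect /var/log/messages, deploy Filebeat on every node as a DaemonSet, mount the host's messages file into the container, and point the Filebeat configuration at that file. The manifest below puts the ConfigMap and the DaemonSet in one YAML file.

Note: to schedule the Pods on the master as well, configure a toleration for the master's taint.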
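A toleration for the master taint could look like the fragment below. This is a sketch: which taint key applies depends on the Kubernetes version (kubeadm used node-role.kubernetes.io/master on older releases and node-role.kubernetes.io/control-plane on newer ones), so both are listed here as an assumption about the cluster.

```yaml
# Fragment of a DaemonSet manifest; the tolerations go under spec.template.spec.
spec:
  template:
    spec:
      tolerations:
      - key: node-role.kubernetes.io/master          # taint set by kubeadm on older releases
        operator: Exists
        effect: NoSchedule
      - key: node-role.kubernetes.io/control-plane   # taint used by newer releases
        operator: Exists
        effect: NoSchedule
```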

    [root@k8s-maste ~]# cat k8s-logs.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: k8s-logs-filebeat-config
      namespace: kube-system
    data:
      filebeat.yml: |
        filebeat.inputs:
          - type: log
            paths:
              - /var/log/messages
            fields:
              app: k8s
              type: module
            fields_under_root: true
        setup.ilm.enabled: false
        setup.template.name: "k8s-module"
        setup.template.pattern: "k8s-module-*"
        output.elasticsearch:
          hosts: ['elasticsearch-0.elasticsearch.kube-system:9200']
          index: "k8s-module-%{+yyyy.MM.dd}"
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: k8s-logs
      namespace: kube-system
    spec:
      selector:
        matchLabels:
          project: k8s
          app: filebeat
      template:
        metadata:
          labels:
            project: k8s
            app: filebeat
        spec:
          containers:
          - name: filebeat
            image: elastic/filebeat:7.5.0
            args: [
              "-c", "/etc/filebeat.yml",
              "-e",
            ]
            resources:
              requests:
                cpu: 100m
                memory: 100Mi
              limits:
                cpu: 500m
                memory: 500Mi
            securityContext:
              runAsUser: 0
            volumeMounts:
            - name: filebeat-config
              mountPath: /etc/filebeat.yml
              subPath: filebeat.yml
            - name: k8s-logs
              mountPath: /var/log/messages
          volumes:
          - name: k8s-logs
            hostPath:
              path: /var/log/messages
          - name: filebeat-config
            configMap:
              name: k8s-logs-filebeat-config

The key part is mounting the host's log file into the container so that Filebeat can read it directly:

    volumeMounts:
    - name: filebeat-config
      mountPath: /etc/filebeat.yml
      subPath: filebeat.yml
    - name: k8s-logs
      mountPath: /var/log/messages
    volumes:
    - name: k8s-logs
      hostPath:
        path: /var/log/messages
    - name: filebeat-config
      configMap:
        name: k8s-logs-filebeat-config

Apply the manifest

    [root@k8s-maste ~]# kubectl apply -f k8s-logs.yaml
    configmap/k8s-logs-filebeat-config created
    daemonset.apps/k8s-logs created
    [root@k8s-maste ~]# kubectl get pods -n kube-system|grep k8s-logs
    NAME             READY   STATUS    RESTARTS   AGE
    k8s-logs-6q6f6   1/1     Running   0          37s
    k8s-logs-7qkrt   1/1     Running   0          37s
    k8s-logs-y58hj   1/1     Running   0          37s
    [root@k8s-maste ~]#

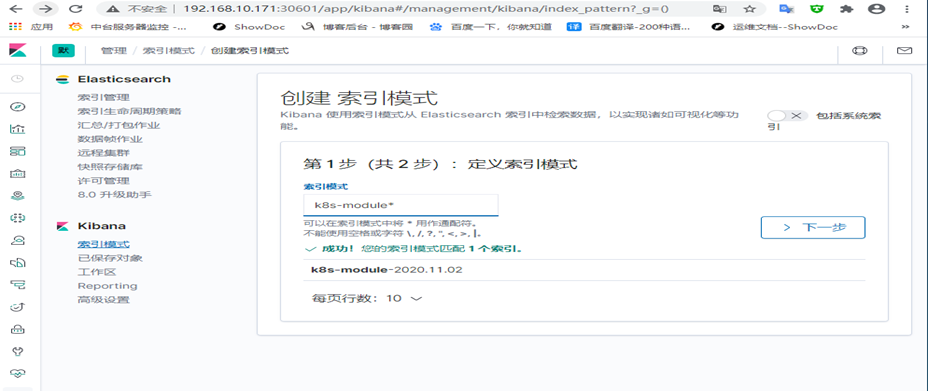

In Kibana, add an index pattern for the k8s-module index.

Reference: https://www.cnblogs.com/opesn/p/13218079.html
