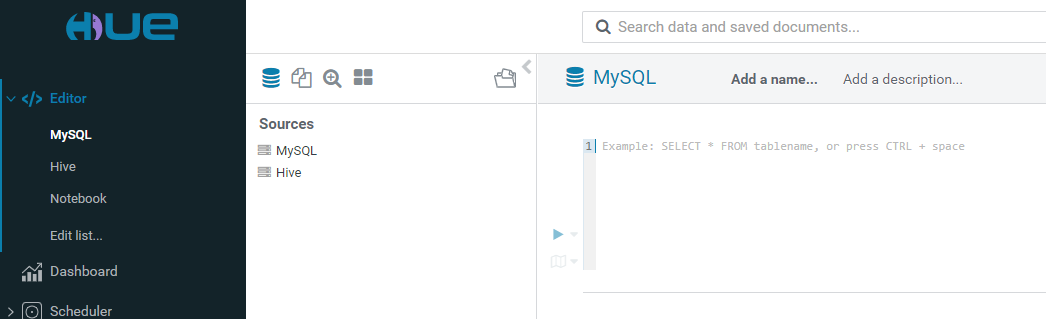

Installing Hue and connecting it to MySQL, Hive, and HDFS: the process and the problems I ran into

1. Open the official documentation

https://docs.gethue.com/administrator/installation/

I read through it and found it rather disorganized, so here is my own summary of the steps.

2. Dependencies

a. System packages

    yum -y install ant asciidoc cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-plain gcc gcc-c++ krb5-devel libffi-devel libxml2-devel libxslt-devel make mysql-devel openldap-devel python-devel sqlite-devel gmp-devel rsync

If any of these are missing, you may hit the following error (I ran into it after Hue had installed successfully, when connecting to Hive):

    Could not start SASL: Error in sasl_client_start (-4) SASL(-4): no mechanism available
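The error message points at SASL mechanisms, so a quick first check is whether the cyrus-sasl packages from the list above were actually installed. The sketch below assumes an RPM-based system (as the yum command implies). `missing_packages` and the `PKG_QUERY` override are hypothetical names introduced for this example; they are not part of Hue.

```shell
#!/usr/bin/env bash
# Print every package in the argument list that the package query
# reports as not installed. PKG_QUERY is a hypothetical override hook;
# it defaults to "rpm -q", which exits non-zero for a missing package.
missing_packages() {
    local pkg
    for pkg in "$@"; do
        # Intentionally unquoted so "rpm -q" splits into command + flag.
        if ! ${PKG_QUERY:-rpm -q} "$pkg" >/dev/null 2>&1; then
            echo "$pkg"
        fi
    done
}
```

Running `missing_packages cyrus-sasl-devel cyrus-sasl-gssapi cyrus-sasl-plain` prints nothing when all three are present. Any name it does print can be passed back to `yum -y install`.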

b. Java environment

c. Python 2.7 or Python 3.6+

d. MySQL (or another database)

e. Node.js

    curl -sL https://rpm.nodesource.com/setup_14.x | sudo bash -
    sudo yum install -y nodejs

3. Download and make install

Download a release from:

https://docs.gethue.com/releases/

Extract the archive, cd into the extracted directory, and run:

    PREFIX=/usr/share make install

4. Configuration

Go to the configuration directory and edit hue.ini:

    cd /usr/share/hue/desktop/conf

a. API Server. e.g. pointing to a relational database where Hue saves users and queries, selecting the login method like LDAP, customizing the look & feel, activating special features, disabling some apps

    [[database]]
    host=localhost
    port=3306
    engine=mysql
    user=hue
    password=secretpassword
    name=hue

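Run the migration to generate Hue's metadata tables (from the install directory, /usr/share/hue). Note that the database "hue" must already exist in MySQL before you run it.

    ./build/env/bin/hue migrate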
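Since the migration expects the database to exist, it has to be created by hand first. The following is a minimal sketch that prints the SQL for the values used in the `[[database]]` block above. `hue_db_sql` is a hypothetical helper name, and the `utf8` character set, the `localhost` account scope, and `CREATE USER IF NOT EXISTS` (which needs MySQL 5.7 or later) are my assumptions, not something the Hue documentation prescribes here.

```shell
#!/usr/bin/env bash
# Print SQL that creates the database and account named in [[database]].
# Arguments: database name, user name, password.
hue_db_sql() {
    local db="$1" user="$2" password="$3"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS \`${db}\` DEFAULT CHARACTER SET utf8;
CREATE USER IF NOT EXISTS '${user}'@'localhost' IDENTIFIED BY '${password}';
GRANT ALL PRIVILEGES ON \`${db}\`.* TO '${user}'@'localhost';
EOF
}
```

The output can be piped into the client, for example `hue_db_sql hue hue secretpassword | mysql -u root -p`, assuming you have a MySQL account with rights to create databases and users.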

b. Connectors. e.g. pointing to Data Warehouse services you want to make easy to query or browse. Those are typically which databases we want users to query with SQL or filesystems to browse.

    [[interpreters]]
    # Define the name and how to connect and execute the language.
    # https://docs.gethue.com/administrator/configuration/editor/

    [[[mysql]]]
    name = MySQL
    interface=sqlalchemy
    ## https://docs.sqlalchemy.org/en/latest/dialects/mysql.html
    options='{"url": "mysql://root:hadoop@bigdata:3306/hue"}'

    [[[hive]]]
    name=Hive
    interface=hiveserver2

    [beeswax]
    # Host where HiveServer2 is running.
    # If Kerberos security is enabled, use fully-qualified domain name (FQDN).
    hive_server_host=bigdata
    # Binary thrift port for HiveServer2.
    hive_server_port=10000
    hive_conf_dir=/root/app/hive/conf

    [hadoop]
    # Configuration for HDFS NameNode
    # ------------------------------------------------------------------------
    [[hdfs_clusters]]
    # HA support by using HttpFs
    [[[default]]]
    # Enter the filesystem uri
    fs_defaultfs=hdfs://localhost:8020
    # NameNode logical name.
    ## logical_name=
    # Use WebHdfs/HttpFs as the communication mechanism.
    # Domain should be the NameNode or HttpFs host.
    # Default port is 14000 for HttpFs.
    webhdfs_url=http://bigdata:14000/webhdfs/v1

c. Edit the Hadoop configuration files

Add the following to hdfs-site.xml:

    <!-- HUE -->
    <property>
      <name>dfs.webhdfs.enabled</name>
      <value>true</value>
    </property>
    <property>
      <name>dfs.permissions.enabled</name>
      <value>false</value>
    </property>

Add the following to core-site.xml:

    <!-- HUE -->
    <property>
      <name>hadoop.proxyuser.hue.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.groups</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hdfs.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hdfs.groups</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.httpfs.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.httpfs.groups</name>
      <value>*</value>
    </property>

Add the following to httpfs-site.xml:

    <!-- HUE -->
    <property>
      <name>httpfs.proxyuser.hue.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>httpfs.proxyuser.hue.groups</name>
      <value>*</value>
    </property>
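Once the cluster has been restarted, one way to confirm that the proxy-user settings were picked up is to read them back with `hdfs getconf -confKey`. This is a rough sketch that assumes the `hdfs` command is on the PATH of the node where you run it. `show_conf_keys` and the `HDFS_CMD` override are hypothetical names used only in this example. As far as I know this only covers keys from core-site.xml and hdfs-site.xml; the httpfs-site.xml settings are read by the HttpFS service itself and are not checked here.

```shell
#!/usr/bin/env bash
# Print "key=value" for each configuration key, or "key=<unset>" when
# the lookup fails. HDFS_CMD is a hypothetical override; default "hdfs".
show_conf_keys() {
    local key value
    for key in "$@"; do
        if value="$(${HDFS_CMD:-hdfs} getconf -confKey "$key" 2>/dev/null)"; then
            printf '%s=%s\n' "$key" "$value"
        else
            printf '%s=<unset>\n' "$key"
        fi
    done
}
```

For example, `show_conf_keys hadoop.proxyuser.hue.hosts hadoop.proxyuser.hue.groups` should print `*` for both keys if the core-site.xml change above is in effect.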

Restart the cluster, then start Hue:

    cd /usr/share/hue
    build/env/bin/supervisor

Then open http://bigdata:8888 in a browser.

6. Notes

For Hive support, make sure both the hiveserver2 and metastore services have started normally.
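A quick way to see whether those services are reachable is to probe their ports from the Hue host. The sketch below uses bash's `/dev/tcp` redirection together with `timeout` from coreutils. `port_open` and `report_services` are hypothetical helper names, and the metastore port 9083 in the usage line is the usual Hive default, which I am assuming since this article does not state it.

```shell
#!/usr/bin/env bash
# Succeed if a TCP connection to host:port opens within 3 seconds.
port_open() {
    local host="$1" port="$2"
    timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Print one status line per "name:host:port" argument.
report_services() {
    local spec name rest host port
    for spec in "$@"; do
        name="${spec%%:*}"   # text before the first colon
        rest="${spec#*:}"    # "host:port"
        host="${rest%%:*}"
        port="${rest#*:}"
        if port_open "$host" "$port"; then
            echo "$name $host:$port UP"
        else
            echo "$name $host:$port DOWN"
        fi
    done
}
```

With the host name used in this article, a call could look like `report_services hiveserver2:bigdata:10000 metastore:bigdata:9083 httpfs:bigdata:14000 hue:bigdata:8888`.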

If hiveserver2 starts but nothing is listening on port 10000, see my other post:

https://www.cnblogs.com/huangguoming/p/15796456.html

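7. The two ways Hue can access HDFS (worth knowing if you run Hadoop HA)

WebHDFS: provides high-speed data transfer; the client talks directly to the DataNodes.

HttpFS: a proxy service that makes it easier to integrate with systems outside the cluster.

Both expose the HTTP REST API, but Hue can be configured with only one of them. For an HDFS HA deployment, only HttpFS can be used.

This article uses HttpFS. If you choose WebHDFS instead, note:

    webhdfs_url=http://bigdata:50070/webhdfs/v1
    # The NameNode web UI listens on port 50070; in Hadoop 3.x it is 9870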
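Whichever endpoint you configure, the same REST call can be used to test it outside of Hue. The sketch below builds a `LISTSTATUS` URL from a `webhdfs_url` value. `webhdfs_list_url` is a hypothetical helper name, and passing `user.name=hue` assumes simple (non-Kerberos) authentication.

```shell
#!/usr/bin/env bash
# Build a WebHDFS REST URL that lists a directory.
# Arguments: base URL ending in /webhdfs/v1, absolute HDFS path, user name.
webhdfs_list_url() {
    local base="$1" path="$2" user="$3"
    # Strip one trailing slash from the base so the path joins cleanly.
    printf '%s%s?op=LISTSTATUS&user.name=%s\n' "${base%/}" "$path" "$user"
}
```

For example, `curl -s "$(webhdfs_list_url http://bigdata:14000/webhdfs/v1 / hue)"` should return a JSON listing of the HDFS root if the HttpFS endpoint is reachable. Swap in the port 50070 (or 9870) URL to test WebHDFS directly.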

Reference: https://www.cnblogs.com/zlslch/p/6817360.html
