Changing a Hadoop configuration parameter causes hive.ql.metadata.HiveException

Running the following SQL statement:

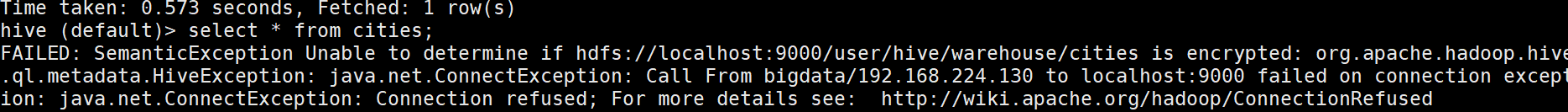

select * from cities limit 10;

fails with this error:

FAILED: SemanticException Unable to determine if hdfs://localhost:9000/user/hive/warehouse/cities is encrypted: org.apache.hadoop.hive.ql.metadata.HiveException: java.net.ConnectException: Call From bigdata/192.168.224.130 to localhost:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

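The cause: the Hive metastore, kept in MySQL, stores the location of every database and table as a full HDFS URI in the DBS and SDS tables. I changed fs.defaultFS in core-site.xml, but those stored locations still pointed at localhost:9000, so the metadata no longer matched the cluster configuration and Hive's connection to localhost:9000 was refused.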
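Hive uses the absolute URI stored in the metastore rather than re-deriving it from fs.defaultFS, as the `hdfs://localhost:9000/...` path in the error message shows. A minimal Python sketch of the mismatch, assuming the stored location and the new fs.defaultFS value are available as plain strings (the helper `is_stale` is hypothetical and not part of Hive), compares the scheme and `host:port` of the two URIs:

```python
from urllib.parse import urlparse


def is_stale(location: str, default_fs: str) -> bool:
    """Hypothetical helper: True if a metastore location points at a different
    filesystem (scheme + host:port) than the current fs.defaultFS."""
    loc = urlparse(location)
    fs = urlparse(default_fs)
    return (loc.scheme, loc.netloc) != (fs.scheme, fs.netloc)


# Location taken from the error message; defaultFS from the modified core-site.xml.
print(is_stale("hdfs://localhost:9000/user/hive/warehouse/cities", "hdfs://bigdata:9000"))
# → True
print(is_stale("hdfs://bigdata:9000/user/hive/warehouse/cities", "hdfs://bigdata:9000"))
# → False
```

Any location for which this returns True will make Hive contact the old NameNode address.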

Original core-site.xml:

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
  <!-- other properties omitted -->
</configuration>

Modified core-site.xml:

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://bigdata:9000</value>
  </property>
  <!-- other properties omitted -->
</configuration>
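To confirm which address the cluster now expects, the value can be read straight from core-site.xml. A small sketch using Python's standard `xml.etree.ElementTree`, assuming the file contents are available as a string (`CORE_SITE` and `get_property` are illustrative names, and the XML is the modified fragment above with other properties omitted):

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Illustrative copy of the modified core-site.xml (other properties omitted).
CORE_SITE = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://bigdata:9000</value>
  </property>
</configuration>"""


def get_property(xml_text: str, name: str) -> Optional[str]:
    """Return the <value> of the Hadoop property called `name`, or None if absent."""
    root = ET.fromstring(xml_text)
    for prop in root.findall("property"):
        if prop.findtext("name", default="").strip() == name:
            return prop.findtext("value", default="").strip()
    return None


print(get_property(CORE_SITE, "fs.defaultFS"))  # → hdfs://bigdata:9000
```

On a node with the Hadoop client installed, `hdfs getconf -confKey fs.defaultFS` reports the same setting from the live configuration.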

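The fix is therefore to rewrite the stored locations in the metastore database (MySQL) so that they use the new address: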

update DBS set DB_LOCATION_URI=REPLACE(DB_LOCATION_URI,'localhost:9000','bigdata:9000');
update SDS set LOCATION=REPLACE(LOCATION,'localhost:9000','bigdata:9000');
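The two UPDATE statements above can be rehearsed before touching the real MySQL metastore, which is worth backing up first. The Python sketch below uses an in-memory SQLite database as a stand-in; the trimmed DBS/SDS schema, the sample rows and the helper `rewrite_locations` are assumptions for illustration (the real metastore tables have many more columns). It applies the same `REPLACE`, but only to rows that start with `hdfs://localhost:9000`, and runs both updates in one transaction:

```python
import sqlite3

OLD_FS = "hdfs://localhost:9000"
NEW_FS = "hdfs://bigdata:9000"


def make_standin_metastore() -> sqlite3.Connection:
    """Hypothetical, trimmed-down DBS/SDS tables holding only the location columns."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE DBS (DB_ID INTEGER PRIMARY KEY, DB_LOCATION_URI TEXT)")
    conn.execute("CREATE TABLE SDS (SD_ID INTEGER PRIMARY KEY, LOCATION TEXT)")
    # Sample rows modeled on the path in the error message.
    conn.execute("INSERT INTO DBS VALUES (1, ?)", (OLD_FS + "/user/hive/warehouse",))
    conn.execute("INSERT INTO SDS VALUES (1, ?)", (OLD_FS + "/user/hive/warehouse/cities",))
    conn.commit()
    return conn


def rewrite_locations(conn: sqlite3.Connection, old: str, new: str) -> None:
    """Same REPLACE as the MySQL fix, limited to rows whose URI starts with `old`."""
    with conn:  # one transaction: both tables change, or neither does
        conn.execute(
            "UPDATE DBS SET DB_LOCATION_URI = REPLACE(DB_LOCATION_URI, ?, ?) "
            "WHERE DB_LOCATION_URI LIKE ? || '%'",
            (old, new, old),
        )
        conn.execute(
            "UPDATE SDS SET LOCATION = REPLACE(LOCATION, ?, ?) "
            "WHERE LOCATION LIKE ? || '%'",
            (old, new, old),
        )


conn = make_standin_metastore()
rewrite_locations(conn, OLD_FS, NEW_FS)
print(conn.execute("SELECT DB_LOCATION_URI FROM DBS").fetchone()[0])
# → hdfs://bigdata:9000/user/hive/warehouse
print(conn.execute("SELECT LOCATION FROM SDS").fetchone()[0])
# → hdfs://bigdata:9000/user/hive/warehouse/cities
```

In MySQL, `||` means logical OR by default, so when adding the same prefix filter there, write the pattern as a literal, for example `WHERE LOCATION LIKE 'hdfs://localhost:9000%'`.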